Commit: 23d4b26
Parent(s): 1381d31

Upload folder using huggingface_hub

This view is limited to 50 files because it contains too many changes.

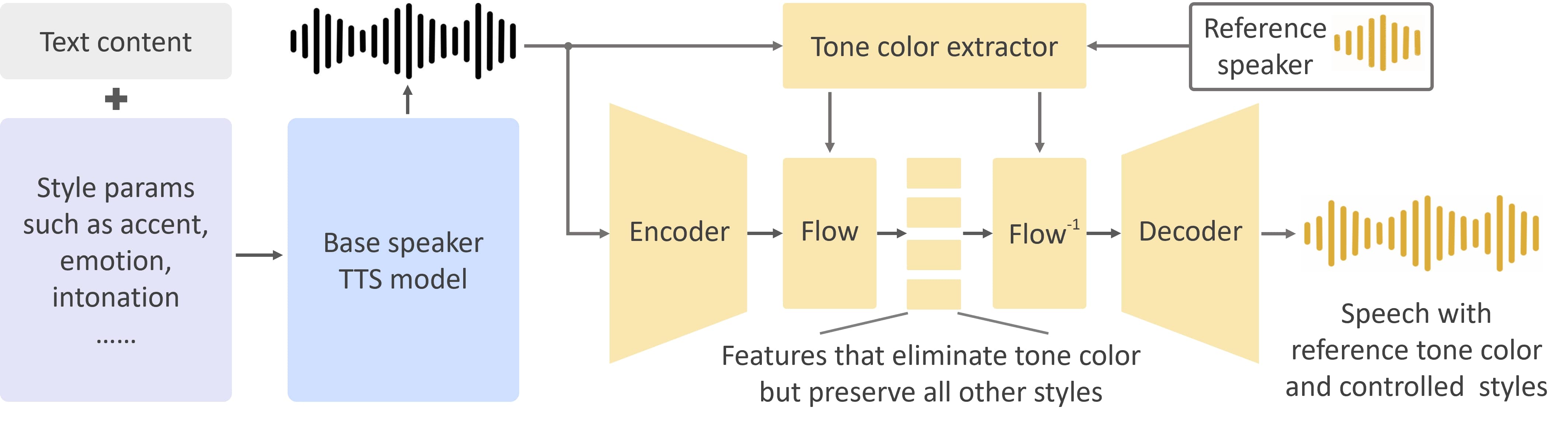

See raw diff

- .gitignore +8 -0

- .ipynb_checkpoints/demo_part1-checkpoint.ipynb +236 -0

- .ipynb_checkpoints/requirements-checkpoint.txt +14 -0

- LICENSE +333 -0

- README.md +100 -13

- __pycache__/api.cpython-310.pyc +0 -0

- __pycache__/attentions.cpython-310.pyc +0 -0

- __pycache__/commons.cpython-310.pyc +0 -0

- __pycache__/mel_processing.cpython-310.pyc +0 -0

- __pycache__/models.cpython-310.pyc +0 -0

- __pycache__/modules.cpython-310.pyc +0 -0

- __pycache__/se_extractor.cpython-310.pyc +0 -0

- __pycache__/transforms.cpython-310.pyc +0 -0

- __pycache__/utils.cpython-310.pyc +0 -0

- api.py +201 -0

- attentions.py +465 -0

- checkpoints/checkpoints/base_speakers/EN/checkpoint.pth +3 -0

- checkpoints/checkpoints/base_speakers/EN/config.json +145 -0

- checkpoints/checkpoints/base_speakers/EN/en_default_se.pth +3 -0

- checkpoints/checkpoints/base_speakers/EN/en_style_se.pth +3 -0

- checkpoints/checkpoints/base_speakers/ZH/checkpoint.pth +3 -0

- checkpoints/checkpoints/base_speakers/ZH/config.json +137 -0

- checkpoints/checkpoints/base_speakers/ZH/zh_default_se.pth +3 -0

- checkpoints/checkpoints/converter/checkpoint.pth +3 -0

- checkpoints/checkpoints/converter/config.json +57 -0

- checkpoints_1226.zip +3 -0

- commons.py +160 -0

- demo_part1.ipynb +385 -0

- demo_part2.ipynb +195 -0

- mel_processing.py +183 -0

- models.py +497 -0

- modules.py +598 -0

- requirements.txt +14 -0

- resources/example_reference.mp3 +0 -0

- resources/framework.jpg +0 -0

- resources/lepton.jpg +0 -0

- resources/myshell.jpg +0 -0

- resources/openvoicelogo.jpg +0 -0

- se_extractor.py +139 -0

- text/__init__.py +79 -0

- text/__pycache__/__init__.cpython-310.pyc +0 -0

- text/__pycache__/cleaners.cpython-310.pyc +0 -0

- text/__pycache__/english.cpython-310.pyc +0 -0

- text/__pycache__/mandarin.cpython-310.pyc +0 -0

- text/__pycache__/symbols.cpython-310.pyc +0 -0

- text/cleaners.py +16 -0

- text/english.py +188 -0

- text/mandarin.py +326 -0

- text/symbols.py +88 -0

- transforms.py +209 -0

.gitignore
ADDED

@@ -0,0 +1,8 @@
+__pycache__/
+.ipynb_checkpoints/
+processed
+outputs
+checkpoints
+trash
+examples*
+.env
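Several paths in this commit's file list (for example the `__pycache__/` and `.ipynb_checkpoints/` entries) appear to match the patterns added to `.gitignore` above. The sketch below is one rough way to check that. It is an illustration only: `matches_ignore` is a hypothetical helper, the `committed` list is a hand-picked subset of the file list, and the matching is a deliberate simplification of gitignore semantics (no negation, anchoring, or `**` handling).

```python
from fnmatch import fnmatchcase

# Patterns copied from the .gitignore added in this commit.
IGNORE_PATTERNS = [
    "__pycache__/", ".ipynb_checkpoints/", "processed", "outputs",
    "checkpoints", "trash", "examples*", ".env",
]


def matches_ignore(path, patterns=IGNORE_PATTERNS):
    """Return True if any component of `path` matches one of the patterns.

    Simplified on purpose: a trailing slash limits a pattern to directory
    components; every other pattern is tried against each path component.
    """
    parts = path.split("/")
    for pattern in patterns:
        dir_only = pattern.endswith("/")
        name = pattern.rstrip("/")
        # The last component is the file itself, so directory-only
        # patterns are not tested against it.
        candidates = parts[:-1] if dir_only else parts
        if any(fnmatchcase(part, name) for part in candidates):
            return True
    return False


# A hand-picked subset of the paths listed in this commit.
committed = [
    "api.py",
    "__pycache__/api.cpython-310.pyc",
    ".ipynb_checkpoints/demo_part1-checkpoint.ipynb",
    "checkpoints/checkpoints/converter/config.json",
    "checkpoints_1226.zip",
    "text/__pycache__/cleaners.cpython-310.pyc",
]
flagged = [p for p in committed if matches_ignore(p)]
```

Under these simplified rules `checkpoints_1226.zip` is not flagged, because the `checkpoints` pattern has no wildcard and only matches a component with exactly that name.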

.ipynb_checkpoints/demo_part1-checkpoint.ipynb
ADDED

@@ -0,0 +1,236 @@
+{
+ "cells": [
+  {
+   "cell_type": "markdown",
+   "id": "b6ee1ede",
+   "metadata": {},
+   "source": [
+    "## Voice Style Control Demo"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "b7f043ee",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "import os\n",
+    "import torch\n",
+    "import se_extractor\n",
+    "from api import BaseSpeakerTTS, ToneColorConverter"
+   ]
+  },
+  {
+   "cell_type": "markdown",
+   "id": "15116b59",
+   "metadata": {},
+   "source": [
+    "### Initialization"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "aacad912",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "ckpt_base = 'checkpoints/base_speakers/EN'\n",
+    "ckpt_converter = 'checkpoints/converter'\n",
+    "device = 'cuda:0'\n",
+    "output_dir = 'outputs'\n",
+    "\n",
+    "base_speaker_tts = BaseSpeakerTTS(f'{ckpt_base}/config.json', device=device)\n",
+    "base_speaker_tts.load_ckpt(f'{ckpt_base}/checkpoint.pth')\n",
+    "\n",
+    "tone_color_converter = ToneColorConverter(f'{ckpt_converter}/config.json', device=device)\n",
+    "tone_color_converter.load_ckpt(f'{ckpt_converter}/checkpoint.pth')\n",
+    "\n",
+    "os.makedirs(output_dir, exist_ok=True)"
+   ]
+  },
+  {
+   "cell_type": "markdown",
+   "id": "7f67740c",
+   "metadata": {},
+   "source": [
+    "### Obtain Tone Color Embedding"
+   ]
+  },
+  {
+   "cell_type": "markdown",
+   "id": "f8add279",
+   "metadata": {},
+   "source": [
+    "The `source_se` is the tone color embedding of the base speaker. \n",
+    "It is an average of multiple sentences generated by the base speaker. We directly provide the result here but\n",
+    "the readers feel free to extract `source_se` by themselves."
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "63ff6273",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "source_se = torch.load(f'{ckpt_base}/en_default_se.pth').to(device)"
+   ]
+  },
+  {
+   "cell_type": "markdown",
+   "id": "4f71fcc3",
+   "metadata": {},
+   "source": [
+    "The `reference_speaker.mp3` below points to the short audio clip of the reference whose voice we want to clone. We provide an example here. If you use your own reference speakers, please **make sure each speaker has a unique filename.** The `se_extractor` will save the `targeted_se` using the filename of the audio and **will not automatically overwrite.**"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "55105eae",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "reference_speaker = 'resources/example_reference.mp3'\n",
+    "target_se, audio_name = se_extractor.get_se(reference_speaker, tone_color_converter, target_dir='processed', vad=True)"
+   ]
+  },
+  {
+   "cell_type": "markdown",
+   "id": "a40284aa",
+   "metadata": {},
+   "source": [
+    "### Inference"
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "73dc1259",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "save_path = f'{output_dir}/output_en_default.wav'\n",
+    "\n",
+    "# Run the base speaker tts\n",
+    "text = \"This audio is generated by OpenVoice.\"\n",
+    "src_path = f'{output_dir}/tmp.wav'\n",
+    "base_speaker_tts.tts(text, src_path, speaker='default', language='English', speed=1.0)\n",
+    "\n",
+    "# Run the tone color converter\n",
+    "encode_message = \"@MyShell\"\n",
+    "tone_color_converter.convert(\n",
+    " audio_src_path=src_path, \n",
+    " src_se=source_se, \n",
+    " tgt_se=target_se, \n",
+    " output_path=save_path,\n",
+    " message=encode_message)"
+   ]
+  },
+  {
+   "cell_type": "markdown",
+   "id": "6e3ea28a",
+   "metadata": {},
+   "source": [
+    "**Try with different styles and speed.** The style can be controlled by the `speaker` parameter in the `base_speaker_tts.tts` method. Available choices: friendly, cheerful, excited, sad, angry, terrified, shouting, whispering. Note that the tone color embedding need to be updated. The speed can be controlled by the `speed` parameter. Let's try whispering with speed 0.9."
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "fd022d38",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "source_se = torch.load(f'{ckpt_base}/en_style_se.pth').to(device)\n",
+    "save_path = f'{output_dir}/output_whispering.wav'\n",
+    "\n",
+    "# Run the base speaker tts\n",
+    "text = \"This audio is generated by OpenVoice with a half-performance model.\"\n",
+    "src_path = f'{output_dir}/tmp.wav'\n",
+    "base_speaker_tts.tts(text, src_path, speaker='whispering', language='English', speed=0.9)\n",
+    "\n",
+    "# Run the tone color converter\n",
+    "encode_message = \"@MyShell\"\n",
+    "tone_color_converter.convert(\n",
+    " audio_src_path=src_path, \n",
+    " src_se=source_se, \n",
+    " tgt_se=target_se, \n",
+    " output_path=save_path,\n",
+    " message=encode_message)"
+   ]
+  },
+  {
+   "cell_type": "markdown",
+   "id": "5fcfc70b",
+   "metadata": {},
+   "source": [
+    "**Try with different languages.** OpenVoice can achieve multi-lingual voice cloning by simply replace the base speaker. We provide an example with a Chinese base speaker here and we encourage the readers to try `demo_part2.ipynb` for a detailed demo."
+   ]
+  },
+  {
+   "cell_type": "code",
+   "execution_count": null,
+   "id": "a71d1387",
+   "metadata": {},
+   "outputs": [],
+   "source": [
+    "\n",
+    "ckpt_base = 'checkpoints/base_speakers/ZH'\n",
+    "base_speaker_tts = BaseSpeakerTTS(f'{ckpt_base}/config.json', device=device)\n",
+    "base_speaker_tts.load_ckpt(f'{ckpt_base}/checkpoint.pth')\n",
+    "\n",
+    "source_se = torch.load(f'{ckpt_base}/zh_default_se.pth').to(device)\n",
+    "save_path = f'{output_dir}/output_chinese.wav'\n",
+    "\n",
+    "# Run the base speaker tts\n",
+    "text = \"今天天气真好,我们一起出去吃饭吧。\"\n",
+    "src_path = f'{output_dir}/tmp.wav'\n",
+    "base_speaker_tts.tts(text, src_path, speaker='default', language='Chinese', speed=1.0)\n",
+    "\n",
+    "# Run the tone color converter\n",
+    "encode_message = \"@MyShell\"\n",
+    "tone_color_converter.convert(\n",
+    " audio_src_path=src_path, \n",
+    " src_se=source_se, \n",
+    " tgt_se=target_se, \n",
+    " output_path=save_path,\n",
+    " message=encode_message)"
+   ]
+  },
+  {
+   "cell_type": "markdown",
+   "id": "8e513094",
+   "metadata": {},
+   "source": [
+    "**Tech for good.** For people who will deploy OpenVoice for public usage: We offer you the option to add watermark to avoid potential misuse. Please see the ToneColorConverter class. **MyShell reserves the ability to detect whether an audio is generated by OpenVoice**, no matter whether the watermark is added or not."
+   ]
+  }
+ ],
+ "metadata": {
+  "interpreter": {
+   "hash": "9d70c38e1c0b038dbdffdaa4f8bfa1f6767c43760905c87a9fbe7800d18c6c35"
+  },
+  "kernelspec": {
+   "display_name": "Python 3.9.18 ('openvoice')",
+   "language": "python",
+   "name": "python3"
+  },
+  "language_info": {
+   "codemirror_mode": {
+    "name": "ipython",
+    "version": 3
+   },
+   "file_extension": ".py",
+   "mimetype": "text/x-python",
+   "name": "python",
+   "nbconvert_exporter": "python",
+   "pygments_lexer": "ipython3",
+   "version": "3.9.18"
+  }
+ },
+ "nbformat": 4,
+ "nbformat_minor": 5
+}
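The notebook above warns that `se_extractor` saves the extracted embedding under the audio file's name and does not overwrite it, so two different reference clips that share a filename could collide. A minimal sketch of one way to derive a collision-resistant name follows. `unique_reference_name` is a hypothetical helper, not part of OpenVoice, and appending a content hash to the name is an assumption about what counts as "unique" here.

```python
import hashlib
from pathlib import Path


def unique_reference_name(audio_path, digest_chars=12):
    """Return '<stem>_<hash><suffix>' for the given audio file.

    The hash is the leading `digest_chars` hex characters of the SHA-256
    of the file's bytes, so clips with different content get different
    names even when their original filenames are identical.
    """
    path = Path(audio_path)
    digest = hashlib.sha256(path.read_bytes()).hexdigest()[:digest_chars]
    return f"{path.stem}_{digest}{path.suffix}"
```

One possible use is to copy each reference clip to the returned name before passing its path to `se_extractor.get_se`, so that the saved embedding is keyed by content instead of by the original filename.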

.ipynb_checkpoints/requirements-checkpoint.txt
ADDED

@@ -0,0 +1,14 @@
+librosa==0.9.1
+faster-whisper==0.9.0
+pydub==0.25.1
+wavmark==0.0.2
+numpy==1.22.0
+eng_to_ipa==0.0.2
+inflect==7.0.0
+unidecode==1.3.7
+whisper-timestamped==1.14.2
+openai
+python-dotenv
+pypinyin
+jieba
+cn2an
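The requirements file above mixes exact pins (`==`) with unpinned package names, which means the unpinned ones may resolve to different versions over time. The following sketch separates the two groups. `split_pinned` is a hypothetical helper written for this note, and it only understands the plain `name==version` and bare `name` forms that appear in this file, not the full requirements syntax.

```python
# Contents copied from the requirements file added in this commit.
REQUIREMENTS = """\
librosa==0.9.1
faster-whisper==0.9.0
pydub==0.25.1
wavmark==0.0.2
numpy==1.22.0
eng_to_ipa==0.0.2
inflect==7.0.0
unidecode==1.3.7
whisper-timestamped==1.14.2
openai
python-dotenv
pypinyin
jieba
cn2an
"""


def split_pinned(text):
    """Split requirement lines into ({name: version}, [unpinned names])."""
    pinned, unpinned = {}, []
    for raw in text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        if "==" in line:
            name, version = line.split("==", 1)
            pinned[name.strip()] = version.strip()
        else:
            unpinned.append(line)
    return pinned, unpinned


pinned, unpinned = split_pinned(REQUIREMENTS)
```

Here `unpinned` holds the five packages listed without a version; whether to pin them is a judgment call for whoever maintains the Space.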

LICENSE
ADDED

@@ -0,0 +1,333 @@
+Creative Commons Attribution-NonCommercial 4.0 International Public
+License
+
+By exercising the Licensed Rights (defined below), You accept and agree
+to be bound by the terms and conditions of this Creative Commons
+Attribution-NonCommercial 4.0 International Public License ("Public
+License"). To the extent this Public License may be interpreted as a
+contract, You are granted the Licensed Rights in consideration of Your
+acceptance of these terms and conditions, and the Licensor grants You
+such rights in consideration of benefits the Licensor receives from
+making the Licensed Material available under these terms and
+conditions.
+
+
+Section 1 -- Definitions.
+
+  a. Adapted Material means material subject to Copyright and Similar
+     Rights that is derived from or based upon the Licensed Material
+     and in which the Licensed Material is translated, altered,
+     arranged, transformed, or otherwise modified in a manner requiring
+     permission under the Copyright and Similar Rights held by the
+     Licensor. For purposes of this Public License, where the Licensed
+     Material is a musical work, performance, or sound recording,
+     Adapted Material is always produced where the Licensed Material is
+     synched in timed relation with a moving image.
+
+  b. Adapter's License means the license You apply to Your Copyright
+     and Similar Rights in Your contributions to Adapted Material in
+     accordance with the terms and conditions of this Public License.
+
+  c. Copyright and Similar Rights means copyright and/or similar rights
+     closely related to copyright including, without limitation,
+     performance, broadcast, sound recording, and Sui Generis Database
+     Rights, without regard to how the rights are labeled or
+     categorized. For purposes of this Public License, the rights
+     specified in Section 2(b)(1)-(2) are not Copyright and Similar
+     Rights.
+  d. Effective Technological Measures means those measures that, in the
+     absence of proper authority, may not be circumvented under laws
+     fulfilling obligations under Article 11 of the WIPO Copyright
+     Treaty adopted on December 20, 1996, and/or similar international
+     agreements.
+
+  e. Exceptions and Limitations means fair use, fair dealing, and/or
+     any other exception or limitation to Copyright and Similar Rights
+     that applies to Your use of the Licensed Material.
+
+  f. Licensed Material means the artistic or literary work, database,
+     or other material to which the Licensor applied this Public
+     License.
+
+  g. Licensed Rights means the rights granted to You subject to the
+     terms and conditions of this Public License, which are limited to
+     all Copyright and Similar Rights that apply to Your use of the
+     Licensed Material and that the Licensor has authority to license.
+
+  h. Licensor means the individual(s) or entity(ies) granting rights
+     under this Public License.
+
+  i. NonCommercial means not primarily intended for or directed towards
+     commercial advantage or monetary compensation. For purposes of
+     this Public License, the exchange of the Licensed Material for
+     other material subject to Copyright and Similar Rights by digital
+     file-sharing or similar means is NonCommercial provided there is
+     no payment of monetary compensation in connection with the
+     exchange.
+
+  j. Share means to provide material to the public by any means or
+     process that requires permission under the Licensed Rights, such
+     as reproduction, public display, public performance, distribution,
+     dissemination, communication, or importation, and to make material
+     available to the public including in ways that members of the
+     public may access the material from a place and at a time
+     individually chosen by them.
+
+  k. Sui Generis Database Rights means rights other than copyright
+     resulting from Directive 96/9/EC of the European Parliament and of
+     the Council of 11 March 1996 on the legal protection of databases,
+     as amended and/or succeeded, as well as other essentially
+     equivalent rights anywhere in the world.
+
+  l. You means the individual or entity exercising the Licensed Rights
+     under this Public License. Your has a corresponding meaning.
+
+
+Section 2 -- Scope.
+
+  a. License grant.
+
+       1. Subject to the terms and conditions of this Public License,
+          the Licensor hereby grants You a worldwide, royalty-free,
+          non-sublicensable, non-exclusive, irrevocable license to
+          exercise the Licensed Rights in the Licensed Material to:
+
+            a. reproduce and Share the Licensed Material, in whole or
+               in part, for NonCommercial purposes only; and
+
+            b. produce, reproduce, and Share Adapted Material for
+               NonCommercial purposes only.
+
+       2. Exceptions and Limitations. For the avoidance of doubt, where
+          Exceptions and Limitations apply to Your use, this Public
+          License does not apply, and You do not need to comply with
+          its terms and conditions.
+
+       3. Term. The term of this Public License is specified in Section
+          6(a).
+
+       4. Media and formats; technical modifications allowed. The
+          Licensor authorizes You to exercise the Licensed Rights in
+          all media and formats whether now known or hereafter created,
+          and to make technical modifications necessary to do so. The
+          Licensor waives and/or agrees not to assert any right or
+          authority to forbid You from making technical modifications
+          necessary to exercise the Licensed Rights, including
+          technical modifications necessary to circumvent Effective
+          Technological Measures. For purposes of this Public License,
+          simply making modifications authorized by this Section 2(a)
+          (4) never produces Adapted Material.
+
+       5. Downstream recipients.
+
+            a. Offer from the Licensor -- Licensed Material. Every
+               recipient of the Licensed Material automatically
+               receives an offer from the Licensor to exercise the
+               Licensed Rights under the terms and conditions of this
+               Public License.
+
+            b. No downstream restrictions. You may not offer or impose
+               any additional or different terms or conditions on, or
+               apply any Effective Technological Measures to, the
+               Licensed Material if doing so restricts exercise of the
+               Licensed Rights by any recipient of the Licensed
+               Material.
+
+       6. No endorsement. Nothing in this Public License constitutes or
+          may be construed as permission to assert or imply that You
+          are, or that Your use of the Licensed Material is, connected
+          with, or sponsored, endorsed, or granted official status by,
+          the Licensor or others designated to receive attribution as
+          provided in Section 3(a)(1)(A)(i).
+
+  b. Other rights.
+
+       1. Moral rights, such as the right of integrity, are not
+          licensed under this Public License, nor are publicity,
+          privacy, and/or other similar personality rights; however, to
+          the extent possible, the Licensor waives and/or agrees not to
+          assert any such rights held by the Licensor to the limited
+          extent necessary to allow You to exercise the Licensed
+          Rights, but not otherwise.
+
+       2. Patent and trademark rights are not licensed under this
+          Public License.
+
+       3. To the extent possible, the Licensor waives any right to
+          collect royalties from You for the exercise of the Licensed
+          Rights, whether directly or through a collecting society
+          under any voluntary or waivable statutory or compulsory
+          licensing scheme. In all other cases the Licensor expressly
+          reserves any right to collect such royalties, including when
+          the Licensed Material is used other than for NonCommercial
+          purposes.
+
+
+Section 3 -- License Conditions.
+
+Your exercise of the Licensed Rights is expressly made subject to the
+following conditions.
+
+  a. Attribution.
+
+       1. If You Share the Licensed Material (including in modified
+          form), You must:
+
+            a. retain the following if it is supplied by the Licensor
+               with the Licensed Material:
+
+                 i. identification of the creator(s) of the Licensed
+                    Material and any others designated to receive
+                    attribution, in any reasonable manner requested by
+                    the Licensor (including by pseudonym if
+                    designated);
+
+                ii. a copyright notice;
+
+               iii. a notice that refers to this Public License;
+
+                iv. a notice that refers to the disclaimer of
+                    warranties;
+
+                 v. a URI or hyperlink to the Licensed Material to the
+                    extent reasonably practicable;
+
+            b. indicate if You modified the Licensed Material and
+               retain an indication of any previous modifications; and
+
+            c. indicate the Licensed Material is licensed under this
+               Public License, and include the text of, or the URI or
+               hyperlink to, this Public License.
+
+       2. You may satisfy the conditions in Section 3(a)(1) in any
+          reasonable manner based on the medium, means, and context in
+          which You Share the Licensed Material. For example, it may be
+          reasonable to satisfy the conditions by providing a URI or
+          hyperlink to a resource that includes the required
+          information.
+
+       3. If requested by the Licensor, You must remove any of the
+          information required by Section 3(a)(1)(A) to the extent
+          reasonably practicable.
+
+       4. If You Share Adapted Material You produce, the Adapter's
+          License You apply must not prevent recipients of the Adapted
+          Material from complying with this Public License.
+
+
+Section 4 -- Sui Generis Database Rights.
+
+Where the Licensed Rights include Sui Generis Database Rights that
+apply to Your use of the Licensed Material:
+
+  a. for the avoidance of doubt, Section 2(a)(1) grants You the right
+     to extract, reuse, reproduce, and Share all or a substantial
+     portion of the contents of the database for NonCommercial purposes
+     only;
+
+  b. if You include all or a substantial portion of the database
+     contents in a database in which You have Sui Generis Database
+     Rights, then the database in which You have Sui Generis Database
+     Rights (but not its individual contents) is Adapted Material; and
+
+  c. You must comply with the conditions in Section 3(a) if You Share
+     all or a substantial portion of the contents of the database.
+
+For the avoidance of doubt, this Section 4 supplements and does not
+replace Your obligations under this Public License where the Licensed
+Rights include other Copyright and Similar Rights.
+
+
+Section 5 -- Disclaimer of Warranties and Limitation of Liability.
+
+  a. UNLESS OTHERWISE SEPARATELY UNDERTAKEN BY THE LICENSOR, TO THE
+     EXTENT POSSIBLE, THE LICENSOR OFFERS THE LICENSED MATERIAL AS-IS
+     AND AS-AVAILABLE, AND MAKES NO REPRESENTATIONS OR WARRANTIES OF
+     ANY KIND CONCERNING THE LICENSED MATERIAL, WHETHER EXPRESS,
+     IMPLIED, STATUTORY, OR OTHER. THIS INCLUDES, WITHOUT LIMITATION,
+     WARRANTIES OF TITLE, MERCHANTABILITY, FITNESS FOR A PARTICULAR
+     PURPOSE, NON-INFRINGEMENT, ABSENCE OF LATENT OR OTHER DEFECTS,
+     ACCURACY, OR THE PRESENCE OR ABSENCE OF ERRORS, WHETHER OR NOT
+     KNOWN OR DISCOVERABLE. WHERE DISCLAIMERS OF WARRANTIES ARE NOT
+     ALLOWED IN FULL OR IN PART, THIS DISCLAIMER MAY NOT APPLY TO YOU.
+
+  b. TO THE EXTENT POSSIBLE, IN NO EVENT WILL THE LICENSOR BE LIABLE
+     TO YOU ON ANY LEGAL THEORY (INCLUDING, WITHOUT LIMITATION,
+     NEGLIGENCE) OR OTHERWISE FOR ANY DIRECT, SPECIAL, INDIRECT,
+     INCIDENTAL, CONSEQUENTIAL, PUNITIVE, EXEMPLARY, OR OTHER LOSSES,
+     COSTS, EXPENSES, OR DAMAGES ARISING OUT OF THIS PUBLIC LICENSE OR
+     USE OF THE LICENSED MATERIAL, EVEN IF THE LICENSOR HAS BEEN
+     ADVISED OF THE POSSIBILITY OF SUCH LOSSES, COSTS, EXPENSES, OR
+     DAMAGES. WHERE A LIMITATION OF LIABILITY IS NOT ALLOWED IN FULL OR
+     IN PART, THIS LIMITATION MAY NOT APPLY TO YOU.
+
+  c. The disclaimer of warranties and limitation of liability provided
+     above shall be interpreted in a manner that, to the extent
+     possible, most closely approximates an absolute disclaimer and
+     waiver of all liability.
+
+
+Section 6 -- Term and Termination.
+
+  a. This Public License applies for the term of the Copyright and

| 273 |

+

Similar Rights licensed here. However, if You fail to comply with

|

| 274 |

+

this Public License, then Your rights under this Public License

|

| 275 |

+

terminate automatically.

|

| 276 |

+

|

| 277 |

+

b. Where Your right to use the Licensed Material has terminated under

|

| 278 |

+

Section 6(a), it reinstates:

|

| 279 |

+

|

| 280 |

+

1. automatically as of the date the violation is cured, provided

|

| 281 |

+

it is cured within 30 days of Your discovery of the

|

| 282 |

+

violation; or

|

| 283 |

+

|

| 284 |

+

2. upon express reinstatement by the Licensor.

|

| 285 |

+

|

| 286 |

+

For the avoidance of doubt, this Section 6(b) does not affect any

|

| 287 |

+

right the Licensor may have to seek remedies for Your violations

|

| 288 |

+

of this Public License.

|

| 289 |

+

|

| 290 |

+

c. For the avoidance of doubt, the Licensor may also offer the

|

| 291 |

+

Licensed Material under separate terms or conditions or stop

|

| 292 |

+

distributing the Licensed Material at any time; however, doing so

|

| 293 |

+

will not terminate this Public License.

|

| 294 |

+

|

| 295 |

+

d. Sections 1, 5, 6, 7, and 8 survive termination of this Public

|

| 296 |

+

License.

|

| 297 |

+

|

| 298 |

+

|

| 299 |

+

Section 7 -- Other Terms and Conditions.

|

| 300 |

+

|

| 301 |

+

a. The Licensor shall not be bound by any additional or different

|

| 302 |

+

terms or conditions communicated by You unless expressly agreed.

|

| 303 |

+

|

| 304 |

+

b. Any arrangements, understandings, or agreements regarding the

|

| 305 |

+

Licensed Material not stated herein are separate from and

|

| 306 |

+

independent of the terms and conditions of this Public License.

|

| 307 |

+

|

| 308 |

+

|

| 309 |

+

Section 8 -- Interpretation.

|

| 310 |

+

|

| 311 |

+

a. For the avoidance of doubt, this Public License does not, and

|

| 312 |

+

shall not be interpreted to, reduce, limit, restrict, or impose

|

| 313 |

+

conditions on any use of the Licensed Material that could lawfully

|

| 314 |

+

be made without permission under this Public License.

|

| 315 |

+

|

| 316 |

+

b. To the extent possible, if any provision of this Public License is

|

| 317 |

+

deemed unenforceable, it shall be automatically reformed to the

|

| 318 |

+

minimum extent necessary to make it enforceable. If the provision

|

| 319 |

+

cannot be reformed, it shall be severed from this Public License

|

| 320 |

+

without affecting the enforceability of the remaining terms and

|

| 321 |

+

conditions.

|

| 322 |

+

|

| 323 |

+

c. No term or condition of this Public License will be waived and no

|

| 324 |

+

failure to comply consented to unless expressly agreed to by the

|

| 325 |

+

Licensor.

|

| 326 |

+

|

| 327 |

+

d. Nothing in this Public License constitutes or may be interpreted

|

| 328 |

+

as a limitation upon, or waiver of, any privileges and immunities

|

| 329 |

+

that apply to the Licensor or You, including from the legal

|

| 330 |

+

processes of any jurisdiction or authority.

|

| 331 |

+

|

| 332 |

+

=======================================================================

|

| 333 |

+

|

README.md

CHANGED

|

@@ -1,13 +1,100 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

| 1 |

+

<div align="center">

|

| 2 |

+

<div> </div>

|

| 3 |

+

<img src="resources/openvoicelogo.jpg" width="400"/>

|

| 4 |

+

|

| 5 |

+

[Paper](https://arxiv.org/abs/2312.01479) |

|

| 6 |

+

[Website](https://research.myshell.ai/open-voice)

|

| 7 |

+

|

| 8 |

+

</div>

|

| 9 |

+

|

| 10 |

+

## Introduction

|

| 11 |

+

As we detailed in our [paper](https://arxiv.org/abs/2312.01479) and [website](https://research.myshell.ai/open-voice), the advantages of OpenVoice are three-fold:

|

| 12 |

+

|

| 13 |

+

**1. Accurate Tone Color Cloning.**

|

| 14 |

+

OpenVoice can accurately clone the reference tone color and generate speech in multiple languages and accents.

|

| 15 |

+

|

| 16 |

+

**2. Flexible Voice Style Control.**

|

| 17 |

+

OpenVoice enables granular control over voice styles, such as emotion and accent, as well as other style parameters including rhythm, pauses, and intonation.

|

| 18 |

+

|

| 19 |

+

**3. Zero-shot Cross-lingual Voice Cloning.**

|

| 20 |

+

Neither the language of the generated speech nor the language of the reference speech needs to be present in the massive-speaker multi-lingual training dataset.

|

| 21 |

+

|

| 22 |

+

[Video](https://github.com/myshell-ai/OpenVoice/assets/40556743/3cba936f-82bf-476c-9e52-09f0f417bb2f)

|

| 23 |

+

|

| 24 |

+

<div align="center">

|

| 25 |

+

<div> </div>

|

| 26 |

+

<img src="resources/framework.jpg" width="800"/>

|

| 27 |

+

<div> </div>

|

| 28 |

+

</div>

|

| 29 |

+

|

| 30 |

+

OpenVoice has been powering the instant voice cloning capability of [myshell.ai](https://app.myshell.ai/explore) since May 2023. As of Nov 2023, the voice cloning model had been used tens of millions of times by users worldwide and had witnessed explosive user growth on the platform.

|

| 31 |

+

|

| 32 |

+

## Main Contributors

|

| 33 |

+

|

| 34 |

+

- [Zengyi Qin](https://www.qinzy.tech) at MIT and MyShell

|

| 35 |

+

- [Wenliang Zhao](https://wl-zhao.github.io) at Tsinghua University

|

| 36 |

+

- [Xumin Yu](https://yuxumin.github.io) at Tsinghua University

|

| 37 |

+

- [Ethan Sun](https://twitter.com/ethan_myshell) at MyShell

|

| 38 |

+

|

| 39 |

+

## Live Demo

|

| 40 |

+

|

| 41 |

+

<div align="center">

|

| 42 |

+

<a href="https://www.lepton.ai/playground/openvoice"><img src="resources/lepton.jpg"></a>

|

| 43 |

+

|

| 44 |

+

<a href="https://app.myshell.ai/explore"><img src="resources/myshell.jpg"></a>

|

| 45 |

+

</div>

|

| 46 |

+

|

| 47 |

+

## Disclaimer

|

| 48 |

+

|

| 49 |

+

This is an open-source implementation that approximates the performance of the internal voice clone technology of [myshell.ai](https://app.myshell.ai/explore). The online version in myshell.ai has better 1) audio quality, 2) voice cloning similarity, 3) speech naturalness and 4) computational efficiency.

|

| 50 |

+

|

| 51 |

+

## Installation

|

| 52 |

+

Clone this repo and run:

|

| 53 |

+

```

|

| 54 |

+

conda create -n openvoice python=3.9

|

| 55 |

+

conda activate openvoice

|

| 56 |

+

conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.7 -c pytorch -c nvidia

|

| 57 |

+

pip install -r requirements.txt

|

| 58 |

+

```

|

| 59 |

+

Download the checkpoint from [here](https://myshell-public-repo-hosting.s3.amazonaws.com/checkpoints_1226.zip) and extract it to the `checkpoints` folder.
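
The download-and-extract step can also be scripted. The following is a minimal sketch using only the Python standard library; the helper names `download_file` and `extract_zip` are hypothetical and not part of OpenVoice, and the archive's internal layout is not assumed here, so check the extracted paths against what the demo notebooks expect.

```python
import urllib.request
import zipfile
from pathlib import Path

# URL copied from the README; everything else in this sketch is illustrative.
CHECKPOINT_URL = "https://myshell-public-repo-hosting.s3.amazonaws.com/checkpoints_1226.zip"


def download_file(url: str, dest: Path) -> Path:
    """Download `url` to `dest`, skipping the download if `dest` already exists."""
    dest = Path(dest)
    if not dest.exists():
        dest.parent.mkdir(parents=True, exist_ok=True)
        urllib.request.urlretrieve(url, str(dest))
    return dest


def extract_zip(zip_path: Path, target_dir: Path) -> list:
    """Extract `zip_path` into `target_dir` and return the archive member names."""
    target_dir = Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(str(zip_path)) as archive:
        archive.extractall(str(target_dir))
        return archive.namelist()
```

A typical call would be `extract_zip(download_file(CHECKPOINT_URL, Path("checkpoints_1226.zip")), Path("checkpoints"))`. Printing the returned member names is a quick way to confirm where the `config.json` and `checkpoint.pth` files ended up.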

|

| 60 |

+

|

| 61 |

+

## Usage

|

| 62 |

+

|

| 63 |

+

**1. Flexible Voice Style Control.**

|

| 64 |

+

Please see `demo_part1.ipynb` for an example of how OpenVoice enables flexible style control over the cloned voice.

|

| 65 |

+

|

| 66 |

+

**2. Cross-Lingual Voice Cloning.**

|

| 67 |

+

Please see `demo_part2.ipynb` for an example covering languages seen or unseen in the MSML (massive-speaker multi-lingual) training set.

|

| 68 |

+

|

| 69 |

+

**3. Advanced Usage.**

|

| 70 |

+

The base speaker model can be replaced with any model (in any language and style) that the user prefers. Please use the `se_extractor.get_se` function, as demonstrated in the demo, to extract the tone color embedding for the new base speaker.

|

| 71 |

+

|

| 72 |

+

**4. Tips to Generate Natural Speech.**

|

| 73 |

+

Many single- or multi-speaker TTS methods that generate natural speech are readily available. By simply replacing the base speaker model with the model you prefer, you can push the speech naturalness to the level you desire.

|

| 74 |

+

|

| 75 |

+

## Roadmap

|

| 76 |

+

|

| 77 |

+

- [x] Inference code

|

| 78 |

+

- [x] Tone color converter model

|

| 79 |

+

- [x] Multi-style base speaker model

|

| 80 |

+

- [x] Multi-style and multi-lingual demo

|

| 81 |

+

- [x] Base speaker model in other languages

|

| 82 |

+

- [x] EN base speaker model with better naturalness

|

| 83 |

+

|

| 84 |

+

|

| 85 |

+

## Citation

|

| 86 |

+

```

|

| 87 |

+

@article{qin2023openvoice,

|

| 88 |

+

title={OpenVoice: Versatile Instant Voice Cloning},

|

| 89 |

+

author={Qin, Zengyi and Zhao, Wenliang and Yu, Xumin and Sun, Xin},

|

| 90 |

+

journal={arXiv preprint arXiv:2312.01479},

|

| 91 |

+

year={2023}

|

| 92 |

+

}

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

## License

|

| 96 |

+

This repository is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which prohibits commercial usage. **MyShell reserves the ability to detect whether an audio clip was generated by OpenVoice**, regardless of whether a watermark has been added.

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

## Acknowledgements

|

| 100 |

+

This open-source implementation is based on several open-source projects: [TTS](https://github.com/coqui-ai/TTS), [VITS](https://github.com/jaywalnut310/vits), and [VITS2](https://github.com/daniilrobnikov/vits2). Thanks for their awesome work!

|

__pycache__/api.cpython-310.pyc

ADDED

|

Binary file (7.14 kB). View file

|

|

|

__pycache__/attentions.cpython-310.pyc

ADDED

|

Binary file (11.1 kB). View file

|

|

|

__pycache__/commons.cpython-310.pyc

ADDED

|

Binary file (5.68 kB). View file

|

|

|

__pycache__/mel_processing.cpython-310.pyc

ADDED

|

Binary file (4.12 kB). View file

|

|

|

__pycache__/models.cpython-310.pyc

ADDED

|

Binary file (12.6 kB). View file

|

|

|

__pycache__/modules.cpython-310.pyc

ADDED

|

Binary file (12.6 kB). View file

|

|

|

__pycache__/se_extractor.cpython-310.pyc

ADDED

|

Binary file (3.71 kB). View file

|

|

|

__pycache__/transforms.cpython-310.pyc

ADDED

|

Binary file (3.89 kB). View file

|

|

|

__pycache__/utils.cpython-310.pyc

ADDED

|

Binary file (6.17 kB). View file

|

|

|

api.py

ADDED

|

@@ -0,0 +1,201 @@

|

| 1 |

+

import torch

|

| 2 |

+

import numpy as np

|

| 3 |

+

import re

|

| 4 |

+

import soundfile

|

| 5 |

+

import utils

|

| 6 |

+

import commons

|

| 7 |

+

import os

|

| 8 |

+

import librosa

|

| 9 |

+

from text import text_to_sequence

|

| 10 |

+

from mel_processing import spectrogram_torch

|

| 11 |

+

from models import SynthesizerTrn

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

class OpenVoiceBaseClass(object):

|

| 15 |

+

def __init__(self,

|

| 16 |

+

config_path,

|

| 17 |

+

device='cuda:0'):

|

| 18 |

+

if 'cuda' in device:

|

| 19 |

+

assert torch.cuda.is_available()

|

| 20 |

+

|

| 21 |

+