
inference logic

- .gitignore +4 -1

- README.md +2 -0

- app.py +87 -0

- conditioning_images/conditioning_image_1.jpg +0 -0

- conditioning_images/conditioning_image_1_prompt.txt +1 -0

- conditioning_images/conditioning_image_1_raw.jpg +0 -0

- conditioning_images/conditioning_image_2.jpg +0 -0

- conditioning_images/conditioning_image_2_prompt.txt +1 -0

- conditioning_images/conditioning_image_2_raw.jpg +0 -0

- requirements.txt +5 -0

.gitignore

CHANGED

@@ -1,2 +1,5 @@

```diff
 
-.idea
+.idea
+
+venv
+.venv
```

README.md

CHANGED

@@ -7,6 +7,8 @@ sdk: gradio

```diff
 sdk_version: 3.27.0
 app_file: app.py
 pinned: false
+tags:
+- jax-diffusers-event
 ---
 
 Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
```
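The `tags:` block added above is a YAML list inside the README front matter that configures the Space. As a rough illustration of how those lines nest, the stdlib-only sketch below reads just the `key: value` and `- item` subset that appears in this README. `read_front_matter` is a hypothetical helper, not the Hub's own parser, and the sample text keeps only the fields visible in this diff; a real tool should use a proper YAML library.

```python
def read_front_matter(text: str) -> dict:
    """Parse the simple YAML subset used in a Space README front matter.

    Handles only "key: value" pairs and "- item" block lists; anything else
    (nested maps, quoted strings, comments) is out of scope for this sketch.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}  # no front matter block at the top of the file
    config: dict = {}
    list_key = None  # key whose block list is currently being filled
    for line in lines[1:]:
        stripped = line.strip()
        if stripped == "---":
            break  # closing delimiter ends the front matter
        if stripped.startswith("- ") and list_key is not None:
            config[list_key].append(stripped[2:].strip())
            continue
        key, sep, value = stripped.partition(":")
        if not sep:
            continue  # skip blank or unrecognized lines
        key, value = key.strip(), value.strip()
        if value:
            config[key] = value  # values stay strings, e.g. "false"
            list_key = None
        else:
            config[key] = []  # "tags:" with nothing after it opens a list
            list_key = key
    return config


readme = """---
sdk: gradio
sdk_version: 3.27.0
app_file: app.py
pinned: false
tags:
- jax-diffusers-event
---

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
"""
print(read_front_matter(readme)["tags"])  # ['jax-diffusers-event']
```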

app.py

ADDED

@@ -0,0 +1,87 @@

```python
from PIL import Image
import gradio as gr
from diffusers import StableDiffusionControlNetPipeline, ControlNetModel, UniPCMultistepScheduler
import torch

controlnet = ControlNetModel.from_pretrained("ioclab/control_v1p_sd15_brightness", torch_dtype=torch.float32, use_safetensors=True)


pipe = StableDiffusionControlNetPipeline.from_pretrained(
    "runwayml/stable-diffusion-v1-5", controlnet=controlnet, torch_dtype=torch.float32,
)

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

# pipe.enable_xformers_memory_efficient_attention()
pipe.enable_model_cpu_offload()


def infer(prompt, negative_prompt, num_inference_steps, conditioning_image):
    # conditioning_image = Image.open(conditioning_image)
    conditioning_image = Image.fromarray(conditioning_image)
    generator = torch.Generator(device="cpu").manual_seed(1500)

    output_image = pipe(
        prompt,
        conditioning_image,
        height=512,
        width=512,
        num_inference_steps=num_inference_steps,
        generator=generator,
        negative_prompt=negative_prompt,
        controlnet_conditioning_scale=1.0,
    ).images[0]

    return output_image

with gr.Blocks() as demo:
    gr.Markdown(
        """
    # ControlNet on Brightness

    This is a demo on ControlNet based on brightness.
    """)

    with gr.Row():
        with gr.Column():
            prompt = gr.Textbox(
                label="Prompt",
            )
            negative_prompt = gr.Textbox(
                label="Negative Prompt",
            )
            num_inference_steps = gr.Slider(
                10, 40, 20,
                step=1,
                label="Steps",
            )
            conditioning_image = gr.Image(
                label="Conditioning Image",
            )
            submit_btn = gr.Button(
                value="Submit",
                variant="primary"
            )
        with gr.Column(min_width=300):
            output = gr.Image(
                label="Result",
            )

    submit_btn.click(
        fn=infer,
        inputs=[
            prompt, negative_prompt, num_inference_steps, conditioning_image
        ],
        outputs=output
    )
    gr.Examples(
        examples=[
            ["a painting of a village in the mountains", "monochrome", "./conditioning_images/conditioning_image_1.jpg"],
            ["three people walking in an alleyway with hats and pants", "monochrome", "./conditioning_images/conditioning_image_2.jpg"],
        ],
        inputs=[
            prompt, negative_prompt, conditioning_image
        ],
    )

demo.launch()
```

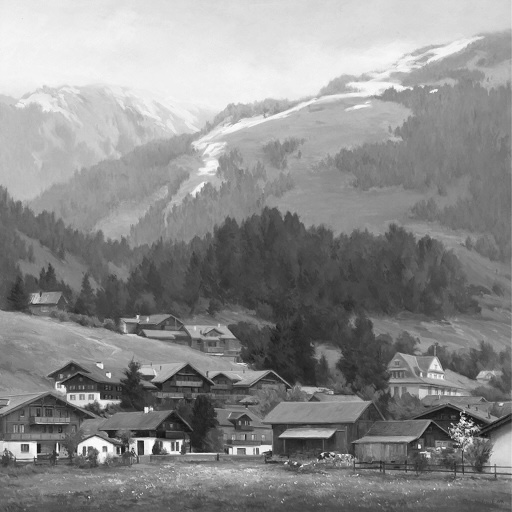

conditioning_images/conditioning_image_1.jpg

ADDED


conditioning_images/conditioning_image_1_prompt.txt

ADDED

@@ -0,0 +1 @@

```text
a painting of a village in the mountains
```

conditioning_images/conditioning_image_1_raw.jpg

ADDED


conditioning_images/conditioning_image_2.jpg

ADDED


conditioning_images/conditioning_image_2_prompt.txt

ADDED

@@ -0,0 +1 @@

```text
three people walking in an alleyway with hats and pants
```
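The commit stores each example prompt twice: once in a `conditioning_image_N_prompt.txt` file and once hard-coded in the `gr.Examples` table in `app.py`. A hypothetical `build_examples` helper could derive the table from the files instead, so the two cannot drift apart. This sketch assumes the `conditioning_image_N.jpg` / `conditioning_image_N_prompt.txt` naming used in this commit and the same fixed `"monochrome"` negative prompt.

```python
import re
from pathlib import Path

# Matches the naming used in this commit, e.g. "conditioning_image_1_prompt.txt".
PROMPT_FILE = re.compile(r"conditioning_image_(\d+)_prompt\.txt")


def build_examples(directory, negative_prompt: str = "monochrome") -> list:
    """Build gr.Examples rows of [prompt, negative_prompt, image_path]."""
    root = Path(directory)
    indexed = []
    for path in root.iterdir():
        match = PROMPT_FILE.fullmatch(path.name)
        if match:
            indexed.append((int(match.group(1)), path))
    rows = []
    for index, prompt_file in sorted(indexed):  # numeric order: 2 before 10
        image_file = root / f"conditioning_image_{index}.jpg"
        if not image_file.exists():
            continue  # skip prompts that have no matching conditioning image
        prompt = prompt_file.read_text(encoding="utf-8").strip()
        rows.append([prompt, negative_prompt, str(image_file)])
    return rows
```

With this helper, `gr.Examples(examples=build_examples("./conditioning_images"), inputs=[prompt, negative_prompt, conditioning_image])` would reproduce the two rows in `app.py`. Sorting on the numeric index keeps `conditioning_image_10` after `conditioning_image_2`, which a plain lexicographic sort would not.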

conditioning_images/conditioning_image_2_raw.jpg

ADDED
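Each example ships as a pair: a `_raw.jpg` and the `.jpg` that is actually passed to the ControlNet. The diff does not say how the conditioning image was produced. Assuming it is a grayscale brightness map of the raw photo (which the model name `control_v1p_sd15_brightness` suggests but does not confirm), the conversion amounts to a per-pixel luma. The pure-Python sketch below uses the ITU-R 601-2 weights that Pillow documents for mode `"L"`, expanded back to three channels because `gr.Image` hands `infer` RGB arrays by default. With Pillow the same idea is `Image.open(path).convert("L").convert("RGB")`; the integer truncation here can differ by one from Pillow's own rounding.

```python
def to_brightness_map(pixels):
    """Map rows of (R, G, B) tuples to rows of gray (L, L, L) tuples.

    L uses the ITU-R 601-2 luma weights Pillow documents for mode "L":
    L = R * 299/1000 + G * 587/1000 + B * 114/1000 (truncated to an int here).
    """
    result = []
    for row in pixels:
        gray_row = []
        for r, g, b in row:
            luma = (r * 299 + g * 587 + b * 114) // 1000
            gray_row.append((luma, luma, luma))  # keep 3 channels for an RGB pipeline
        result.append(gray_row)
    return result


print(to_brightness_map([[(255, 255, 255), (255, 0, 0)]]))
# [[(255, 255, 255), (76, 76, 76)]]
```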


requirements.txt

ADDED

@@ -0,0 +1,5 @@

```text
transformers
torch
safetensors
accelerate
git+https://github.com/huggingface/diffusers@main
```
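`requirements.txt` leaves every package unpinned and installs `diffusers` from the `main` branch, so a rebuild of the Space may pick up different versions than the last one did. A small startup check can at least confirm that each listed distribution is installed. The sketch below is hypothetical: `requirement_name` maps each line to a distribution name (taking the repository name for `git+` URLs, which happens to match for `diffusers` but is not guaranteed in general), and `missing_requirements` queries `importlib.metadata` for each one.

```python
import re
from importlib import metadata


def requirement_name(line: str):
    """Return the distribution name a requirements.txt line refers to, or None."""
    # pip treats "#" at line start, or after whitespace, as a comment.
    line = re.split(r"(?:^|\s)#", line, maxsplit=1)[0].strip()
    if not line:
        return None
    if line.startswith("git+"):
        # Assumption: the distribution is named after the repository, e.g.
        # git+https://github.com/huggingface/diffusers@main -> "diffusers".
        url = line.split("#", 1)[0]  # drop a "#egg=..." fragment if present
        last = url.rstrip("/").rsplit("/", 1)[-1]
        name = last.split("@", 1)[0]
        return name[:-4] if name.endswith(".git") else name
    # Plain specifiers such as "torch" or "torch>=2.0" -> "torch".
    return re.split(r"[<>=!~\[;\s]", line, maxsplit=1)[0]


def missing_requirements(lines):
    """List the requirement names that have no installed distribution."""
    missing = []
    for line in lines:
        name = requirement_name(line)
        if name is None:
            continue
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing
```

Called as `missing_requirements(open("requirements.txt").read().splitlines())` at startup, it would list anything the build failed to install before the much slower model downloads begin.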