cache

- .gitattributes +1 -0

- .gitignore +3 -1

- app.py +19 -4

- conditioning_images/conditioning_image_5.jpg +0 -0

.gitattributes

CHANGED

@@ -32,3 +32,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+*.png filter=lfs diff=lfs merge=lfs -text

.gitignore

CHANGED

@@ -2,4 +2,6 @@
 .idea
 
 venv
-.venv
+.venv
+
+gradio_cached_examples

app.py

CHANGED

|

@@ -7,7 +7,10 @@ torch.backends.cuda.matmul.allow_tf32 = True

|

|

| 7 |

controlnet = ControlNetModel.from_pretrained("ioclab/control_v1p_sd15_brightness", torch_dtype=torch.float16, use_safetensors=True)

|

| 8 |

|

| 9 |

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 10 |

-

"runwayml/stable-diffusion-v1-5",

|

|

|

|

|

|

|

|

|

|

| 11 |

)

|

| 12 |

|

| 13 |

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

|

|

@@ -16,7 +19,15 @@ pipe.enable_xformers_memory_efficient_attention()

|

|

| 16 |

pipe.enable_model_cpu_offload()

|

| 17 |

pipe.enable_attention_slicing()

|

| 18 |

|

| 19 |

-

def infer(

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 20 |

|

| 21 |

conditioning_image = Image.fromarray(conditioning_image)

|

| 22 |

conditioning_image = conditioning_image.convert('L')

|

|

@@ -109,12 +120,16 @@ with gr.Blocks() as demo:

|

|

| 109 |

examples=[

|

| 110 |

["a village in the mountains", "monochrome", "./conditioning_images/conditioning_image_1.jpg"],

|

| 111 |

["three people walking in an alleyway with hats and pants", "monochrome", "./conditioning_images/conditioning_image_2.jpg"],

|

| 112 |

-

["an anime character, natural skin", "monochrome", "./conditioning_images/conditioning_image_3.jpg"],

|

| 113 |

-

["white object standing on colorful ground", "monochrome", "./conditioning_images/conditioning_image_4.jpg"],

|

|

|

|

| 114 |

],

|

| 115 |

inputs=[

|

| 116 |

prompt, negative_prompt, conditioning_image

|

| 117 |

],

|

|

|

|

|

|

|

|

|

|

| 118 |

)

|

| 119 |

gr.Markdown(

|

| 120 |

"""

|

|

|

|

| 7 |

controlnet = ControlNetModel.from_pretrained("ioclab/control_v1p_sd15_brightness", torch_dtype=torch.float16, use_safetensors=True)

|

| 8 |

|

| 9 |

pipe = StableDiffusionControlNetPipeline.from_pretrained(

|

| 10 |

+

"runwayml/stable-diffusion-v1-5",

|

| 11 |

+

controlnet=controlnet,

|

| 12 |

+

torch_dtype=torch.float16,

|

| 13 |

+

safety_checker=None,

|

| 14 |

)

|

| 15 |

|

| 16 |

pipe.scheduler = UniPCMultistepScheduler.from_config(pipe.scheduler.config)

|

|

|

|

| 19 |

pipe.enable_model_cpu_offload()

|

| 20 |

pipe.enable_attention_slicing()

|

| 21 |

|

| 22 |

+

def infer(

|

| 23 |

+

prompt,

|

| 24 |

+

negative_prompt,

|

| 25 |

+

conditioning_image,

|

| 26 |

+

num_inference_steps=30,

|

| 27 |

+

size=768,

|

| 28 |

+

guidance_scale=7.0,

|

| 29 |

+

seed=-1,

|

| 30 |

+

):

|

| 31 |

|

| 32 |

conditioning_image = Image.fromarray(conditioning_image)

|

| 33 |

conditioning_image = conditioning_image.convert('L')

|

|

|

|

| 120 |

examples=[

|

| 121 |

["a village in the mountains", "monochrome", "./conditioning_images/conditioning_image_1.jpg"],

|

| 122 |

["three people walking in an alleyway with hats and pants", "monochrome", "./conditioning_images/conditioning_image_2.jpg"],

|

| 123 |

+

["an anime character, natural skin", "monochrome, blue skin, grayscale", "./conditioning_images/conditioning_image_3.jpg"],

|

| 124 |

+

["white object standing on colorful metal ground, soft_light, cray", "monochrome, grayscale", "./conditioning_images/conditioning_image_4.jpg"],

|

| 125 |

+

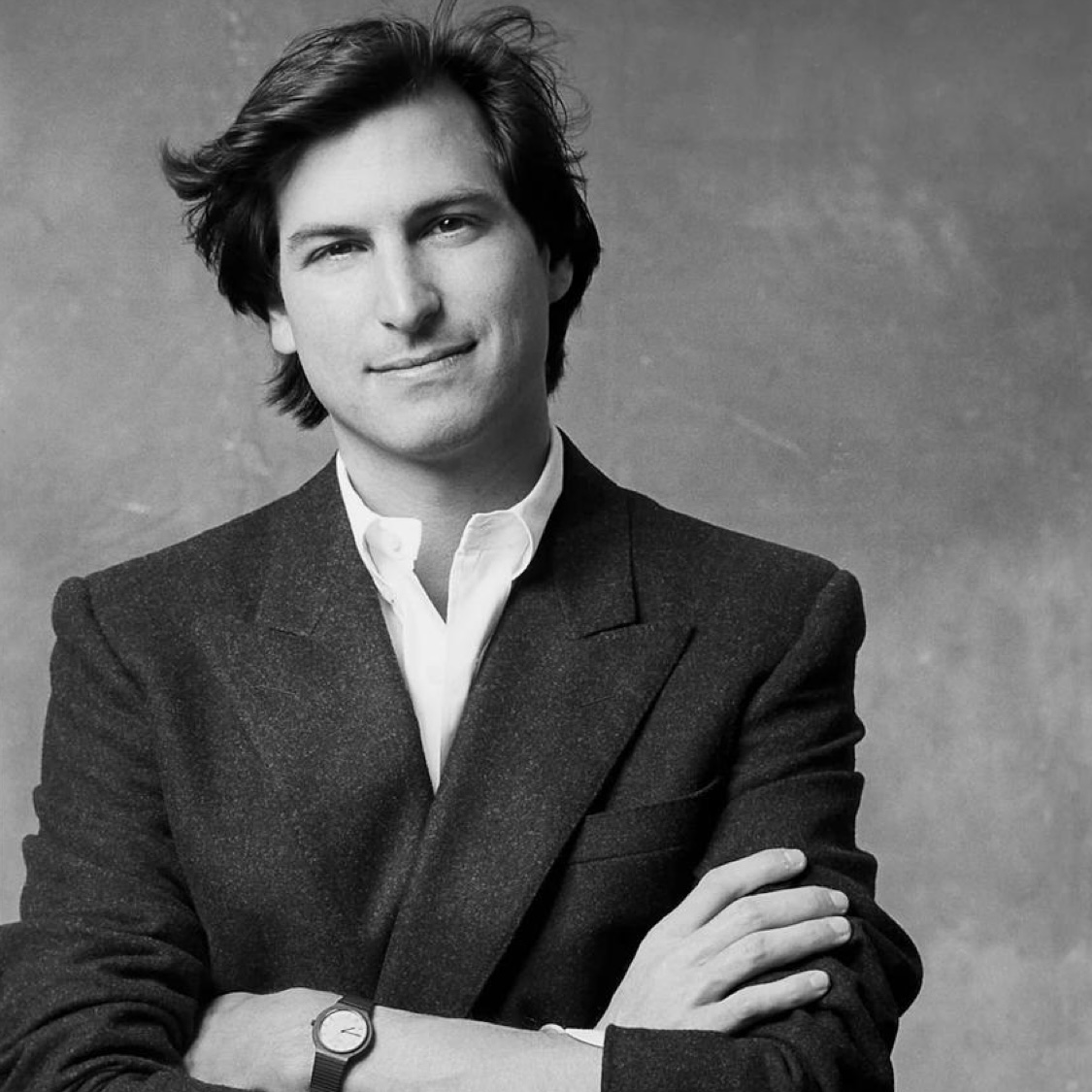

["a man in a black suit", "monochrome", "./conditioning_images/conditioning_image_5.jpg"],

|

| 126 |

],

|

| 127 |

inputs=[

|

| 128 |

prompt, negative_prompt, conditioning_image

|

| 129 |

],

|

| 130 |

+

outputs=output,

|

| 131 |

+

fn=infer,

|

| 132 |

+

cache_examples=True,

|

| 133 |

)

|

| 134 |

gr.Markdown(

|

| 135 |

"""

|

conditioning_images/conditioning_image_5.jpg

ADDED
