Commit 2319518 · committed by vlff李飞飞

1 Parent(s): 8d16531

update md

This view is limited to 50 files because it contains too many changes.

- .gitignore +24 -0

- .pre-commit-config.yaml +27 -0

- Dockerfile +14 -0

- LICENSE +53 -0

- README_CN.md +252 -0

- assets/screenshot-ci.png +0 -0

- assets/screenshot-editor-movie.png +0 -0

- assets/screenshot-multi-web-qa.png +0 -0

- assets/screenshot-pdf-qa.png +0 -0

- assets/screenshot-web-qa.png +0 -0

- assets/screenshot-writing.png +0 -0

- benchmark/README.md +248 -0

- benchmark/code_interpreter.py +250 -0

- benchmark/config.py +66 -0

- benchmark/inference_and_execute.py +280 -0

- benchmark/metrics/__init__.py +0 -0

- benchmark/metrics/code_execution.py +257 -0

- benchmark/metrics/gsm8k.py +54 -0

- benchmark/metrics/visualization.py +179 -0

- benchmark/models/__init__.py +4 -0

- benchmark/models/base.py +17 -0

- benchmark/models/dashscope.py +40 -0

- benchmark/models/llm.py +26 -0

- benchmark/models/qwen.py +36 -0

- benchmark/parser/__init__.py +2 -0

- benchmark/parser/internlm_parser.py +11 -0

- benchmark/parser/react_parser.py +46 -0

- benchmark/prompt/__init__.py +4 -0

- benchmark/prompt/internlm_react.py +103 -0

- benchmark/prompt/llama_react.py +20 -0

- benchmark/prompt/qwen_react.py +80 -0

- benchmark/prompt/react.py +87 -0

- benchmark/requirements.txt +13 -0

- benchmark/utils/__init__.py +0 -0

- benchmark/utils/code_utils.py +31 -0

- benchmark/utils/data_utils.py +28 -0

- browser_qwen/background.js +58 -0

- browser_qwen/img/copy.png +0 -0

- browser_qwen/img/logo.png +0 -0

- browser_qwen/img/popup.png +0 -0

- browser_qwen/manifest.json +45 -0

- browser_qwen/src/content.js +86 -0

- browser_qwen/src/popup.html +121 -0

- browser_qwen/src/popup.js +65 -0

- openai_api.py +564 -0

- qwen_agent/__init__.py +0 -0

- qwen_agent/actions/__init__.py +13 -0

- qwen_agent/actions/base.py +40 -0

- qwen_agent/actions/continue_writing.py +35 -0

- qwen_agent/actions/expand_writing.py +62 -0

.gitignore

ADDED

@@ -0,0 +1,24 @@
+env
+*.pyc
+__pycache__
+
+.idea
+.vscode
+.DS_Store
+
+qwen_agent/llm/gpt.py
+qwen_agent/llm/tools.py
+workspace/*
+
+benchmark/log/*
+benchmark/output_data/*
+benchmark/upload_file/*
+benchmark/upload_file_clean/*
+benchmark/eval_data/
+Qwen-Agent
+
+docqa/*
+log/*
+ai_builder/*
+qwen_agent.egg-info/*
+build/*

.pre-commit-config.yaml

ADDED

@@ -0,0 +1,27 @@
+repos:
+  - repo: https://github.com/pycqa/flake8.git
+    rev: 5.0.4
+    hooks:
+      - id: flake8
+        args: ["--max-line-length=300"]
+  - repo: https://github.com/PyCQA/isort.git
+    rev: 5.11.5
+    hooks:
+      - id: isort
+  - repo: https://github.com/pre-commit/mirrors-yapf.git
+    rev: v0.32.0
+    hooks:
+      - id: yapf
+  - repo: https://github.com/pre-commit/pre-commit-hooks.git
+    rev: v4.3.0
+    hooks:
+      - id: trailing-whitespace
+      - id: check-yaml
+      - id: end-of-file-fixer
+      - id: requirements-txt-fixer
+      - id: double-quote-string-fixer
+      - id: check-merge-conflict
+      - id: fix-encoding-pragma
+        args: ["--remove"]
+      - id: mixed-line-ending
+        args: ["--fix=lf"]

Dockerfile

ADDED

@@ -0,0 +1,14 @@
+# read the doc: https://huggingface.co/docs/hub/spaces-sdks-docker
+# you will also find guides on how best to write your Dockerfile
+
+FROM python:3.10
+
+WORKDIR /code
+
+COPY ./requirements.txt /code/requirements.txt
+
+RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt
+
+COPY . .
+
+CMD ["python", "run_server.py", "--llm", "Qwen/Qwen-1_8B-Chat", "--model_server", "http://127.0.0.1:7905/v1", "--server_host", "0.0.0.0", "--workstation_port", "7860"]

LICENSE

ADDED

@@ -0,0 +1,53 @@
+Tongyi Qianwen LICENSE AGREEMENT
+
+Tongyi Qianwen Release Date: August 3, 2023
+
+By clicking to agree or by using or distributing any portion or element of the Tongyi Qianwen Materials, you will be deemed to have recognized and accepted the content of this Agreement, which is effective immediately.
+
+1. Definitions
+    a. This Tongyi Qianwen LICENSE AGREEMENT (this "Agreement") shall mean the terms and conditions for use, reproduction, distribution and modification of the Materials as defined by this Agreement.
+    b. "We"(or "Us") shall mean Alibaba Cloud.
+    c. "You" (or "Your") shall mean a natural person or legal entity exercising the rights granted by this Agreement and/or using the Materials for any purpose and in any field of use.
+    d. "Third Parties" shall mean individuals or legal entities that are not under common control with Us or You.
+    e. "Tongyi Qianwen" shall mean the large language models (including Qwen model and Qwen-Chat model), and software and algorithms, consisting of trained model weights, parameters (including optimizer states), machine-learning model code, inference-enabling code, training-enabling code, fine-tuning enabling code and other elements of the foregoing distributed by Us.
+    f. "Materials" shall mean, collectively, Alibaba Cloud's proprietary Tongyi Qianwen and Documentation (and any portion thereof) made available under this Agreement.
+    g. "Source" form shall mean the preferred form for making modifications, including but not limited to model source code, documentation source, and configuration files.
+    h. "Object" form shall mean any form resulting from mechanical transformation or translation of a Source form, including but not limited to compiled object code, generated documentation,
+ and conversions to other media types.
+
+2. Grant of Rights
+You are granted a non-exclusive, worldwide, non-transferable and royalty-free limited license under Alibaba Cloud's intellectual property or other rights owned by Us embodied in the Materials to use, reproduce, distribute, copy, create derivative works of, and make modifications to the Materials.
+
+3. Redistribution
+You may reproduce and distribute copies of the Materials or derivative works thereof in any medium, with or without modifications, and in Source or Object form, provided that You meet the following conditions:
+    a. You shall give any other recipients of the Materials or derivative works a copy of this Agreement;
+    b. You shall cause any modified files to carry prominent notices stating that You changed the files;
+    c. You shall retain in all copies of the Materials that You distribute the following attribution notices within a "Notice" text file distributed as a part of such copies: "Tongyi Qianwen is licensed under the Tongyi Qianwen LICENSE AGREEMENT, Copyright (c) Alibaba Cloud. All Rights Reserved."; and
+    d. You may add Your own copyright statement to Your modifications and may provide additional or different license terms and conditions for use, reproduction, or distribution of Your modifications, or for any such derivative works as a whole, provided Your use, reproduction, and distribution of the work otherwise complies with the terms and conditions of this Agreement.
+
+4. Restrictions
+If you are commercially using the Materials, and your product or service has more than 100 million monthly active users, You shall request a license from Us. You cannot exercise your rights under this Agreement without our express authorization.
+
+5. Rules of use
+    a. The Materials may be subject to export controls or restrictions in China, the United States or other countries or regions. You shall comply with applicable laws and regulations in your use of the Materials.
+    b. You can not use the Materials or any output therefrom to improve any other large language model (excluding Tongyi Qianwen or derivative works thereof).
+
+6. Intellectual Property
+    a. We retain ownership of all intellectual property rights in and to the Materials and derivatives made by or for Us. Conditioned upon compliance with the terms and conditions of this Agreement, with respect to any derivative works and modifications of the Materials that are made by you, you are and will be the owner of such derivative works and modifications.
+    b. No trademark license is granted to use the trade names, trademarks, service marks, or product names of Us, except as required to fulfill notice requirements under this Agreement or as required for reasonable and customary use in describing and redistributing the Materials.
+    c. If you commence a lawsuit or other proceedings (including a cross-claim or counterclaim in a lawsuit) against Us or any entity alleging that the Materials or any output therefrom, or any part of the foregoing, infringe any intellectual property or other right owned or licensable by you, then all licences granted to you under this Agreement shall terminate as of the date such lawsuit or other proceeding is commenced or brought.
+
+7. Disclaimer of Warranty and Limitation of Liability
+
+    a. We are not obligated to support, update, provide training for, or develop any further version of the Tongyi Qianwen Materials or to grant any license thereto.
+    b. THE MATERIALS ARE PROVIDED "AS IS" WITHOUT ANY EXPRESS OR IMPLIED WARRANTY OF ANY KIND INCLUDING WARRANTIES OF MERCHANTABILITY, NONINFRINGEMENT, OR FITNESS FOR A PARTICULAR PURPOSE. WE MAKE NO WARRANTY AND ASSUME NO RESPONSIBILITY FOR THE SAFETY OR STABILITY OF THE MATERIALS AND ANY OUTPUT THEREFROM.
+    c. IN NO EVENT SHALL WE BE LIABLE TO YOU FOR ANY DAMAGES, INCLUDING, BUT NOT LIMITED TO ANY DIRECT, OR INDIRECT, SPECIAL OR CONSEQUENTIAL DAMAGES ARISING FROM YOUR USE OR INABILITY TO USE THE MATERIALS OR ANY OUTPUT OF IT, NO MATTER HOW IT’S CAUSED.
+    d. You will defend, indemnify and hold harmless Us from and against any claim by any third party arising out of or related to your use or distribution of the Materials.
+
+8. Survival and Termination.
+    a. The term of this Agreement shall commence upon your acceptance of this Agreement or access to the Materials and will continue in full force and effect until terminated in accordance with the terms and conditions herein.
+    b. We may terminate this Agreement if you breach any of the terms or conditions of this Agreement. Upon termination of this Agreement, you must delete and cease use of the Materials. Sections 7 and 9 shall survive the termination of this Agreement.
+
+9. Governing Law and Jurisdiction.
+    a. This Agreement and any dispute arising out of or relating to it will be governed by the laws of China, without regard to conflict of law principles, and the UN Convention on Contracts for the International Sale of Goods does not apply to this Agreement.
+    b. The People's Courts in Hangzhou City shall have exclusive jurisdiction over any dispute arising out of this Agreement.

README_CN.md

ADDED

|

@@ -0,0 +1,252 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: Qwen Agent

|

| 3 |

+

emoji: 📈

|

| 4 |

+

colorFrom: yellow

|

| 5 |

+

colorTo: purple

|

| 6 |

+

sdk: docker

|

| 7 |

+

pinned: false

|

| 8 |

+

license: apache-2.0

|

| 9 |

+

app_port: 7860

|

| 10 |

+

---

|

| 11 |

+

|

| 12 |

+

中文 | [English](./README.md)

|

| 13 |

+

|

| 14 |

+

<p align="center">

|

| 15 |

+

<img src="https://qianwen-res.oss-cn-beijing.aliyuncs.com/assets/qwen_agent/logo-qwen-agent.png" width="400"/>

|

| 16 |

+

<p>

|

| 17 |

+

<br>

|

| 18 |

+

|

| 19 |

+

Qwen-Agent是一个代码框架,用于发掘开源通义千问模型([Qwen](https://github.com/QwenLM/Qwen))的工具使用、规划、记忆能力。

|

| 20 |

+

在Qwen-Agent的基础上,我们开发了一个名为BrowserQwen的**Chrome浏览器扩展**,它具有以下主要功能:

|

| 21 |

+

|

| 22 |

+

- 与Qwen讨论当前网页或PDF文档的内容。

|

| 23 |

+

- 在获得您的授权后,BrowserQwen会记录您浏览过的网页和PDF/Word/PPT材料,以帮助您快速了解多个页面的内容,总结您浏览过的内容,并自动化繁琐的文字工作。

|

| 24 |

+

- 集成各种插件,包括可用于数学问题求解、数据分析与可视化、处理文件等的**代码解释器**(**Code Interpreter**)。

|

| 25 |

+

|

| 26 |

+

# 用例演示

|

| 27 |

+

|

| 28 |

+

如果您更喜欢观看视频,而不是效果截图,可以参见[视频演示](#视频演示)。

|

| 29 |

+

|

| 30 |

+

## 工作台 - 创作模式

|

| 31 |

+

|

| 32 |

+

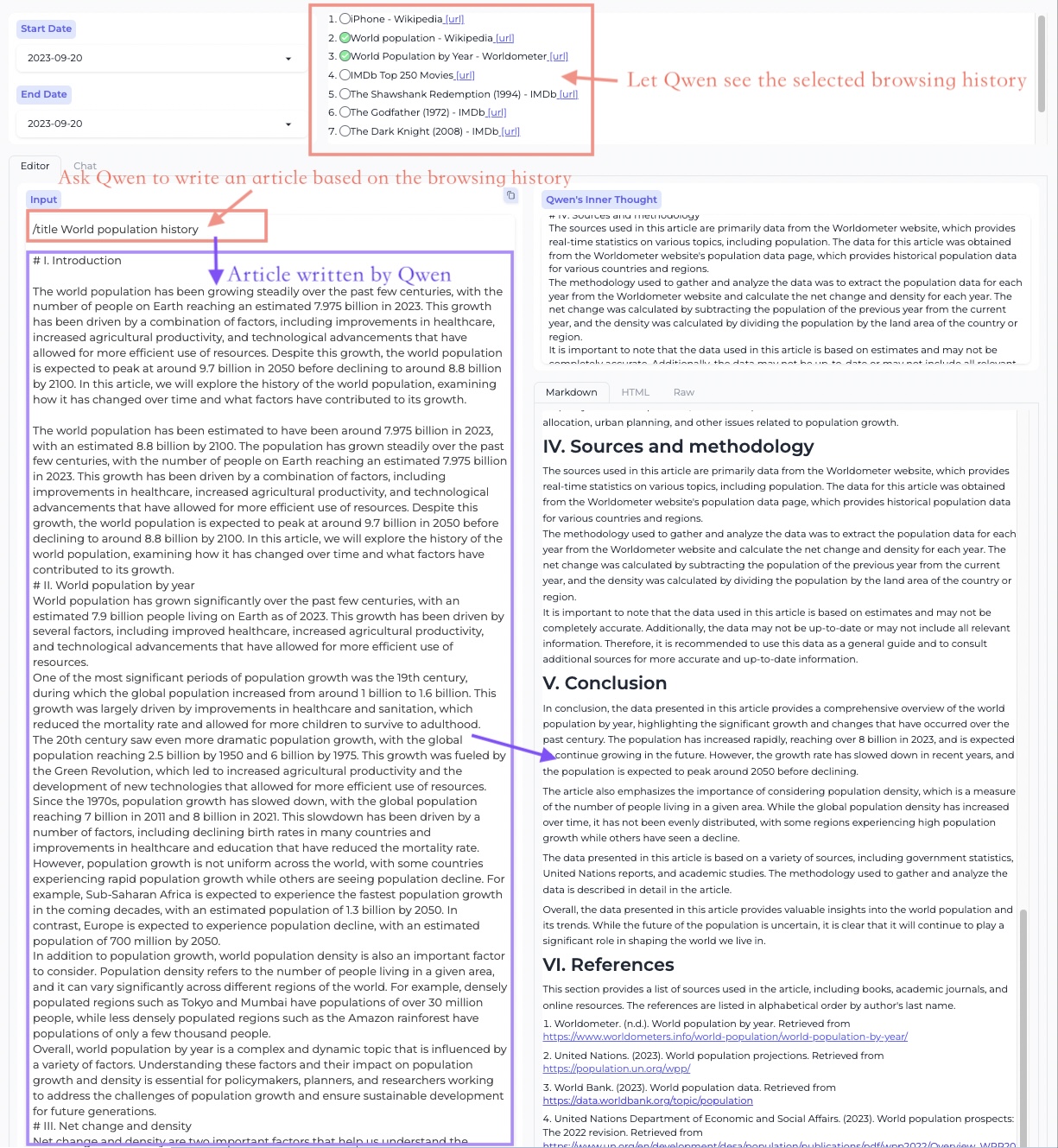

**根据浏览过的网页、PDFs素材进行长文创作**

|

| 33 |

+

|

| 34 |

+

<figure>

|

| 35 |

+

<img src="assets/screenshot-writing.png">

|

| 36 |

+

</figure>

|

| 37 |

+

|

| 38 |

+

**调用插件辅助富文本创作**

|

| 39 |

+

|

| 40 |

+

<figure>

|

| 41 |

+

<img src="assets/screenshot-editor-movie.png">

|

| 42 |

+

</figure>

|

| 43 |

+

|

| 44 |

+

## 工作台 - 对话模式

|

| 45 |

+

|

| 46 |

+

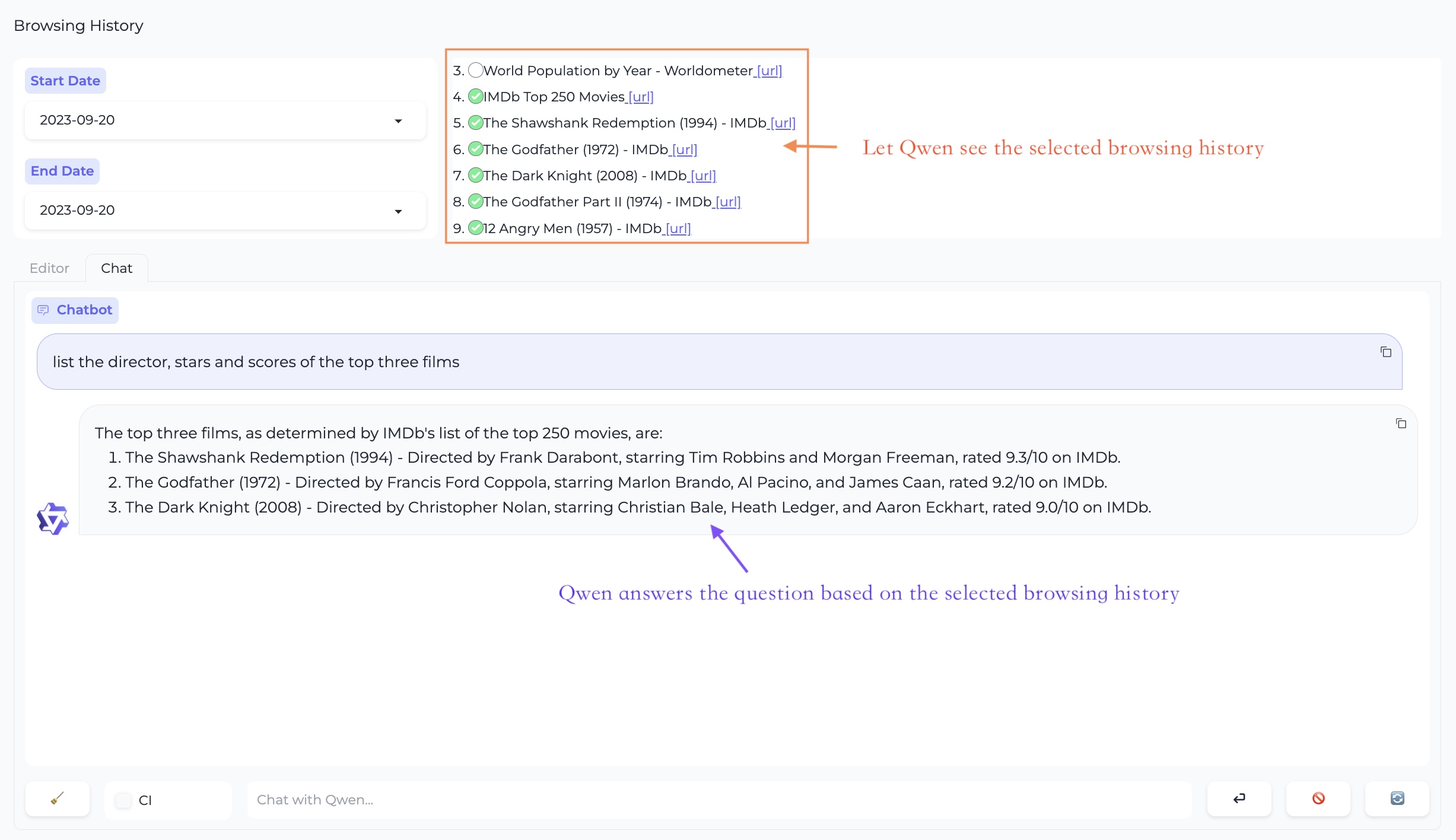

**多网页问答**

|

| 47 |

+

|

| 48 |

+

<figure >

|

| 49 |

+

<img src="assets/screenshot-multi-web-qa.png">

|

| 50 |

+

</figure>

|

| 51 |

+

|

| 52 |

+

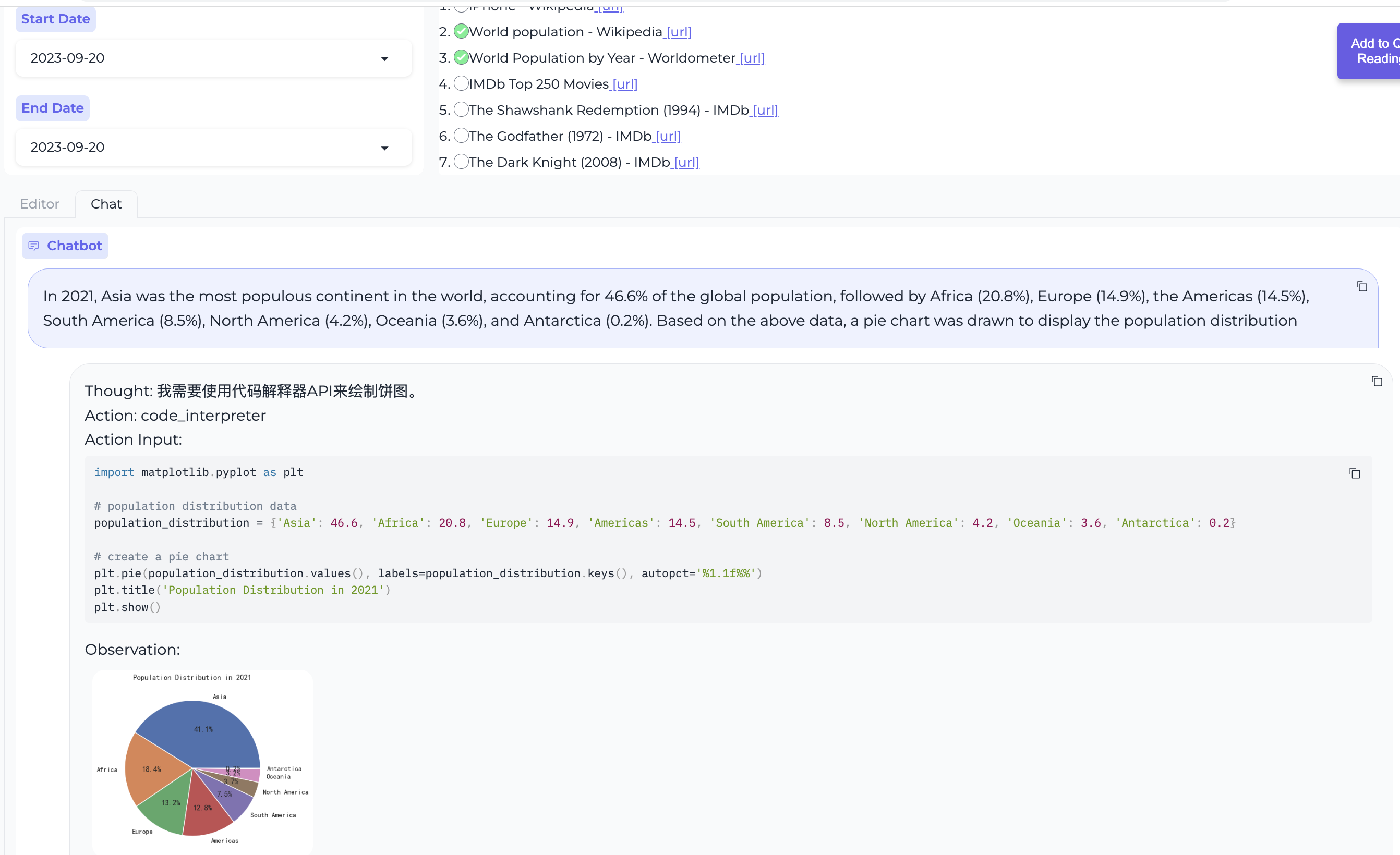

**使用代码解释器绘制数据图表**

|

| 53 |

+

|

| 54 |

+

<figure>

|

| 55 |

+

<img src="assets/screenshot-ci.png">

|

| 56 |

+

</figure>

|

| 57 |

+

|

| 58 |

+

## 浏览器助手

|

| 59 |

+

|

| 60 |

+

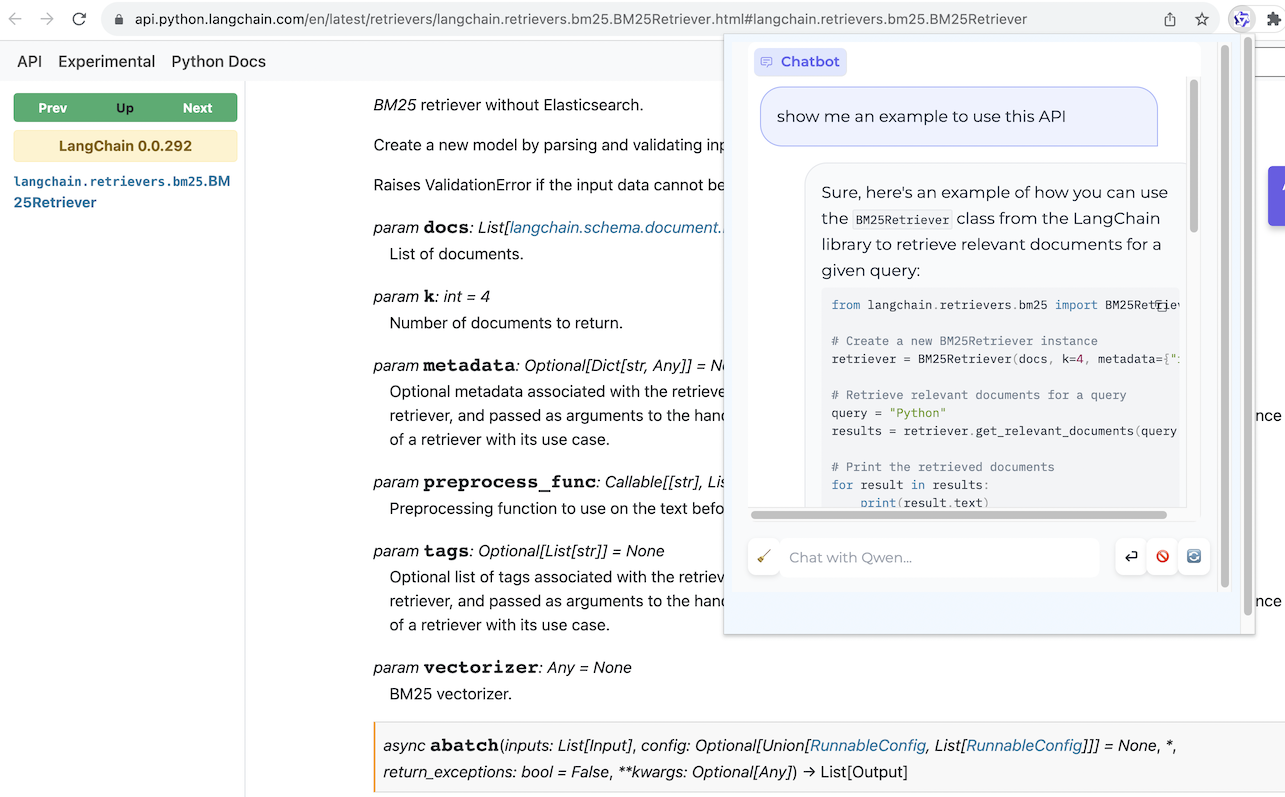

**网页问答**

|

| 61 |

+

|

| 62 |

+

<figure>

|

| 63 |

+

<img src="assets/screenshot-web-qa.png">

|

| 64 |

+

</figure>

|

| 65 |

+

|

| 66 |

+

**PDF文档问答**

|

| 67 |

+

|

| 68 |

+

<figure>

|

| 69 |

+

<img src="assets/screenshot-pdf-qa.png">

|

| 70 |

+

</figure>

|

| 71 |

+

|

| 72 |

+

# BrowserQwen 使用说明

|

| 73 |

+

|

| 74 |

+

支持环境:MacOS,Linux,Windows。

|

| 75 |

+

|

| 76 |

+

## 第一步 - 部署模型服务

|

| 77 |

+

|

| 78 |

+

***如果您正在使用阿里云提供的[DashScope](https://help.aliyun.com/zh/dashscope/developer-reference/quick-start)服务来访问Qwen系列模型,可以跳过这一步,直接到第二步。***

|

| 79 |

+

|

| 80 |

+

但如果您不想使用DashScope,而是希望自己部署一个模型服务。那么可以参考[Qwen项目](https://github.com/QwenLM/Qwen/blob/main/README_CN.md#api),部署一个兼容OpenAI API的模型服务:

|

| 81 |

+

|

| 82 |

+

```bash

|

| 83 |

+

# 安装依赖

|

| 84 |

+

git clone git@github.com:QwenLM/Qwen.git

|

| 85 |

+

cd Qwen

|

| 86 |

+

pip install -r requirements.txt

|

| 87 |

+

pip install fastapi uvicorn "openai<1.0.0" "pydantic>=2.3.0" sse_starlette

|

| 88 |

+

|

| 89 |

+

# 启动模型服务,通过 -c 参数指定模型版本

|

| 90 |

+

# - 指定 --server-name 0.0.0.0 将允许其他机器访问您的模型服务

|

| 91 |

+

# - 指定 --server-name 127.0.0.1 则只允许部署模型的机器自身访问该模型服务

|

| 92 |

+

python openai_api.py --server-name 0.0.0.0 --server-port 7905 -c Qwen/Qwen-14B-Chat

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

目前,我们支持指定-c参数以加载 [Qwen 的 Hugging Face主页](https://huggingface.co/Qwen) 上的模型,比如`Qwen/Qwen-1_8B-Chat`、`Qwen/Qwen-7B-Chat`、`Qwen/Qwen-14B-Chat`、`Qwen/Qwen-72B-Chat`,以及它们的`Int4`和`Int8`版本。

|

| 96 |

+

|
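As a rough illustration of what "compatible with the OpenAI API" means for a client, the sketch below builds a chat request against the Step 1 service using only the Python standard library. The base URL comes from the command in the README; the `/chat/completions` route and the response layout follow the usual OpenAI convention, and the model identifier passed in `model` is an assumption, since the README does not say which name the server expects. The helper names `build_chat_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: the Step 1 service listens here (host and port taken from the README command).
BASE_URL = "http://127.0.0.1:7905/v1"


def build_chat_request(prompt, model="Qwen/Qwen-14B-Chat", base_url=BASE_URL):
    """Build (without sending) a POST request for the OpenAI-style chat endpoint."""
    payload = {
        "model": model,  # assumed identifier; adjust to whatever the server reports
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the first reply; requires the service to be running."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes it possible to inspect the URL and payload before any model service is available.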

+## Step 2 - Deploy the Local Database Service
+
+In this step, you deploy, on your local machine (the one where you can open the Chrome browser), the database service that maintains your personal browsing history and conversation history.
+
+Before starting the database service for the first time, remember to install the dependencies:
+
+```bash
+# Install dependencies
+git clone https://github.com/QwenLM/Qwen-Agent.git
+cd Qwen-Agent
+pip install -r requirements.txt
+```
+
+If you skipped Step 1 because you plan to use the model service provided by DashScope, run the following commands to start the database service:
+
+```bash
+# Start the database service; use the --llm argument to specify the model to use via DashScope
+# The --llm argument can be one of the following, ordered from lowest to highest resource consumption:
+#   - qwen-7b-chat (the same model as the open-source Qwen-7B-Chat)
+#   - qwen-14b-chat (the same model as the open-source Qwen-14B-Chat)
+#   - qwen-turbo
+#   - qwen-plus
+# Replace YOUR_DASHSCOPE_API_KEY with your real API key.
+export DASHSCOPE_API_KEY=YOUR_DASHSCOPE_API_KEY
+python run_server.py --model_server dashscope --llm qwen-7b-chat --workstation_port 7864
+```
+
+If you are not using DashScope and have deployed your own model service as described in Step 1, run the following command:
+
+```bash
+# Start the database service; use the --model_server argument to point to the model service deployed in Step 1
+# - If the IP address of the Step 1 machine is 123.45.67.89, specify --model_server http://123.45.67.89:7905/v1
+# - If Step 1 and Step 2 run on the same machine, specify --model_server http://127.0.0.1:7905/v1
+python run_server.py --model_server http://{MODEL_SERVER_IP}:7905/v1 --workstation_port 7864
+```
+
+You can now visit [http://127.0.0.1:7864/](http://127.0.0.1:7864/) to use the Workstation's Editor mode and Chat mode.
+
+For tips on using the Workstation, refer to the instructions on the Workstation page or watch the [video demonstration](#video-demonstration).
+
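The two launch variants in Step 2 differ only in the value of `--model_server` and in whether `--llm` is passed. A minimal sketch of that choice follows; it only assembles the command line using the flags shown in the README, and the helper names `run_server_command` and `self_hosted_url` are hypothetical, not part of Qwen-Agent.

```python
import shlex


def self_hosted_url(ip, port=7905):
    """Format the base URL of a self-hosted Step 1 service (port from the README)."""
    return f"http://{ip}:{port}/v1"


def run_server_command(model_server, llm=None, workstation_port=7864):
    """Return the argv list for run_server.py, using only flags shown in the README."""
    argv = ["python", "run_server.py", "--model_server", model_server]
    if llm is not None:
        # --llm selects the model; the README passes it in the DashScope variant
        argv += ["--llm", llm]
    argv += ["--workstation_port", str(workstation_port)]
    return argv


# DashScope variant: DASHSCOPE_API_KEY must be exported before launching.
dashscope_cmd = run_server_command("dashscope", llm="qwen-7b-chat")
# Self-hosted variant: Step 1 and Step 2 run on the same machine.
local_cmd = run_server_command(self_hosted_url("127.0.0.1"))

print(shlex.join(dashscope_cmd))
print(shlex.join(local_cmd))
```

The resulting lists can be passed to `subprocess.run` from the Qwen-Agent checkout if launching from Python is preferred over the shell.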

+## Step 3 - Install the Browser Assistant
+
+Install the BrowserQwen Chrome plugin (also known as a Chrome extension):
+
+1. Open the Chrome browser, enter `chrome://extensions/` in the address bar, and press Enter;
+2. Make sure `Developer mode` in the top-right corner is turned on, then click `Load unpacked` to upload the `browser_qwen` directory from this project and enable it;
+3. Click the `Extensions` icon in the top-right corner of Chrome and pin BrowserQwen to the toolbar.
+
+Note that after installing the Chrome plugin, you need to refresh the page for the plugin to take effect.
+
+When you want Qwen to read the content of the current web page:
+
+1. First click the `Add to Qwen's Reading List` button on the screen to authorize Qwen to analyze the page in the background.
+2. Then click the Qwen icon in the extensions bar at the top-right corner of the browser to discuss the content of the current page with Qwen.
+
+## Video Demonstration
+
+Watch the following demonstration videos to learn the basic operations of BrowserQwen:
+
+- Write long-form articles based on browsed web pages and PDFs [video](https://qianwen-res.oss-cn-beijing.aliyuncs.com/assets/qwen_agent/showcase_write_article_based_on_webpages_and_pdfs.mp4)
+- Extract browsed content and plot it with the Code Interpreter [video](https://qianwen-res.oss-cn-beijing.aliyuncs.com/assets/qwen_agent/showcase_chat_with_docs_and_code_interpreter.mp4)
+- Upload files and analyze data with the Code Interpreter over a multi-turn conversation [video](https://qianwen-res.oss-cn-beijing.aliyuncs.com/assets/qwen_agent/showcase_code_interpreter_multi_turn_chat.mp4)
+
+# Evaluation Benchmark
+
+We have also open-sourced a benchmark for evaluating how well a model writes Python code and uses the Code Interpreter for math problem solving, data analysis, and other general tasks. The benchmark is in the [benchmark](benchmark/README.md) directory; the current results are as follows:
+
+<table>
+    <tr>
+        <th colspan="5" align="center">In-house Code Interpreter Benchmark (Version 20231206)</th>
+    </tr>
+    <tr>
+        <th rowspan="2" align="center">Model</th>
+        <th colspan="3" align="center">Accuracy of Code Execution Results (%)</th>
+        <th colspan="1" align="center">Executable Rate of Code (%)</th>
+    </tr>
+    <tr>
+        <th align="center">Math↑</th><th align="center">Visualization-Hard↑</th><th align="center">Visualization-Easy↑</th><th align="center">General↑</th>
+    </tr>
+    <tr>
+        <td>GPT-4</td>
+        <td align="center">82.8</td>
+        <td align="center">66.7</td>
+        <td align="center">60.8</td>
+        <td align="center">82.8</td>
+    </tr>
+    <tr>
+        <td>GPT-3.5</td>
+        <td align="center">47.3</td>
+        <td align="center">33.3</td>
+        <td align="center">55.7</td>
+        <td align="center">74.1</td>
+    </tr>
+    <tr>
+        <td>LLaMA2-13B-Chat</td>
+        <td align="center">8.3</td>
+        <td align="center">1.2</td>
+        <td align="center">15.2</td>
+        <td align="center">48.3</td>
+    </tr>
+    <tr>
+        <td>CodeLLaMA-13B-Instruct</td>
+        <td align="center">28.2</td>
+        <td align="center">15.5</td>
+        <td align="center">21.5</td>
+        <td align="center">74.1</td>
+    </tr>
+    <tr>
+        <td>InternLM-20B-Chat</td>
+        <td align="center">34.6</td>
+        <td align="center">10.7</td>
+        <td align="center">24.1</td>
+        <td align="center">65.5</td>
+    </tr>
+    <tr>
+        <td>ChatGLM3-6B</td>
+        <td align="center">54.2</td>
+        <td align="center">4.8</td>
+        <td align="center">15.2</td>
+        <td align="center">62.1</td>
+    </tr>
+    <tr>
+        <td>Qwen-1.8B-Chat</td>
+        <td align="center">25.6</td>
+        <td align="center">21.4</td>
+        <td align="center">22.8</td>
+        <td align="center">65.5</td>
+    </tr>
+    <tr>
+        <td>Qwen-7B-Chat</td>
+        <td align="center">41.9</td>
+        <td align="center">23.8</td>
+        <td align="center">38.0</td>
+        <td align="center">67.2</td>
+    </tr>
+    <tr>
+        <td>Qwen-14B-Chat</td>
+        <td align="center">58.4</td>
+        <td align="center">31.0</td>
+        <td align="center">45.6</td>
+        <td align="center">65.5</td>
+    </tr>
+    <tr>
+        <td>Qwen-72B-Chat</td>
+        <td align="center">72.7</td>
+        <td align="center">41.7</td>
+        <td align="center">43.0</td>
+        <td align="center">82.8</td>
+    </tr>
+</table>
+
+# Disclaimer
+
+This project is not an official product but a proof of concept that demonstrates the capabilities of the Qwen series models.
+
+> Important: The Code Interpreter is not sandboxed and executes code in the deployment environment. Avoid giving Qwen dangerous instructions, and never use this Code Interpreter directly in production.

assets/screenshot-ci.png

ADDED

assets/screenshot-editor-movie.png

ADDED

assets/screenshot-multi-web-qa.png

ADDED

assets/screenshot-pdf-qa.png

ADDED

assets/screenshot-web-qa.png

ADDED

assets/screenshot-writing.png

ADDED

benchmark/README.md

ADDED

@@ -0,0 +1,248 @@
+# Code Interpreter Benchmark
+
+## Introduction
+To assess an LLM's ability to use the Python Code Interpreter for tasks such as mathematical problem solving, data visualization, and other general-purpose tasks such as file handling and web scraping, we have created and open-sourced a benchmark specifically designed for evaluating these capabilities.
+
+### Metrics
+The metrics are divided into two parts: code executability and code correctness.
+- Code executability: evaluates whether the LLM-generated code can be executed.
+- Code correctness: evaluates whether the LLM-generated code produces correct results.
+
+### Domain
+When evaluating the accuracy of the code execution results for code correctness, we further divide it into two specific domains: `Math` and `Visualization`.
+In terms of code executability, we calculate the executable rate of the generated code for `General problem-solving`.
+
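To make the two metrics concrete, the sketch below computes an executable rate and an accuracy as plain percentages over per-sample flags. It is an illustration only: the `Sample` record, the domain labels, and the toy data are assumptions, and the benchmark's own scripts may score samples differently.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    domain: str     # assumed labels: "math", "visualization", "general"
    executed: bool  # the generated code ran without raising an error
    correct: bool   # the execution result was judged correct


def by_domain(samples, domain):
    """Keep only the samples that belong to one domain."""
    return [s for s in samples if s.domain == domain]


def executable_rate(samples):
    """Percentage of samples whose generated code executed."""
    if not samples:
        raise ValueError("no samples to score")
    return 100.0 * sum(s.executed for s in samples) / len(samples)


def accuracy(samples):
    """Percentage of samples whose execution result was judged correct."""
    if not samples:
        raise ValueError("no samples to score")
    return 100.0 * sum(s.correct for s in samples) / len(samples)


# Toy data, invented for illustration only.
samples = [
    Sample("math", executed=True, correct=True),
    Sample("math", executed=True, correct=False),
    Sample("general", executed=True, correct=False),
    Sample("general", executed=False, correct=False),
]

math_accuracy = accuracy(by_domain(samples, "math"))
general_executable = executable_rate(by_domain(samples, "general"))
```

Scoring each domain separately mirrors the split described above: accuracy is reported for `Math` and `Visualization`, while the executable rate is reported for `General problem-solving`.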

| 15 |

+

## Results

|

| 16 |

+

- Qwen-7B-Chat refers to the version updated after September 25, 2023.

|

| 17 |

+

- The code correctness judger model for `Visualization` has changed from `Qwen-vl-chat` to `gpt-4-vision-preview` in the version 20231206.

|

| 18 |

+

|

| 19 |

+

<table>

|

| 20 |

+

<tr>

|

| 21 |

+

<th colspan="5" align="center">In-house Code Interpreter Benchmark (Version 20231206)</th>

|

| 22 |

+

</tr>

|

| 23 |

+

<tr>

|

| 24 |

+

<th rowspan="2" align="center">Model</th>

|

| 25 |

+

<th colspan="3" align="center">Accuracy of Code Execution Results (%)</th>

|

| 26 |

+

<th colspan="1" align="center">Executable Rate of Code (%)</th>

|

| 27 |

+

</tr>

|

| 28 |

+

<tr>

|

| 29 |

+

<th align="center">Math↑</th><th align="center">Visualization-Hard↑</th><th align="center">Visualization-Easy↑</th><th align="center">General↑</th>

|

| 30 |

+

</tr>

|

| 31 |

+

<tr>

|

| 32 |

+

<td>GPT-4</td>

|

| 33 |

+

<td align="center">82.8</td>

|

| 34 |

+

<td align="center">66.7</td>

|

| 35 |

+

<td align="center">60.8</td>

|

| 36 |

+

<td align="center">82.8</td>

|

| 37 |

+

</tr>

|

| 38 |

+

<tr>

|

| 39 |

+

<td>GPT-3.5</td>

|

| 40 |

+

<td align="center">47.3</td>

|

| 41 |

+

<td align="center">33.3</td>

|

| 42 |

+

<td align="center">55.7</td>

|

| 43 |

+

<td align="center">74.1</td>

|

| 44 |

+

</tr>

|

| 45 |

+

<tr>

|

| 46 |

+

<td>LLaMA2-13B-Chat</td>

|

| 47 |

+

<td align="center">8.3</td>

|

| 48 |

+

<td align="center">1.2</td>

|

| 49 |

+

<td align="center">15.2</td>

|

| 50 |

+

<td align="center">48.3</td>

|

| 51 |

+

</tr>

|

| 52 |

+

<tr>

|

| 53 |

+

<td>CodeLLaMA-13B-Instruct</td>

|

| 54 |

+

<td align="center">28.2</td>

|

| 55 |

+

<td align="center">15.5</td>

|

| 56 |

+

<td align="center">21.5</td>

|

| 57 |

+

<td align="center">74.1</td>

|

| 58 |

+

</tr>

|

| 59 |

+

<tr>

|

| 60 |

+

<td>InternLM-20B-Chat</td>

|

| 61 |

+

<td align="center">34.6</td>

|

| 62 |

+

<td align="center">10.7</td>

|

| 63 |

+

<td align="center">24.1</td>

|

| 64 |

+

<td align="center">65.5</td>

|

| 65 |

+

</tr>

|

| 66 |

+

<tr>

|

| 67 |

+

<td>ChatGLM3-6B</td>

|

| 68 |

+

<td align="center">54.2</td>

|

| 69 |

+

<td align="center">4.8</td>

|

| 70 |

+

<td align="center">15.2</td>

|

| 71 |

+

<td align="center">62.1</td>

|

| 72 |

+

</tr>

|

| 73 |

+

<tr>

|

| 74 |

+

<td>Qwen-1.8B-Chat</td>

|

| 75 |

+

<td align="center">25.6</td>

|

| 76 |

+

<td align="center">21.4</td>

|

| 77 |

+

<td align="center">22.8</td>

|

| 78 |

+

<td align="center">65.5</td>

|

| 79 |

+

</tr>

|

| 80 |

+

<tr>

|

| 81 |

+

<td>Qwen-7B-Chat</td>

|

| 82 |

+

<td align="center">41.9</td>

|

| 83 |

+

<td align="center">23.8</td>

|

| 84 |

+

<td align="center">38.0</td>

|

| 85 |

+

<td align="center">67.2</td>

|

| 86 |

+

</tr>

|

| 87 |

+

<tr>

|

| 88 |

+

<td>Qwen-14B-Chat</td>

|

| 89 |

+

<td align="center">58.4</td>

|

| 90 |

+

<td align="center">31.0</td>

|

| 91 |

+

<td align="center">45.6</td>

|

| 92 |

+

<td align="center">65.5</td>

|

| 93 |

+

</tr>

|

| 94 |

+

<tr>

|

| 95 |

+

<td>Qwen-72B-Chat</td>

|

| 96 |

+

<td align="center">72.7</td>

|

| 97 |

+

<td align="center">41.7</td>

|

| 98 |

+

<td align="center">43.0</td>

|

| 99 |

+

<td align="center">82.8</td>

|

| 100 |

+

</tr>

|

| 101 |

+

</table>

|

| 102 |

+

|

| 103 |

+

For reference, we also report results for the `Visualization` task with `Qwen-vl-plus` as the code correctness judger model.

|

| 104 |

+

|

| 105 |

+

<table>

|

| 106 |

+

<tr>

|

| 107 |

+

<th colspan="3" align="center">Code Correctness Judger Model = Qwen-vl-plus</th>

|

| 108 |

+

</tr>

|

| 109 |

+

<tr>

|

| 110 |

+

<th rowspan="2" align="center">Model</th>

|

| 111 |

+

<th colspan="2" align="center">Accuracy of Code Execution Results (%)</th>

|

| 112 |

+

</tr>

|

| 113 |

+

<tr>

|

| 114 |

+

<th align="center">Visualization-Hard↑</th>

|

| 115 |

+

<th align="center">Visualization-Easy↑</th>

|

| 116 |

+

</tr>

|

| 117 |

+

<tr>

|

| 118 |

+

<td>LLaMA2-13B-Chat</td>

|

| 119 |

+

<td align="center">2.4</td>

|

| 120 |

+

<td align="center">17.7</td>

|

| 121 |

+

</tr>

|

| 122 |

+

<tr>

|

| 123 |

+

<td>CodeLLaMA-13B-Instruct</td>

|

| 124 |

+

<td align="center">17.9</td>

|

| 125 |

+

<td align="center">34.2</td>

|

| 126 |

+

</tr>

|

| 127 |

+

<tr>

|

| 128 |

+

<td>InternLM-20B-Chat</td>

|

| 129 |

+

<td align="center">9.5</td>

|

| 130 |

+

<td align="center">31.7</td>

|

| 131 |

+

</tr>

|

| 132 |

+

<tr>

|

| 133 |

+

<td>ChatGLM3-6B</td>

|

| 134 |

+

<td align="center">10.7</td>

|

| 135 |

+

<td align="center">29.1</td>

|

| 136 |

+

</tr>

|

| 137 |

+

<tr>

|

| 138 |

+

<td>Qwen-1.8B-Chat</td>

|

| 139 |

+

<td align="center">32.1</td>

|

| 140 |

+

<td align="center">32.9</td>

|

| 141 |

+

</tr>

|

| 142 |

+

<tr>

|

| 143 |

+

<td>Qwen-7B-Chat</td>

|

| 144 |

+

<td align="center">26.2</td>

|

| 145 |

+

<td align="center">39.2</td>

|

| 146 |

+

</tr>

|

| 147 |

+

<tr>

|

| 148 |

+

<td>Qwen-14B-Chat</td>

|

| 149 |

+

<td align="center">36.9</td>

|

| 150 |

+

<td align="center">41.8</td>

|

| 151 |

+

</tr>

|

| 152 |

+

<tr>

|

| 153 |

+

<td>Qwen-72B-Chat</td>

|

| 154 |

+

<td align="center">38.1</td>

|

| 155 |

+

<td align="center">38.0</td>

|

| 156 |

+

</tr>

|

| 157 |

+

</table>

|

| 158 |

+

|

| 159 |

+

|

| 160 |

+

|

| 161 |

+

## Usage

|

| 162 |

+

|

| 163 |

+

### Installation

|

| 164 |

+

|

| 165 |

+

```shell

|

| 166 |

+

git clone https://github.com/QwenLM/Qwen-Agent.git

|

| 167 |

+

cd Qwen-Agent/benchmark

|

| 168 |

+

pip install -r requirements.txt

|

| 169 |

+

```

|

| 170 |

+

|

| 171 |

+

### Dataset Download

|

| 172 |

+

```shell

|

| 173 |

+

cd benchmark

|

| 174 |

+

wget https://qianwen-res.oss-cn-beijing.aliyuncs.com/assets/qwen_agent/benchmark_code_interpreter_data.zip

|

| 175 |

+

unzip benchmark_code_interpreter_data.zip

|

| 176 |

+

mkdir eval_data

|

| 177 |

+

mv eval_code_interpreter_v1.jsonl eval_data/

|

| 178 |

+

```

|

| 179 |

+

|

| 180 |

+

### Evaluation

|

| 181 |

+

To reproduce the full benchmark results, run the following script:

|

| 182 |

+

|

| 183 |

+

```Shell

|

| 184 |

+

python inference_and_execute.py --model {model_name}

|

| 185 |

+

```

|

| 186 |

+

|

| 187 |

+

{model_name}:

|

| 188 |

+

- qwen-1.8b-chat

|

| 189 |

+

- qwen-7b-chat

|

| 190 |

+

- qwen-14b-chat

|

| 191 |

+

- qwen-72b-chat

|

| 192 |

+

- llama-2-7b-chat

|

| 193 |

+

- llama-2-13b-chat

|

| 194 |

+

- codellama-7b-instruct

|

| 195 |

+

- codellama-13b-instruct

|

| 196 |

+

- internlm-7b-chat-1.1

|

| 197 |

+

- internlm-20b-chat

|

| 198 |

+

|

| 199 |

+

The benchmark will run the test cases and generate the performance results. The results will be saved in the `output_data` directory.
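To evaluate several models in one go, the command above can be wrapped in a small driver. The following is a minimal sketch, not part of the benchmark itself: `build_command` and `run_all` are hypothetical helper names, and it assumes the script is launched from the `benchmark` directory with the same Python interpreter.

```python
import subprocess
import sys

# Model names copied from the {model_name} list above.
MODELS = [
    "qwen-1.8b-chat", "qwen-7b-chat", "qwen-14b-chat", "qwen-72b-chat",
    "llama-2-7b-chat", "llama-2-13b-chat",
    "codellama-7b-instruct", "codellama-13b-instruct",
    "internlm-7b-chat-1.1", "internlm-20b-chat",
]


def build_command(model, task=None):
    """Return the argv list for one benchmark run (hypothetical helper)."""
    cmd = [sys.executable, "inference_and_execute.py", "--model", model]
    if task is not None:
        cmd += ["--task", task]
    return cmd


def run_all(models, task=None, dry_run=True):
    """Run one command per model; with dry_run=True only print the commands."""
    commands = [build_command(m, task) for m in models]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops the loop at the first failing run.
            subprocess.run(cmd, check=True)
    return commands
```

Calling `run_all(MODELS)` prints the ten commands without executing anything; pass `dry_run=False` to launch the runs one after another.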

|

| 200 |

+

|

| 201 |

+

**Notes**:

|

| 202 |

+

Please install the `simhei.ttf` font so that matplotlib renders text correctly when evaluating the visualization task. To do so, obtain `simhei.ttf` (it can be found on any Windows PC) and run the following code snippet:

|

| 203 |

+

```python

|

| 204 |

+

import os

|

| 205 |

+

import matplotlib

|

| 206 |

+

target_font_path = os.path.join(

|

| 207 |

+

os.path.abspath(

|

| 208 |

+

os.path.join(matplotlib.matplotlib_fname(), os.path.pardir)),

|

| 209 |

+

'fonts', 'ttf', 'simhei.ttf')

|

| 210 |

+

os.system(f'cp simhei.ttf {target_font_path}')

|

| 211 |

+

font_list_cache = os.path.join(matplotlib.get_cachedir(), 'fontlist-*.json')

|

| 212 |

+

os.system(f'rm -f {font_list_cache}')

|

| 213 |

+

```
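The snippet above shells out to `cp` and `rm`, so it assumes a Unix-like shell. As a hedged alternative, the same two steps (copy the font, clear the font-list cache) can be sketched with the standard library only. `install_font` is a hypothetical helper name, and the directories are passed in explicitly so the function does not depend on matplotlib being importable.

```python
import glob
import os
import shutil


def install_font(font_file, fonts_dir, cache_dir):
    """Copy font_file into fonts_dir and delete fontlist-*.json in cache_dir.

    Returns the path of the copied font and the list of removed cache files.
    """
    os.makedirs(fonts_dir, exist_ok=True)
    target = os.path.join(fonts_dir, os.path.basename(font_file))
    shutil.copyfile(font_file, target)

    removed = []
    for cache in glob.glob(os.path.join(cache_dir, "fontlist-*.json")):
        os.remove(cache)
        removed.append(cache)
    return target, removed


# Usage with matplotlib (same paths as the snippet above):
#   import matplotlib
#   fonts_dir = os.path.join(
#       os.path.dirname(matplotlib.matplotlib_fname()), "fonts", "ttf")
#   install_font("simhei.ttf", fonts_dir, matplotlib.get_cachedir())
```

Removing the cached font list makes matplotlib rebuild it on the next import, which is when the newly copied font is picked up.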

|

| 214 |

+

|

| 215 |

+

#### Code Executable Rate

|

| 216 |

+

```Shell

|

| 217 |

+

python inference_and_execute.py --task {task_name} --model {model_name}

|

| 218 |

+

```

|

| 219 |

+

|

| 220 |

+

{task_name}:

|

| 221 |

+

- `general`: General problem-solving task

|

| 222 |

+

|

| 223 |

+

|

| 224 |

+

#### Code Correctness Rate

|

| 225 |

+

```Shell

|

| 226 |

+

python inference_and_execute.py --task {task_name} --model {model_name}

|

| 227 |

+

```

|

| 228 |

+

|

| 229 |

+

{task_name}:

|

| 230 |

+

- `visualization`: Visualization task

|

| 231 |

+

- `gsm8k`: Math task

|

| 232 |

+

|

| 233 |

+

|

| 234 |

+

## Configuration

|

| 235 |

+

The `inference_and_execute.py` script accepts the following options:

|

| 236 |

+

|

| 237 |

+

- `--model`: The model to test, one of `qwen-72b-chat`, `qwen-14b-chat`, `qwen-7b-chat`, `qwen-1.8b-chat`, `llama-2-7b-chat`, `llama-2-13b-chat`, `codellama-7b-instruct`, `codellama-13b-instruct`, `internlm-7b-chat-1.1`, `internlm-20b-chat`.

|

| 238 |

+

- `--task`: The test task which can be one of `all`, `visualization`, `general`, `gsm8k`.

|

| 239 |

+

- `--output-path`: The path for saving evaluation results.

|

| 240 |

+

- `--input-path`: The path for placing evaluation data.

|

| 241 |

+

- `--output-fname`: The file name for evaluation results.

|

| 242 |

+

- `--input-fname`: The file name for evaluation data.

|

| 243 |

+

- `--force`: Force regeneration, overwriting any cached results.

|

| 244 |

+

- `--eval-only`: Only calculate evaluation metrics without re-inference.

|

| 245 |

+

- `--eval-code-exec-only`: Only evaluate the code executable rate.

|

| 246 |

+

- `--gen-exec-only`: Only generate and execute code, without calculating evaluation metrics.

|

| 247 |

+

- `--gen-only`: Only generate code, without executing it or calculating evaluation metrics.

|

| 248 |

+

- `--vis-judger`: The model that judges result correctness for the `Visualization` task, one of `gpt-4-vision-preview`, `qwen-vl-chat`, `qwen-vl-plus`. As of version 20231206 the default is `gpt-4-vision-preview`, and `qwen-vl-chat` is deprecated.
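The options above map naturally onto an `argparse` parser. The sketch below is an illustration only and is not taken from `inference_and_execute.py`: the function name `build_parser` is hypothetical, and every default except the `--vis-judger` one (which the list above states) is an assumption inferred from the directory and file names used in this README.

```python
import argparse

MODELS = [
    "qwen-72b-chat", "qwen-14b-chat", "qwen-7b-chat", "qwen-1.8b-chat",
    "llama-2-7b-chat", "llama-2-13b-chat",
    "codellama-7b-instruct", "codellama-13b-instruct",
    "internlm-7b-chat-1.1", "internlm-20b-chat",
]
TASKS = ["all", "visualization", "general", "gsm8k"]
VIS_JUDGERS = ["gpt-4-vision-preview", "qwen-vl-chat", "qwen-vl-plus"]


def build_parser():
    """Build a parser mirroring the documented options (illustrative sketch)."""
    p = argparse.ArgumentParser(description="Code interpreter benchmark (sketch)")
    p.add_argument("--model", choices=MODELS, required=True)
    # Assumed default: running with only --model evaluates every task.
    p.add_argument("--task", choices=TASKS, default="all")
    # Assumed defaults, taken from the paths mentioned in this README.
    p.add_argument("--output-path", default="output_data")
    p.add_argument("--input-path", default="eval_data")
    p.add_argument("--output-fname", default="")
    p.add_argument("--input-fname", default="eval_code_interpreter_v1.jsonl")
    # Boolean switches: absent means False.
    for flag in ("--force", "--eval-only", "--eval-code-exec-only",
                 "--gen-exec-only", "--gen-only"):
        p.add_argument(flag, action="store_true")
    # Default stated in the option list above.
    p.add_argument("--vis-judger", choices=VIS_JUDGERS,
                   default="gpt-4-vision-preview")
    return p
```

For example, `build_parser().parse_args(["--model", "qwen-7b-chat", "--task", "gsm8k", "--eval-only"])` yields a namespace with `eval_only` set and all other switches left off.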

|

benchmark/code_interpreter.py

ADDED

|

@@ -0,0 +1,250 @@

|

| 1 |

+

import base64

|

| 2 |

+

import io

|

| 3 |

+

import json

|

| 4 |

+

import logging

|

| 5 |

+

import os

|

| 6 |

+

import queue

|

| 7 |

+

import re

|

| 8 |

+

import subprocess

|

| 9 |

+

import sys

|

| 10 |

+

import time

|

| 11 |

+

import traceback

|

| 12 |

+

import uuid

|

| 13 |

+

|

| 14 |

+

import matplotlib

|

| 15 |

+

import PIL.Image

|

| 16 |

+

from jupyter_client import BlockingKernelClient

|

| 17 |

+

from utils.code_utils import extract_code

|

| 18 |

+

|

| 19 |

+

WORK_DIR = os.getenv('CODE_INTERPRETER_WORK_DIR', '/tmp/workspace')

|

| 20 |

+

|

| 21 |

+

LAUNCH_KERNEL_PY = """

|

| 22 |

+

from ipykernel import kernelapp as app

|

| 23 |

+

app.launch_new_instance()

|

| 24 |

+

"""

|

| 25 |

+

|

| 26 |

+

_KERNEL_CLIENTS = {}

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

# Run this fix before jupyter starts if matplotlib cannot render CJK fonts.

|

| 30 |

+

# And we need to additionally run the following lines in the jupyter notebook.

|

| 31 |

+

# ```python

|

| 32 |

+

# import matplotlib.pyplot as plt

|

| 33 |

+

# plt.rcParams['font.sans-serif'] = ['SimHei']

|

| 34 |

+

# plt.rcParams['axes.unicode_minus'] = False

|

| 35 |

+

# ```

|

| 36 |

+

def fix_matplotlib_cjk_font_issue():

|

| 37 |

+

local_ttf = os.path.join(

|

| 38 |

+

os.path.abspath(

|

| 39 |

+

os.path.join(matplotlib.matplotlib_fname(), os.path.pardir)),

|

| 40 |

+

'fonts', 'ttf', 'simhei.ttf')

|

| 41 |

+

if not os.path.exists(local_ttf):

|

| 42 |

+

logging.warning(

|

| 43 |

+

f'Missing font file `{local_ttf}` for matplotlib. It may cause some error when using matplotlib.'

|

| 44 |

+

)

|

| 45 |

+

|

| 46 |

+

|

| 47 |

+

def start_kernel(pid):

|

| 48 |

+

fix_matplotlib_cjk_font_issue()

|

| 49 |

+

|

| 50 |

+

connection_file = os.path.join(WORK_DIR,

|

| 51 |

+

f'kernel_connection_file_{pid}.json')

|

| 52 |

+

launch_kernel_script = os.path.join(WORK_DIR, f'launch_kernel_{pid}.py')

|

| 53 |

+

for f in [connection_file, launch_kernel_script]:

|

| 54 |

+

if os.path.exists(f):

|

| 55 |

+

logging.warning(f'{f} already exists')

|

| 56 |

+

os.remove(f)

|

| 57 |

+

|

| 58 |

+

os.makedirs(WORK_DIR, exist_ok=True)

|

| 59 |

+

|

| 60 |

+

with open(launch_kernel_script, 'w') as fout:

|

| 61 |

+

fout.write(LAUNCH_KERNEL_PY)

|

| 62 |

+

|

| 63 |

+

kernel_process = subprocess.Popen([

|

| 64 |

+

sys.executable,

|

| 65 |

+

launch_kernel_script,

|

| 66 |

+

'--IPKernelApp.connection_file',

|

| 67 |

+

connection_file,

|

| 68 |

+

'--matplotlib=inline',

|

| 69 |

+

'--quiet',

|

| 70 |

+

],

|

| 71 |

+

cwd=WORK_DIR)

|

| 72 |

+

logging.info(f"INFO: kernel process's PID = {kernel_process.pid}")

|

| 73 |

+

|

| 74 |

+

# Wait for kernel connection file to be written

|

| 75 |

+

while True:

|

| 76 |

+

if not os.path.isfile(connection_file):

|

| 77 |

+

time.sleep(0.1)

|

| 78 |

+

else:

|

| 79 |

+

# Keep looping if JSON parsing fails, file may be partially written

|

| 80 |

+

try:

|

| 81 |

+

with open(connection_file, 'r') as fp:

|

| 82 |

+

json.load(fp)

|

| 83 |

+

break

|

| 84 |

+

except json.JSONDecodeError:

|

| 85 |

+

pass

|

| 86 |

+

|

| 87 |

+

# Client

|

| 88 |

+

kc = BlockingKernelClient(connection_file=connection_file)

|

| 89 |

+

kc.load_connection_file()

|

| 90 |

+

kc.start_channels()

|

| 91 |

+

kc.wait_for_ready()

|

| 92 |

+

return kc

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

def escape_ansi(line):

|

| 96 |

+

ansi_escape = re.compile(r'(?:\x1B[@-_]|[\x80-\x9F])[0-?]*[ -/]*[@-~]')

|

| 97 |

+

return ansi_escape.sub('', line)

|

| 98 |

+

|

| 99 |

+

|

| 100 |

+

def publish_image_to_local(image_base64: str):

|

| 101 |

+

image_file = str(uuid.uuid4()) + '.png'

|

| 102 |

+

local_image_file = os.path.join(WORK_DIR, image_file)

|

| 103 |

+

|

| 104 |

+

png_bytes = base64.b64decode(image_base64)

|

| 105 |

+

assert isinstance(png_bytes, bytes)

|

| 106 |

+

bytes_io = io.BytesIO(png_bytes)

|

| 107 |

+

PIL.Image.open(bytes_io).save(local_image_file, 'png')

|

| 108 |

+

|

| 109 |

+

return local_image_file

|

| 110 |

+

|

| 111 |

+

|

| 112 |

+

START_CODE = """

|

| 113 |

+

import signal

|

| 114 |

+

def _m6_code_interpreter_timeout_handler(signum, frame):

|

| 115 |

+

raise TimeoutError("M6_CODE_INTERPRETER_TIMEOUT")

|

| 116 |

+

signal.signal(signal.SIGALRM, _m6_code_interpreter_timeout_handler)

|

| 117 |

+

|

| 118 |

+

def input(*args, **kwargs):

|

| 119 |

+

raise NotImplementedError('Python input() function is disabled.')

|

| 120 |

+

|

| 121 |

+

import os

|

| 122 |

+

if 'upload_file' not in os.getcwd():

|

| 123 |

+

os.chdir("./upload_file/")

|

| 124 |

+

|

| 125 |

+

import math

|

| 126 |

+

import re

|

| 127 |

+

import json

|

| 128 |

+

|

| 129 |

+

import seaborn as sns

|

| 130 |

+

sns.set_theme()

|

| 131 |

+

|

| 132 |

+

import matplotlib

|

| 133 |

+

import matplotlib.pyplot as plt

|

| 134 |

+

plt.rcParams['font.sans-serif'] = ['SimHei']

|

| 135 |

+

plt.rcParams['axes.unicode_minus'] = False

|

| 136 |

+

|

| 137 |

+

import numpy as np

|

| 138 |

+

import pandas as pd

|

| 139 |

+

|

| 140 |

+

from sympy import Eq, symbols, solve

|

| 141 |

+

"""