Spaces:

Runtime error

Runtime error

Arash

commited on

Commit

·

c334626

1

Parent(s):

0e6bdc0

initial code release

Browse files- .gitignore +46 -0

- EMA.py +90 -0

- LICENSE +63 -0

- README.md +0 -16

- assets/teaser.png +0 -0

- datasets_prep/LICENSE_PyTorch +70 -0

- datasets_prep/LICENSE_torchvision +29 -0

- datasets_prep/lmdb_datasets.py +58 -0

- datasets_prep/lsun.py +170 -0

- datasets_prep/stackmnist_data.py +65 -0

- pytorch_fid/LICENSE_MIT +21 -0

- pytorch_fid/LICENSE_inception +201 -0

- pytorch_fid/LICENSE_pytorch_fid +201 -0

- pytorch_fid/fid_score.py +305 -0

- pytorch_fid/inception.py +337 -0

- pytorch_fid/inception_score.py +103 -0

- readme.md +113 -0

- requirements.txt +10 -0

- score_sde/LICENSE_Apache +201 -0

- score_sde/__init__.py +0 -0

- score_sde/models/LICENSE_MIT +21 -0

- score_sde/models/__init__.py +15 -0

- score_sde/models/dense_layer.py +83 -0

- score_sde/models/discriminator.py +239 -0

- score_sde/models/layers.py +619 -0

- score_sde/models/layerspp.py +380 -0

- score_sde/models/ncsnpp_generator_adagn.py +431 -0

- score_sde/models/up_or_down_sampling.py +262 -0

- score_sde/models/utils.py +148 -0

- score_sde/op/LICENSE_MIT +21 -0

- score_sde/op/__init__.py +2 -0

- score_sde/op/fused_act.py +105 -0

- score_sde/op/fused_bias_act.cpp +28 -0

- score_sde/op/fused_bias_act_kernel.cu +101 -0

- score_sde/op/upfirdn2d.cpp +31 -0

- score_sde/op/upfirdn2d.py +225 -0

- score_sde/op/upfirdn2d_kernel.cu +371 -0

- test_ddgan.py +275 -0

- train_ddgan.py +602 -0

.gitignore

ADDED

|

@@ -0,0 +1,46 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Node artifact files

|

| 2 |

+

node_modules/

|

| 3 |

+

dist/

|

| 4 |

+

ngc_scripts/

|

| 5 |

+

|

| 6 |

+

# Compiled Java class files

|

| 7 |

+

*.class

|

| 8 |

+

|

| 9 |

+

# Compiled Python bytecode

|

| 10 |

+

*.py[cod]

|

| 11 |

+

|

| 12 |

+

# Log files

|

| 13 |

+

*.log

|

| 14 |

+

|

| 15 |

+

# Package files

|

| 16 |

+

*.jar

|

| 17 |

+

|

| 18 |

+

# Maven

|

| 19 |

+

target/

|

| 20 |

+

dist/

|

| 21 |

+

|

| 22 |

+

# JetBrains IDE

|

| 23 |

+

.idea/

|

| 24 |

+

|

| 25 |

+

# Unit test reports

|

| 26 |

+

TEST*.xml

|

| 27 |

+

|

| 28 |

+

# Generated by MacOS

|

| 29 |

+

.DS_Store

|

| 30 |

+

|

| 31 |

+

# Generated by Windows

|

| 32 |

+

Thumbs.db

|

| 33 |

+

|

| 34 |

+

# Applications

|

| 35 |

+

*.app

|

| 36 |

+

*.exe

|

| 37 |

+

*.war

|

| 38 |

+

|

| 39 |

+

# Large media files

|

| 40 |

+

*.mp4

|

| 41 |

+

*.tiff

|

| 42 |

+

*.avi

|

| 43 |

+

*.flv

|

| 44 |

+

*.mov

|

| 45 |

+

*.wmv

|

| 46 |

+

|

EMA.py

ADDED

|

@@ -0,0 +1,90 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# ---------------------------------------------------------------

|

| 2 |

+

# Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

|

| 3 |

+

#

|

| 4 |

+

# This work is licensed under the NVIDIA Source Code License

|

| 5 |

+

# for Denoising Diffusion GAN. To view a copy of this license, see the LICENSE file.

|

| 6 |

+

# ---------------------------------------------------------------

|

| 7 |

+

|

| 8 |

+

'''

|

| 9 |

+

Codes adapted from https://github.com/NVlabs/LSGM/blob/main/util/ema.py

|

| 10 |

+

'''

|

| 11 |

+

import warnings

|

| 12 |

+

|

| 13 |

+

import torch

|

| 14 |

+

from torch.optim import Optimizer

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

class EMA(Optimizer):

|

| 18 |

+

def __init__(self, opt, ema_decay):

|

| 19 |

+

self.ema_decay = ema_decay

|

| 20 |

+

self.apply_ema = self.ema_decay > 0.

|

| 21 |

+

self.optimizer = opt

|

| 22 |

+

self.state = opt.state

|

| 23 |

+

self.param_groups = opt.param_groups

|

| 24 |

+

|

| 25 |

+

def step(self, *args, **kwargs):

|

| 26 |

+

retval = self.optimizer.step(*args, **kwargs)

|

| 27 |

+

|

| 28 |

+

# stop here if we are not applying EMA

|

| 29 |

+

if not self.apply_ema:

|

| 30 |

+

return retval

|

| 31 |

+

|

| 32 |

+

ema, params = {}, {}

|

| 33 |

+

for group in self.optimizer.param_groups:

|

| 34 |

+

for i, p in enumerate(group['params']):

|

| 35 |

+

if p.grad is None:

|

| 36 |

+

continue

|

| 37 |

+

state = self.optimizer.state[p]

|

| 38 |

+

|

| 39 |

+

# State initialization

|

| 40 |

+

if 'ema' not in state:

|

| 41 |

+

state['ema'] = p.data.clone()

|

| 42 |

+

|

| 43 |

+

if p.shape not in params:

|

| 44 |

+

params[p.shape] = {'idx': 0, 'data': []}

|

| 45 |

+

ema[p.shape] = []

|

| 46 |

+

|

| 47 |

+

params[p.shape]['data'].append(p.data)

|

| 48 |

+

ema[p.shape].append(state['ema'])

|

| 49 |

+

|

| 50 |

+

for i in params:

|

| 51 |

+

params[i]['data'] = torch.stack(params[i]['data'], dim=0)

|

| 52 |

+

ema[i] = torch.stack(ema[i], dim=0)

|

| 53 |

+

ema[i].mul_(self.ema_decay).add_(params[i]['data'], alpha=1. - self.ema_decay)

|

| 54 |

+

|

| 55 |

+

for p in group['params']:

|

| 56 |

+

if p.grad is None:

|

| 57 |

+

continue

|

| 58 |

+

idx = params[p.shape]['idx']

|

| 59 |

+

self.optimizer.state[p]['ema'] = ema[p.shape][idx, :]

|

| 60 |

+

params[p.shape]['idx'] += 1

|

| 61 |

+

|

| 62 |

+

return retval

|

| 63 |

+

|

| 64 |

+

def load_state_dict(self, state_dict):

|

| 65 |

+

super(EMA, self).load_state_dict(state_dict)

|

| 66 |

+

# load_state_dict loads the data to self.state and self.param_groups. We need to pass this data to

|

| 67 |

+

# the underlying optimizer too.

|

| 68 |

+

self.optimizer.state = self.state

|

| 69 |

+

self.optimizer.param_groups = self.param_groups

|

| 70 |

+

|

| 71 |

+

def swap_parameters_with_ema(self, store_params_in_ema):

|

| 72 |

+

""" This function swaps parameters with their ema values. It records original parameters in the ema

|

| 73 |

+

parameters, if store_params_in_ema is true."""

|

| 74 |

+

|

| 75 |

+

# stop here if we are not applying EMA

|

| 76 |

+

if not self.apply_ema:

|

| 77 |

+

warnings.warn('swap_parameters_with_ema was called when there is no EMA weights.')

|

| 78 |

+

return

|

| 79 |

+

|

| 80 |

+

for group in self.optimizer.param_groups:

|

| 81 |

+

for i, p in enumerate(group['params']):

|

| 82 |

+

if not p.requires_grad:

|

| 83 |

+

continue

|

| 84 |

+

ema = self.optimizer.state[p]['ema']

|

| 85 |

+

if store_params_in_ema:

|

| 86 |

+

tmp = p.data.detach()

|

| 87 |

+

p.data = ema.detach()

|

| 88 |

+

self.optimizer.state[p]['ema'] = tmp

|

| 89 |

+

else:

|

| 90 |

+

p.data = ema.detach()

|

LICENSE

ADDED

|

@@ -0,0 +1,63 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

NVIDIA License

|

| 2 |

+

|

| 3 |

+

1. Definitions

|

| 4 |

+

|

| 5 |

+

“Licensor” means any person or entity that distributes its Work.

|

| 6 |

+

|

| 7 |

+

“Work” means (a) the original work of authorship made available under this license, which may include software,

|

| 8 |

+

documentation, or other files, and (b) any additions to or derivative works thereof that are made available under this license.

|

| 9 |

+

|

| 10 |

+

The terms “reproduce,” “reproduction,” “derivative works,” and “distribution” have the meaning as provided under U.S.

|

| 11 |

+

copyright law; provided, however, that for the purposes of this license, derivative works shall not include works that

|

| 12 |

+

remain separable from, or merely link (or bind by name) to the interfaces of, the Work.

|

| 13 |

+

|

| 14 |

+

Works are “made available” under this license by including in or with the Work either (a) a copyright notice

|

| 15 |

+

referencing the applicability of this license to the Work, or (b) a copy of this license.

|

| 16 |

+

|

| 17 |

+

2. License Grant

|

| 18 |

+

|

| 19 |

+

2.1 Copyright Grant. Subject to the terms and conditions of this license, each Licensor grants to you a perpetual,

|

| 20 |

+

worldwide, non-exclusive, royalty-free, copyright license to use, reproduce, prepare derivative works of, publicly

|

| 21 |

+

display, publicly perform, sublicense and distribute its Work and any resulting derivative works in any form.

|

| 22 |

+

|

| 23 |

+

3. Limitations

|

| 24 |

+

|

| 25 |

+

3.1 Redistribution. You may reproduce or distribute the Work only if (a) you do so under this license, (b) you

|

| 26 |

+

include a complete copy of this license with your distribution, and (c) you retain without modification any

|

| 27 |

+

copyright, patent, trademark, or attribution notices that are present in the Work.

|

| 28 |

+

|

| 29 |

+

3.2 Derivative Works. You may specify that additional or different terms apply to the use, reproduction, and

|

| 30 |

+

distribution of your derivative works of the Work (“Your Terms”) only if (a) Your Terms provide that the use

|

| 31 |

+

limitation in Section 3.3 applies to your derivative works, and (b) you identify the specific derivative works

|

| 32 |

+

that are subject to Your Terms. Notwithstanding Your Terms, this license (including the redistribution

|

| 33 |

+

requirements in Section 3.1) will continue to apply to the Work itself.

|

| 34 |

+

|

| 35 |

+

3.3 Use Limitation. The Work and any derivative works thereof only may be used or intended for use non-commercially.

|

| 36 |

+

Notwithstanding the foregoing, NVIDIA Corporation and its affiliates may use the Work and any derivative

|

| 37 |

+

works commercially. As used herein, “non-commercially” means for research or evaluation purposes only.

|

| 38 |

+

|

| 39 |

+

3.4 Patent Claims. If you bring or threaten to bring a patent claim against any Licensor (including any claim,

|

| 40 |

+

cross-claim or counterclaim in a lawsuit) to enforce any patents that you allege are infringed by any Work, then

|

| 41 |

+

your rights under this license from such Licensor (including the grant in Section 2.1) will terminate immediately.

|

| 42 |

+

|

| 43 |

+

3.5 Trademarks. This license does not grant any rights to use any Licensor’s or its affiliates’ names, logos,

|

| 44 |

+

or trademarks, except as necessary to reproduce the notices described in this license.

|

| 45 |

+

|

| 46 |

+

3.6 Termination. If you violate any term of this license, then your rights under this license (including the

|

| 47 |

+

grant in Section 2.1) will terminate immediately.

|

| 48 |

+

|

| 49 |

+

4. Disclaimer of Warranty.

|

| 50 |

+

|

| 51 |

+

THE WORK IS PROVIDED “AS IS” WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING

|

| 52 |

+

WARRANTIES OR CONDITIONS OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, TITLE OR NON-INFRINGEMENT. YOU

|

| 53 |

+

BEAR THE RISK OF UNDERTAKING ANY ACTIVITIES UNDER THIS LICENSE.

|

| 54 |

+

|

| 55 |

+

5. Limitation of Liability.

|

| 56 |

+

|

| 57 |

+

EXCEPT AS PROHIBITED BY APPLICABLE LAW, IN NO EVENT AND UNDER NO LEGAL THEORY, WHETHER IN TORT (INCLUDING

|

| 58 |

+

NEGLIGENCE), CONTRACT, OR OTHERWISE SHALL ANY LICENSOR BE LIABLE TO YOU FOR DAMAGES, INCLUDING ANY DIRECT,

|

| 59 |

+

INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING OUT OF OR RELATED TO THIS LICENSE, THE USE OR

|

| 60 |

+

INABILITY TO USE THE WORK (INCLUDING BUT NOT LIMITED TO LOSS OF GOODWILL, BUSINESS INTERRUPTION, LOST PROFITS OR

|

| 61 |

+

DATA, COMPUTER FAILURE OR MALFUNCTION, OR ANY OTHER DAMAGES OR LOSSES), EVEN IF THE LICENSOR HAS BEEN

|

| 62 |

+

ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

|

| 63 |

+

|

README.md

DELETED

|

@@ -1,16 +0,0 @@

|

|

| 1 |

-

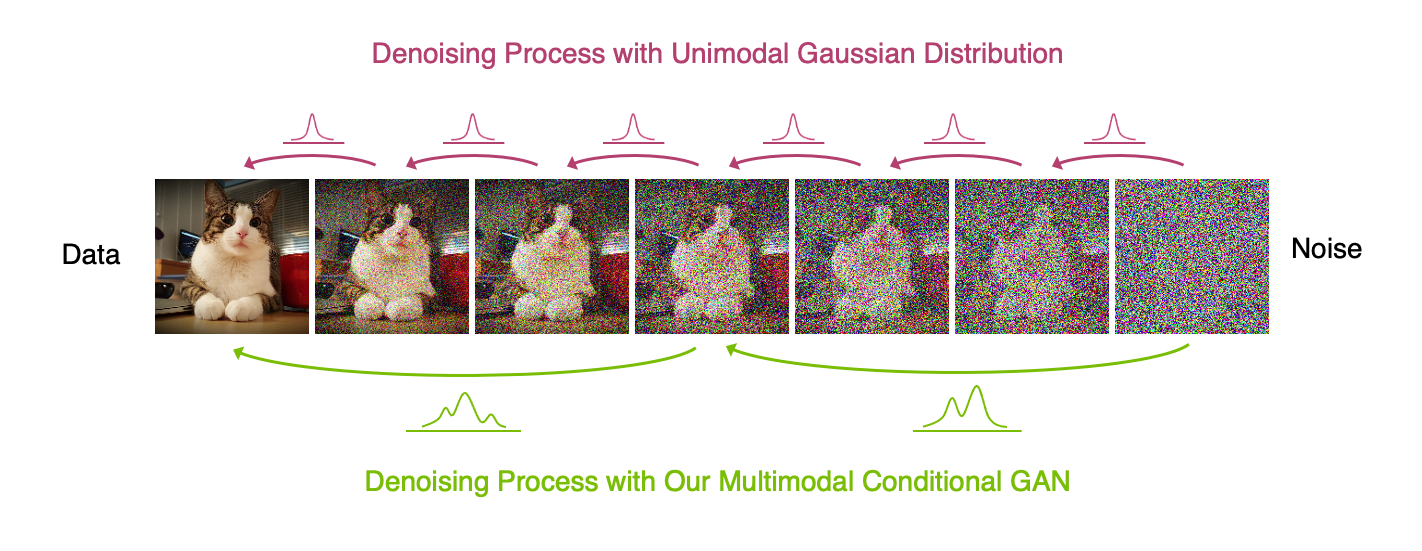

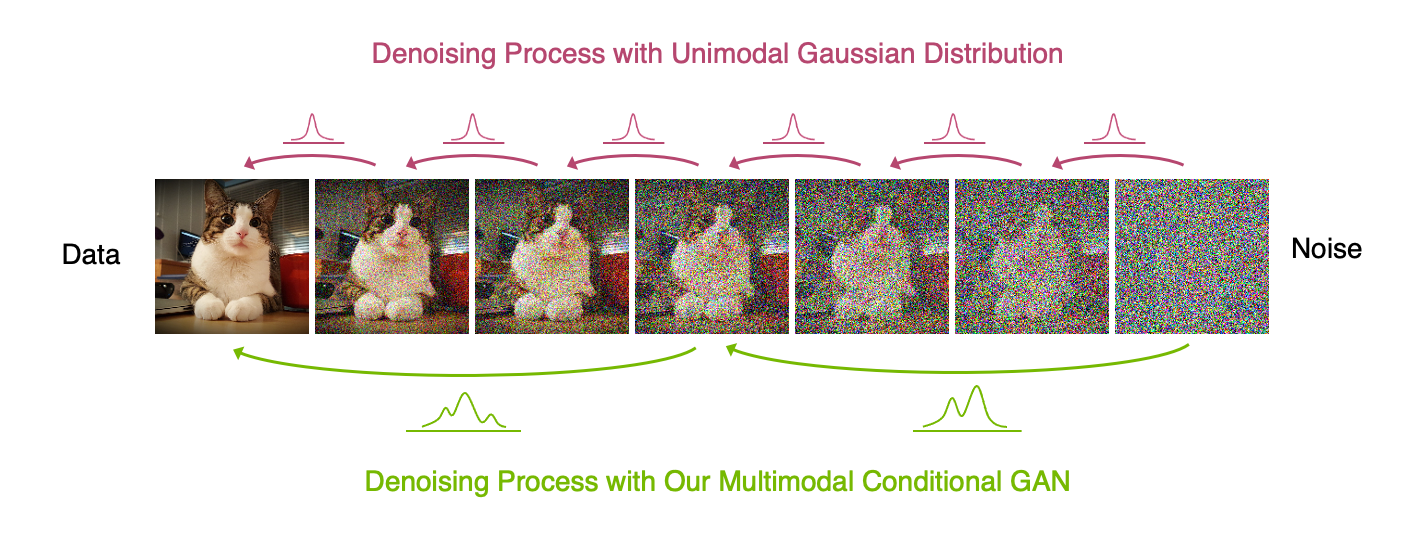

## <p align="center">Tackling the Generative Learning Trilemma with Denoising Diffusion GANs</p>

|

| 2 |

-

|

| 3 |

-

<div align="center">

|

| 4 |

-

<a href="https://xavierxiao.github.io/" target="_blank">Zhisheng Xiao</a>   <b>·</b>

|

| 5 |

-

<a href="https://karstenkreis.github.io/" target="_blank">Karsten Kreis</a>   <b>·</b>

|

| 6 |

-

<a href="http://latentspace.cc/" target="_blank">Arash Vahdat</a>

|

| 7 |

-

<br> <br>

|

| 8 |

-

<a href="https://nvlabs.github.io/denoising-diffusion-gan" target="_blank">Project Page</a>

|

| 9 |

-

</div>

|

| 10 |

-

<br><br>

|

| 11 |

-

<p align="center">:construction: :pick: :hammer_and_wrench: :construction_worker:</p>

|

| 12 |

-

<p align="center">Code coming soon (the current expected release is in March 2022). Stay tuned!</p>

|

| 13 |

-

<br><br>

|

| 14 |

-

<p align="center">

|

| 15 |

-

<img width="800" alt="teaser" src="assets/teaser.png"/>

|

| 16 |

-

</p>

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

assets/teaser.png

CHANGED

|

|

datasets_prep/LICENSE_PyTorch

ADDED

|

@@ -0,0 +1,70 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

From PyTorch:

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2016- Facebook, Inc (Adam Paszke)

|

| 4 |

+

Copyright (c) 2014- Facebook, Inc (Soumith Chintala)

|

| 5 |

+

Copyright (c) 2011-2014 Idiap Research Institute (Ronan Collobert)

|

| 6 |

+

Copyright (c) 2012-2014 Deepmind Technologies (Koray Kavukcuoglu)

|

| 7 |

+

Copyright (c) 2011-2012 NEC Laboratories America (Koray Kavukcuoglu)

|

| 8 |

+

Copyright (c) 2011-2013 NYU (Clement Farabet)

|

| 9 |

+

Copyright (c) 2006-2010 NEC Laboratories America (Ronan Collobert, Leon Bottou, Iain Melvin, Jason Weston)

|

| 10 |

+

Copyright (c) 2006 Idiap Research Institute (Samy Bengio)

|

| 11 |

+

Copyright (c) 2001-2004 Idiap Research Institute (Ronan Collobert, Samy Bengio, Johnny Mariethoz)

|

| 12 |

+

|

| 13 |

+

From Caffe2:

|

| 14 |

+

|

| 15 |

+

Copyright (c) 2016-present, Facebook Inc. All rights reserved.

|

| 16 |

+

|

| 17 |

+

All contributions by Facebook:

|

| 18 |

+

Copyright (c) 2016 Facebook Inc.

|

| 19 |

+

|

| 20 |

+

All contributions by Google:

|

| 21 |

+

Copyright (c) 2015 Google Inc.

|

| 22 |

+

All rights reserved.

|

| 23 |

+

|

| 24 |

+

All contributions by Yangqing Jia:

|

| 25 |

+

Copyright (c) 2015 Yangqing Jia

|

| 26 |

+

All rights reserved.

|

| 27 |

+

|

| 28 |

+

All contributions from Caffe:

|

| 29 |

+

Copyright(c) 2013, 2014, 2015, the respective contributors

|

| 30 |

+

All rights reserved.

|

| 31 |

+

|

| 32 |

+

All other contributions:

|

| 33 |

+

Copyright(c) 2015, 2016 the respective contributors

|

| 34 |

+

All rights reserved.

|

| 35 |

+

|

| 36 |

+

Caffe2 uses a copyright model similar to Caffe: each contributor holds

|

| 37 |

+

copyright over their contributions to Caffe2. The project versioning records

|

| 38 |

+

all such contribution and copyright details. If a contributor wants to further

|

| 39 |

+

mark their specific copyright on a particular contribution, they should

|

| 40 |

+

indicate their copyright solely in the commit message of the change when it is

|

| 41 |

+

committed.

|

| 42 |

+

|

| 43 |

+

All rights reserved.

|

| 44 |

+

|

| 45 |

+

Redistribution and use in source and binary forms, with or without

|

| 46 |

+

modification, are permitted provided that the following conditions are met:

|

| 47 |

+

|

| 48 |

+

1. Redistributions of source code must retain the above copyright

|

| 49 |

+

notice, this list of conditions and the following disclaimer.

|

| 50 |

+

|

| 51 |

+

2. Redistributions in binary form must reproduce the above copyright

|

| 52 |

+

notice, this list of conditions and the following disclaimer in the

|

| 53 |

+

documentation and/or other materials provided with the distribution.

|

| 54 |

+

|

| 55 |

+

3. Neither the names of Facebook, Deepmind Technologies, NYU, NEC Laboratories America

|

| 56 |

+

and IDIAP Research Institute nor the names of its contributors may be

|

| 57 |

+

used to endorse or promote products derived from this software without

|

| 58 |

+

specific prior written permission.

|

| 59 |

+

|

| 60 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

| 61 |

+

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

| 62 |

+

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

|

| 63 |

+

ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT OWNER OR CONTRIBUTORS BE

|

| 64 |

+

LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

|

| 65 |

+

CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

|

| 66 |

+

SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

|

| 67 |

+

INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

|

| 68 |

+

CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE)

|

| 69 |

+

ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE

|

| 70 |

+

POSSIBILITY OF SUCH DAMAGE.

|

datasets_prep/LICENSE_torchvision

ADDED

|

@@ -0,0 +1,29 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

BSD 3-Clause License

|

| 2 |

+

|

| 3 |

+

Copyright (c) Soumith Chintala 2016,

|

| 4 |

+

All rights reserved.

|

| 5 |

+

|

| 6 |

+

Redistribution and use in source and binary forms, with or without

|

| 7 |

+

modification, are permitted provided that the following conditions are met:

|

| 8 |

+

|

| 9 |

+

* Redistributions of source code must retain the above copyright notice, this

|

| 10 |

+

list of conditions and the following disclaimer.

|

| 11 |

+

|

| 12 |

+

* Redistributions in binary form must reproduce the above copyright notice,

|

| 13 |

+

this list of conditions and the following disclaimer in the documentation

|

| 14 |

+

and/or other materials provided with the distribution.

|

| 15 |

+

|

| 16 |

+

* Neither the name of the copyright holder nor the names of its

|

| 17 |

+

contributors may be used to endorse or promote products derived from

|

| 18 |

+

this software without specific prior written permission.

|

| 19 |

+

|

| 20 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

| 21 |

+

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

|

| 22 |

+

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

|

| 23 |

+

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

|

| 24 |

+

FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

|

| 25 |

+

DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

|

| 26 |

+

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

|

| 27 |

+

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

|

| 28 |

+

OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

|

| 29 |

+

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

datasets_prep/lmdb_datasets.py

ADDED

|

@@ -0,0 +1,58 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# ---------------------------------------------------------------

|

| 2 |

+

# Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

|

| 3 |

+

#

|

| 4 |

+

# This work is licensed under the NVIDIA Source Code License

|

| 5 |

+

# for Denoising Diffusion GAN. To view a copy of this license, see the LICENSE file.

|

| 6 |

+

# ---------------------------------------------------------------

|

| 7 |

+

|

| 8 |

+

import torch.utils.data as data

|

| 9 |

+

import numpy as np

|

| 10 |

+

import lmdb

|

| 11 |

+

import os

|

| 12 |

+

import io

|

| 13 |

+

from PIL import Image

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

def num_samples(dataset, train):

|

| 17 |

+

if dataset == 'celeba':

|

| 18 |

+

return 27000 if train else 3000

|

| 19 |

+

|

| 20 |

+

else:

|

| 21 |

+

raise NotImplementedError('dataset %s is unknown' % dataset)

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

class LMDBDataset(data.Dataset):

|

| 25 |

+

def __init__(self, root, name='', train=True, transform=None, is_encoded=False):

|

| 26 |

+

self.train = train

|

| 27 |

+

self.name = name

|

| 28 |

+

self.transform = transform

|

| 29 |

+

if self.train:

|

| 30 |

+

lmdb_path = os.path.join(root, 'train.lmdb')

|

| 31 |

+

else:

|

| 32 |

+

lmdb_path = os.path.join(root, 'validation.lmdb')

|

| 33 |

+

self.data_lmdb = lmdb.open(lmdb_path, readonly=True, max_readers=1,

|

| 34 |

+

lock=False, readahead=False, meminit=False)

|

| 35 |

+

self.is_encoded = is_encoded

|

| 36 |

+

|

| 37 |

+

def __getitem__(self, index):

|

| 38 |

+

target = [0]

|

| 39 |

+

with self.data_lmdb.begin(write=False, buffers=True) as txn:

|

| 40 |

+

data = txn.get(str(index).encode())

|

| 41 |

+

if self.is_encoded:

|

| 42 |

+

img = Image.open(io.BytesIO(data))

|

| 43 |

+

img = img.convert('RGB')

|

| 44 |

+

else:

|

| 45 |

+

img = np.asarray(data, dtype=np.uint8)

|

| 46 |

+

# assume data is RGB

|

| 47 |

+

size = int(np.sqrt(len(img) / 3))

|

| 48 |

+

img = np.reshape(img, (size, size, 3))

|

| 49 |

+

img = Image.fromarray(img, mode='RGB')

|

| 50 |

+

|

| 51 |

+

if self.transform is not None:

|

| 52 |

+

img = self.transform(img)

|

| 53 |

+

|

| 54 |

+

return img, target

|

| 55 |

+

|

| 56 |

+

def __len__(self):

|

| 57 |

+

return num_samples(self.name, self.train)

|

| 58 |

+

|

datasets_prep/lsun.py

ADDED

|

@@ -0,0 +1,170 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# ---------------------------------------------------------------

|

| 2 |

+

# Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

|

| 3 |

+

#

|

| 4 |

+

# This file has been modified from a file in the torchvision library

|

| 5 |

+

# which was released under the BSD 3-Clause License.

|

| 6 |

+

#

|

| 7 |

+

# Source:

|

| 8 |

+

# https://github.com/pytorch/vision/blob/ea6b879e90459006e71a164dc76b7e2cc3bff9d9/torchvision/datasets/lsun.py

|

| 9 |

+

#

|

| 10 |

+

# The license for the original version of this file can be

|

| 11 |

+

# found in this directory (LICENSE_torchvision). The modifications

|

| 12 |

+

# to this file are subject to the same BSD 3-Clause License.

|

| 13 |

+

# ---------------------------------------------------------------

|

| 14 |

+

|

| 15 |

+

from torchvision.datasets.vision import VisionDataset

|

| 16 |

+

from PIL import Image

|

| 17 |

+

import os

|

| 18 |

+

import os.path

|

| 19 |

+

import io

|

| 20 |

+

import string

|

| 21 |

+

from collections.abc import Iterable

|

| 22 |

+

import pickle

|

| 23 |

+

from torchvision.datasets.utils import verify_str_arg, iterable_to_str

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

class LSUNClass(VisionDataset):

|

| 27 |

+

def __init__(self, root, transform=None, target_transform=None):

|

| 28 |

+

import lmdb

|

| 29 |

+

super(LSUNClass, self).__init__(root, transform=transform,

|

| 30 |

+

target_transform=target_transform)

|

| 31 |

+

|

| 32 |

+

self.env = lmdb.open(root, max_readers=1, readonly=True, lock=False,

|

| 33 |

+

readahead=False, meminit=False)

|

| 34 |

+

with self.env.begin(write=False) as txn:

|

| 35 |

+

self.length = txn.stat()['entries']

|

| 36 |

+

# cache_file = '_cache_' + ''.join(c for c in root if c in string.ascii_letters)

|

| 37 |

+

# av begin

|

| 38 |

+

# We only modified the location of cache_file.

|

| 39 |

+

cache_file = os.path.join(self.root, '_cache_')

|

| 40 |

+

# av end

|

| 41 |

+

if os.path.isfile(cache_file):

|

| 42 |

+

self.keys = pickle.load(open(cache_file, "rb"))

|

| 43 |

+

else:

|

| 44 |

+

with self.env.begin(write=False) as txn:

|

| 45 |

+

self.keys = [key for key, _ in txn.cursor()]

|

| 46 |

+

pickle.dump(self.keys, open(cache_file, "wb"))

|

| 47 |

+

|

| 48 |

+

def __getitem__(self, index):

|

| 49 |

+

img, target = None, -1

|

| 50 |

+

env = self.env

|

| 51 |

+

with env.begin(write=False) as txn:

|

| 52 |

+

imgbuf = txn.get(self.keys[index])

|

| 53 |

+

|

| 54 |

+

buf = io.BytesIO()

|

| 55 |

+

buf.write(imgbuf)

|

| 56 |

+

buf.seek(0)

|

| 57 |

+

img = Image.open(buf).convert('RGB')

|

| 58 |

+

|

| 59 |

+

if self.transform is not None:

|

| 60 |

+

img = self.transform(img)

|

| 61 |

+

|

| 62 |

+

if self.target_transform is not None:

|

| 63 |

+

target = self.target_transform(target)

|

| 64 |

+

|

| 65 |

+

return img, target

|

| 66 |

+

|

| 67 |

+

def __len__(self):

|

| 68 |

+

return self.length

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

class LSUN(VisionDataset):

|

| 72 |

+

"""

|

| 73 |

+

`LSUN <https://www.yf.io/p/lsun>`_ dataset.

|

| 74 |

+

|

| 75 |

+

Args:

|

| 76 |

+

root (string): Root directory for the database files.

|

| 77 |

+

classes (string or list): One of {'train', 'val', 'test'} or a list of

|

| 78 |

+

categories to load. e,g. ['bedroom_train', 'church_outdoor_train'].

|

| 79 |

+

transform (callable, optional): A function/transform that takes in an PIL image

|

| 80 |

+

and returns a transformed version. E.g, ``transforms.RandomCrop``

|

| 81 |

+

target_transform (callable, optional): A function/transform that takes in the

|

| 82 |

+

target and transforms it.

|

| 83 |

+

"""

|

| 84 |

+

|

| 85 |

+

def __init__(self, root, classes='train', transform=None, target_transform=None):

|

| 86 |

+

super(LSUN, self).__init__(root, transform=transform,

|

| 87 |

+

target_transform=target_transform)

|

| 88 |

+

self.classes = self._verify_classes(classes)

|

| 89 |

+

|

| 90 |

+

# for each class, create an LSUNClassDataset

|

| 91 |

+

self.dbs = []

|

| 92 |

+

for c in self.classes:

|

| 93 |

+

self.dbs.append(LSUNClass(

|

| 94 |

+

root=root + '/' + c + '_lmdb',

|

| 95 |

+

transform=transform))

|

| 96 |

+

|

| 97 |

+

self.indices = []

|

| 98 |

+

count = 0

|

| 99 |

+

for db in self.dbs:

|

| 100 |

+

count += len(db)

|

| 101 |

+

self.indices.append(count)

|

| 102 |

+

|

| 103 |

+

self.length = count

|

| 104 |

+

|

| 105 |

+

def _verify_classes(self, classes):

|

| 106 |

+

categories = ['bedroom', 'bridge', 'church_outdoor', 'classroom',

|

| 107 |

+

'conference_room', 'dining_room', 'kitchen',

|

| 108 |

+

'living_room', 'restaurant', 'tower', 'cat']

|

| 109 |

+

dset_opts = ['train', 'val', 'test']

|

| 110 |

+

|

| 111 |

+

try:

|

| 112 |

+

verify_str_arg(classes, "classes", dset_opts)

|

| 113 |

+

if classes == 'test':

|

| 114 |

+

classes = [classes]

|

| 115 |

+

else:

|

| 116 |

+

classes = [c + '_' + classes for c in categories]

|

| 117 |

+

except ValueError:

|

| 118 |

+

if not isinstance(classes, Iterable):

|

| 119 |

+

msg = ("Expected type str or Iterable for argument classes, "

|

| 120 |

+

"but got type {}.")

|

| 121 |

+

raise ValueError(msg.format(type(classes)))

|

| 122 |

+

|

| 123 |

+

classes = list(classes)

|

| 124 |

+

msg_fmtstr = ("Expected type str for elements in argument classes, "

|

| 125 |

+

"but got type {}.")

|

| 126 |

+

for c in classes:

|

| 127 |

+

verify_str_arg(c, custom_msg=msg_fmtstr.format(type(c)))

|

| 128 |

+

c_short = c.split('_')

|

| 129 |

+

category, dset_opt = '_'.join(c_short[:-1]), c_short[-1]

|

| 130 |

+

|

| 131 |

+

msg_fmtstr = "Unknown value '{}' for {}. Valid values are {{{}}}."

|

| 132 |

+

msg = msg_fmtstr.format(category, "LSUN class",

|

| 133 |

+

iterable_to_str(categories))

|

| 134 |

+

verify_str_arg(category, valid_values=categories, custom_msg=msg)

|

| 135 |

+

|

| 136 |

+

msg = msg_fmtstr.format(dset_opt, "postfix", iterable_to_str(dset_opts))

|

| 137 |

+

verify_str_arg(dset_opt, valid_values=dset_opts, custom_msg=msg)

|

| 138 |

+

|

| 139 |

+

return classes

|

| 140 |

+

|

| 141 |

+

def __getitem__(self, index):

|

| 142 |

+

"""

|

| 143 |

+

Args:

|

| 144 |

+

index (int): Index

|

| 145 |

+

|

| 146 |

+

Returns:

|

| 147 |

+

tuple: Tuple (image, target) where target is the index of the target category.

|

| 148 |

+

"""

|

| 149 |

+

target = 0

|

| 150 |

+

sub = 0

|

| 151 |

+

for ind in self.indices:

|

| 152 |

+

if index < ind:

|

| 153 |

+

break

|

| 154 |

+

target += 1

|

| 155 |

+

sub = ind

|

| 156 |

+

|

| 157 |

+

db = self.dbs[target]

|

| 158 |

+

index = index - sub

|

| 159 |

+

|

| 160 |

+

if self.target_transform is not None:

|

| 161 |

+

target = self.target_transform(target)

|

| 162 |

+

|

| 163 |

+

img, _ = db[index]

|

| 164 |

+

return img, target

|

| 165 |

+

|

| 166 |

+

def __len__(self):

|

| 167 |

+

return self.length

|

| 168 |

+

|

| 169 |

+

def extra_repr(self):

|

| 170 |

+

return "Classes: {classes}".format(**self.__dict__)

|

datasets_prep/stackmnist_data.py

ADDED

|

@@ -0,0 +1,65 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# ---------------------------------------------------------------

|

| 2 |

+

# Copyright (c) 2022, NVIDIA CORPORATION. All rights reserved.

|

| 3 |

+

#

|

| 4 |

+

# This work is licensed under the NVIDIA Source Code License

|

| 5 |

+

# for Denoising Diffusion GAN. To view a copy of this license, see the LICENSE file.

|

| 6 |

+

# ---------------------------------------------------------------

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

import numpy as np

|

| 10 |

+

from PIL import Image

|

| 11 |

+

import torchvision.datasets as dset

|

| 12 |

+

import torchvision.transforms as transforms

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

class StackedMNIST(dset.MNIST):

|

| 16 |

+

def __init__(self, root, train=True, transform=None, target_transform=None,

|

| 17 |

+

download=False):

|

| 18 |

+

super(StackedMNIST, self).__init__(root=root, train=train, transform=transform,

|

| 19 |

+

target_transform=target_transform, download=download)

|

| 20 |

+

|

| 21 |

+

index1 = np.hstack([np.random.permutation(len(self.data)), np.random.permutation(len(self.data))])

|

| 22 |

+

index2 = np.hstack([np.random.permutation(len(self.data)), np.random.permutation(len(self.data))])

|

| 23 |

+

index3 = np.hstack([np.random.permutation(len(self.data)), np.random.permutation(len(self.data))])

|

| 24 |

+

self.num_images = 2 * len(self.data)

|

| 25 |

+

|

| 26 |

+

self.index = []

|

| 27 |

+

for i in range(self.num_images):

|

| 28 |

+

self.index.append((index1[i], index2[i], index3[i]))

|

| 29 |

+

|

| 30 |

+

def __len__(self):

|

| 31 |

+

return self.num_images

|

| 32 |

+

|

| 33 |

+

def __getitem__(self, index):

|

| 34 |

+

img = np.zeros((28, 28, 3), dtype=np.uint8)

|

| 35 |

+

target = 0

|

| 36 |

+

for i in range(3):

|

| 37 |

+

img_, target_ = self.data[self.index[index][i]], int(self.targets[self.index[index][i]])

|

| 38 |

+

img[:, :, i] = img_

|

| 39 |

+

target += target_ * 10 ** (2 - i)

|

| 40 |

+

|

| 41 |

+

img = Image.fromarray(img, mode="RGB")

|

| 42 |

+

|

| 43 |

+

if self.transform is not None:

|

| 44 |

+

img = self.transform(img)

|

| 45 |

+

|

| 46 |

+

if self.target_transform is not None:

|

| 47 |

+

target = self.target_transform(target)

|

| 48 |

+

|

| 49 |

+

return img, target

|

| 50 |

+

|

| 51 |

+

def _data_transforms_stacked_mnist():

|

| 52 |

+

"""Get data transforms for cifar10."""

|

| 53 |

+

train_transform = transforms.Compose([

|

| 54 |

+

transforms.Pad(padding=2),

|

| 55 |

+

transforms.ToTensor(),

|

| 56 |

+

transforms.Normalize((0.5,0.5,0.5), (0.5,0.5,0.5))

|

| 57 |

+

])

|

| 58 |

+

|

| 59 |

+

valid_transform = transforms.Compose([

|

| 60 |

+

transforms.Pad(padding=2),

|

| 61 |

+

transforms.ToTensor(),

|

| 62 |

+

transforms.Normalize((0.5,0.5,0.5), (0.5,0.5,0.5))

|

| 63 |

+

])

|

| 64 |

+

|

| 65 |

+

return train_transform, valid_transform

|

pytorch_fid/LICENSE_MIT

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2021 Zhifeng Kong

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

pytorch_fid/LICENSE_inception

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |