Streamlit app development (#5)

* Fixed gitignore file

* Project architecture update

* Source code & tests initial work

* Repo clean up, file naming

* Streamlit app creation, testing

* Silero debugging, torch load issues

* Change silero usage to pip install, fixed zip archiving

* Updated README

* Fixed streamlit messages and updated requirements.txt

* Simplified app.py, added instructions.md and config.py

* Moved global variables to config, added voice selection step

* Updated Readme from huggingface spaces

* Fixed config imports, moved config to /src

* Separated epub_gen() from predict()

* Testing work, logging debugging

* Update .gitignore, cleaned up lib imports

* Removed pycache files

* Split write_audio from predict, fixed logging to app.log

* Implemented txt import, more pytest attempts

* HTML and PDF parsing functions implemented

* Added parsers to streamlit app, testing

* Added pdf and htm test files

* Fixed st.upload issues, tested file types

* Improved function and file naming, removed unneeded comments, improved app instructions.

* Improved file title handling, audio output clean up

* Unexpected character handling tests

* Voice selection preview created

* Test for preprocess, updating test files

* Epub testing updates

* Update Readme to remove HuggingFace Spaces config

* PDF reading function testing, updating

* PDF reading function completed, tested

* Fixed testing file directory

* Cleaned up notebooks and example test files

* Testing predict function, added test audio tensor

* Cleaned up init.py files

* Updated package versions in GitHub Actions workflow

* Updated package versions in GitHub Actions workflow, correctly

* Testing on read_pdf function

* Updated Readme and Instructions

* Updated Readme with demo screenshot, removed non-functional test

* Fixed Readme typos, linked screenshot

* Linting and misc repo updates

* Added function docstrings

* Module headers added.

* HTML reading WIP

* Testing assemble_zip updated, improved path handling

* Assemble zip, further test updating; tests succeed locally

* Assemble zip, further test updating; tests succeed locally, fixed typos

* Pytest files corrections, np warnings handled

* Further testing work, conditionals tested, testing running GitHub Actions locally.

* Fixed issues with path handling in output functions

* Solved "not a directory" error: create the directory automatically if it does not exist.

* Test for write_audio function completed.

* Testing for generate_audio function complete

* Test for predict function implemented, manually set seed for tests

* Formatting, removed whitespace

* Fixing test_predict, changing tolerance for difference

* Switched to torch.testing.assert_close function for test_predict

* Updates from PR comments; import style, assert style, README instructions, use pathlib instead of os

* Fixed hardcoding of paths, using pathlib paths defined in configs instead

* Testing file equality instead of multiple statements, formatting fixes, fixed load_model test

Former-commit-id: 727b3975d12143bb8d05ad51c7c299a773784b6a
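Several entries in the commit message concern path handling: creating the output directory automatically when it is missing, using `pathlib` instead of `os`, and testing `assemble_zip`. The following is a minimal sketch of that pattern using only the standard library. It is not the code from `src/output.py`, which this page does not show; the names `ensure_dir`, `assemble_zip_sketch`, and `demo`, and the directory layout, are assumptions made for illustration.

```python
import tempfile
import zipfile
from pathlib import Path


def ensure_dir(path: Path) -> Path:
    """Create the directory (and any parents) if it does not exist yet."""
    path.mkdir(parents=True, exist_ok=True)
    return path


def assemble_zip_sketch(title: str, audio_dir: Path, out_dir: Path) -> Path:
    """Bundle every .wav file in audio_dir into out_dir/<title>.zip (hypothetical helper)."""
    ensure_dir(out_dir)
    zip_path = out_dir / f"{title}.zip"
    with zipfile.ZipFile(zip_path, "w") as archive:
        for wav in sorted(audio_dir.glob("*.wav")):
            # Store only the file name so the archive has no directory prefix.
            archive.write(wav, arcname=wav.name)
    return zip_path


def demo() -> list:
    """Build a throwaway archive from two placeholder files and list its members."""
    with tempfile.TemporaryDirectory() as tmp:
        audio_dir = ensure_dir(Path(tmp) / "outputs" / "audio")
        for name in ("part_001.wav", "part_000.wav"):
            (audio_dir / name).write_bytes(b"placeholder")
        zip_path = assemble_zip_sketch("book", audio_dir, Path(tmp) / "outputs" / "zips")
        with zipfile.ZipFile(zip_path) as archive:
            return archive.namelist()
```

Calling `mkdir(parents=True, exist_ok=True)` before writing avoids the "not a directory" failure on a fresh checkout, and sorting the glob result keeps the archive order deterministic, which makes file-equality tests easier to write.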

- .coveragerc +1 -1

- .github/workflows/python-app.yml +3 -3

- .gitignore +3 -1

- README.md +24 -1

- app.py +66 -0

- models/latest_silero_models.yml +563 -0

- notebooks/1232-h.htm +0 -0

- notebooks/audiobook_gen_silero.ipynb +28 -387

- notebooks/parser_function_html.ipynb +389 -0

- notebooks/{pg174.epub → test.epub} +0 -0

- notebooks/test.htm +118 -0

- data/testfile.txt → outputs/.gitkeep +0 -0

- pytest.ini +2 -2

- requirements.txt +7 -0

- resources/audiobook_gen.png +0 -0

- resources/instructions.md +13 -0

- resources/speaker_en_0.wav +0 -0

- resources/speaker_en_110.wav +0 -0

- resources/speaker_en_29.wav +0 -0

- resources/speaker_en_41.wav +0 -0

- src/__inti__.py +0 -0

- src/config.py +23 -0

- src/file_readers.py +120 -0

- src/output.py +74 -0

- src/predict.py +110 -0

- tests/__pycache__/test_dummy.cpython-39-pytest-7.1.2.pyc +0 -0

- tests/data/test.epub +0 -0

- tests/data/test.htm +118 -0

- tests/data/test.pdf +0 -0

- tests/data/test.txt +19 -0

- tests/data/test_audio.pt +0 -0

- tests/data/test_predict.pt.REMOVED.git-id +1 -0

- tests/data/test_processed.txt +26 -0

- tests/test_config.py +9 -0

- tests/test_dummy.py +0 -2

- tests/test_file_readers.py +46 -0

- tests/test_output.py +50 -0

- tests/test_predict.py +63 -0

--- a/.coveragerc
+++ b/.coveragerc
@@ -1,5 +1,5 @@
 
-# .
+# .coveragerc for audiobook_gen
 
 [run]
 # data_file = put a coverage file name here!!!

--- a/.github/workflows/python-app.yml
+++ b/.github/workflows/python-app.yml
@@ -19,14 +19,14 @@ jobs:
 
     steps:
     - uses: actions/checkout@v3
-    - name: Set up Python 3.
+    - name: Set up Python 3.9.12
       uses: actions/setup-python@v3
       with:
-        python-version: "3.
+        python-version: "3.9.12"
     - name: Install dependencies
       run: |
         python -m pip install --upgrade pip
-        pip install flake8 pytest pytest-cov
+        pip install flake8 pytest==7.1.3 pytest-cov==3.0.0
         if [ -f requirements.txt ]; then pip install -r requirements.txt; fi
     - name: Lint with flake8
       run: |

--- a/.gitignore
+++ b/.gitignore
@@ -8,7 +8,9 @@ token
 docs/
 conda/
 tmp/
-
+notebooks/outputs/
+tests/__pycache__/
+tests/.pytest_cache
 
 tags
 *~

@@ -1,4 +1,27 @@

|

|

| 1 |

Audiobook Gen

|

| 2 |

=============

|

| 3 |

|

| 4 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

Audiobook Gen

|

| 2 |

=============

|

| 3 |

|

| 4 |

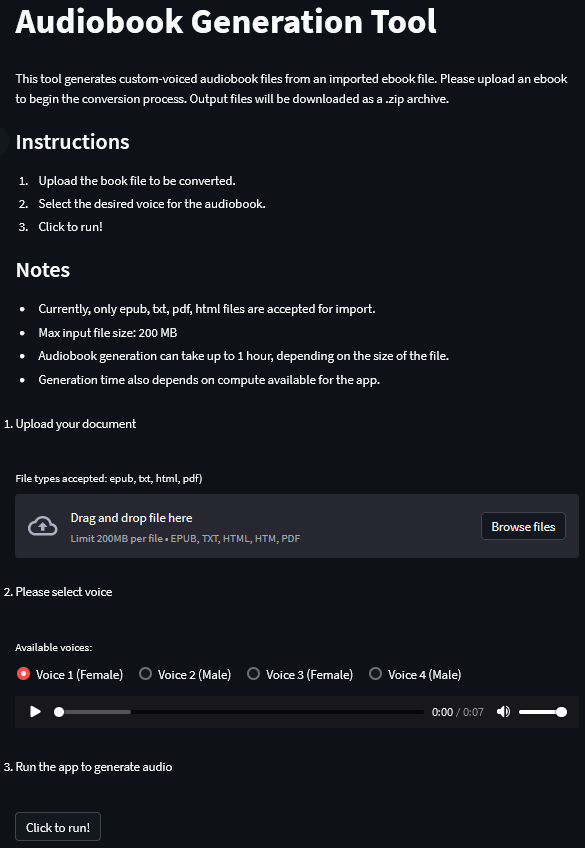

+

## Description

|

| 5 |

+

Audiobook Gen is a tool that allows the users to generate an audio file of text (e.g. audiobook), read in the voice of the user's choice. This tool is based on the Silero text-to-speech toolkit and uses Streamlit to deliver the application.

|

| 6 |

+

|

| 7 |

+

## Demo

|

| 8 |

+

A demonstration of this tool is hosted at HuggingFace Spaces - see [Audiobook_Gen](https://huggingface.co/spaces/mkutarna/audiobook_gen).

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

#### Instructions

|

| 13 |

+

1. Upload the book file to be converted.

|

| 14 |

+

2. Select the desired voice for the audiobook.

|

| 15 |

+

3. Click to run!

|

| 16 |

+

|

| 17 |

+

## Dependencies

|

| 18 |

+

- silero

|

| 19 |

+

- streamlit

|

| 20 |

+

- ebooklib

|

| 21 |

+

- PyPDF2

|

| 22 |

+

- bs4

|

| 23 |

+

- nltk

|

| 24 |

+

- stqdm

|

| 25 |

+

|

| 26 |

+

## License

|

| 27 |

+

See [LICENSE](https://github.com/mkutarna/audiobook_gen/blob/master/LICENSE)

|

|

--- /dev/null
+++ b/app.py
@@ -0,0 +1,66 @@
+import logging
+
+import streamlit as st
+
+from src import file_readers, predict, output, config
+
+logging.basicConfig(filename='app.log',
+                    filemode='w',
+                    format='%(name)s - %(levelname)s - %(message)s',
+                    level=logging.INFO,
+                    force=True)
+
+st.title('Audiobook Generation Tool')
+
+text_file = open(config.INSTRUCTIONS, "r")
+readme_text = text_file.read()
+text_file.close()
+st.markdown(readme_text)
+
+st.header('1. Upload your document')
+uploaded_file = st.file_uploader(
+    label="File types accepted: epub, txt, pdf)",
+    type=['epub', 'txt', 'pdf'])
+
+model = predict.load_model()
+
+st.header('2. Please select voice')
+speaker = st.radio('Available voices:', config.SPEAKER_LIST.keys(), horizontal=True)
+
+audio_path = config.resource_path / f'speaker_{config.SPEAKER_LIST.get(speaker)}.wav'
+audio_file = open(audio_path, 'rb')
+audio_bytes = audio_file.read()
+
+st.audio(audio_bytes, format='audio/ogg')
+
+st.header('3. Run the app to generate audio')
+if st.button('Click to run!'):
+    file_ext = uploaded_file.type
+    file_title = uploaded_file.name
+    if file_ext == 'application/epub+zip':
+        text, file_title = file_readers.read_epub(uploaded_file)
+    elif file_ext == 'text/plain':
+        file = uploaded_file.read()
+        text = file_readers.preprocess_text(file)
+    elif file_ext == 'application/pdf':
+        text = file_readers.read_pdf(uploaded_file)
+    else:
+        st.warning('Invalid file type', icon="⚠️")
+    st.success('Reading file complete!')
+
+    with st.spinner('Generating audio...'):
+        output.generate_audio(text, file_title, model, config.SPEAKER_LIST.get(speaker))
+    st.success('Audio generation complete!')
+
+    with st.spinner('Building zip file...'):
+        zip_file = output.assemble_zip(file_title)
+        title_name = f'{file_title}.zip'
+    st.success('Zip file prepared!')
+
+    with open(zip_file, "rb") as fp:
+        btn = st.download_button(
+            label="Download Audiobook",
+            data=fp,
+            file_name=title_name,
+            mime="application/zip"
+        )
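The `app.py` hunk above chooses a reader by comparing `uploaded_file.type` against MIME strings, and its `else` branch only shows a warning. As far as the hunk shows, an unsupported type (or a click with no file uploaded, where `uploaded_file` would be `None`) would still reach `generate_audio` without `text` being set. One way to make that explicit is a small dispatch table that returns `None` when nothing can be read. This is a sketch under assumptions, not the project's code: `read_txt`, `READERS`, and `read_upload` are hypothetical names, and the stand-in reader takes raw bytes rather than a Streamlit upload object.

```python
from typing import Callable, Dict, Optional, Tuple

Reader = Callable[[bytes, str], Tuple[str, str]]


def read_txt(data: bytes, name: str) -> Tuple[str, str]:
    """Hypothetical stand-in for a reader in src/file_readers.py: returns (text, title)."""
    return data.decode("utf-8", errors="replace"), name


# Map each supported MIME type to its reader; epub and pdf readers would be
# registered the same way ("application/epub+zip", "application/pdf").
READERS: Dict[str, Reader] = {
    "text/plain": read_txt,
}


def read_upload(mime_type: Optional[str], data: bytes, name: str) -> Optional[Tuple[str, str]]:
    """Return (text, title) for a supported MIME type, or None if it cannot be read."""
    reader = READERS.get(mime_type or "")
    if reader is None:
        return None
    return reader(data, name)
```

In a Streamlit script, the caller could show the warning and call `st.stop()` when `read_upload` returns `None`, so that the audio-generation and zip steps run only after a file has actually been read.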

--- /dev/null
+++ b/models/latest_silero_models.yml
@@ -0,0 +1,563 @@
+# pre-trained STT models
+stt_models:
+  en:
+    latest:
+      meta:
+        name: "en_v6"
+        sample: "https://models.silero.ai/examples/en_sample.wav"
+      labels: "https://models.silero.ai/models/en/en_v1_labels.json"
+      jit: "https://models.silero.ai/models/en/en_v6.jit"
+      onnx: "https://models.silero.ai/models/en/en_v5.onnx"
+      jit_q: "https://models.silero.ai/models/en/en_v6_q.jit"
+      jit_xlarge: "https://models.silero.ai/models/en/en_v6_xlarge.jit"
+      onnx_xlarge: "https://models.silero.ai/models/en/en_v6_xlarge.onnx"
+    v6:
+      meta:
+        name: "en_v6"
+        sample: "https://models.silero.ai/examples/en_sample.wav"
+      labels: "https://models.silero.ai/models/en/en_v1_labels.json"
+      jit: "https://models.silero.ai/models/en/en_v6.jit"
+      onnx: "https://models.silero.ai/models/en/en_v5.onnx"
+      jit_q: "https://models.silero.ai/models/en/en_v6_q.jit"
+      jit_xlarge: "https://models.silero.ai/models/en/en_v6_xlarge.jit"
+      onnx_xlarge: "https://models.silero.ai/models/en/en_v6_xlarge.onnx"
+    v5:
+      meta:
+        name: "en_v5"
+        sample: "https://models.silero.ai/examples/en_sample.wav"
+      labels: "https://models.silero.ai/models/en/en_v1_labels.json"
+      jit: "https://models.silero.ai/models/en/en_v5.jit"
+      onnx: "https://models.silero.ai/models/en/en_v5.onnx"
+      onnx_q: "https://models.silero.ai/models/en/en_v5_q.onnx"
+      jit_q: "https://models.silero.ai/models/en/en_v5_q.jit"
+      jit_xlarge: "https://models.silero.ai/models/en/en_v5_xlarge.jit"
+      onnx_xlarge: "https://models.silero.ai/models/en/en_v5_xlarge.onnx"
+    v4_0:
+      meta:
+        name: "en_v4_0"
+        sample: "https://models.silero.ai/examples/en_sample.wav"
+      labels: "https://models.silero.ai/models/en/en_v1_labels.json"
+      jit_large: "https://models.silero.ai/models/en/en_v4_0_jit_large.model"
+      onnx_large: "https://models.silero.ai/models/en/en_v4_0_large.onnx"
+    v3:
+      meta:
+        name: "en_v3"
+        sample: "https://models.silero.ai/examples/en_sample.wav"
+      labels: "https://models.silero.ai/models/en/en_v1_labels.json"
+      jit: "https://models.silero.ai/models/en/en_v3_jit.model"
+      onnx: "https://models.silero.ai/models/en/en_v3.onnx"
+      jit_q: "https://models.silero.ai/models/en/en_v3_jit_q.model"
+      jit_skip: "https://models.silero.ai/models/en/en_v3_jit_skips.model"
+      jit_large: "https://models.silero.ai/models/en/en_v3_jit_large.model"
+      onnx_large: "https://models.silero.ai/models/en/en_v3_large.onnx"
+      jit_xsmall: "https://models.silero.ai/models/en/en_v3_jit_xsmall.model"
+      jit_q_xsmall: "https://models.silero.ai/models/en/en_v3_jit_q_xsmall.model"
+      onnx_xsmall: "https://models.silero.ai/models/en/en_v3_xsmall.onnx"
+    v2:
+      meta:
+        name: "en_v2"
+        sample: "https://models.silero.ai/examples/en_sample.wav"
+      labels: "https://models.silero.ai/models/en/en_v1_labels.json"
+      jit: "https://models.silero.ai/models/en/en_v2_jit.model"
+      onnx: "https://models.silero.ai/models/en/en_v2.onnx"
+      tf: "https://models.silero.ai/models/en/en_v2_tf.tar.gz"
+    v1:
+      meta:
+        name: "en_v1"
+        sample: "https://models.silero.ai/examples/en_sample.wav"
+      labels: "https://models.silero.ai/models/en/en_v1_labels.json"
+      jit: "https://models.silero.ai/models/en/en_v1_jit.model"
+      onnx: "https://models.silero.ai/models/en/en_v1.onnx"
+      tf: "https://models.silero.ai/models/en/en_v1_tf.tar.gz"
+  de:
+    latest:
+      meta:
+        name: "de_v1"
+        sample: "https://models.silero.ai/examples/de_sample.wav"
+      labels: "https://models.silero.ai/models/de/de_v1_labels.json"
+      jit: "https://models.silero.ai/models/de/de_v1_jit.model"
+      onnx: "https://models.silero.ai/models/de/de_v1.onnx"
+      tf: "https://models.silero.ai/models/de/de_v1_tf.tar.gz"
+    v1:
+      meta:
+        name: "de_v1"
+        sample: "https://models.silero.ai/examples/de_sample.wav"
+      labels: "https://models.silero.ai/models/de/de_v1_labels.json"
+      jit_large: "https://models.silero.ai/models/de/de_v1_jit.model"
+      onnx: "https://models.silero.ai/models/de/de_v1.onnx"
+      tf: "https://models.silero.ai/models/de/de_v1_tf.tar.gz"
+    v3:
+      meta:
+        name: "de_v3"
+        sample: "https://models.silero.ai/examples/de_sample.wav"
+      labels: "https://models.silero.ai/models/de/de_v1_labels.json"
+      jit_large: "https://models.silero.ai/models/de/de_v3_large.jit"
+    v4:
+      meta:
+        name: "de_v4"
+        sample: "https://models.silero.ai/examples/de_sample.wav"
+      labels: "https://models.silero.ai/models/de/de_v1_labels.json"
+      jit_large: "https://models.silero.ai/models/de/de_v4_large.jit"
+      onnx_large: "https://models.silero.ai/models/de/de_v4_large.onnx"
+  es:
+    latest:
+      meta:
+        name: "es_v1"
+        sample: "https://models.silero.ai/examples/es_sample.wav"
+      labels: "https://models.silero.ai/models/es/es_v1_labels.json"
+      jit: "https://models.silero.ai/models/es/es_v1_jit.model"
+      onnx: "https://models.silero.ai/models/es/es_v1.onnx"
+      tf: "https://models.silero.ai/models/es/es_v1_tf.tar.gz"
+  ua:
+    latest:
+      meta:
+        name: "ua_v3"
+        sample: "https://models.silero.ai/examples/ua_sample.wav"
+        credits:
+          datasets:
+            speech-recognition-uk: https://github.com/egorsmkv/speech-recognition-uk
+      labels: "https://models.silero.ai/models/ua/ua_v1_labels.json"
+      jit: "https://models.silero.ai/models/ua/ua_v3_jit.model"
+      jit_q: "https://models.silero.ai/models/ua/ua_v3_jit_q.model"
+      onnx: "https://models.silero.ai/models/ua/ua_v3.onnx"
+    v3:
+      meta:
+        name: "ua_v3"
+        sample: "https://models.silero.ai/examples/ua_sample.wav"
+        credits:
+          datasets:
+            speech-recognition-uk: https://github.com/egorsmkv/speech-recognition-uk
+      labels: "https://models.silero.ai/models/ua/ua_v1_labels.json"
+      jit: "https://models.silero.ai/models/ua/ua_v3_jit.model"
+      jit_q: "https://models.silero.ai/models/ua/ua_v3_jit_q.model"
+      onnx: "https://models.silero.ai/models/ua/ua_v3.onnx"
+    v1:
+      meta:
+        name: "ua_v1"
+        sample: "https://models.silero.ai/examples/ua_sample.wav"
+        credits:
+          datasets:
+            speech-recognition-uk: https://github.com/egorsmkv/speech-recognition-uk
+      labels: "https://models.silero.ai/models/ua/ua_v1_labels.json"
+      jit: "https://models.silero.ai/models/ua/ua_v1_jit.model"
+      jit_q: "https://models.silero.ai/models/ua/ua_v1_jit_q.model"

+tts_models:
+  ru:
+    v3_1_ru:
+      latest:
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        package: 'https://models.silero.ai/models/tts/ru/v3_1_ru.pt'
+        sample_rate: [8000, 24000, 48000]
+    ru_v3:
+      latest:
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        package: 'https://models.silero.ai/models/tts/ru/ru_v3.pt'
+        sample_rate: [8000, 24000, 48000]
+    aidar_v2:
+      latest:
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        package: 'https://models.silero.ai/models/tts/ru/v2_aidar.pt'
+        sample_rate: [8000, 16000]
+    aidar_8khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_aidar_8000.jit'
+        sample_rate: 8000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_aidar_8000.jit'
+        sample_rate: 8000
+    aidar_16khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_aidar_16000.jit'
+        sample_rate: 16000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_aidar_16000.jit'
+        sample_rate: 16000
+    baya_v2:
+      latest:
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        package: 'https://models.silero.ai/models/tts/ru/v2_baya.pt'
+        sample_rate: [8000, 16000]
+    baya_8khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_baya_8000.jit'
+        sample_rate: 8000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_baya_8000.jit'
+        sample_rate: 8000
+    baya_16khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_baya_16000.jit'
+        sample_rate: 16000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_baya_16000.jit'
+        sample_rate: 16000
+    irina_v2:
+      latest:
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        package: 'https://models.silero.ai/models/tts/ru/v2_irina.pt'
+        sample_rate: [8000, 16000]
+    irina_8khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_irina_8000.jit'
+        sample_rate: 8000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_irina_8000.jit'
+        sample_rate: 8000
+    irina_16khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_irina_16000.jit'
+        sample_rate: 16000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_irina_16000.jit'
+        sample_rate: 16000
+    kseniya_v2:
+      latest:
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        package: 'https://models.silero.ai/models/tts/ru/v2_kseniya.pt'
+        sample_rate: [8000, 16000]
+    kseniya_8khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_kseniya_8000.jit'
+        sample_rate: 8000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_kseniya_8000.jit'
+        sample_rate: 8000
+    kseniya_16khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_kseniya_16000.jit'
+        sample_rate: 16000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_kseniya_16000.jit'
+        sample_rate: 16000
+    natasha_v2:
+      latest:
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        package: 'https://models.silero.ai/models/tts/ru/v2_natasha.pt'
+        sample_rate: [8000, 16000]
+    natasha_8khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_natasha_8000.jit'
+        sample_rate: 8000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_natasha_8000.jit'
+        sample_rate: 8000
+    natasha_16khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_natasha_16000.jit'
+        sample_rate: 16000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_natasha_16000.jit'
+        sample_rate: 16000
+    ruslan_v2:
+      latest:
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        package: 'https://models.silero.ai/models/tts/ru/v2_ruslan.pt'
+        sample_rate: [8000, 16000]
+    ruslan_8khz:
+      latest:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'
+        jit: 'https://models.silero.ai/models/tts/ru/v1_ruslan_8000.jit'
+        sample_rate: 8000
+      v1:
+        tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'
+        example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'

|

| 305 |

+

jit: 'https://models.silero.ai/models/tts/ru/v1_ruslan_8000.jit'

|

| 306 |

+

sample_rate: 8000

|

| 307 |

+

ruslan_16khz:

|

| 308 |

+

latest:

|

| 309 |

+

tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'

|

| 310 |

+

example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'

|

| 311 |

+

jit: 'https://models.silero.ai/models/tts/ru/v1_ruslan_16000.jit'

|

| 312 |

+

sample_rate: 16000

|

| 313 |

+

v1:

|

| 314 |

+

tokenset: '_~абвгдеёжзийклмнопрстуфхцчшщъыьэюя +.,!?…:;–'

|

| 315 |

+

example: 'В н+едрах т+ундры в+ыдры в г+етрах т+ырят в в+ёдра +ядра к+едров.'

|

| 316 |

+

jit: 'https://models.silero.ai/models/tts/ru/v1_ruslan_16000.jit'

|

| 317 |

+

sample_rate: 16000

|

| 318 |

+

en:

|

| 319 |

+

v3_en:

|

| 320 |

+

latest:

|

| 321 |

+

example: 'Can you can a canned can into an un-canned can like a canner can can a canned can into an un-canned can?'

|

| 322 |

+

package: 'https://models.silero.ai/models/tts/en/v3_en.pt'

|

| 323 |

+

sample_rate: [8000, 24000, 48000]

|

| 324 |

+

v3_en_indic:

|

| 325 |

+

latest:

|

| 326 |

+

example: 'Can you can a canned can into an un-canned can like a canner can can a canned can into an un-canned can?'

|

| 327 |

+

package: 'https://models.silero.ai/models/tts/en/v3_en_indic.pt'

|

| 328 |

+

sample_rate: [8000, 24000, 48000]

|

| 329 |

+

lj_v2:

|

| 330 |

+

latest:

|

| 331 |

+

example: 'Can you can a canned can into an un-canned can like a canner can can a canned can into an un-canned can?'

|

| 332 |

+

package: 'https://models.silero.ai/models/tts/en/v2_lj.pt'

|

| 333 |

+

sample_rate: [8000, 16000]

|

| 334 |

+

lj_8khz:

|

| 335 |

+

latest:

|

| 336 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyz .,!?…:;–'

|

| 337 |

+

example: 'Can you can a canned can into an un-canned can like a canner can can a canned can into an un-canned can?'

|

| 338 |

+

jit: 'https://models.silero.ai/models/tts/en/v1_lj_8000.jit'

|

| 339 |

+

sample_rate: 8000

|

| 340 |

+

v1:

|

| 341 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyz .,!?…:;–'

|

| 342 |

+

example: 'Can you can a canned can into an un-canned can like a canner can can a canned can into an un-canned can?'

|

| 343 |

+

jit: 'https://models.silero.ai/models/tts/en/v1_lj_8000.jit'

|

| 344 |

+

sample_rate: 8000

|

| 345 |

+

lj_16khz:

|

| 346 |

+

latest:

|

| 347 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyz .,!?…:;–'

|

| 348 |

+

example: 'Can you can a canned can into an un-canned can like a canner can can a canned can into an un-canned can?'

|

| 349 |

+

jit: 'https://models.silero.ai/models/tts/en/v1_lj_16000.jit'

|

| 350 |

+

sample_rate: 16000

|

| 351 |

+

v1:

|

| 352 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyz .,!?…:;–'

|

| 353 |

+

example: 'Can you can a canned can into an un-canned can like a canner can can a canned can into an un-canned can?'

|

| 354 |

+

jit: 'https://models.silero.ai/models/tts/en/v1_lj_16000.jit'

|

| 355 |

+

sample_rate: 16000

|

| 356 |

+

de:

|

| 357 |

+

v3_de:

|

| 358 |

+

latest:

|

| 359 |

+

example: 'Fischers Fritze fischt frische Fische, Frische Fische fischt Fischers Fritze.'

|

| 360 |

+

package: 'https://models.silero.ai/models/tts/de/v3_de.pt'

|

| 361 |

+

sample_rate: [8000, 24000, 48000]

|

| 362 |

+

thorsten_v2:

|

| 363 |

+

latest:

|

| 364 |

+

example: 'Fischers Fritze fischt frische Fische, Frische Fische fischt Fischers Fritze.'

|

| 365 |

+

package: 'https://models.silero.ai/models/tts/de/v2_thorsten.pt'

|

| 366 |

+

sample_rate: [8000, 16000]

|

| 367 |

+

thorsten_8khz:

|

| 368 |

+

latest:

|

| 369 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzäöüß .,!?…:;–'

|

| 370 |

+

example: 'Fischers Fritze fischt frische Fische, Frische Fische fischt Fischers Fritze.'

|

| 371 |

+

jit: 'https://models.silero.ai/models/tts/de/v1_thorsten_8000.jit'

|

| 372 |

+

sample_rate: 8000

|

| 373 |

+

v1:

|

| 374 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzäöüß .,!?…:;–'

|

| 375 |

+

example: 'Fischers Fritze fischt frische Fische, Frische Fische fischt Fischers Fritze.'

|

| 376 |

+

jit: 'https://models.silero.ai/models/tts/de/v1_thorsten_8000.jit'

|

| 377 |

+

sample_rate: 8000

|

| 378 |

+

thorsten_16khz:

|

| 379 |

+

latest:

|

| 380 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzäöüß .,!?…:;–'

|

| 381 |

+

example: 'Fischers Fritze fischt frische Fische, Frische Fische fischt Fischers Fritze.'

|

| 382 |

+

jit: 'https://models.silero.ai/models/tts/de/v1_thorsten_16000.jit'

|

| 383 |

+

sample_rate: 16000

|

| 384 |

+

v1:

|

| 385 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzäöüß .,!?…:;–'

|

| 386 |

+

example: 'Fischers Fritze fischt frische Fische, Frische Fische fischt Fischers Fritze.'

|

| 387 |

+

jit: 'https://models.silero.ai/models/tts/de/v1_thorsten_16000.jit'

|

| 388 |

+

sample_rate: 16000

|

| 389 |

+

es:

|

| 390 |

+

v3_es:

|

| 391 |

+

latest:

|

| 392 |

+

example: 'Hoy ya es ayer y ayer ya es hoy, ya llegó el día, y hoy es hoy.'

|

| 393 |

+

package: 'https://models.silero.ai/models/tts/es/v3_es.pt'

|

| 394 |

+

sample_rate: [8000, 24000, 48000]

|

| 395 |

+

tux_v2:

|

| 396 |

+

latest:

|

| 397 |

+

example: 'Hoy ya es ayer y ayer ya es hoy, ya llegó el día, y hoy es hoy.'

|

| 398 |

+

package: 'https://models.silero.ai/models/tts/es/v2_tux.pt'

|

| 399 |

+

sample_rate: [8000, 16000]

|

| 400 |

+

tux_8khz:

|

| 401 |

+

latest:

|

| 402 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzáéíñóú .,!?…:;–¡¿'

|

| 403 |

+

example: 'Hoy ya es ayer y ayer ya es hoy, ya llegó el día, y hoy es hoy.'

|

| 404 |

+

jit: 'https://models.silero.ai/models/tts/es/v1_tux_8000.jit'

|

| 405 |

+

sample_rate: 8000

|

| 406 |

+

v1:

|

| 407 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzáéíñóú .,!?…:;–¡¿'

|

| 408 |

+

example: 'Hoy ya es ayer y ayer ya es hoy, ya llegó el día, y hoy es hoy.'

|

| 409 |

+

jit: 'https://models.silero.ai/models/tts/es/v1_tux_8000.jit'

|

| 410 |

+

sample_rate: 8000

|

| 411 |

+

tux_16khz:

|

| 412 |

+

latest:

|

| 413 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzáéíñóú .,!?…:;–¡¿'

|

| 414 |

+

example: 'Hoy ya es ayer y ayer ya es hoy, ya llegó el día, y hoy es hoy.'

|

| 415 |

+

jit: 'https://models.silero.ai/models/tts/es/v1_tux_16000.jit'

|

| 416 |

+

sample_rate: 16000

|

| 417 |

+

v1:

|

| 418 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzáéíñóú .,!?…:;–¡¿'

|

| 419 |

+

example: 'Hoy ya es ayer y ayer ya es hoy, ya llegó el día, y hoy es hoy.'

|

| 420 |

+

jit: 'https://models.silero.ai/models/tts/es/v1_tux_16000.jit'

|

| 421 |

+

sample_rate: 16000

|

| 422 |

+

fr:

|

| 423 |

+

v3_fr:

|

| 424 |

+

latest:

|

| 425 |

+

example: 'Je suis ce que je suis, et si je suis ce que je suis, qu’est ce que je suis.'

|

| 426 |

+

package: 'https://models.silero.ai/models/tts/fr/v3_fr.pt'

|

| 427 |

+

sample_rate: [8000, 24000, 48000]

|

| 428 |

+

gilles_v2:

|

| 429 |

+

latest:

|

| 430 |

+

example: 'Je suis ce que je suis, et si je suis ce que je suis, qu’est ce que je suis.'

|

| 431 |

+

package: 'https://models.silero.ai/models/tts/fr/v2_gilles.pt'

|

| 432 |

+

sample_rate: [8000, 16000]

|

| 433 |

+

gilles_8khz:

|

| 434 |

+

latest:

|

| 435 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzéàèùâêîôûç .,!?…:;–'

|

| 436 |

+

example: 'Je suis ce que je suis, et si je suis ce que je suis, qu’est ce que je suis.'

|

| 437 |

+

jit: 'https://models.silero.ai/models/tts/fr/v1_gilles_8000.jit'

|

| 438 |

+

sample_rate: 8000

|

| 439 |

+

v1:

|

| 440 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzéàèùâêîôûç .,!?…:;–'

|

| 441 |

+

example: 'Je suis ce que je suis, et si je suis ce que je suis, qu’est ce que je suis.'

|

| 442 |

+

jit: 'https://models.silero.ai/models/tts/fr/v1_gilles_8000.jit'

|

| 443 |

+

sample_rate: 8000

|

| 444 |

+

gilles_16khz:

|

| 445 |

+

latest:

|

| 446 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzéàèùâêîôûç .,!?…:;–'

|

| 447 |

+

example: 'Je suis ce que je suis, et si je suis ce que je suis, qu’est ce que je suis.'

|

| 448 |

+

jit: 'https://models.silero.ai/models/tts/fr/v1_gilles_16000.jit'

|

| 449 |

+

sample_rate: 16000

|

| 450 |

+

v1:

|

| 451 |

+

tokenset: '_~abcdefghijklmnopqrstuvwxyzéàèùâêîôûç .,!?…:;–'

|

| 452 |

+

example: 'Je suis ce que je suis, et si je suis ce que je suis, qu’est ce que je suis.'

|

| 453 |

+

jit: 'https://models.silero.ai/models/tts/fr/v1_gilles_16000.jit'

|

| 454 |

+

sample_rate: 16000

|

| 455 |

+

ba:

|

| 456 |

+

aigul_v2:

|

| 457 |

+

latest:

|

| 458 |

+

example: 'Салауат Юлаевтың тормошо һәм яҙмышы хаҡындағы документтарҙың һәм шиғри әҫәрҙәренең бик аҙ өлөшө генә һаҡланған.'

|

| 459 |

+

package: 'https://models.silero.ai/models/tts/ba/v2_aigul.pt'

|

| 460 |

+

sample_rate: [8000, 16000]

|

| 461 |

+

language_name: 'bashkir'

|

| 462 |

+

xal:

|

| 463 |

+

v3_xal:

|

| 464 |

+

latest:

|

| 465 |

+

example: 'Һорвн, дөрвн күн ирәд, һазань чиңгнв. Байн Цецн хаана һорвн көвүн күүндҗәнә.'

|

| 466 |

+

package: 'https://models.silero.ai/models/tts/xal/v3_xal.pt'

|

| 467 |

+

sample_rate: [8000, 24000, 48000]

|

| 468 |

+

erdni_v2:

|

| 469 |

+

latest:

|

| 470 |

+

example: 'Һорвн, дөрвн күн ирәд, һазань чиңгнв. Байн Цецн хаана һорвн көвүн күүндҗәнә.'

|

| 471 |

+

package: 'https://models.silero.ai/models/tts/xal/v2_erdni.pt'

|

| 472 |

+

sample_rate: [8000, 16000]

|

| 473 |

+

language_name: 'kalmyk'

|

| 474 |

+

tt:

|

| 475 |

+

v3_tt:

|

| 476 |

+

latest:

|

| 477 |

+

example: 'Исәнмесез, саумысез, нишләп кәҗәгезне саумыйсыз, әтәчегез күкәй салган, нишләп чыгып алмыйсыз.'

|

| 478 |

+

package: 'https://models.silero.ai/models/tts/tt/v3_tt.pt'

|

| 479 |

+

sample_rate: [8000, 24000, 48000]

|

| 480 |

+

dilyara_v2:

|

| 481 |

+

latest:

|

| 482 |

+

example: 'Ис+әнмесез, с+аумысез, нишл+әп кәҗәгезн+е с+аумыйсыз, әтәчег+ез күк+әй салг+ан, нишл+әп чыг+ып +алмыйсыз.'

|

| 483 |

+

package: 'https://models.silero.ai/models/tts/tt/v2_dilyara.pt'

|

| 484 |

+

sample_rate: [8000, 16000]

|

| 485 |

+

language_name: 'tatar'

|

| 486 |

+

uz:

|

| 487 |

+

v3_uz:

|

| 488 |

+

latest:

|

| 489 |

+

example: 'Tanishganimdan xursandman.'

|

| 490 |

+

package: 'https://models.silero.ai/models/tts/uz/v3_uz.pt'

|

| 491 |

+

sample_rate: [8000, 24000, 48000]

|

| 492 |

+

dilnavoz_v2:

|

| 493 |

+

latest:

|

| 494 |

+

example: 'Tanishganimdan xursandman.'

|

| 495 |

+

package: 'https://models.silero.ai/models/tts/uz/v2_dilnavoz.pt'

|

| 496 |

+

sample_rate: [8000, 16000]

|

| 497 |

+

language_name: 'uzbek'

|

| 498 |

+

ua:

|

| 499 |

+

v3_ua:

|

| 500 |

+

latest:

|

| 501 |

+

example: 'К+отики - пухн+асті жив+отики.'

|

| 502 |

+

package: 'https://models.silero.ai/models/tts/ua/v3_ua.pt'

|

| 503 |

+

sample_rate: [8000, 24000, 48000]

|

| 504 |

+

mykyta_v2:

|

| 505 |

+

latest:

|

| 506 |

+

example: 'К+отики - пухн+асті жив+отики.'

|

| 507 |

+

package: 'https://models.silero.ai/models/tts/ua/v22_mykyta_48k.pt'

|

| 508 |

+

sample_rate: [8000, 24000, 48000]

|

| 509 |

+

language_name: 'ukrainian'

|

| 510 |

+

indic:

|

| 511 |

+

v3_indic:

|

| 512 |

+

latest:

|

| 513 |

+

example: 'prasidda kabīra adhyētā, puruṣōttama agravāla kā yaha śōdha ālēkha, usa rāmānaṁda kī khōja karatā hai'

|

| 514 |

+

package: 'https://models.silero.ai/models/tts/indic/v3_indic.pt'

|

| 515 |

+

sample_rate: [8000, 24000, 48000]

|

| 516 |

+

multi:

|

| 517 |

+

multi_v2:

|

| 518 |

+

latest:

|

| 519 |

+

package: 'https://models.silero.ai/models/tts/multi/v2_multi.pt'

|

| 520 |

+

sample_rate: [8000, 16000]

|

| 521 |

+

speakers:

|

| 522 |

+

aidar:

|

| 523 |

+

lang: 'ru'

|

| 524 |

+

example: 'Съ+ешьте ещ+ё +этих м+ягких франц+узских б+улочек, д+а в+ыпейте ч+аю.'

|

| 525 |

+

baya:

|

| 526 |

+

lang: 'ru'

|

| 527 |

+

example: 'Съ+ешьте ещ+ё +этих м+ягких франц+узских б+улочек, д+а в+ыпейте ч+аю.'

|

| 528 |

+

kseniya:

|

| 529 |

+

lang: 'ru'

|

| 530 |

+

example: 'Съ+ешьте ещ+ё +этих м+ягких франц+узских б+улочек, д+а в+ыпейте ч+аю.'

|

| 531 |

+

irina:

|

| 532 |

+

lang: 'ru'

|

| 533 |

+

example: 'Съ+ешьте ещ+ё +этих м+ягких франц+узских б+улочек, д+а в+ыпейте ч+аю.'

|

| 534 |

+

ruslan:

|

| 535 |

+

lang: 'ru'

|

| 536 |

+

example: 'Съ+ешьте ещ+ё +этих м+ягких франц+узских б+улочек, д+а в+ыпейте ч+аю.'

|

| 537 |

+

natasha:

|

| 538 |

+

lang: 'ru'

|

| 539 |

+

example: 'Съ+ешьте ещ+ё +этих м+ягких франц+узских б+улочек, д+а в+ыпейте ч+аю.'

|

| 540 |

+

thorsten:

|

| 541 |

+

lang: 'de'

|

| 542 |

+

example: 'Fischers Fritze fischt frische Fische, Frische Fische fischt Fischers Fritze.'

|

| 543 |

+

tux:

|

| 544 |

+

lang: 'es'

|

| 545 |

+

example: 'Hoy ya es ayer y ayer ya es hoy, ya llegó el día, y hoy es hoy.'

|

| 546 |

+

gilles:

|

| 547 |

+

lang: 'fr'

|

| 548 |

+

example: 'Je suis ce que je suis, et si je suis ce que je suis, qu’est ce que je suis.'

|

| 549 |

+

lj:

|

| 550 |

+

lang: 'en'

|

| 551 |

+

example: 'Can you can a canned can into an un-canned can like a canner can can a canned can into an un-canned can?'

|

| 552 |

+

dilyara:

|

| 553 |

+

lang: 'tt'

|

| 554 |

+

example: 'Пес+и пес+и песик+әй, борыннар+ы бәләк+әй.'

|

| 555 |

+

te_models:

|

| 556 |

+

latest:

|

| 557 |

+

package: "https://models.silero.ai/te_models/v2_4lang_q.pt"

|

| 558 |

+

languages: ['en', 'de', 'ru', 'es']

|

| 559 |

+

punct: '.,-!?—'

|

| 560 |

+

v2:

|

| 561 |

+

package: "https://models.silero.ai/te_models/v2_4lang_q.pt"

|

| 562 |

+

languages: ['en', 'de', 'ru', 'es']

|

| 563 |

+

punct: '.,-!?—'

|

|
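The entries in the configuration above come in two shapes: older ones carry a `jit` URL and a single integer `sample_rate`, while newer ones carry a `package` URL and a list of rates. Below is a minimal sketch of how a caller might normalize both shapes. The dictionaries are a hand-copied excerpt of two `latest` blocks, and the helper names (`model_url`, `supported_rates`, `check_rate`) are hypothetical and not part of this repository or of Silero.

```python
from typing import Any, Dict, List

# Hand-copied excerpt of two `latest` blocks from the configuration above,
# written as plain dicts so the sketch needs no YAML parser.
ENTRIES: Dict[str, Dict[str, Any]] = {
    "v3_en": {
        "package": "https://models.silero.ai/models/tts/en/v3_en.pt",
        "sample_rate": [8000, 24000, 48000],
    },
    "lj_16khz": {
        "jit": "https://models.silero.ai/models/tts/en/v1_lj_16000.jit",
        "sample_rate": 16000,
    },
}


def model_url(entry: Dict[str, Any]) -> str:
    """Return the download URL, whichever of 'package' or 'jit' is present."""
    url = entry.get("package") or entry.get("jit")
    if url is None:
        raise KeyError("entry has neither 'package' nor 'jit'")
    return url


def supported_rates(entry: Dict[str, Any]) -> List[int]:
    """Normalize 'sample_rate' (an int or a list of ints) to a list."""
    rate = entry["sample_rate"]
    return [rate] if isinstance(rate, int) else list(rate)


def check_rate(entry: Dict[str, Any], requested: int) -> bool:
    """Tell whether the requested sample rate is listed for this entry."""
    return requested in supported_rates(entry)
```

A check like this is relevant to the notebook in this commit, which sets `sample_rate = 24000`: the `v3_*` entries list that rate, whereas the `*_v2` entries list only 8000 and 16000.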

The diff for this file is too large to render.


|

|

|

|

@@ -45,7 +45,7 @@

|

|

| 45 |

},

|

| 46 |

{

|

| 47 |

"cell_type": "code",

|

| 48 |

-

"execution_count":

|

| 49 |

"metadata": {},

|

| 50 |

"outputs": [],

|

| 51 |

"source": [

|

|

@@ -79,11 +79,11 @@

|

|

| 79 |

},

|

| 80 |

{

|

| 81 |

"cell_type": "code",

|

| 82 |

-

"execution_count":

|

| 83 |

"metadata": {},

|

| 84 |

"outputs": [],

|

| 85 |

"source": [

|

| 86 |

-

"max_char_len =

|

| 87 |

"sample_rate = 24000"

|

| 88 |

]

|

| 89 |

},

|

|

@@ -122,11 +122,11 @@

|

|

| 122 |

},

|

| 123 |

{

|

| 124 |

"cell_type": "code",

|

| 125 |

-

"execution_count":

|

| 126 |

"metadata": {},

|

| 127 |

"outputs": [],

|

| 128 |

"source": [

|

| 129 |

-

"ebook_path = '

|

| 130 |

]

|

| 131 |

},

|

| 132 |

{

|

|

@@ -144,7 +144,7 @@

|

|

| 144 |

},

|

| 145 |

{

|

| 146 |

"cell_type": "code",

|

| 147 |

-

"execution_count":

|

| 148 |

"metadata": {},

|

| 149 |

"outputs": [],

|

| 150 |

"source": [

|

|

@@ -198,24 +198,9 @@

|

|

| 198 |

},

|

| 199 |

{

|

| 200 |

"cell_type": "code",

|

| 201 |

-

"execution_count":

|

| 202 |

"metadata": {},

|

| 203 |

-

"outputs": [

|

| 204 |

-

{

|

| 205 |

-

"data": {

|

| 206 |

-

"application/vnd.jupyter.widget-view+json": {

|

| 207 |

-

"model_id": "7c7a0d27b2984cac933f97c68905d393",

|

| 208 |

-

"version_major": 2,

|

| 209 |

-

"version_minor": 0

|

| 210 |

-

},

|

| 211 |

-

"text/plain": [

|

| 212 |

-

" 0%| | 0/28 [00:00<?, ?it/s]"

|

| 213 |

-

]

|

| 214 |

-

},

|

| 215 |

-

"metadata": {},

|

| 216 |

-

"output_type": "display_data"

|

| 217 |

-

}

|

| 218 |

-

],

|

| 219 |

"source": [

|

| 220 |

"ebook, title = read_ebook(ebook_path)"

|

| 221 |

]

|

|

@@ -229,24 +214,24 @@

|

|

| 229 |

},

|

| 230 |

{

|

| 231 |

"cell_type": "code",

|

| 232 |

-

"execution_count":

|

| 233 |

"metadata": {},

|

| 234 |

-

"outputs": [

|

| 235 |

-

{

|

| 236 |

-

"name": "stdout",

|

| 237 |

-

"output_type": "stream",

|