# Supplementary Material

## Training and test data

We provide a [website](https://zju3dv.github.io/zju_mocap/) for visualization.

The multi-view videos are captured by 23 cameras. We train our model on the "0, 6, 12, 18" cameras and test it on the remaining cameras.

The following table shows the detailed frame numbers for training and test of each video. Since the video length of each subject is different, we choose the appropriate number of frames for training and test.

**Note that since rendering is very slow, we test our model every 30 frames. For example, although the frame range of video 313 is "0-59", we only test our model on the 0-th and 30-th frames.**

| Video | 313 | 315 | 377 | 386 | 387 | 390 | 392 | 393 | 394 |

| :-----: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: | :---: |

| Number of frames | 1470 | 2185 | 617 | 646 | 654 | 1171 | 556 | 658 | 859 |

| Frame Range (Training) | 0-59 | 0-399 | 0-299 | 0-299 | 0-299 | 700-999 | 0-299 | 0-299 | 0-299 |

| Frame Range (Unseen human poses) | 60-1060 | 400-1400 | 300-617 | 300-646 | 300-654 | 0-700 | 300-556 | 300-658 | 300-859 |

## Evaluation metrics

**We save our rendering results on novel views of training frames and unseen human poses at [here](https://zjueducn-my.sharepoint.com/:u:/g/personal/pengsida_zju_edu_cn/Ea3VOUy204VAiVJ-V-OGd9YBxdhbtfpS-U6icD_rDq0mUQ?e=cAcylK).**

As described in the paper, we evaluate our model in terms of the PSNR and SSIM metrics.

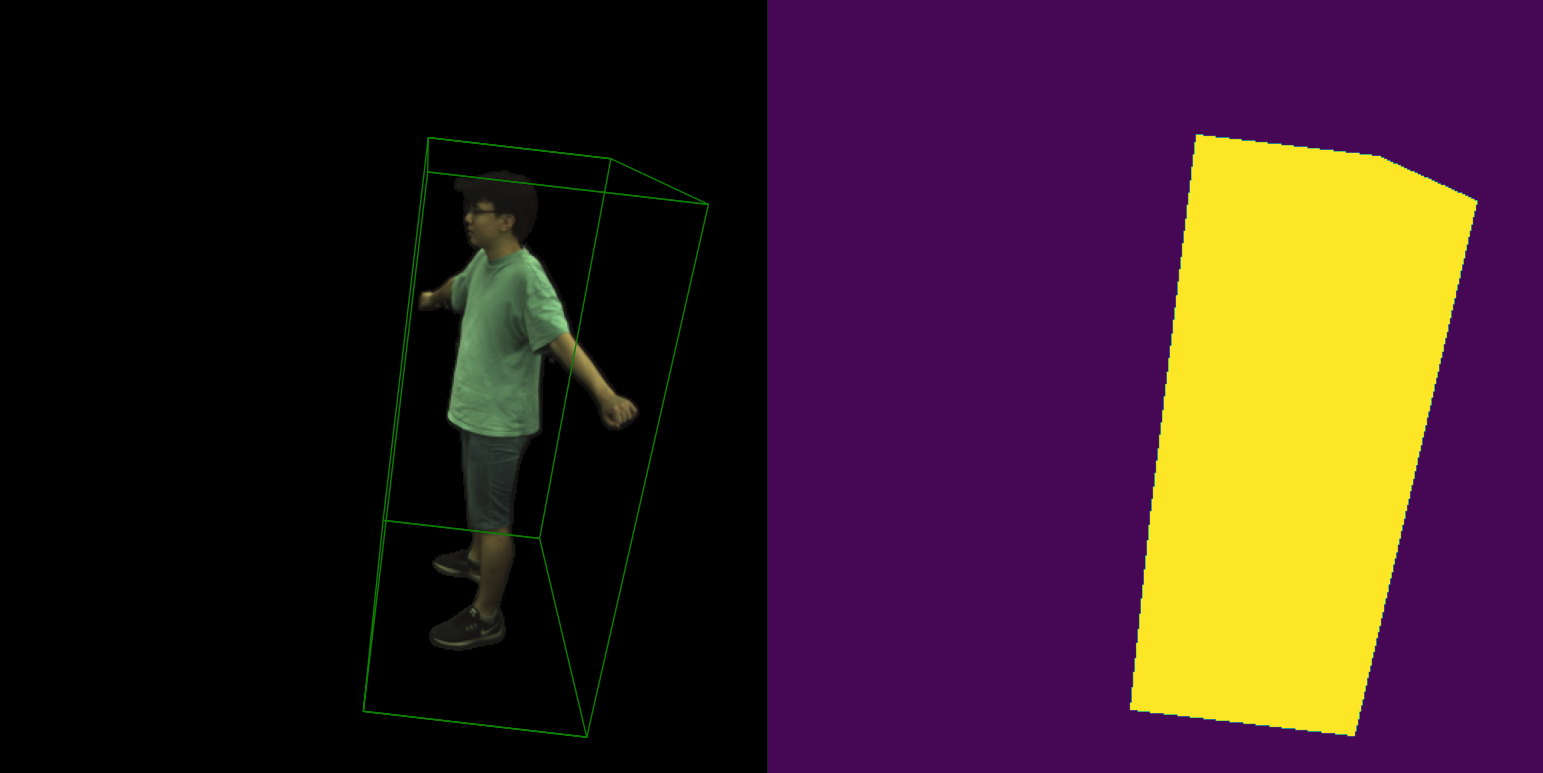

A straightforward way for evaluation is calculating the metrics on the whole image. Since we already know the 3D bounding box of the target human, we can project the 3D box to obtain a `bound_mask` and make the colors of pixels outside the mask as zero, as shown in the following figure.

As a result, the PSNR and SSIM metrics appear very high performances, as shown in the following table.

|

Training frames |

Unseen human poses |

|

PSNR |

SSIM |

PSNR |

SSIM |

| 313 |

35.21 |

0.985 |

29.02 |

0.964 |

| 315 |

33.07 |

0.988 |

25.70 |

0.957 |

| 392 |

35.76 |

0.984 |

31.53 |

0.971 |

| 393 |

33.24 |

0.979 |

28.40 |

0.960 |

| 394 |

34.31 |

0.980 |

29.61 |

0.961 |

| 377 |

33.86 |

0.985 |

30.60 |

0.977 |

| 386 |

36.07 |

0.984 |

33.05 |

0.974 |

| 390 |

34.48 |

0.980 |

30.25 |

0.964 |

| 387 |

31.39 |

0.975 |

27.68 |

0.961 |

|

34.15 |

0.982 |

29.54 |

0.966 |

To overcome this problem, a solution is only calculating the metrics on pixels inside the `bound_mask`. Since the SSIM metric requires the input to have the image format, we first compute the 2D box that bounds the `bound_mask` and then crop the corresponding image region.

```python

def ssim_metric(rgb_pred, rgb_gt, batch):

mask_at_box = batch['mask_at_box'][0].detach().cpu().numpy()

H, W = int(cfg.H * cfg.ratio), int(cfg.W * cfg.ratio)

mask_at_box = mask_at_box.reshape(H, W)

# convert the pixels into an image

img_pred = np.zeros((H, W, 3))

img_pred[mask_at_box] = rgb_pred

img_gt = np.zeros((H, W, 3))

img_gt[mask_at_box] = rgb_gt

# crop the object region

x, y, w, h = cv2.boundingRect(mask_at_box.astype(np.uint8))

img_pred = img_pred[y:y + h, x:x + w]

img_gt = img_gt[y:y + h, x:x + w]

# compute the ssim

ssim = compare_ssim(img_pred, img_gt, multichannel=True)

return ssim

```

The following table lists corresponding results.

|

Training frames |

Unseen human poses |

|

PSNR |

SSIM |

PSNR |

SSIM |

| 313 |

30.56 |

0.971 |

23.95 |

0.905 |

| 315 |

27.24 |

0.962 |

19.56 |

0.852 |

| 392 |

29.44 |

0.946 |

25.76 |

0.909 |

| 394 |

28.44 |

0.940 |

23.80 |

0.878 |

| 393 |

27.58 |

0.939 |

23.25 |

0.893 |

| 377 |

27.64 |

0.951 |

23.91 |

0.909 |

| 386 |

28.60 |

0.931 |

25.68 |

0.881 |

| 387 |

25.79 |

0.928 |

21.60 |

0.870 |

| 390 |

27.59 |

0.926 |

23.90 |

0.870 |

|

28.10 |

0.944 |

23.49 |

0.885 |

## Results of other methods on ZJU-MoCap

We save rendering results of other methods on novel views of training frames and unseen human poses at [here](https://zjueducn-my.sharepoint.com/:u:/g/personal/pengsida_zju_edu_cn/EQaPRQww70NDqEXeSG-fOeAB5JXFSWiWDW223h5nmkHvwQ?e=mdofbl), including Neural Volumes, Multi-view Neural Human Rendering, and Deferred Neural Human Rendering. **Note that we only generate novel views of training frames for Neural Volumes.**

The following table lists quantitative results of Neural Volumes.

|

PSNR |

SSIM |

| 313 |

20.09 |

0.831 |

| 315 |

18.57 |

0.824 |

| 392 |

22.88 |

0.726 |

| 394 |

22.08 |

0.843 |

| 393 |

21.29 |

0.842 |

| 377 |

21.15 |

0.842 |

| 386 |

23.21 |

0.820 |

| 387 |

20.74 |

0.838 |

| 390 |

22.49 |

0.825 |

|

21.39 |

0.821 |

The following table lists quantitative results of Multi-view Neural Human Rendering.

|

Training frames |

Unseen human poses |

|

PSNR |

SSIM |

PSNR |

SSIM |

| 313 |

26.68 |

0.935 |

23.05 |

0.893 |

| 315 |

19.81 |

0.874 |

18.88 |

0.844 |

| 392 |

24.73 |

0.902 |

23.66 |

0.893 |

| 394 |

25.01 |

0.906 |

22.87 |

0.874 |

| 393 |

23.47 |

0.894 |

22.27 |

0.885 |

| 377 |

23.79 |

0.918 |

21.94 |

0.885 |

| 386 |

25.02 |

0.879 |

23.70 |

0.853 |

| 387 |

22.65 |

0.858 |

20.97 |

0.866 |

| 390 |

23.72 |

0.873 |

22.65 |

0.858 |

|

23.87 |

0.893 |

22.22 |

0.872 |

The following table lists quantitative results of Deferred Neural Human Rendering.

|

Training frames |

Unseen human poses |

|

PSNR |

SSIM |

PSNR |

SSIM |

| 313 |

25.78 |

0.929 |

22.56 |

0.889 |

| 315 |

19.44 |

0.869 |

18.38 |

0.841 |

| 392 |

24.96 |

0.905 |

24.08 |

0.900 |

| 394 |

24.84 |

0.903 |

22.67 |

0.871 |

| 393 |

23.50 |

0.896 |

22.45 |

0.888 |

| 377 |

23.74 |

0.917 |

22.07 |

0.886 |

| 386 |

24.93 |

0.877 |

23.70 |

0.851 |

| 387 |

22.44 |

0.888 |

20.64 |

0.862 |

| 390 |

24.33 |

0.881 |

22.90 |

0.864 |

|

23.77 |

0.896 |

22.16 |

0.872 |