added upload file from device

app.py

CHANGED

@@ -1,3 +1,4 @@
+import io
 import streamlit as st
 import requests
 import numpy as np
@@ -11,14 +12,8 @@ def get_model():
 
 caption_model = get_model()
 
-img_url = st.text_input(label='Enter Image URL')
-
-if (img_url != "") and (img_url != None):
-    img = Image.open(requests.get(img_url, stream=True).raw)
-    img = img.convert('RGB')
-    st.image(img)
 
-
+def predict():
     pred_caption = generate_caption('tmp.jpg', caption_model)
 
     st.write('Predicted Captions:')
@@ -27,3 +22,25 @@ if (img_url != "") and (img_url != None):
     for _ in range(4):
         pred_caption = generate_caption('tmp.jpg', caption_model, add_noise=True)
         st.write(pred_caption)
+
+
+img_url = st.text_input(label='Enter Image URL')
+
+if (img_url != "") and (img_url != None):
+    img = Image.open(requests.get(img_url, stream=True).raw)
+    img = img.convert('RGB')
+    st.image(img)
+    img.save('tmp.jpg')
+    st.image(img)
+    predict()
+
+
+img = st.file_uploader(label='Upload Image', type=['jpg', 'png'])
+
+if img != None:
+    img = img.read()
+    img = Image.open(io.BytesIO(img))
+    img = img.convert('RGB')
+    img.save('tmp.jpg')
+    st.image(img)
+    predict()
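Both input paths in app.py write to the same fixed path, `tmp.jpg`, before calling `predict()`, so two sessions running at once could overwrite each other's image. The sketch below shows one possible alternative using only the standard library: check the leading bytes of the upload and write it to a uniquely named temporary file. The helper names `sniff_image_suffix` and `save_to_unique_temp` are hypothetical and not part of this commit; wiring the returned path into `generate_caption` is left as an assumption.

```python
import tempfile
from pathlib import Path
from typing import Optional

# Leading "magic" bytes for the two formats the uploader accepts.
_SIGNATURES = {
    b"\xff\xd8\xff": ".jpg",
    b"\x89PNG\r\n\x1a\n": ".png",
}


def sniff_image_suffix(data: bytes) -> Optional[str]:
    """Return '.jpg' or '.png' if data starts with a known signature, else None."""
    for signature, suffix in _SIGNATURES.items():
        if data.startswith(signature):
            return suffix
    return None


def save_to_unique_temp(data: bytes) -> Path:
    """Write data to a fresh temporary file and return its path.

    Hypothetical helper: raises ValueError for bytes that are neither JPEG
    nor PNG. The caller is responsible for deleting the file afterwards.
    """
    suffix = sniff_image_suffix(data)
    if suffix is None:
        raise ValueError("unsupported image data")
    with tempfile.NamedTemporaryFile(suffix=suffix, delete=False) as handle:
        handle.write(data)
        return Path(handle.name)


if __name__ == "__main__":
    # Signature bytes only, not a decodable image; enough to exercise the helper.
    fake_png = b"\x89PNG\r\n\x1a\n" + b"\x00" * 16
    path = save_to_unique_temp(fake_png)
    print(path.suffix)
    path.unlink()
```

In the app, the bytes returned by `img.read()` could be passed to `save_to_unique_temp`, and the resulting path used in place of `'tmp.jpg'`, assuming `generate_caption` accepts any file path.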

model.py

CHANGED

@@ -21,12 +21,13 @@ tokenizer = tf.keras.layers.TextVectorization(
     standardize=None,
     output_sequence_length=MAX_LENGTH,
     vocabulary=vocab
-
+)
 
 idx2word = tf.keras.layers.StringLookup(
     mask_token="",
     vocabulary=tokenizer.get_vocabulary(),
-    invert=True
+    invert=True
+)
 
 
 # MODEL
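For readers unfamiliar with the `idx2word` layer touched above: with `invert=True`, the lookup maps integer token ids back to vocabulary strings. The sketch below is a plain-Python stand-in for that idea, not the Keras layer itself, and the vocabulary is made up for illustration. The real vocabulary comes from `tokenizer.get_vocabulary()`, and the layer's exact handling of out-of-range ids may differ.

```python
from typing import Callable, List, Sequence


def make_idx2word(
    vocabulary: Sequence[str], oov_token: str = "[UNK]"
) -> Callable[[Sequence[int]], List[str]]:
    """Return a function mapping integer ids to tokens.

    Ids outside the vocabulary map to oov_token (an assumption of this sketch).
    """
    table = list(vocabulary)

    def idx2word(ids: Sequence[int]) -> List[str]:
        return [table[i] if 0 <= i < len(table) else oov_token for i in ids]

    return idx2word


def decode(ids: Sequence[int], idx2word, mask_token: str = "") -> str:
    """Join the looked-up tokens, skipping the mask (padding) token."""
    return " ".join(tok for tok in idx2word(ids) if tok != mask_token)


if __name__ == "__main__":
    # Hypothetical vocabulary; index 0 is the mask token "".
    vocab = ["", "[UNK]", "[start]", "a", "dog", "[end]"]
    lookup = make_idx2word(vocab)
    print(decode([2, 3, 4, 5, 0, 0], lookup))
```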

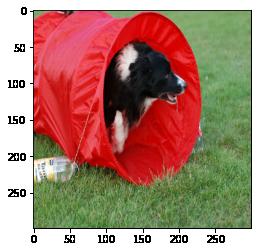

tmp.jpg

CHANGED
