'

+ image_html = ""

+

+ for path in [Path(f"characters/{character}.{extension}") for extension in ['png', 'jpg', 'jpeg']]:

+ if path.exists():

+ image_html = f'}) '


+ break

+

+ container_html += f'{image_html} {character}'

+ container_html += "

"

+ cards.append([container_html, character])

+

+ return cards

+

+

+def select_character(evt: gr.SelectData):

+ return evt.value[1]

+

+

+def ui():

+ with gr.Accordion("Character gallery", open=False):

+ update = gr.Button("Refresh")

+ gr.HTML(value="")

+ gallery = gr.Dataset(components=[gr.HTML(visible=False)],

+ label="",

+ samples=generate_html(),

+ elem_classes=["character-gallery"],

+ samples_per_page=50

+ )

+ update.click(generate_html, [], gallery)

+ gallery.select(select_character, None, gradio['character_menu'])
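The `gr.Dataset` in `ui()` appears to receive each sample as a two-element row, card HTML first and character name second, which is why `select_character` reads the name from `evt.value[1]`. A minimal sketch of that row shape follows. The CSS class names, the `image_urls` mapping and `build_cards` itself are hypothetical stand-ins for the markup lost from `generate_html()` above, not the extension's actual HTML.

```python
import html
from typing import Dict, List


def build_cards(characters: List[str], image_urls: Dict[str, str]) -> List[List[str]]:
    """Return [card_html, character_name] rows, one per character.

    The class names below are illustrative placeholders, not the
    gallery extension's real markup.
    """
    cards = []
    for name in characters:
        url = image_urls.get(name)
        if url:
            image_html = f'<img src="{html.escape(url)}">'
        else:
            # Fall back to an empty placeholder when no image file exists
            image_html = '<div class="placeholder"></div>'
        card_html = (
            '<div class="character-container">'
            f'{image_html} <span class="character-name">{html.escape(name)}</span>'
            '</div>'
        )
        # Column 1 holds the plain name, so a select handler can read row[1]
        cards.append([card_html, name])
    return cards


rows = build_cards(["Alice", "Bob"], {"Alice": "file/characters/Alice.png"})
print(rows[1][1])  # Bob
```

Keeping the plain name in its own column means the select handler never has to parse HTML to find out which character was clicked.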

diff --git a/extensions/google_translate/requirements.txt b/extensions/google_translate/requirements.txt

new file mode 100644

index 0000000000000000000000000000000000000000..554a00df62818f96ba7d396ae39d8e58efbe9bfe

--- /dev/null

+++ b/extensions/google_translate/requirements.txt

@@ -0,0 +1 @@

+deep-translator==1.9.2

diff --git a/extensions/google_translate/script.py b/extensions/google_translate/script.py

new file mode 100644

index 0000000000000000000000000000000000000000..5dfdbcd0c0a9b889497dc2a147007e997c3cda80

--- /dev/null

+++ b/extensions/google_translate/script.py

@@ -0,0 +1,56 @@

+import gradio as gr

+from deep_translator import GoogleTranslator

+

+params = {

+ "activate": True,

+ "language string": "ja",

+}

+

+language_codes = {'Afrikaans': 'af', 'Albanian': 'sq', 'Amharic': 'am', 'Arabic': 'ar', 'Armenian': 'hy', 'Azerbaijani': 'az', 'Basque': 'eu', 'Belarusian': 'be', 'Bengali': 'bn', 'Bosnian': 'bs', 'Bulgarian': 'bg', 'Catalan': 'ca', 'Cebuano': 'ceb', 'Chinese (Simplified)': 'zh-CN', 'Chinese (Traditional)': 'zh-TW', 'Corsican': 'co', 'Croatian': 'hr', 'Czech': 'cs', 'Danish': 'da', 'Dutch': 'nl', 'English': 'en', 'Esperanto': 'eo', 'Estonian': 'et', 'Finnish': 'fi', 'French': 'fr', 'Frisian': 'fy', 'Galician': 'gl', 'Georgian': 'ka', 'German': 'de', 'Greek': 'el', 'Gujarati': 'gu', 'Haitian Creole': 'ht', 'Hausa': 'ha', 'Hawaiian': 'haw', 'Hebrew': 'iw', 'Hindi': 'hi', 'Hmong': 'hmn', 'Hungarian': 'hu', 'Icelandic': 'is', 'Igbo': 'ig', 'Indonesian': 'id', 'Irish': 'ga', 'Italian': 'it', 'Japanese': 'ja', 'Javanese': 'jw', 'Kannada': 'kn', 'Kazakh': 'kk', 'Khmer': 'km', 'Korean': 'ko', 'Kurdish': 'ku', 'Kyrgyz': 'ky', 'Lao': 'lo', 'Latin': 'la', 'Latvian': 'lv', 'Lithuanian': 'lt', 'Luxembourgish': 'lb', 'Macedonian': 'mk', 'Malagasy': 'mg', 'Malay': 'ms', 'Malayalam': 'ml', 'Maltese': 'mt', 'Maori': 'mi', 'Marathi': 'mr', 'Mongolian': 'mn', 'Myanmar (Burmese)': 'my', 'Nepali': 'ne', 'Norwegian': 'no', 'Nyanja (Chichewa)': 'ny', 'Pashto': 'ps', 'Persian': 'fa', 'Polish': 'pl', 'Portuguese (Portugal, Brazil)': 'pt', 'Punjabi': 'pa', 'Romanian': 'ro', 'Russian': 'ru', 'Samoan': 'sm', 'Scots Gaelic': 'gd', 'Serbian': 'sr', 'Sesotho': 'st', 'Shona': 'sn', 'Sindhi': 'sd', 'Sinhala (Sinhalese)': 'si', 'Slovak': 'sk', 'Slovenian': 'sl', 'Somali': 'so', 'Spanish': 'es', 'Sundanese': 'su', 'Swahili': 'sw', 'Swedish': 'sv', 'Tagalog (Filipino)': 'tl', 'Tajik': 'tg', 'Tamil': 'ta', 'Telugu': 'te', 'Thai': 'th', 'Turkish': 'tr', 'Ukrainian': 'uk', 'Urdu': 'ur', 'Uzbek': 'uz', 'Vietnamese': 'vi', 'Welsh': 'cy', 'Xhosa': 'xh', 'Yiddish': 'yi', 'Yoruba': 'yo', 'Zulu': 'zu'}

+

+

+def input_modifier(string):

+ """

+ This function is applied to your text inputs before

+ they are fed into the model.

+ """

+ if not params['activate']:

+ return string

+

+ return GoogleTranslator(source=params['language string'], target='en').translate(string)

+

+

+def output_modifier(string):

+ """

+ This function is applied to the model outputs.

+ """

+ if not params['activate']:

+ return string

+

+ return GoogleTranslator(source='en', target=params['language string']).translate(string)
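The two modifiers translate in opposite directions: user input goes from the selected language to English before it reaches the model, and the model's English reply goes back to the selected language. The sketch below isolates that logic behind an injectable `translate(text, source, target)` callable (a hypothetical signature chosen for this example) so it can be exercised without network access; in the extension itself the call is `GoogleTranslator(source=..., target=...).translate(text)` from `deep_translator`.

```python
from typing import Callable, Dict, Tuple

# Hypothetical signature: (text, source_code, target_code) -> translated text
Translate = Callable[[str, str, str], str]


def make_modifiers(params: Dict, translate: Translate) -> Tuple[Callable[[str], str], Callable[[str], str]]:
    def input_modifier(string: str) -> str:
        # User language -> English, before the prompt reaches the model
        if not params["activate"]:
            return string
        return translate(string, params["language string"], "en")

    def output_modifier(string: str) -> str:
        # English -> user language, after the model replies
        if not params["activate"]:
            return string
        return translate(string, "en", params["language string"])

    return input_modifier, output_modifier


def fake_translate(text: str, source: str, target: str) -> str:
    # Stand-in for a real translator: tags the text with its direction
    return f"[{source}->{target}] {text}"


params = {"activate": True, "language string": "ja"}
to_model, to_user = make_modifiers(params, fake_translate)
print(to_model("こんにちは"))  # [ja->en] こんにちは
```

To use the real service, pass `lambda t, s, d: GoogleTranslator(source=s, target=d).translate(t)` as `translate`. Because the closures read `params` at call time, toggling the checkbox takes effect on the next message.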

+

+

+def bot_prefix_modifier(string):

+ """

+ This function is only applied in chat mode. It modifies

+ the prefix text for the Bot and can be used to bias its

+ behavior.

+ """

+

+ return string

+

+

+def ui():

+ # Finding the language name from the language code to use as the default value

+ language_name = list(language_codes.keys())[list(language_codes.values()).index(params['language string'])]

+

+ # Gradio elements

+ with gr.Row():

+ activate = gr.Checkbox(value=params['activate'], label='Activate translation')

+

+ with gr.Row():

+ language = gr.Dropdown(value=language_name, choices=[k for k in language_codes], label='Language')

+

+ # Event functions to update the parameters in the backend

+ activate.change(lambda x: params.update({"activate": x}), activate, None)

+ language.change(lambda x: params.update({"language string": language_codes[x]}), language, None)
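`ui()` recovers the dropdown's default name by indexing the list of codes, which raises `ValueError` if `params['language string']` is not a value in `language_codes`. A slightly more defensive reverse lookup is sketched below; `code_to_name` and its `'English'` fallback are assumptions for illustration, not extension behaviour.

```python
from typing import Dict


def code_to_name(codes: Dict[str, str], code: str, default: str = "English") -> str:
    """Reverse-map a language code (e.g. 'ja') to its display name.

    Returns `default` instead of raising when the code is unknown;
    the 'English' fallback is an assumption of this sketch.
    """
    return next((name for name, c in codes.items() if c == code), default)


codes = {"English": "en", "Japanese": "ja", "Chinese (Simplified)": "zh-CN"}
print(code_to_name(codes, "ja"))  # Japanese
print(code_to_name(codes, "xx"))  # English
```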

diff --git a/extensions/llava/script.py b/extensions/llava/script.py

new file mode 100644

index 0000000000000000000000000000000000000000..781d584b78ebf8e7c0c87e4203665286b92cf81c

--- /dev/null

+++ b/extensions/llava/script.py

@@ -0,0 +1,8 @@

+import gradio as gr

+

+from modules.logging_colors import logger

+

+

+def ui():

+ gr.Markdown("### This extension is deprecated, use \"multimodal\" extension instead")

+ logger.error("LLaVA extension is deprecated, use \"multimodal\" extension instead")

diff --git a/extensions/multimodal/DOCS.md b/extensions/multimodal/DOCS.md

new file mode 100644

index 0000000000000000000000000000000000000000..eaa4365e9a304a14ebbdb1d4d435f3a2a1f7a7d2

--- /dev/null

+++ b/extensions/multimodal/DOCS.md

@@ -0,0 +1,85 @@

+# Technical description of multimodal extension

+

+## Working principle

+The multimodal extension does most of the work required for any image input:

+

+- adds the UI

+- saves the images as base64 JPEGs to history

+- provides the hooks to the UI

+- if there are images in the prompt, it:

+ - splits the prompt into text and image parts

+ - adds image start/end markers to text parts, then encodes and embeds the text parts

+ - calls the vision pipeline to embed the images

+ - stitches the embeddings together, and returns them to text generation

+- loads the appropriate vision pipeline, selected either from the model name or by specifying the `--multimodal-pipeline` parameter
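The first step, splitting the prompt into text and image parts, can be sketched with a regular expression. This assumes images appear as `<img src="data:image/jpeg;base64,...">` tags, matching the base64-JPEG-in-HTML storage format described under Prompts/history; the exact tag the extension emits, and its image start/end markers, are not reproduced here, and `split_prompt` is a hypothetical name.

```python
import re
from typing import List, Tuple

# Assumed tag format: an <img> whose src is a base64 JPEG data URI
IMG_RE = re.compile(r'<img src="data:image/jpeg;base64,([A-Za-z0-9+/=]+)">')


def split_prompt(prompt: str) -> List[Tuple[str, str]]:
    """Split a prompt into ordered ("text", str) and ("image", base64) parts."""
    parts: List[Tuple[str, str]] = []
    pos = 0
    for match in IMG_RE.finditer(prompt):
        if match.start() > pos:
            parts.append(("text", prompt[pos:match.start()]))
        parts.append(("image", match.group(1)))
        pos = match.end()
    if pos < len(prompt):
        parts.append(("text", prompt[pos:]))
    return parts


example = 'Describe this: <img src="data:image/jpeg;base64,QUJD"> please'
print(split_prompt(example))
# [('text', 'Describe this: '), ('image', 'QUJD'), ('text', ' please')]
```

Preserving order is the important property: the text and image embeddings are later concatenated in exactly this sequence.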

+

+The pipelines, in turn:

+

+- load the required vision models

+- return some constants, for example the number of tokens taken up by an image

+- and, most importantly, return the embeddings for the LLM, given a list of images
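The division of labour between the extension and a pipeline can be sketched as an abstract interface plus a stitching step. The method names `num_image_embeds` and `embed_images`, the `ToyPipeline`, the `stitch` helper, and representing embeddings as plain lists of floats are all assumptions for illustration; a real pipeline would run a vision model and return tensors.

```python
from abc import ABC, abstractmethod
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


class VisionPipeline(ABC):
    @abstractmethod
    def num_image_embeds(self) -> int:
        """How many LLM tokens (embedding rows) one image occupies."""

    @abstractmethod
    def embed_images(self, images: Sequence[bytes]) -> List[List[Vector]]:
        """Return num_image_embeds() vectors per input image."""


class ToyPipeline(VisionPipeline):
    """Stand-in that 'embeds' an image as copies of its byte length."""

    def __init__(self, n_embeds: int = 2, dim: int = 3):
        self.n_embeds, self.dim = n_embeds, dim

    def num_image_embeds(self) -> int:
        return self.n_embeds

    def embed_images(self, images: Sequence[bytes]) -> List[List[Vector]]:
        return [[[float(len(img))] * self.dim for _ in range(self.n_embeds)] for img in images]


def stitch(parts: List[Tuple[str, object]],
           embed_text: Callable[[str], List[Vector]],
           pipeline: VisionPipeline) -> List[Vector]:
    """Embed text and image parts separately, then concatenate in prompt order."""
    images = [payload for kind, payload in parts if kind == "image"]
    image_embeds = iter(pipeline.embed_images(images))  # batch all images at once
    out: List[Vector] = []
    for kind, payload in parts:
        out.extend(embed_text(payload) if kind == "text" else next(image_embeds))
    return out


def embed_words(text: str) -> List[Vector]:
    # Toy text embedder: one zero vector per whitespace-separated word
    return [[0.0, 0.0, 0.0] for _ in text.split()]


parts = [("text", "look at"), ("image", b"xyz"), ("text", "now")]
seq = stitch(parts, embed_words, ToyPipeline())
print(len(seq))  # 5
```

Batching every image into one `embed_images` call, then consuming the results in order, keeps the vision model invocation to a single pass per prompt.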

+

+## Prompts/history

+

+To save images in the prompt/history, this extension uses a base64 JPEG wrapped in an HTML tag, like so:

+```

+

+

+

+Load it in `--chat` mode with `--extensions sd_api_pictures`, alongside `send_pictures`

+(it's not really required, but completes the picture, *pun intended*).

+

+

+## History

+

+Consider the version included with [oobabooga's repository](https://github.com/oobabooga/text-generation-webui/tree/main/extensions/sd_api_pictures) to be STABLE; experimental developments and untested features are pushed to [Brawlence/SD_api_pics](https://github.com/Brawlence/SD_api_pics).

+

+Latest change:

+1.1.0 → 1.1.1: fixed missing Auto1111 metadata in received images

+

+## Details

+

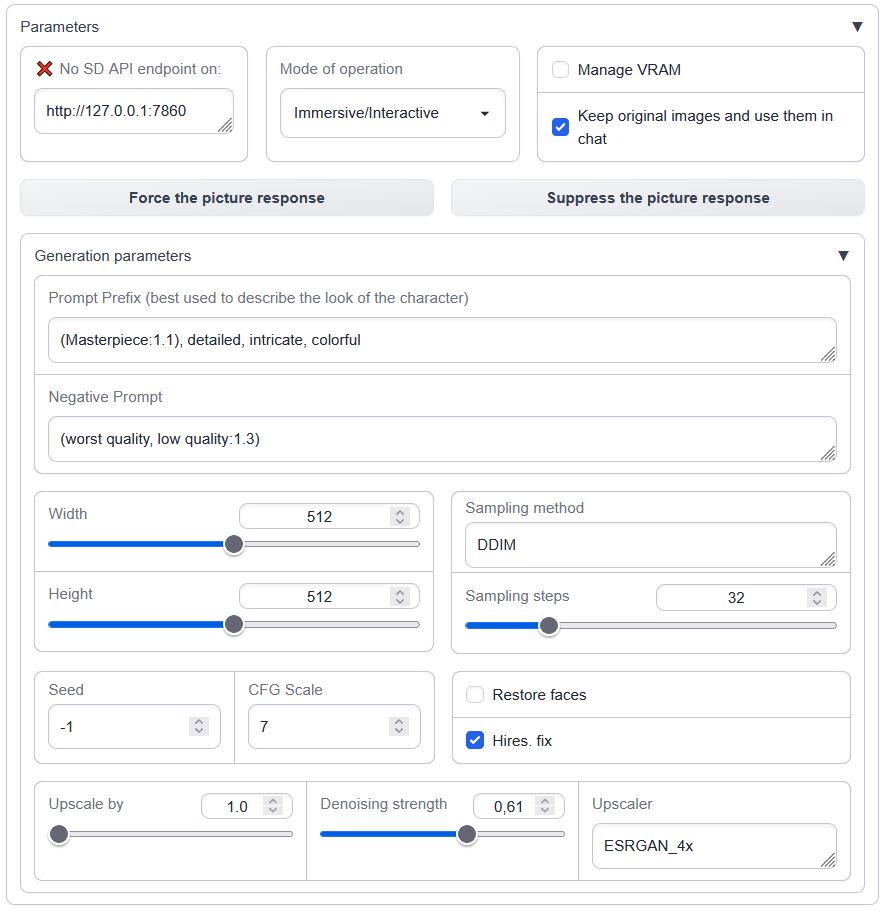

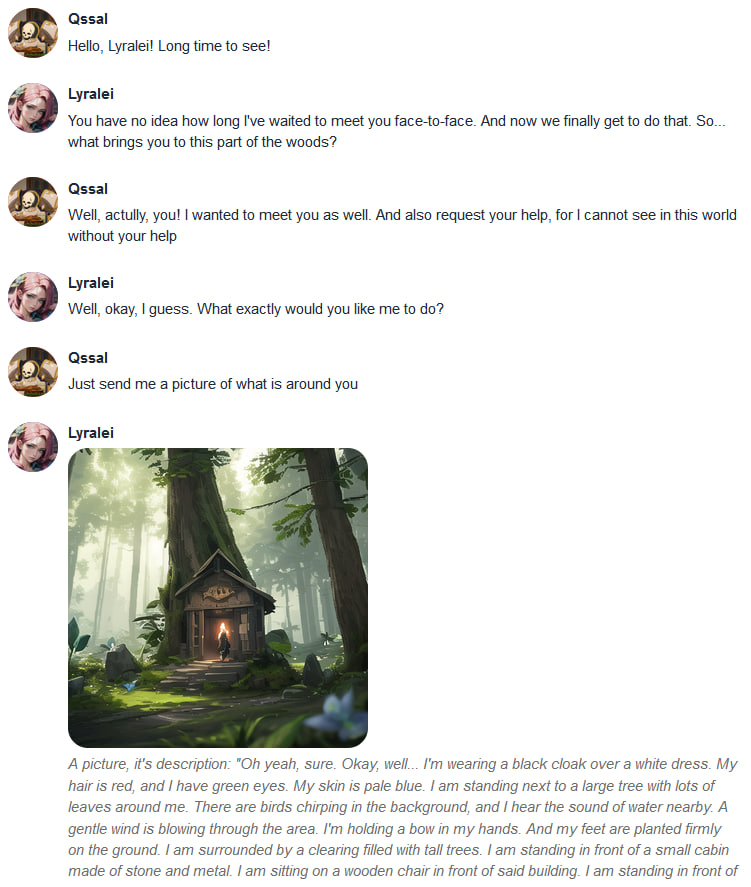

+The image generation is triggered:

+- manually through the 'Force the picture response' button while in `Manual` or `Immersive/Interactive` modes OR

+- automatically in `Immersive/Interactive` mode if the words `'send|mail|message|me'` are followed by `'image|pic|picture|photo|snap|snapshot|selfie|meme'` in the user's prompt

+- always on in `Picturebook/Adventure` mode (if not currently suppressed by 'Suppress the picture response')

+

+## Prerequisites

+

+You need a running instance of Automatic1111's webui started with the `--api` flag. It hasn't been tested with a notebook or cloud-hosted instance, but that should be possible.

+To run both webUIs in parallel on the same machine, specify a custom `--listen-port` for either Auto1111's or ooba's webUI.

+

+## Features overview

+- API connection check (press Enter in the address box)

+- [VRAM management (model shuffling)](https://github.com/Brawlence/SD_api_pics/wiki/VRAM-management-feature)

+- [Three different operation modes](https://github.com/Brawlence/SD_api_pics/wiki/Modes-of-operation) (manual, interactive, always-on)

+- User-defined persistent settings via settings.json

+

+### Connection check

+

+Type Automatic1111's WebUI address into the address box and press Enter.

+

+A green mark confirms that Auto1111's API can be reached at this address. A red cross means something is wrong (the extension won't work).

+

+### Persistent settings

+

+Create or modify `settings.json` in the `text-generation-webui` root directory to override the defaults

+present in `script.py`, e.g.:

+

+```json

+{

+ "sd_api_pictures-manage_VRAM": 1,

+ "sd_api_pictures-save_img": 1,

+ "sd_api_pictures-prompt_prefix": "(Masterpiece:1.1), detailed, intricate, colorful, (solo:1.1)",

+ "sd_api_pictures-sampler_name": "DPM++ 2M Karras"

+}

+```

+

+will automatically tick the `Manage VRAM` and `Keep original images` checkboxes and change the text in the `Prompt Prefix` and `Sampler name` fields on load.
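Each `sd_api_pictures-<param>` key in the JSON example corresponds to an entry of the `params` dictionary in `script.py`. The helper below is a hypothetical sketch of that override step, assuming the prefix is simply stripped and only keys already present in `params` are accepted; `apply_extension_settings` is an illustrative name, not the webui's actual settings loader.

```python
import json
from typing import Any, Dict


def apply_extension_settings(params: Dict[str, Any], settings: Dict[str, Any],
                             extension: str = "sd_api_pictures") -> Dict[str, Any]:
    """Copy '<extension>-<key>' entries from settings into params (hypothetical)."""
    prefix = f"{extension}-"
    for key, value in settings.items():
        if not key.startswith(prefix):
            continue  # belongs to another extension or to the webui itself
        name = key[len(prefix):]
        if name in params:  # ignore unknown keys rather than inventing new params
            params[name] = value
    return params


defaults = {
    "manage_VRAM": False,
    "save_img": False,
    "prompt_prefix": "(Masterpiece:1.1), detailed, intricate, colorful",
    "sampler_name": "DPM++ 2M Karras",
}
settings = json.loads("""
{
  "sd_api_pictures-manage_VRAM": 1,
  "sd_api_pictures-save_img": 1,
  "sd_api_pictures-prompt_prefix": "(Masterpiece:1.1), detailed, intricate, colorful, (solo:1.1)",
  "sd_api_pictures-sampler_name": "DPM++ 2M Karras",
  "other_ext-save_img": 0
}
""")
params = apply_extension_settings(defaults, settings)
print(params["prompt_prefix"])
```

Note that the JSON values `1` are integers, not booleans; they still enable the checkboxes because they are truthy.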

+

+---

+

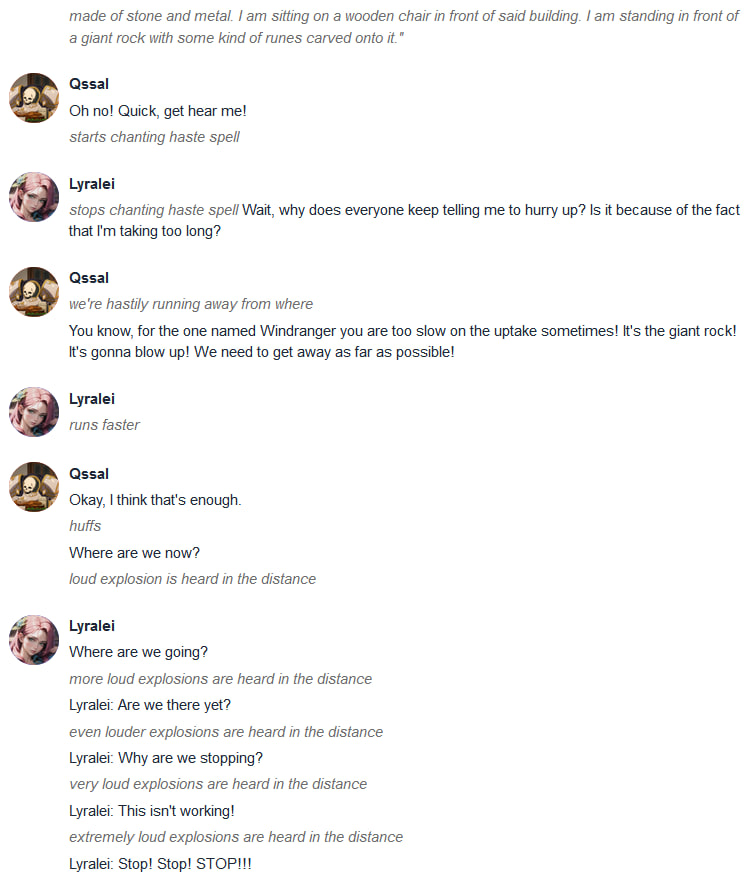

+## Demonstrations

+

+These are examples from version 1.0.0, but the core functionality is still the same.

+

+Interface overview


+

+

+Conversation 1


+

+

diff --git a/extensions/sd_api_pictures/script.py b/extensions/sd_api_pictures/script.py

new file mode 100644

index 0000000000000000000000000000000000000000..78488cd022b7a68ad1162fabbc1976662534b24f

--- /dev/null

+++ b/extensions/sd_api_pictures/script.py

@@ -0,0 +1,383 @@

+import base64

+import io

+import re

+import time

+from datetime import date

+from pathlib import Path

+

+import gradio as gr

+import requests

+import torch

+from PIL import Image

+

+from modules import shared

+from modules.models import reload_model, unload_model

+from modules.ui import create_refresh_button

+

+torch._C._jit_set_profiling_mode(False)

+

+# parameters which can be customized in settings.json of webui

+params = {

+ 'address': 'http://127.0.0.1:7860',

+ 'mode': 0, # modes of operation: 0 (Manual only), 1 (Immersive/Interactive - looks for words to trigger), 2 (Picturebook Adventure - Always on)

+ 'manage_VRAM': False,

+ 'save_img': False,

+ 'SD_model': 'NeverEndingDream', # not used right now

+ 'prompt_prefix': '(Masterpiece:1.1), detailed, intricate, colorful',

+ 'negative_prompt': '(worst quality, low quality:1.3)',

+ 'width': 512,

+ 'height': 512,

+ 'denoising_strength': 0.61,

+ 'restore_faces': False,

+ 'enable_hr': False,

+ 'hr_upscaler': 'ESRGAN_4x',

+ 'hr_scale': '1.0',

+ 'seed': -1,

+ 'sampler_name': 'DPM++ 2M Karras',

+ 'steps': 32,

+ 'cfg_scale': 7,

+ 'textgen_prefix': 'Please provide a detailed and vivid description of [subject]',

+ 'sd_checkpoint': ' ',

+ 'checkpoint_list': [" "]

+}

+

+

+def give_VRAM_priority(actor):

+ global shared, params

+

+ if actor == 'SD':

+ unload_model()

+ print("Requesting Auto1111 to re-load last checkpoint used...")

+ response = requests.post(url=f'{params["address"]}/sdapi/v1/reload-checkpoint', json='')

+ response.raise_for_status()

+

+ elif actor == 'LLM':

+ print("Requesting Auto1111 to vacate VRAM...")

+ response = requests.post(url=f'{params["address"]}/sdapi/v1/unload-checkpoint', json='')

+ response.raise_for_status()

+ reload_model()

+

+ elif actor == 'set':

+ print("VRAM management activated -- requesting Auto1111 to vacate VRAM...")

+ response = requests.post(url=f'{params["address"]}/sdapi/v1/unload-checkpoint', json='')

+ response.raise_for_status()

+

+ elif actor == 'reset':

+ print("VRAM management deactivated -- requesting Auto1111 to reload checkpoint")

+ response = requests.post(url=f'{params["address"]}/sdapi/v1/reload-checkpoint', json='')

+ response.raise_for_status()

+

+ else:

+ raise RuntimeError(f'Managing VRAM: "{actor}" is not a known state!')

+

+ response.raise_for_status()

+ del response
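The four branches of `give_VRAM_priority` differ only in which Auto1111 endpoint is called and whether the local LLM is unloaded or reloaded around it. The sketch below records that ordering as data so it can be inspected without a running server; the `vram_plan` name and the step strings are illustrative labels, while the two endpoint paths are the ones used above.

```python
from typing import List


def vram_plan(address: str, actor: str) -> List[str]:
    """Return, in order, the steps give_VRAM_priority() takes for `actor`.

    Step strings are descriptive labels for this sketch, not real calls.
    """
    reload_sd = f"POST {address}/sdapi/v1/reload-checkpoint"
    unload_sd = f"POST {address}/sdapi/v1/unload-checkpoint"
    plans = {
        "SD": ["unload_model()", reload_sd],   # free the LLM's VRAM, then load SD
        "LLM": [unload_sd, "reload_model()"],  # free SD's VRAM, then reload the LLM
        "set": [unload_sd],                    # management switched on
        "reset": [reload_sd],                  # management switched off
    }
    if actor not in plans:
        raise RuntimeError(f'Managing VRAM: "{actor}" is not a known state!')
    return plans[actor]


for actor in ("SD", "LLM"):
    print(actor, vram_plan("http://127.0.0.1:7860", actor))
```

In each swap the unload step comes before the load step, presumably so that both models are never resident in VRAM at the same time.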

+

+

+if params['manage_VRAM']:

+ give_VRAM_priority('set')

+

+SD_models = ['NeverEndingDream'] # TODO: get with http://{address}/sdapi/v1/sd-models and allow user to select

+

+picture_response = False # specifies if the next model response should appear as a picture

+

+

+def remove_surrounded_chars(string):

+ # this expression matches 'as few symbols as possible (0 upwards) between any asterisks' OR

+ # 'as few symbols as possible (0 upwards) between an asterisk and the end of the string'

+ return re.sub(r'\*[^\*]*?(\*|$)', '', string)
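In practice this strips roleplay-style actions (text between asterisks, or from a lone asterisk to the end of the message) so that they cannot trigger a picture. A standalone copy with a few sample inputs, using a raw string for the pattern to avoid invalid-escape warnings:

```python
import re


def remove_surrounded_chars(string: str) -> str:
    # Drop '*...*' spans, and anything after an unmatched trailing '*'
    return re.sub(r'\*[^\*]*?(\*|$)', '', string)


print(repr(remove_surrounded_chars("Hello *waves* there")))      # 'Hello  there'
print(repr(remove_surrounded_chars("Hi *smiles and leans in")))  # 'Hi '
```

The surrounding spaces are left in place (hence the double space in the first result); that is harmless for the trigger search that follows.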

+

+

+def triggers_are_in(string):

+ string = remove_surrounded_chars(string)

+ # regex searches for send|mail|message|me (at the end of the word) followed by

+ # a whole word of image|pic|picture|photo|snap|snapshot|selfie|meme(s),

+ # (?aims) are inline regex flags: ASCII, IGNORECASE, MULTILINE, DOTALL

+ return bool(re.search('(?aims)(send|mail|message|me)\\b.+?\\b(image|pic(ture)?|photo|snap(shot)?|selfie|meme)s?\\b', string))
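The combined check can be exercised on its own. The copy below folds the asterisk-stripping step in and reuses the same pattern and `(?aims)` flags, written as a raw string. Because there is no `\b` before the first group, any word ending in `me` (for example `home`) also counts as a trigger word, as the last sample shows.

```python
import re

TRIGGER_RE = re.compile(
    r'(?aims)(send|mail|message|me)\b.+?\b(image|pic(ture)?|photo|snap(shot)?|selfie|meme)s?\b'
)


def triggers_are_in(string: str) -> bool:
    # Ignore *actions* first, exactly as the extension does
    string = re.sub(r'\*[^\*]*?(\*|$)', '', string)
    return bool(TRIGGER_RE.search(string))


print(triggers_are_in("Send me a picture of the beach"))  # True
print(triggers_are_in("*sends me a selfie*"))            # False
print(triggers_are_in("my home photo album"))            # True
```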

+

+

+def state_modifier(state):

+ if picture_response:

+ state['stream'] = False

+

+ return state

+

+

+def input_modifier(string):

+ """

+ This function is applied to your text inputs before

+ they are fed into the model.

+ """

+

+ global params

+

+ if params['mode'] != 1: # if not in immersive/interactive mode, do nothing

+ return string

+

+ if triggers_are_in(string): # if we're in it, check for trigger words

+ toggle_generation(True)

+ string = string.lower()

+ if "of" in string:

+ subject = string.split('of', 1)[1] # subdivide the string once by the first 'of' instance and get what's coming after it

+ string = params['textgen_prefix'].replace("[subject]", subject)

+ else:

+ string = params['textgen_prefix'].replace("[subject]", "your appearance, your surroundings and what you are doing right now")

+

+ return string
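The subject extraction above splits on the first occurrence of the substring `of`, so a message like `send me a photo, often` would split inside `often`, and the captured subject keeps its leading space. A variant that splits on `of` as a whole word and trims the result is sketched below; `build_description_request` is a hypothetical helper and a suggested refinement, not the extension's current behaviour.

```python
import re

TEXTGEN_PREFIX = 'Please provide a detailed and vivid description of [subject]'
DEFAULT_SUBJECT = 'your appearance, your surroundings and what you are doing right now'


def build_description_request(string: str, prefix: str = TEXTGEN_PREFIX) -> str:
    string = string.lower()
    # Split once on 'of' as a whole word, so words like 'often' are left alone
    pieces = re.split(r'\bof\b', string, maxsplit=1)
    subject = pieces[1].strip() if len(pieces) == 2 else ''
    # An empty subject (no 'of', or nothing after it) falls back to the default
    return prefix.replace('[subject]', subject or DEFAULT_SUBJECT)


print(build_description_request("Send me a picture of a red fox"))
# Please provide a detailed and vivid description of a red fox
```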

+

+# Get and save the Stable Diffusion-generated picture

+def get_SD_pictures(description, character):

+

+ global params

+

+ if params['manage_VRAM']:

+ give_VRAM_priority('SD')

+

+ payload = {

+ "prompt": params['prompt_prefix'] + description,

+ "seed": params['seed'],

+ "sampler_name": params['sampler_name'],

+ "enable_hr": params['enable_hr'],

+ "hr_scale": params['hr_scale'],

+ "hr_upscaler": params['hr_upscaler'],

+ "denoising_strength": params['denoising_strength'],

+ "steps": params['steps'],

+ "cfg_scale": params['cfg_scale'],

+ "width": params['width'],

+ "height": params['height'],

+ "restore_faces": params['restore_faces'],

+ "override_settings_restore_afterwards": True,

+ "negative_prompt": params['negative_prompt']

+ }
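Two details of this payload are worth noting: `prompt_prefix` is concatenated to the description with no separator, and `hr_scale` is stored in `params` as the string `'1.0'`. The hypothetical builder below adds a `', '` separator and converts `hr_scale` to a float; both are suggested adjustments rather than what the script currently sends, and whether the API already coerces the string is not verified here.

```python
from typing import Any, Dict

# Keys copied straight from params into the txt2img request body
PASSTHROUGH_KEYS = (
    "seed", "sampler_name", "enable_hr", "hr_upscaler", "denoising_strength",
    "steps", "cfg_scale", "width", "height", "restore_faces", "negative_prompt",
)


def build_txt2img_payload(params: Dict[str, Any], description: str) -> Dict[str, Any]:
    payload = {key: params[key] for key in PASSTHROUGH_KEYS}
    prefix = params["prompt_prefix"].rstrip(", ")  # avoid a doubled separator
    payload["prompt"] = f"{prefix}, {description}" if prefix else description
    payload["hr_scale"] = float(params["hr_scale"])  # '1.0' -> 1.0
    payload["override_settings_restore_afterwards"] = True
    return payload


params = {
    "prompt_prefix": "(Masterpiece:1.1), detailed, intricate, colorful",
    "negative_prompt": "(worst quality, low quality:1.3)",
    "seed": -1, "sampler_name": "DPM++ 2M Karras", "enable_hr": False,
    "hr_upscaler": "ESRGAN_4x", "hr_scale": "1.0", "denoising_strength": 0.61,
    "steps": 32, "cfg_scale": 7, "width": 512, "height": 512, "restore_faces": False,
}
payload = build_txt2img_payload(params, "a red fox in the snow")
print(payload["prompt"])
# (Masterpiece:1.1), detailed, intricate, colorful, a red fox in the snow
```

The resulting dictionary would then be sent exactly as in the script, with `requests.post(url=f'{params["address"]}/sdapi/v1/txt2img', json=payload)`.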

+

+ print(f'Prompting the image generator via the API on {params["address"]}...')

+ response = requests.post(url=f'{params["address"]}/sdapi/v1/txt2img', json=payload)

+ response.raise_for_status()

+ r = response.json()

+

+ visible_result = ""

+ for img_str in r['images']:

+ if params['save_img']:

+ img_data = base64.b64decode(img_str)

+

+ variadic = f'{date.today().strftime("%Y_%m_%d")}/{character}_{int(time.time())}'

+ output_file = Path(f'extensions/sd_api_pictures/outputs/{variadic}.png')

+ output_file.parent.mkdir(parents=True, exist_ok=True)

+

+ with open(output_file.as_posix(), 'wb') as f:

+ f.write(img_data)

+

+ visible_result = visible_result + f'Conversation 2

+ + + + + + \n'

+ else:

+ image = Image.open(io.BytesIO(base64.b64decode(img_str.split(",", 1)[0])))

+ # lower the resolution of received images for the chat, otherwise the log size gets out of control quickly with all the base64 values in visible history

+ image.thumbnail((300, 300))

+ buffered = io.BytesIO()

+ image.save(buffered, format="JPEG")

+ buffered.seek(0)

+ image_bytes = buffered.getvalue()

+ img_str = "data:image/jpeg;base64," + base64.b64encode(image_bytes).decode()
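The visible history stores each picture as a `data:image/jpeg;base64,...` URI. The helpers below sketch building and reading such a URI with only the standard library (the PIL thumbnailing and JPEG re-encoding above are left out); `to_data_uri` and `from_data_uri` are illustrative names. Note that the script decodes `img_str.split(",", 1)[0]`, which is the whole string when the API returns bare base64, but would be only the `data:...;base64` header if a full data URI came back; `from_data_uri` takes the part after the comma instead and also accepts bare base64.

```python
import base64


def to_data_uri(image_bytes: bytes, mime: str = "image/jpeg") -> str:
    """Wrap raw image bytes as a base64 data URI for inline HTML."""
    return f"data:{mime};base64," + base64.b64encode(image_bytes).decode("ascii")


def from_data_uri(value: str) -> bytes:
    """Decode either a full data URI or a bare base64 string."""
    if value.startswith("data:"):
        value = value.split(",", 1)[1]  # keep the payload after the header
    return base64.b64decode(value)


uri = to_data_uri(b"\xff\xd8\xff")  # first bytes of a JPEG, for illustration
print(uri)  # data:image/jpeg;base64,/9j/
```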


+ visible_result = visible_result + f'