Spaces:

Sleeping

Sleeping

File size: 40,614 Bytes

c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c 1b15ea8 c3dc61c |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 279 280 281 282 283 284 285 286 287 288 289 290 291 292 293 294 295 296 297 298 299 300 301 302 303 304 305 306 307 308 309 310 311 312 313 314 315 316 317 318 319 320 321 322 323 324 325 326 327 328 329 330 331 332 333 334 335 336 337 338 339 340 341 342 343 344 345 346 347 348 349 350 351 352 353 354 355 356 357 358 359 360 361 362 363 364 365 366 367 368 369 370 371 372 373 374 375 376 377 378 379 380 381 382 383 384 385 386 387 388 389 390 391 392 393 394 395 396 397 398 399 400 401 402 403 404 405 406 407 408 409 410 411 412 413 414 415 416 417 418 419 420 421 422 423 424 425 426 427 428 429 430 431 432 433 434 435 436 437 438 439 440 441 442 443 444 445 446 447 448 449 450 451 452 453 454 455 456 457 458 459 460 461 462 463 464 465 466 467 468 469 470 471 472 473 474 |

<div align="center">

<p>

<a href="https://yolovision.ultralytics.com/" target="_blank">

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/im/banner-yolo-vision-2023.png"></a>

<!--

<a align="center" href="https://ultralytics.com/yolov5" target="_blank">

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov5/v70/splash.png"></a>

-->

</p>

[英文](README.md)|[简体中文](README.zh-CN.md)<br>

<div>

<a href="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml"><img src="https://github.com/ultralytics/yolov5/actions/workflows/ci-testing.yml/badge.svg" alt="YOLOv5 CI"></a>

<a href="https://zenodo.org/badge/latestdoi/264818686"><img src="https://zenodo.org/badge/264818686.svg" alt="YOLOv5 Citation"></a>

<a href="https://hub.docker.com/r/ultralytics/yolov5"><img src="https://img.shields.io/docker/pulls/ultralytics/yolov5?logo=docker" alt="Docker Pulls"></a>

<br>

<a href="https://bit.ly/yolov5-paperspace-notebook"><img src="https://assets.paperspace.io/img/gradient-badge.svg" alt="Run on Gradient"></a>

<a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a>

<a href="https://www.kaggle.com/ultralytics/yolov5"><img src="https://kaggle.com/static/images/open-in-kaggle.svg" alt="Open In Kaggle"></a>

</div>

<br>

YOLOv5 🚀 是世界上最受欢迎的视觉 AI,代表<a href="https://ultralytics.com"> Ultralytics </a>对未来视觉 AI 方法的开源研究,结合在数千小时的研究和开发中积累的经验教训和最佳实践。

我们希望这里的资源能帮助您充分利用 YOLOv5。请浏览 YOLOv5 <a href="https://docs.ultralytics.com/yolov5/">文档</a> 了解详细信息,在 <a href="https://github.com/ultralytics/yolov5/issues/new/choose">GitHub</a> 上提交问题以获得支持,并加入我们的 <a href="https://ultralytics.com/discord">Discord</a> 社区进行问题和讨论!

如需申请企业许可,请在 [Ultralytics Licensing](https://ultralytics.com/license) 处填写表格

<div align="center">

<a href="https://github.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="2%" alt="Ultralytics GitHub"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

<a href="https://www.linkedin.com/company/ultralytics/"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="2%" alt="Ultralytics LinkedIn"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

<a href="https://twitter.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="2%" alt="Ultralytics Twitter"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

<a href="https://youtube.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="2%" alt="Ultralytics YouTube"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

<a href="https://www.tiktok.com/@ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-tiktok.png" width="2%" alt="Ultralytics TikTok"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

<a href="https://www.instagram.com/ultralytics/"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="2%" alt="Ultralytics Instagram"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="2%">

<a href="https://ultralytics.com/discord"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-discord.png" width="2%" alt="Ultralytics Discord"></a>

</div>

</div>

## <div align="center">YOLOv8 🚀 新品</div>

我们很高兴宣布 Ultralytics YOLOv8 🚀 的发布,这是我们新推出的领先水平、最先进的(SOTA)模型,发布于 **[https://github.com/ultralytics/ultralytics](https://github.com/ultralytics/ultralytics)**。 YOLOv8 旨在快速、准确且易于使用,使其成为广泛的物体检测、图像分割和图像分类任务的极佳选择。

请查看 [YOLOv8 文档](https://docs.ultralytics.com)了解详细信息,并开始使用:

[](https://badge.fury.io/py/ultralytics) [](https://pepy.tech/project/ultralytics)

```commandline

pip install ultralytics

```

<div align="center">

<a href="https://ultralytics.com/yolov8" target="_blank">

<img width="100%" src="https://raw.githubusercontent.com/ultralytics/assets/main/yolov8/yolo-comparison-plots.png"></a>

</div>

## <div align="center">文档</div>

有关训练、测试和部署的完整文档见[YOLOv5 文档](https://docs.ultralytics.com/yolov5/)。请参阅下面的快速入门示例。

<details open>

<summary>安装</summary>

克隆 repo,并要求在 [**Python>=3.8.0**](https://www.python.org/) 环境中安装 [requirements.txt](https://github.com/ultralytics/yolov5/blob/master/requirements.txt) ,且要求 [**PyTorch>=1.8**](https://pytorch.org/get-started/locally/) 。

```bash

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

```

</details>

<details>

<summary>推理</summary>

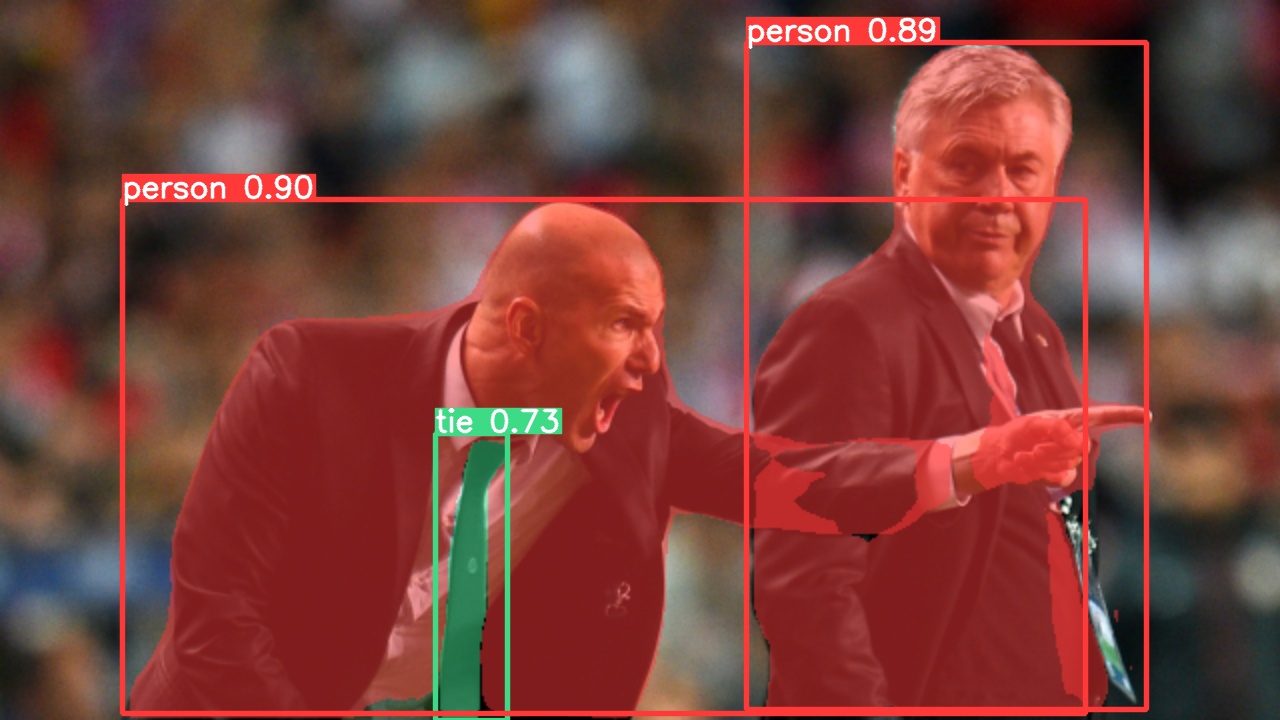

使用 YOLOv5 [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading) 推理。最新 [模型](https://github.com/ultralytics/yolov5/tree/master/models) 将自动的从 YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) 中下载。

```python

import torch

# Model

model = torch.hub.load("ultralytics/yolov5", "yolov5s") # or yolov5n - yolov5x6, custom

# Images

img = "https://ultralytics.com/images/zidane.jpg" # or file, Path, PIL, OpenCV, numpy, list

# Inference

results = model(img)

# Results

results.print() # or .show(), .save(), .crop(), .pandas(), etc.

```

</details>

<details>

<summary>使用 detect.py 推理</summary>

`detect.py` 在各种来源上运行推理, [模型](https://github.com/ultralytics/yolov5/tree/master/models) 自动从 最新的YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) 中下载,并将结果保存到 `runs/detect` 。

```bash

python detect.py --weights yolov5s.pt --source 0 # webcam

img.jpg # image

vid.mp4 # video

screen # screenshot

path/ # directory

list.txt # list of images

list.streams # list of streams

'path/*.jpg' # glob

'https://youtu.be/LNwODJXcvt4' # YouTube

'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP stream

```

</details>

<details>

<summary>训练</summary>

下面的命令重现 YOLOv5 在 [COCO](https://github.com/ultralytics/yolov5/blob/master/data/scripts/get_coco.sh) 数据集上的结果。 最新的 [模型](https://github.com/ultralytics/yolov5/tree/master/models) 和 [数据集](https://github.com/ultralytics/yolov5/tree/master/data)

将自动的从 YOLOv5 [release](https://github.com/ultralytics/yolov5/releases) 中下载。 YOLOv5n/s/m/l/x 在 V100 GPU 的训练时间为 1/2/4/6/8 天( [多GPU](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training) 训练速度更快)。 尽可能使用更大的 `--batch-size` ,或通过 `--batch-size -1` 实现 YOLOv5 [自动批处理](https://github.com/ultralytics/yolov5/pull/5092) 。下方显示的 batchsize 适用于 V100-16GB。

```bash

python train.py --data coco.yaml --epochs 300 --weights '' --cfg yolov5n.yaml --batch-size 128

yolov5s 64

yolov5m 40

yolov5l 24

yolov5x 16

```

<img width="800" src="https://user-images.githubusercontent.com/26833433/90222759-949d8800-ddc1-11ea-9fa1-1c97eed2b963.png">

</details>

<details open>

<summary>教程</summary>

- [训练自定义数据](https://docs.ultralytics.com/yolov5/tutorials/train_custom_data) 🚀 推荐

- [获得最佳训练结果的技巧](https://docs.ultralytics.com/yolov5/tutorials/tips_for_best_training_results) ☘️

- [多GPU训练](https://docs.ultralytics.com/yolov5/tutorials/multi_gpu_training)

- [PyTorch Hub](https://docs.ultralytics.com/yolov5/tutorials/pytorch_hub_model_loading) 🌟 新

- [TFLite,ONNX,CoreML,TensorRT导出](https://docs.ultralytics.com/yolov5/tutorials/model_export) 🚀

- [NVIDIA Jetson平台部署](https://docs.ultralytics.com/yolov5/tutorials/running_on_jetson_nano) 🌟 新

- [测试时增强 (TTA)](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation)

- [模型集成](https://docs.ultralytics.com/yolov5/tutorials/model_ensembling)

- [模型剪枝/稀疏](https://docs.ultralytics.com/yolov5/tutorials/model_pruning_and_sparsity)

- [超参数进化](https://docs.ultralytics.com/yolov5/tutorials/hyperparameter_evolution)

- [冻结层的迁移学习](https://docs.ultralytics.com/yolov5/tutorials/transfer_learning_with_frozen_layers)

- [架构概述](https://docs.ultralytics.com/yolov5/tutorials/architecture_description) 🌟 新

- [Roboflow用于数据集、标注和主动学习](https://docs.ultralytics.com/yolov5/tutorials/roboflow_datasets_integration)

- [ClearML日志记录](https://docs.ultralytics.com/yolov5/tutorials/clearml_logging_integration) 🌟 新

- [使用Neural Magic的Deepsparse的YOLOv5](https://docs.ultralytics.com/yolov5/tutorials/neural_magic_pruning_quantization) 🌟 新

- [Comet日志记录](https://docs.ultralytics.com/yolov5/tutorials/comet_logging_integration) 🌟 新

</details>

## <div align="center">模块集成</div>

<br>

<a align="center" href="https://bit.ly/ultralytics_hub" target="_blank">

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/integrations-loop.png"></a>

<br>

<br>

<div align="center">

<a href="https://roboflow.com/?ref=ultralytics">

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-roboflow.png" width="10%" /></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />

<a href="https://cutt.ly/yolov5-readme-clearml">

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-clearml.png" width="10%" /></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />

<a href="https://bit.ly/yolov5-readme-comet2">

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-comet.png" width="10%" /></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="15%" height="0" alt="" />

<a href="https://bit.ly/yolov5-neuralmagic">

<img src="https://github.com/ultralytics/assets/raw/main/partners/logo-neuralmagic.png" width="10%" /></a>

</div>

| Roboflow | ClearML ⭐ 新 | Comet ⭐ 新 | Neural Magic ⭐ 新 |

| :--------------------------------------------------------------------------------: | :-------------------------------------------------------------------------: | :--------------------------------------------------------------------------------: | :------------------------------------------------------------------------------------: |

| 将您的自定义数据集进行标注并直接导出到 YOLOv5 以进行训练 [Roboflow](https://roboflow.com/?ref=ultralytics) | 自动跟踪、可视化甚至远程训练 YOLOv5 [ClearML](https://cutt.ly/yolov5-readme-clearml)(开源!) | 永远免费,[Comet](https://bit.ly/yolov5-readme-comet2)可让您保存 YOLOv5 模型、恢复训练以及交互式可视化和调试预测 | 使用 [Neural Magic DeepSparse](https://bit.ly/yolov5-neuralmagic),运行 YOLOv5 推理的速度最高可提高6倍 |

## <div align="center">Ultralytics HUB</div>

[Ultralytics HUB](https://bit.ly/ultralytics_hub) 是我们的⭐**新的**用于可视化数据集、训练 YOLOv5 🚀 模型并以无缝体验部署到现实世界的无代码解决方案。现在开始 **免费** 使用他!

<a align="center" href="https://bit.ly/ultralytics_hub" target="_blank">

<img width="100%" src="https://github.com/ultralytics/assets/raw/main/im/ultralytics-hub.png"></a>

## <div align="center">为什么选择 YOLOv5</div>

YOLOv5 超级容易上手,简单易学。我们优先考虑现实世界的结果。

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040763-93c22a27-347c-4e3c-847a-8094621d3f4e.png"></p>

<details>

<summary>YOLOv5-P5 640 图</summary>

<p align="left"><img width="800" src="https://user-images.githubusercontent.com/26833433/155040757-ce0934a3-06a6-43dc-a979-2edbbd69ea0e.png"></p>

</details>

<details>

<summary>图表笔记</summary>

- **COCO AP val** 表示 mAP@0.5:0.95 指标,在 [COCO val2017](http://cocodataset.org) 数据集的 5000 张图像上测得, 图像包含 256 到 1536 各种推理大小。

- **显卡推理速度** 为在 [COCO val2017](http://cocodataset.org) 数据集上的平均推理时间,使用 [AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/) V100实例,batchsize 为 32 。

- **EfficientDet** 数据来自 [google/automl](https://github.com/google/automl) , batchsize 为32。

- **复现命令** 为 `python val.py --task study --data coco.yaml --iou 0.7 --weights yolov5n6.pt yolov5s6.pt yolov5m6.pt yolov5l6.pt yolov5x6.pt`

</details>

### 预训练模型

| 模型 | 尺寸<br><sup>(像素) | mAP<sup>val<br>50-95 | mAP<sup>val<br>50 | 推理速度<br><sup>CPU b1<br>(ms) | 推理速度<br><sup>V100 b1<br>(ms) | 速度<br><sup>V100 b32<br>(ms) | 参数量<br><sup>(M) | FLOPs<br><sup>@640 (B) |

| ---------------------------------------------------------------------------------------------- | --------------- | -------------------- | ----------------- | --------------------------- | ---------------------------- | --------------------------- | --------------- | ---------------------- |

| [YOLOv5n](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n.pt) | 640 | 28.0 | 45.7 | **45** | **6.3** | **0.6** | **1.9** | **4.5** |

| [YOLOv5s](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s.pt) | 640 | 37.4 | 56.8 | 98 | 6.4 | 0.9 | 7.2 | 16.5 |

| [YOLOv5m](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m.pt) | 640 | 45.4 | 64.1 | 224 | 8.2 | 1.7 | 21.2 | 49.0 |

| [YOLOv5l](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l.pt) | 640 | 49.0 | 67.3 | 430 | 10.1 | 2.7 | 46.5 | 109.1 |

| [YOLOv5x](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x.pt) | 640 | 50.7 | 68.9 | 766 | 12.1 | 4.8 | 86.7 | 205.7 |

| | | | | | | | | |

| [YOLOv5n6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n6.pt) | 1280 | 36.0 | 54.4 | 153 | 8.1 | 2.1 | 3.2 | 4.6 |

| [YOLOv5s6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s6.pt) | 1280 | 44.8 | 63.7 | 385 | 8.2 | 3.6 | 12.6 | 16.8 |

| [YOLOv5m6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m6.pt) | 1280 | 51.3 | 69.3 | 887 | 11.1 | 6.8 | 35.7 | 50.0 |

| [YOLOv5l6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l6.pt) | 1280 | 53.7 | 71.3 | 1784 | 15.8 | 10.5 | 76.8 | 111.4 |

| [YOLOv5x6](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x6.pt)<br>+[TTA] | 1280<br>1536 | 55.0<br>**55.8** | 72.7<br>**72.7** | 3136<br>- | 26.2<br>- | 19.4<br>- | 140.7<br>- | 209.8<br>- |

<details>

<summary>笔记</summary>

- 所有模型都使用默认配置,训练 300 epochs。n和s模型使用 [hyp.scratch-low.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-low.yaml) ,其他模型都使用 [hyp.scratch-high.yaml](https://github.com/ultralytics/yolov5/blob/master/data/hyps/hyp.scratch-high.yaml) 。

- \*\*mAP<sup>val</sup>\*\*在单模型单尺度上计算,数据集使用 [COCO val2017](http://cocodataset.org) 。<br>复现命令 `python val.py --data coco.yaml --img 640 --conf 0.001 --iou 0.65`

- **推理速度**在 COCO val 图像总体时间上进行平均得到,测试环境使用[AWS p3.2xlarge](https://aws.amazon.com/ec2/instance-types/p3/)实例。 NMS 时间 (大约 1 ms/img) 不包括在内。<br>复现命令 `python val.py --data coco.yaml --img 640 --task speed --batch 1`

- **TTA** [测试时数据增强](https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation) 包括反射和尺度变换。<br>复现命令 `python val.py --data coco.yaml --img 1536 --iou 0.7 --augment`

</details>

## <div align="center">实例分割模型 ⭐ 新</div>

我们新的 YOLOv5 [release v7.0](https://github.com/ultralytics/yolov5/releases/v7.0) 实例分割模型是世界上最快和最准确的模型,击败所有当前 [SOTA 基准](https://paperswithcode.com/sota/real-time-instance-segmentation-on-mscoco)。我们使它非常易于训练、验证和部署。更多细节请查看 [发行说明](https://github.com/ultralytics/yolov5/releases/v7.0) 或访问我们的 [YOLOv5 分割 Colab 笔记本](https://github.com/ultralytics/yolov5/blob/master/segment/tutorial.ipynb) 以快速入门。

<details>

<summary>实例分割模型列表</summary>

<br>

<div align="center">

<a align="center" href="https://ultralytics.com/yolov5" target="_blank">

<img width="800" src="https://user-images.githubusercontent.com/61612323/204180385-84f3aca9-a5e9-43d8-a617-dda7ca12e54a.png"></a>

</div>

我们使用 A100 GPU 在 COCO 上以 640 图像大小训练了 300 epochs 得到 YOLOv5 分割模型。我们将所有模型导出到 ONNX FP32 以进行 CPU 速度测试,并导出到 TensorRT FP16 以进行 GPU 速度测试。为了便于再现,我们在 Google [Colab Pro](https://colab.research.google.com/signup) 上进行了所有速度测试。

| 模型 | 尺寸<br><sup>(像素) | mAP<sup>box<br>50-95 | mAP<sup>mask<br>50-95 | 训练时长<br><sup>300 epochs<br>A100 GPU(小时) | 推理速度<br><sup>ONNX CPU<br>(ms) | 推理速度<br><sup>TRT A100<br>(ms) | 参数量<br><sup>(M) | FLOPs<br><sup>@640 (B) |

| ------------------------------------------------------------------------------------------ | --------------- | -------------------- | --------------------- | --------------------------------------- | ----------------------------- | ----------------------------- | --------------- | ---------------------- |

| [YOLOv5n-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-seg.pt) | 640 | 27.6 | 23.4 | 80:17 | **62.7** | **1.2** | **2.0** | **7.1** |

| [YOLOv5s-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-seg.pt) | 640 | 37.6 | 31.7 | 88:16 | 173.3 | 1.4 | 7.6 | 26.4 |

| [YOLOv5m-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m-seg.pt) | 640 | 45.0 | 37.1 | 108:36 | 427.0 | 2.2 | 22.0 | 70.8 |

| [YOLOv5l-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l-seg.pt) | 640 | 49.0 | 39.9 | 66:43 (2x) | 857.4 | 2.9 | 47.9 | 147.7 |

| [YOLOv5x-seg](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x-seg.pt) | 640 | **50.7** | **41.4** | 62:56 (3x) | 1579.2 | 4.5 | 88.8 | 265.7 |

- 所有模型使用 SGD 优化器训练, 都使用 `lr0=0.01` 和 `weight_decay=5e-5` 参数, 图像大小为 640 。<br>训练 log 可以查看 https://wandb.ai/glenn-jocher/YOLOv5_v70_official

- **准确性**结果都在 COCO 数据集上,使用单模型单尺度测试得到。<br>复现命令 `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt`

- **推理速度**是使用 100 张图像推理时间进行平均得到,测试环境使用 [Colab Pro](https://colab.research.google.com/signup) 上 A100 高 RAM 实例。结果仅表示推理速度(NMS 每张图像增加约 1 毫秒)。<br>复现命令 `python segment/val.py --data coco.yaml --weights yolov5s-seg.pt --batch 1`

- **模型转换**到 FP32 的 ONNX 和 FP16 的 TensorRT 脚本为 `export.py`.<br>运行命令 `python export.py --weights yolov5s-seg.pt --include engine --device 0 --half`

</details>

<details>

<summary>分割模型使用示例 <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/segment/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a></summary>

### 训练

YOLOv5分割训练支持自动下载 COCO128-seg 分割数据集,用户仅需在启动指令中包含 `--data coco128-seg.yaml` 参数。 若要手动下载,使用命令 `bash data/scripts/get_coco.sh --train --val --segments`, 在下载完毕后,使用命令 `python train.py --data coco.yaml` 开启训练。

```bash

# 单 GPU

python segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640

# 多 GPU, DDP 模式

python -m torch.distributed.run --nproc_per_node 4 --master_port 1 segment/train.py --data coco128-seg.yaml --weights yolov5s-seg.pt --img 640 --device 0,1,2,3

```

### 验证

在 COCO 数据集上验证 YOLOv5s-seg mask mAP:

```bash

bash data/scripts/get_coco.sh --val --segments # 下载 COCO val segments 数据集 (780MB, 5000 images)

python segment/val.py --weights yolov5s-seg.pt --data coco.yaml --img 640 # 验证

```

### 预测

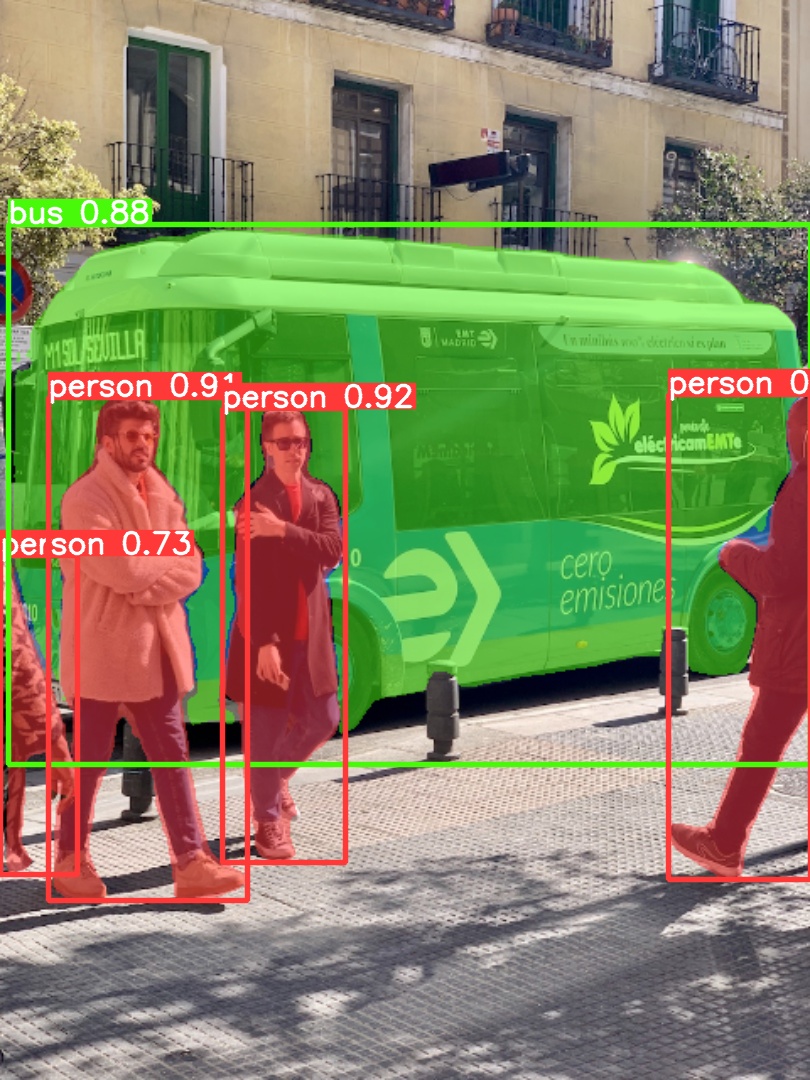

使用预训练的 YOLOv5m-seg.pt 来预测 bus.jpg:

```bash

python segment/predict.py --weights yolov5m-seg.pt --source data/images/bus.jpg

```

```python

model = torch.hub.load(

"ultralytics/yolov5", "custom", "yolov5m-seg.pt"

) # 从load from PyTorch Hub 加载模型 (WARNING: 推理暂未支持)

```

|  |  |

| ---------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------- |

### 模型导出

将 YOLOv5s-seg 模型导出到 ONNX 和 TensorRT:

```bash

python export.py --weights yolov5s-seg.pt --include onnx engine --img 640 --device 0

```

</details>

## <div align="center">分类网络 ⭐ 新</div>

YOLOv5 [release v6.2](https://github.com/ultralytics/yolov5/releases) 带来对分类模型训练、验证和部署的支持!详情请查看 [发行说明](https://github.com/ultralytics/yolov5/releases/v6.2) 或访问我们的 [YOLOv5 分类 Colab 笔记本](https://github.com/ultralytics/yolov5/blob/master/classify/tutorial.ipynb) 以快速入门。

<details>

<summary>分类网络模型</summary>

<br>

我们使用 4xA100 实例在 ImageNet 上训练了 90 个 epochs 得到 YOLOv5-cls 分类模型,我们训练了 ResNet 和 EfficientNet 模型以及相同的默认训练设置以进行比较。我们将所有模型导出到 ONNX FP32 以进行 CPU 速度测试,并导出到 TensorRT FP16 以进行 GPU 速度测试。为了便于重现,我们在 Google 上进行了所有速度测试 [Colab Pro](https://colab.research.google.com/signup) 。

| 模型 | 尺寸<br><sup>(像素) | acc<br><sup>top1 | acc<br><sup>top5 | 训练时长<br><sup>90 epochs<br>4xA100(小时) | 推理速度<br><sup>ONNX CPU<br>(ms) | 推理速度<br><sup>TensorRT V100<br>(ms) | 参数<br><sup>(M) | FLOPs<br><sup>@640 (B) |

| -------------------------------------------------------------------------------------------------- | --------------- | ---------------- | ---------------- | ------------------------------------ | ----------------------------- | ---------------------------------- | -------------- | ---------------------- |

| [YOLOv5n-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5n-cls.pt) | 224 | 64.6 | 85.4 | 7:59 | **3.3** | **0.5** | **2.5** | **0.5** |

| [YOLOv5s-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s-cls.pt) | 224 | 71.5 | 90.2 | 8:09 | 6.6 | 0.6 | 5.4 | 1.4 |

| [YOLOv5m-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5m-cls.pt) | 224 | 75.9 | 92.9 | 10:06 | 15.5 | 0.9 | 12.9 | 3.9 |

| [YOLOv5l-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5l-cls.pt) | 224 | 78.0 | 94.0 | 11:56 | 26.9 | 1.4 | 26.5 | 8.5 |

| [YOLOv5x-cls](https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5x-cls.pt) | 224 | **79.0** | **94.4** | 15:04 | 54.3 | 1.8 | 48.1 | 15.9 |

| | | | | | | | | |

| [ResNet18](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet18.pt) | 224 | 70.3 | 89.5 | **6:47** | 11.2 | 0.5 | 11.7 | 3.7 |

| [Resnetzch](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet34.pt) | 224 | 73.9 | 91.8 | 8:33 | 20.6 | 0.9 | 21.8 | 7.4 |

| [ResNet50](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet50.pt) | 224 | 76.8 | 93.4 | 11:10 | 23.4 | 1.0 | 25.6 | 8.5 |

| [ResNet101](https://github.com/ultralytics/yolov5/releases/download/v7.0/resnet101.pt) | 224 | 78.5 | 94.3 | 17:10 | 42.1 | 1.9 | 44.5 | 15.9 |

| | | | | | | | | |

| [EfficientNet_b0](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b0.pt) | 224 | 75.1 | 92.4 | 13:03 | 12.5 | 1.3 | 5.3 | 1.0 |

| [EfficientNet_b1](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b1.pt) | 224 | 76.4 | 93.2 | 17:04 | 14.9 | 1.6 | 7.8 | 1.5 |

| [EfficientNet_b2](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b2.pt) | 224 | 76.6 | 93.4 | 17:10 | 15.9 | 1.6 | 9.1 | 1.7 |

| [EfficientNet_b3](https://github.com/ultralytics/yolov5/releases/download/v7.0/efficientnet_b3.pt) | 224 | 77.7 | 94.0 | 19:19 | 18.9 | 1.9 | 12.2 | 2.4 |

<details>

<summary>Table Notes (点击以展开)</summary>

- 所有模型都使用 SGD 优化器训练 90 个 epochs,都使用 `lr0=0.001` 和 `weight_decay=5e-5` 参数, 图像大小为 224 ,且都使用默认设置。<br>训练 log 可以查看 https://wandb.ai/glenn-jocher/YOLOv5-Classifier-v6-2

- **准确性**都在单模型单尺度上计算,数据集使用 [ImageNet-1k](https://www.image-net.org/index.php) 。<br>复现命令 `python classify/val.py --data ../datasets/imagenet --img 224`

- **推理速度**是使用 100 个推理图像进行平均得到,测试环境使用谷歌 [Colab Pro](https://colab.research.google.com/signup) V100 高 RAM 实例。<br>复现命令 `python classify/val.py --data ../datasets/imagenet --img 224 --batch 1`

- **模型导出**到 FP32 的 ONNX 和 FP16 的 TensorRT 使用 `export.py` 。<br>复现命令 `python export.py --weights yolov5s-cls.pt --include engine onnx --imgsz 224`

</details>

</details>

<details>

<summary>分类训练示例 <a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/classify/tutorial.ipynb"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"></a></summary>

### 训练

YOLOv5 分类训练支持自动下载 MNIST、Fashion-MNIST、CIFAR10、CIFAR100、Imagenette、Imagewoof 和 ImageNet 数据集,命令中使用 `--data` 即可。 MNIST 示例 `--data mnist` 。

```bash

# 单 GPU

python classify/train.py --model yolov5s-cls.pt --data cifar100 --epochs 5 --img 224 --batch 128

# 多 GPU, DDP 模式

python -m torch.distributed.run --nproc_per_node 4 --master_port 1 classify/train.py --model yolov5s-cls.pt --data imagenet --epochs 5 --img 224 --device 0,1,2,3

```

### 验证

在 ImageNet-1k 数据集上验证 YOLOv5m-cls 的准确性:

```bash

bash data/scripts/get_imagenet.sh --val # download ImageNet val split (6.3G, 50000 images)

python classify/val.py --weights yolov5m-cls.pt --data ../datasets/imagenet --img 224 # validate

```

### 预测

使用预训练的 YOLOv5s-cls.pt 来预测 bus.jpg:

```bash

python classify/predict.py --weights yolov5s-cls.pt --source data/images/bus.jpg

```

```python

model = torch.hub.load(

"ultralytics/yolov5", "custom", "yolov5s-cls.pt"

) # load from PyTorch Hub

```

### 模型导出

将一组经过训练的 YOLOv5s-cls、ResNet 和 EfficientNet 模型导出到 ONNX 和 TensorRT:

```bash

python export.py --weights yolov5s-cls.pt resnet50.pt efficientnet_b0.pt --include onnx engine --img 224

```

</details>

## <div align="center">环境</div>

使用下面我们经过验证的环境,在几秒钟内开始使用 YOLOv5 。单击下面的图标了解详细信息。

<div align="center">

<a href="https://bit.ly/yolov5-paperspace-notebook">

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-gradient.png" width="10%" /></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

<a href="https://colab.research.google.com/github/ultralytics/yolov5/blob/master/tutorial.ipynb">

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-colab-small.png" width="10%" /></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

<a href="https://www.kaggle.com/ultralytics/yolov5">

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-kaggle-small.png" width="10%" /></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

<a href="https://hub.docker.com/r/ultralytics/yolov5">

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-docker-small.png" width="10%" /></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

<a href="https://docs.ultralytics.com/yolov5/environments/aws_quickstart_tutorial/">

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-aws-small.png" width="10%" /></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="5%" alt="" />

<a href="https://docs.ultralytics.com/yolov5/environments/google_cloud_quickstart_tutorial/">

<img src="https://github.com/ultralytics/yolov5/releases/download/v1.0/logo-gcp-small.png" width="10%" /></a>

</div>

## <div align="center">贡献</div>

我们喜欢您的意见或建议!我们希望尽可能简单和透明地为 YOLOv5 做出贡献。请看我们的 [投稿指南](https://docs.ultralytics.com/help/contributing/),并填写 [YOLOv5调查](https://ultralytics.com/survey?utm_source=github&utm_medium=social&utm_campaign=Survey) 向我们发送您的体验反馈。感谢我们所有的贡献者!

<!-- SVG image from https://opencollective.com/ultralytics/contributors.svg?width=990 -->

<a href="https://github.com/ultralytics/yolov5/graphs/contributors">

<img src="https://github.com/ultralytics/assets/raw/main/im/image-contributors.png" /></a>

## <div align="center">许可证</div>

Ultralytics 提供两种许可证选项以适应各种使用场景:

- **AGPL-3.0 许可证**:这个[OSI 批准](https://opensource.org/licenses/)的开源许可证非常适合学生和爱好者,可以推动开放的协作和知识分享。请查看[LICENSE](https://github.com/ultralytics/yolov5/blob/master/LICENSE) 文件以了解更多细节。

- **企业许可证**:专为商业用途设计,该许可证允许将 Ultralytics 的软件和 AI 模型无缝集成到商业产品和服务中,从而绕过 AGPL-3.0 的开源要求。如果您的场景涉及将我们的解决方案嵌入到商业产品中,请通过 [Ultralytics Licensing](https://ultralytics.com/license)与我们联系。

## <div align="center">联系方式</div>

对于 Ultralytics 的错误报告和功能请求,请访问 [GitHub Issues](https://github.com/ultralytics/yolov5/issues),并加入我们的 [Discord](https://ultralytics.com/discord) 社区进行问题和讨论!

<br>

<div align="center">

<a href="https://github.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-github.png" width="3%" alt="Ultralytics GitHub"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

<a href="https://www.linkedin.com/company/ultralytics/"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-linkedin.png" width="3%" alt="Ultralytics LinkedIn"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

<a href="https://twitter.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-twitter.png" width="3%" alt="Ultralytics Twitter"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

<a href="https://youtube.com/ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-youtube.png" width="3%" alt="Ultralytics YouTube"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

<a href="https://www.tiktok.com/@ultralytics"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-tiktok.png" width="3%" alt="Ultralytics TikTok"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

<a href="https://www.instagram.com/ultralytics/"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-instagram.png" width="3%" alt="Ultralytics Instagram"></a>

<img src="https://github.com/ultralytics/assets/raw/main/social/logo-transparent.png" width="3%">

<a href="https://ultralytics.com/discord"><img src="https://github.com/ultralytics/assets/raw/main/social/logo-social-discord.png" width="3%" alt="Ultralytics Discord"></a>

</div>

[tta]: https://docs.ultralytics.com/yolov5/tutorials/test_time_augmentation

|