Spaces:

Running

Running

Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +18 -0

- .gitignore +139 -0

- .gradio/certificate.pem +31 -0

- LICENSE +21 -0

- NOTICE +213 -0

- README.md +243 -7

- app.py +26 -0

- config/train_booking.yaml +22 -0

- config/train_cord.yaml +22 -0

- config/train_docvqa.yaml +23 -0

- config/train_invoices.yaml +22 -0

- config/train_rvlcdip.yaml +23 -0

- config/train_zhtrainticket.yaml +22 -0

- dataset/.gitkeep +1 -0

- donut/__init__.py +16 -0

- donut/_version.py +6 -0

- donut/model.py +613 -0

- donut/util.py +340 -0

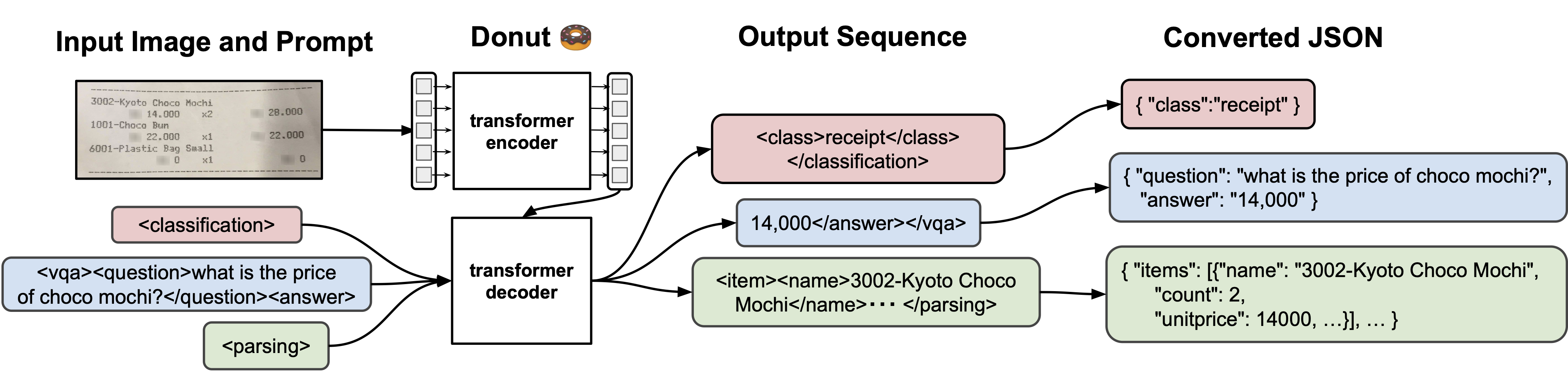

- lightning_module.py +198 -0

- misc/overview.png +0 -0

- misc/sample_image_cord_test_receipt_00004.png +3 -0

- misc/sample_image_donut_document.png +0 -0

- misc/sample_synthdog.png +3 -0

- misc/screenshot_gradio_demos.png +3 -0

- result/.gitkeep +1 -0

- setup.py +77 -0

- synthdog/README.md +63 -0

- synthdog/config_en.yaml +119 -0

- synthdog/config_ja.yaml +119 -0

- synthdog/config_ko.yaml +119 -0

- synthdog/config_zh.yaml +119 -0

- synthdog/elements/__init__.py +12 -0

- synthdog/elements/background.py +24 -0

- synthdog/elements/content.py +118 -0

- synthdog/elements/document.py +65 -0

- synthdog/elements/paper.py +17 -0

- synthdog/elements/textbox.py +43 -0

- synthdog/layouts/__init__.py +9 -0

- synthdog/layouts/grid.py +68 -0

- synthdog/layouts/grid_stack.py +74 -0

- synthdog/resources/background/bedroom_83.jpg +0 -0

- synthdog/resources/background/bob+dylan_83.jpg +0 -0

- synthdog/resources/background/coffee_122.jpg +0 -0

- synthdog/resources/background/coffee_18.jpeg +3 -0

- synthdog/resources/background/crater_141.jpg +3 -0

- synthdog/resources/background/cream_124.jpg +3 -0

- synthdog/resources/background/eagle_110.jpg +0 -0

- synthdog/resources/background/farm_25.jpg +0 -0

- synthdog/resources/background/hiking_18.jpg +0 -0

- synthdog/resources/corpus/enwiki.txt +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,21 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

misc/sample_image_cord_test_receipt_00004.png filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

misc/sample_synthdog.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

misc/screenshot_gradio_demos.png filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

synthdog/resources/background/coffee_18.jpeg filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

synthdog/resources/background/crater_141.jpg filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

synthdog/resources/background/cream_124.jpg filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

synthdog/resources/font/ja/NotoSansJP-Regular.otf filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

synthdog/resources/font/ja/NotoSerifJP-Regular.otf filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

synthdog/resources/font/ko/NotoSansKR-Regular.otf filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

synthdog/resources/font/ko/NotoSerifKR-Regular.otf filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

synthdog/resources/font/zh/NotoSansSC-Regular.otf filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

synthdog/resources/font/zh/NotoSerifSC-Regular.otf filter=lfs diff=lfs merge=lfs -text

|

| 48 |

+

synthdog/resources/paper/paper_1.jpg filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

synthdog/resources/paper/paper_2.jpg filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

synthdog/resources/paper/paper_3.jpg filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

synthdog/resources/paper/paper_4.jpg filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

synthdog/resources/paper/paper_5.jpg filter=lfs diff=lfs merge=lfs -text

|

| 53 |

+

synthdog/resources/paper/paper_6.jpg filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,139 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

core.*

|

| 2 |

+

*.bin

|

| 3 |

+

.nfs*

|

| 4 |

+

.vscode/*

|

| 5 |

+

dataset/*

|

| 6 |

+

result/*

|

| 7 |

+

misc/*

|

| 8 |

+

!misc/*.png

|

| 9 |

+

!dataset/.gitkeep

|

| 10 |

+

!result/.gitkeep

|

| 11 |

+

# Byte-compiled / optimized / DLL files

|

| 12 |

+

__pycache__/

|

| 13 |

+

*.py[cod]

|

| 14 |

+

*$py.class

|

| 15 |

+

|

| 16 |

+

# C extensions

|

| 17 |

+

*.so

|

| 18 |

+

|

| 19 |

+

# Distribution / packaging

|

| 20 |

+

.Python

|

| 21 |

+

build/

|

| 22 |

+

develop-eggs/

|

| 23 |

+

dist/

|

| 24 |

+

downloads/

|

| 25 |

+

eggs/

|

| 26 |

+

.eggs/

|

| 27 |

+

lib/

|

| 28 |

+

lib64/

|

| 29 |

+

parts/

|

| 30 |

+

sdist/

|

| 31 |

+

var/

|

| 32 |

+

wheels/

|

| 33 |

+

pip-wheel-metadata/

|

| 34 |

+

share/python-wheels/

|

| 35 |

+

*.egg-info/

|

| 36 |

+

.installed.cfg

|

| 37 |

+

*.egg

|

| 38 |

+

MANIFEST

|

| 39 |

+

|

| 40 |

+

# PyInstaller

|

| 41 |

+

# Usually these files are written by a python script from a template

|

| 42 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 43 |

+

*.manifest

|

| 44 |

+

*.spec

|

| 45 |

+

|

| 46 |

+

# Installer logs

|

| 47 |

+

pip-log.txt

|

| 48 |

+

pip-delete-this-directory.txt

|

| 49 |

+

|

| 50 |

+

# Unit test / coverage reports

|

| 51 |

+

htmlcov/

|

| 52 |

+

.tox/

|

| 53 |

+

.nox/

|

| 54 |

+

.coverage

|

| 55 |

+

.coverage.*

|

| 56 |

+

.cache

|

| 57 |

+

nosetests.xml

|

| 58 |

+

coverage.xml

|

| 59 |

+

*.cover

|

| 60 |

+

*.py,cover

|

| 61 |

+

.hypothesis/

|

| 62 |

+

.pytest_cache/

|

| 63 |

+

|

| 64 |

+

# Translations

|

| 65 |

+

*.mo

|

| 66 |

+

*.pot

|

| 67 |

+

|

| 68 |

+

# Django stuff:

|

| 69 |

+

*.log

|

| 70 |

+

local_settings.py

|

| 71 |

+

db.sqlite3

|

| 72 |

+

db.sqlite3-journal

|

| 73 |

+

|

| 74 |

+

# Flask stuff:

|

| 75 |

+

instance/

|

| 76 |

+

.webassets-cache

|

| 77 |

+

|

| 78 |

+

# Scrapy stuff:

|

| 79 |

+

.scrapy

|

| 80 |

+

|

| 81 |

+

# Sphinx documentation

|

| 82 |

+

docs/_build/

|

| 83 |

+

|

| 84 |

+

# PyBuilder

|

| 85 |

+

target/

|

| 86 |

+

|

| 87 |

+

# Jupyter Notebook

|

| 88 |

+

.ipynb_checkpoints

|

| 89 |

+

|

| 90 |

+

# IPython

|

| 91 |

+

profile_default/

|

| 92 |

+

ipython_config.py

|

| 93 |

+

|

| 94 |

+

# pyenv

|

| 95 |

+

.python-version

|

| 96 |

+

|

| 97 |

+

# pipenv

|

| 98 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 99 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 100 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 101 |

+

# install all needed dependencies.

|

| 102 |

+

#Pipfile.lock

|

| 103 |

+

|

| 104 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

| 105 |

+

__pypackages__/

|

| 106 |

+

|

| 107 |

+

# Celery stuff

|

| 108 |

+

celerybeat-schedule

|

| 109 |

+

celerybeat.pid

|

| 110 |

+

|

| 111 |

+

# SageMath parsed files

|

| 112 |

+

*.sage.py

|

| 113 |

+

|

| 114 |

+

# Environments

|

| 115 |

+

.env

|

| 116 |

+

.venv

|

| 117 |

+

env/

|

| 118 |

+

venv/

|

| 119 |

+

ENV/

|

| 120 |

+

env.bak/

|

| 121 |

+

venv.bak/

|

| 122 |

+

|

| 123 |

+

# Spyder project settings

|

| 124 |

+

.spyderproject

|

| 125 |

+

.spyproject

|

| 126 |

+

|

| 127 |

+

# Rope project settings

|

| 128 |

+

.ropeproject

|

| 129 |

+

|

| 130 |

+

# mkdocs documentation

|

| 131 |

+

/site

|

| 132 |

+

|

| 133 |

+

# mypy

|

| 134 |

+

.mypy_cache/

|

| 135 |

+

.dmypy.json

|

| 136 |

+

dmypy.json

|

| 137 |

+

|

| 138 |

+

# Pyre type checker

|

| 139 |

+

.pyre/

|

.gradio/certificate.pem

ADDED

|

@@ -0,0 +1,31 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

-----BEGIN CERTIFICATE-----

|

| 2 |

+

MIIFazCCA1OgAwIBAgIRAIIQz7DSQONZRGPgu2OCiwAwDQYJKoZIhvcNAQELBQAw

|

| 3 |

+

TzELMAkGA1UEBhMCVVMxKTAnBgNVBAoTIEludGVybmV0IFNlY3VyaXR5IFJlc2Vh

|

| 4 |

+

cmNoIEdyb3VwMRUwEwYDVQQDEwxJU1JHIFJvb3QgWDEwHhcNMTUwNjA0MTEwNDM4

|

| 5 |

+

WhcNMzUwNjA0MTEwNDM4WjBPMQswCQYDVQQGEwJVUzEpMCcGA1UEChMgSW50ZXJu

|

| 6 |

+

ZXQgU2VjdXJpdHkgUmVzZWFyY2ggR3JvdXAxFTATBgNVBAMTDElTUkcgUm9vdCBY

|

| 7 |

+

MTCCAiIwDQYJKoZIhvcNAQEBBQADggIPADCCAgoCggIBAK3oJHP0FDfzm54rVygc

|

| 8 |

+

h77ct984kIxuPOZXoHj3dcKi/vVqbvYATyjb3miGbESTtrFj/RQSa78f0uoxmyF+

|

| 9 |

+

0TM8ukj13Xnfs7j/EvEhmkvBioZxaUpmZmyPfjxwv60pIgbz5MDmgK7iS4+3mX6U

|

| 10 |

+

A5/TR5d8mUgjU+g4rk8Kb4Mu0UlXjIB0ttov0DiNewNwIRt18jA8+o+u3dpjq+sW

|

| 11 |

+

T8KOEUt+zwvo/7V3LvSye0rgTBIlDHCNAymg4VMk7BPZ7hm/ELNKjD+Jo2FR3qyH

|

| 12 |

+

B5T0Y3HsLuJvW5iB4YlcNHlsdu87kGJ55tukmi8mxdAQ4Q7e2RCOFvu396j3x+UC

|

| 13 |

+

B5iPNgiV5+I3lg02dZ77DnKxHZu8A/lJBdiB3QW0KtZB6awBdpUKD9jf1b0SHzUv

|

| 14 |

+

KBds0pjBqAlkd25HN7rOrFleaJ1/ctaJxQZBKT5ZPt0m9STJEadao0xAH0ahmbWn

|

| 15 |

+

OlFuhjuefXKnEgV4We0+UXgVCwOPjdAvBbI+e0ocS3MFEvzG6uBQE3xDk3SzynTn

|

| 16 |

+

jh8BCNAw1FtxNrQHusEwMFxIt4I7mKZ9YIqioymCzLq9gwQbooMDQaHWBfEbwrbw

|

| 17 |

+

qHyGO0aoSCqI3Haadr8faqU9GY/rOPNk3sgrDQoo//fb4hVC1CLQJ13hef4Y53CI

|

| 18 |

+

rU7m2Ys6xt0nUW7/vGT1M0NPAgMBAAGjQjBAMA4GA1UdDwEB/wQEAwIBBjAPBgNV

|

| 19 |

+

HRMBAf8EBTADAQH/MB0GA1UdDgQWBBR5tFnme7bl5AFzgAiIyBpY9umbbjANBgkq

|

| 20 |

+

hkiG9w0BAQsFAAOCAgEAVR9YqbyyqFDQDLHYGmkgJykIrGF1XIpu+ILlaS/V9lZL

|

| 21 |

+

ubhzEFnTIZd+50xx+7LSYK05qAvqFyFWhfFQDlnrzuBZ6brJFe+GnY+EgPbk6ZGQ

|

| 22 |

+

3BebYhtF8GaV0nxvwuo77x/Py9auJ/GpsMiu/X1+mvoiBOv/2X/qkSsisRcOj/KK

|

| 23 |

+

NFtY2PwByVS5uCbMiogziUwthDyC3+6WVwW6LLv3xLfHTjuCvjHIInNzktHCgKQ5

|

| 24 |

+

ORAzI4JMPJ+GslWYHb4phowim57iaztXOoJwTdwJx4nLCgdNbOhdjsnvzqvHu7Ur

|

| 25 |

+

TkXWStAmzOVyyghqpZXjFaH3pO3JLF+l+/+sKAIuvtd7u+Nxe5AW0wdeRlN8NwdC

|

| 26 |

+

jNPElpzVmbUq4JUagEiuTDkHzsxHpFKVK7q4+63SM1N95R1NbdWhscdCb+ZAJzVc

|

| 27 |

+

oyi3B43njTOQ5yOf+1CceWxG1bQVs5ZufpsMljq4Ui0/1lvh+wjChP4kqKOJ2qxq

|

| 28 |

+

4RgqsahDYVvTH9w7jXbyLeiNdd8XM2w9U/t7y0Ff/9yi0GE44Za4rF2LN9d11TPA

|

| 29 |

+

mRGunUHBcnWEvgJBQl9nJEiU0Zsnvgc/ubhPgXRR4Xq37Z0j4r7g1SgEEzwxA57d

|

| 30 |

+

emyPxgcYxn/eR44/KJ4EBs+lVDR3veyJm+kXQ99b21/+jh5Xos1AnX5iItreGCc=

|

| 31 |

+

-----END CERTIFICATE-----

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT license

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022-present NAVER Corp.

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in

|

| 13 |

+

all copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

|

| 21 |

+

THE SOFTWARE.

|

NOTICE

ADDED

|

@@ -0,0 +1,213 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Donut

|

| 2 |

+

Copyright (c) 2022-present NAVER Corp.

|

| 3 |

+

|

| 4 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 5 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 6 |

+

in the Software without restriction, including without limitation the rights

|

| 7 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 8 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 9 |

+

furnished to do so, subject to the following conditions:

|

| 10 |

+

|

| 11 |

+

The above copyright notice and this permission notice shall be included in

|

| 12 |

+

all copies or substantial portions of the Software.

|

| 13 |

+

|

| 14 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 15 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 16 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 17 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 18 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 19 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

|

| 20 |

+

THE SOFTWARE.

|

| 21 |

+

|

| 22 |

+

--------------------------------------------------------------------------------------

|

| 23 |

+

|

| 24 |

+

This project contains subcomponents with separate copyright notices and license terms.

|

| 25 |

+

Your use of the source code for these subcomponents is subject to the terms and conditions of the following licenses.

|

| 26 |

+

|

| 27 |

+

=====

|

| 28 |

+

|

| 29 |

+

googlefonts/noto-fonts

|

| 30 |

+

https://fonts.google.com/specimen/Noto+Sans

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

Copyright 2018 The Noto Project Authors (github.com/googlei18n/noto-fonts)

|

| 34 |

+

|

| 35 |

+

This Font Software is licensed under the SIL Open Font License,

|

| 36 |

+

Version 1.1.

|

| 37 |

+

|

| 38 |

+

This license is copied below, and is also available with a FAQ at:

|

| 39 |

+

http://scripts.sil.org/OFL

|

| 40 |

+

|

| 41 |

+

-----------------------------------------------------------

|

| 42 |

+

SIL OPEN FONT LICENSE Version 1.1 - 26 February 2007

|

| 43 |

+

-----------------------------------------------------------

|

| 44 |

+

|

| 45 |

+

PREAMBLE

|

| 46 |

+

The goals of the Open Font License (OFL) are to stimulate worldwide

|

| 47 |

+

development of collaborative font projects, to support the font

|

| 48 |

+

creation efforts of academic and linguistic communities, and to

|

| 49 |

+

provide a free and open framework in which fonts may be shared and

|

| 50 |

+

improved in partnership with others.

|

| 51 |

+

|

| 52 |

+

The OFL allows the licensed fonts to be used, studied, modified and

|

| 53 |

+

redistributed freely as long as they are not sold by themselves. The

|

| 54 |

+

fonts, including any derivative works, can be bundled, embedded,

|

| 55 |

+

redistributed and/or sold with any software provided that any reserved

|

| 56 |

+

names are not used by derivative works. The fonts and derivatives,

|

| 57 |

+

however, cannot be released under any other type of license. The

|

| 58 |

+

requirement for fonts to remain under this license does not apply to

|

| 59 |

+

any document created using the fonts or their derivatives.

|

| 60 |

+

|

| 61 |

+

DEFINITIONS

|

| 62 |

+

"Font Software" refers to the set of files released by the Copyright

|

| 63 |

+

Holder(s) under this license and clearly marked as such. This may

|

| 64 |

+

include source files, build scripts and documentation.

|

| 65 |

+

|

| 66 |

+

"Reserved Font Name" refers to any names specified as such after the

|

| 67 |

+

copyright statement(s).

|

| 68 |

+

|

| 69 |

+

"Original Version" refers to the collection of Font Software

|

| 70 |

+

components as distributed by the Copyright Holder(s).

|

| 71 |

+

|

| 72 |

+

"Modified Version" refers to any derivative made by adding to,

|

| 73 |

+

deleting, or substituting -- in part or in whole -- any of the

|

| 74 |

+

components of the Original Version, by changing formats or by porting

|

| 75 |

+

the Font Software to a new environment.

|

| 76 |

+

|

| 77 |

+

"Author" refers to any designer, engineer, programmer, technical

|

| 78 |

+

writer or other person who contributed to the Font Software.

|

| 79 |

+

|

| 80 |

+

PERMISSION & CONDITIONS

|

| 81 |

+

Permission is hereby granted, free of charge, to any person obtaining

|

| 82 |

+

a copy of the Font Software, to use, study, copy, merge, embed,

|

| 83 |

+

modify, redistribute, and sell modified and unmodified copies of the

|

| 84 |

+

Font Software, subject to the following conditions:

|

| 85 |

+

|

| 86 |

+

1) Neither the Font Software nor any of its individual components, in

|

| 87 |

+

Original or Modified Versions, may be sold by itself.

|

| 88 |

+

|

| 89 |

+

2) Original or Modified Versions of the Font Software may be bundled,

|

| 90 |

+

redistributed and/or sold with any software, provided that each copy

|

| 91 |

+

contains the above copyright notice and this license. These can be

|

| 92 |

+

included either as stand-alone text files, human-readable headers or

|

| 93 |

+

in the appropriate machine-readable metadata fields within text or

|

| 94 |

+

binary files as long as those fields can be easily viewed by the user.

|

| 95 |

+

|

| 96 |

+

3) No Modified Version of the Font Software may use the Reserved Font

|

| 97 |

+

Name(s) unless explicit written permission is granted by the

|

| 98 |

+

corresponding Copyright Holder. This restriction only applies to the

|

| 99 |

+

primary font name as presented to the users.

|

| 100 |

+

|

| 101 |

+

4) The name(s) of the Copyright Holder(s) or the Author(s) of the Font

|

| 102 |

+

Software shall not be used to promote, endorse or advertise any

|

| 103 |

+

Modified Version, except to acknowledge the contribution(s) of the

|

| 104 |

+

Copyright Holder(s) and the Author(s) or with their explicit written

|

| 105 |

+

permission.

|

| 106 |

+

|

| 107 |

+

5) The Font Software, modified or unmodified, in part or in whole,

|

| 108 |

+

must be distributed entirely under this license, and must not be

|

| 109 |

+

distributed under any other license. The requirement for fonts to

|

| 110 |

+

remain under this license does not apply to any document created using

|

| 111 |

+

the Font Software.

|

| 112 |

+

|

| 113 |

+

TERMINATION

|

| 114 |

+

This license becomes null and void if any of the above conditions are

|

| 115 |

+

not met.

|

| 116 |

+

|

| 117 |

+

DISCLAIMER

|

| 118 |

+

THE FONT SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND,

|

| 119 |

+

EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO ANY WARRANTIES OF

|

| 120 |

+

MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT

|

| 121 |

+

OF COPYRIGHT, PATENT, TRADEMARK, OR OTHER RIGHT. IN NO EVENT SHALL THE

|

| 122 |

+

COPYRIGHT HOLDER BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY,

|

| 123 |

+

INCLUDING ANY GENERAL, SPECIAL, INDIRECT, INCIDENTAL, OR CONSEQUENTIAL

|

| 124 |

+

DAMAGES, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING

|

| 125 |

+

FROM, OUT OF THE USE OR INABILITY TO USE THE FONT SOFTWARE OR FROM

|

| 126 |

+

OTHER DEALINGS IN THE FONT SOFTWARE.

|

| 127 |

+

|

| 128 |

+

=====

|

| 129 |

+

|

| 130 |

+

huggingface/transformers

|

| 131 |

+

https://github.com/huggingface/transformers

|

| 132 |

+

|

| 133 |

+

|

| 134 |

+

Copyright [yyyy] [name of copyright owner]

|

| 135 |

+

|

| 136 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 137 |

+

you may not use this file except in compliance with the License.

|

| 138 |

+

You may obtain a copy of the License at

|

| 139 |

+

|

| 140 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 141 |

+

|

| 142 |

+

Unless required by applicable law or agreed to in writing, software

|

| 143 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 144 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 145 |

+

See the License for the specific language governing permissions and limitations under the License.

|

| 146 |

+

|

| 147 |

+

=====

|

| 148 |

+

|

| 149 |

+

clovaai/synthtiger

|

| 150 |

+

https://github.com/clovaai/synthtiger

|

| 151 |

+

|

| 152 |

+

|

| 153 |

+

Copyright (c) 2021-present NAVER Corp.

|

| 154 |

+

|

| 155 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 156 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 157 |

+

in the Software without restriction, including without limitation the rights

|

| 158 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 159 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 160 |

+

furnished to do so, subject to the following conditions:

|

| 161 |

+

|

| 162 |

+

The above copyright notice and this permission notice shall be included in

|

| 163 |

+

all copies or substantial portions of the Software.

|

| 164 |

+

|

| 165 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 166 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 167 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 168 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 169 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 170 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

|

| 171 |

+

THE SOFTWARE.

|

| 172 |

+

|

| 173 |

+

=====

|

| 174 |

+

|

| 175 |

+

rwightman/pytorch-image-models

|

| 176 |

+

https://github.com/rwightman/pytorch-image-models

|

| 177 |

+

|

| 178 |

+

|

| 179 |

+

Copyright 2019 Ross Wightman

|

| 180 |

+

|

| 181 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 182 |

+

you may not use this file except in compliance with the License.

|

| 183 |

+

You may obtain a copy of the License at

|

| 184 |

+

|

| 185 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 186 |

+

|

| 187 |

+

Unless required by applicable law or agreed to in writing, software

|

| 188 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 189 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 190 |

+

See the License for the specific language governing permissions and

|

| 191 |

+

limitations under the License.

|

| 192 |

+

|

| 193 |

+

=====

|

| 194 |

+

|

| 195 |

+

ankush-me/SynthText

|

| 196 |

+

https://github.com/ankush-me/SynthText

|

| 197 |

+

|

| 198 |

+

|

| 199 |

+

Copyright 2017, Ankush Gupta.

|

| 200 |

+

|

| 201 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 202 |

+

you may not use this file except in compliance with the License.

|

| 203 |

+

You may obtain a copy of the License at

|

| 204 |

+

|

| 205 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 206 |

+

|

| 207 |

+

Unless required by applicable law or agreed to in writing, software

|

| 208 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 209 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 210 |

+

See the License for the specific language governing permissions and

|

| 211 |

+

limitations under the License.

|

| 212 |

+

|

| 213 |

+

=====

|

README.md

CHANGED

|

@@ -1,12 +1,248 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

|

| 4 |

-

colorFrom: yellow

|

| 5 |

-

colorTo: gray

|

| 6 |

sdk: gradio

|

| 7 |

sdk_version: 5.5.0

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 |

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

title: donut-booking-gradio

|

| 3 |

+

app_file: app.py

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

sdk_version: 5.5.0

|

|

|

|

|

|

|

| 6 |

---

|

| 7 |

+

<div align="center">

|

| 8 |

+

|

| 9 |

+

# Donut 🍩 : Document Understanding Transformer

|

| 10 |

+

|

| 11 |

+

[](https://arxiv.org/abs/2111.15664)

|

| 12 |

+

[](#how-to-cite)

|

| 13 |

+

[](#demo)

|

| 14 |

+

[](#demo)

|

| 15 |

+

[](https://pypi.org/project/donut-python)

|

| 16 |

+

[](https://pepy.tech/project/donut-python)

|

| 17 |

+

|

| 18 |

+

Official Implementation of Donut and SynthDoG | [Paper](https://arxiv.org/abs/2111.15664) | [Slide](https://docs.google.com/presentation/d/1gv3A7t4xpwwNdpxV_yeHzEOMy-exJCAz6AlAI9O5fS8/edit?usp=sharing) | [Poster](https://docs.google.com/presentation/d/1m1f8BbAm5vxPcqynn_MbFfmQAlHQIR5G72-hQUFS2sk/edit?usp=sharing)

|

| 19 |

+

|

| 20 |

+

</div>

|

| 21 |

+

|

| 22 |

+

## Introduction

|

| 23 |

+

|

| 24 |

+

**Donut** 🍩, **Do**cume**n**t **u**nderstanding **t**ransformer, is a new method of document understanding that utilizes an OCR-free end-to-end Transformer model. Donut does not require off-the-shelf OCR engines/APIs, yet it shows state-of-the-art performances on various visual document understanding tasks, such as visual document classification or information extraction (a.k.a. document parsing).

|

| 25 |

+

In addition, we present **SynthDoG** 🐶, **Synth**etic **Do**cument **G**enerator, that helps the model pre-training to be flexible on various languages and domains.

|

| 26 |

+

|

| 27 |

+

Our academic paper, which describes our method in detail and provides full experimental results and analyses, can be found here:<br>

|

| 28 |

+

> [**OCR-free Document Understanding Transformer**](https://arxiv.org/abs/2111.15664).<br>

|

| 29 |

+

> [Geewook Kim](https://geewook.kim), [Teakgyu Hong](https://dblp.org/pid/183/0952.html), [Moonbin Yim](https://github.com/moonbings), [JeongYeon Nam](https://github.com/long8v), [Jinyoung Park](https://github.com/jyp1111), [Jinyeong Yim](https://jinyeong.github.io), [Wonseok Hwang](https://scholar.google.com/citations?user=M13_WdcAAAAJ), [Sangdoo Yun](https://sangdooyun.github.io), [Dongyoon Han](https://dongyoonhan.github.io), [Seunghyun Park](https://scholar.google.com/citations?user=iowjmTwAAAAJ). In ECCV 2022.

|

| 30 |

+

|

| 31 |

+

<img width="946" alt="image" src="misc/overview.png">

|

| 32 |

+

|

| 33 |

+

## Pre-trained Models and Web Demos

|

| 34 |

+

|

| 35 |

+

Gradio web demos are available! [](#demo) [](#demo)

|

| 36 |

+

|:--:|

|

| 37 |

+

||

|

| 38 |

+

- You can run the demo with `./app.py` file.

|

| 39 |

+

- Sample images are available at `./misc` and more receipt images are available at [CORD dataset link](https://huggingface.co/datasets/naver-clova-ix/cord-v2).

|

| 40 |

+

- Web demos are available from the links in the following table.

|

| 41 |

+

- Note: We have updated the Google Colab demo (as of June 15, 2023) to ensure its proper working.

|

| 42 |

+

|

| 43 |

+

|Task|Sec/Img|Score|Trained Model|<div id="demo">Demo</div>|

|

| 44 |

+

|---|---|---|---|---|

|

| 45 |

+

| [CORD](https://github.com/clovaai/cord) (Document Parsing) | 0.7 /<br> 0.7 /<br> 1.2 | 91.3 /<br> 91.1 /<br> 90.9 | [donut-base-finetuned-cord-v2](https://huggingface.co/naver-clova-ix/donut-base-finetuned-cord-v2/tree/official) (1280) /<br> [donut-base-finetuned-cord-v1](https://huggingface.co/naver-clova-ix/donut-base-finetuned-cord-v1/tree/official) (1280) /<br> [donut-base-finetuned-cord-v1-2560](https://huggingface.co/naver-clova-ix/donut-base-finetuned-cord-v1-2560/tree/official) | [gradio space web demo](https://huggingface.co/spaces/naver-clova-ix/donut-base-finetuned-cord-v2),<br>[google colab demo (updated at 23.06.15)](https://colab.research.google.com/drive/1NMSqoIZ_l39wyRD7yVjw2FIuU2aglzJi?usp=sharing) |

|

| 46 |

+

| [Train Ticket](https://github.com/beacandler/EATEN) (Document Parsing) | 0.6 | 98.7 | [donut-base-finetuned-zhtrainticket](https://huggingface.co/naver-clova-ix/donut-base-finetuned-zhtrainticket/tree/official) | [google colab demo (updated at 23.06.15)](https://colab.research.google.com/drive/1YJBjllahdqNktXaBlq5ugPh1BCm8OsxI?usp=sharing) |

|

| 47 |

+

| [RVL-CDIP](https://www.cs.cmu.edu/~aharley/rvl-cdip) (Document Classification) | 0.75 | 95.3 | [donut-base-finetuned-rvlcdip](https://huggingface.co/naver-clova-ix/donut-base-finetuned-rvlcdip/tree/official) | [gradio space web demo](https://huggingface.co/spaces/nielsr/donut-rvlcdip),<br>[google colab demo (updated at 23.06.15)](https://colab.research.google.com/drive/1iWOZHvao1W5xva53upcri5V6oaWT-P0O?usp=sharing) |

|

| 48 |

+

| [DocVQA Task1](https://rrc.cvc.uab.es/?ch=17) (Document VQA) | 0.78 | 67.5 | [donut-base-finetuned-docvqa](https://huggingface.co/naver-clova-ix/donut-base-finetuned-docvqa/tree/official) | [gradio space web demo](https://huggingface.co/spaces/nielsr/donut-docvqa),<br>[google colab demo (updated at 23.06.15)](https://colab.research.google.com/drive/1oKieslZCulFiquequ62eMGc-ZWgay4X3?usp=sharing) |

|

| 49 |

+

|

| 50 |

+

The links to the pre-trained backbones are here:

|

| 51 |

+

- [`donut-base`](https://huggingface.co/naver-clova-ix/donut-base/tree/official): trained with 64 A100 GPUs (~2.5 days), number of layers (encoder: {2,2,14,2}, decoder: 4), input size 2560x1920, swin window size 10, IIT-CDIP (11M) and SynthDoG (English, Chinese, Japanese, Korean, 0.5M x 4).

|

| 52 |

+

- [`donut-proto`](https://huggingface.co/naver-clova-ix/donut-proto/tree/official): (preliminary model) trained with 8 V100 GPUs (~5 days), number of layers (encoder: {2,2,18,2}, decoder: 4), input size 2048x1536, swin window size 8, and SynthDoG (English, Japanese, Korean, 0.4M x 3).

|

| 53 |

+

|

| 54 |

+

Please see [our paper](#how-to-cite) for more details.

|

| 55 |

+

|

| 56 |

+

## SynthDoG datasets

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

The links to the SynthDoG-generated datasets are here:

|

| 61 |

+

|

| 62 |

+

- [`synthdog-en`](https://huggingface.co/datasets/naver-clova-ix/synthdog-en): English, 0.5M.

|

| 63 |

+

- [`synthdog-zh`](https://huggingface.co/datasets/naver-clova-ix/synthdog-zh): Chinese, 0.5M.

|

| 64 |

+

- [`synthdog-ja`](https://huggingface.co/datasets/naver-clova-ix/synthdog-ja): Japanese, 0.5M.

|

| 65 |

+

- [`synthdog-ko`](https://huggingface.co/datasets/naver-clova-ix/synthdog-ko): Korean, 0.5M.

|

| 66 |

+

|

| 67 |

+

To generate synthetic datasets with our SynthDoG, please see `./synthdog/README.md` and [our paper](#how-to-cite) for details.

|

| 68 |

+

|

| 69 |

+

## Updates

|

| 70 |

+

|

| 71 |

+

**_2023-06-15_** We have updated all Google Colab demos to ensure its proper working.<br>

|

| 72 |

+

**_2022-11-14_** New version 1.0.9 is released (`pip install donut-python --upgrade`). See [1.0.9 Release Notes](https://github.com/clovaai/donut/releases/tag/1.0.9).<br>

|

| 73 |

+

**_2022-08-12_** Donut 🍩 is also available at [huggingface/transformers 🤗](https://huggingface.co/docs/transformers/main/en/model_doc/donut) (contributed by [@NielsRogge](https://github.com/NielsRogge)). `donut-python` loads the pre-trained weights from the `official` branch of the model repositories. See [1.0.5 Release Notes](https://github.com/clovaai/donut/releases/tag/1.0.5).<br>

|

| 74 |

+

**_2022-08-05_** A well-executed hands-on tutorial on donut 🍩 is published at [Towards Data Science](https://towardsdatascience.com/ocr-free-document-understanding-with-donut-1acfbdf099be) (written by [@estaudere](https://github.com/estaudere)).<br>

|

| 75 |

+

**_2022-07-20_** First Commit, We release our code, model weights, synthetic data and generator.

|

| 76 |

+

|

| 77 |

+

## Software installation

|

| 78 |

+

|

| 79 |

+

[](https://pypi.org/project/donut-python)

|

| 80 |

+

[](https://pepy.tech/project/donut-python)

|

| 81 |

+

|

| 82 |

+

```bash

|

| 83 |

+

pip install donut-python

|

| 84 |

+

```

|

| 85 |

+

|

| 86 |

+

or clone this repository and install the dependencies:

|

| 87 |

+

```bash

|

| 88 |

+

git clone https://github.com/clovaai/donut.git

|

| 89 |

+

cd donut/

|

| 90 |

+

conda create -n donut_official python=3.7

|

| 91 |

+

conda activate donut_official

|

| 92 |

+

pip install .

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

We tested [donut-python](https://pypi.org/project/donut-python/1.0.1) == 1.0.1 with:

|

| 96 |

+

- [torch](https://github.com/pytorch/pytorch) == 1.11.0+cu113

|

| 97 |

+

- [torchvision](https://github.com/pytorch/vision) == 0.12.0+cu113

|

| 98 |

+

- [pytorch-lightning](https://github.com/Lightning-AI/lightning) == 1.6.4

|

| 99 |

+

- [transformers](https://github.com/huggingface/transformers) == 4.11.3

|

| 100 |

+

- [timm](https://github.com/rwightman/pytorch-image-models) == 0.5.4

|

| 101 |

+

|

| 102 |

+

**Note**: From several reported issues, we have noticed increased challenges in configuring the testing environment for `donut-python` due to recent updates in key dependency libraries. While we are actively working on a solution, we have updated the Google Colab demo (as of June 15, 2023) to ensure its proper working. For assistance, we encourage you to refer to the following demo links: [CORD Colab Demo](https://colab.research.google.com/drive/1NMSqoIZ_l39wyRD7yVjw2FIuU2aglzJi?usp=sharing), [Train Ticket Colab Demo](https://colab.research.google.com/drive/1YJBjllahdqNktXaBlq5ugPh1BCm8OsxI?usp=sharing), [RVL-CDIP Colab Demo](https://colab.research.google.com/drive/1iWOZHvao1W5xva53upcri5V6oaWT-P0O?usp=sharing), [DocVQA Colab Demo](https://colab.research.google.com/drive/1oKieslZCulFiquequ62eMGc-ZWgay4X3?usp=sharing).

|

| 103 |

+

|

| 104 |

+

## Getting Started

|

| 105 |

+

|

| 106 |

+

### Data

|

| 107 |

+

|

| 108 |

+

This repository assumes the following structure of dataset:

|

| 109 |

+

```bash

|

| 110 |

+

> tree dataset_name

|

| 111 |

+

dataset_name

|

| 112 |

+

├── test

|

| 113 |

+

│ ├── metadata.jsonl

|

| 114 |

+

│ ├── {image_path0}

|

| 115 |

+

│ ├── {image_path1}

|

| 116 |

+

│ .

|

| 117 |

+

│ .

|

| 118 |

+

├── train

|

| 119 |

+

│ ├── metadata.jsonl

|

| 120 |

+

│ ├── {image_path0}

|

| 121 |

+

│ ├── {image_path1}

|

| 122 |

+

│ .

|

| 123 |

+

│ .

|

| 124 |

+

└── validation

|

| 125 |

+

├── metadata.jsonl

|

| 126 |

+

├── {image_path0}

|

| 127 |

+

├── {image_path1}

|

| 128 |

+

.

|

| 129 |

+

.

|

| 130 |

+

|

| 131 |

+

> cat dataset_name/test/metadata.jsonl

|

| 132 |

+

{"file_name": {image_path0}, "ground_truth": "{\"gt_parse\": {ground_truth_parse}, ... {other_metadata_not_used} ... }"}

|

| 133 |

+

{"file_name": {image_path1}, "ground_truth": "{\"gt_parse\": {ground_truth_parse}, ... {other_metadata_not_used} ... }"}

|

| 134 |

+

.

|

| 135 |

+

.

|

| 136 |

+

```

|

| 137 |

+

|

| 138 |

+

- The structure of `metadata.jsonl` file is in [JSON Lines text format](https://jsonlines.org), i.e., `.jsonl`. Each line consists of

|

| 139 |

+

- `file_name` : relative path to the image file.

|

| 140 |

+

- `ground_truth` : string format (json dumped), the dictionary contains either `gt_parse` or `gt_parses`. Other fields (metadata) can be added to the dictionary but will not be used.

|

| 141 |

+

- `donut` interprets all tasks as a JSON prediction problem. As a result, all `donut` model training share a same pipeline. For training and inference, the only thing to do is preparing `gt_parse` or `gt_parses` for the task in format described below.

|

| 142 |

+

|

| 143 |

+

#### For Document Classification

|

| 144 |

+

The `gt_parse` follows the format of `{"class" : {class_name}}`, for example, `{"class" : "scientific_report"}` or `{"class" : "presentation"}`.

|

| 145 |

+

- Google colab demo is available [here](https://colab.research.google.com/drive/1xUDmLqlthx8A8rWKLMSLThZ7oeRJkDuU?usp=sharing).

|

| 146 |

+

- Gradio web demo is available [here](https://huggingface.co/spaces/nielsr/donut-rvlcdip).

|

| 147 |

+

|

| 148 |

+

#### For Document Information Extraction

|

| 149 |

+

The `gt_parse` is a JSON object that contains full information of the document image, for example, the JSON object for a receipt may look like `{"menu" : [{"nm": "ICE BLACKCOFFEE", "cnt": "2", ...}, ...], ...}`.

|

| 150 |

+

- More examples are available at [CORD dataset](https://huggingface.co/datasets/naver-clova-ix/cord-v2).

|

| 151 |

+

- Google colab demo is available [here](https://colab.research.google.com/drive/1o07hty-3OQTvGnc_7lgQFLvvKQuLjqiw?usp=sharing).

|

| 152 |

+

- Gradio web demo is available [here](https://huggingface.co/spaces/naver-clova-ix/donut-base-finetuned-cord-v2).

|

| 153 |

+

|

| 154 |

+

#### For Document Visual Question Answering

|

| 155 |

+

The `gt_parses` follows the format of `[{"question" : {question_sentence}, "answer" : {answer_candidate_1}}, {"question" : {question_sentence}, "answer" : {answer_candidate_2}}, ...]`, for example, `[{"question" : "what is the model name?", "answer" : "donut"}, {"question" : "what is the model name?", "answer" : "document understanding transformer"}]`.

|

| 156 |

+

- DocVQA Task1 has multiple answers, hence `gt_parses` should be a list of dictionary that contains a pair of question and answer.

|

| 157 |

+

- Google colab demo is available [here](https://colab.research.google.com/drive/1Z4WG8Wunj3HE0CERjt608ALSgSzRC9ig?usp=sharing).

|

| 158 |

+

- Gradio web demo is available [here](https://huggingface.co/spaces/nielsr/donut-docvqa).

|

| 159 |

+

|

| 160 |

+

#### For (Pseudo) Text Reading Task

|

| 161 |

+

The `gt_parse` looks like `{"text_sequence" : "word1 word2 word3 ... "}`

|

| 162 |

+

- This task is also a pre-training task of Donut model.

|

| 163 |

+

- You can use our **SynthDoG** 🐶 to generate synthetic images for the text reading task with proper `gt_parse`. See `./synthdog/README.md` for details.

|

| 164 |

+

|

| 165 |

+

### Training

|

| 166 |

+

|

| 167 |

+

This is the configuration of Donut model training on [CORD](https://github.com/clovaai/cord) dataset used in our experiment.

|

| 168 |

+

We ran this with a single NVIDIA A100 GPU.

|

| 169 |

+

|

| 170 |

+

```bash

|

| 171 |

+

python train.py --config config/train_cord.yaml \

|

| 172 |

+

--pretrained_model_name_or_path "naver-clova-ix/donut-base" \

|

| 173 |

+

--dataset_name_or_paths '["naver-clova-ix/cord-v2"]' \

|

| 174 |

+

--exp_version "test_experiment"

|

| 175 |

+

.

|

| 176 |

+

.

|

| 177 |

+

Prediction: <s_menu><s_nm>Lemon Tea (L)</s_nm><s_cnt>1</s_cnt><s_price>25.000</s_price></s_menu><s_total><s_total_price>25.000</s_total_price><s_cashprice>30.000</s_cashprice><s_changeprice>5.000</s_changeprice></s_total>

|

| 178 |

+

Answer: <s_menu><s_nm>Lemon Tea (L)</s_nm><s_cnt>1</s_cnt><s_price>25.000</s_price></s_menu><s_total><s_total_price>25.000</s_total_price><s_cashprice>30.000</s_cashprice><s_changeprice>5.000</s_changeprice></s_total>

|

| 179 |

+

Normed ED: 0.0

|

| 180 |

+

Prediction: <s_menu><s_nm>Hulk Topper Package</s_nm><s_cnt>1</s_cnt><s_price>100.000</s_price></s_menu><s_total><s_total_price>100.000</s_total_price><s_cashprice>100.000</s_cashprice><s_changeprice>0</s_changeprice></s_total>

|

| 181 |

+

Answer: <s_menu><s_nm>Hulk Topper Package</s_nm><s_cnt>1</s_cnt><s_price>100.000</s_price></s_menu><s_total><s_total_price>100.000</s_total_price><s_cashprice>100.000</s_cashprice><s_changeprice>0</s_changeprice></s_total>

|

| 182 |

+

Normed ED: 0.0

|

| 183 |

+

Prediction: <s_menu><s_nm>Giant Squid</s_nm><s_cnt>x 1</s_cnt><s_price>Rp. 39.000</s_price><s_sub><s_nm>C.Finishing - Cut</s_nm><s_price>Rp. 0</s_price><sep/><s_nm>B.Spicy Level - Extreme Hot Rp. 0</s_price></s_sub><sep/><s_nm>A.Flavour - Salt & Pepper</s_nm><s_price>Rp. 0</s_price></s_sub></s_menu><s_sub_total><s_subtotal_price>Rp. 39.000</s_subtotal_price></s_sub_total><s_total><s_total_price>Rp. 39.000</s_total_price><s_cashprice>Rp. 50.000</s_cashprice><s_changeprice>Rp. 11.000</s_changeprice></s_total>

|

| 184 |

+

Answer: <s_menu><s_nm>Giant Squid</s_nm><s_cnt>x1</s_cnt><s_price>Rp. 39.000</s_price><s_sub><s_nm>C.Finishing - Cut</s_nm><s_price>Rp. 0</s_price><sep/><s_nm>B.Spicy Level - Extreme Hot</s_nm><s_price>Rp. 0</s_price><sep/><s_nm>A.Flavour- Salt & Pepper</s_nm><s_price>Rp. 0</s_price></s_sub></s_menu><s_sub_total><s_subtotal_price>Rp. 39.000</s_subtotal_price></s_sub_total><s_total><s_total_price>Rp. 39.000</s_total_price><s_cashprice>Rp. 50.000</s_cashprice><s_changeprice>Rp. 11.000</s_changeprice></s_total>

|

| 185 |

+

Normed ED: 0.039603960396039604

|

| 186 |

+

Epoch 29: 100%|█████████████| 200/200 [01:49<00:00, 1.82it/s, loss=0.00327, exp_name=train_cord, exp_version=test_experiment]

|

| 187 |

+

```

|

| 188 |

+

|

| 189 |

+

Some important arguments:

|

| 190 |

+

|

| 191 |

+

- `--config` : config file path for model training.

|

| 192 |

+

- `--pretrained_model_name_or_path` : string format, model name in Hugging Face modelhub or local path.

|

| 193 |

+

- `--dataset_name_or_paths` : string format (json dumped), list of dataset names in Hugging Face datasets or local paths.

|

| 194 |

+

- `--result_path` : file path to save model outputs/artifacts.

|

| 195 |

+

- `--exp_version` : used for experiment versioning. The output files are saved at `{result_path}/{exp_version}/*`

|

| 196 |

+

|

| 197 |

+

### Test

|

| 198 |

+

|

| 199 |

+

With the trained model, test images and ground truth parses, you can get inference results and accuracy scores.

|

| 200 |

+

|

| 201 |

+

```bash

|

| 202 |

+

python test.py --dataset_name_or_path naver-clova-ix/cord-v2 --pretrained_model_name_or_path ./result/train_cord/test_experiment --save_path ./result/output.json

|

| 203 |

+

100%|█████████████| 100/100 [00:35<00:00, 2.80it/s]

|

| 204 |

+

Total number of samples: 100, Tree Edit Distance (TED) based accuracy score: 0.9129639764131697, F1 accuracy score: 0.8406020841373987

|

| 205 |

+

```

|

| 206 |

+

|

| 207 |

+

Some important arguments:

|

| 208 |

+

|

| 209 |

+

- `--dataset_name_or_path` : string format, the target dataset name in Hugging Face datasets or local path.

|

| 210 |

+

- `--pretrained_model_name_or_path` : string format, the model name in Hugging Face modelhub or local path.

|

| 211 |

+

- `--save_path`: file path to save predictions and scores.

|

| 212 |

+

|

| 213 |

+

## How to Cite

|

| 214 |

+

If you find this work useful to you, please cite:

|

| 215 |

+

```bibtex

|

| 216 |

+

@inproceedings{kim2022donut,

|

| 217 |

+

title = {OCR-Free Document Understanding Transformer},

|

| 218 |

+

author = {Kim, Geewook and Hong, Teakgyu and Yim, Moonbin and Nam, JeongYeon and Park, Jinyoung and Yim, Jinyeong and Hwang, Wonseok and Yun, Sangdoo and Han, Dongyoon and Park, Seunghyun},

|

| 219 |

+

booktitle = {European Conference on Computer Vision (ECCV)},

|

| 220 |

+

year = {2022}

|

| 221 |

+

}

|

| 222 |

+

```

|

| 223 |

+

|

| 224 |

+

## License

|

| 225 |

+

|

| 226 |

+

```

|

| 227 |

+

MIT license

|

| 228 |

+

|

| 229 |

+

Copyright (c) 2022-present NAVER Corp.

|

| 230 |

+

|

| 231 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 232 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 233 |

+

in the Software without restriction, including without limitation the rights

|

| 234 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 235 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 236 |

+

furnished to do so, subject to the following conditions:

|

| 237 |

+

|

| 238 |

+

The above copyright notice and this permission notice shall be included in

|

| 239 |

+

all copies or substantial portions of the Software.

|

| 240 |

|

| 241 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 242 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 243 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 244 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 245 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 246 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

|

| 247 |

+

THE SOFTWARE.

|

| 248 |

+

```

|

app.py

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

import argparse

|

| 3 |

+

import torch

|

| 4 |

+

from PIL import Image

|

| 5 |

+

from donut import DonutModel

|

| 6 |

+

def demo_process(input_img):

|

| 7 |

+

global model, task_prompt, task_name

|

| 8 |

+

input_img = Image.fromarray(input_img)

|

| 9 |

+

output = model.inference(image=input_img, prompt=task_prompt)["predictions"][0]

|

| 10 |

+

return output

|

| 11 |

+

parser = argparse.ArgumentParser()

|

| 12 |

+

parser.add_argument("--task", type=str, default="Booking")

|

| 13 |

+