Spaces:

Runtime error

Runtime error

hfspace gradio demo

Browse files- LICENSE +201 -0

- README.md +2 -13

- app.py +119 -0

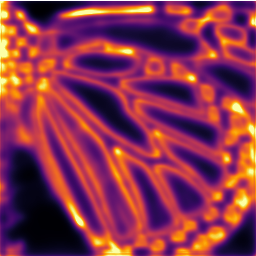

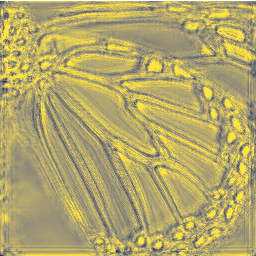

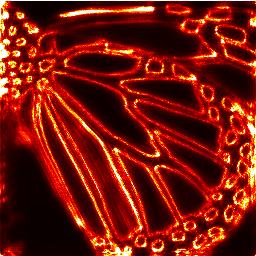

- demo_examples/baby.png +0 -0

- demo_examples/bird.png +0 -0

- demo_examples/butterfly.png +0 -0

- demo_examples/head.png +0 -0

- demo_examples/woman.png +0 -0

- ds.py +485 -0

- losses.py +131 -0

- networks_SRGAN.py +347 -0

- networks_T1toT2.py +477 -0

- requirements.txt +334 -0

- src/.gitkeep +0 -0

- src/__pycache__/ds.cpython-310.pyc +0 -0

- src/__pycache__/losses.cpython-310.pyc +0 -0

- src/__pycache__/networks_SRGAN.cpython-310.pyc +0 -0

- src/__pycache__/utils.cpython-310.pyc +0 -0

- src/app.py +115 -0

- src/ds.py +485 -0

- src/flagged/Alpha/0.png +0 -0

- src/flagged/Beta/0.png +0 -0

- src/flagged/Low-res/0.png +0 -0

- src/flagged/Orignal/0.png +0 -0

- src/flagged/Super-res/0.png +0 -0

- src/flagged/Uncertainty/0.png +0 -0

- src/flagged/log.csv +2 -0

- src/losses.py +131 -0

- src/networks_SRGAN.py +347 -0

- src/networks_T1toT2.py +477 -0

- src/utils.py +1273 -0

- utils.py +1304 -0

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

README.md

CHANGED

|

@@ -1,13 +1,2 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

emoji: 🦀

|

| 4 |

-

colorFrom: purple

|

| 5 |

-

colorTo: green

|

| 6 |

-

sdk: gradio

|

| 7 |

-

sdk_version: 3.0.24

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

-

license: mit

|

| 11 |

-

---

|

| 12 |

-

|

| 13 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

| 1 |

+

# BayesCap

|

| 2 |

+

Bayesian Identity Cap for Calibrated Uncertainty in Pretrained Neural Networks

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

app.py

ADDED

|

@@ -0,0 +1,119 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import numpy as np

|

| 2 |

+

import random

|

| 3 |

+

import matplotlib.pyplot as plt

|

| 4 |

+

from matplotlib import cm

|

| 5 |

+

|

| 6 |

+

import torch

|

| 7 |

+

import torch.nn as nn

|

| 8 |

+

import torch.nn.functional as F

|

| 9 |

+

import torchvision.models as models

|

| 10 |

+

from torch.utils.data import Dataset, DataLoader

|

| 11 |

+

from torchvision import transforms

|

| 12 |

+

from torchvision.transforms.functional import InterpolationMode as IMode

|

| 13 |

+

|

| 14 |

+

from PIL import Image

|

| 15 |

+

|

| 16 |

+

from ds import *

|

| 17 |

+

from losses import *

|

| 18 |

+

from networks_SRGAN import *

|

| 19 |

+

from utils import *

|

| 20 |

+

|

| 21 |

+

device = 'cuda'

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

NetG = Generator()

|

| 25 |

+

model_parameters = filter(lambda p: True, NetG.parameters())

|

| 26 |

+

params = sum([np.prod(p.size()) for p in model_parameters])

|

| 27 |

+

print("Number of Parameters:", params)

|

| 28 |

+

NetC = BayesCap(in_channels=3, out_channels=3)

|

| 29 |

+

|

| 30 |

+

ensure_checkpoint_exists('BayesCap_SRGAN.pth')

|

| 31 |

+

NetG.load_state_dict(torch.load('BayesCap_SRGAN.pth', map_location=device))

|

| 32 |

+

NetG.to(device)

|

| 33 |

+

NetG.eval()

|

| 34 |

+

|

| 35 |

+

ensure_checkpoint_exists('BayesCap_ckpt.pth')

|

| 36 |

+

NetC.load_state_dict(torch.load('BayesCap_ckpt.pth', map_location=device))

|

| 37 |

+

NetC.to(device)

|

| 38 |

+

NetC.eval()

|

| 39 |

+

|

| 40 |

+

def tensor01_to_pil(xt):

|

| 41 |

+

r = transforms.ToPILImage(mode='RGB')(xt.squeeze())

|

| 42 |

+

return r

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

def predict(img):

|

| 46 |

+

"""

|

| 47 |

+

img: image

|

| 48 |

+

"""

|

| 49 |

+

image_size = (256,256)

|

| 50 |

+

upscale_factor = 4

|

| 51 |

+

lr_transforms = transforms.Resize((image_size[0]//upscale_factor, image_size[1]//upscale_factor), interpolation=IMode.BICUBIC, antialias=True)

|

| 52 |

+

# lr_transforms = transforms.Resize((128, 128), interpolation=IMode.BICUBIC, antialias=True)

|

| 53 |

+

|

| 54 |

+

img = Image.fromarray(np.array(img))

|

| 55 |

+

img = lr_transforms(img)

|

| 56 |

+

lr_tensor = utils.image2tensor(img, range_norm=False, half=False)

|

| 57 |

+

|

| 58 |

+

device = 'cuda'

|

| 59 |

+

dtype = torch.cuda.FloatTensor

|

| 60 |

+

xLR = lr_tensor.to(device).unsqueeze(0)

|

| 61 |

+

xLR = xLR.type(dtype)

|

| 62 |

+

# pass them through the network

|

| 63 |

+

with torch.no_grad():

|

| 64 |

+

xSR = NetG(xLR)

|

| 65 |

+

xSRC_mu, xSRC_alpha, xSRC_beta = NetC(xSR)

|

| 66 |

+

|

| 67 |

+

a_map = (1/(xSRC_alpha[0] + 1e-5)).to('cpu').data

|

| 68 |

+

b_map = xSRC_beta[0].to('cpu').data

|

| 69 |

+

u_map = (a_map**2)*(torch.exp(torch.lgamma(3/(b_map + 1e-2)))/torch.exp(torch.lgamma(1/(b_map + 1e-2))))

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

x_LR = tensor01_to_pil(xLR.to('cpu').data.clip(0,1).transpose(0,2).transpose(0,1))

|

| 73 |

+

|

| 74 |

+

x_mean = tensor01_to_pil(xSR.to('cpu').data.clip(0,1).transpose(0,2).transpose(0,1))

|

| 75 |

+

|

| 76 |

+

#im = Image.fromarray(np.uint8(cm.gist_earth(myarray)*255))

|

| 77 |

+

|

| 78 |

+

a_map = torch.clamp(a_map, min=0, max=0.1)

|

| 79 |

+

a_map = (a_map - a_map.min())/(a_map.max() - a_map.min())

|

| 80 |

+

x_alpha = Image.fromarray(np.uint8(cm.inferno(a_map.transpose(0,2).transpose(0,1).squeeze())*255))

|

| 81 |

+

|

| 82 |

+

b_map = torch.clamp(b_map, min=0.45, max=0.75)

|

| 83 |

+

b_map = (b_map - b_map.min())/(b_map.max() - b_map.min())

|

| 84 |

+

x_beta = Image.fromarray(np.uint8(cm.cividis(b_map.transpose(0,2).transpose(0,1).squeeze())*255))

|

| 85 |

+

|

| 86 |

+

u_map = torch.clamp(u_map, min=0, max=0.15)

|

| 87 |

+

u_map = (u_map - u_map.min())/(u_map.max() - u_map.min())

|

| 88 |

+

x_uncer = Image.fromarray(np.uint8(cm.hot(u_map.transpose(0,2).transpose(0,1).squeeze())*255))

|

| 89 |

+

|

| 90 |

+

return x_LR, x_mean, x_alpha, x_beta, x_uncer

|

| 91 |

+

|

| 92 |

+

import gradio as gr

|

| 93 |

+

|

| 94 |

+

title = "BayesCap"

|

| 95 |

+

description = "BayesCap: Bayesian Identity Cap for Calibrated Uncertainty in Frozen Neural Networks (ECCV 2022)"

|

| 96 |

+

article = "<p style='text-align: center'> BayesCap: Bayesian Identity Cap for Calibrated Uncertainty in Frozen Neural Networks| <a href='https://github.com/ExplainableML/BayesCap'>Github Repo</a></p>"

|

| 97 |

+

|

| 98 |

+

|

| 99 |

+

gr.Interface(

|

| 100 |

+

fn=predict,

|

| 101 |

+

inputs=gr.inputs.Image(type='pil', label="Orignal"),

|

| 102 |

+

outputs=[

|

| 103 |

+

gr.outputs.Image(type='pil', label="Low-res"),

|

| 104 |

+

gr.outputs.Image(type='pil', label="Super-res"),

|

| 105 |

+

gr.outputs.Image(type='pil', label="Alpha"),

|

| 106 |

+

gr.outputs.Image(type='pil', label="Beta"),

|

| 107 |

+

gr.outputs.Image(type='pil', label="Uncertainty")

|

| 108 |

+

],

|

| 109 |

+

title=title,

|

| 110 |

+

description=description,

|

| 111 |

+

article=article,

|

| 112 |

+

examples=[

|

| 113 |

+

["./demo_examples/baby.png"],

|

| 114 |

+

["./demo_examples/bird.png"],

|

| 115 |

+

["./demo_examples/butterfly.png"],

|

| 116 |

+

["./demo_examples/head.png"],

|

| 117 |

+

["./demo_examples/woman.png"],

|

| 118 |

+

]

|

| 119 |

+

).launch(share=True)

|

demo_examples/baby.png

ADDED

|

demo_examples/bird.png

ADDED

|

demo_examples/butterfly.png

ADDED

|

demo_examples/head.png

ADDED

|

demo_examples/woman.png

ADDED

|

ds.py

ADDED

|

@@ -0,0 +1,485 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from __future__ import absolute_import, division, print_function

|

| 2 |

+

|

| 3 |

+

import random

|

| 4 |

+

import copy

|

| 5 |

+

import io

|

| 6 |

+

import os

|

| 7 |

+

import numpy as np

|

| 8 |

+

from PIL import Image

|

| 9 |

+

import skimage.transform

|

| 10 |

+

from collections import Counter

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

import torch

|

| 14 |

+

import torch.utils.data as data

|

| 15 |

+

from torch import Tensor

|

| 16 |

+

from torch.utils.data import Dataset

|

| 17 |

+

from torchvision import transforms

|

| 18 |

+

from torchvision.transforms.functional import InterpolationMode as IMode

|

| 19 |

+

|

| 20 |

+

import utils

|

| 21 |

+

|

| 22 |

+

class ImgDset(Dataset):

|

| 23 |

+

"""Customize the data set loading function and prepare low/high resolution image data in advance.

|

| 24 |

+

|

| 25 |

+

Args:

|

| 26 |

+

dataroot (str): Training data set address

|

| 27 |

+

image_size (int): High resolution image size

|

| 28 |

+

upscale_factor (int): Image magnification

|

| 29 |

+

mode (str): Data set loading method, the training data set is for data enhancement,

|

| 30 |

+

and the verification data set is not for data enhancement

|

| 31 |

+

|

| 32 |

+

"""

|

| 33 |

+

|

| 34 |

+

def __init__(self, dataroot: str, image_size: int, upscale_factor: int, mode: str) -> None:

|

| 35 |

+

super(ImgDset, self).__init__()

|

| 36 |

+

self.filenames = [os.path.join(dataroot, x) for x in os.listdir(dataroot)]

|

| 37 |

+

|

| 38 |

+

if mode == "train":

|

| 39 |

+

self.hr_transforms = transforms.Compose([

|

| 40 |

+

transforms.RandomCrop(image_size),

|

| 41 |

+

transforms.RandomRotation(90),

|

| 42 |

+

transforms.RandomHorizontalFlip(0.5),

|

| 43 |

+

])

|

| 44 |

+

else:

|

| 45 |

+

self.hr_transforms = transforms.Resize(image_size)

|

| 46 |

+

|

| 47 |

+

self.lr_transforms = transforms.Resize((image_size[0]//upscale_factor, image_size[1]//upscale_factor), interpolation=IMode.BICUBIC, antialias=True)

|

| 48 |

+

|

| 49 |

+

def __getitem__(self, batch_index: int) -> [Tensor, Tensor]:

|

| 50 |

+

# Read a batch of image data

|

| 51 |

+

image = Image.open(self.filenames[batch_index])

|

| 52 |

+

|

| 53 |

+

# Transform image

|

| 54 |

+

hr_image = self.hr_transforms(image)

|

| 55 |

+

lr_image = self.lr_transforms(hr_image)

|

| 56 |

+

|

| 57 |

+

# Convert image data into Tensor stream format (PyTorch).

|

| 58 |

+

# Note: The range of input and output is between [0, 1]

|

| 59 |

+

lr_tensor = utils.image2tensor(lr_image, range_norm=False, half=False)

|

| 60 |

+

hr_tensor = utils.image2tensor(hr_image, range_norm=False, half=False)

|

| 61 |

+

|

| 62 |

+

return lr_tensor, hr_tensor

|

| 63 |

+

|

| 64 |

+

def __len__(self) -> int:

|

| 65 |

+

return len(self.filenames)

|

| 66 |

+

|

| 67 |

+

|

| 68 |

+

class PairedImages_w_nameList(Dataset):

|

| 69 |

+

'''

|

| 70 |

+

can act as supervised or un-supervised based on flists

|

| 71 |

+

'''

|

| 72 |

+

def __init__(self, flist1, flist2, transform1=None, transform2=None, do_aug=False):

|

| 73 |

+

self.flist1 = flist1

|

| 74 |

+

self.flist2 = flist2

|

| 75 |

+

self.transform1 = transform1

|

| 76 |

+

self.transform2 = transform2

|

| 77 |

+

self.do_aug = do_aug

|

| 78 |

+

def __getitem__(self, index):

|

| 79 |

+

impath1 = self.flist1[index]

|

| 80 |

+

img1 = Image.open(impath1).convert('RGB')

|

| 81 |

+

impath2 = self.flist2[index]

|

| 82 |

+

img2 = Image.open(impath2).convert('RGB')

|

| 83 |

+

|

| 84 |

+

img1 = utils.image2tensor(img1, range_norm=False, half=False)

|

| 85 |

+

img2 = utils.image2tensor(img2, range_norm=False, half=False)

|

| 86 |

+

|

| 87 |

+

if self.transform1 is not None:

|

| 88 |

+

img1 = self.transform1(img1)

|

| 89 |

+

if self.transform2 is not None:

|

| 90 |

+

img2 = self.transform2(img2)

|

| 91 |

+

|

| 92 |

+

return img1, img2

|

| 93 |

+

def __len__(self):

|

| 94 |

+

return len(self.flist1)

|

| 95 |

+

|

| 96 |

+

class PairedImages_w_nameList_npy(Dataset):

|

| 97 |

+

'''

|

| 98 |

+

can act as supervised or un-supervised based on flists

|

| 99 |

+

'''

|

| 100 |

+

def __init__(self, flist1, flist2, transform1=None, transform2=None, do_aug=False):

|

| 101 |

+

self.flist1 = flist1

|

| 102 |

+

self.flist2 = flist2

|

| 103 |

+

self.transform1 = transform1

|

| 104 |

+

self.transform2 = transform2

|

| 105 |

+

self.do_aug = do_aug

|

| 106 |

+

def __getitem__(self, index):

|

| 107 |

+

impath1 = self.flist1[index]

|

| 108 |

+

img1 = np.load(impath1)

|

| 109 |

+

impath2 = self.flist2[index]

|

| 110 |

+

img2 = np.load(impath2)

|

| 111 |

+

|

| 112 |

+

if self.transform1 is not None:

|

| 113 |

+

img1 = self.transform1(img1)

|

| 114 |

+

if self.transform2 is not None:

|

| 115 |

+

img2 = self.transform2(img2)

|

| 116 |

+

|

| 117 |

+

return img1, img2

|

| 118 |

+

def __len__(self):

|

| 119 |

+

return len(self.flist1)

|

| 120 |

+

|

| 121 |

+

# def call_paired():

|

| 122 |

+

# root1='./GOPRO_3840FPS_AVG_3-21/train/blur/'

|

| 123 |

+

# root2='./GOPRO_3840FPS_AVG_3-21/train/sharp/'

|

| 124 |

+

|

| 125 |

+

# flist1=glob.glob(root1+'/*/*.png')

|

| 126 |

+

# flist2=glob.glob(root2+'/*/*.png')

|

| 127 |

+

|

| 128 |

+

# dset = PairedImages_w_nameList(root1,root2,flist1,flist2)

|

| 129 |

+

|

| 130 |

+

#### KITTI depth

|

| 131 |

+

|

| 132 |

+

def load_velodyne_points(filename):

|

| 133 |

+

"""Load 3D point cloud from KITTI file format

|

| 134 |

+

(adapted from https://github.com/hunse/kitti)

|

| 135 |

+

"""

|

| 136 |

+

points = np.fromfile(filename, dtype=np.float32).reshape(-1, 4)

|

| 137 |

+

points[:, 3] = 1.0 # homogeneous

|

| 138 |

+

return points

|

| 139 |

+

|

| 140 |

+

|

| 141 |

+

def read_calib_file(path):

|

| 142 |

+

"""Read KITTI calibration file

|

| 143 |

+

(from https://github.com/hunse/kitti)

|

| 144 |

+

"""

|

| 145 |

+

float_chars = set("0123456789.e+- ")

|

| 146 |

+

data = {}

|

| 147 |

+

with open(path, 'r') as f:

|

| 148 |

+

for line in f.readlines():

|

| 149 |

+

key, value = line.split(':', 1)

|

| 150 |

+

value = value.strip()

|

| 151 |

+

data[key] = value

|

| 152 |

+

if float_chars.issuperset(value):

|

| 153 |

+

# try to cast to float array

|

| 154 |

+

try:

|

| 155 |

+

data[key] = np.array(list(map(float, value.split(' '))))

|

| 156 |

+

except ValueError:

|

| 157 |

+

# casting error: data[key] already eq. value, so pass

|

| 158 |

+

pass

|

| 159 |

+

|

| 160 |

+

return data

|

| 161 |

+

|

| 162 |

+

|

| 163 |

+

def sub2ind(matrixSize, rowSub, colSub):

|

| 164 |

+

"""Convert row, col matrix subscripts to linear indices

|

| 165 |

+

"""

|

| 166 |

+

m, n = matrixSize

|

| 167 |

+

return rowSub * (n-1) + colSub - 1

|

| 168 |

+

|

| 169 |

+

|

| 170 |

+

def generate_depth_map(calib_dir, velo_filename, cam=2, vel_depth=False):

|

| 171 |

+

"""Generate a depth map from velodyne data

|

| 172 |

+

"""

|

| 173 |

+

# load calibration files

|

| 174 |

+

cam2cam = read_calib_file(os.path.join(calib_dir, 'calib_cam_to_cam.txt'))

|

| 175 |

+

velo2cam = read_calib_file(os.path.join(calib_dir, 'calib_velo_to_cam.txt'))

|

| 176 |

+

velo2cam = np.hstack((velo2cam['R'].reshape(3, 3), velo2cam['T'][..., np.newaxis]))

|

| 177 |

+

velo2cam = np.vstack((velo2cam, np.array([0, 0, 0, 1.0])))

|

| 178 |

+

|

| 179 |

+

# get image shape

|

| 180 |

+

im_shape = cam2cam["S_rect_02"][::-1].astype(np.int32)

|

| 181 |

+

|

| 182 |

+

# compute projection matrix velodyne->image plane

|

| 183 |

+

R_cam2rect = np.eye(4)

|

| 184 |

+

R_cam2rect[:3, :3] = cam2cam['R_rect_00'].reshape(3, 3)

|

| 185 |

+

P_rect = cam2cam['P_rect_0'+str(cam)].reshape(3, 4)

|

| 186 |

+

P_velo2im = np.dot(np.dot(P_rect, R_cam2rect), velo2cam)

|

| 187 |

+

|

| 188 |

+

# load velodyne points and remove all behind image plane (approximation)

|

| 189 |

+

# each row of the velodyne data is forward, left, up, reflectance

|

| 190 |

+

velo = load_velodyne_points(velo_filename)

|

| 191 |

+

velo = velo[velo[:, 0] >= 0, :]

|

| 192 |

+

|

| 193 |

+

# project the points to the camera

|

| 194 |

+

velo_pts_im = np.dot(P_velo2im, velo.T).T

|

| 195 |

+

velo_pts_im[:, :2] = velo_pts_im[:, :2] / velo_pts_im[:, 2][..., np.newaxis]

|

| 196 |

+

|

| 197 |

+

if vel_depth:

|

| 198 |

+

velo_pts_im[:, 2] = velo[:, 0]

|

| 199 |

+

|

| 200 |

+

# check if in bounds

|

| 201 |

+

# use minus 1 to get the exact same value as KITTI matlab code

|

| 202 |

+

velo_pts_im[:, 0] = np.round(velo_pts_im[:, 0]) - 1

|

| 203 |

+

velo_pts_im[:, 1] = np.round(velo_pts_im[:, 1]) - 1

|

| 204 |

+

val_inds = (velo_pts_im[:, 0] >= 0) & (velo_pts_im[:, 1] >= 0)

|

| 205 |

+

val_inds = val_inds & (velo_pts_im[:, 0] < im_shape[1]) & (velo_pts_im[:, 1] < im_shape[0])

|

| 206 |

+

velo_pts_im = velo_pts_im[val_inds, :]

|

| 207 |

+

|

| 208 |

+

# project to image

|

| 209 |

+

depth = np.zeros((im_shape[:2]))

|

| 210 |

+

depth[velo_pts_im[:, 1].astype(np.int), velo_pts_im[:, 0].astype(np.int)] = velo_pts_im[:, 2]

|

| 211 |

+

|

| 212 |

+

# find the duplicate points and choose the closest depth

|

| 213 |

+

inds = sub2ind(depth.shape, velo_pts_im[:, 1], velo_pts_im[:, 0])

|

| 214 |

+

dupe_inds = [item for item, count in Counter(inds).items() if count > 1]

|

| 215 |

+

for dd in dupe_inds:

|

| 216 |

+

pts = np.where(inds == dd)[0]

|

| 217 |

+

x_loc = int(velo_pts_im[pts[0], 0])

|

| 218 |

+

y_loc = int(velo_pts_im[pts[0], 1])

|

| 219 |

+

depth[y_loc, x_loc] = velo_pts_im[pts, 2].min()

|

| 220 |

+

depth[depth < 0] = 0

|

| 221 |

+

|

| 222 |

+

return depth

|

| 223 |

+

|

| 224 |

+

def pil_loader(path):

|

| 225 |

+

# open path as file to avoid ResourceWarning

|

| 226 |

+

# (https://github.com/python-pillow/Pillow/issues/835)

|

| 227 |

+

with open(path, 'rb') as f:

|

| 228 |

+

with Image.open(f) as img:

|

| 229 |

+

return img.convert('RGB')

|

| 230 |

+

|

| 231 |

+

|

| 232 |

+

class MonoDataset(data.Dataset):

|

| 233 |

+

"""Superclass for monocular dataloaders

|

| 234 |

+

|

| 235 |

+

Args:

|

| 236 |

+

data_path

|

| 237 |

+

filenames

|

| 238 |

+

height

|

| 239 |

+

width

|

| 240 |

+

frame_idxs

|

| 241 |

+

num_scales

|

| 242 |

+

is_train

|

| 243 |

+

img_ext

|

| 244 |

+

"""

|

| 245 |

+

def __init__(self,

|

| 246 |

+

data_path,

|

| 247 |

+

filenames,

|

| 248 |

+

height,

|

| 249 |

+

width,

|

| 250 |

+

frame_idxs,

|

| 251 |

+

num_scales,

|

| 252 |

+

is_train=False,

|

| 253 |

+

img_ext='.jpg'):

|

| 254 |

+

super(MonoDataset, self).__init__()

|

| 255 |

+

|

| 256 |

+

self.data_path = data_path

|

| 257 |

+

self.filenames = filenames

|

| 258 |

+

self.height = height

|

| 259 |

+

self.width = width

|

| 260 |

+

self.num_scales = num_scales

|

| 261 |

+

self.interp = Image.ANTIALIAS

|

| 262 |

+

|

| 263 |

+

self.frame_idxs = frame_idxs

|

| 264 |

+

|

| 265 |

+

self.is_train = is_train

|

| 266 |

+

self.img_ext = img_ext

|

| 267 |

+

|

| 268 |

+

self.loader = pil_loader

|

| 269 |

+

self.to_tensor = transforms.ToTensor()

|

| 270 |

+

|

| 271 |

+

# We need to specify augmentations differently in newer versions of torchvision.

|

| 272 |

+

# We first try the newer tuple version; if this fails we fall back to scalars

|

| 273 |

+

try:

|

| 274 |

+

self.brightness = (0.8, 1.2)

|

| 275 |

+

self.contrast = (0.8, 1.2)

|

| 276 |

+

self.saturation = (0.8, 1.2)

|

| 277 |

+

self.hue = (-0.1, 0.1)

|

| 278 |

+

transforms.ColorJitter.get_params(

|

| 279 |

+

self.brightness, self.contrast, self.saturation, self.hue)

|

| 280 |

+

except TypeError:

|

| 281 |

+

self.brightness = 0.2

|

| 282 |

+

self.contrast = 0.2

|

| 283 |

+

self.saturation = 0.2

|

| 284 |

+

self.hue = 0.1

|

| 285 |

+

|

| 286 |

+

self.resize = {}

|

| 287 |

+

for i in range(self.num_scales):

|

| 288 |

+

s = 2 ** i

|

| 289 |

+

self.resize[i] = transforms.Resize((self.height // s, self.width // s),

|

| 290 |

+

interpolation=self.interp)

|

| 291 |

+

|

| 292 |

+

self.load_depth = self.check_depth()

|

| 293 |

+

|

| 294 |

+

def preprocess(self, inputs, color_aug):

|

| 295 |

+

"""Resize colour images to the required scales and augment if required

|

| 296 |

+

|

| 297 |

+

We create the color_aug object in advance and apply the same augmentation to all

|

| 298 |

+

images in this item. This ensures that all images input to the pose network receive the

|

| 299 |

+

same augmentation.

|

| 300 |

+

"""

|

| 301 |

+

for k in list(inputs):

|

| 302 |

+

frame = inputs[k]

|

| 303 |

+

if "color" in k:

|

| 304 |

+

n, im, i = k

|

| 305 |

+

for i in range(self.num_scales):

|

| 306 |

+

inputs[(n, im, i)] = self.resize[i](inputs[(n, im, i - 1)])

|

| 307 |

+

|

| 308 |

+

for k in list(inputs):

|

| 309 |

+

f = inputs[k]

|

| 310 |

+

if "color" in k:

|

| 311 |

+

n, im, i = k

|

| 312 |

+

inputs[(n, im, i)] = self.to_tensor(f)

|

| 313 |

+

inputs[(n + "_aug", im, i)] = self.to_tensor(color_aug(f))

|

| 314 |

+

|

| 315 |

+

def __len__(self):

|

| 316 |

+

return len(self.filenames)

|

| 317 |

+

|

| 318 |

+

def __getitem__(self, index):

|

| 319 |

+

"""Returns a single training item from the dataset as a dictionary.

|

| 320 |

+

|

| 321 |

+

Values correspond to torch tensors.

|

| 322 |

+

Keys in the dictionary are either strings or tuples:

|

| 323 |

+

|

| 324 |

+

("color", <frame_id>, <scale>) for raw colour images,

|

| 325 |

+

("color_aug", <frame_id>, <scale>) for augmented colour images,

|

| 326 |

+

("K", scale) or ("inv_K", scale) for camera intrinsics,

|

| 327 |

+

"stereo_T" for camera extrinsics, and

|

| 328 |

+

"depth_gt" for ground truth depth maps.

|

| 329 |

+

|

| 330 |

+

<frame_id> is either:

|

| 331 |

+

an integer (e.g. 0, -1, or 1) representing the temporal step relative to 'index',

|

| 332 |

+

or

|

| 333 |

+

"s" for the opposite image in the stereo pair.

|

| 334 |

+

|

| 335 |

+

<scale> is an integer representing the scale of the image relative to the fullsize image:

|

| 336 |

+

-1 images at native resolution as loaded from disk

|

| 337 |

+

0 images resized to (self.width, self.height )

|

| 338 |

+

1 images resized to (self.width // 2, self.height // 2)

|

| 339 |

+

2 images resized to (self.width // 4, self.height // 4)

|

| 340 |

+

3 images resized to (self.width // 8, self.height // 8)

|

| 341 |

+

"""

|

| 342 |

+

inputs = {}

|

| 343 |

+

|

| 344 |

+

do_color_aug = self.is_train and random.random() > 0.5

|

| 345 |

+

do_flip = self.is_train and random.random() > 0.5

|

| 346 |

+

|

| 347 |

+

line = self.filenames[index].split()

|

| 348 |

+

folder = line[0]

|

| 349 |

+

|

| 350 |

+

if len(line) == 3:

|

| 351 |

+

frame_index = int(line[1])

|

| 352 |

+

else:

|

| 353 |

+

frame_index = 0

|

| 354 |

+

|

| 355 |

+

if len(line) == 3:

|

| 356 |

+

side = line[2]

|

| 357 |

+

else:

|

| 358 |

+

side = None

|

| 359 |

+

|

| 360 |

+

for i in self.frame_idxs:

|

| 361 |

+

if i == "s":

|

| 362 |

+

other_side = {"r": "l", "l": "r"}[side]

|

| 363 |

+

inputs[("color", i, -1)] = self.get_color(folder, frame_index, other_side, do_flip)

|

| 364 |

+

else:

|

| 365 |

+

inputs[("color", i, -1)] = self.get_color(folder, frame_index + i, side, do_flip)

|

| 366 |

+

|

| 367 |

+

# adjusting intrinsics to match each scale in the pyramid

|

| 368 |

+

for scale in range(self.num_scales):

|

| 369 |

+

K = self.K.copy()

|

| 370 |

+

|

| 371 |

+

K[0, :] *= self.width // (2 ** scale)

|

| 372 |

+

K[1, :] *= self.height // (2 ** scale)

|

| 373 |

+

|

| 374 |

+

inv_K = np.linalg.pinv(K)

|

| 375 |

+

|

| 376 |

+

inputs[("K", scale)] = torch.from_numpy(K)

|

| 377 |

+

inputs[("inv_K", scale)] = torch.from_numpy(inv_K)

|

| 378 |

+

|

| 379 |

+

if do_color_aug:

|

| 380 |

+

color_aug = transforms.ColorJitter.get_params(

|

| 381 |

+

self.brightness, self.contrast, self.saturation, self.hue)

|

| 382 |

+

else:

|

| 383 |

+

color_aug = (lambda x: x)

|

| 384 |

+

|

| 385 |

+

self.preprocess(inputs, color_aug)

|

| 386 |

+

|

| 387 |

+

for i in self.frame_idxs:

|

| 388 |

+

del inputs[("color", i, -1)]

|

| 389 |

+

del inputs[("color_aug", i, -1)]

|

| 390 |

+

|

| 391 |

+

if self.load_depth:

|

| 392 |

+

depth_gt = self.get_depth(folder, frame_index, side, do_flip)

|

| 393 |

+

inputs["depth_gt"] = np.expand_dims(depth_gt, 0)

|

| 394 |

+

inputs["depth_gt"] = torch.from_numpy(inputs["depth_gt"].astype(np.float32))

|

| 395 |

+

|

| 396 |

+

if "s" in self.frame_idxs:

|

| 397 |

+

stereo_T = np.eye(4, dtype=np.float32)

|

| 398 |

+

baseline_sign = -1 if do_flip else 1

|

| 399 |

+

side_sign = -1 if side == "l" else 1

|

| 400 |

+

stereo_T[0, 3] = side_sign * baseline_sign * 0.1

|

| 401 |

+

|

| 402 |

+

inputs["stereo_T"] = torch.from_numpy(stereo_T)

|

| 403 |

+

|

| 404 |

+

return inputs

|

| 405 |

+

|

| 406 |

+

def get_color(self, folder, frame_index, side, do_flip):

|

| 407 |

+

raise NotImplementedError

|

| 408 |

+

|

| 409 |

+

def check_depth(self):

|

| 410 |

+

raise NotImplementedError

|

| 411 |

+

|

| 412 |

+

def get_depth(self, folder, frame_index, side, do_flip):

|

| 413 |

+

raise NotImplementedError

|

| 414 |

+

|

| 415 |

+

class KITTIDataset(MonoDataset):

|

| 416 |

+

"""Superclass for different types of KITTI dataset loaders

|

| 417 |

+

"""

|

| 418 |

+

def __init__(self, *args, **kwargs):

|

| 419 |

+

super(KITTIDataset, self).__init__(*args, **kwargs)

|

| 420 |

+

|

| 421 |

+

# NOTE: Make sure your intrinsics matrix is *normalized* by the original image size.

|

| 422 |

+

# To normalize you need to scale the first row by 1 / image_width and the second row

|

| 423 |

+

# by 1 / image_height. Monodepth2 assumes a principal point to be exactly centered.

|

| 424 |

+

# If your principal point is far from the center you might need to disable the horizontal

|

| 425 |

+

# flip augmentation.

|

| 426 |

+

self.K = np.array([[0.58, 0, 0.5, 0],

|

| 427 |

+

[0, 1.92, 0.5, 0],

|

| 428 |

+

[0, 0, 1, 0],

|

| 429 |

+

[0, 0, 0, 1]], dtype=np.float32)

|

| 430 |

+

|

| 431 |

+

self.full_res_shape = (1242, 375)

|

| 432 |

+

self.side_map = {"2": 2, "3": 3, "l": 2, "r": 3}

|

| 433 |

+

|

| 434 |

+

def check_depth(self):

|

| 435 |

+

line = self.filenames[0].split()

|

| 436 |

+

scene_name = line[0]

|

| 437 |

+

frame_index = int(line[1])

|

| 438 |

+

|

| 439 |

+

velo_filename = os.path.join(

|

| 440 |

+

self.data_path,

|

| 441 |

+

scene_name,

|

| 442 |

+

"velodyne_points/data/{:010d}.bin".format(int(frame_index)))

|

| 443 |

+

|

| 444 |

+

return os.path.isfile(velo_filename)

|

| 445 |

+

|

| 446 |

+

def get_color(self, folder, frame_index, side, do_flip):

|

| 447 |

+

color = self.loader(self.get_image_path(folder, frame_index, side))

|

| 448 |

+

|

| 449 |

+

if do_flip:

|

| 450 |

+

color = color.transpose(Image.FLIP_LEFT_RIGHT)

|

| 451 |

+

|

| 452 |

+

return color

|

| 453 |

+

|

| 454 |

+

|