Spaces:

Running

Running

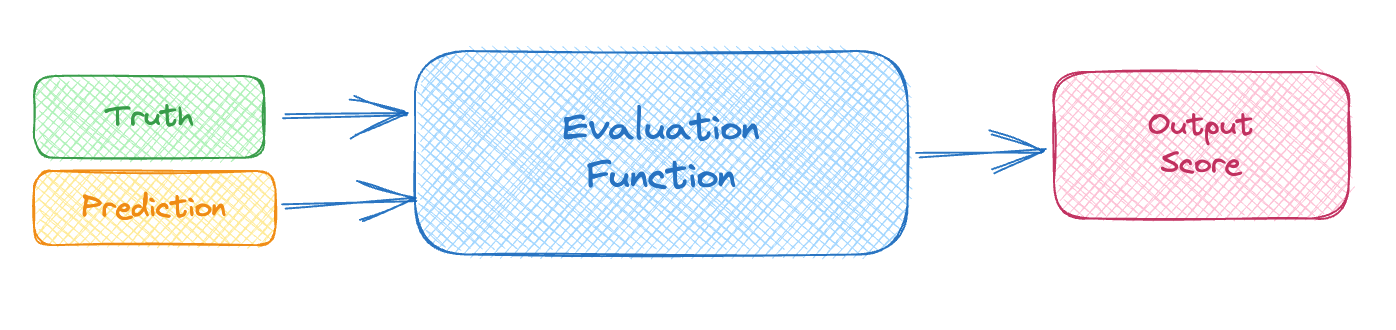

added eval image and explanation

Browse files- README.md +1 -1

- app.py +27 -7

- assets/eval_fnc_viz.png +0 -0

- constants.py +19 -0

- span_dataclass_converters.py +9 -0

README.md

CHANGED

|

@@ -1,7 +1,7 @@

|

|

| 1 |

---

|

| 2 |

title: "Evaluating NER Evaluation Metrics!"

|

| 3 |

emoji: 🤗

|

| 4 |

-

colorFrom:

|

| 5 |

colorTo: yellow

|

| 6 |

sdk: streamlit

|

| 7 |

sdk_version: "1.36.0"

|

|

|

|

| 1 |

---

|

| 2 |

title: "Evaluating NER Evaluation Metrics!"

|

| 3 |

emoji: 🤗

|

| 4 |

+

colorFrom: blue

|

| 5 |

colorTo: yellow

|

| 6 |

sdk: streamlit

|

| 7 |

sdk_version: "1.36.0"

|

app.py

CHANGED

|

@@ -5,7 +5,14 @@ import streamlit as st

|

|

| 5 |

from annotated_text.util import get_annotated_html

|

| 6 |

from streamlit_annotation_tools import text_labeler

|

| 7 |

|

| 8 |

-

from constants import

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 9 |

from evaluation_metrics import EVALUATION_METRICS

|

| 10 |

from predefined_example import EXAMPLES

|

| 11 |

from span_dataclass_converters import (

|

|

@@ -28,15 +35,28 @@ def get_examples_attributes(selected_example):

|

|

| 28 |

|

| 29 |

if __name__ == "__main__":

|

| 30 |

st.set_page_config(layout="wide")

|

| 31 |

-

st.title(

|

| 32 |

|

| 33 |

-

st.write(

|

| 34 |

-

"Evaluation for the NER task requires a ground truth and a prediction that will be evaluated. The ground truth is shown below, add predictions in the next section to compare the evaluation metrics."

|

| 35 |

-

)

|

| 36 |

explanation_tab, comparision_tab = st.tabs(["📙 Explanation", "⚖️ Comparision"])

|

| 37 |

|

| 38 |

with explanation_tab:

|

| 39 |

-

st.write(

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 40 |

|

| 41 |

with comparision_tab:

|

| 42 |

# with st.container():

|

|

@@ -78,7 +98,7 @@ if __name__ == "__main__":

|

|

| 78 |

st.subheader("Adding predictions")

|

| 79 |

st.markdown(PREDICTION_ADDITION_INSTRUCTION)

|

| 80 |

st.write(

|

| 81 |

-

"Note: Only the spans of the selected label name

|

| 82 |

)

|

| 83 |

labels = text_labeler(text, gt_labels)

|

| 84 |

st.json(labels, expanded=False)

|

|

|

|

| 5 |

from annotated_text.util import get_annotated_html

|

| 6 |

from streamlit_annotation_tools import text_labeler

|

| 7 |

|

| 8 |

+

from constants import (

|

| 9 |

+

APP_INTRO,

|

| 10 |

+

APP_TITLE,

|

| 11 |

+

EVAL_FUNCTION_INTRO,

|

| 12 |

+

EVAL_FUNCTION_PROPERTIES,

|

| 13 |

+

NER_TASK_EXPLAINER,

|

| 14 |

+

PREDICTION_ADDITION_INSTRUCTION,

|

| 15 |

+

)

|

| 16 |

from evaluation_metrics import EVALUATION_METRICS

|

| 17 |

from predefined_example import EXAMPLES

|

| 18 |

from span_dataclass_converters import (

|

|

|

|

| 35 |

|

| 36 |

if __name__ == "__main__":

|

| 37 |

st.set_page_config(layout="wide")

|

| 38 |

+

st.title(APP_TITLE)

|

| 39 |

|

| 40 |

+

st.write(APP_INTRO)

|

|

|

|

|

|

|

| 41 |

explanation_tab, comparision_tab = st.tabs(["📙 Explanation", "⚖️ Comparision"])

|

| 42 |

|

| 43 |

with explanation_tab:

|

| 44 |

+

st.write(EVAL_FUNCTION_INTRO)

|

| 45 |

+

st.image("assets/eval_fnc_viz.png", caption="Evaluation Function Flow")

|

| 46 |

+

st.markdown(EVAL_FUNCTION_PROPERTIES)

|

| 47 |

+

st.markdown(NER_TASK_EXPLAINER)

|

| 48 |

+

st.subheader("Evaluation Metrics")

|

| 49 |

+

metric_names = "\n".join(

|

| 50 |

+

[

|

| 51 |

+

f"{index+1}. " + evaluation_metric.name

|

| 52 |

+

for index, evaluation_metric in enumerate(EVALUATION_METRICS)

|

| 53 |

+

]

|

| 54 |

+

)

|

| 55 |

+

st.markdown(

|

| 56 |

+

"The different evaluation metrics we have for the NER task are\n"

|

| 57 |

+

"\n"

|

| 58 |

+

f"{metric_names}"

|

| 59 |

+

)

|

| 60 |

|

| 61 |

with comparision_tab:

|

| 62 |

# with st.container():

|

|

|

|

| 98 |

st.subheader("Adding predictions")

|

| 99 |

st.markdown(PREDICTION_ADDITION_INSTRUCTION)

|

| 100 |

st.write(

|

| 101 |

+

"Note: Only the spans of the selected label name are shown at a given instance. Click on the label to see the corresponding spans. (or view the json below)",

|

| 102 |

)

|

| 103 |

labels = text_labeler(text, gt_labels)

|

| 104 |

st.json(labels, expanded=False)

|

assets/eval_fnc_viz.png

ADDED

|

constants.py

CHANGED

|

@@ -1,3 +1,22 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

PREDICTION_ADDITION_INSTRUCTION = """

|

| 2 |

Add predictions to the list of predictions on which the evaluation metric will be caculated.

|

| 3 |

- Select the entity type/label name and then highlight the span in the text below.

|

|

|

|

| 1 |

+

APP_TITLE = "📐 NER Metrics Comparison ⚖️"

|

| 2 |

+

|

| 3 |

+

APP_INTRO = "The NER task is performed over a piece of text and involves recognition of entities belonging to a desired entity set and classifying them. The various metrics are explained in the explanation tab. Once you go through them, head to the comparision tab to test out some examples."

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

### EXPLANATION TAB ###

|

| 7 |

+

|

| 8 |

+

EVAL_FUNCTION_INTRO = "An evaluation function tells us how well a model is performing. The basic working of any evaluation function involves comparing the model's output with the ground truth to give a score of correctness."

|

| 9 |

+

EVAL_FUNCTION_PROPERTIES = """

|

| 10 |

+

Some basic properties of an evaluation function are -

|

| 11 |

+

1. Give an output score equivalent to the upper bound when the prediction is completely correct(in some tasks, multiple variations of a predictions can be considered correct)

|

| 12 |

+

2. Give an output score equivalent to the lower bound when the prediction is completely wrong.

|

| 13 |

+

3. GIve an output score between upper and lower bound in other cases, corresponding to the degree of correctness.

|

| 14 |

+

"""

|

| 15 |

+

NER_TASK_EXPLAINER = """

|

| 16 |

+

The output of the NER task can be represented in either token format or span format.

|

| 17 |

+

"""

|

| 18 |

+

### COMPARISION TAB ###

|

| 19 |

+

|

| 20 |

PREDICTION_ADDITION_INSTRUCTION = """

|

| 21 |

Add predictions to the list of predictions on which the evaluation metric will be caculated.

|

| 22 |

- Select the entity type/label name and then highlight the span in the text below.

|

span_dataclass_converters.py

CHANGED

|

@@ -1,3 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

def get_ner_spans_from_annotations(annotated_labels):

|

| 2 |

spans = []

|

| 3 |

for entity_type, spans_list in annotated_labels.items():

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

There are 4 data formats for spans

|

| 3 |

+

1. annotations - this is what we obtain from the text_annotator, the format can be seen in the predefined_examples, gt_labels

|

| 4 |

+

2. higlight_spans - this is the format used by the highlighter to return the highlighted html text. This is a list of string/tuples("string", "label", color)

|

| 5 |

+

3. ner_spans - this is the standard format used for representing ner_spans, it is a dict of {"start":int, "end":int, "label":str, "span_text":str}

|

| 6 |

+

4. Token level output - this is delt with in the token_level_output file, this is either a list of tuples with [(token, label)] or just a list of [label, label]

|

| 7 |

+

"""

|

| 8 |

+

|

| 9 |

+

|

| 10 |

def get_ner_spans_from_annotations(annotated_labels):

|

| 11 |

spans = []

|

| 12 |

for entity_type, spans_list in annotated_labels.items():

|