Spaces:

Runtime error

Runtime error

wmpscc

commited on

Commit

·

7d1312d

1

Parent(s):

6aa2a6e

add

Browse files- app.py +56 -0

- configs.py +9 -0

- data/fairface_gender_angle.csv +0 -0

- img/demo.png +0 -0

- img/pic_top.jpg +0 -0

- models/__init__.py +0 -0

- models/__pycache__/__init__.cpython-39.pyc +0 -0

- models/encoders/__init__.py +0 -0

- models/encoders/helpers.py +140 -0

- models/encoders/model_irse.py +84 -0

- models/encoders/psp_encoders.py +236 -0

- models/stylegan2/__init__.py +0 -0

- models/stylegan2/__pycache__/__init__.cpython-39.pyc +0 -0

- models/stylegan2/__pycache__/model.cpython-39.pyc +0 -0

- models/stylegan2/model.py +674 -0

- models/stylegan2/op/__init__.py +2 -0

- models/stylegan2/op/__pycache__/__init__.cpython-39.pyc +0 -0

- models/stylegan2/op/__pycache__/fused_act.cpython-39.pyc +0 -0

- models/stylegan2/op/fused_act.py +86 -0

- models/stylegan2/op/fused_bias_act.cpp +21 -0

- models/stylegan2/op/fused_bias_act_kernel.cu +99 -0

- models/stylegan2/op/upfirdn2d.cpp +23 -0

- models/stylegan2/op/upfirdn2d.py +186 -0

- models/stylegan2/op/upfirdn2d_kernel.cu +272 -0

- models/stylegene/__init__.py +0 -0

- models/stylegene/__pycache__/__init__.cpython-39.pyc +0 -0

- models/stylegene/__pycache__/api.cpython-39.pyc +0 -0

- models/stylegene/api.py +94 -0

- models/stylegene/data_util.py +36 -0

- models/stylegene/fair_face_model.py +61 -0

- models/stylegene/gene_crossover_mutation.py +64 -0

- models/stylegene/gene_pool.py +42 -0

- models/stylegene/model.py +209 -0

- models/stylegene/util.py +30 -0

- preprocess/__init__.py +0 -0

- preprocess/align_images.py +32 -0

- preprocess/face_alignment.py +87 -0

- preprocess/landmarks_detector.py +24 -0

- requirements.txt +19 -0

app.py

ADDED

|

@@ -0,0 +1,56 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

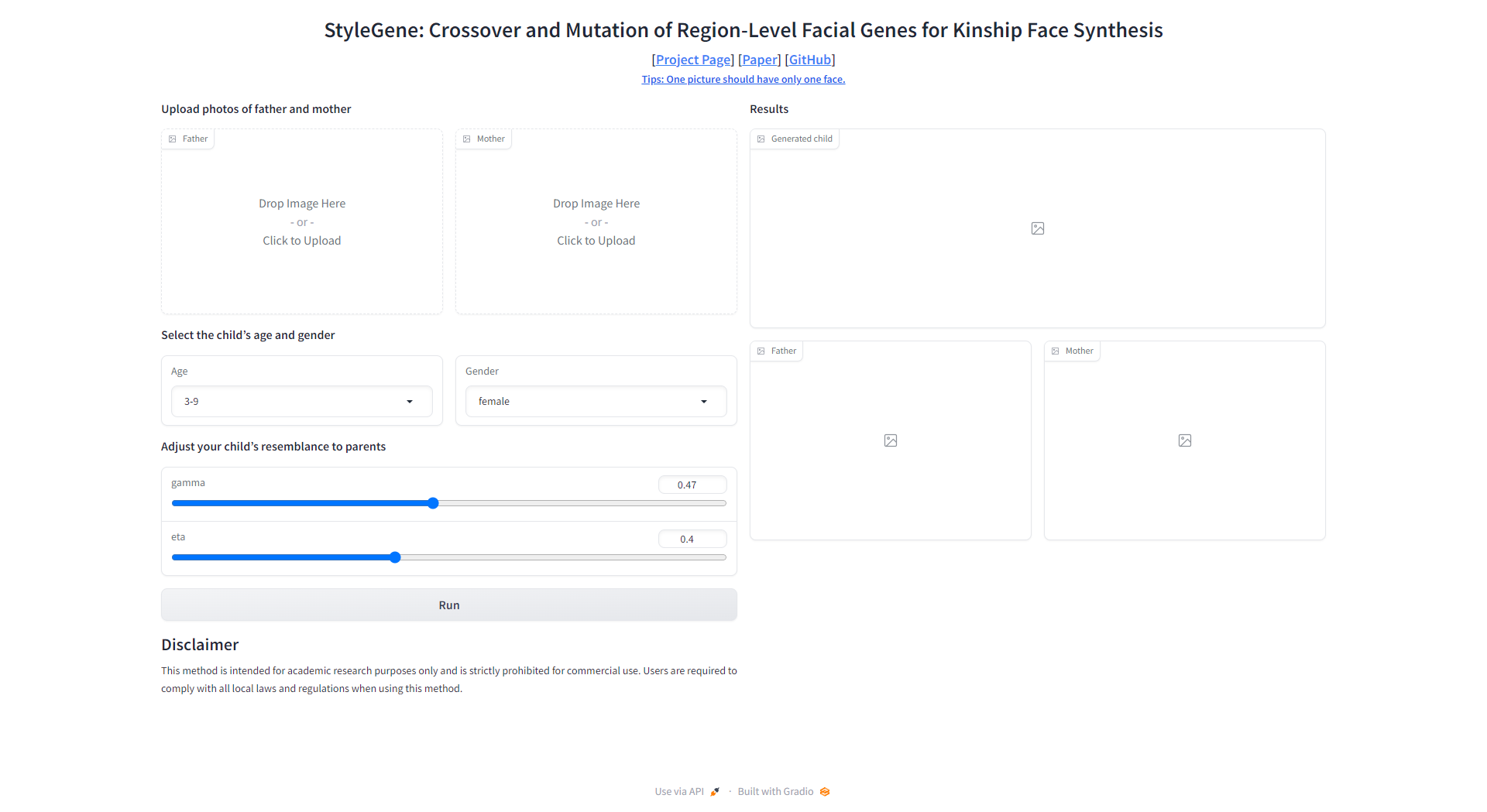

import gradio as gr

|

| 2 |

+

|

| 3 |

+

#from models.stylegene.api import synthesize_descendant

|

| 4 |

+

|

| 5 |

+

description = """<p style="text-align: center; font-weight: bold;">

|

| 6 |

+

<span style="font-size: 28px">StyleGene: Crossover and Mutation of Region-Level Facial Genes for Kinship Face Synthesis</span>

|

| 7 |

+

<br>

|

| 8 |

+

<span style="font-size: 18px" id="paper-info">

|

| 9 |

+

[<a href="https://wmpscc.github.io/stylegene/" target="_blank">Project Page</a>]

|

| 10 |

+

[<a href="https://openaccess.thecvf.com/content/CVPR2023/papers/Li_StyleGene_Crossover_and_Mutation_of_Region-Level_Facial_Genes_for_Kinship_CVPR_2023_paper.pdf" target="_blank">Paper</a>]

|

| 11 |

+

[<a href="https://github.com/CVI-SZU/StyleGene" target="_blank">GitHub</a>]

|

| 12 |

+

</span>

|

| 13 |

+

<br>

|

| 14 |

+

<a> Tips: One picture should have only one face.</a>

|

| 15 |

+

</p>"""

|

| 16 |

+

|

| 17 |

+

block = gr.Blocks()

|

| 18 |

+

with block:

|

| 19 |

+

gr.HTML(description)

|

| 20 |

+

with gr.Row():

|

| 21 |

+

with gr.Column():

|

| 22 |

+

gr.Markdown("### Upload photos of father and mother")

|

| 23 |

+

with gr.Row():

|

| 24 |

+

img1 = gr.Image(label="Father")

|

| 25 |

+

img2 = gr.Image(label="Mother")

|

| 26 |

+

gr.Markdown("### Select the child's age and gender")

|

| 27 |

+

with gr.Row():

|

| 28 |

+

age = gr.Dropdown(label="Age",

|

| 29 |

+

choices=["0-2", "3-9", "10-19", "20-29", "30-39",

|

| 30 |

+

"40-49", "50-59", "60-69", "70+"], value="3-9")

|

| 31 |

+

gender = gr.Dropdown(label="Gender", choices=["male", "female"], value="female")

|

| 32 |

+

gr.Markdown("### Adjust your child's resemblance to parents")

|

| 33 |

+

bar1 = gr.Slider(label="gamma", minimum=0, maximum=1, value=0.47)

|

| 34 |

+

bar2 = gr.Slider(label="eta", minimum=0, maximum=1, value=0.4)

|

| 35 |

+

bt_run = gr.Button("Run")

|

| 36 |

+

gr.Markdown("""## Disclaimer

|

| 37 |

+

This method is intended for academic research purposes only and is strictly prohibited for commercial use.

|

| 38 |

+

Users are required to comply with all local laws and regulations when using this method.""")

|

| 39 |

+

|

| 40 |

+

with gr.Column():

|

| 41 |

+

gr.Markdown("### Results")

|

| 42 |

+

img3 = gr.Image(label="Generated child")

|

| 43 |

+

with gr.Row():

|

| 44 |

+

img1_align = gr.Image(label="Father")

|

| 45 |

+

img2_align = gr.Image(label="Mother")

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

def run(father, mother, gamma, eta, age, gender):

|

| 49 |

+

attributes = {'age': age, 'gender': gender, 'gamma': float(gamma), 'eta': float(eta)}

|

| 50 |

+

img_F, img_M, img_C = synthesize_descendant(father, mother, attributes)

|

| 51 |

+

return img_F, img_M, img_C

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

bt_run.click(run, [img1, img2, bar1, bar2, age, gender], [img1_align, img2_align, img3])

|

| 55 |

+

|

| 56 |

+

block.launch(show_error=True)

|

configs.py

ADDED

|

@@ -0,0 +1,9 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

path_ckpt_landmark68 = "checkpoints/shape_predictor_68_face_landmarks.dat.bz2"

|

| 2 |

+

path_ckpt_e4e = "/home/cvi_demo/PythonProject/StyleGene/checkpoints/e4e_ffhq_encode.pt"

|

| 3 |

+

path_ckpt_stylegan2 = '/home/cvi_demo/PythonProject/StyleGene/checkpoints/stylegan2-ffhq-config-f.pt'

|

| 4 |

+

path_ckpt_stylegene = "/home/cvi_demo/PythonProject/StyleGene/checkpoints/stylegene_N18.ckpt"

|

| 5 |

+

path_ckpt_fairface = '/home/cvi_demo/PythonProject/StyleGene/checkpoints/res34_fair_align_multi_7_20190809.pt'

|

| 6 |

+

path_ckpt_genepool = "/home/cvi_demo/PythonProject/StyleGene/checkpoints/geneFactorPool.pkl"

|

| 7 |

+

|

| 8 |

+

path_csv_ffhq_attritube = 'data/fairface_gender_angle.csv'

|

| 9 |

+

path_dataset_ffhq = None

|

data/fairface_gender_angle.csv

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

img/demo.png

ADDED

|

img/pic_top.jpg

ADDED

|

models/__init__.py

ADDED

|

File without changes

|

models/__pycache__/__init__.cpython-39.pyc

ADDED

|

Binary file (135 Bytes). View file

|

|

|

models/encoders/__init__.py

ADDED

|

File without changes

|

models/encoders/helpers.py

ADDED

|

@@ -0,0 +1,140 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from collections import namedtuple

|

| 2 |

+

import torch

|

| 3 |

+

import torch.nn.functional as F

|

| 4 |

+

from torch.nn import Conv2d, BatchNorm2d, PReLU, ReLU, Sigmoid, MaxPool2d, AdaptiveAvgPool2d, Sequential, Module

|

| 5 |

+

|

| 6 |

+

"""

|

| 7 |

+

ArcFace implementation from [TreB1eN](https://github.com/TreB1eN/InsightFace_Pytorch)

|

| 8 |

+

"""

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

class Flatten(Module):

|

| 12 |

+

def forward(self, input):

|

| 13 |

+

return input.view(input.size(0), -1)

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

def l2_norm(input, axis=1):

|

| 17 |

+

norm = torch.norm(input, 2, axis, True)

|

| 18 |

+

output = torch.div(input, norm)

|

| 19 |

+

return output

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

class Bottleneck(namedtuple('Block', ['in_channel', 'depth', 'stride'])):

|

| 23 |

+

""" A named tuple describing a ResNet block. """

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def get_block(in_channel, depth, num_units, stride=2):

|

| 27 |

+

return [Bottleneck(in_channel, depth, stride)] + [Bottleneck(depth, depth, 1) for i in range(num_units - 1)]

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

def get_blocks(num_layers):

|

| 31 |

+

if num_layers == 50:

|

| 32 |

+

blocks = [

|

| 33 |

+

get_block(in_channel=64, depth=64, num_units=3),

|

| 34 |

+

get_block(in_channel=64, depth=128, num_units=4),

|

| 35 |

+

get_block(in_channel=128, depth=256, num_units=14),

|

| 36 |

+

get_block(in_channel=256, depth=512, num_units=3)

|

| 37 |

+

]

|

| 38 |

+

elif num_layers == 100:

|

| 39 |

+

blocks = [

|

| 40 |

+

get_block(in_channel=64, depth=64, num_units=3),

|

| 41 |

+

get_block(in_channel=64, depth=128, num_units=13),

|

| 42 |

+

get_block(in_channel=128, depth=256, num_units=30),

|

| 43 |

+

get_block(in_channel=256, depth=512, num_units=3)

|

| 44 |

+

]

|

| 45 |

+

elif num_layers == 152:

|

| 46 |

+

blocks = [

|

| 47 |

+

get_block(in_channel=64, depth=64, num_units=3),

|

| 48 |

+

get_block(in_channel=64, depth=128, num_units=8),

|

| 49 |

+

get_block(in_channel=128, depth=256, num_units=36),

|

| 50 |

+

get_block(in_channel=256, depth=512, num_units=3)

|

| 51 |

+

]

|

| 52 |

+

else:

|

| 53 |

+

raise ValueError("Invalid number of layers: {}. Must be one of [50, 100, 152]".format(num_layers))

|

| 54 |

+

return blocks

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

class SEModule(Module):

|

| 58 |

+

def __init__(self, channels, reduction):

|

| 59 |

+

super(SEModule, self).__init__()

|

| 60 |

+

self.avg_pool = AdaptiveAvgPool2d(1)

|

| 61 |

+

self.fc1 = Conv2d(channels, channels // reduction, kernel_size=1, padding=0, bias=False)

|

| 62 |

+

self.relu = ReLU(inplace=True)

|

| 63 |

+

self.fc2 = Conv2d(channels // reduction, channels, kernel_size=1, padding=0, bias=False)

|

| 64 |

+

self.sigmoid = Sigmoid()

|

| 65 |

+

|

| 66 |

+

def forward(self, x):

|

| 67 |

+

module_input = x

|

| 68 |

+

x = self.avg_pool(x)

|

| 69 |

+

x = self.fc1(x)

|

| 70 |

+

x = self.relu(x)

|

| 71 |

+

x = self.fc2(x)

|

| 72 |

+

x = self.sigmoid(x)

|

| 73 |

+

return module_input * x

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

class bottleneck_IR(Module):

|

| 77 |

+

def __init__(self, in_channel, depth, stride):

|

| 78 |

+

super(bottleneck_IR, self).__init__()

|

| 79 |

+

if in_channel == depth:

|

| 80 |

+

self.shortcut_layer = MaxPool2d(1, stride)

|

| 81 |

+

else:

|

| 82 |

+

self.shortcut_layer = Sequential(

|

| 83 |

+

Conv2d(in_channel, depth, (1, 1), stride, bias=False),

|

| 84 |

+

BatchNorm2d(depth)

|

| 85 |

+

)

|

| 86 |

+

self.res_layer = Sequential(

|

| 87 |

+

BatchNorm2d(in_channel),

|

| 88 |

+

Conv2d(in_channel, depth, (3, 3), (1, 1), 1, bias=False), PReLU(depth),

|

| 89 |

+

Conv2d(depth, depth, (3, 3), stride, 1, bias=False), BatchNorm2d(depth)

|

| 90 |

+

)

|

| 91 |

+

|

| 92 |

+

def forward(self, x):

|

| 93 |

+

shortcut = self.shortcut_layer(x)

|

| 94 |

+

res = self.res_layer(x)

|

| 95 |

+

return res + shortcut

|

| 96 |

+

|

| 97 |

+

|

| 98 |

+

class bottleneck_IR_SE(Module):

|

| 99 |

+

def __init__(self, in_channel, depth, stride):

|

| 100 |

+

super(bottleneck_IR_SE, self).__init__()

|

| 101 |

+

if in_channel == depth:

|

| 102 |

+

self.shortcut_layer = MaxPool2d(1, stride)

|

| 103 |

+

else:

|

| 104 |

+

self.shortcut_layer = Sequential(

|

| 105 |

+

Conv2d(in_channel, depth, (1, 1), stride, bias=False),

|

| 106 |

+

BatchNorm2d(depth)

|

| 107 |

+

)

|

| 108 |

+

self.res_layer = Sequential(

|

| 109 |

+

BatchNorm2d(in_channel),

|

| 110 |

+

Conv2d(in_channel, depth, (3, 3), (1, 1), 1, bias=False),

|

| 111 |

+

PReLU(depth),

|

| 112 |

+

Conv2d(depth, depth, (3, 3), stride, 1, bias=False),

|

| 113 |

+

BatchNorm2d(depth),

|

| 114 |

+

SEModule(depth, 16)

|

| 115 |

+

)

|

| 116 |

+

|

| 117 |

+

def forward(self, x):

|

| 118 |

+

shortcut = self.shortcut_layer(x)

|

| 119 |

+

res = self.res_layer(x)

|

| 120 |

+

return res + shortcut

|

| 121 |

+

|

| 122 |

+

|

| 123 |

+

def _upsample_add(x, y):

|

| 124 |

+

"""Upsample and add two feature maps.

|

| 125 |

+

Args:

|

| 126 |

+

x: (Variable) top feature map to be upsampled.

|

| 127 |

+

y: (Variable) lateral feature map.

|

| 128 |

+

Returns:

|

| 129 |

+

(Variable) added feature map.

|

| 130 |

+

Note in PyTorch, when input size is odd, the upsampled feature map

|

| 131 |

+

with `F.upsample(..., scale_factor=2, mode='nearest')`

|

| 132 |

+

maybe not equal to the lateral feature map size.

|

| 133 |

+

e.g.

|

| 134 |

+

original input size: [N,_,15,15] ->

|

| 135 |

+

conv2d feature map size: [N,_,8,8] ->

|

| 136 |

+

upsampled feature map size: [N,_,16,16]

|

| 137 |

+

So we choose bilinear upsample which supports arbitrary output sizes.

|

| 138 |

+

"""

|

| 139 |

+

_, _, H, W = y.size()

|

| 140 |

+

return F.interpolate(x, size=(H, W), mode='bilinear', align_corners=True) + y

|

models/encoders/model_irse.py

ADDED

|

@@ -0,0 +1,84 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from torch.nn import Linear, Conv2d, BatchNorm1d, BatchNorm2d, PReLU, Dropout, Sequential, Module

|

| 2 |

+

from models.encoders.helpers import get_blocks, Flatten, bottleneck_IR, bottleneck_IR_SE, l2_norm

|

| 3 |

+

|

| 4 |

+

"""

|

| 5 |

+

Modified Backbone implementation from [TreB1eN](https://github.com/TreB1eN/InsightFace_Pytorch)

|

| 6 |

+

"""

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

class Backbone(Module):

|

| 10 |

+

def __init__(self, input_size, num_layers, mode='ir', drop_ratio=0.4, affine=True):

|

| 11 |

+

super(Backbone, self).__init__()

|

| 12 |

+

assert input_size in [112, 224], "input_size should be 112 or 224"

|

| 13 |

+

assert num_layers in [50, 100, 152], "num_layers should be 50, 100 or 152"

|

| 14 |

+

assert mode in ['ir', 'ir_se'], "mode should be ir or ir_se"

|

| 15 |

+

blocks = get_blocks(num_layers)

|

| 16 |

+

if mode == 'ir':

|

| 17 |

+

unit_module = bottleneck_IR

|

| 18 |

+

elif mode == 'ir_se':

|

| 19 |

+

unit_module = bottleneck_IR_SE

|

| 20 |

+

self.input_layer = Sequential(Conv2d(3, 64, (3, 3), 1, 1, bias=False),

|

| 21 |

+

BatchNorm2d(64),

|

| 22 |

+

PReLU(64))

|

| 23 |

+

if input_size == 112:

|

| 24 |

+

self.output_layer = Sequential(BatchNorm2d(512),

|

| 25 |

+

Dropout(drop_ratio),

|

| 26 |

+

Flatten(),

|

| 27 |

+

Linear(512 * 7 * 7, 512),

|

| 28 |

+

BatchNorm1d(512, affine=affine))

|

| 29 |

+

else:

|

| 30 |

+

self.output_layer = Sequential(BatchNorm2d(512),

|

| 31 |

+

Dropout(drop_ratio),

|

| 32 |

+

Flatten(),

|

| 33 |

+

Linear(512 * 14 * 14, 512),

|

| 34 |

+

BatchNorm1d(512, affine=affine))

|

| 35 |

+

|

| 36 |

+

modules = []

|

| 37 |

+

for block in blocks:

|

| 38 |

+

for bottleneck in block:

|

| 39 |

+

modules.append(unit_module(bottleneck.in_channel,

|

| 40 |

+

bottleneck.depth,

|

| 41 |

+

bottleneck.stride))

|

| 42 |

+

self.body = Sequential(*modules)

|

| 43 |

+

|

| 44 |

+

def forward(self, x):

|

| 45 |

+

x = self.input_layer(x)

|

| 46 |

+

x = self.body(x)

|

| 47 |

+

x = self.output_layer(x)

|

| 48 |

+

return l2_norm(x)

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

def IR_50(input_size):

|

| 52 |

+

"""Constructs a ir-50 model."""

|

| 53 |

+

model = Backbone(input_size, num_layers=50, mode='ir', drop_ratio=0.4, affine=False)

|

| 54 |

+

return model

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

def IR_101(input_size):

|

| 58 |

+

"""Constructs a ir-101 model."""

|

| 59 |

+

model = Backbone(input_size, num_layers=100, mode='ir', drop_ratio=0.4, affine=False)

|

| 60 |

+

return model

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

def IR_152(input_size):

|

| 64 |

+

"""Constructs a ir-152 model."""

|

| 65 |

+

model = Backbone(input_size, num_layers=152, mode='ir', drop_ratio=0.4, affine=False)

|

| 66 |

+

return model

|

| 67 |

+

|

| 68 |

+

|

| 69 |

+

def IR_SE_50(input_size):

|

| 70 |

+

"""Constructs a ir_se-50 model."""

|

| 71 |

+

model = Backbone(input_size, num_layers=50, mode='ir_se', drop_ratio=0.4, affine=False)

|

| 72 |

+

return model

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

def IR_SE_101(input_size):

|

| 76 |

+

"""Constructs a ir_se-101 model."""

|

| 77 |

+

model = Backbone(input_size, num_layers=100, mode='ir_se', drop_ratio=0.4, affine=False)

|

| 78 |

+

return model

|

| 79 |

+

|

| 80 |

+

|

| 81 |

+

def IR_SE_152(input_size):

|

| 82 |

+

"""Constructs a ir_se-152 model."""

|

| 83 |

+

model = Backbone(input_size, num_layers=152, mode='ir_se', drop_ratio=0.4, affine=False)

|

| 84 |

+

return model

|

models/encoders/psp_encoders.py

ADDED

|

@@ -0,0 +1,236 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from enum import Enum

|

| 2 |

+

import math

|

| 3 |

+

import numpy as np

|

| 4 |

+

import torch

|

| 5 |

+

from torch import nn

|

| 6 |

+

from torch.nn import Conv2d, BatchNorm2d, PReLU, Sequential, Module

|

| 7 |

+

|

| 8 |

+

from models.encoders.helpers import get_blocks, bottleneck_IR, bottleneck_IR_SE, _upsample_add

|

| 9 |

+

from models.stylegan2.model import EqualLinear

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

# Adapted from https://github.com/omertov/encoder4editing

|

| 13 |

+

class ProgressiveStage(Enum):

|

| 14 |

+

WTraining = 0

|

| 15 |

+

Delta1Training = 1

|

| 16 |

+

Delta2Training = 2

|

| 17 |

+

Delta3Training = 3

|

| 18 |

+

Delta4Training = 4

|

| 19 |

+

Delta5Training = 5

|

| 20 |

+

Delta6Training = 6

|

| 21 |

+

Delta7Training = 7

|

| 22 |

+

Delta8Training = 8

|

| 23 |

+

Delta9Training = 9

|

| 24 |

+

Delta10Training = 10

|

| 25 |

+

Delta11Training = 11

|

| 26 |

+

Delta12Training = 12

|

| 27 |

+

Delta13Training = 13

|

| 28 |

+

Delta14Training = 14

|

| 29 |

+

Delta15Training = 15

|

| 30 |

+

Delta16Training = 16

|

| 31 |

+

Delta17Training = 17

|

| 32 |

+

Inference = 18

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

class GradualStyleBlock(Module):

|

| 36 |

+

def __init__(self, in_c, out_c, spatial):

|

| 37 |

+

super(GradualStyleBlock, self).__init__()

|

| 38 |

+

self.out_c = out_c

|

| 39 |

+

self.spatial = spatial

|

| 40 |

+

num_pools = int(np.log2(spatial))

|

| 41 |

+

modules = []

|

| 42 |

+

modules += [Conv2d(in_c, out_c, kernel_size=3, stride=2, padding=1),

|

| 43 |

+

nn.LeakyReLU()]

|

| 44 |

+

for i in range(num_pools - 1):

|

| 45 |

+

modules += [

|

| 46 |

+

Conv2d(out_c, out_c, kernel_size=3, stride=2, padding=1),

|

| 47 |

+

nn.LeakyReLU()

|

| 48 |

+

]

|

| 49 |

+

self.convs = nn.Sequential(*modules)

|

| 50 |

+

self.linear = EqualLinear(out_c, out_c, lr_mul=1)

|

| 51 |

+

|

| 52 |

+

def forward(self, x):

|

| 53 |

+

x = self.convs(x)

|

| 54 |

+

x = x.view(-1, self.out_c)

|

| 55 |

+

x = self.linear(x)

|

| 56 |

+

return x

|

| 57 |

+

|

| 58 |

+

|

| 59 |

+

class GradualStyleEncoder(Module):

|

| 60 |

+

def __init__(self, num_layers, mode='ir', opts=None):

|

| 61 |

+

super(GradualStyleEncoder, self).__init__()

|

| 62 |

+

assert num_layers in [50, 100, 152], 'num_layers should be 50,100, or 152'

|

| 63 |

+

assert mode in ['ir', 'ir_se'], 'mode should be ir or ir_se'

|

| 64 |

+

blocks = get_blocks(num_layers)

|

| 65 |

+

if mode == 'ir':

|

| 66 |

+

unit_module = bottleneck_IR

|

| 67 |

+

elif mode == 'ir_se':

|

| 68 |

+

unit_module = bottleneck_IR_SE

|

| 69 |

+

self.input_layer = Sequential(Conv2d(3, 64, (3, 3), 1, 1, bias=False),

|

| 70 |

+

BatchNorm2d(64),

|

| 71 |

+

PReLU(64))

|

| 72 |

+

modules = []

|

| 73 |

+

for block in blocks:

|

| 74 |

+

for bottleneck in block:

|

| 75 |

+

modules.append(unit_module(bottleneck.in_channel,

|

| 76 |

+

bottleneck.depth,

|

| 77 |

+

bottleneck.stride))

|

| 78 |

+

self.body = Sequential(*modules)

|

| 79 |

+

|

| 80 |

+

self.styles = nn.ModuleList()

|

| 81 |

+

log_size = int(math.log(opts.stylegan_size, 2))

|

| 82 |

+

self.style_count = 2 * log_size - 2

|

| 83 |

+

self.coarse_ind = 3

|

| 84 |

+

self.middle_ind = 7

|

| 85 |

+

for i in range(self.style_count):

|

| 86 |

+

if i < self.coarse_ind:

|

| 87 |

+

style = GradualStyleBlock(512, 512, 16)

|

| 88 |

+

elif i < self.middle_ind:

|

| 89 |

+

style = GradualStyleBlock(512, 512, 32)

|

| 90 |

+

else:

|

| 91 |

+

style = GradualStyleBlock(512, 512, 64)

|

| 92 |

+

self.styles.append(style)

|

| 93 |

+

self.latlayer1 = nn.Conv2d(256, 512, kernel_size=1, stride=1, padding=0)

|

| 94 |

+

self.latlayer2 = nn.Conv2d(128, 512, kernel_size=1, stride=1, padding=0)

|

| 95 |

+

|

| 96 |

+

def forward(self, x):

|

| 97 |

+

x = self.input_layer(x)

|

| 98 |

+

|

| 99 |

+

latents = []

|

| 100 |

+

modulelist = list(self.body._modules.values())

|

| 101 |

+

for i, l in enumerate(modulelist):

|

| 102 |

+

x = l(x)

|

| 103 |

+

if i == 6:

|

| 104 |

+

c1 = x

|

| 105 |

+

elif i == 20:

|

| 106 |

+

c2 = x

|

| 107 |

+

elif i == 23:

|

| 108 |

+

c3 = x

|

| 109 |

+

|

| 110 |

+

for j in range(self.coarse_ind):

|

| 111 |

+

latents.append(self.styles[j](c3))

|

| 112 |

+

|

| 113 |

+

p2 = _upsample_add(c3, self.latlayer1(c2))

|

| 114 |

+

for j in range(self.coarse_ind, self.middle_ind):

|

| 115 |

+

latents.append(self.styles[j](p2))

|

| 116 |

+

|

| 117 |

+

p1 = _upsample_add(p2, self.latlayer2(c1))

|

| 118 |

+

for j in range(self.middle_ind, self.style_count):

|

| 119 |

+

latents.append(self.styles[j](p1))

|

| 120 |

+

|

| 121 |

+

out = torch.stack(latents, dim=1)

|

| 122 |

+

return out

|

| 123 |

+

|

| 124 |

+

|

| 125 |

+

class Encoder4Editing(Module):

|

| 126 |

+

def __init__(self, num_layers, mode='ir', stylegan_size=1024):

|

| 127 |

+

super(Encoder4Editing, self).__init__()

|

| 128 |

+

assert num_layers in [50, 100, 152], 'num_layers should be 50,100, or 152'

|

| 129 |

+

assert mode in ['ir', 'ir_se'], 'mode should be ir or ir_se'

|

| 130 |

+

blocks = get_blocks(num_layers)

|

| 131 |

+

if mode == 'ir':

|

| 132 |

+

unit_module = bottleneck_IR

|

| 133 |

+

elif mode == 'ir_se':

|

| 134 |

+

unit_module = bottleneck_IR_SE

|

| 135 |

+

self.input_layer = Sequential(Conv2d(3, 64, (3, 3), 1, 1, bias=False),

|

| 136 |

+

BatchNorm2d(64),

|

| 137 |

+

PReLU(64))

|

| 138 |

+

modules = []

|

| 139 |

+

for block in blocks:

|

| 140 |

+

for bottleneck in block:

|

| 141 |

+

modules.append(unit_module(bottleneck.in_channel,

|

| 142 |

+

bottleneck.depth,

|

| 143 |

+

bottleneck.stride))

|

| 144 |

+

self.body = Sequential(*modules)

|

| 145 |

+

|

| 146 |

+

self.styles = nn.ModuleList()

|

| 147 |

+

log_size = int(math.log(stylegan_size, 2))

|

| 148 |

+

self.style_count = 2 * log_size - 2

|

| 149 |

+

self.coarse_ind = 3

|

| 150 |

+

self.middle_ind = 7

|

| 151 |

+

|

| 152 |

+

for i in range(self.style_count):

|

| 153 |

+

if i < self.coarse_ind:

|

| 154 |

+

style = GradualStyleBlock(512, 512, 16)

|

| 155 |

+

elif i < self.middle_ind:

|

| 156 |

+

style = GradualStyleBlock(512, 512, 32)

|

| 157 |

+

else:

|

| 158 |

+

style = GradualStyleBlock(512, 512, 64)

|

| 159 |

+

self.styles.append(style)

|

| 160 |

+

|

| 161 |

+

self.latlayer1 = nn.Conv2d(256, 512, kernel_size=1, stride=1, padding=0)

|

| 162 |

+

self.latlayer2 = nn.Conv2d(128, 512, kernel_size=1, stride=1, padding=0)

|

| 163 |

+

|

| 164 |

+

self.progressive_stage = ProgressiveStage.Inference

|

| 165 |

+

|

| 166 |

+

def get_deltas_starting_dimensions(self):

|

| 167 |

+

''' Get a list of the initial dimension of every delta from which it is applied '''

|

| 168 |

+

return list(range(self.style_count)) # Each dimension has a delta applied to it

|

| 169 |

+

|

| 170 |

+

def set_progressive_stage(self, new_stage: ProgressiveStage):

|

| 171 |

+

self.progressive_stage = new_stage

|

| 172 |

+

print('Changed progressive stage to: ', new_stage)

|

| 173 |

+

|

| 174 |

+

def forward(self, x):

|

| 175 |

+

x = self.input_layer(x)

|

| 176 |

+

|

| 177 |

+

modulelist = list(self.body._modules.values())

|

| 178 |

+

for i, l in enumerate(modulelist):

|

| 179 |

+

x = l(x)

|

| 180 |

+

if i == 6:

|

| 181 |

+

c1 = x

|

| 182 |

+

elif i == 20:

|

| 183 |

+

c2 = x

|

| 184 |

+

elif i == 23:

|

| 185 |

+

c3 = x

|

| 186 |

+

|

| 187 |

+

# Infer main W and duplicate it

|

| 188 |

+

w0 = self.styles[0](c3)

|

| 189 |

+

w = w0.repeat(self.style_count, 1, 1).permute(1, 0, 2)

|

| 190 |

+

stage = self.progressive_stage.value

|

| 191 |

+

features = c3

|

| 192 |

+

for i in range(1, min(stage + 1, self.style_count)): # Infer additional deltas

|

| 193 |

+

if i == self.coarse_ind:

|

| 194 |

+

p2 = _upsample_add(c3, self.latlayer1(c2)) # FPN's middle features

|

| 195 |

+

features = p2

|

| 196 |

+

elif i == self.middle_ind:

|

| 197 |

+

p1 = _upsample_add(p2, self.latlayer2(c1)) # FPN's fine features

|

| 198 |

+

features = p1

|

| 199 |

+

delta_i = self.styles[i](features)

|

| 200 |

+

w[:, i] += delta_i

|

| 201 |

+

return w

|

| 202 |

+

|

| 203 |

+

|

| 204 |

+

class BackboneEncoderUsingLastLayerIntoW(Module):

|

| 205 |

+

def __init__(self, num_layers, mode='ir', opts=None):

|

| 206 |

+

super(BackboneEncoderUsingLastLayerIntoW, self).__init__()

|

| 207 |

+

print('Using BackboneEncoderUsingLastLayerIntoW')

|

| 208 |

+

assert num_layers in [50, 100, 152], 'num_layers should be 50,100, or 152'

|

| 209 |

+

assert mode in ['ir', 'ir_se'], 'mode should be ir or ir_se'

|

| 210 |

+

blocks = get_blocks(num_layers)

|

| 211 |

+

if mode == 'ir':

|

| 212 |

+

unit_module = bottleneck_IR

|

| 213 |

+

elif mode == 'ir_se':

|

| 214 |

+

unit_module = bottleneck_IR_SE

|

| 215 |

+

self.input_layer = Sequential(Conv2d(3, 64, (3, 3), 1, 1, bias=False),

|

| 216 |

+

BatchNorm2d(64),

|

| 217 |

+

PReLU(64))

|

| 218 |

+

self.output_pool = torch.nn.AdaptiveAvgPool2d((1, 1))

|

| 219 |

+

self.linear = EqualLinear(512, 512, lr_mul=1)

|

| 220 |

+

modules = []

|

| 221 |

+

for block in blocks:

|

| 222 |

+

for bottleneck in block:

|

| 223 |

+

modules.append(unit_module(bottleneck.in_channel,

|

| 224 |

+

bottleneck.depth,

|

| 225 |

+

bottleneck.stride))

|

| 226 |

+

self.body = Sequential(*modules)

|

| 227 |

+

log_size = int(math.log(opts.stylegan_size, 2))

|

| 228 |

+

self.style_count = 2 * log_size - 2

|

| 229 |

+

|

| 230 |

+

def forward(self, x):

|

| 231 |

+

x = self.input_layer(x)

|

| 232 |

+

x = self.body(x)

|

| 233 |

+

x = self.output_pool(x)

|

| 234 |

+

x = x.view(-1, 512)

|

| 235 |

+

x = self.linear(x)

|

| 236 |

+

return x.repeat(self.style_count, 1, 1).permute(1, 0, 2)

|

models/stylegan2/__init__.py

ADDED

|

File without changes

|

models/stylegan2/__pycache__/__init__.cpython-39.pyc

ADDED

|

Binary file (145 Bytes). View file

|

|

|

models/stylegan2/__pycache__/model.cpython-39.pyc

ADDED

|

Binary file (15.8 kB). View file

|

|

|

models/stylegan2/model.py

ADDED

|

@@ -0,0 +1,674 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import math

|

| 2 |

+

import random

|

| 3 |

+

import torch

|

| 4 |

+

from torch import nn

|

| 5 |

+

from torch.nn import functional as F

|

| 6 |

+

|

| 7 |

+

from .op import FusedLeakyReLU, fused_leaky_relu, upfirdn2d

|

| 8 |

+

|

| 9 |

+

# Adapted from https://github.com/rosinality/stylegan2-pytorch

|

| 10 |

+

|

| 11 |

+

class PixelNorm(nn.Module):

|

| 12 |

+

def __init__(self):

|

| 13 |

+

super().__init__()

|

| 14 |

+

|

| 15 |

+

def forward(self, input):

|

| 16 |

+

return input * torch.rsqrt(torch.mean(input ** 2, dim=1, keepdim=True) + 1e-8)

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

def make_kernel(k):

|

| 20 |

+

k = torch.tensor(k, dtype=torch.float32)

|

| 21 |

+

|

| 22 |

+

if k.ndim == 1:

|

| 23 |

+

k = k[None, :] * k[:, None]

|

| 24 |

+

|

| 25 |

+

k /= k.sum()

|

| 26 |

+

|

| 27 |

+

return k

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

class Upsample(nn.Module):

|

| 31 |

+

def __init__(self, kernel, factor=2):

|

| 32 |

+

super().__init__()

|

| 33 |

+

|

| 34 |

+

self.factor = factor

|

| 35 |

+

kernel = make_kernel(kernel) * (factor ** 2)

|

| 36 |

+

self.register_buffer('kernel', kernel)

|

| 37 |

+

|

| 38 |

+

p = kernel.shape[0] - factor

|

| 39 |

+

|

| 40 |

+

pad0 = (p + 1) // 2 + factor - 1

|

| 41 |

+

pad1 = p // 2

|

| 42 |

+

|

| 43 |

+

self.pad = (pad0, pad1)

|

| 44 |

+

|

| 45 |

+

def forward(self, input):

|

| 46 |

+

out = upfirdn2d(input, self.kernel, up=self.factor, down=1, pad=self.pad)

|

| 47 |

+

|

| 48 |

+

return out

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

class Downsample(nn.Module):

|

| 52 |

+

def __init__(self, kernel, factor=2):

|

| 53 |

+

super().__init__()

|

| 54 |

+

|

| 55 |

+

self.factor = factor

|

| 56 |

+

kernel = make_kernel(kernel)

|

| 57 |

+

self.register_buffer('kernel', kernel)

|

| 58 |

+

|

| 59 |

+

p = kernel.shape[0] - factor

|

| 60 |

+

|

| 61 |

+

pad0 = (p + 1) // 2

|

| 62 |

+

pad1 = p // 2

|

| 63 |

+

|

| 64 |

+

self.pad = (pad0, pad1)

|

| 65 |

+

|

| 66 |

+

def forward(self, input):

|

| 67 |

+

out = upfirdn2d(input, self.kernel, up=1, down=self.factor, pad=self.pad)

|

| 68 |

+

|

| 69 |

+

return out

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

class Blur(nn.Module):

|

| 73 |

+

def __init__(self, kernel, pad, upsample_factor=1):

|

| 74 |

+

super().__init__()

|

| 75 |

+

|

| 76 |

+

kernel = make_kernel(kernel)

|

| 77 |

+

|

| 78 |

+

if upsample_factor > 1:

|

| 79 |

+

kernel = kernel * (upsample_factor ** 2)

|

| 80 |

+

|

| 81 |

+

self.register_buffer('kernel', kernel)

|

| 82 |

+

|

| 83 |

+

self.pad = pad

|

| 84 |

+

|

| 85 |

+

def forward(self, input):

|

| 86 |

+

out = upfirdn2d(input, self.kernel, pad=self.pad)

|

| 87 |

+

|

| 88 |

+

return out

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

class EqualConv2d(nn.Module):

|

| 92 |

+

def __init__(

|

| 93 |

+

self, in_channel, out_channel, kernel_size, stride=1, padding=0, bias=True

|

| 94 |

+

):

|

| 95 |

+

super().__init__()

|

| 96 |

+

|

| 97 |

+

self.weight = nn.Parameter(

|

| 98 |

+

torch.randn(out_channel, in_channel, kernel_size, kernel_size)

|

| 99 |

+

)

|

| 100 |

+

self.scale = 1 / math.sqrt(in_channel * kernel_size ** 2)

|

| 101 |

+

|

| 102 |

+

self.stride = stride

|

| 103 |

+

self.padding = padding

|

| 104 |

+

|

| 105 |

+

if bias:

|

| 106 |

+

self.bias = nn.Parameter(torch.zeros(out_channel))

|

| 107 |

+

|

| 108 |

+

else:

|

| 109 |

+

self.bias = None

|

| 110 |

+

|

| 111 |

+

def forward(self, input):

|

| 112 |

+

out = F.conv2d(

|

| 113 |

+

input,

|

| 114 |

+

self.weight * self.scale,

|

| 115 |

+

bias=self.bias,

|

| 116 |

+

stride=self.stride,

|

| 117 |

+

padding=self.padding,

|

| 118 |

+

)

|

| 119 |

+

|

| 120 |

+

return out

|

| 121 |

+

|

| 122 |

+

def __repr__(self):

|

| 123 |

+

return (

|

| 124 |

+

f'{self.__class__.__name__}({self.weight.shape[1]}, {self.weight.shape[0]},'

|

| 125 |

+

f' {self.weight.shape[2]}, stride={self.stride}, padding={self.padding})'

|

| 126 |

+

)

|

| 127 |

+

|

| 128 |

+

|

| 129 |

+

class EqualLinear(nn.Module):

|

| 130 |

+

def __init__(

|

| 131 |

+

self, in_dim, out_dim, bias=True, bias_init=0, lr_mul=1, activation=None

|

| 132 |

+

):

|

| 133 |

+

super().__init__()

|

| 134 |

+

|

| 135 |

+

self.weight = nn.Parameter(torch.randn(out_dim, in_dim).div_(lr_mul))

|

| 136 |

+

|

| 137 |

+

if bias:

|

| 138 |

+

self.bias = nn.Parameter(torch.zeros(out_dim).fill_(bias_init))

|

| 139 |

+

|

| 140 |

+

else:

|

| 141 |

+

self.bias = None

|

| 142 |

+

|

| 143 |

+

self.activation = activation

|

| 144 |

+

|

| 145 |

+

self.scale = (1 / math.sqrt(in_dim)) * lr_mul

|

| 146 |

+

self.lr_mul = lr_mul

|

| 147 |

+

|

| 148 |

+

def forward(self, input):

|

| 149 |

+

if self.activation:

|

| 150 |

+

out = F.linear(input, self.weight * self.scale)

|

| 151 |

+

out = fused_leaky_relu(out, self.bias * self.lr_mul)

|

| 152 |

+

|

| 153 |

+

else:

|

| 154 |

+

out = F.linear(

|

| 155 |

+

input, self.weight * self.scale, bias=self.bias * self.lr_mul

|

| 156 |

+

)

|

| 157 |

+

|

| 158 |

+

return out

|

| 159 |

+

|

| 160 |

+

def __repr__(self):

|

| 161 |

+

return (

|

| 162 |

+