Runtime error

Upload folder using huggingface_hub

- .env +18 -0

- .github/workflows/branch.yml +60 -0

- .github/workflows/release.yml +30 -0

- .gitignore +10 -0

- CONTRIBUTING.md +90 -0

- LICENSE +21 -0

- README.md +379 -8

- app.py +418 -0

- benchmark.py +145 -0

- code_completion.py +216 -0

- colab/Llama_2_7b_Chat_GPTQ.ipynb +0 -0

- colab/ggmlv3_q4_0.ipynb +109 -0

- colab/webui_CodeLlama_7B_Instruct_GPTQ.ipynb +514 -0

- docs/issues.md +0 -0

- docs/news.md +38 -0

- docs/performance.md +32 -0

- docs/pypi.md +187 -0

- env_examples/.env.13b_example +13 -0

- env_examples/.env.7b_8bit_example +13 -0

- env_examples/.env.7b_ggmlv3_q4_0_example +18 -0

- env_examples/.env.7b_gptq_example +18 -0

- llama2_wrapper/__init__.py +1 -0

- llama2_wrapper/__pycache__/__init__.cpython-310.pyc +0 -0

- llama2_wrapper/__pycache__/model.cpython-310.pyc +0 -0

- llama2_wrapper/__pycache__/types.cpython-310.pyc +0 -0

- llama2_wrapper/download/__init__.py +0 -0

- llama2_wrapper/download/__main__.py +59 -0

- llama2_wrapper/model.py +787 -0

- llama2_wrapper/server/__init__.py +0 -0

- llama2_wrapper/server/__main__.py +46 -0

- llama2_wrapper/server/app.py +526 -0

- llama2_wrapper/types.py +115 -0

- nohup.out +0 -0

- poetry.lock +0 -0

- prompts/__pycache__/utils.cpython-310.pyc +0 -0

- prompts/prompts_en.csv +0 -0

- prompts/utils.py +48 -0

- pyproject.toml +47 -0

- requirements.txt +21 -0

- static/screenshot.png +0 -0

- tests/__init__.py +0 -0

- tests/test_get_prompt.py +59 -0

.env

ADDED

```
MODEL_PATH = "FlagAlpha/Llama2-Chinese-7b-Chat"
# if MODEL_PATH is "", default llama.cpp/gptq models
# will be downloaded to: ./models

# Example ggml path:
# MODEL_PATH = "./models/llama-2-7b-chat.ggmlv3.q4_0.bin"

# options: llama.cpp, gptq, transformers
BACKEND_TYPE = "transformers"

# only for transformers bitsandbytes 8 bit
LOAD_IN_8BIT = False

MAX_MAX_NEW_TOKENS = 2048
DEFAULT_MAX_NEW_TOKENS = 1024
MAX_INPUT_TOKEN_LENGTH = 4000

DEFAULT_SYSTEM_PROMPT = ""
```
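The `.env` file above uses a plain `KEY = value` format. `app.py` is not shown in this section, so it is not visible here how it actually reads these values; it may use a library such as python-dotenv. As a minimal sketch, assuming only the format seen above (`#` starts a comment, strings may be quoted, booleans are written `True`/`False`), the settings could be parsed with the standard library as follows. `parse_env` and `as_bool` are hypothetical helpers, not part of llama2-webui.

```python
import re
from typing import Dict

# Hypothetical helpers for illustration; llama2-webui's own loader is not shown here.
_LINE = re.compile(r"^([A-Za-z_][A-Za-z0-9_]*)\s*=\s*(.*)$")


def parse_env(text: str) -> Dict[str, str]:
    """Parse `KEY = value` lines, skipping blank lines and `#` comments."""
    settings: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # commented-out examples such as '# MODEL_PATH = ...' are ignored
        match = _LINE.match(line)
        if match is None:
            continue  # not a KEY = value line
        key, value = match.group(1), match.group(2).strip()
        # Drop one pair of matching surrounding quotes: "abc" -> abc, "" -> empty string.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        settings[key] = value
    return settings


def as_bool(value: str) -> bool:
    """Interpret strings such as 'True' / 'False' (case-insensitive)."""
    return value.strip().lower() in {"1", "true", "yes", "on"}


example = '''
MODEL_PATH = ""
# options: llama.cpp, gptq, transformers
BACKEND_TYPE = "transformers"
LOAD_IN_8BIT = False
MAX_MAX_NEW_TOKENS = 2048
'''
config = parse_env(example)
load_in_8bit = as_bool(config["LOAD_IN_8BIT"])      # every value is parsed as a string
max_new_tokens = int(config["MAX_MAX_NEW_TOKENS"])  # so numbers need explicit conversion
```

Every value comes back as a string, so flags like `LOAD_IN_8BIT` and the token limits have to be converted explicitly. The sketch does not handle inline comments after a value (for example, `X = 1  # note`).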

.github/workflows/branch.yml

ADDED

```yaml
name: Push
on: [push]

jobs:
  test:
    strategy:
      fail-fast: false
      matrix:
        python-version: ['3.10']
        poetry-version: ['1.5.1']
        os: [ubuntu-latest]
    runs-on: ${{ matrix.os }}
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v3
        with:
          python-version: ${{ matrix.python-version }}
      - name: Run image
        uses: abatilo/actions-poetry@v2.1.4
        with:
          poetry-version: ${{ matrix.poetry-version }}
      - name: Install dependencies
        run: poetry install
      - name: Run tests
        run: poetry run pytest
      - name: Upload coverage reports to Codecov
        uses: codecov/codecov-action@v3
        env:
          CODECOV_TOKEN: ${{ secrets.CODECOV_TOKEN }}
      # - name: Upload coverage to Codecov
      #   uses: codecov/codecov-action@v2
  code-quality:
    strategy:
      fail-fast: false
      matrix:
        python-version: ['3.10']
        poetry-version: ['1.5.1']
        os: [ubuntu-latest]
    runs-on: ${{ matrix.os }}
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v3
        with:
          python-version: ${{ matrix.python-version }}
      - name: Python Poetry Action
        uses: abatilo/actions-poetry@v2.1.6
        with:
          poetry-version: ${{ matrix.poetry-version }}
      - name: Install dependencies
        run: poetry install
      - name: Run black
        run: poetry run black . --check
      # - name: Run isort
      #   run: poetry run isort . --check-only --profile black
      # - name: Run flake8
      #   run: poetry run flake8 .
      # - name: Run bandit
      #   run: poetry run bandit .
      # - name: Run safety
      #   run: poetry run safety check
```

.github/workflows/release.yml

ADDED

```yaml
name: Release
on:
  release:
    types:
      - created

jobs:
  publish:
    strategy:
      fail-fast: false
      matrix:
        python-version: ['3.10']
        poetry-version: ['1.5.1']
        os: [ubuntu-latest]
    runs-on: ${{ matrix.os }}
    steps:
      - uses: actions/checkout@v3
      - uses: actions/setup-python@v3
        with:
          python-version: ${{ matrix.python-version }}
      - name: Run image
        uses: abatilo/actions-poetry@v2.1.4
        with:
          poetry-version: ${{ matrix.poetry-version }}
      - name: Publish
        env:
          PYPI_TOKEN: ${{ secrets.PYPI_TOKEN }}
        run: |
          poetry config pypi-token.pypi $PYPI_TOKEN
          poetry publish --build
```

.gitignore

ADDED

```
models
dist

.DS_Store
.vscode

__pycache__
gradio_cached_examples

.pytest_cache
```

CONTRIBUTING.md

ADDED

# Contributing to [llama2-webui](https://github.com/liltom-eth/llama2-webui)

We love your input! We want to make contributing to this project as easy and transparent as possible, whether it's:

- Reporting a bug
- Proposing new features
- Discussing the current state of the code
- Updating README.md
- Submitting a PR

## Using GitHub's [issues](https://github.com/liltom-eth/llama2-webui/issues)

We use GitHub issues to track public bugs. Report a bug by [opening a new issue](https://github.com/liltom-eth/llama2-webui/issues). It's that easy!

Thanks to **[jlb1504](https://github.com/jlb1504)** for reporting the [first issue](https://github.com/liltom-eth/llama2-webui/issues/1)!

**Great Bug Reports** tend to have:

- A quick summary and/or background
- Steps to reproduce
  - Be specific!
  - Give sample code if you can.
- What you expected would happen
- What actually happens
- Notes (possibly including why you think this might be happening, or stuff you tried that didn't work)

Proposals for new features are also welcome.

## Pull Request

All pull requests are welcome. For example, you could update `README.md` to help users better understand how to use the project.

### Clone the repository

1. Create a user account on GitHub if you do not already have one.

2. Fork the project [repository](https://github.com/liltom-eth/llama2-webui): click on the *Fork* button near the top of the page. This creates a copy of the code under your account on GitHub.

3. Clone this copy to your local disk:

```
git clone git@github.com:<YOUR_GITHUB_USERNAME>/llama2-webui.git
cd llama2-webui
```

### Implement your changes

1. Create a branch to hold your changes:

```
git checkout -b my-feature
```

and start making changes. Never work on the main branch!

2. Start your work on this branch.

3. When you're done editing, run:

```
git add <MODIFIED FILES>
git commit
```

to record your changes in [git](https://git-scm.com/).

### Submit your contribution

1. If everything works, push your local branch to the remote server with:

```
git push -u origin my-feature
```

2. Go to the web page of your fork and click "Create pull request" to send your changes for review.

```{todo}
Find more detailed information in [creating a PR]. You might also want to open
the PR as a draft first and mark it as ready for review after feedback
from the continuous integration (CI) system or any required fixes.
```

## License

By contributing, you agree that your contributions will be licensed under the project's MIT License.

## Questions?

Email us at [liltom.eth@gmail.com](mailto:liltom.eth@gmail.com)

LICENSE

ADDED

```
MIT License

Copyright (c) 2023 Tom

Permission is hereby granted, free of charge, to any person obtaining a copy
of this software and associated documentation files (the "Software"), to deal
in the Software without restriction, including without limitation the rights
to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
copies of the Software, and to permit persons to whom the Software is
furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all
copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
SOFTWARE.
```

README.md

CHANGED

|

@@ -1,12 +1,383 @@

|

|

| 1 |

---

|

| 2 |

-

title:

|

| 3 |

-

emoji: 🚀

|

| 4 |

-

colorFrom: purple

|

| 5 |

-

colorTo: pink

|

| 6 |

-

sdk: gradio

|

| 7 |

-

sdk_version: 4.5.0

|

| 8 |

app_file: app.py

|

| 9 |

-

|

|

|

|

| 10 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 11 |

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

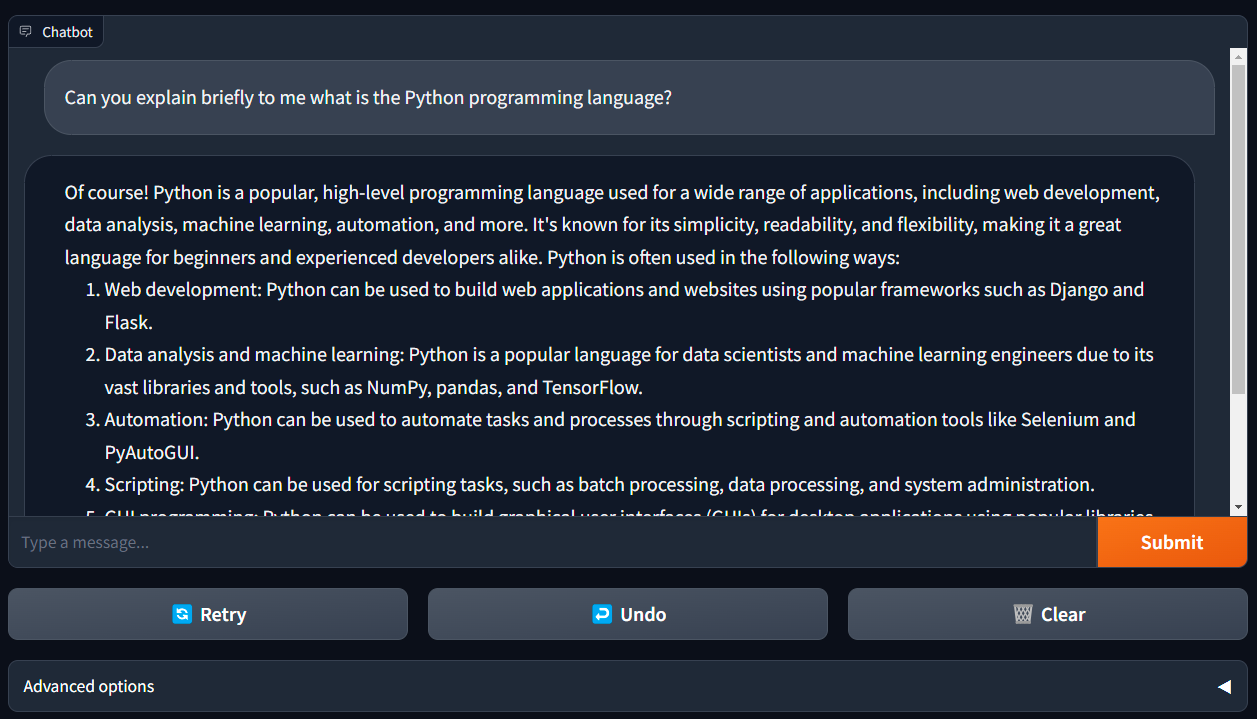

| 1 |

---

|

| 2 |

+

title: HydroxApp_t2t

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

app_file: app.py

|

| 4 |

+

sdk: gradio

|

| 5 |

+

sdk_version: 3.37.0

|

| 6 |

---

# llama2-webui

Run Llama 2 with a Gradio web UI on GPU or CPU from anywhere (Linux/Windows/Mac).

- Supports all Llama 2 models (7B, 13B, 70B, GPTQ, GGML, GGUF, [CodeLlama](https://huggingface.co/TheBloke/CodeLlama-7B-Instruct-GPTQ)) in 8-bit and 4-bit modes.
- Use [llama2-wrapper](https://pypi.org/project/llama2-wrapper/) as your local llama2 backend for Generative Agents/Apps; [colab example](./colab/Llama_2_7b_Chat_GPTQ.ipynb).
- [Run OpenAI Compatible API](#start-openai-compatible-api) on Llama 2 models.

## Features

- Supported models: [Llama-2-7b](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf)/[13b](https://huggingface.co/llamaste/Llama-2-13b-chat-hf)/[70b](https://huggingface.co/llamaste/Llama-2-70b-chat-hf), [Llama-2-GPTQ](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ), [Llama-2-GGML](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML), [Llama-2-GGUF](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GGUF), [CodeLlama](https://huggingface.co/TheBloke/CodeLlama-7B-Instruct-GPTQ) ...
- Supported model backends: [transformers](https://github.com/huggingface/transformers), [bitsandbytes (8-bit inference)](https://github.com/TimDettmers/bitsandbytes), [AutoGPTQ (4-bit inference)](https://github.com/PanQiWei/AutoGPTQ), [llama.cpp](https://github.com/ggerganov/llama.cpp)
- Demos: [Run Llama2 on MacBook Air](https://twitter.com/liltom_eth/status/1682791729207070720?s=20); [Run Llama2 on free Colab T4 GPU](./colab/Llama_2_7b_Chat_GPTQ.ipynb)
- Use [llama2-wrapper](https://pypi.org/project/llama2-wrapper/) as your local llama2 backend for Generative Agents/Apps; [colab example](./colab/Llama_2_7b_Chat_GPTQ.ipynb).
- [Run OpenAI Compatible API](#start-openai-compatible-api) on Llama 2 models.
- [News](./docs/news.md), [Benchmark](./docs/performance.md), [Issue Solutions](./docs/issues.md)

## Contents

- [Install](#install)
- [Usage](#usage)
  - [Start Chat UI](#start-chat-ui)
  - [Start Code Llama UI](#start-code-llama-ui)
  - [Use llama2-wrapper for Your App](#use-llama2-wrapper-for-your-app)
  - [Start OpenAI Compatible API](#start-openai-compatible-api)
- [Benchmark](#benchmark)
- [Download Llama-2 Models](#download-llama-2-models)
  - [Model List](#model-list)
  - [Download Script](#download-script)
- [Tips](#tips)
  - [Env Examples](#env-examples)
  - [Run on Nvidia GPU](#run-on-nvidia-gpu)
    - [Run bitsandbytes 8 bit](#run-bitsandbytes-8-bit)
    - [Run GPTQ 4 bit](#run-gptq-4-bit)
  - [Run on CPU](#run-on-cpu)
    - [Mac Metal Acceleration](#mac-metal-acceleration)
    - [AMD/Nvidia GPU Acceleration](#amdnvidia-gpu-acceleration)
- [License](#license)
- [Contributing](#contributing)

## Install

### Method 1: From [PyPI](https://pypi.org/project/llama2-wrapper/)

```
pip install llama2-wrapper
```

`llama2-wrapper>=0.1.14` supports llama.cpp's `gguf` models.

If you would like to use old `ggml` models, install `llama2-wrapper<=0.1.13` or manually install `llama-cpp-python==0.1.77`.

### Method 2: From Source

```
git clone https://github.com/liltom-eth/llama2-webui.git
cd llama2-webui
pip install -r requirements.txt
```

### Install Issues

`bitsandbytes >= 0.39` may not work on older NVIDIA GPUs. In that case, to use `LOAD_IN_8BIT`, you may have to downgrade like this:

- `pip install bitsandbytes==0.38.1`

`bitsandbytes` also needs a special install for Windows:

```
pip uninstall bitsandbytes
pip install https://github.com/jllllll/bitsandbytes-windows-webui/releases/download/wheels/bitsandbytes-0.41.0-py3-none-win_amd64.whl
```

## Usage

### Start Chat UI

Run the chatbot with the web UI:

```bash
python app.py
```

`app.py` loads its configuration from the `.env` file. When `BACKEND_TYPE` is `llama.cpp` and `MODEL_PATH` is empty, it runs the default `llama-2-7b-chat.Q4_0.gguf` model for inference and downloads it to `./models` automatically:

```
Running on backend llama.cpp.
Use default model path: ./models/llama-2-7b-chat.Q4_0.gguf
Start downloading model to: ./models/llama-2-7b-chat.Q4_0.gguf
```

You can also customize `MODEL_PATH`, `BACKEND_TYPE`, and the model configs in the `.env` file to run different Llama 2 models on different backends (llama.cpp, transformers, gptq).

### Start Code Llama UI

We provide a code completion / filling UI for Code Llama.

The base model **Code Llama** and its extended model **Code Llama - Python** are not fine-tuned to follow instructions. They should be prompted so that the expected answer is the natural continuation of the prompt, which means these two models are suited to code filling and code completion.

Here is an example of running Code Llama code completion on the llama.cpp backend:

```
python code_completion.py --model_path ./models/codellama-7b.Q4_0.gguf
```

`codellama-7b.Q4_0.gguf` can be downloaded from [TheBloke/CodeLlama-7B-GGUF](https://huggingface.co/TheBloke/CodeLlama-7B-GGUF/blob/main/codellama-7b.Q4_0.gguf).

**Code Llama - Instruct** is trained on natural-language instruction inputs paired with the expected outputs, which improves the model's ability to understand what users want from their prompts. This means instruct models can be used in a chatbot-like app.

Example of running Code Llama chat on the gptq backend:

```
python app.py --backend_type gptq --model_path ./models/CodeLlama-7B-Instruct-GPTQ/ --share True
```

`CodeLlama-7B-Instruct-GPTQ` can be downloaded from [TheBloke/CodeLlama-7B-Instruct-GPTQ](https://huggingface.co/TheBloke/CodeLlama-7B-Instruct-GPTQ).

### Use llama2-wrapper for Your App

🔥 For developers, we released `llama2-wrapper` as a llama2 backend wrapper on [PyPI](https://pypi.org/project/llama2-wrapper/).

Use `llama2-wrapper` as your local llama2 backend to answer questions and more ([colab example](./colab/ggmlv3_q4_0.ipynb)):

```python
# pip install llama2-wrapper
from llama2_wrapper import LLAMA2_WRAPPER, get_prompt

# Runs on the llama.cpp backend by default and automatically
# downloads the model to: ./models/llama-2-7b-chat.Q4_0.gguf
llama2_wrapper = LLAMA2_WRAPPER()

prompt = "Do you know PyTorch?"
answer = llama2_wrapper(get_prompt(prompt), temperature=0.9)
print(answer)
```
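`get_prompt` wraps the user message in the Llama 2 chat template before the text reaches the model. Its exact output is not shown here, so the following is a hypothetical stand-in (`build_llama2_prompt`) that follows Meta's published Llama 2 chat format: the system prompt sits inside `<<SYS>>` tags in the first `[INST]` block, and finished turns are separated by `</s><s>[INST]`. The leading `<s>` BOS token is left out on the assumption that the tokenizer adds it. llama2-wrapper's own implementation may differ in details such as this, or in whether it omits an empty `<<SYS>>` block.

```python
from typing import Sequence, Tuple


def build_llama2_prompt(
    message: str,
    chat_history: Sequence[Tuple[str, str]] = (),
    system_prompt: str = "",
) -> str:
    """Format a conversation in the Llama 2 chat template (hypothetical stand-in for get_prompt)."""
    # The system prompt is wrapped in <<SYS>> tags inside the first [INST] block.
    parts = [f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n"]
    # Each finished turn is closed with </s> and the next one opens with <s>[INST].
    for user_turn, reply in chat_history:
        parts.append(f"{user_turn.strip()} [/INST] {reply.strip()} </s><s>[INST] ")
    # The new user message ends with [/INST]; the model continues from there.
    parts.append(f"{message.strip()} [/INST]")
    return "".join(parts)


print(build_llama2_prompt("Do you know PyTorch?", system_prompt="Answer briefly."))
```

Passing earlier `(user, reply)` pairs as `chat_history` builds the multi-turn form of the same template.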

Run a GPTQ Llama 2 model on an Nvidia GPU ([colab example](./colab/Llama_2_7b_Chat_GPTQ.ipynb)):

```python
from llama2_wrapper import LLAMA2_WRAPPER

# Automatically downloads the model to: ./models/Llama-2-7b-Chat-GPTQ
llama2_wrapper = LLAMA2_WRAPPER(backend_type="gptq")
```

Run Llama 2 7B in bitsandbytes 8-bit mode with a local `model_path`:

```python
from llama2_wrapper import LLAMA2_WRAPPER

llama2_wrapper = LLAMA2_WRAPPER(
    model_path="./models/Llama-2-7b-chat-hf",
    backend_type="transformers",
    load_in_8bit=True,
)
```

Check the [API documentation](https://pypi.org/project/llama2-wrapper/) for more usage examples.

### Start OpenAI Compatible API

`llama2-wrapper` offers a web server that acts as a drop-in replacement for the OpenAI API. This allows you to use Llama 2 models with any OpenAI-compatible client, library, or service.

Start the FastAPI server:

```
python -m llama2_wrapper.server
```

By default, it uses `llama.cpp` as the backend to run the `llama-2-7b-chat.Q4_0.gguf` model.

Start the FastAPI server for the `gptq` backend:

```
python -m llama2_wrapper.server --backend_type gptq
```

Navigate to http://localhost:8000/docs to see the OpenAPI documentation.

#### Basic settings

| Flag             | Description |
| ---------------- | ----------- |
| `-h`, `--help`   | Show this help message. |
| `--model_path`   | The path to the model to use for generating completions. |
| `--backend_type` | Backend for llama2, options: llama.cpp, gptq, transformers. |
| `--max_tokens`   | Maximum context size. |
| `--load_in_8bit` | Whether to use bitsandbytes to run the model in 8-bit mode (only for transformers models). |
| `--verbose`      | Whether to print verbose output to stderr. |
| `--host`         | API address. |
| `--port`         | API port. |
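The server is described as a drop-in replacement for the OpenAI API, so a plain HTTP client should be enough to call it. Here is a minimal standard-library sketch. It assumes the server listens on the default `http://localhost:8000` shown above and exposes the OpenAI-style `/v1/chat/completions` route with `messages`, `max_tokens` and `temperature` fields. Check the `/docs` page to confirm the exact routes and fields; some OpenAI-compatible servers also expect a `model` field.

```python
import json
import urllib.request
from typing import Dict, List

# Assumptions: default host/port from the /docs URL above, and an OpenAI-style route.
BASE_URL = "http://localhost:8000"


def build_chat_request(
    messages: List[Dict[str, str]],
    base_url: str = BASE_URL,
    max_tokens: int = 256,
    temperature: float = 0.7,
) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style chat completion."""
    payload = {"messages": messages, "max_tokens": max_tokens, "temperature": temperature}
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(messages: List[Dict[str, str]], **kwargs) -> str:
    """Send the request and return the text of the first choice."""
    request = build_chat_request(messages, **kwargs)
    # CPU inference can be slow, so allow a generous timeout.
    with urllib.request.urlopen(request, timeout=600) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `chat([{"role": "user", "content": "Do you know PyTorch?"}])` should return the assistant's reply as a string. Because the payload follows the OpenAI schema, official OpenAI client libraries pointed at the same base URL are an alternative.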

## Benchmark

Run the benchmark script to measure performance on your device. `benchmark.py` loads the same `.env` as `app.py`:

```bash
python benchmark.py
```

You can also set the `iter`, `backend_type`, and `model_path` arguments for the benchmark run (these override the `.env` values):

```bash
python benchmark.py --iter NB_OF_ITERATIONS --backend_type gptq
```

The default number of iterations is 5. You can lower it for a faster result or raise it for a more accurate one, but please only report results with at least 5 iterations.

This [colab example](./colab/Llama_2_7b_Chat_GPTQ.ipynb) also shows how to benchmark a GPTQ model on a free Google Colab T4 GPU.
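The override behaviour described above (a command-line argument takes precedence over `.env`, which takes precedence over built-in defaults) is a common `argparse` pattern. `benchmark.py` itself is not shown here, so this is a hypothetical sketch. It assumes the `.env` values have already been loaded into the process environment. It uses `--iter`, `--backend_type` and `--model_path` as in the command above, with the documented default of 5 iterations.

```python
import argparse
import os
from typing import Mapping, Optional, Sequence


def parse_benchmark_args(
    argv: Optional[Sequence[str]] = None,
    environ: Optional[Mapping[str, str]] = None,
) -> argparse.Namespace:
    """Hypothetical CLI: flags override .env/environment values, which override fallbacks."""
    env = os.environ if environ is None else environ
    parser = argparse.ArgumentParser(description="benchmark sketch")
    parser.add_argument("--iter", type=int, default=5, help="number of iterations (default: 5)")
    # The fallbacks used when neither a flag nor the environment sets a value are assumptions.
    parser.add_argument("--backend_type", default=env.get("BACKEND_TYPE", "llama.cpp"))
    parser.add_argument("--model_path", default=env.get("MODEL_PATH", ""))
    return parser.parse_args(argv)
```

Reading the environment only to supply `default=` values is what gives flags the final say: `argparse` uses a default only when the flag is absent.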

Some benchmark results:

| Model                       | Precision | Device             | RAM / GPU VRAM | Speed (tokens/sec) | Load time (s) |
| --------------------------- | --------- | ------------------ | -------------- | ------------------ | ------------- |
| Llama-2-7b-chat-hf          | 8 bit     | NVIDIA RTX 2080 Ti | 7.7 GB VRAM    | 3.76               | 641.36        |
| Llama-2-7b-Chat-GPTQ        | 4 bit     | NVIDIA RTX 2080 Ti | 5.8 GB VRAM    | 18.85              | 192.91        |
| Llama-2-7b-Chat-GPTQ        | 4 bit     | Google Colab T4    | 5.8 GB VRAM    | 18.19              | 37.44         |
| llama-2-7b-chat.ggmlv3.q4_0 | 4 bit     | Apple M1 Pro CPU   | 5.4 GB RAM     | 17.90              | 0.18          |
| llama-2-7b-chat.ggmlv3.q4_0 | 4 bit     | Apple M2 CPU       | 5.4 GB RAM     | 13.70              | 0.13          |
| llama-2-7b-chat.ggmlv3.q4_0 | 4 bit     | Apple M2 Metal     | 5.4 GB RAM     | 12.60              | 0.10          |
| llama-2-7b-chat.ggmlv3.q2_K | 2 bit     | Intel i7-8700      | 4.5 GB RAM     | 7.88               | 31.90         |

Check or contribute your device's performance in the full [performance doc](./docs/performance.md).
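The **Speed (tokens/sec)** column reports generation throughput. How `benchmark.py` computes it is not shown here. As an illustration only, a throughput figure averaged over several iterations could be measured as below. `generate` is a hypothetical callable that returns the list of generated tokens, and model loading is assumed to happen before timing starts, since load time is reported in its own column.

```python
import time
from statistics import mean
from typing import Callable, List


def measure_tokens_per_sec(
    generate: Callable[[str], List[str]], prompt: str, iters: int = 5
) -> float:
    """Average tokens/sec over `iters` runs; the model is assumed to be loaded already."""
    if iters < 1:
        raise ValueError("iters must be at least 1")
    rates = []
    for _ in range(iters):
        start = time.perf_counter()
        tokens = generate(prompt)  # hypothetical: returns the generated tokens
        elapsed = time.perf_counter() - start
        rates.append(len(tokens) / elapsed)
    return mean(rates)


def fake_generate(prompt: str) -> List[str]:
    """Stand-in for a real model: 'generates' 20 tokens in about 0.02 s."""
    time.sleep(0.02)
    return ["tok"] * 20


print(f"{measure_tokens_per_sec(fake_generate, 'Hello', iters=3):.1f} tokens/sec")
```

This sketch averages the per-iteration rates. Dividing total tokens by total time is an equally reasonable choice, and it weights longer generations more heavily.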

|

| 229 |

+

|

| 230 |

+

## Download Llama-2 Models

|

| 231 |

+

|

| 232 |

+

Llama 2 is a collection of pre-trained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters.

|

| 233 |

+

|

| 234 |

+

Llama-2-7b-Chat-GPTQ is the GPTQ model files for [Meta's Llama 2 7b Chat](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf). GPTQ 4-bit Llama-2 model require less GPU VRAM to run it.

|

| 235 |

+

|

| 236 |

+

### Model List

|

| 237 |

+

|

| 238 |

+

| Model Name | `MODEL_PATH` in `.env` | Download URL |
| ----------------------------------- | ---------------------------------------- | ------------------------------------------------------------ |
| meta-llama/Llama-2-7b-chat-hf | /path-to/Llama-2-7b-chat-hf | [Link](https://huggingface.co/llamaste/Llama-2-7b-chat-hf) |
| meta-llama/Llama-2-13b-chat-hf | /path-to/Llama-2-13b-chat-hf | [Link](https://huggingface.co/llamaste/Llama-2-13b-chat-hf) |
| meta-llama/Llama-2-70b-chat-hf | /path-to/Llama-2-70b-chat-hf | [Link](https://huggingface.co/llamaste/Llama-2-70b-chat-hf) |
| meta-llama/Llama-2-7b-hf | /path-to/Llama-2-7b-hf | [Link](https://huggingface.co/meta-llama/Llama-2-7b-hf) |
| meta-llama/Llama-2-13b-hf | /path-to/Llama-2-13b-hf | [Link](https://huggingface.co/meta-llama/Llama-2-13b-hf) |
| meta-llama/Llama-2-70b-hf | /path-to/Llama-2-70b-hf | [Link](https://huggingface.co/meta-llama/Llama-2-70b-hf) |
| TheBloke/Llama-2-7b-Chat-GPTQ | /path-to/Llama-2-7b-Chat-GPTQ | [Link](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ) |
| TheBloke/Llama-2-7b-Chat-GGUF | /path-to/llama-2-7b-chat.Q4_0.gguf | [Link](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GGUF/blob/main/llama-2-7b-chat.Q4_0.gguf) |
| TheBloke/Llama-2-7B-Chat-GGML | /path-to/llama-2-7b-chat.ggmlv3.q4_0.bin | [Link](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML) |
| TheBloke/CodeLlama-7B-Instruct-GPTQ | TheBloke/CodeLlama-7B-Instruct-GPTQ | [Link](https://huggingface.co/TheBloke/CodeLlama-7B-Instruct-GPTQ) |
| ... | ... | ... |

Running the 4-bit model `Llama-2-7b-Chat-GPTQ` requires a GPU with 6 GB of VRAM.

Running the 4-bit model `llama-2-7b-chat.ggmlv3.q4_0.bin` requires a CPU with 6 GB of RAM. Other 2-, 3-, 4-, 5-, 6-, and 8-bit GGML models are also available from [TheBloke/Llama-2-7B-Chat-GGML](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML).
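The backend has to match the kind of model `MODEL_PATH` points to. As a rough guide, the hypothetical helper below guesses a `BACKEND_TYPE` (`llama.cpp`, `gptq`, or `transformers`, the options listed in `app.py`) from the naming patterns in the model table. It is a sketch under those naming assumptions, not the detection logic of `llama2_wrapper`.

```python
from pathlib import PurePosixPath


def guess_backend_type(model_path: str) -> str:
    """Hypothetical helper: guess BACKEND_TYPE from a MODEL_PATH string.

    The rules follow the file/repo naming in the model table; they are an
    assumption, not logic taken from llama2_wrapper.
    """
    name = PurePosixPath(model_path).name.lower()
    # Single-file quantized models (GGUF, or GGML ".bin" files) run on llama.cpp.
    if name.endswith(".gguf") or (name.endswith(".bin") and "ggml" in name):
        return "llama.cpp"
    # GPTQ checkpoints conventionally carry "gptq" in their repo/folder name.
    if "gptq" in name:
        return "gptq"
    # Anything else is treated as a regular Hugging Face checkpoint.
    return "transformers"


for path in (
    "/path-to/Llama-2-7b-chat-hf",
    "/path-to/Llama-2-7b-Chat-GPTQ",
    "/path-to/llama-2-7b-chat.Q4_0.gguf",
    "/path-to/llama-2-7b-chat.ggmlv3.q4_0.bin",
):
    print(f"{path} -> {guess_backend_type(path)}")
```

Always double-check the guess against the matching file in `env_examples`, since a repo can be named in ways these simple rules do not anticipate.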

### Download Script

These models can be downloaded with the `llama2_wrapper.download` module:

```bash
# Download the GPTQ model repository
python -m llama2_wrapper.download --repo_id TheBloke/CodeLlama-7B-Python-GPTQ

# Download only the Q4_0 GGUF file into ./models
python -m llama2_wrapper.download --repo_id TheBloke/Llama-2-7b-Chat-GGUF --filename llama-2-7b-chat.Q4_0.gguf --save_dir ./models
```
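If you prefer to script downloads from Python, one minimal approach is to build the same command line and run it with `subprocess`. `build_download_cmd` and `download` are hypothetical helpers; they only use the `--repo_id`, `--filename`, and `--save_dir` flags that appear in the commands above.

```python
import subprocess
import sys
from typing import List, Optional


def build_download_cmd(
    repo_id: str, filename: Optional[str] = None, save_dir: Optional[str] = None
) -> List[str]:
    """Build argv for `python -m llama2_wrapper.download` with the flags shown above."""
    cmd = [sys.executable, "-m", "llama2_wrapper.download", "--repo_id", repo_id]
    if filename is not None:
        cmd += ["--filename", filename]
    if save_dir is not None:
        cmd += ["--save_dir", save_dir]
    return cmd


def download(
    repo_id: str, filename: Optional[str] = None, save_dir: Optional[str] = None
) -> None:
    # Runs the download module in a child process; raises CalledProcessError on failure.
    subprocess.run(build_download_cmd(repo_id, filename, save_dir), check=True)


print(build_download_cmd("TheBloke/Llama-2-7b-Chat-GGUF", "llama-2-7b-chat.Q4_0.gguf", "./models"))
# download("TheBloke/Llama-2-7b-Chat-GGUF", "llama-2-7b-chat.Q4_0.gguf", "./models")
```

Using `sys.executable` makes the child process use the same Python environment in which `llama2_wrapper` is installed.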

Alternatively, clone the model repository with Git LFS:

```bash
# Make sure you have git-lfs installed (https://git-lfs.com)
git lfs install
git clone git@hf.co:meta-llama/Llama-2-7b-chat-hf
```

To download the official Llama 2 models, you need to request access at [https://ai.meta.com/llama/](https://ai.meta.com/llama/) and also request access on the Hugging Face repos, such as [meta-llama/Llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf/tree/main). Requests are processed within hours.

GPTQ models such as [TheBloke/Llama-2-7b-Chat-GPTQ](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ) can be downloaded directly, without requesting access.

GGML models such as [TheBloke/Llama-2-7B-Chat-GGML](https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML) can also be downloaded directly, without requesting access.

## Tips

### Env Examples

Example configurations are provided in the `./env_examples/` folder:

| Model Setup | Example .env |
| ------------------------------------------------------ | --------------------------- |
| Llama-2-7b-chat-hf 8-bit (transformers backend) | `.env.7b_8bit_example` |
| Llama-2-7b-Chat-GPTQ 4-bit (gptq transformers backend) | `.env.7b_gptq_example` |
| Llama-2-7B-Chat-GGML 4-bit (llama.cpp backend) | `.env.7b_ggmlv3_q4_0_example` |
| Llama-2-13b-chat-hf (transformers backend) | `.env.13b_example` |
| ... | ... |

### Run on Nvidia GPU

Running the unquantized models requires around 14 GB of GPU VRAM for Llama-2-7b and 28 GB for Llama-2-13b.
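A back-of-the-envelope check for these numbers: model weights take roughly parameters × bits / 8 bytes. The sketch below computes this weights-only lower bound from nominal parameter counts; activations, the KV cache, and framework overhead come on top, which is presumably why a quoted figure such as 28 GB for Llama-2-13b sits above its roughly 26 GB of 16-bit weights.

```python
def weight_memory_gb(num_params_billion: float, bits_per_param: int) -> float:
    """Weights-only memory estimate in GB (1 GB = 1e9 bytes).

    Activations, the KV cache, and framework overhead are not included,
    so actual usage is higher.
    """
    bytes_per_param = bits_per_param / 8
    # billions of parameters * bytes per parameter = billions of bytes = GB
    return num_params_billion * bytes_per_param


for bits in (16, 8, 4):
    print(f"Llama-2-7b at {bits}-bit: ~{weight_memory_gb(7, bits):.1f} GB of weights")
print(f"Llama-2-13b at 16-bit: ~{weight_memory_gb(13, 16):.1f} GB of weights")
```

For Llama-2-7b this gives about 14 GB at 16-bit, 7 GB at 8-bit, and 3.5 GB at 4-bit, which lines up with the 8 GB (bitsandbytes 8-bit) and 6 GB (GPTQ 4-bit) guidance once some runtime headroom is added.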

If you have multiple GPUs, the model is automatically loaded across them and the VRAM usage is split. This lets you run Llama-2-7b (which requires 14 GB of VRAM) on, for example, two GPUs with 11 GB of VRAM each.
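To illustrate how such a split can work, here is a simplified greedy placement: equal-sized layers fill the first GPU up to its budget, then spill onto the next. It assumes Llama-2-7b's 32 decoder layers share the ~14 GB evenly and ignores embeddings and headroom. Real loaders, such as Hugging Face Accelerate's `device_map="auto"` placement, account for more than this, so treat the numbers as illustrative only.

```python
from typing import List


def split_layers(num_layers: int, layer_gb: float, gpu_budgets_gb: List[float]) -> List[int]:
    """Greedily place equal-sized layers on GPUs in order; return layers per GPU.

    Simplified illustration only: embeddings, activations and reserved
    headroom are ignored.
    """
    counts = [0] * len(gpu_budgets_gb)
    gpu, used = 0, 0.0
    for _ in range(num_layers):
        # Advance to the next GPU once the current one cannot hold another layer.
        while gpu < len(gpu_budgets_gb) and used + layer_gb > gpu_budgets_gb[gpu]:
            gpu, used = gpu + 1, 0.0
        if gpu == len(gpu_budgets_gb):
            raise MemoryError("model does not fit in the given GPU budgets")
        counts[gpu] += 1
        used += layer_gb
    return counts


# Assumption: Llama-2-7b's 32 decoder layers share ~14 GB evenly.
print(split_layers(32, 14 / 32, [11, 11]))  # [25, 7]
```

With two 11 GB budgets this places 25 layers (about 10.9 GB) on the first GPU and the remaining 7 on the second, whereas two 6 GB cards would raise `MemoryError`.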

#### Run bitsandbytes 8 bit

If you do not have enough memory, set `LOAD_IN_8BIT` to `True` in `.env`. This reduces memory usage by around half, with slightly degraded model quality. It is compatible with the CPU, GPU, and Metal backends.

With 8-bit compression, Llama-2-7b can run on a single GPU with 8 GB of VRAM, such as an Nvidia RTX 2080 Ti, RTX 4080, T4, or V100 (16 GB).

#### Run GPTQ 4 bit

To run a 4-bit Llama-2 model such as `Llama-2-7b-Chat-GPTQ`, set `BACKEND_TYPE` to `gptq` in `.env`, as in the example `.env.7b_gptq_example`.

Make sure you have downloaded the 4-bit `Llama-2-7b-Chat-GPTQ` model and set `MODEL_PATH` and the other arguments in the `.env` file.

`Llama-2-7b-Chat-GPTQ` can run on a single GPU with 6 GB of VRAM.

If you encounter an error like `NameError: name 'autogptq_cuda_256' is not defined`, please refer to [this discussion](https://huggingface.co/TheBloke/open-llama-13b-open-instruct-GPTQ/discussions/1) or try installing a prebuilt AutoGPTQ wheel:

> pip install https://github.com/PanQiWei/AutoGPTQ/releases/download/v0.3.0/auto_gptq-0.3.0+cu117-cp310-cp310-linux_x86_64.whl
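That wheel is built for a specific environment: under the PEP 427 naming convention, `cp310-cp310-linux_x86_64` means CPython 3.10 on 64-bit Linux, and the `+cu117` local version label conventionally marks a CUDA 11.7 build. The sketch below (hypothetical helpers, standard library only) splits a wheel filename into these tags and warns if the running interpreter does not match.

```python
import sys
from typing import NamedTuple


class WheelTags(NamedTuple):
    name: str
    version: str
    python_tag: str
    abi_tag: str
    platform_tag: str


def parse_wheel_filename(filename: str) -> WheelTags:
    """Split a wheel filename into its PEP 427 parts.

    Layout: {name}-{version}(-{build})?-{python}-{abi}-{platform}.whl
    """
    if not filename.endswith(".whl"):
        raise ValueError(f"not a wheel file: {filename}")
    parts = filename[: -len(".whl")].split("-")
    if len(parts) not in (5, 6):  # 6 parts when the optional build tag is present
        raise ValueError(f"unexpected wheel filename: {filename}")
    python_tag, abi_tag, platform_tag = parts[-3:]
    return WheelTags(parts[0], parts[1], python_tag, abi_tag, platform_tag)


def current_cpython_tag() -> str:
    # Assumes CPython, e.g. "cp310" on Python 3.10.
    return f"cp{sys.version_info.major}{sys.version_info.minor}"


url = (
    "https://github.com/PanQiWei/AutoGPTQ/releases/download/v0.3.0/"
    "auto_gptq-0.3.0+cu117-cp310-cp310-linux_x86_64.whl"
)
tags = parse_wheel_filename(url.rsplit("/", 1)[-1])
print(tags)
if tags.python_tag != current_cpython_tag():
    print(f"warning: wheel targets {tags.python_tag}, but this interpreter is {current_cpython_tag()}")
```

If the tags do not match your setup, pip will refuse the wheel as unsupported on this platform, so pick a release asset built for your Python and CUDA versions instead.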

### Run on CPU

Running a Llama-2 model on CPU requires [llama.cpp](https://github.com/ggerganov/llama.cpp) and the [llama.cpp Python Bindings](https://github.com/abetlen/llama-cpp-python), which are already installed.

Download a GGML model such as `llama-2-7b-chat.ggmlv3.q4_0.bin` by following the [Download Llama-2 Models](#download-llama-2-models) section. The `llama-2-7b-chat.ggmlv3.q4_0.bin` model requires at least 6 GB of RAM to run on CPU.

Copy a config such as `.env.7b_ggmlv3_q4_0_example` from `env_examples` to `.env`.
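The `.env` file is a plain list of `KEY = "value"` lines that `app.py` loads with `python-dotenv`. To inspect one without extra dependencies, here is a simplified, hypothetical parser: it handles comments, blank lines, spaces around `=`, and surrounding quotes, but not inline comments, multi-line values, or variable expansion, which python-dotenv does support. The keys come from `app.py`; the sample values are illustrative and not copied from `.env.7b_ggmlv3_q4_0_example`.

```python
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY = "value" lines (hypothetical, simplified stand-in for python-dotenv)."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and full-line comments.
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a KEY = value line
        value = value.strip()
        # Drop one pair of matching surrounding quotes.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


# Illustrative llama.cpp setup; values are examples, not copied from env_examples.
sample = """
MODEL_PATH = "./models/llama-2-7b-chat.ggmlv3.q4_0.bin"
BACKEND_TYPE = "llama.cpp"
LOAD_IN_8BIT = False
# Example comment line
MAX_INPUT_TOKEN_LENGTH = 4000
"""

settings = parse_env(sample)
print(settings["BACKEND_TYPE"], int(settings["MAX_INPUT_TOKEN_LENGTH"]))
```

All values come back as strings, mirroring `os.getenv`; `app.py` converts them itself (for example with `int(...)` for the token limits).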

Run the web UI with `python app.py`.
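`app.py` also accepts command-line flags (`--model_path`, `--backend_type`, `--load_in_8bit`, `--share`), and a non-empty `--model_path` or `--backend_type` overrides the value from `.env`. One pitfall with boolean flags: declared with `type=bool`, argparse calls `bool("False")`, which is `True` because any non-empty string is truthy. A minimal sketch of a safer parser, using a hypothetical `str2bool` converter that accepts the same spellings as `distutils.util.strtobool`:

```python
import argparse
from typing import Optional


def str2bool(value: str) -> bool:
    """Hypothetical converter accepting the same spellings as distutils' strtobool."""
    lowered = value.strip().lower()
    if lowered in ("y", "yes", "t", "true", "on", "1"):
        return True
    if lowered in ("n", "no", "f", "false", "off", "0"):
        return False
    raise argparse.ArgumentTypeError(f"invalid boolean value: {value!r}")


def build_parser() -> argparse.ArgumentParser:
    # Flag names follow app.py; str2bool replaces type=bool for the boolean flags.
    parser = argparse.ArgumentParser()
    parser.add_argument("--model_path", type=str, default="")
    parser.add_argument("--backend_type", type=str, default="")
    parser.add_argument("--load_in_8bit", type=str2bool, default=False)
    parser.add_argument("--share", type=str2bool, default=False)
    return parser


def resolve(cli_value: str, env_value: Optional[str]) -> Optional[str]:
    # Same precedence as app.py: a non-empty CLI value wins over the .env value.
    return cli_value if cli_value != "" else env_value


args = build_parser().parse_args(["--load_in_8bit", "False", "--backend_type", "gptq"])
print(bool("False"))  # True: what type=bool would have produced
print(args.load_in_8bit)  # False
print(resolve(args.backend_type, "llama.cpp"))  # gptq
```

For example, `python app.py --backend_type gptq` selects the GPTQ backend regardless of `BACKEND_TYPE` in `.env`.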

#### Mac Metal Acceleration

On a Mac, you can also enable Metal acceleration by reinstalling these dependencies:

```bash
pip uninstall llama-cpp-python -y
CMAKE_ARGS="-DLLAMA_METAL=on" FORCE_CMAKE=1 pip install -U llama-cpp-python --no-cache-dir
pip install 'llama-cpp-python[server]'
```

For details, see:

- [MacOS Install with Metal GPU](https://github.com/abetlen/llama-cpp-python/blob/main/docs/install/macos.md)

#### AMD/Nvidia GPU Acceleration

To use an AMD or Nvidia GPU for acceleration, see:

- [Installation with OpenBLAS / cuBLAS / CLBlast / Metal](https://github.com/abetlen/llama-cpp-python#installation-with-openblas--cublas--clblast--metal)

## License

MIT - see [MIT License](LICENSE)

You are free to adapt this project, including for proprietary purposes, as long as you follow the terms of the MIT License.

## Contributing

Please read our [Contributing Guide](CONTRIBUTING.md) to learn about our development process.

### All Contributors

<a href="https://github.com/liltom-eth/llama2-webui/graphs/contributors">
  <img src="https://contrib.rocks/image?repo=liltom-eth/llama2-webui" />
</a>

### Review

<a href='https://github.com/repo-reviews/repo-reviews.github.io/blob/main/create.md' target="_blank"><img alt='Github' src='https://img.shields.io/badge/review-100000?style=flat&logo=Github&logoColor=white&labelColor=888888&color=555555'/></a>

### Star History

[Star History Chart](https://star-history.com/#liltom-eth/llama2-webui&Date)

## Credits

- [https://huggingface.co/meta-llama/Llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf)
- [https://huggingface.co/spaces/huggingface-projects/llama-2-7b-chat](https://huggingface.co/spaces/huggingface-projects/llama-2-7b-chat)
- [https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ](https://huggingface.co/TheBloke/Llama-2-7b-Chat-GPTQ)
- [https://github.com/ggerganov/llama.cpp](https://github.com/ggerganov/llama.cpp)
- [https://github.com/TimDettmers/bitsandbytes](https://github.com/TimDettmers/bitsandbytes)
- [https://github.com/PanQiWei/AutoGPTQ](https://github.com/PanQiWei/AutoGPTQ)
- [https://github.com/abetlen/llama-cpp-python](https://github.com/abetlen/llama-cpp-python)

app.py

ADDED

@@ -0,0 +1,418 @@

import os
import argparse
from typing import Iterator

import gradio as gr
from dotenv import load_dotenv
from distutils.util import strtobool

from llama2_wrapper import LLAMA2_WRAPPER

import logging

from prompts.utils import PromtsContainer


def main():
    parser = argparse.ArgumentParser()
    parser.add_argument("--model_path", type=str, default="", help="model path")
    parser.add_argument(
        "--backend_type",
        type=str,
        default="",
        help="Backend options: llama.cpp, gptq, transformers",
    )
    # argparse's type=bool turns any non-empty string (even "False") into True,
    # so boolean flags are parsed with strtobool instead.
    parser.add_argument(
        "--load_in_8bit",
        type=lambda s: bool(strtobool(s)),
        default=False,
        help="Whether to use bitsandbytes 8 bit.",
    )
    parser.add_argument(
        "--share",
        type=lambda s: bool(strtobool(s)),
        default=False,
        help="Whether to share public for gradio.",
    )
    args = parser.parse_args()

    load_dotenv()

    DEFAULT_SYSTEM_PROMPT = os.getenv("DEFAULT_SYSTEM_PROMPT", "")
    MAX_MAX_NEW_TOKENS = int(os.getenv("MAX_MAX_NEW_TOKENS", 2048))
    DEFAULT_MAX_NEW_TOKENS = int(os.getenv("DEFAULT_MAX_NEW_TOKENS", 1024))
    MAX_INPUT_TOKEN_LENGTH = int(os.getenv("MAX_INPUT_TOKEN_LENGTH", 4000))

    MODEL_PATH = os.getenv("MODEL_PATH")
    BACKEND_TYPE = os.getenv("BACKEND_TYPE")
    LOAD_IN_8BIT = bool(strtobool(os.getenv("LOAD_IN_8BIT", "True")))

    # Non-empty command-line values override the settings read from .env.
    if args.model_path != "":
        MODEL_PATH = args.model_path
    if args.backend_type != "":
        BACKEND_TYPE = args.backend_type
    if args.load_in_8bit:
        LOAD_IN_8BIT = True

    # Validate after the overrides so a CLI value can stand in for a missing .env entry.
    assert MODEL_PATH is not None, f"MODEL_PATH is required, got: {MODEL_PATH}"
    assert BACKEND_TYPE is not None, f"BACKEND_TYPE is required, got: {BACKEND_TYPE}"

    llama2_wrapper = LLAMA2_WRAPPER(
        model_path=MODEL_PATH,
        backend_type=BACKEND_TYPE,
        max_tokens=MAX_INPUT_TOKEN_LENGTH,
        load_in_8bit=LOAD_IN_8BIT,
        # verbose=True,
    )