Init

- .gitattributes +4 -0
- Community License for Baichuan2 Model.pdf +0 -0
- README.md +173 -1
- config.json +29 -0
- configuration_baichuan.py +48 -0
- generation_config.json +7 -0
- generation_utils.py +83 -0
- modeling_baichuan.py +826 -0
- pytorch_model-00001-of-00003.bin +3 -0
- pytorch_model-00002-of-00003.bin +3 -0
- pytorch_model-00003-of-00003.bin +3 -0
- pytorch_model.bin.index.json +290 -0
- quantizer.py +211 -0
- special_tokens_map.json +30 -0
- tokenization_baichuan.py +258 -0
- tokenizer.model +3 -0
- tokenizer_config.json +46 -0

.gitattributes

CHANGED

@@ -33,3 +33,7 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+pytorch_model-00002-of-00003.bin filter=lfs diff=lfs merge=lfs -text
+pytorch_model-00003-of-00003.bin filter=lfs diff=lfs merge=lfs -text
+pytorch_model-00001-of-00003.bin filter=lfs diff=lfs merge=lfs -text
+tokenizer.model filter=lfs diff=lfs merge=lfs -text
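
To see which files in this commit the hunk above routes through Git LFS, the patterns can be checked with Python's `fnmatch`. This is a sketch under two assumptions: only the seven patterns visible in the hunk are included (lines 1 to 32 of `.gitattributes` are not shown), and `fnmatch` on bare filenames only approximates gitattributes matching. `tracked_by_lfs` is a hypothetical helper.

```python
from fnmatch import fnmatch

# Only the patterns visible in this hunk; the full .gitattributes has more.
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "pytorch_model-00002-of-00003.bin",
    "pytorch_model-00003-of-00003.bin",
    "pytorch_model-00001-of-00003.bin",
    "tokenizer.model",
]


def tracked_by_lfs(filename: str) -> bool:
    """Approximate check of a bare filename against the patterns above.

    fnmatch ignores gitattributes details such as directory-relative
    patterns, '**' handling, and attribute unsetting.
    """
    return any(fnmatch(filename, pattern) for pattern in LFS_PATTERNS)


for name in ["pytorch_model-00001-of-00003.bin", "tokenizer.model", "config.json", "README.md"]:
    print(f"{name}: {'LFS' if tracked_by_lfs(name) else 'regular git'}")
```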

Community License for Baichuan2 Model.pdf

ADDED

Binary file (203 kB)

README.md

CHANGED

@@ -1,3 +1,175 @@
 ---
-
+language:
+- en
+- zh
+license: apache-2.0
+tasks:
+- text-generation
+datasets:
+- ehartford/dolphin
+- Open-Orca/OpenOrca
+- garage-bAInd/Open-Platypus
 ---
+
+<h1>speechless-baichuan2-dolphin-orca-platypus-13b</h1>
+Fine-tuned from baichuan-inc/Baichuan2-13B-Base on the Dolphin, OpenOrca, and Open-Platypus datasets.
+
+| Metric | Value |
+| --- | --- |
+| ARC | |
+| HellaSwag | |
+| MMLU | |
+| TruthfulQA | |
+| Average | |
+
+<!-- markdownlint-disable first-line-h1 -->
+<!-- markdownlint-disable html -->
+<div align="center">
+<h1>
+  Baichuan 2
+</h1>
+</div>
+
+<div align="center">
+<a href="https://github.com/baichuan-inc/Baichuan2" target="_blank">🦉GitHub</a> | <a href="https://github.com/baichuan-inc/Baichuan-7B/blob/main/media/wechat.jpeg?raw=true" target="_blank">💬WeChat</a>
+</div>
+<div align="center">
+🚀 The <a href="https://www.baichuan-ai.com/" target="_blank">Baichuan online chat platform</a> is now officially open to the public 🎉
+</div>
+
+# Table of Contents
+
+- [📖 Introduction](#Introduction)
+- [⚙️ Quick Start](#Start)
+- [📊 Benchmark Evaluation](#Benchmark)
+- [📜 Terms and Conditions](#Terms)
+
+
+# <span id="Introduction">Introduction</span>
+
+Baichuan 2 is the new generation of large-scale open-source language models launched by [Baichuan Intelligent Technology](https://www.baichuan-ai.com/).
+It is trained on a high-quality corpus of 2.6 trillion tokens and achieves the best performance among models of its size on authoritative Chinese and English benchmarks.
+This release includes Base and Chat models at 7B and 13B, along with 4-bit quantized versions of the Chat models.
+All versions are fully open for academic research, and developers may also use them commercially free of charge after obtaining an official commercial license via [email request](mailto:opensource@baichuan-inc.com).
+The released versions and their download links are listed below:
+
+| | Base Model | Chat Model | 4-bit Quantized Chat Model |
+|:---:|:--------------------:|:--------------------:|:--------------------------:|
+| 7B | [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) | [Baichuan2-7B-Chat](https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat) | [Baichuan2-7B-Chat-4bits](https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat-4bits) |
+| 13B | [Baichuan2-13B-Base](https://huggingface.co/baichuan-inc/Baichuan2-13B-Base) | [Baichuan2-13B-Chat](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat) | [Baichuan2-13B-Chat-4bits](https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat-4bits) |
+
+# <span id="Start">Quick Start</span>
+
+The Baichuan 2 series uses `F.scaled_dot_product_attention`, a feature introduced in PyTorch 2.0, to speed up inference, so the models must be run with PyTorch 2.0 or later.
+
+
+```python
+import torch
+from transformers import AutoModelForCausalLM, AutoTokenizer
+tokenizer = AutoTokenizer.from_pretrained("baichuan-inc/Baichuan2-13B-Base", use_fast=False, trust_remote_code=True)
+model = AutoModelForCausalLM.from_pretrained("baichuan-inc/Baichuan2-13B-Base", device_map="auto", trust_remote_code=True)
+inputs = tokenizer('登鹳雀楼->王之涣\n夜雨寄北->', return_tensors='pt')
+inputs = inputs.to('cuda:0')
+pred = model.generate(**inputs, max_new_tokens=64, repetition_penalty=1.1)
+print(tokenizer.decode(pred.cpu()[0], skip_special_tokens=True))
+```
+
+# <span id="Benchmark">Benchmark Evaluation</span>
+
+We have extensively tested the model on authoritative Chinese and English datasets across six domains: [General](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#general-domain), [Legal](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#law-and-medicine), [Medical](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#law-and-medicine), [Mathematics](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#mathematics-and-code), [Code](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#mathematics-and-code), and [Multilingual Translation](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md#multilingual-translation). For more detailed evaluation results, please refer to [GitHub](https://github.com/baichuan-inc/Baichuan2/blob/main/README_EN.md).
+
+### 7B Model Results
+
+| | **C-Eval** | **MMLU** | **CMMLU** | **Gaokao** | **AGIEval** | **BBH** |
+|:-----------------------:|:----------:|:--------:|:---------:|:----------:|:-----------:|:-------:|
+| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot | 3-shot |
+| **GPT-4** | 68.40 | 83.93 | 70.33 | 66.15 | 63.27 | 75.12 |
+| **GPT-3.5 Turbo** | 51.10 | 68.54 | 54.06 | 47.07 | 46.13 | 61.59 |
+| **LLaMA-7B** | 27.10 | 35.10 | 26.75 | 27.81 | 28.17 | 32.38 |
+| **LLaMA2-7B** | 28.90 | 45.73 | 31.38 | 25.97 | 26.53 | 39.16 |
+| **MPT-7B** | 27.15 | 27.93 | 26.00 | 26.54 | 24.83 | 35.20 |
+| **Falcon-7B** | 24.23 | 26.03 | 25.66 | 24.24 | 24.10 | 28.77 |
+| **ChatGLM2-6B** | 50.20 | 45.90 | 49.00 | 49.44 | 45.28 | 31.65 |
+| **[Baichuan-7B]** | 42.80 | 42.30 | 44.02 | 36.34 | 34.44 | 32.48 |
+| **[Baichuan2-7B-Base]** | 54.00 | 54.16 | 57.07 | 47.47 | 42.73 | 41.56 |
+
+### 13B Model Results
+
+| | **C-Eval** | **MMLU** | **CMMLU** | **Gaokao** | **AGIEval** | **BBH** |
+|:---------------------------:|:----------:|:--------:|:---------:|:----------:|:-----------:|:-------:|
+| | 5-shot | 5-shot | 5-shot | 5-shot | 5-shot | 3-shot |
+| **GPT-4** | 68.40 | 83.93 | 70.33 | 66.15 | 63.27 | 75.12 |
+| **GPT-3.5 Turbo** | 51.10 | 68.54 | 54.06 | 47.07 | 46.13 | 61.59 |
+| **LLaMA-13B** | 28.50 | 46.30 | 31.15 | 28.23 | 28.22 | 37.89 |
+| **LLaMA2-13B** | 35.80 | 55.09 | 37.99 | 30.83 | 32.29 | 46.98 |
+| **Vicuna-13B** | 32.80 | 52.00 | 36.28 | 30.11 | 31.55 | 43.04 |
+| **Chinese-Alpaca-Plus-13B** | 38.80 | 43.90 | 33.43 | 34.78 | 35.46 | 28.94 |
+| **XVERSE-13B** | 53.70 | 55.21 | 58.44 | 44.69 | 42.54 | 38.06 |
+| **[Baichuan-13B-Base]** | 52.40 | 51.60 | 55.30 | 49.69 | 43.20 | 43.01 |
+| **[Baichuan2-13B-Base]** | 58.10 | 59.17 | 61.97 | 54.33 | 48.17 | 48.78 |
+
+
| 120 |

+

## 训练过程模型/Training Dynamics

|

| 121 |

+

|

| 122 |

+

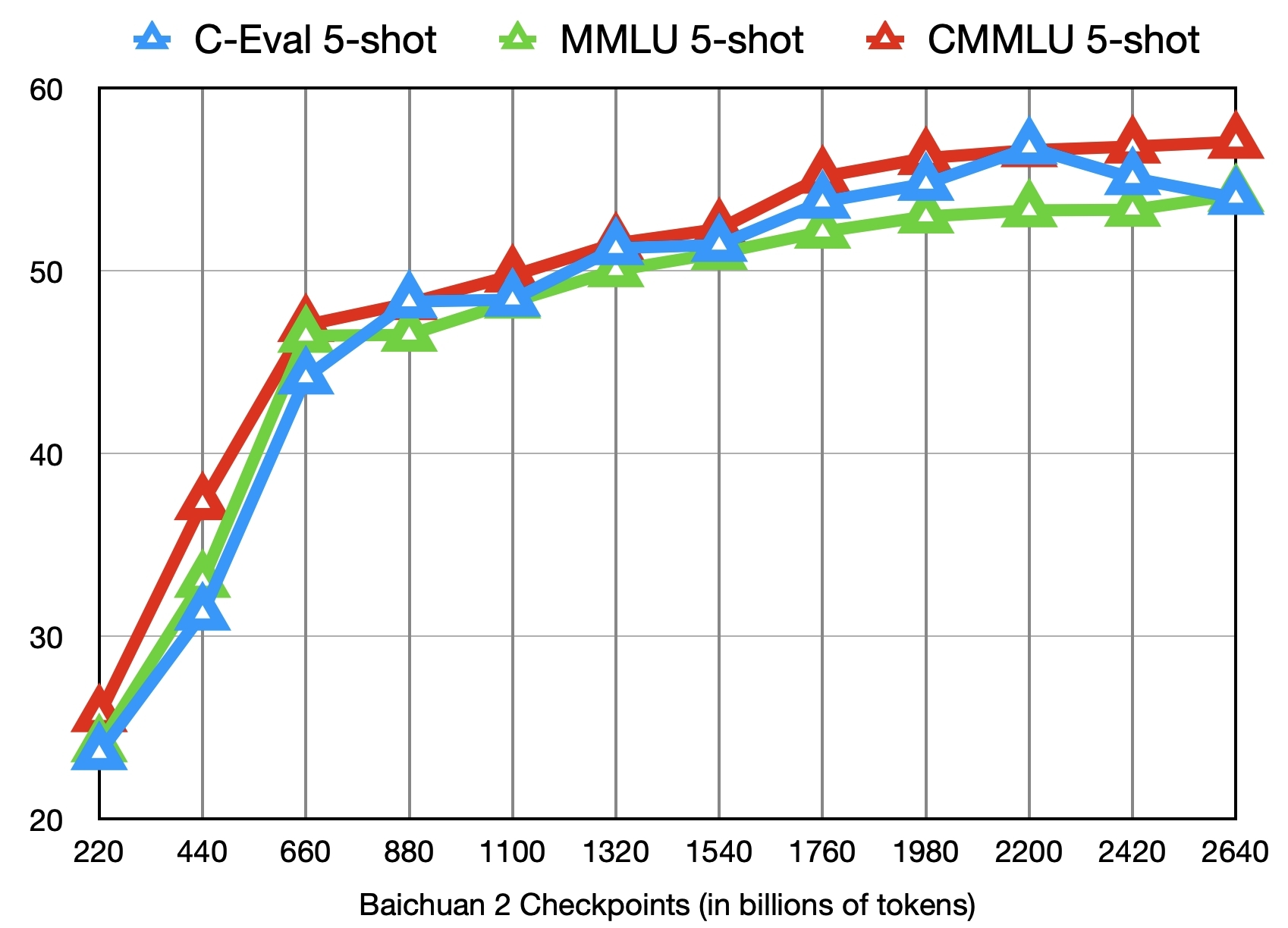

除了训练了 2.6 万亿 Tokens 的 [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) 模型,我们还提供了在此之前的另外 11 个中间过程的模型(分别对应训练了约 0.2 ~ 2.4 万亿 Tokens)供社区研究使用

|

| 123 |

+

([训练过程checkpoint下载](https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints))。下图给出了这些 checkpoints 在 C-Eval、MMLU、CMMLU 三个 benchmark 上的效果变化:

|

| 124 |

+

|

| 125 |

+

In addition to the [Baichuan2-7B-Base](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base) model trained on 2.6 trillion tokens, we also offer 11 additional intermediate-stage models for community research, corresponding to training on approximately 0.2 to 2.4 trillion tokens each ([Intermediate Checkpoints Download](https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints)). The graph below shows the performance changes of these checkpoints on three benchmarks: C-Eval, MMLU, and CMMLU.

|

| 126 |

+

|

| 127 |

+

|

| 128 |

+

|

+# <span id="Terms">Terms and Conditions</span>
+
+## Disclaimer
+
+We hereby declare that our team has not developed any applications based on Baichuan 2 models, whether on iOS, Android, the web, or any other platform. We strongly urge all users not to use Baichuan 2 models for any activities that harm national or social security or that violate the law. We also ask users not to use Baichuan 2 models for Internet services that have not undergone appropriate security review and filing. We hope that all users will abide by these principles to ensure that technology develops in a regulated and legal environment.
+
+We have done our best to ensure the compliance of the data used in model training. However, despite our considerable efforts, unforeseeable issues may still exist due to the complexity of the model and data. Therefore, we will not assume any responsibility for problems arising from the use of the Baichuan 2 open-source models, including but not limited to data security issues, public opinion risks, or any risks and problems caused by the model being misled, abused, disseminated, or improperly exploited.
+
+## License
+
+The source code in this repository is licensed under Apache 2.0. Community use of the Baichuan 2 model must comply with the [Community License for Baichuan 2 Model](https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/blob/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf). Baichuan 2 supports commercial use. If you use the Baichuan 2 models or their derivatives for commercial purposes, please register with the licensor and apply for written authorization by emailing opensource@baichuan-inc.com.
+
+[GitHub]:https://github.com/baichuan-inc/Baichuan2
+[Baichuan2]:https://github.com/baichuan-inc/Baichuan2
+
+[Baichuan-7B]:https://huggingface.co/baichuan-inc/Baichuan-7B
+[Baichuan2-7B-Base]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Base
+[Baichuan2-7B-Chat]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat
+[Baichuan2-7B-Chat-4bits]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Chat-4bits
+[Baichuan-13B-Base]:https://huggingface.co/baichuan-inc/Baichuan-13B-Base
+[Baichuan2-13B-Base]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Base
+[Baichuan2-13B-Chat]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat
+[Baichuan2-13B-Chat-4bits]:https://huggingface.co/baichuan-inc/Baichuan2-13B-Chat-4bits
+
+[通用]:https://github.com/baichuan-inc/Baichuan2#%E9%80%9A%E7%94%A8%E9%A2%86%E5%9F%9F
+[法律]:https://github.com/baichuan-inc/Baichuan2#%E6%B3%95%E5%BE%8B%E5%8C%BB%E7%96%97
+[医疗]:https://github.com/baichuan-inc/Baichuan2#%E6%B3%95%E5%BE%8B%E5%8C%BB%E7%96%97
+[数学]:https://github.com/baichuan-inc/Baichuan2#%E6%95%B0%E5%AD%A6%E4%BB%A3%E7%A0%81
+[代码]:https://github.com/baichuan-inc/Baichuan2#%E6%95%B0%E5%AD%A6%E4%BB%A3%E7%A0%81
+[多语言翻译]:https://github.com/baichuan-inc/Baichuan2#%E5%A4%9A%E8%AF%AD%E8%A8%80%E7%BF%BB%E8%AF%91
+
+[《Baichuan 2 模型社区许可协议》]:https://huggingface.co/baichuan-inc/Baichuan2-7B-Base/blob/main/Baichuan%202%E6%A8%A1%E5%9E%8B%E7%A4%BE%E5%8C%BA%E8%AE%B8%E5%8F%AF%E5%8D%8F%E8%AE%AE.pdf
+
+[邮件申请]: mailto:opensource@baichuan-inc.com
+[Email]: mailto:opensource@baichuan-inc.com
+[opensource@baichuan-inc.com]: mailto:opensource@baichuan-inc.com
+[训练过程checkpoint下载]: https://huggingface.co/baichuan-inc/Baichuan2-7B-Intermediate-Checkpoints
+[百川智能]: https://www.baichuan-ai.com
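
The README's 13B table reports per-benchmark scores without an aggregate. For a rough side-by-side, the snippet below copies the Baichuan-13B-Base and Baichuan2-13B-Base rows from that table and prints per-benchmark differences plus an unweighted mean. The mean is only an illustrative summary, not a metric reported by the authors, and it mixes the 5-shot settings with the 3-shot BBH setting.

```python
# Scores copied from the 13B table in the README diff above.
BENCHMARKS = ["C-Eval", "MMLU", "CMMLU", "Gaokao", "AGIEval", "BBH"]
SCORES = {
    "Baichuan-13B-Base": [52.40, 51.60, 55.30, 49.69, 43.20, 43.01],
    "Baichuan2-13B-Base": [58.10, 59.17, 61.97, 54.33, 48.17, 48.78],
}


def mean(values):
    """Unweighted arithmetic mean (illustrative only)."""
    return sum(values) / len(values)


old = SCORES["Baichuan-13B-Base"]
new = SCORES["Baichuan2-13B-Base"]
for name, a, b in zip(BENCHMARKS, old, new):
    # Per-benchmark change, signed to two decimals.
    print(f"{name:8s} {a:6.2f} -> {b:6.2f} ({b - a:+.2f})")
print(f"unweighted mean: {mean(old):.2f} -> {mean(new):.2f}")
```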

config.json

ADDED

@@ -0,0 +1,29 @@
+{
+  "_from_model_config": true,
+  "_name_or_path": "/opt/local/llm_models/huggingface.co/baichuan-inc/Baichuan2-13B-Base",
+  "architectures": [
+    "BaichuanForCausalLM"
+  ],
+  "auto_map": {
+    "AutoConfig": "configuration_baichuan.BaichuanConfig",
+    "AutoModelForCausalLM": "modeling_baichuan.BaichuanForCausalLM"
+  },
+  "bos_token_id": 1,
+  "eos_token_id": 2,
+  "hidden_act": "silu",
+  "hidden_size": 5120,
+  "initializer_range": 0.02,
+  "intermediate_size": 13696,
+  "model_max_length": 4096,
+  "model_type": "baichuan",
+  "num_attention_heads": 40,
+  "num_hidden_layers": 40,
+  "pad_token_id": 0,
+  "rms_norm_eps": 1e-06,
+  "tie_word_embeddings": false,
+  "torch_dtype": "float16",
+  "transformers_version": "4.32.1",
+  "use_cache": true,
+  "vocab_size": 125696,
+  "z_loss_weight": 0
+}
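
The sizes in `config.json` are enough for a back-of-the-envelope parameter count. This sketch assumes a LLaMA-style decoder block: a fused Q/K/V projection plus an output projection, a gated SiLU MLP with three weight matrices, two RMSNorm weight vectors per layer, no bias terms, and no learned positional-embedding parameters. Those architectural details are not visible in the excerpt of `modeling_baichuan.py` shown here, so treat them as assumptions; `estimate_params` is a hypothetical helper.

```python
# Values taken from config.json in this commit.
CONFIG = {
    "hidden_size": 5120,
    "intermediate_size": 13696,
    "num_attention_heads": 40,
    "num_hidden_layers": 40,
    "vocab_size": 125696,
    "tie_word_embeddings": False,
}


def estimate_params(cfg: dict) -> int:
    """Rough parameter count under the LLaMA-style assumptions stated above."""
    h = cfg["hidden_size"]
    ff = cfg["intermediate_size"]
    attention = 4 * h * h  # fused Q/K/V (3*h*h) plus output projection (h*h), no biases
    mlp = 3 * h * ff       # gate, up, and down projections, no biases
    norms = 2 * h          # two RMSNorm weight vectors per layer
    per_layer = attention + mlp + norms
    embeddings = cfg["vocab_size"] * h
    # Untied embeddings mean the output head has its own vocab x hidden matrix.
    lm_head = 0 if cfg["tie_word_embeddings"] else cfg["vocab_size"] * h
    final_norm = h
    return cfg["num_hidden_layers"] * per_layer + embeddings + lm_head + final_norm


head_dim = CONFIG["hidden_size"] // CONFIG["num_attention_heads"]
print("head_dim:", head_dim)                       # 128
print(f"parameters: {estimate_params(CONFIG):,}")  # 13,896,668,160
```

Roughly 1.29B of the total sits in the input embedding and the untied output head, because the 125,696-entry vocabulary is large relative to the hidden size.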

configuration_baichuan.py

ADDED

@@ -0,0 +1,48 @@
+# Copyright (c) 2023, Baichuan Intelligent Technology. All rights reserved.
+
+from transformers.configuration_utils import PretrainedConfig
+
+
+class BaichuanConfig(PretrainedConfig):
+    model_type = "baichuan"
+    keys_to_ignore_at_inference = ["past_key_values"]
+
+    def __init__(
+        self,
+        vocab_size=64000,
+        hidden_size=5120,
+        intermediate_size=13696,
+        num_hidden_layers=40,
+        num_attention_heads=40,
+        hidden_act="silu",
+        model_max_length=4096,
+        initializer_range=0.02,
+        rms_norm_eps=1e-6,
+        use_cache=True,
+        pad_token_id=0,
+        bos_token_id=1,
+        eos_token_id=2,
+        tie_word_embeddings=False,
+        gradient_checkpointing=False,
+        z_loss_weight=0,
+        **kwargs,
+    ):
+        self.vocab_size = vocab_size
+        self.model_max_length = model_max_length
+        self.hidden_size = hidden_size
+        self.intermediate_size = intermediate_size
+        self.num_hidden_layers = num_hidden_layers
+        self.num_attention_heads = num_attention_heads
+        self.hidden_act = hidden_act
+        self.initializer_range = initializer_range
+        self.rms_norm_eps = rms_norm_eps
+        self.use_cache = use_cache
+        self.z_loss_weight = z_loss_weight
+        self.gradient_checkpointing = (gradient_checkpointing,)
+        super().__init__(
+            pad_token_id=pad_token_id,
+            bos_token_id=bos_token_id,
+            eos_token_id=eos_token_id,
+            tie_word_embeddings=tie_word_embeddings,
+            **kwargs,
+        )
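
In `configuration_baichuan.py` as committed, `self.gradient_checkpointing = (gradient_checkpointing,)` has a trailing comma, so the attribute holds a one-element tuple rather than a boolean, and a truthiness check on it is `True` even when the flag is `False`. The minimal sketch below uses two hypothetical stand-in classes (not the real `BaichuanConfig`) to show the difference. Whether `modeling_baichuan.py` reads this config attribute is not visible in the excerpt shown here.

```python
class ConfigAsCommitted:
    def __init__(self, gradient_checkpointing=False):
        # Mirrors the committed line: the trailing comma builds a 1-tuple.
        self.gradient_checkpointing = (gradient_checkpointing,)


class ConfigFixed:
    def __init__(self, gradient_checkpointing=False):
        # Stores the boolean itself.
        self.gradient_checkpointing = gradient_checkpointing


committed = ConfigAsCommitted(gradient_checkpointing=False)
fixed = ConfigFixed(gradient_checkpointing=False)
print(committed.gradient_checkpointing, bool(committed.gradient_checkpointing))  # (False,) True
print(fixed.gradient_checkpointing, bool(fixed.gradient_checkpointing))          # False False
```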

generation_config.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "_from_model_config": true,
+  "bos_token_id": 1,
+  "eos_token_id": 2,
+  "pad_token_id": 0,
+  "transformers_version": "4.32.1"
+}

generation_utils.py

ADDED

@@ -0,0 +1,83 @@
+from typing import List
+from queue import Queue
+
+import torch
+
+
+def build_chat_input(model, tokenizer, messages: List[dict], max_new_tokens: int=0):
+    def _parse_messages(messages, split_role="user"):
+        system, rounds = "", []
+        round = []
+        for i, message in enumerate(messages):
+            if message["role"] == "system":
+                assert i == 0
+                system = message["content"]
+                continue
+            if message["role"] == split_role and round:
+                rounds.append(round)
+                round = []
+            round.append(message)
+        if round:
+            rounds.append(round)
+        return system, rounds
+
+    max_new_tokens = max_new_tokens or model.generation_config.max_new_tokens
+    max_input_tokens = model.config.model_max_length - max_new_tokens
+    system, rounds = _parse_messages(messages, split_role="user")
+    system_tokens = tokenizer.encode(system)
+    max_history_tokens = max_input_tokens - len(system_tokens)
+
+    history_tokens = []
+    for round in rounds[::-1]:
+        round_tokens = []
+        for message in round:
+            if message["role"] == "user":
+                round_tokens.append(model.generation_config.user_token_id)
+            else:
+                round_tokens.append(model.generation_config.assistant_token_id)
+            round_tokens.extend(tokenizer.encode(message["content"]))
+        if len(history_tokens) == 0 or len(history_tokens) + len(round_tokens) <= max_history_tokens:
+            history_tokens = round_tokens + history_tokens  # concat left
+            if len(history_tokens) < max_history_tokens:
+                continue
+        break
+
+    input_tokens = system_tokens + history_tokens
+    if messages[-1]["role"] != "assistant":
+        input_tokens.append(model.generation_config.assistant_token_id)
+    input_tokens = input_tokens[-max_input_tokens:]  # truncate left
+    return torch.LongTensor([input_tokens]).to(model.device)
+
+
+class TextIterStreamer:
+    def __init__(self, tokenizer, skip_prompt=False, skip_special_tokens=False):
+        self.tokenizer = tokenizer
+        self.skip_prompt = skip_prompt
+        self.skip_special_tokens = skip_special_tokens
+        self.tokens = []
+        self.text_queue = Queue()
+        self.next_tokens_are_prompt = True
+
+    def put(self, value):
+        if self.skip_prompt and self.next_tokens_are_prompt:
+            self.next_tokens_are_prompt = False
+        else:
+            if len(value.shape) > 1:
+                value = value[0]
+            self.tokens.extend(value.tolist())
+            self.text_queue.put(
+                self.tokenizer.decode(self.tokens, skip_special_tokens=self.skip_special_tokens))
+
+    def end(self):
+        self.text_queue.put(None)
+
+    def __iter__(self):
+        return self
+
+    def __next__(self):
+        value = self.text_queue.get()
+        if value is None:
+            raise StopIteration()
+        else:
+            return value
+
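
`build_chat_input` keeps whole conversation rounds, newest first, while they fit in `model_max_length - max_new_tokens`, then appends the assistant token and truncates from the left. The sketch below reproduces that logic without a model or tokenizer so it can run on its own: `encode` stands in for `tokenizer.encode`, and the role ids are passed explicitly. The ids 195 and 196 are placeholders, not values from this commit: the `generation_config.json` added here sets only `bos_token_id`, `eos_token_id`, and `pad_token_id`, so `user_token_id`, `assistant_token_id`, and `max_new_tokens` would have to be supplied some other way for `build_chat_input` to run on this checkpoint. `build_chat_tokens` and `toy_encode` are hypothetical names.

```python
from typing import Callable, Dict, List


def build_chat_tokens(
    messages: List[Dict[str, str]],
    encode: Callable[[str], List[int]],
    user_token_id: int,
    assistant_token_id: int,
    max_input_tokens: int,
) -> List[int]:
    """Pure-Python mirror of the round-based truncation in build_chat_input."""
    # Split the conversation into rounds; a new round starts at each user message.
    system, rounds, current = "", [], []
    for i, message in enumerate(messages):
        if message["role"] == "system":
            assert i == 0, "a system message may only appear first"
            system = message["content"]
            continue
        if message["role"] == "user" and current:
            rounds.append(current)
            current = []
        current.append(message)
    if current:
        rounds.append(current)

    system_tokens = encode(system)
    max_history_tokens = max_input_tokens - len(system_tokens)

    # Walk rounds from newest to oldest, keeping whole rounds while they fit.
    # The newest round is always kept, even if it alone exceeds the budget.
    history: List[int] = []
    for rnd in reversed(rounds):
        round_tokens: List[int] = []
        for message in rnd:
            role_id = user_token_id if message["role"] == "user" else assistant_token_id
            round_tokens.append(role_id)
            round_tokens.extend(encode(message["content"]))
        if not history or len(history) + len(round_tokens) <= max_history_tokens:
            history = round_tokens + history
            if len(history) < max_history_tokens:
                continue
        break

    tokens = system_tokens + history
    # Cue the model to answer unless the last turn is already the assistant's.
    if messages[-1]["role"] != "assistant":
        tokens.append(assistant_token_id)
    # Keep only the most recent max_input_tokens ids (left truncation).
    return tokens[-max_input_tokens:]


def toy_encode(text: str) -> List[int]:
    # Hypothetical stand-in for tokenizer.encode: one id per character.
    return [ord(ch) for ch in text]


USER, ASSISTANT = 195, 196  # placeholder role ids
print(build_chat_tokens([{"role": "user", "content": "hi"}], toy_encode, USER, ASSISTANT, 100))
# [195, 104, 105, 196]
```

Because the newest round is always accepted, a single over-long message is kept and then cut from the left, so the start of that message is dropped rather than its most recent text.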

modeling_baichuan.py

ADDED

|

@@ -0,0 +1,826 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

# Copyright (c) 2023, Baichuan Intelligent Technology. All rights reserved.

from .configuration_baichuan import BaichuanConfig
from .generation_utils import build_chat_input, TextIterStreamer

import math
from threading import Thread
from typing import List, Optional, Tuple, Union

import torch
from torch import nn
from torch.nn import CrossEntropyLoss
from torch.nn import functional as F
from transformers import PreTrainedModel, PretrainedConfig
from transformers.activations import ACT2FN
from transformers.generation.utils import GenerationConfig
from transformers.modeling_outputs import BaseModelOutputWithPast, CausalLMOutputWithPast
from transformers.utils import logging, ContextManagers

import os
from contextlib import contextmanager
from accelerate import init_empty_weights

logger = logging.get_logger(__name__)

try:
    from xformers import ops as xops
except ImportError:
    xops = None
    logger.warning(
        "Xformers is not installed correctly. If you want to use memory_efficient_attention to accelerate training use the following command to install Xformers\npip install xformers."
    )


def _get_interleave(n):
    def _get_interleave_power_of_2(n):
        start = 2 ** (-(2 ** -(math.log2(n) - 3)))
        ratio = start
        return [start * ratio**i for i in range(n)]

    if math.log2(n).is_integer():
        return _get_interleave_power_of_2(n)
    else:
        closest_power_of_2 = 2 ** math.floor(math.log2(n))
        return (
            _get_interleave_power_of_2(closest_power_of_2)
            + _get_interleave(2 * closest_power_of_2)[0::2][: n - closest_power_of_2]
        )
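`_get_interleave` produces one ALiBi slope per attention head.

- **Power-of-two head count `n`:** the inner helper returns the geometric sequence 2^(-8/n), 2^(-16/n), ..., 2^(-8). This follows because `2 ** -(math.log2(n) - 3)` equals 8/n.
- **Other head counts:** it takes the slopes for the largest power of two below `n`. It then fills the remaining heads with every other slope from the schedule for twice that many heads.

The standalone sketch below re-implements the same recurrence in plain Python (no torch) so the values can be inspected. The function name `alibi_slopes` is hypothetical and not part of this file.

```python
import math
from typing import List


def alibi_slopes(n: int) -> List[float]:
    """Per-head ALiBi slopes, following the same recurrence as _get_interleave."""

    def power_of_2_slopes(m: int) -> List[float]:
        # First term and ratio are both 2 ** (-8 / m), so the last slope is 2 ** -8.
        start = 2 ** (-(2 ** -(math.log2(m) - 3)))
        return [start * start**i for i in range(m)]

    if math.log2(n).is_integer():
        return power_of_2_slopes(n)
    closest = 2 ** math.floor(math.log2(n))
    # Extra heads reuse every other slope from the schedule for 2 * closest heads.
    return power_of_2_slopes(closest) + alibi_slopes(2 * closest)[0::2][: n - closest]


if __name__ == "__main__":
    print(alibi_slopes(8))        # halves from 0.5 down to 1/256
    print(len(alibi_slopes(40)))  # a non-power-of-two head count
```

Interleaving the extra heads from the denser schedule keeps the non-power-of-two case close to an evenly spread set of slopes.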



def _fill_with_neg_inf(t):
    """FP16-compatible function that fills a tensor with -inf."""
    return t.float().fill_(float("-inf")).type_as(t)


def _buffered_future_mask(tensor, maxpos, alibi, attn_heads):
    _future_mask = torch.triu(_fill_with_neg_inf(torch.zeros([maxpos, maxpos])), 1)
    _future_mask = _future_mask.unsqueeze(0) + alibi
    new_future_mask = _future_mask.to(tensor)
    return new_future_mask[: tensor.shape[0] * attn_heads, :maxpos, :maxpos]


def _gen_alibi_mask(tensor, n_head, max_pos):
    slopes = torch.Tensor(_get_interleave(n_head))
    position_point = torch.arange(max_pos) - max_pos + 1
    position_point = position_point.unsqueeze(0).unsqueeze(0).expand(n_head, -1, -1)
    diag = torch.diag(position_point[0])
    position_point = position_point - diag.unsqueeze(0).unsqueeze(0).transpose(-1, -2)
    alibi = slopes.unsqueeze(1).unsqueeze(1) * position_point
    alibi = alibi.view(n_head, 1, max_pos)
    alibi_mask = torch.triu(_fill_with_neg_inf(torch.zeros([max_pos, max_pos])), 1)
    alibi_mask = alibi_mask.unsqueeze(0) + alibi
    return alibi_mask
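Tracing the shapes in `_gen_alibi_mask`:

1. `position_point` starts as -(max_pos - 1), ..., 0.
2. After the `diag` subtraction it becomes 0, 1, ..., max_pos - 1.
3. So head h, query i and key j receive the bias slope_h * j when j <= i, and -inf for future keys.

Textbook ALiBi instead uses -slope * (i - j). The two differ only by slope * i, which is constant along each query row, so softmax should give the same attention weights.

The plain-Python sketch below checks that numerically for one head. All function names in it are hypothetical, and the comparison reflects my reading of the tensor code rather than anything stated by the authors.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    # Numerically stable softmax; -inf entries (masked keys) become exactly 0.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def baichuan_style_bias(slope: float, max_pos: int) -> Matrix:
    # Entry [i][j] = slope * j for j <= i, and -inf for future keys (j > i).
    return [[slope * j if j <= i else -math.inf for j in range(max_pos)] for i in range(max_pos)]


def standard_alibi_bias(slope: float, max_pos: int) -> Matrix:
    # Textbook ALiBi: penalise distance, entry [i][j] = -slope * (i - j) for j <= i.
    return [[-slope * (i - j) if j <= i else -math.inf for j in range(max_pos)] for i in range(max_pos)]


def attention_probs(scores: Matrix, bias: Matrix) -> Matrix:
    # Add the bias row-wise to raw query-key scores, then normalise each row.
    return [softmax([s + b for s, b in zip(srow, brow)]) for srow, brow in zip(scores, bias)]


if __name__ == "__main__":
    slope, n = 0.25, 4
    scores = [[0.1 * (i + 2 * j) for j in range(n)] for i in range(n)]  # arbitrary scores
    a = attention_probs(scores, baichuan_style_bias(slope, n))
    b = attention_probs(scores, standard_alibi_bias(slope, n))
    print(max(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)))
```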



class RMSNorm(torch.nn.Module):
    def __init__(self, hidden_size, epsilon=1e-6):
        super().__init__()
        self.weight = torch.nn.Parameter(torch.empty(hidden_size))
        self.epsilon = epsilon

    def forward(self, hidden_states):
        variance = hidden_states.to(torch.float32).pow(2).mean(-1, keepdim=True)
        hidden_states = hidden_states * torch.rsqrt(variance + self.epsilon)

        # convert into half-precision
        if self.weight.dtype in [torch.float16, torch.bfloat16]:
            hidden_states = hidden_states.to(self.weight.dtype)

        return self.weight * hidden_states
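`RMSNorm` scales each hidden vector by the reciprocal root mean square of its entries, then multiplies by a learned per-channel weight. Unlike LayerNorm it subtracts no mean and adds no bias. The variance is computed in float32, and the result is cast back only when the weight is float16 or bfloat16.

A minimal list-based sketch of the same arithmetic follows. `rms_norm` is a hypothetical name, and the dtype handling is omitted.

```python
import math
from typing import List


def rms_norm(x: List[float], weight: List[float], epsilon: float = 1e-6) -> List[float]:
    # Mean of squares over the hidden dimension (no mean subtraction, unlike LayerNorm).
    variance = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(variance + epsilon)
    # Element-wise learned scale, as in `self.weight * hidden_states`.
    return [w * v * inv_rms for w, v in zip(weight, x)]


if __name__ == "__main__":
    out = rms_norm([3.0, 4.0], [1.0, 1.0], epsilon=0.0)
    print(out)  # output has unit root mean square
```

With unit weights and `epsilon=0`, the output has a root mean square of exactly 1 and is invariant to rescaling the input. `epsilon` only guards against division by zero for near-zero vectors.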



class MLP(torch.nn.Module):
    def __init__(
        self,
        hidden_size: int,
        intermediate_size: int,
        hidden_act: str,
    ):
        super().__init__()
        self.gate_proj = torch.nn.Linear(hidden_size, intermediate_size, bias=False)
        self.down_proj = torch.nn.Linear(intermediate_size, hidden_size, bias=False)
        self.up_proj = torch.nn.Linear(hidden_size, intermediate_size, bias=False)
        self.act_fn = ACT2FN[hidden_act]

    def forward(self, x):
        return self.down_proj(self.act_fn(self.gate_proj(x)) * self.up_proj(x))
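`MLP.forward` is a gated feed-forward block: `down_proj(act(gate_proj(x)) * up_proj(x))`, with all three projections bias-free.

The activation comes from `config.hidden_act`, which lives in config.json and is not shown in this section. The sketch below assumes it resolves to SiLU, which would make this a SwiGLU block. It uses plain nested lists, and every name in it is hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]  # shape (out_features, in_features), like nn.Linear.weight


def linear(weight: Matrix, x: List[float]) -> List[float]:
    # y = W x with no bias term, matching nn.Linear(..., bias=False).
    return [sum(w * v for w, v in zip(row, x)) for row in weight]


def silu(v: float) -> float:
    # SiLU / swish: v * sigmoid(v). Assumed activation, see the lead-in.
    return v / (1.0 + math.exp(-v))


def gated_mlp(x: List[float], gate_w: Matrix, up_w: Matrix, down_w: Matrix) -> List[float]:
    gate = [silu(g) for g in linear(gate_w, x)]  # act_fn(gate_proj(x))
    up = linear(up_w, x)                         # up_proj(x)
    # Element-wise product in the intermediate space, then project back down.
    return linear(down_w, [g * u for g, u in zip(gate, up)])


if __name__ == "__main__":
    gate_w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hidden 2 -> intermediate 3
    up_w = [[1.0, 1.0], [1.0, -1.0], [0.0, 2.0]]
    down_w = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]    # intermediate 3 -> hidden 2
    print(gated_mlp([1.0, 2.0], gate_w, up_w, down_w))
```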



class BaichuanAttention(torch.nn.Module):
    def __init__(self, config: BaichuanConfig):
        super().__init__()
        self.config = config
        self.hidden_size = config.hidden_size
        self.num_heads = config.num_attention_heads
        self.head_dim = self.hidden_size // self.num_heads
        self.max_position_embeddings = config.model_max_length

        if (self.head_dim * self.num_heads) != self.hidden_size:
            raise ValueError(
                f"hidden_size {self.hidden_size} is not divisible by num_heads {self.num_heads}"
            )
        self.W_pack = torch.nn.Linear(
            self.hidden_size, 3 * self.hidden_size, bias=False
        )
        self.o_proj = torch.nn.Linear(
            self.num_heads * self.head_dim, self.hidden_size, bias=False
        )

    def _shape(self, tensor: torch.Tensor, seq_len: int, bsz: int):
        return (
            tensor.view(bsz, seq_len, self.num_heads, self.head_dim)
            .transpose(1, 2)
            .contiguous()
        )

    def forward(
        self,
        hidden_states: torch.Tensor,
        attention_mask: Optional[torch.Tensor] = None,
        past_key_value: Optional[Tuple[torch.Tensor]] = None,
        output_attentions: bool = False,
        use_cache: bool = False,
    ) -> Tuple[torch.Tensor, Optional[torch.Tensor], Optional[Tuple[torch.Tensor]]]:
        bsz, q_len, _ = hidden_states.size()

        proj = self.W_pack(hidden_states)
        proj = (
            proj.unflatten(-1, (3, self.hidden_size))
            .unsqueeze(0)
            .transpose(0, -2)
            .squeeze(-2)
        )
        query_states = (
            proj[0].view(bsz, q_len, self.num_heads, self.head_dim).transpose(1, 2)
        )
        key_states = (
            proj[1].view(bsz, q_len, self.num_heads, self.head_dim).transpose(1, 2)
        )
        value_states = (
            proj[2].view(bsz, q_len, self.num_heads, self.head_dim).transpose(1, 2)
        )

        kv_seq_len = key_states.shape[-2]
        if past_key_value is not None:
            kv_seq_len += past_key_value[0].shape[-2]

        if past_key_value is not None:
            # reuse k, v, self_attention
            key_states = torch.cat([past_key_value[0], key_states], dim=2)
            value_states = torch.cat([past_key_value[1], value_states], dim=2)

        past_key_value = (key_states, value_states) if use_cache else None
        if xops is not None and self.training:
            attn_weights = None
            # query_states = query_states.transpose(1, 2)
            # key_states = key_states.transpose(1, 2)
            # value_states = value_states.transpose(1, 2)
            # attn_output = xops.memory_efficient_attention(
            #     query_states, key_states, value_states, attn_bias=attention_mask
            # )
            with torch.backends.cuda.sdp_kernel(enable_flash=True, enable_math=True, enable_mem_efficient=True):
                attn_output = F.scaled_dot_product_attention(query_states, key_states, value_states, attn_mask = attention_mask)
            attn_output = attn_output.transpose(1, 2)
        else:
            attn_weights = torch.matmul(
                query_states, key_states.transpose(2, 3)
            ) / math.sqrt(self.head_dim)

            if attention_mask is not None:
                if q_len == 1:  # inference with cache
                    if len(attention_mask.size()) == 4:
                        attention_mask = attention_mask[:, :, -1:, :]
                    else:
                        attention_mask = attention_mask[:, -1:, :]
                attn_weights = attn_weights + attention_mask
                attn_weights = torch.max(
                    attn_weights, torch.tensor(torch.finfo(attn_weights.dtype).min)
                )

            attn_weights = torch.nn.functional.softmax(attn_weights, dim=-1)
            attn_output = torch.matmul(attn_weights, value_states)

            attn_output = attn_output.transpose(1, 2)
        attn_output = attn_output.reshape(bsz, q_len, self.hidden_size)
        attn_output = self.o_proj(attn_output)

        if not output_attentions:
            attn_weights = None

        return attn_output, attn_weights, past_key_value
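During incremental decoding, `BaichuanAttention.forward` handles the KV cache as follows:

- It concatenates the new keys and values onto the cached ones along the sequence dimension (`torch.cat(..., dim=2)`).
- When `q_len == 1`, it keeps only the last row of the attention mask.

Together these should reproduce, for the newest token, the same result as a full causal pass over the whole prefix.

The single-head plain-Python sketch below illustrates that equivalence. It uses a Baichuan-style bias row `slope * j` (see `_gen_alibi_mask`). The names `attend`, `full_causal` and `decode_with_cache` are hypothetical, and batching, multiple heads and the output projection are left out.

```python
import math
from typing import List, Optional

Vec = List[float]


def attend(q: Vec, keys: List[Vec], values: List[Vec], bias: Optional[Vec] = None) -> Vec:
    # Scaled dot-product attention for one query over the visible keys/values.
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(len(q)) for k in keys]
    if bias is not None:
        scores = [s + b for s, b in zip(scores, bias)]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    total = sum(w)
    w = [x / total for x in w]
    return [sum(wi * v[c] for wi, v in zip(w, values)) for c in range(len(values[0]))]


def full_causal(qs: List[Vec], ks: List[Vec], vs: List[Vec], slope: float = 0.0) -> List[Vec]:
    # Prefill: query i sees keys 0..i only (causal mask), each with bias slope * j.
    return [
        attend(qs[i], ks[: i + 1], vs[: i + 1], [slope * j for j in range(i + 1)])
        for i in range(len(qs))
    ]


def decode_with_cache(qs: List[Vec], ks: List[Vec], vs: List[Vec], slope: float = 0.0) -> List[Vec]:
    cache_k: List[Vec] = []
    cache_v: List[Vec] = []
    outs: List[Vec] = []
    for q, k, v in zip(qs, ks, vs):
        # Grow the cache by appending, like torch.cat([past, new], dim=2).
        cache_k.append(k)
        cache_v.append(v)
        # One new query needs only the last mask row (cf. attention_mask[:, -1:, :]).
        row = [slope * j for j in range(len(cache_k))]
        outs.append(attend(q, cache_k, cache_v, row))
    return outs


if __name__ == "__main__":
    qs = [[0.1 * (i + c) for c in range(4)] for i in range(5)]
    ks = [[math.sin(i + c) for c in range(4)] for i in range(5)]
    vs = [[math.cos(i * c) for c in range(4)] for i in range(5)]
    print(full_causal(qs, ks, vs, 0.5)[-1])
    print(decode_with_cache(qs, ks, vs, 0.5)[-1])
```

The cache trades memory for compute. Each decoding step scores one query against the stored keys instead of recomputing keys and values for the whole prefix.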



class BaichuanLayer(torch.nn.Module):
    def __init__(self, config: BaichuanConfig):
        super().__init__()
        self.hidden_size = config.hidden_size
        self.self_attn = BaichuanAttention(config=config)
        self.mlp = MLP(
            hidden_size=self.hidden_size,
            intermediate_size=config.intermediate_size,
            hidden_act=config.hidden_act,
        )
        self.input_layernorm = RMSNorm(config.hidden_size, epsilon=config.rms_norm_eps)
        self.post_attention_layernorm = RMSNorm(
            config.hidden_size, epsilon=config.rms_norm_eps
        )

    def forward(
        self,
        hidden_states: torch.Tensor,
        attention_mask: Optional[torch.Tensor] = None,
        past_key_value: Optional[Tuple[torch.Tensor]] = None,
        output_attentions: Optional[bool] = False,
        use_cache: Optional[bool] = False,
    ) -> Tuple[
        torch.FloatTensor, Optional[Tuple[torch.FloatTensor, torch.FloatTensor]]
    ]:
        residual = hidden_states

        hidden_states = self.input_layernorm(hidden_states)

        # Self Attention
        hidden_states, self_attn_weights, present_key_value = self.self_attn(
            hidden_states=hidden_states,
            attention_mask=attention_mask,
            past_key_value=past_key_value,
            output_attentions=output_attentions,
            use_cache=use_cache,
        )
        hidden_states = residual + hidden_states

        # Fully Connected
        residual = hidden_states
        hidden_states = self.post_attention_layernorm(hidden_states)
        hidden_states = self.mlp(hidden_states)
        hidden_states = residual + hidden_states

        outputs = (hidden_states,)

        if use_cache:
            outputs += (present_key_value,)
