Upload folder using huggingface_hub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +2 -0

- stable-diffusion.cpp/.dockerignore +6 -0

- stable-diffusion.cpp/.github/workflows/build.yml +201 -0

- stable-diffusion.cpp/.gitignore +5 -0

- stable-diffusion.cpp/.gitmodules +3 -0

- stable-diffusion.cpp/CMakeLists.txt +45 -0

- stable-diffusion.cpp/Dockerfile +17 -0

- stable-diffusion.cpp/LICENSE +21 -0

- stable-diffusion.cpp/README.md +198 -0

- stable-diffusion.cpp/assets/a lovely cat.png +0 -0

- stable-diffusion.cpp/assets/f16.png +0 -0

- stable-diffusion.cpp/assets/f32.png +0 -0

- stable-diffusion.cpp/assets/img2img_output.png +0 -0

- stable-diffusion.cpp/assets/q4_0.png +0 -0

- stable-diffusion.cpp/assets/q4_1.png +0 -0

- stable-diffusion.cpp/assets/q5_0.png +0 -0

- stable-diffusion.cpp/assets/q5_1.png +0 -0

- stable-diffusion.cpp/assets/q8_0.png +0 -0

- stable-diffusion.cpp/examples/CMakeLists.txt +8 -0

- stable-diffusion.cpp/examples/main.cpp +473 -0

- stable-diffusion.cpp/examples/stb_image.h +0 -0

- stable-diffusion.cpp/examples/stb_image_write.h +1741 -0

- stable-diffusion.cpp/ggml/.editorconfig +19 -0

- stable-diffusion.cpp/ggml/.github/workflows/ci.yml +137 -0

- stable-diffusion.cpp/ggml/.gitignore +37 -0

- stable-diffusion.cpp/ggml/CMakeLists.txt +197 -0

- stable-diffusion.cpp/ggml/LICENSE +21 -0

- stable-diffusion.cpp/ggml/README.md +140 -0

- stable-diffusion.cpp/ggml/build.zig +158 -0

- stable-diffusion.cpp/ggml/ci/run.sh +334 -0

- stable-diffusion.cpp/ggml/cmake/BuildTypes.cmake +54 -0

- stable-diffusion.cpp/ggml/cmake/GitVars.cmake +22 -0

- stable-diffusion.cpp/ggml/examples/CMakeLists.txt +30 -0

- stable-diffusion.cpp/ggml/examples/common-ggml.cpp +246 -0

- stable-diffusion.cpp/ggml/examples/common-ggml.h +18 -0

- stable-diffusion.cpp/ggml/examples/common.cpp +817 -0

- stable-diffusion.cpp/ggml/examples/common.h +179 -0

- stable-diffusion.cpp/ggml/examples/dolly-v2/CMakeLists.txt +13 -0

- stable-diffusion.cpp/ggml/examples/dolly-v2/README.md +187 -0

- stable-diffusion.cpp/ggml/examples/dolly-v2/convert-h5-to-ggml.py +116 -0

- stable-diffusion.cpp/ggml/examples/dolly-v2/main.cpp +969 -0

- stable-diffusion.cpp/ggml/examples/dolly-v2/quantize.cpp +178 -0

- stable-diffusion.cpp/ggml/examples/dr_wav.h +0 -0

- stable-diffusion.cpp/ggml/examples/gpt-2/CMakeLists.txt +36 -0

- stable-diffusion.cpp/ggml/examples/gpt-2/README.md +225 -0

- stable-diffusion.cpp/ggml/examples/gpt-2/convert-cerebras-to-ggml.py +183 -0

- stable-diffusion.cpp/ggml/examples/gpt-2/convert-ckpt-to-ggml.py +159 -0

- stable-diffusion.cpp/ggml/examples/gpt-2/convert-h5-to-ggml.py +195 -0

- stable-diffusion.cpp/ggml/examples/gpt-2/download-ggml-model.sh +69 -0

- stable-diffusion.cpp/ggml/examples/gpt-2/download-model.sh +48 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

stable-diffusion.cpp/ggml/examples/mnist/models/mnist/mnist_model.state_dict filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

stable-diffusion.cpp/ggml/examples/mnist/models/mnist/t10k-images.idx3-ubyte filter=lfs diff=lfs merge=lfs -text

|

stable-diffusion.cpp/.dockerignore

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

build*/

|

| 2 |

+

test/

|

| 3 |

+

|

| 4 |

+

.cache/

|

| 5 |

+

*.swp

|

| 6 |

+

models/

|

stable-diffusion.cpp/.github/workflows/build.yml

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: CI

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

workflow_dispatch: # allows manual triggering

|

| 5 |

+

inputs:

|

| 6 |

+

create_release:

|

| 7 |

+

description: 'Create new release'

|

| 8 |

+

required: true

|

| 9 |

+

type: boolean

|

| 10 |

+

push:

|

| 11 |

+

branches:

|

| 12 |

+

- master

|

| 13 |

+

- ci

|

| 14 |

+

paths: ['.github/workflows/**', '**/CMakeLists.txt', '**/Makefile', '**/*.h', '**/*.hpp', '**/*.c', '**/*.cpp', '**/*.cu']

|

| 15 |

+

pull_request:

|

| 16 |

+

types: [opened, synchronize, reopened]

|

| 17 |

+

paths: ['**/CMakeLists.txt', '**/Makefile', '**/*.h', '**/*.hpp', '**/*.c', '**/*.cpp', '**/*.cu']

|

| 18 |

+

|

| 19 |

+

env:

|

| 20 |

+

BRANCH_NAME: ${{ github.head_ref || github.ref_name }}

|

| 21 |

+

|

| 22 |

+

jobs:

|

| 23 |

+

ubuntu-latest-cmake:

|

| 24 |

+

runs-on: ubuntu-latest

|

| 25 |

+

|

| 26 |

+

steps:

|

| 27 |

+

- name: Clone

|

| 28 |

+

id: checkout

|

| 29 |

+

uses: actions/checkout@v3

|

| 30 |

+

with:

|

| 31 |

+

submodules: recursive

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

- name: Dependencies

|

| 35 |

+

id: depends

|

| 36 |

+

run: |

|

| 37 |

+

sudo apt-get update

|

| 38 |

+

sudo apt-get install build-essential

|

| 39 |

+

|

| 40 |

+

- name: Build

|

| 41 |

+

id: cmake_build

|

| 42 |

+

run: |

|

| 43 |

+

mkdir build

|

| 44 |

+

cd build

|

| 45 |

+

cmake ..

|

| 46 |

+

cmake --build . --config Release

|

| 47 |

+

|

| 48 |

+

#- name: Test

|

| 49 |

+

#id: cmake_test

|

| 50 |

+

#run: |

|

| 51 |

+

#cd build

|

| 52 |

+

#ctest --verbose --timeout 900

|

| 53 |

+

|

| 54 |

+

macOS-latest-cmake:

|

| 55 |

+

runs-on: macos-latest

|

| 56 |

+

|

| 57 |

+

steps:

|

| 58 |

+

- name: Clone

|

| 59 |

+

id: checkout

|

| 60 |

+

uses: actions/checkout@v3

|

| 61 |

+

with:

|

| 62 |

+

submodules: recursive

|

| 63 |

+

|

| 64 |

+

- name: Dependencies

|

| 65 |

+

id: depends

|

| 66 |

+

continue-on-error: true

|

| 67 |

+

run: |

|

| 68 |

+

brew update

|

| 69 |

+

|

| 70 |

+

- name: Build

|

| 71 |

+

id: cmake_build

|

| 72 |

+

run: |

|

| 73 |

+

sysctl -a

|

| 74 |

+

mkdir build

|

| 75 |

+

cd build

|

| 76 |

+

cmake ..

|

| 77 |

+

cmake --build . --config Release

|

| 78 |

+

|

| 79 |

+

#- name: Test

|

| 80 |

+

#id: cmake_test

|

| 81 |

+

#run: |

|

| 82 |

+

#cd build

|

| 83 |

+

#ctest --verbose --timeout 900

|

| 84 |

+

|

| 85 |

+

windows-latest-cmake:

|

| 86 |

+

runs-on: windows-latest

|

| 87 |

+

|

| 88 |

+

strategy:

|

| 89 |

+

matrix:

|

| 90 |

+

include:

|

| 91 |

+

- build: 'noavx'

|

| 92 |

+

defines: '-DGGML_AVX=OFF -DGGML_AVX2=OFF -DGGML_FMA=OFF'

|

| 93 |

+

- build: 'avx2'

|

| 94 |

+

defines: '-DGGML_AVX2=ON'

|

| 95 |

+

- build: 'avx'

|

| 96 |

+

defines: '-DGGML_AVX2=OFF'

|

| 97 |

+

- build: 'avx512'

|

| 98 |

+

defines: '-DGGML_AVX512=ON'

|

| 99 |

+

|

| 100 |

+

steps:

|

| 101 |

+

- name: Clone

|

| 102 |

+

id: checkout

|

| 103 |

+

uses: actions/checkout@v3

|

| 104 |

+

with:

|

| 105 |

+

submodules: recursive

|

| 106 |

+

|

| 107 |

+

- name: Build

|

| 108 |

+

id: cmake_build

|

| 109 |

+

run: |

|

| 110 |

+

mkdir build

|

| 111 |

+

cd build

|

| 112 |

+

cmake .. ${{ matrix.defines }}

|

| 113 |

+

cmake --build . --config Release

|

| 114 |

+

|

| 115 |

+

- name: Check AVX512F support

|

| 116 |

+

id: check_avx512f

|

| 117 |

+

if: ${{ matrix.build == 'avx512' }}

|

| 118 |

+

continue-on-error: true

|

| 119 |

+

run: |

|

| 120 |

+

cd build

|

| 121 |

+

$vcdir = $(vswhere -latest -products * -requires Microsoft.VisualStudio.Component.VC.Tools.x86.x64 -property installationPath)

|

| 122 |

+

$msvc = $(join-path $vcdir $('VC\Tools\MSVC\'+$(gc -raw $(join-path $vcdir 'VC\Auxiliary\Build\Microsoft.VCToolsVersion.default.txt')).Trim()))

|

| 123 |

+

$cl = $(join-path $msvc 'bin\Hostx64\x64\cl.exe')

|

| 124 |

+

echo 'int main(void){unsigned int a[4];__cpuid(a,7);return !(a[1]&65536);}' >> avx512f.c

|

| 125 |

+

& $cl /O2 /GS- /kernel avx512f.c /link /nodefaultlib /entry:main

|

| 126 |

+

.\avx512f.exe && echo "AVX512F: YES" && ( echo HAS_AVX512F=1 >> $env:GITHUB_ENV ) || echo "AVX512F: NO"

|

| 127 |

+

|

| 128 |

+

#- name: Test

|

| 129 |

+

#id: cmake_test

|

| 130 |

+

#run: |

|

| 131 |

+

#cd build

|

| 132 |

+

#ctest -C Release --verbose --timeout 900

|

| 133 |

+

|

| 134 |

+

- name: Get commit hash

|

| 135 |

+

id: commit

|

| 136 |

+

if: ${{ ( github.event_name == 'push' && github.ref == 'refs/heads/master' ) || github.event.inputs.create_release == 'true' }}

|

| 137 |

+

uses: pr-mpt/actions-commit-hash@v2

|

| 138 |

+

|

| 139 |

+

- name: Pack artifacts

|

| 140 |

+

id: pack_artifacts

|

| 141 |

+

if: ${{ ( github.event_name == 'push' && github.ref == 'refs/heads/master' ) || github.event.inputs.create_release == 'true' }}

|

| 142 |

+

run: |

|

| 143 |

+

Copy-Item ggml/LICENSE .\build\bin\Release\ggml.txt

|

| 144 |

+

Copy-Item LICENSE .\build\bin\Release\stable-diffusion.cpp.txt

|

| 145 |

+

7z a sd-${{ env.BRANCH_NAME }}-${{ steps.commit.outputs.short }}-bin-win-${{ matrix.build }}-x64.zip .\build\bin\Release\*

|

| 146 |

+

|

| 147 |

+

- name: Upload artifacts

|

| 148 |

+

if: ${{ ( github.event_name == 'push' && github.ref == 'refs/heads/master' ) || github.event.inputs.create_release == 'true' }}

|

| 149 |

+

uses: actions/upload-artifact@v3

|

| 150 |

+

with:

|

| 151 |

+

path: |

|

| 152 |

+

sd-${{ env.BRANCH_NAME }}-${{ steps.commit.outputs.short }}-bin-win-${{ matrix.build }}-x64.zip

|

| 153 |

+

|

| 154 |

+

release:

|

| 155 |

+

if: ${{ ( github.event_name == 'push' && github.ref == 'refs/heads/master' ) || github.event.inputs.create_release == 'true' }}

|

| 156 |

+

|

| 157 |

+

runs-on: ubuntu-latest

|

| 158 |

+

|

| 159 |

+

needs:

|

| 160 |

+

- ubuntu-latest-cmake

|

| 161 |

+

- macOS-latest-cmake

|

| 162 |

+

- windows-latest-cmake

|

| 163 |

+

|

| 164 |

+

steps:

|

| 165 |

+

- name: Download artifacts

|

| 166 |

+

id: download-artifact

|

| 167 |

+

uses: actions/download-artifact@v3

|

| 168 |

+

|

| 169 |

+

- name: Get commit hash

|

| 170 |

+

id: commit

|

| 171 |

+

uses: pr-mpt/actions-commit-hash@v2

|

| 172 |

+

|

| 173 |

+

- name: Create release

|

| 174 |

+

id: create_release

|

| 175 |

+

uses: anzz1/action-create-release@v1

|

| 176 |

+

env:

|

| 177 |

+

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

| 178 |

+

with:

|

| 179 |

+

tag_name: ${{ env.BRANCH_NAME }}-${{ steps.commit.outputs.short }}

|

| 180 |

+

|

| 181 |

+

- name: Upload release

|

| 182 |

+

id: upload_release

|

| 183 |

+

uses: actions/github-script@v3

|

| 184 |

+

with:

|

| 185 |

+

github-token: ${{secrets.GITHUB_TOKEN}}

|

| 186 |

+

script: |

|

| 187 |

+

const path = require('path');

|

| 188 |

+

const fs = require('fs');

|

| 189 |

+

const release_id = '${{ steps.create_release.outputs.id }}';

|

| 190 |

+

for (let file of await fs.readdirSync('./artifact')) {

|

| 191 |

+

if (path.extname(file) === '.zip') {

|

| 192 |

+

console.log('uploadReleaseAsset', file);

|

| 193 |

+

await github.repos.uploadReleaseAsset({

|

| 194 |

+

owner: context.repo.owner,

|

| 195 |

+

repo: context.repo.repo,

|

| 196 |

+

release_id: release_id,

|

| 197 |

+

name: file,

|

| 198 |

+

data: await fs.readFileSync(`./artifact/${file}`)

|

| 199 |

+

});

|

| 200 |

+

}

|

| 201 |

+

}

|

stable-diffusion.cpp/.gitignore

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

build*/

|

| 2 |

+

test/

|

| 3 |

+

|

| 4 |

+

.cache/

|

| 5 |

+

*.swp

|

stable-diffusion.cpp/.gitmodules

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[submodule "ggml"]

|

| 2 |

+

path = ggml

|

| 3 |

+

url = https://github.com/leejet/ggml.git

|

stable-diffusion.cpp/CMakeLists.txt

ADDED

|

@@ -0,0 +1,45 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

cmake_minimum_required(VERSION 3.12)

|

| 2 |

+

project("stable-diffusion")

|

| 3 |

+

|

| 4 |

+

set(CMAKE_EXPORT_COMPILE_COMMANDS ON)

|

| 5 |

+

|

| 6 |

+

if (NOT XCODE AND NOT MSVC AND NOT CMAKE_BUILD_TYPE)

|

| 7 |

+

set(CMAKE_BUILD_TYPE Release CACHE STRING "Build type" FORCE)

|

| 8 |

+

set_property(CACHE CMAKE_BUILD_TYPE PROPERTY STRINGS "Debug" "Release" "MinSizeRel" "RelWithDebInfo")

|

| 9 |

+

endif()

|

| 10 |

+

|

| 11 |

+

set(CMAKE_LIBRARY_OUTPUT_DIRECTORY ${CMAKE_BINARY_DIR}/bin)

|

| 12 |

+

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ${CMAKE_BINARY_DIR}/bin)

|

| 13 |

+

|

| 14 |

+

if(CMAKE_SOURCE_DIR STREQUAL CMAKE_CURRENT_SOURCE_DIR)

|

| 15 |

+

set(SD_STANDALONE ON)

|

| 16 |

+

else()

|

| 17 |

+

set(SD_STANDALONE OFF)

|

| 18 |

+

endif()

|

| 19 |

+

|

| 20 |

+

#

|

| 21 |

+

# Option list

|

| 22 |

+

#

|

| 23 |

+

|

| 24 |

+

# general

|

| 25 |

+

#option(SD_BUILD_TESTS "sd: build tests" ${SD_STANDALONE})

|

| 26 |

+

option(SD_BUILD_EXAMPLES "sd: build examples" ${SD_STANDALONE})

|

| 27 |

+

option(BUILD_SHARED_LIBS "sd: build shared libs" OFF)

|

| 28 |

+

#option(SD_BUILD_SERVER "sd: build server example" ON)

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

# deps

|

| 32 |

+

add_subdirectory(ggml)

|

| 33 |

+

|

| 34 |

+

set(SD_LIB stable-diffusion)

|

| 35 |

+

|

| 36 |

+

add_library(${SD_LIB} stable-diffusion.h stable-diffusion.cpp)

|

| 37 |

+

target_link_libraries(${SD_LIB} PUBLIC ggml)

|

| 38 |

+

target_include_directories(${SD_LIB} PUBLIC .)

|

| 39 |

+

target_compile_features(${SD_LIB} PUBLIC cxx_std_11)

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

if (SD_BUILD_EXAMPLES)

|

| 43 |

+

add_subdirectory(examples)

|

| 44 |

+

endif()

|

| 45 |

+

|

stable-diffusion.cpp/Dockerfile

ADDED

|

@@ -0,0 +1,17 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

ARG UBUNTU_VERSION=22.04

|

| 2 |

+

|

| 3 |

+

FROM ubuntu:$UBUNTU_VERSION as build

|

| 4 |

+

|

| 5 |

+

RUN apt-get update && apt-get install -y build-essential git cmake

|

| 6 |

+

|

| 7 |

+

WORKDIR /sd.cpp

|

| 8 |

+

|

| 9 |

+

COPY . .

|

| 10 |

+

|

| 11 |

+

RUN mkdir build && cd build && cmake .. && cmake --build . --config Release

|

| 12 |

+

|

| 13 |

+

FROM ubuntu:$UBUNTU_VERSION as runtime

|

| 14 |

+

|

| 15 |

+

COPY --from=build /sd.cpp/build/bin/sd /sd

|

| 16 |

+

|

| 17 |

+

ENTRYPOINT [ "/sd" ]

|

stable-diffusion.cpp/LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2023 leejet

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

stable-diffusion.cpp/README.md

ADDED

|

@@ -0,0 +1,198 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

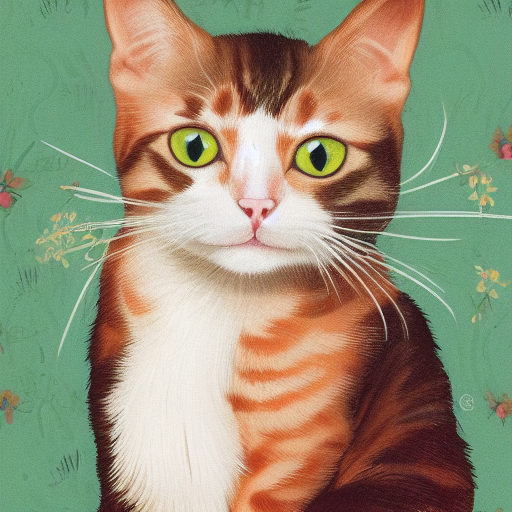

+

<p align="center">

|

| 2 |

+

<img src="./assets/a%20lovely%20cat.png" width="256x">

|

| 3 |

+

</p>

|

| 4 |

+

|

| 5 |

+

# stable-diffusion.cpp

|

| 6 |

+

|

| 7 |

+

Inference of [Stable Diffusion](https://github.com/CompVis/stable-diffusion) in pure C/C++

|

| 8 |

+

|

| 9 |

+

## Features

|

| 10 |

+

|

| 11 |

+

- Plain C/C++ implementation based on [ggml](https://github.com/ggerganov/ggml), working in the same way as [llama.cpp](https://github.com/ggerganov/llama.cpp)

|

| 12 |

+

- 16-bit, 32-bit float support

|

| 13 |

+

- 4-bit, 5-bit and 8-bit integer quantization support

|

| 14 |

+

- Accelerated memory-efficient CPU inference

|

| 15 |

+

- Only requires ~2.3GB when using txt2img with fp16 precision to generate a 512x512 image

|

| 16 |

+

- AVX, AVX2 and AVX512 support for x86 architectures

|

| 17 |

+

- SD1.x and SD2.x support

|

| 18 |

+

- Original `txt2img` and `img2img` mode

|

| 19 |

+

- Negative prompt

|

| 20 |

+

- [stable-diffusion-webui](https://github.com/AUTOMATIC1111/stable-diffusion-webui) style tokenizer (not all the features, only token weighting for now)

|

| 21 |

+

- Sampling method

|

| 22 |

+

- `Euler A`

|

| 23 |

+

- `Euler`

|

| 24 |

+

- `Heun`

|

| 25 |

+

- `DPM2`

|

| 26 |

+

- `DPM++ 2M`

|

| 27 |

+

- [`DPM++ 2M v2`](https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/8457)

|

| 28 |

+

- `DPM++ 2S a`

|

| 29 |

+

- Cross-platform reproducibility (`--rng cuda`, consistent with the `stable-diffusion-webui GPU RNG`)

|

| 30 |

+

- Embedds generation parameters into png output as webui-compatible text string

|

| 31 |

+

- Supported platforms

|

| 32 |

+

- Linux

|

| 33 |

+

- Mac OS

|

| 34 |

+

- Windows

|

| 35 |

+

- Android (via Termux)

|

| 36 |

+

|

| 37 |

+

### TODO

|

| 38 |

+

|

| 39 |

+

- [ ] More sampling methods

|

| 40 |

+

- [ ] GPU support

|

| 41 |

+

- [ ] Make inference faster

|

| 42 |

+

- The current implementation of ggml_conv_2d is slow and has high memory usage

|

| 43 |

+

- [ ] Continuing to reduce memory usage (quantizing the weights of ggml_conv_2d)

|

| 44 |

+

- [ ] LoRA support

|

| 45 |

+

- [ ] k-quants support

|

| 46 |

+

|

| 47 |

+

## Usage

|

| 48 |

+

|

| 49 |

+

### Get the Code

|

| 50 |

+

|

| 51 |

+

```

|

| 52 |

+

git clone --recursive https://github.com/leejet/stable-diffusion.cpp

|

| 53 |

+

cd stable-diffusion.cpp

|

| 54 |

+

```

|

| 55 |

+

|

| 56 |

+

- If you have already cloned the repository, you can use the following command to update the repository to the latest code.

|

| 57 |

+

|

| 58 |

+

```

|

| 59 |

+

cd stable-diffusion.cpp

|

| 60 |

+

git pull origin master

|

| 61 |

+

git submodule init

|

| 62 |

+

git submodule update

|

| 63 |

+

```

|

| 64 |

+

|

| 65 |

+

### Convert weights

|

| 66 |

+

|

| 67 |

+

- download original weights(.ckpt or .safetensors). For example

|

| 68 |

+

- Stable Diffusion v1.4 from https://huggingface.co/CompVis/stable-diffusion-v-1-4-original

|

| 69 |

+

- Stable Diffusion v1.5 from https://huggingface.co/runwayml/stable-diffusion-v1-5

|

| 70 |

+

- Stable Diffuison v2.1 from https://huggingface.co/stabilityai/stable-diffusion-2-1

|

| 71 |

+

|

| 72 |

+

```shell

|

| 73 |

+

curl -L -O https://huggingface.co/CompVis/stable-diffusion-v-1-4-original/resolve/main/sd-v1-4.ckpt

|

| 74 |

+

# curl -L -O https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.safetensors

|

| 75 |

+

# curl -L -O https://huggingface.co/stabilityai/stable-diffusion-2-1/blob/main/v2-1_768-nonema-pruned.safetensors

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

- convert weights to ggml model format

|

| 79 |

+

|

| 80 |

+

```shell

|

| 81 |

+

cd models

|

| 82 |

+

pip install -r requirements.txt

|

| 83 |

+

python convert.py [path to weights] --out_type [output precision]

|

| 84 |

+

# For example, python convert.py sd-v1-4.ckpt --out_type f16

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

### Quantization

|

| 88 |

+

|

| 89 |

+

You can specify the output model format using the --out_type parameter

|

| 90 |

+

|

| 91 |

+

- `f16` for 16-bit floating-point

|

| 92 |

+

- `f32` for 32-bit floating-point

|

| 93 |

+

- `q8_0` for 8-bit integer quantization

|

| 94 |

+

- `q5_0` or `q5_1` for 5-bit integer quantization

|

| 95 |

+

- `q4_0` or `q4_1` for 4-bit integer quantization

|

| 96 |

+

|

| 97 |

+

### Build

|

| 98 |

+

|

| 99 |

+

#### Build from scratch

|

| 100 |

+

|

| 101 |

+

```shell

|

| 102 |

+

mkdir build

|

| 103 |

+

cd build

|

| 104 |

+

cmake ..

|

| 105 |

+

cmake --build . --config Release

|

| 106 |

+

```

|

| 107 |

+

|

| 108 |

+

##### Using OpenBLAS

|

| 109 |

+

|

| 110 |

+

```

|

| 111 |

+

cmake .. -DGGML_OPENBLAS=ON

|

| 112 |

+

cmake --build . --config Release

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

### Run

|

| 116 |

+

|

| 117 |

+

```

|

| 118 |

+

usage: ./bin/sd [arguments]

|

| 119 |

+

|

| 120 |

+

arguments:

|

| 121 |

+

-h, --help show this help message and exit

|

| 122 |

+

-M, --mode [txt2img or img2img] generation mode (default: txt2img)

|

| 123 |

+

-t, --threads N number of threads to use during computation (default: -1).

|

| 124 |

+

If threads <= 0, then threads will be set to the number of CPU physical cores

|

| 125 |

+

-m, --model [MODEL] path to model

|

| 126 |

+

-i, --init-img [IMAGE] path to the input image, required by img2img

|

| 127 |

+

-o, --output OUTPUT path to write result image to (default: .\output.png)

|

| 128 |

+

-p, --prompt [PROMPT] the prompt to render

|

| 129 |

+

-n, --negative-prompt PROMPT the negative prompt (default: "")

|

| 130 |

+

--cfg-scale SCALE unconditional guidance scale: (default: 7.0)

|

| 131 |

+

--strength STRENGTH strength for noising/unnoising (default: 0.75)

|

| 132 |

+

1.0 corresponds to full destruction of information in init image

|

| 133 |

+

-H, --height H image height, in pixel space (default: 512)

|

| 134 |

+

-W, --width W image width, in pixel space (default: 512)

|

| 135 |

+

--sampling-method {euler, euler_a, heun, dpm++2m, dpm++2mv2}

|

| 136 |

+

sampling method (default: "euler_a")

|

| 137 |

+

--steps STEPS number of sample steps (default: 20)

|

| 138 |

+

--rng {std_default, cuda} RNG (default: cuda)

|

| 139 |

+

-s SEED, --seed SEED RNG seed (default: 42, use random seed for < 0)

|

| 140 |

+

-v, --verbose print extra info

|

| 141 |

+

```

|

| 142 |

+

|

| 143 |

+

#### txt2img example

|

| 144 |

+

|

| 145 |

+

```

|

| 146 |

+

./bin/sd -m ../models/sd-v1-4-ggml-model-f16.bin -p "a lovely cat"

|

| 147 |

+

```

|

| 148 |

+

|

| 149 |

+

Using formats of different precisions will yield results of varying quality.

|

| 150 |

+

|

| 151 |

+

| f32 | f16 |q8_0 |q5_0 |q5_1 |q4_0 |q4_1 |

|

| 152 |

+

| ---- |---- |---- |---- |---- |---- |---- |

|

| 153 |

+

|  | | | | | | |

|

| 154 |

+

|

| 155 |

+

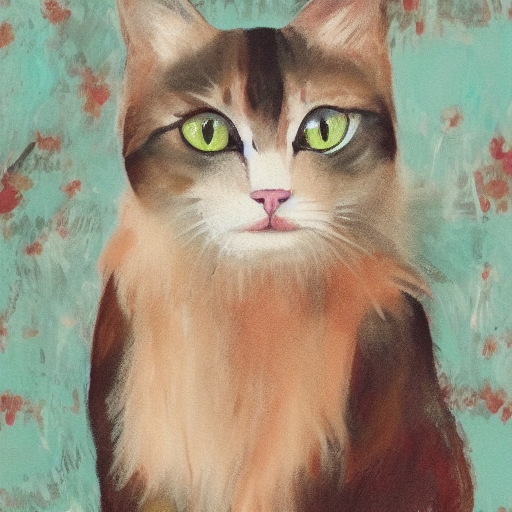

#### img2img example

|

| 156 |

+

|

| 157 |

+

- `./output.png` is the image generated from the above txt2img pipeline

|

| 158 |

+

|

| 159 |

+

|

| 160 |

+

```

|

| 161 |

+

./bin/sd --mode img2img -m ../models/sd-v1-4-ggml-model-f16.bin -p "cat with blue eyes" -i ./output.png -o ./img2img_output.png --strength 0.4

|

| 162 |

+

```

|

| 163 |

+

|

| 164 |

+

<p align="center">

|

| 165 |

+

<img src="./assets/img2img_output.png" width="256x">

|

| 166 |

+

</p>

|

| 167 |

+

|

| 168 |

+

### Docker

|

| 169 |

+

|

| 170 |

+

#### Building using Docker

|

| 171 |

+

|

| 172 |

+

```shell

|

| 173 |

+

docker build -t sd .

|

| 174 |

+

```

|

| 175 |

+

|

| 176 |

+

#### Run

|

| 177 |

+

|

| 178 |

+

```shell

|

| 179 |

+

docker run -v /path/to/models:/models -v /path/to/output/:/output sd [args...]

|

| 180 |

+

# For example

|

| 181 |

+

# docker run -v ./models:/models -v ./build:/output sd -m /models/sd-v1-4-ggml-model-f16.bin -p "a lovely cat" -v -o /output/output.png

|

| 182 |

+

```

|

| 183 |

+

|

| 184 |

+

## Memory/Disk Requirements

|

| 185 |

+

|

| 186 |

+

| precision | f32 | f16 |q8_0 |q5_0 |q5_1 |q4_0 |q4_1 |

|

| 187 |

+

| ---- | ---- |---- |---- |---- |---- |---- |---- |

|

| 188 |

+

| **Disk** | 2.7G | 2.0G | 1.7G | 1.6G | 1.6G | 1.5G | 1.5G |

|

| 189 |

+

| **Memory**(txt2img - 512 x 512) | ~2.8G | ~2.3G | ~2.1G | ~2.0G | ~2.0G | ~2.0G | ~2.0G |

|

| 190 |

+

|

| 191 |

+

|

| 192 |

+

## References

|

| 193 |

+

|

| 194 |

+

- [ggml](https://github.com/ggerganov/ggml)

|

| 195 |

+

- [stable-diffusion](https://github.com/CompVis/stable-diffusion)

|

| 196 |

+

- [stable-diffusion-stability-ai](https://github.com/Stability-AI/stablediffusion)

|

| 197 |

+

- [stable-diffusion-webui](https://github.com/AUTOMATIC1111/stable-diffusion-webui)

|

| 198 |

+

- [k-diffusion](https://github.com/crowsonkb/k-diffusion)

|

stable-diffusion.cpp/assets/a lovely cat.png

ADDED

|

stable-diffusion.cpp/assets/f16.png

ADDED

|

stable-diffusion.cpp/assets/f32.png

ADDED

|

stable-diffusion.cpp/assets/img2img_output.png

ADDED

|

stable-diffusion.cpp/assets/q4_0.png

ADDED

|

stable-diffusion.cpp/assets/q4_1.png

ADDED

|

stable-diffusion.cpp/assets/q5_0.png

ADDED

|

stable-diffusion.cpp/assets/q5_1.png

ADDED

|

stable-diffusion.cpp/assets/q8_0.png

ADDED

|

stable-diffusion.cpp/examples/CMakeLists.txt

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# TODO: move into its own subdirectoy

|

| 2 |

+

# TODO: make stb libs a target (maybe common)

|

| 3 |

+

set(SD_TARGET sd)

|

| 4 |

+

|

| 5 |

+

add_executable(${SD_TARGET} main.cpp stb_image.h stb_image_write.h)

|

| 6 |

+

install(TARGETS ${SD_TARGET} RUNTIME)

|

| 7 |

+

target_link_libraries(${SD_TARGET} PRIVATE stable-diffusion ${CMAKE_THREAD_LIBS_INIT})

|

| 8 |

+

target_compile_features(${SD_TARGET} PUBLIC cxx_std_11)

|

stable-diffusion.cpp/examples/main.cpp

ADDED

|

@@ -0,0 +1,473 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#include <stdio.h>

|

| 2 |

+

#include <ctime>

|

| 3 |

+

#include <fstream>

|

| 4 |

+

#include <iostream>

|

| 5 |

+

#include <random>

|

| 6 |

+

#include <string>

|

| 7 |

+

#include <thread>

|

| 8 |

+

#include <unordered_set>

|

| 9 |

+

|

| 10 |

+

#include "stable-diffusion.h"

|

| 11 |

+

|

| 12 |

+

#define STB_IMAGE_IMPLEMENTATION

|

| 13 |

+

#include "stb_image.h"

|

| 14 |

+

|

| 15 |

+

#define STB_IMAGE_WRITE_IMPLEMENTATION

|

| 16 |

+

#define STB_IMAGE_WRITE_STATIC

|

| 17 |

+

#include "stb_image_write.h"

|

| 18 |

+

|

| 19 |

+

#if defined(__APPLE__) && defined(__MACH__)

|

| 20 |

+

#include <sys/sysctl.h>

|

| 21 |

+

#include <sys/types.h>

|

| 22 |

+

#endif

|

| 23 |

+

|

| 24 |

+

#if !defined(_WIN32)

|

| 25 |

+

#include <sys/ioctl.h>

|

| 26 |

+

#include <unistd.h>

|

| 27 |

+

#endif

|

| 28 |

+

|

| 29 |

+

#define TXT2IMG "txt2img"

|

| 30 |

+

#define IMG2IMG "img2img"

|

| 31 |

+

|

| 32 |

+

// get_num_physical_cores is copy from

|

| 33 |

+

// https://github.com/ggerganov/llama.cpp/blob/master/examples/common.cpp

|

| 34 |

+

// LICENSE: https://github.com/ggerganov/llama.cpp/blob/master/LICENSE

|

| 35 |

+

int32_t get_num_physical_cores() {

|

| 36 |

+

#ifdef __linux__

|

| 37 |

+

// enumerate the set of thread siblings, num entries is num cores

|

| 38 |

+

std::unordered_set<std::string> siblings;

|

| 39 |

+

for (uint32_t cpu = 0; cpu < UINT32_MAX; ++cpu) {

|

| 40 |

+

std::ifstream thread_siblings("/sys/devices/system/cpu" + std::to_string(cpu) + "/topology/thread_siblings");

|

| 41 |

+

if (!thread_siblings.is_open()) {

|

| 42 |

+

break; // no more cpus

|

| 43 |

+

}

|

| 44 |

+

std::string line;

|

| 45 |

+

if (std::getline(thread_siblings, line)) {

|

| 46 |

+

siblings.insert(line);

|

| 47 |

+

}

|

| 48 |

+

}

|

| 49 |

+

if (siblings.size() > 0) {

|

| 50 |

+

return static_cast<int32_t>(siblings.size());

|

| 51 |

+

}

|

| 52 |

+

#elif defined(__APPLE__) && defined(__MACH__)

|

| 53 |

+

int32_t num_physical_cores;

|

| 54 |

+

size_t len = sizeof(num_physical_cores);

|

| 55 |

+

int result = sysctlbyname("hw.perflevel0.physicalcpu", &num_physical_cores, &len, NULL, 0);

|

| 56 |

+

if (result == 0) {

|

| 57 |

+

return num_physical_cores;

|

| 58 |

+

}

|

| 59 |

+

result = sysctlbyname("hw.physicalcpu", &num_physical_cores, &len, NULL, 0);

|

| 60 |

+

if (result == 0) {

|

| 61 |

+

return num_physical_cores;

|

| 62 |

+

}

|

| 63 |

+

#elif defined(_WIN32)

|

| 64 |

+

// TODO: Implement

|

| 65 |

+

#endif

|

| 66 |

+

unsigned int n_threads = std::thread::hardware_concurrency();

|

| 67 |

+

return n_threads > 0 ? (n_threads <= 4 ? n_threads : n_threads / 2) : 4;

|

| 68 |

+

}

|

| 69 |

+

|

| 70 |

+

const char* rng_type_to_str[] = {

|

| 71 |

+

"std_default",

|

| 72 |

+

"cuda",

|

| 73 |

+

};

|

| 74 |

+

|

| 75 |

+

// Names of the sampler method, same order as enum SampleMethod in stable-diffusion.h

|

| 76 |

+

const char* sample_method_str[] = {

|

| 77 |

+

"euler_a",

|

| 78 |

+

"euler",

|

| 79 |

+

"heun",

|

| 80 |

+

"dpm2",

|

| 81 |

+

"dpm++2s_a",

|

| 82 |

+

"dpm++2m",

|

| 83 |

+

"dpm++2mv2"};

|

| 84 |

+

|

| 85 |

+

// Names of the sigma schedule overrides, same order as Schedule in stable-diffusion.h

|

| 86 |

+

const char* schedule_str[] = {

|

| 87 |

+

"default",

|

| 88 |

+

"discrete",

|

| 89 |

+

"karras"};

|

| 90 |

+

|

| 91 |

+

struct Option {

|

| 92 |

+

int n_threads = -1;

|

| 93 |

+

std::string mode = TXT2IMG;

|

| 94 |

+

std::string model_path;

|

| 95 |

+

std::string output_path = "output.png";

|

| 96 |

+

std::string init_img;

|

| 97 |

+

std::string prompt;

|

| 98 |

+

std::string negative_prompt;

|

| 99 |

+

float cfg_scale = 7.0f;

|

| 100 |

+

int w = 512;

|

| 101 |

+

int h = 512;

|

| 102 |

+

SampleMethod sample_method = EULER_A;

|

| 103 |

+

Schedule schedule = DEFAULT;

|

| 104 |

+

int sample_steps = 20;

|

| 105 |

+

float strength = 0.75f;

|

| 106 |

+

RNGType rng_type = CUDA_RNG;

|

| 107 |

+

int64_t seed = 42;

|

| 108 |

+

bool verbose = false;

|

| 109 |

+

|

| 110 |

+

void print() {

|

| 111 |

+

printf("Option: \n");

|

| 112 |

+

printf(" n_threads: %d\n", n_threads);

|

| 113 |

+

printf(" mode: %s\n", mode.c_str());

|

| 114 |

+

printf(" model_path: %s\n", model_path.c_str());

|

| 115 |

+

printf(" output_path: %s\n", output_path.c_str());

|

| 116 |

+

printf(" init_img: %s\n", init_img.c_str());

|

| 117 |

+

printf(" prompt: %s\n", prompt.c_str());

|

| 118 |

+

printf(" negative_prompt: %s\n", negative_prompt.c_str());

|

| 119 |

+

printf(" cfg_scale: %.2f\n", cfg_scale);

|

| 120 |

+

printf(" width: %d\n", w);

|

| 121 |

+

printf(" height: %d\n", h);

|

| 122 |

+

printf(" sample_method: %s\n", sample_method_str[sample_method]);

|

| 123 |

+

printf(" schedule: %s\n", schedule_str[schedule]);

|

| 124 |

+

printf(" sample_steps: %d\n", sample_steps);

|

| 125 |

+

printf(" strength: %.2f\n", strength);

|

| 126 |

+

printf(" rng: %s\n", rng_type_to_str[rng_type]);

|

| 127 |

+

printf(" seed: %ld\n", seed);

|

| 128 |

+

}

|

| 129 |

+

};

|

| 130 |

+

|

| 131 |

+

void print_usage(int argc, const char* argv[]) {

|

| 132 |

+

printf("usage: %s [arguments]\n", argv[0]);

|

| 133 |

+

printf("\n");

|

| 134 |

+

printf("arguments:\n");

|

| 135 |

+

printf(" -h, --help show this help message and exit\n");

|

| 136 |

+

printf(" -M, --mode [txt2img or img2img] generation mode (default: txt2img)\n");

|

| 137 |

+

printf(" -t, --threads N number of threads to use during computation (default: -1).\n");

|

| 138 |

+

printf(" If threads <= 0, then threads will be set to the number of CPU physical cores\n");

|

| 139 |

+

printf(" -m, --model [MODEL] path to model\n");

|

| 140 |

+

printf(" -i, --init-img [IMAGE] path to the input image, required by img2img\n");

|

| 141 |

+

printf(" -o, --output OUTPUT path to write result image to (default: .\\output.png)\n");

|

| 142 |

+

printf(" -p, --prompt [PROMPT] the prompt to render\n");

|

| 143 |

+

printf(" -n, --negative-prompt PROMPT the negative prompt (default: \"\")\n");

|

| 144 |

+

printf(" --cfg-scale SCALE unconditional guidance scale: (default: 7.0)\n");

|

| 145 |

+

printf(" --strength STRENGTH strength for noising/unnoising (default: 0.75)\n");

|

| 146 |

+

printf(" 1.0 corresponds to full destruction of information in init image\n");

|

| 147 |

+

printf(" -H, --height H image height, in pixel space (default: 512)\n");

|

| 148 |

+

printf(" -W, --width W image width, in pixel space (default: 512)\n");

|

| 149 |

+

printf(" --sampling-method {euler, euler_a, heun, dpm2, dpm++2s_a, dpm++2m, dpm++2mv2}\n");

|

| 150 |

+

printf(" sampling method (default: \"euler_a\")\n");

|

| 151 |

+

printf(" --steps STEPS number of sample steps (default: 20)\n");

|

| 152 |

+

printf(" --rng {std_default, cuda} RNG (default: cuda)\n");

|

| 153 |

+

printf(" -s SEED, --seed SEED RNG seed (default: 42, use random seed for < 0)\n");

|

| 154 |

+

printf(" --schedule {discrete, karras} Denoiser sigma schedule (default: discrete)\n");

|

| 155 |

+

printf(" -v, --verbose print extra info\n");

|

| 156 |

+

}

|

| 157 |

+

|

| 158 |

+

void parse_args(int argc, const char* argv[], Option* opt) {

|

| 159 |

+

bool invalid_arg = false;

|

| 160 |

+

|

| 161 |

+

for (int i = 1; i < argc; i++) {

|

| 162 |

+

std::string arg = argv[i];

|

| 163 |

+

|

| 164 |

+

if (arg == "-t" || arg == "--threads") {

|

| 165 |

+

if (++i >= argc) {

|

| 166 |

+

invalid_arg = true;

|

| 167 |

+

break;

|

| 168 |

+

}

|

| 169 |

+

opt->n_threads = std::stoi(argv[i]);

|

| 170 |

+

} else if (arg == "-M" || arg == "--mode") {

|

| 171 |

+

if (++i >= argc) {

|

| 172 |

+

invalid_arg = true;

|

| 173 |

+

break;

|

| 174 |

+

}

|

| 175 |

+

opt->mode = argv[i];

|

| 176 |

+

|

| 177 |

+

} else if (arg == "-m" || arg == "--model") {

|

| 178 |

+

if (++i >= argc) {

|

| 179 |

+

invalid_arg = true;

|

| 180 |

+

break;

|

| 181 |

+

}

|

| 182 |

+

opt->model_path = argv[i];

|

| 183 |

+

} else if (arg == "-i" || arg == "--init-img") {

|

| 184 |

+

if (++i >= argc) {

|

| 185 |

+

invalid_arg = true;

|

| 186 |

+

break;

|

| 187 |

+

}

|

| 188 |

+

opt->init_img = argv[i];

|

| 189 |

+

} else if (arg == "-o" || arg == "--output") {

|

| 190 |

+

if (++i >= argc) {

|

| 191 |

+

invalid_arg = true;

|

| 192 |

+

break;

|

| 193 |

+

}

|

| 194 |

+

opt->output_path = argv[i];

|

| 195 |

+

} else if (arg == "-p" || arg == "--prompt") {

|

| 196 |

+

if (++i >= argc) {

|

| 197 |

+

invalid_arg = true;

|

| 198 |

+

break;

|

| 199 |

+

}

|

| 200 |

+

opt->prompt = argv[i];

|

| 201 |

+

} else if (arg == "-n" || arg == "--negative-prompt") {

|

| 202 |

+

if (++i >= argc) {

|

| 203 |

+

invalid_arg = true;

|

| 204 |

+

break;

|

| 205 |

+

}

|

| 206 |

+

opt->negative_prompt = argv[i];

|

| 207 |

+

} else if (arg == "--cfg-scale") {

|

| 208 |

+

if (++i >= argc) {

|

| 209 |

+

invalid_arg = true;

|

| 210 |

+

break;

|

| 211 |

+

}

|

| 212 |

+

opt->cfg_scale = std::stof(argv[i]);

|

| 213 |

+

} else if (arg == "--strength") {

|

| 214 |

+

if (++i >= argc) {

|

| 215 |

+

invalid_arg = true;

|

| 216 |

+

break;

|

| 217 |

+

}

|

| 218 |

+

opt->strength = std::stof(argv[i]);

|

| 219 |

+

} else if (arg == "-H" || arg == "--height") {

|

| 220 |

+

if (++i >= argc) {

|

| 221 |

+

invalid_arg = true;

|

| 222 |

+

break;

|

| 223 |

+

}

|

| 224 |

+

opt->h = std::stoi(argv[i]);

|

| 225 |

+

} else if (arg == "-W" || arg == "--width") {

|

| 226 |

+

if (++i >= argc) {

|

| 227 |

+

invalid_arg = true;

|

| 228 |

+

break;

|

| 229 |

+

}

|

| 230 |

+

opt->w = std::stoi(argv[i]);

|

| 231 |

+

} else if (arg == "--steps") {

|

| 232 |

+

if (++i >= argc) {

|

| 233 |

+

invalid_arg = true;

|

| 234 |

+

break;

|

| 235 |

+

}

|