PB Unity committed on

Commit 6ecaf1d

Parent(s): bb00bcc

Upload 4 files

- .gitattributes +1 -0
- RunFaceLandmark.cs +264 -0
- face_landmark.onnx +3 -0
- face_landmark.sentis +3 -0
- preview_face_landmark.png +0 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+face_landmark.sentis filter=lfs diff=lfs merge=lfs -text

RunFaceLandmark.cs

ADDED

@@ -0,0 +1,264 @@

using UnityEngine;
using Unity.Sentis;
using UnityEngine.Video;
using UnityEngine.UI;
using System.Collections.Generic;
using Lays = Unity.Sentis.Layers;

/*
 *              Face Landmarks Inference
 *              ========================
 *
 * Basic inference script for MediaPipe face landmarks
 *
 * Put this script on the Main Camera
 * Put face_landmark.sentis in the Assets/StreamingAssets folder
 * Create a RawImage in the scene
 * Put a link to that image in previewUI
 * Put a video in the Assets/StreamingAssets folder and put its name in videoName
 * Or put a test image in inputImage
 * Set inputType to the appropriate input
 */


public class RunFaceLandmark : MonoBehaviour
{
    //Drag a link to a raw image here:
    public RawImage previewUI = null;

    public string videoName = "chatting.mp4";

    // Image to put into neural network
    public Texture2D inputImage;

    public InputType inputType = InputType.Video;

    //Resolution of displayed image
    Vector2Int resolution = new Vector2Int(640, 640);
    WebCamTexture webcam;
    VideoPlayer video;

    const BackendType backend = BackendType.GPUCompute;

    RenderTexture targetTexture;
    public enum InputType { Image, Video, Webcam };

    const int markerWidth = 5;

    //Holds array of colors to draw landmarks
    Color32[] markerPixels;

    IWorker worker;

    //Size of input image to neural network (192)
    const int size = 192;

    Ops ops;
    ITensorAllocator allocator;

    Model model;

    //webcam device name:
    const string deviceName = "";

    bool closing = false;

    Texture2D canvasTexture;

    void Start()
    {
        allocator = new TensorCachingAllocator();

        //(Note: if using a webcam on mobile get permissions here first)

        SetupTextures();
        SetupMarkers();
        SetupInput();
        SetupModel();
        SetupEngine();
    }

    void SetupModel()
    {
        model = ModelLoader.Load(Application.streamingAssetsPath + "/face_landmark.sentis");
    }
    public void SetupEngine()
    {
        worker = WorkerFactory.CreateWorker(backend, model);
        ops = WorkerFactory.CreateOps(backend, allocator);
    }

    void SetupTextures()
    {
        //To get and display the original image:
        targetTexture = new RenderTexture(resolution.x, resolution.y, 0);

        //Used for drawing the markers:
        canvasTexture = new Texture2D(targetTexture.width, targetTexture.height);

        previewUI.texture = targetTexture;
    }

    void SetupMarkers()
    {
        markerPixels = new Color32[markerWidth * markerWidth];
        for (int n = 0; n < markerWidth * markerWidth; n++)
        {
            markerPixels[n] = Color.white;
        }
        int center = markerWidth / 2;
        markerPixels[center * markerWidth + center] = Color.black;
    }

    void SetupInput()
    {
        switch (inputType)
        {
            case InputType.Webcam:
                {
                    webcam = new WebCamTexture(deviceName, resolution.x, resolution.y);
                    webcam.requestedFPS = 30;
                    webcam.Play();
                    break;
                }
            case InputType.Video:
                {
                    video = gameObject.AddComponent<VideoPlayer>();//new VideoPlayer();
                    video.renderMode = VideoRenderMode.APIOnly;
                    video.source = VideoSource.Url;
                    video.url = Application.streamingAssetsPath + "/" + videoName;
                    video.isLooping = true;
                    video.Play();
                    break;
                }
            default:
                {
                    Graphics.Blit(inputImage, targetTexture);
                }
                break;
        }
    }

    void Update()
    {
        GetImageFromSource();

        if (Input.GetKeyDown(KeyCode.Escape))
        {
            closing = true;
            Application.Quit();
        }

        if (Input.GetKeyDown(KeyCode.P))
        {
            previewUI.enabled = !previewUI.enabled;
        }
    }

    void GetImageFromSource()
    {
        if (inputType == InputType.Webcam)
        {
            // Format video input
            if (!webcam.didUpdateThisFrame) return;

            var aspect1 = (float)webcam.width / webcam.height;
            var aspect2 = (float)resolution.x / resolution.y;
            var gap = aspect2 / aspect1;

            var vflip = webcam.videoVerticallyMirrored;
            var scale = new Vector2(gap, vflip ? -1 : 1);
            var offset = new Vector2((1 - gap) / 2, vflip ? 1 : 0);

            Graphics.Blit(webcam, targetTexture, scale, offset);
        }
        if (inputType == InputType.Video)
        {
            var aspect1 = (float)video.width / video.height;
            var aspect2 = (float)resolution.x / resolution.y;
            var gap = aspect2 / aspect1;

            var vflip = false;
            var scale = new Vector2(gap, vflip ? -1 : 1);
            var offset = new Vector2((1 - gap) / 2, vflip ? 1 : 0);
            Graphics.Blit(video.texture, targetTexture, scale, offset);
        }
        if (inputType == InputType.Image)
        {
            Graphics.Blit(inputImage, targetTexture);
        }
    }

    void LateUpdate()
    {
        if (!closing)
        {
            RunInference(targetTexture);
        }
    }

    void RunInference(Texture source)
    {
        var transform = new TextureTransform();
        transform.SetDimensions(size, size, 3);
        transform.SetTensorLayout(0, 3, 1, 2);
        using var image0 = TextureConverter.ToTensor(source, transform);

        // Pre-process the image to make input in range (-1..1)
        using var image = ops.Mad(image0, 2f, -1f);

        // Note: as written, the unscaled tensor image0 is passed to the worker;
        // the rescaled tensor `image` above is computed but not used.
        worker.Execute(image0);

        using var landmarks = worker.PeekOutput("conv2d_21") as TensorFloat;

        //This gives the confidence:
        //using var confidence = worker.PeekOutput("conv2d_31") as TensorFloat;

        float scaleX = targetTexture.width * 1f / size;
        float scaleY = targetTexture.height * 1f / size;

        landmarks.MakeReadable();
        DrawLandmarks(landmarks, scaleX, scaleY);
    }

    void DrawLandmarks(TensorFloat landmarks, float scaleX, float scaleY)
    {
        int numLandmarks = landmarks.shape[3] / 3; //468 face landmarks

        RenderTexture.active = targetTexture;
        canvasTexture.ReadPixels(new Rect(0, 0, targetTexture.width, targetTexture.height), 0, 0);

        for (int n = 0; n < numLandmarks; n++)
        {
            int px = (int)(landmarks[0, 0, 0, n * 3 + 0] * scaleX) - (markerWidth - 1) / 2;
            int py = (int)(landmarks[0, 0, 0, n * 3 + 1] * scaleY) - (markerWidth - 1) / 2;
            int pz = (int)(landmarks[0, 0, 0, n * 3 + 2] * scaleX);
            int destX = Mathf.Clamp(px, 0, targetTexture.width - 1 - markerWidth);
            int destY = Mathf.Clamp(targetTexture.height - 1 - py, 0, targetTexture.height - 1 - markerWidth);
            canvasTexture.SetPixels32(destX, destY, markerWidth, markerWidth, markerPixels);
        }
        canvasTexture.Apply();
        Graphics.Blit(canvasTexture, targetTexture);
        RenderTexture.active = null;
    }

    void CleanUp()
    {
        closing = true;
        ops?.Dispose();
        allocator?.Dispose();
        if (webcam) Destroy(webcam);
        if (video) Destroy(video);
        RenderTexture.active = null;
        targetTexture.Release();
        worker?.Dispose();
        worker = null;
    }

    void OnDestroy()
    {
        CleanUp();
    }

}
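The coordinate arithmetic in `GetImageFromSource()` and `DrawLandmarks()` can be checked outside Unity. The following is a minimal standalone sketch and not part of the commit: the class `LandmarkMath` and its method names are hypothetical, and it uses plain C# tuples and a local `Clamp` helper in place of Unity's `Vector2` and `Mathf.Clamp`. It assumes, as the script does, that landmark coordinates are in model-input pixels (0..192) with the origin at the top-left.

```csharp
using System;

// Hypothetical helper (not in the commit): mirrors the arithmetic of RunFaceLandmark.cs
// without any Unity types so it can be run as a plain console program.
public static class LandmarkMath
{
    static int Clamp(int v, int lo, int hi) => Math.Max(lo, Math.Min(v, hi));

    // Horizontal scale and offset passed to Graphics.Blit so that a source of
    // size srcW x srcH is centre-cropped to the aspect ratio of dstW x dstH.
    public static (float scaleX, float offsetX) CropToAspect(int srcW, int srcH, int dstW, int dstH)
    {
        float srcAspect = (float)srcW / srcH;
        float dstAspect = (float)dstW / dstH;
        float gap = dstAspect / srcAspect;
        return (gap, (1f - gap) / 2f);
    }

    // Maps one landmark from model space (0..modelSize, y pointing down) to the
    // lower-left corner of its marker in texture space (y pointing up), clamped
    // with the same bounds the script uses.
    public static (int x, int y) ToMarkerOrigin(
        float lx, float ly, int texW, int texH, int modelSize, int markerWidth)
    {
        float scaleX = (float)texW / modelSize;
        float scaleY = (float)texH / modelSize;
        int px = (int)(lx * scaleX) - (markerWidth - 1) / 2;
        int py = (int)(ly * scaleY) - (markerWidth - 1) / 2;
        int destX = Clamp(px, 0, texW - 1 - markerWidth);
        int destY = Clamp(texH - 1 - py, 0, texH - 1 - markerWidth);
        return (destX, destY);
    }

    public static void Main()
    {
        // A 16:9 source cropped into the script's square 640x640 target.
        var crop = CropToAspect(1280, 720, 640, 640);
        Console.WriteLine($"scaleX={crop.scaleX}, offsetX={crop.offsetX}");

        // A landmark at the centre of the 192x192 model input, drawn with a 5-pixel marker.
        var origin = ToMarkerOrigin(96f, 96f, 640, 640, 192, 5);
        Console.WriteLine($"marker origin=({origin.x}, {origin.y})");
    }
}
```

The vertical flip (`texH - 1 - py`) is there because `Texture2D` pixel coordinates start at the bottom-left, while the model's landmark coordinates are measured from the top of the image.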

face_landmark.onnx

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:9cbac115d4340979867b656e26f258819490f898be54680a7a6387b9f8a28666
size 2429026

face_landmark.sentis

ADDED

@@ -0,0 +1,3 @@

version https://git-lfs.github.com/spec/v1
oid sha256:fe79525320811b1b997fc09ffa290e488b70c454d1178238955302a93f67865f
size 2488087
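The `.onnx` and `.sentis` entries in this diff are Git LFS pointer files rather than the model binaries themselves: each holds a `version`, an `oid`, and a `size` line. As a rough illustration of that layout, here is a minimal sketch that reads such a pointer into key/value fields. The class `LfsPointer` is hypothetical (it is not part of the commit or of any Git LFS library), and the parsing assumes one `key value` pair per line.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not in the commit): splits a Git LFS pointer file
// into its "key value" fields.
public static class LfsPointer
{
    public static Dictionary<string, string> Parse(string text)
    {
        var fields = new Dictionary<string, string>();
        foreach (var line in text.Split(new[] { '\n' }, StringSplitOptions.RemoveEmptyEntries))
        {
            var trimmed = line.Trim();
            int space = trimmed.IndexOf(' ');
            if (space <= 0) continue; // skip lines without a "key value" shape
            fields[trimmed.Substring(0, space)] = trimmed.Substring(space + 1);
        }
        return fields;
    }

    public static void Main()
    {
        // Pointer contents copied from the face_landmark.sentis entry in this diff.
        string pointer =
            "version https://git-lfs.github.com/spec/v1\n" +
            "oid sha256:fe79525320811b1b997fc09ffa290e488b70c454d1178238955302a93f67865f\n" +
            "size 2488087\n";

        var fields = Parse(pointer);
        long sizeBytes = long.Parse(fields["size"]);
        Console.WriteLine($"oid:  {fields["oid"]}");
        Console.WriteLine($"size: {sizeBytes} bytes");
    }
}
```

The `size` field is the byte length of the real file, so the `.sentis` model referenced here is about 2.5 MB once fetched through LFS.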

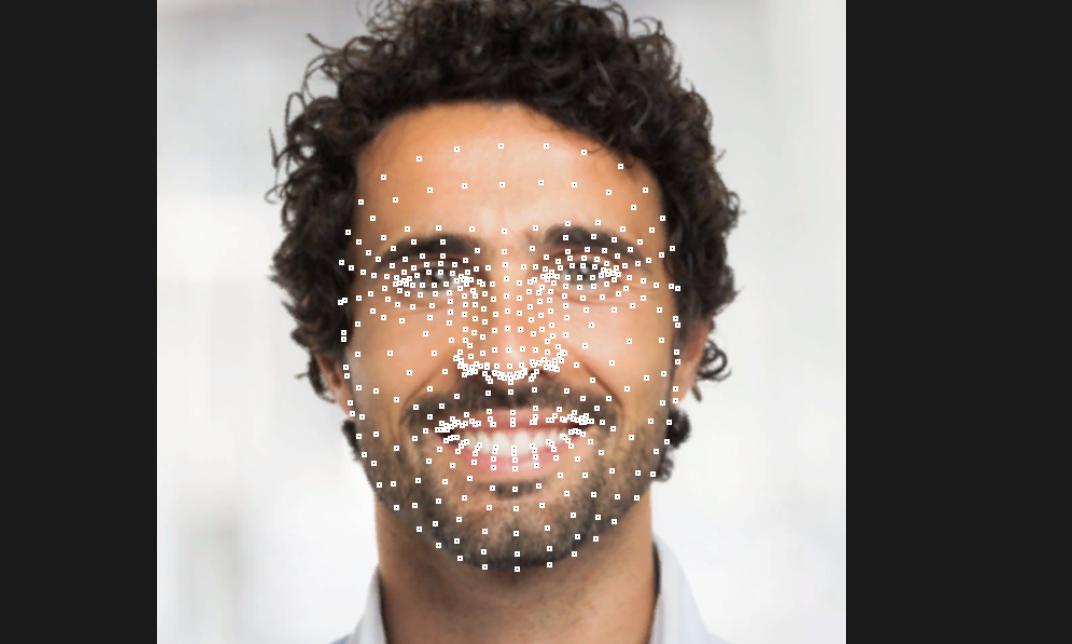

preview_face_landmark.png

ADDED
