LLM4Fin

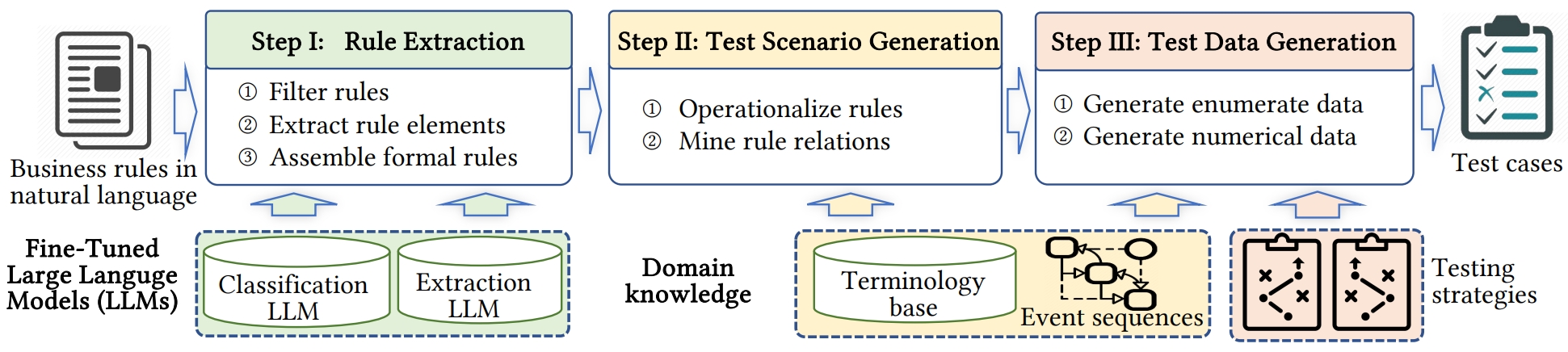

LLM4Fin is a prototype tool that automatically generates test cases from natural-language business rules. It is the official implementation of the paper "LLM4Fin: Fully Automating LLM-Powered Test Case Generation for FinTech Software Acceptance Testing", accepted at ISSTA 2024. LLM4Fin works in three steps: I. Rule Extraction, II. Test Scenario Generation, and III. Test Data Generation; the figure illustrates the workflow. Steps I.1 and I.2 are performed by fine-tuned LLMs, and the remaining steps are implemented by dedicated algorithms. We evaluate LLM4Fin on real-world stock-trading software. Experimental results show that it outperforms both general-purpose LLMs such as ChatGPT and skilled testing engineers in business scenario coverage, code coverage, and time consumption. The code of LLM4Fin is available at https://github.com/13luoyu/intelligent-test. This repository contains the language models used in the project.
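As a rough illustration of how the two LLM-backed parts of Step I could be chained, here is a minimal sketch. It is not taken from the LLM4Fin code base. It assumes that Step I.1 is rule filtering, that Step I.2 is rule element extraction, and that only sentences classified as testable (class index 1 in the Usage section) are passed on. `run_rule_extraction`, `toy_classify` and `toy_extract` are hypothetical names, and the toy functions merely stand in for the real models.

```python
TESTABLE = 1  # class index printed as "Testable Rule." in the Usage section


def run_rule_extraction(sentences, classify, extract):
    """Sketch of Step I: keep testable sentences, then extract their elements.

    `classify` and `extract` are hypothetical stand-ins for the rule
    filtering and rule element extraction models.
    """
    extracted = []
    for sentence in sentences:
        if classify(sentence) != TESTABLE:
            continue  # assumption: only testable rules are passed on
        extracted.append((sentence, extract(sentence)))
    return extracted


# Toy stand-ins so the sketch runs without any model weights.
def toy_classify(sentence):
    # Pretend that sentences containing "应当" ("shall") are testable.
    return 1 if "应当" in sentence else 0


def toy_extract(sentence):
    # A real extractor would return tagged rule elements.
    return {"length": len(sentence)}


rules = ["申报数量应当为10万元面额。", "本规则自发布之日起施行。"]
print(run_rule_extraction(rules, toy_classify, toy_extract))
```

Passing the models in as callables keeps the control flow independent of how each model is loaded.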

Repository Structure

mengzi_rule_filtering. Fine-tuned model for the rule filtering task, based on Mengzi.

mengzi_rule_element_extraction. Fine-tuned model for the rule element extraction task, based on Mengzi.

finbert_rule_element_extraction. Fine-tuned model for the rule element extraction task, based on FinBERT.

llama2_rule_element_extraction. LoRA-trained model for the rule element extraction task, based on Llama2.

Usage

- Usage of mengzi_rule_filtering.

from transformers import AutoModelForSequenceClassification, AutoTokenizer
import torch

device = "cuda:0" if torch.cuda.is_available() else "cpu"
model_path = "mengzi_rule_filtering"

# Load the fine-tuned three-class classifier and its tokenizer.
model = AutoModelForSequenceClassification.from_pretrained(model_path, num_labels=3)
model.eval().to(device)
tokenizer = AutoTokenizer.from_pretrained(model_path)

# Example rule: "For matching-based trading, the order quantity for spot bonds
# shall be RMB 100,000 in face value or an integer multiple thereof."
inputs = "采用匹配成交方式的,债券现券的申报数量应当为10万元面额或者其整数倍。"
batch = [inputs]
batch = tokenizer(batch, max_length=512, padding="max_length", truncation=True, return_tensors="pt")
input_ids = batch.input_ids.to(device)

# Inference only, so gradients are not needed.
with torch.no_grad():
    logits = model(input_ids=input_ids).logits
_, outputs = torch.max(logits, dim=1)
outputs = outputs.cpu().numpy()[0]

# Class indices: 0 = untestable rule, 1 = testable rule, 2 = domain knowledge.
if outputs == 0:
    print("Untestable Rule.")
elif outputs == 1:
    print("Testable Rule.")
else:
    print("Domain Knowledge.")
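The index-to-category mapping in the snippet above (0: untestable, 1: testable, 2: domain knowledge) can be wrapped in a small helper that also reports a confidence score. The following is a minimal sketch in plain Python and is not part of LLM4Fin. `LABELS` and `decode_logits` are hypothetical names, and the helper assumes it receives one row of raw class scores, for example `logits[0].tolist()`.

```python
import math

# Class indices as used in the snippet above; the dictionary name is illustrative.
LABELS = {0: "Untestable Rule", 1: "Testable Rule", 2: "Domain Knowledge"}


def decode_logits(logits):
    """Return (label, probability) for one row of raw class scores."""
    # Numerically stable softmax: subtract the maximum before exponentiating.
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Index of the most probable class.
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


# Made-up scores, used only to show the call.
label, prob = decode_logits([-1.2, 3.4, 0.3])
print(f"{label} ({prob:.2f})")
```

A probability alongside the label makes it possible to route low-confidence sentences to manual review, should that be useful in a given setup.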

- Usage of mengzi_rule_element_extraction and finbert_rule_element_extraction.

from transformers import AutoModelForTokenClassification, AutoTokenizer
import torch

# Load the id-to-tag mapping: one "<id>\t<tag>" pair per line.
tc_dict = {}
with open("tc_data.dict", "r", encoding="utf-8") as f:
    lines = f.readlines()
    for line in lines:
        line = line.strip().split("\t")
        tc_dict[int(line[0])] = line[1]

device = "cuda:0" if torch.cuda.is_available() else "cpu"
model_path = "mengzi_rule_element_extraction"
# or
# model_path = "finbert_rule_element_extraction"
model = AutoModelForTokenClassification.from_pretrained(model_path, num_labels=len(tc_dict))
model.eval().to(device)
tokenizer = AutoTokenizer.from_pretrained(model_path)

# Example rule: "For matching-based trading, the order quantity for spot bonds
# shall be RMB 100,000 in face value or an integer multiple thereof."
inputs = "采用匹配成交方式的,债券现券的申报数量应当为10万元面额或者其整数倍。"
batch = [inputs]
batch = tokenizer(batch, max_length=512, padding="max_length", truncation=True, return_tensors="pt")
input_ids = batch.input_ids.to(device)

# Inference only, so gradients are not needed.
with torch.no_grad():
    logits = model(input_ids=input_ids).logits
_, outputs = torch.max(logits, dim=2)
outputs = outputs.cpu().numpy()[0]

# Assumes one token per input character plus the leading and trailing
# special tokens, which are dropped after the loop.
output = []
for i in range(min(len(inputs)+2, 512)):
    output.append(tc_dict[outputs[i]])
output = output[1:-1]
print(output)
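The printed `output` is treated above as one tag per input character. To read it as labelled spans, consecutive characters that share a label can be merged. The sketch below assumes a BIO-style scheme (`B-x` opens a span, `I-x` continues it, `O` is outside any span). That is an assumption about the contents of `tc_data.dict`, not something this README states. The function name `group_spans` and the tag names `key` and `value` are hypothetical.

```python
def group_spans(text, tags):
    """Merge per-character tags into (label, substring) spans.

    Assumes BIO-style tags: "B-x" opens a span, "I-x" continues it,
    and "O" marks characters outside any span.
    """
    spans = []
    label, chars = None, []
    for char, tag in zip(text, tags):
        prefix, _, name = tag.partition("-")
        if prefix == "I" and name == label:
            chars.append(char)  # continue the currently open span
            continue
        if label is not None:
            spans.append((label, "".join(chars)))  # close the open span
        if prefix in ("B", "I") and name:
            label, chars = name, [char]  # open a new span
        else:
            label, chars = None, []  # outside any span
    if label is not None:
        spans.append((label, "".join(chars)))
    return spans


# Hand-written tags for illustration only; real tags come from the model.
text = "申报数量为10万元"
tags = ["B-key", "I-key", "I-key", "I-key", "O",
        "B-value", "I-value", "I-value", "I-value"]
print(group_spans(text, tags))
```

If the actual tag set uses a different convention, only the prefix handling in the loop would need to change.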

- Usage of llama2_rule_element_extraction.

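from threading import Thread
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, TextIteratorStreamer
from peft import PeftConfig, PeftModel
import torch

device = "cuda:0" if torch.cuda.is_available() else "cpu"
model_path = "trained/llama2_rule_element_extraction"

# 4-bit NF4 quantization settings for the base model.
bnb_config = BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_use_double_quant=True, bnb_4bit_quant_type="nf4", bnb_4bit_compute_dtype=torch.bfloat16)
# The LoRA adapter config records which base model to load.
peft_config = PeftConfig.from_pretrained(model_path)
# Note: recent transformers releases prefer attn_implementation="flash_attention_2"
# over use_flash_attention_2=True.
model = AutoModelForCausalLM.from_pretrained(peft_config.base_model_name_or_path, device_map=device, torch_dtype=torch.float16, trust_remote_code=True, use_flash_attention_2=True, quantization_config=bnb_config)
# The base model is already placed by device_map. The third positional argument of
# PeftModel.from_pretrained is the adapter name, not a device, so it is omitted.
model = PeftModel.from_pretrained(model, model_path)
model.eval()
tokenizer = AutoTokenizer.from_pretrained(peft_config.base_model_name_or_path)

# Example rule: "For matching-based trading, the order quantity for spot bonds
# shall be RMB 100,000 in face value or an integer multiple thereof."
inputs = "采用匹配成交方式的,债券现券的申报数量应当为10万元面额或者其整数倍。"
# Prompt: "Given a rule, extract the key information in it as comprehensively as possible.\nRule: ..."
batch = ["<s>Human: 给出一条规则,请你尽可能全面地将规则中的关键信息抽取出来。\n规则: " + inputs + "\n</s><s>Assistant:"]
batch = tokenizer(batch, return_tensors="pt", add_special_tokens=False)
input_ids = batch.input_ids.to(device)

# Stream decoded text as it is generated.
streamer = TextIteratorStreamer(
    tokenizer=tokenizer,
    timeout=120,
    skip_prompt=True,
    skip_special_tokens=True
)
generate_kwargs = {
    "input_ids": input_ids,
    "max_new_tokens": 512,
    "streamer": streamer,
}
# Generate in a background thread so the main thread can consume the streamer.
t = Thread(target=model.generate, kwargs=generate_kwargs)
t.start()
for new_token in streamer:
    print(new_token, end="", flush=True)
t.join()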
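When several rules need to be processed, the prompt string used above can be built by a small helper. This is a minimal sketch: the template text is copied from the snippet above, while `PROMPT_TEMPLATE`, `build_prompt` and `build_prompts` are hypothetical names introduced here for illustration.

```python
# Template copied from the usage snippet. In English: "Given a rule, extract
# the key information in it as comprehensively as possible.\nRule: ..."
PROMPT_TEMPLATE = (
    "<s>Human: 给出一条规则,请你尽可能全面地将规则中的关键信息抽取出来。\n"
    "规则: {rule}\n</s><s>Assistant:"
)


def build_prompt(rule):
    """Wrap one rule sentence in the chat-style prompt shown above."""
    return PROMPT_TEMPLATE.format(rule=rule)


def build_prompts(rules):
    """Build one prompt per rule, preserving input order."""
    return [build_prompt(rule) for rule in rules]


prompts = build_prompts(["采用匹配成交方式的,债券现券的申报数量应当为10万元面额或者其整数倍。"])
print(prompts[0])
```

Each resulting string can be tokenized with `add_special_tokens=False`, as in the snippet above, because the template already contains the `<s>` and `</s>` markers.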