Model description

Suicide detection model: a binary text classifier that labels each input text as suicide or not suicide.

PYTHON 3.10 ONLY

Training Procedure

Trained on 70% of the Suicide and Depression Detection dataset (https://www.kaggle.com/datasets/nikhileswarkomati/suicide-watch); the remaining 30% was held out for evaluation.

The model vectorises each text with a fitted TF-IDF vectorizer (scikit-learn's TfidfVectorizer) and then classifies the resulting vector with XGBoost (XGBClassifier).

See main.py for further details.
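The vectorizer settings listed under Hyperparameters (word n-grams with `ngram_range=(1, 3)`, `min_df=100`, smoothed IDF, L2 normalisation) can be illustrated with a small pure-Python sketch. This is not scikit-learn's implementation and not the code in main.py: the function names `word_ngrams`, `build_vocabulary` and `tfidf_vector` are hypothetical, the toy corpus is made up, `min_df` is lowered to 2 so that the toy corpus keeps some terms, and the call to `lower()` merely stands in for the custom `preprocessor`, whose behaviour is not shown in this card.

```python
import math
import re
from collections import Counter

# Same regular expression as tfidf__token_pattern: words of two or more characters.
TOKEN_PATTERN = re.compile(r"(?u)\b\w\w+\b")


def word_ngrams(text, ngram_range=(1, 3)):
    """Return all word n-grams of `text` for n in ngram_range (inclusive)."""
    # Assumption: lowercasing stands in for the unknown custom preprocessor.
    tokens = TOKEN_PATTERN.findall(text.lower())
    lo, hi = ngram_range
    return [
        " ".join(tokens[i:i + n])
        for n in range(lo, hi + 1)
        for i in range(len(tokens) - n + 1)
    ]


def build_vocabulary(docs, min_df, ngram_range=(1, 3)):
    """Map each n-gram to its document frequency, keeping those with df >= min_df."""
    doc_freq = Counter()
    for doc in docs:
        doc_freq.update(set(word_ngrams(doc, ngram_range)))
    return {term: df for term, df in doc_freq.items() if df >= min_df}


def tfidf_vector(doc, doc_freq, n_docs, ngram_range=(1, 3)):
    """Smoothed, L2-normalised TF-IDF weights for one document."""
    counts = Counter(t for t in word_ngrams(doc, ngram_range) if t in doc_freq)
    weights = {
        # Smoothed IDF: ln((1 + n_docs) / (1 + df)) + 1
        term: tf * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1)
        for term, tf in counts.items()
    }
    norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
    return {term: w / norm for term, w in weights.items()}


# Made-up toy corpus; the real model was fitted with min_df=100.
docs = ["I feel so alone", "I feel fine today", "so alone today"]
vocab = build_vocabulary(docs, min_df=2)
vec = tfidf_vector(docs[0], vocab, n_docs=len(docs))
print(sorted(vocab))
print(vec)
```

With `min_df=100`, an n-gram is kept only if it appears in at least 100 training texts, which keeps the feature space far smaller than the full set of unigrams, bigrams and trigrams.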

Hyperparameters


| Hyperparameter | Value |
|---|---|
| memory | |
| steps | [('tfidf', TfidfVectorizer(min_df=100, ngram_range=(1, 3), preprocessor=<function preprocessor at 0x7f8d443a30a0>)), ('classifier', XGBClassifier(base_score=None, booster=None, callbacks=None, colsample_bylevel=None, colsample_bynode=None, colsample_bytree=None, device=None, early_stopping_rounds=None, enable_categorical=False, eval_metric=None, feature_types=None, gamma=None, grow_policy=None, importance_type=None, interaction_constraints=None, learning_rate=None, max_bin=None, max_cat_threshold=None, max_cat_to_onehot=None, max_delta_step=None, max_depth=None, max_leaves=None, min_child_weight=None, missing=nan, monotone_constraints=None, multi_strategy=None, n_estimators=None, n_jobs=None, num_parallel_tree=None, random_state=None, ...))] |
| verbose | True |
| tfidf | TfidfVectorizer(min_df=100, ngram_range=(1, 3), preprocessor=<function preprocessor at 0x7f8d443a30a0>) |
| classifier | XGBClassifier(base_score=None, booster=None, callbacks=None, colsample_bylevel=None, colsample_bynode=None, colsample_bytree=None, device=None, early_stopping_rounds=None, enable_categorical=False, eval_metric=None, feature_types=None, gamma=None, grow_policy=None, importance_type=None, interaction_constraints=None, learning_rate=None, max_bin=None, max_cat_threshold=None, max_cat_to_onehot=None, max_delta_step=None, max_depth=None, max_leaves=None, min_child_weight=None, missing=nan, monotone_constraints=None, multi_strategy=None, n_estimators=None, n_jobs=None, num_parallel_tree=None, random_state=None, ...) |
| tfidf__analyzer | word |
| tfidf__binary | False |
| tfidf__decode_error | strict |
| tfidf__dtype | <class 'numpy.float64'> |
| tfidf__encoding | utf-8 |
| tfidf__input | content |
| tfidf__lowercase | True |
| tfidf__max_df | 1.0 |
| tfidf__max_features | |
| tfidf__min_df | 100 |
| tfidf__ngram_range | (1, 3) |
| tfidf__norm | l2 |
| tfidf__preprocessor | <function preprocessor at 0x7f8d443a30a0> |
| tfidf__smooth_idf | True |
| tfidf__stop_words | |
| tfidf__strip_accents | |
| tfidf__sublinear_tf | False |
| tfidf__token_pattern | (?u)\b\w\w+\b |
| tfidf__tokenizer | |
| tfidf__use_idf | True |
| tfidf__vocabulary | |
| classifier__objective | binary:logistic |
| classifier__base_score | |
| classifier__booster | |
| classifier__callbacks | |
| classifier__colsample_bylevel | |
| classifier__colsample_bynode | |
| classifier__colsample_bytree | |
| classifier__device | |
| classifier__early_stopping_rounds | |
| classifier__enable_categorical | False |
| classifier__eval_metric | |
| classifier__feature_types | |
| classifier__gamma | |
| classifier__grow_policy | |
| classifier__importance_type | |
| classifier__interaction_constraints | |
| classifier__learning_rate | |
| classifier__max_bin | |
| classifier__max_cat_threshold | |
| classifier__max_cat_to_onehot | |
| classifier__max_delta_step | |
| classifier__max_depth | |
| classifier__max_leaves | |
| classifier__min_child_weight | |
| classifier__missing | nan |
| classifier__monotone_constraints | |
| classifier__multi_strategy | |
| classifier__n_estimators | |
| classifier__n_jobs | |
| classifier__num_parallel_tree | |
| classifier__random_state | |
| classifier__reg_alpha | |
| classifier__reg_lambda | |
| classifier__sampling_method | |
| classifier__scale_pos_weight | |
| classifier__subsample | |
| classifier__tree_method | |
| classifier__validate_parameters | |
| classifier__verbosity | |

Model Plot

Pipeline(steps=[('tfidf',TfidfVectorizer(min_df=100, ngram_range=(1, 3),preprocessor=<function preprocessor at 0x7f8d443a30a0>)),('classifier',XGBClassifier(base_score=None, booster=None, callbacks=None,colsample_bylevel=None, colsample_bynode=None,colsample_bytree=None, device=None,early_stopping_rounds=None,enable_categorical=False, eval_metric=None,featur...importance_type=None,interaction_constraints=None, learning_rate=None,max_bin=None, max_cat_threshold=None,max_cat_to_onehot=None, max_delta_step=None,max_depth=None, max_leaves=None,min_child_weight=None, missing=nan,monotone_constraints=None, multi_strategy=None,n_estimators=None, n_jobs=None,num_parallel_tree=None, random_state=None, ...))],verbose=True)


Evaluation Results

| Metric | Value |

|---|---|

| accuracy | 0.910317 |

| f1 score | 0.910317 |

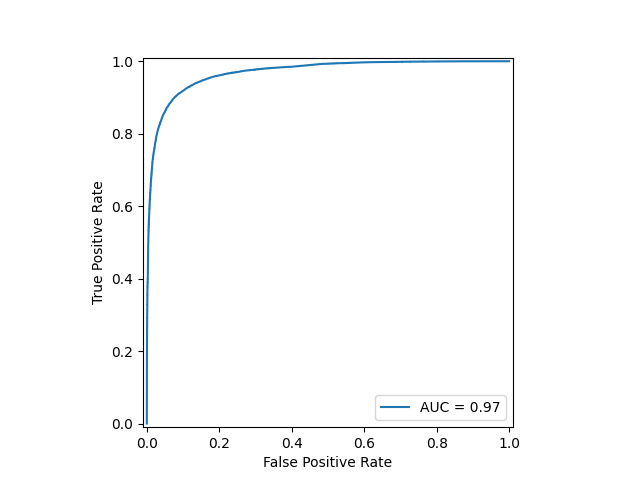

| ROC AUC | 0.969008 |
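ROC AUC can be read as the probability that a randomly chosen positive text receives a higher score than a randomly chosen negative one. The sketch below computes it that way in plain Python. It is only an illustration of the metric: the function name `roc_auc` and the labels and scores are made up for the example, and the reported 0.969008 was presumably produced by a library routine in main.py rather than by this code.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a correct pair.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative label")
    correct = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return correct / (len(pos) * len(neg))


# Hypothetical labels (1 = suicide, 0 = not suicide) and classifier scores.
example_labels = [0, 0, 1, 1]
example_scores = [0.1, 0.4, 0.35, 0.8]

# 3 of the 4 (positive, negative) pairs are ordered correctly -> 0.75
print(roc_auc(example_labels, example_scores))
```

This pairwise form is quadratic in the number of samples, so it suits small illustrations; on a holdout set of this size a sort-based implementation is the practical choice.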

How to Get Started with the Model

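import sklearn  # scikit-learn must be installed for the pickled pipeline to load
import dill as pickle  # dill provides the same load() interface as pickle
from skops import hub_utils
from pathlib import Path

# Download the model repository from the Hugging Face Hub into a local directory.
suicide_detector_repo = Path("./suicide-detector")
hub_utils.download(
    repo_id="AndyJamesTurner/suicideDetector",
    dst=suicide_detector_repo
)

# Load the fitted pipeline (TF-IDF vectorizer followed by the XGBoost classifier).
with open(suicide_detector_repo / "model.pkl", 'rb') as file:
    clf = pickle.load(file)

# predict() takes a list of texts and returns one prediction per text.
classification = clf.predict(["I want to kill myself"])[0]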

Model Evaluation

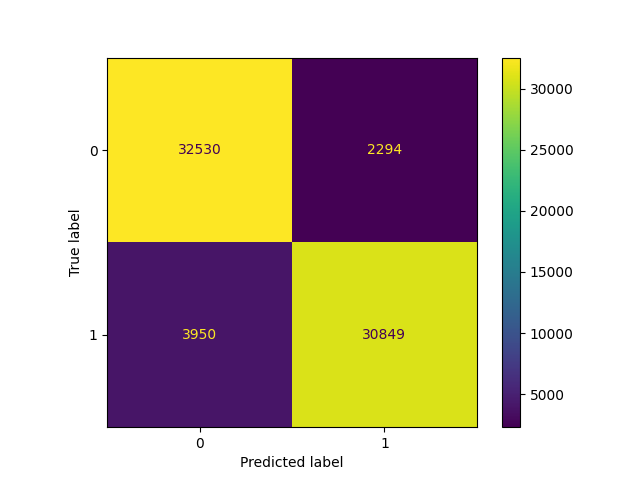

The model was evaluated on the 30% of the dataset held out from training, using F1 score, accuracy, a confusion matrix, and ROC curves.

Confusion matrix

ROC Curve

Classification Report

| index | precision | recall | f1-score | support |
|---|---|---|---|---|
| not suicide | 0.891721 | 0.934126 | 0.912431 | 34824 |
| suicide | 0.930785 | 0.886491 | 0.908098 | 34799 |
| accuracy | | | 0.910317 | 69623 |
| macro avg | 0.911253 | 0.910308 | 0.910265 | 69623 |
| weighted avg | 0.911246 | 0.910317 | 0.910265 | 69623 |
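The figures in the classification report can be cross-checked with a few lines of arithmetic. The sketch below is a consistency check, not the evaluation code from main.py. The four confusion-matrix counts are an assumption: they are reconstructed by multiplying each class's reported recall by its support and rounding, not read from the confusion matrix image. The helper name `precision_recall_f1` is hypothetical.

```python
# Counts reconstructed from the report (assumption, rounded to whole texts):
#   not suicide: 34824 * 0.934126 ~= 32530 classified correctly
#   suicide:     34799 * 0.886491 ~= 30849 classified correctly
tn, fp = 32530, 34824 - 32530  # true "not suicide": correct / flagged as suicide
tp, fn = 30849, 34799 - 30849  # true "suicide": correct / missed


def precision_recall_f1(true_pos, false_pos, false_neg):
    """Precision, recall and F1 for one class treated as the positive class."""
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


suicide = precision_recall_f1(tp, fp, fn)
# For "not suicide" the roles of the two error types swap.
not_suicide = precision_recall_f1(tn, fn, fp)

accuracy = (tp + tn) / (tp + tn + fp + fn)
macro_f1 = (suicide[2] + not_suicide[2]) / 2
# Micro-averaging pools the counts of both classes before computing F1.
micro_f1 = precision_recall_f1(tp + tn, fp + fn, fn + fp)[2]

print("suicide     P/R/F1:", [round(v, 6) for v in suicide])
print("not suicide P/R/F1:", [round(v, 6) for v in not_suicide])
print("accuracy:", round(accuracy, 6), "macro F1:", round(macro_f1, 6))
```

In a single-label problem the micro-averaged F1 always equals the accuracy. That may be why the headline f1 score in the evaluation results matches the accuracy exactly, although the card does not state which averaging was used.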

Model Authors

This model was created by the following authors:

- Andy Turner
