mineral-colour on Stable Diffusion via Dreambooth

token

mineral-colour

Here are the images used for training this concept:

inference

```python
import torch
from diffusers import StableDiffusionPipeline
from PIL import Image


def image_grid(imgs, rows, cols):
    """Paste rows*cols equally sized PIL images into one grid image."""
    assert len(imgs) == rows * cols

    w, h = imgs[0].size
    grid = Image.new('RGB', size=(cols * w, rows * h))

    for i, img in enumerate(imgs):
        # Column index is i % cols, row index is i // cols.
        grid.paste(img, box=(i % cols * w, i // cols * h))
    return grid


# Load the weights in half precision. torch_dtype is an argument of
# from_pretrained, not of the pipeline call.
pipe = StableDiffusionPipeline.from_pretrained(
    "Dushwe/mineral-colour", torch_dtype=torch.float16
).to("cuda")

prompt = 'A little girl in china chic hanfu walks in the forest, mineral-colour'

images = pipe(prompt, num_images_per_prompt=1, num_inference_steps=50, guidance_scale=7.5).images

grid = image_grid(images, 1, 1)
grid
```
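
The paste positions computed inside `image_grid` can be checked without loading the model or any images. The following is a minimal sketch in plain Python; `grid_offsets` is a hypothetical helper name introduced here for illustration, and the 512x512 tile size is an assumption, not something stated on this card.

```python
def grid_offsets(n_images, rows, cols, w, h):
    """Return the (x, y) paste offset of each image in a rows x cols grid.

    Mirrors the arithmetic in image_grid: images fill the grid row by row,
    so image i lands in column i % cols and row i // cols.
    """
    assert n_images == rows * cols
    return [((i % cols) * w, (i // cols) * h) for i in range(n_images)]


# Assumed tile size of 512x512 pixels, laid out as a 2x2 grid.
offsets = grid_offsets(4, rows=2, cols=2, w=512, h=512)
print(offsets)
```

To show several samples at once, `num_images_per_prompt` and the `rows`/`cols` arguments of `image_grid` have to agree, since the helper asserts `len(imgs) == rows * cols`.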

generate samples

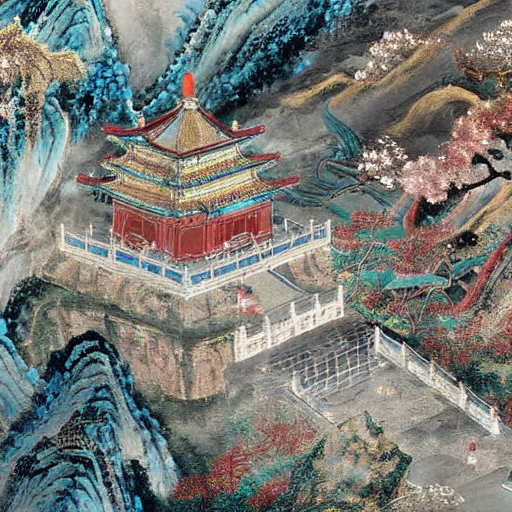

Chinese palace, 4k resolution, mineral-colour

beginning of autumn, autumn, forests, scenery, background, landscape, woodland, trees, mineral-colour

You can run your new concept via diffusers with the Colab Notebook for Inference. Don't forget to use the concept prompts!
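
Since every sample prompt on this card ends with the `mineral-colour` token, one way to avoid forgetting it is to append it programmatically. This is only a sketch: `with_concept_token` is a hypothetical helper, and placing the token at the end of the prompt is an assumption based on the sample prompts rather than a requirement of the model.

```python
CONCEPT_TOKEN = "mineral-colour"


def with_concept_token(prompt, token=CONCEPT_TOKEN):
    """Append the concept token to a prompt unless it is already present."""
    # Drop surrounding whitespace and a trailing comma before appending.
    prompt = prompt.strip().rstrip(",").strip()
    if token in prompt:
        return prompt
    return f"{prompt}, {token}" if prompt else token


print(with_concept_token("Chinese palace, 4k resolution"))
```

The returned string can be passed to `pipe(...)` as the prompt.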
