Flux.1 Lite

We are thrilled to announce the release of Flux.1 Lite, an 8B parameter transformer model distilled from the FLUX.1-dev model. Compared with FLUX.1-dev, it uses 7 GB less RAM and runs 23% faster while keeping the same precision (bfloat16).

🔥 UPDATE 🔥: We have released a new version of Flux.1 Lite 8B. This version was trained on a new dataset and achieves better results than the previous alpha version. The main changes include:

- Distillation for a broader range of guidance values (2.0-5.0)

- Distillation for a broader range of inference step counts (20-32)

- More diverse dataset with longer prompts

Text-to-Image

Flux.1 Lite is ready to unleash your creativity! For the best results, we strongly recommend using a guidance_scale between 2.0 and 5.0 and setting n_steps (passed to the pipeline as num_inference_steps) between 20 and 32.

```python
import torch
from diffusers import FluxPipeline

torch_dtype = torch.bfloat16
device = "cuda"

# Load the pipe
model_id = "Freepik/flux.1-lite-8B"
pipe = FluxPipeline.from_pretrained(
    model_id, torch_dtype=torch_dtype
).to(device)

# Inference
prompt = "A close-up image of a green alien with fluorescent skin in the middle of a dark purple forest"

guidance_scale = 3.5
n_steps = 28
seed = 11

with torch.inference_mode():
    image = pipe(
        prompt=prompt,
        generator=torch.Generator(device="cpu").manual_seed(seed),
        num_inference_steps=n_steps,
        guidance_scale=guidance_scale,
        height=1024,
        width=1024,
    ).images[0]

image.save("output.png")
```
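To compare several settings, it can help to build the list of (guidance_scale, n_steps) pairs up front and drop any pair outside the recommended ranges. The following is a minimal sketch in plain Python; the helper names `in_recommended_range` and `sweep_settings` are hypothetical and not part of diffusers, and the range constants simply restate the recommendation above.

```python
from itertools import product

# Recommended ranges, restated from the text above.
GUIDANCE_RANGE = (2.0, 5.0)
STEPS_RANGE = (20, 32)


def in_recommended_range(guidance_scale, n_steps):
    """Return True if both settings fall inside the recommended ranges."""
    return (
        GUIDANCE_RANGE[0] <= guidance_scale <= GUIDANCE_RANGE[1]
        and STEPS_RANGE[0] <= n_steps <= STEPS_RANGE[1]
    )


def sweep_settings(guidance_values, step_values):
    """Yield every (guidance_scale, n_steps) pair inside the recommended ranges."""
    for guidance_scale, n_steps in product(guidance_values, step_values):
        if in_recommended_range(guidance_scale, n_steps):
            yield guidance_scale, n_steps


# 7.0 and 16 fall outside the recommended ranges, so pairs using them are dropped.
settings = list(sweep_settings([2.0, 3.5, 5.0, 7.0], [16, 20, 28, 32]))
```

Each remaining pair could then be passed to the pipeline call as `guidance_scale` and `num_inference_steps`, keeping the seed fixed so that differences between images come from the settings alone.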

Motivation

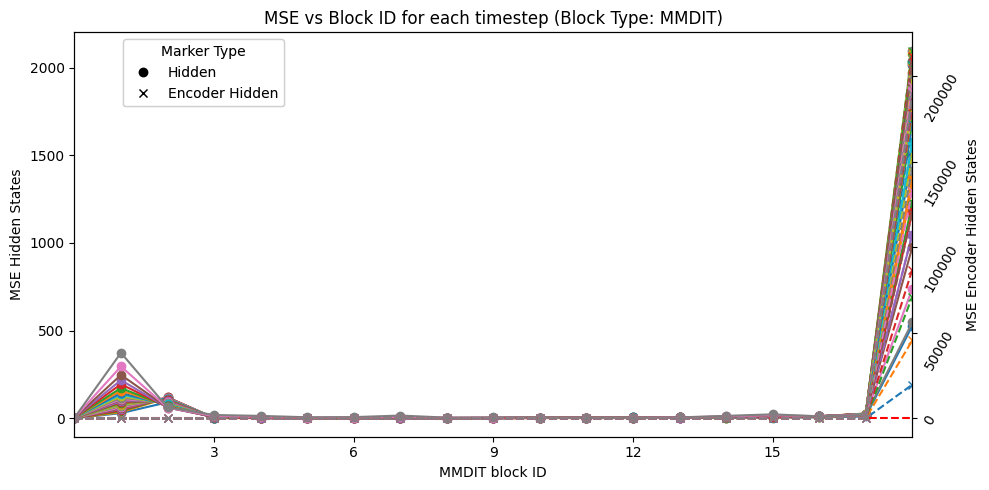

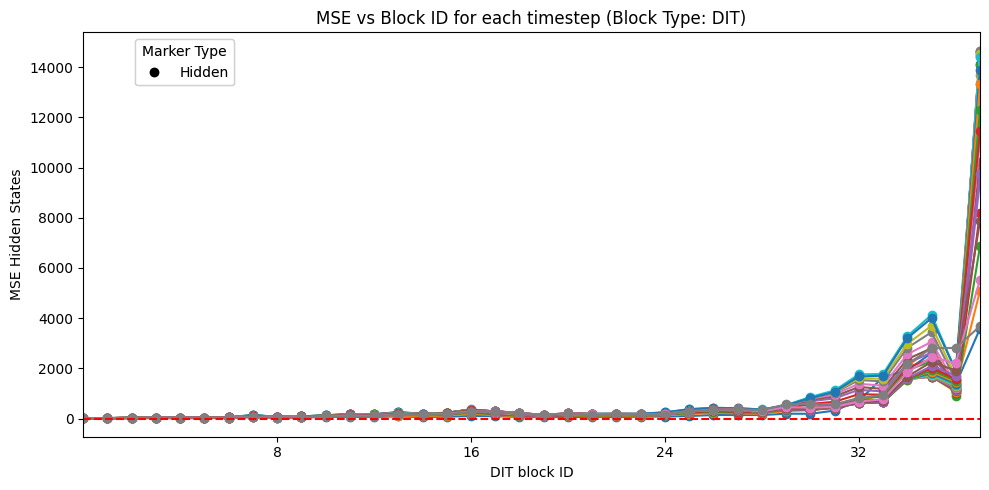

Inspired by Ostris's findings, we analyzed the mean squared error (MSE) between the input and output of each block to quantify each block's contribution to the final result. The analysis revealed significant variability across blocks.

As Ostris pointed out, not all blocks contribute equally. While skipping just one of the early MMDiT or late DiT blocks can significantly degrade model performance, skipping any single block in between does not have a significant impact on the final image quality.
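The per-block measurement can be illustrated with a toy example. This is a sketch under stated assumptions, not the analysis code used for Flux.1 Lite: the blocks are hypothetical stand-ins (`make_residual_block` just adds a scaled copy of its input) and hidden states are plain Python lists rather than tensors. With a real model, the same quantity could be measured on tensors, for example via PyTorch forward hooks.

```python
def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def make_residual_block(scale):
    """Hypothetical toy block: returns x + scale * x for each element."""
    return lambda hidden: [h + scale * h for h in hidden]


def block_contributions(blocks, hidden):
    """Run the blocks in sequence and record each block's input/output MSE."""
    scores = []
    for block in blocks:
        out = block(hidden)
        scores.append(mse(hidden, out))
        hidden = out  # the output of one block is the input of the next
    return scores


# Toy stack: large updates at both ends, tiny updates in the middle.
blocks = [make_residual_block(s) for s in (0.5, 0.01, 0.01, 0.5)]
scores = block_contributions(blocks, [1.0, -2.0, 3.0])

# Block indices ordered from smallest to largest input/output MSE.
skip_candidates = sorted(range(len(scores)), key=scores.__getitem__)
```

In this toy stack the two middle blocks have the smallest scores, mirroring the observation that middle blocks change the hidden state least. A low score only suggests a candidate; whether a block can actually be skipped still has to be checked against final image quality.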

ComfyUI

We've also crafted a ComfyUI workflow to make using Flux.1 Lite even more seamless! Find it in comfy/flux.1-lite_workflow.json.

The safetensors checkpoint is available here: flux.1-lite-8B.safetensors

Try it out at Freepik!

Our AI generator is now powered by Flux.1 Lite!

🔥 News 🔥

- Dec 30, 2024. The newly trained Flux.1 Lite 8B model is publicly available in the Hugging Face repo.

- Oct 23, 2024. The alpha 8B checkpoint is publicly available in the Hugging Face repo.

Citation

If you find our work helpful, please cite it!

```bibtex
@article{flux1-lite,
  title={Flux.1 Lite: Distilling Flux1.dev for Efficient Text-to-Image Generation},
  author={Daniel Verdú and Javier Martín},
  email={dverdu@freepik.com, javier.martin@freepik.com},
  year={2024},
}
```
