license: apache-2.0

pipeline_tag: reinforcement-learning

tags:

- Deep Reinforcement Learning

- Combinatorial Optimization

- Reinforcement Learning

- Vehicle Routing Problem

✊GreedRL

🏆Award

Introduction

GENERAL

HIGH-PERFORMANCE

USER-FRIENDLY

Architecture design

The entire architecture is divided into three layers:

High-performance Env framework

The constraints and optimization objectives for the problems to be solved are defined in the Reinforcement Learning(RL) Environment(Env). Based on performance and ease of use considerations, the Env framework provides two implementations:one based on pytorch and one based on CUDA C++. To facilitate the definition of problems for developers, the framework abstracts multiple variables to represent the environment's state, which are automatically generated after being declared by the user. When defining constraints and optimization objectives, developers can directly refer to the declared variables.

Currently, various VRP variants such as CVRP, VRPTW and PDPTW, as well as problems such as Batching, are supported.

Pluggable NN components

The framework provides certain neural network(NN) components, and developers can also implement custom neural network components.

High-performance NN operators

In order to achieve the ultimate performance, the framework implements some high-performance operators specifically for Combinatorial Optimization(CO) problems to replace pytorch operators, such as the Masked Addition Attention and Masked Softmax Sampling."

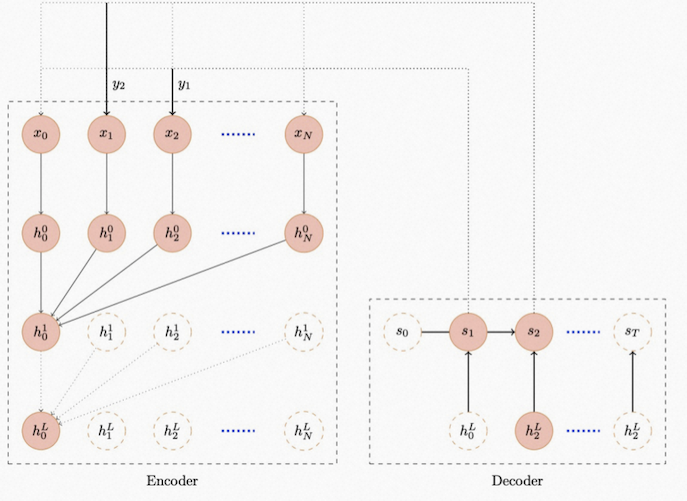

Network design

The neural network adopts the Seq2Seq architecture commonly used in Natural Language Processing(NLP), with the Transformer used in the Encoding part and RNN used in the decoding part, as shown in the diagram below.

Modeling examples

Capacitated Vehicle Routing Problem (CVRP)

CVRP

from greedrl.feature import *

from greedrl.variable import *

from greedrl.function import *

from greedrl import Problem, Solution, Solver

from greedrl import runner

features = [continuous_feature('task_demand'),

continuous_feature('worker_weight_limit'),

continuous_feature('distance_matrix'),

variable_feature('distance_this_to_task'),

variable_feature('distance_task_to_end')]

variables = [task_demand_now('task_demand_now', feature='task_demand'),

task_demand_now('task_demand_this', feature='task_demand', only_this=True),

feature_variable('task_weight'),

worker_variable('worker_weight_limit'),

worker_used_resource('worker_used_weight', task_require='task_weight'),

edge_variable('distance_last_to_this', feature='distance_matrix', last_to_this=True),

edge_variable('distance_this_to_task', feature='distance_matrix', this_to_task=True),

edge_variable('distance_task_to_end', feature='distance_matrix', task_to_end=True)]

class Constraint:

def do_task(self):

return self.task_demand_this

def mask_task(self):

# 已经完成的任务

mask = self.task_demand_now <= 0

# 车辆容量限制

worker_weight_limit = self.worker_weight_limit - self.worker_used_weight

mask |= self.task_demand_now * self.task_weight > worker_weight_limit[:, None]

return mask

def finished(self):

return torch.all(self.task_demand_now <= 0, 1)

class Objective:

def step_worker_end(self):

return self.distance_last_to_this

def step_task(self):

return self.distance_last_to_this

Pickup and Delivery Problem with Time Windows(PDPTW)

PDPTW

from greedrl.model import runner

from greedrl.feature import *

from greedrl.variable import *

from greedrl.function import *

from greedrl import Problem, Solution, Solver

features = [local_category('task_group'),

global_category('task_priority', 2),

variable_feature('distance_this_to_task'),

variable_feature('distance_task_to_end')]

variables = [task_demand_now('task_demand_now', feature='task_demand'),

task_demand_now('task_demand_this', feature='task_demand', only_this=True),

feature_variable('task_weight'),

feature_variable('task_group'),

feature_variable('task_priority'),

feature_variable('task_due_time2', feature='task_due_time'),

task_variable('task_due_time'),

task_variable('task_service_time'),

task_variable('task_due_time_penalty'),

worker_variable('worker_basic_cost'),

worker_variable('worker_distance_cost'),

worker_variable('worker_due_time'),

worker_variable('worker_weight_limit'),

worker_used_resource('worker_used_weight', task_require='task_weight'),

worker_used_resource('worker_used_time', 'distance_matrix', 'task_service_time', 'task_ready_time',

'worker_ready_time'),

edge_variable('distance_last_to_this', feature='distance_matrix', last_to_this=True),

edge_variable('distance_this_to_task', feature='distance_matrix', this_to_task=True),

edge_variable('distance_task_to_end', feature='distance_matrix', task_to_end=True)]

class Constraint:

def do_task(self):

return self.task_demand_this

def mask_worker_end(self):

return task_group_split(self.task_group, self.task_demand_now <= 0)

def mask_task(self):

mask = self.task_demand_now <= 0

mask |= task_group_priority(self.task_group, self.task_priority, mask)

worker_used_time = self.worker_used_time[:, None] + self.distance_this_to_task

mask |= (worker_used_time > self.task_due_time2) & (self.task_priority == 0)

# 容量约束

worker_weight_limit = self.worker_weight_limit - self.worker_used_weight

mask |= self.task_demand_now * self.task_weight > worker_weight_limit[:, None]

return mask

def finished(self):

return torch.all(self.task_demand_now <= 0, 1)

class Objective:

def step_worker_start(self):

return self.worker_basic_cost

def step_worker_end(self):

feasible = self.worker_used_time <= self.worker_due_time

return self.distance_last_to_this * self.worker_distance_cost, feasible

def step_task(self):

worker_used_time = self.worker_used_time - self.task_service_time

feasible = worker_used_time <= self.task_due_time

feasible &= worker_used_time <= self.worker_due_time

cost = self.distance_last_to_this * self.worker_distance_cost

return torch.where(feasible, cost, cost + self.task_due_time_penalty), feasible

VRP with Time Windows(VRPTW)

VRPTW

from greedrl import Problem, Solution, Solver

from greedrl.feature import *

from greedrl.variable import *

from greedrl.function import *

from greedrl.model import runner

from greedrl.myenv import VrptwEnv

features = [continuous_feature('worker_weight_limit'),

continuous_feature('worker_ready_time'),

continuous_feature('worker_due_time'),

continuous_feature('worker_basic_cost'),

continuous_feature('worker_distance_cost'),

continuous_feature('task_demand'),

continuous_feature('task_weight'),

continuous_feature('task_ready_time'),

continuous_feature('task_due_time'),

continuous_feature('task_service_time'),

continuous_feature('distance_matrix')]

variables = [task_demand_now('task_demand_now', feature='task_demand'),

task_demand_now('task_demand_this', feature='task_demand', only_this=True),

feature_variable('task_weight'),

feature_variable('task_due_time'),

feature_variable('task_ready_time'),

feature_variable('task_service_time'),

worker_variable('worker_weight_limit'),

worker_variable('worker_due_time'),

worker_variable('worker_basic_cost'),

worker_variable('worker_distance_cost'),

worker_used_resource('worker_used_weight', task_require='task_weight'),

worker_used_resource('worker_used_time', 'distance_matrix', 'task_service_time', 'task_ready_time',

'worker_ready_time'),

edge_variable('distance_last_to_this', feature='distance_matrix', last_to_this=True),

edge_variable('distance_this_to_task', feature='distance_matrix', this_to_task=True),

edge_variable('distance_task_to_end', feature='distance_matrix', task_to_end=True)]

class Constraint:

def do_task(self):

return self.task_demand_this

def mask_task(self):

# 已经完成的任务

mask = self.task_demand_now <= 0

# 车辆容量限制

worker_weight_limit = self.worker_weight_limit - self.worker_used_weight

mask |= self.task_demand_now * self.task_weight > worker_weight_limit[:, None]

worker_used_time = self.worker_used_time[:, None] + self.distance_this_to_task

mask |= worker_used_time > self.task_due_time

worker_used_time = torch.max(worker_used_time, self.task_ready_time)

worker_used_time += self.task_service_time

worker_used_time += self.distance_task_to_end

mask |= worker_used_time > self.worker_due_time[:, None]

return mask

def finished(self):

return torch.all(self.task_demand_now <= 0, 1)

class Objective:

def step_worker_start(self):

return self.worker_basic_cost

def step_worker_end(self):

return self.distance_last_to_this * self.worker_distance_cost

def step_task(self):

return self.distance_last_to_this * self.worker_distance_cost

Travelling Salesman Problem(TSP)

TSP

from greedrl.feature import *

from greedrl.variable import *

from greedrl import Problem

from greedrl import runner

features = [continuous_feature('task_location'),

variable_feature('distance_this_to_task'),

variable_feature('distance_task_to_end')]

variables = [task_demand_now('task_demand_now', feature='task_demand'),

task_demand_now('task_demand_this', feature='task_demand', only_this=True),

edge_variable('distance_last_to_this', feature='distance_matrix', last_to_this=True),

edge_variable('distance_this_to_task', feature='distance_matrix', this_to_task=True),

edge_variable('distance_task_to_end', feature='distance_matrix', task_to_end=True),

edge_variable('distance_last_to_loop', feature='distance_matrix', last_to_loop=True)]

class Constraint:

def do_task(self):

return self.task_demand_this

def mask_task(self):

mask = self.task_demand_now <= 0

return mask

def mask_worker_end(self):

return torch.any(self.task_demand_now > 0, 1)

def finished(self):

return torch.all(self.task_demand_now <= 0, 1)

class Objective:

def step_worker_end(self):

return self.distance_last_to_loop

def step_task(self):

return self.distance_last_to_this

Split Delivery Vehicle Routing Problem(SDVRP)

SDVRP

from greedrl.feature import *

from greedrl.variable import *

from greedrl import Problem

from greedrl import runner

features = [continuous_feature('task_demand'),

continuous_feature('worker_weight_limit'),

continuous_feature('distance_matrix'),

variable_feature('distance_this_to_task'),

variable_feature('distance_task_to_end')]

variables = [task_demand_now('task_demand'),

task_demand_now('task_demand_this', feature='task_demand', only_this=True),

feature_variable('task_weight'),

task_variable('task_weight_this', feature='task_weight'),

worker_variable('worker_weight_limit'),

worker_used_resource('worker_used_weight', task_require='task_weight'),

edge_variable('distance_last_to_this', feature='distance_matrix', last_to_this=True)]

class Constraint:

def do_task(self):

worker_weight_limit = self.worker_weight_limit - self.worker_used_weight

return torch.min(self.task_demand_this, worker_weight_limit // self.task_weight_this)

def mask_task(self):

mask = self.task_demand <= 0

worker_weight_limit = self.worker_weight_limit - self.worker_used_weight

mask |= self.task_weight > worker_weight_limit[:, None]

return mask

def finished(self):

return torch.all(self.task_demand <= 0, 1)

class Objective:

def step_worker_end(self):

return self.distance_last_to_this

def step_task(self):

return self.distance_last_to_this

Realistic Business Scenario

real-time Dynamic Pickup and Delivery Problem(DPDP)

from greedrl.feature import *

from greedrl.variable import *

from greedrl.function import *

from greedrl import Problem

from greedrl import runner

features = [local_category('task_order'),

global_category('task_type', 2),

global_category('task_new_order', 2),

variable_feature('time_this_to_task'),

continuous_feature('x_time_matrix'),

continuous_feature('task_due_time_x'),

continuous_feature('worker_task_mask')]

variables = [task_demand_now('task_demand_now', feature='task_demand'),

task_demand_now('task_demand_this', feature='task_demand', only_this=True),

task_variable('task_pickup_this', feature='task_pickup'),

task_variable('task_due_time_this', feature='task_due_time'),

feature_variable('task_order', feature='task_order'),

feature_variable('task_type', feature='task_type'),

feature_variable('task_new_pickup', feature='task_new_pickup'),

feature_variable('worker_task_mask', feature='worker_task_mask'),

worker_count_now('worker_count_now', feature='worker_count'),

worker_variable('worker_min_old_task_this', feature='worker_min_old_task'),

worker_variable('worker_max_new_order_this', feature='worker_max_new_order'),

worker_variable('worker_task_mask_this', feature='worker_task_mask'),

worker_used_resource('worker_used_old_task', task_require='task_old'),

worker_used_resource('worker_used_new_order', task_require='task_new_pickup'),

worker_used_resource('worker_used_time', edge_require='time_matrix'),

edge_variable('time_this_to_task', feature='x_time_matrix', this_to_task=True)]

class Constraint:

def do_task(self):

return self.task_demand_this

def mask_worker_start(self):

mask = self.worker_count_now <= 0

finished = self.task_demand_now <= 0

worker_task_mask = self.worker_task_mask | finished[:, None, :]

mask |= torch.all(worker_task_mask, 2)

return mask

def mask_worker_end(self):

mask = self.worker_used_old_task < self.worker_min_old_task_this

mask |= task_group_split(self.task_order, self.task_demand_now <= 0)

return mask

def mask_task(self):

mask = self.task_demand_now <= 0

mask |= task_group_priority(self.task_order, self.task_type, mask)

worker_max_new_order = self.worker_max_new_order_this - self.worker_used_new_order

mask |= self.task_new_pickup > worker_max_new_order[:, None]

mask |= self.worker_task_mask_this

return mask

def finished(self):

worker_mask = self.worker_count_now <= 0

task_mask = self.task_demand_now <= 0

worker_task_mask = worker_mask[:, :, None] | task_mask[:, None, :]

worker_task_mask |= self.worker_task_mask

batch_size = worker_task_mask.size(0)

worker_task_mask = worker_task_mask.view(batch_size, -1)

return worker_task_mask.all(1)

class Objective:

def step_task(self):

over_time = (self.worker_used_time - self.task_due_time_this).clamp(min=0)

pickup_time = self.worker_used_time * self.task_pickup_this

return self.worker_used_time + over_time + pickup_time

def step_finish(self):

return self.task_demand_now.sum(1) * 1000

Order Batching Problem

Batching

from greedrl import Problem, Solver

from greedrl.feature import *

from greedrl.variable import *

from greedrl import runner

features = [local_feature('task_area'),

local_feature('task_roadway'),

local_feature('task_area_group'),

sparse_local_feature('task_item_id', 'task_item_num'),

sparse_local_feature('task_item_owner_id', 'task_item_num'),

variable_feature('worker_task_item'),

variable_feature('worker_used_roadway'),

variable_feature('worker_used_area')]

variables = [task_demand_now('task_demand_now', feature='task_demand'),

task_demand_now('task_demand_this', feature='task_demand', only_this=True),

feature_variable('task_item_id'),

feature_variable('task_item_num'),

feature_variable('task_item_owner_id'),

feature_variable('task_area'),

feature_variable('task_area_group'),

feature_variable('task_load'),

feature_variable('task_group'),

worker_variable('worker_load_limit'),

worker_variable('worker_area_limit'),

worker_variable('worker_area_group_limit'),

worker_task_item('worker_task_item', item_id='task_item_id', item_num='task_item_num'),

worker_task_item('worker_task_item_owner', item_id='task_item_owner_id', item_num='task_item_num'),

worker_used_resource('worker_used_load', task_require='task_load'),

worker_used_resource('worker_used_area', task_require='task_area'),

worker_used_resource('worker_used_roadway', task_require='task_roadway'),

worker_used_resource('worker_used_area_group', task_require='task_area_group')]

class Constraint:

def do_task(self):

return self.task_demand_this

def mask_worker_end(self):

return self.worker_used_load < self.worker_load_limit

def mask_task(self):

# completed tasks

mask = self.task_demand_now <= 0

# mask |= task_group_priority(self.task_group, self.task_out_stock_time, mask)

NT = self.task_item_id.size(1)

worker_task_item = self.worker_task_item[:, None, :]

worker_task_item = worker_task_item.expand(-1, NT, -1)

task_item_in_worker = worker_task_item.gather(2, self.task_item_id.long())

task_item_in_worker = (task_item_in_worker > 0) & (self.task_item_num > 0)

worker_task_item_owner = self.worker_task_item_owner[:, None, :]

worker_task_item_owner = worker_task_item_owner.expand(-1, NT, -1)

task_item_owner_in_worker = worker_task_item_owner.gather(2, self.task_item_owner_id.long())

task_item_owner_in_worker = (task_item_owner_in_worker > 0) & (self.task_item_num > 0)

#

mask |= torch.any(task_item_in_worker & ~task_item_owner_in_worker, 2)

worker_load_limit = self.worker_load_limit - self.worker_used_load

mask |= (self.task_load > worker_load_limit[:, None])

task_area = self.task_area + self.worker_used_area[:, None, :]

task_area_num = task_area.clamp(0, 1).sum(2, dtype=torch.int32)

mask |= (task_area_num > self.worker_area_limit[:, None])

tak_area_group = self.task_area_group + self.worker_used_area_group[:, None, :]

tak_area_group_num = tak_area_group.clamp(0, 1).sum(2, dtype=torch.int32)

mask |= (tak_area_group_num > self.worker_area_group_limit[:, None])

return mask

def finished(self):

return torch.all(self.task_demand_now <= 0, 1)

class Objective:

def step_worker_end(self):

area_num = self.worker_used_area.clamp(0, 1).sum(1)

roadway_num = self.worker_used_roadway.clamp(0, 1).sum(1)

item_num = self.worker_task_item.clamp(0, 1).sum(1)

penalty = (self.worker_load_limit - self.worker_used_load) * 10

return area_num * 100 + roadway_num * 10 + item_num + penalty

🤠GreedRL-CVRP-pretrained model

Model description

Intended uses & limitations

You can use these model for solving the vehicle routing problems (VRPs) with reinforcement learning (RL).

How to use

Requirements

This library requires Python == 3.8. Miniconda / Anaconda is our recommended Python distribution.

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 torchaudio==0.12.1 --extra-index-url https://download.pytorch.org/whl/cu113

You need to compile first and add the resulting library greedrl_c to the PYTHONPATH

python setup.py build

export PYTHONPATH={root_path}/greedrl/build/lib.linux-x86_64-cpython-38/:$PYTHONPATH

Training

We provide example of Capacitated VRP(CVRP) for training and inference.

- Training data

We use the generated data for the training phase, the customers and depot locations are randomly generated in the unit square [0,1] X [0,1].

For the CVRP, we assume that the demand of each node is a discrete number in {1,...,9}, chosen uniformly at random.

- Start training

cd examples/cvrp

python train.py --model_filename cvrp_5000.pt --problem_size 5000

Evaluation

We provide some pretrained models for different CVRP problem sizes, such as cvrp_100, cvrp_1000, cvrp_2000 and cvrp_5000, that you can directly use for inference.

cd examples/cvrp

python solve.py --device cuda --model_name cvrp_5000.pt --problem_size 5000

Support

For commercial enquiries, please contact us.

About GreedRL

- Website: https://greedrl.github.io/