license: afl-3.0

This model performs well on this task and is inspired by information retrieval techniques. In 2019, Nils Reimers and Iryna Gurevych introduced a transformer model called Sentence-BERT in the paper "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks": https://doi.org/10.48550/arxiv.1908.10084

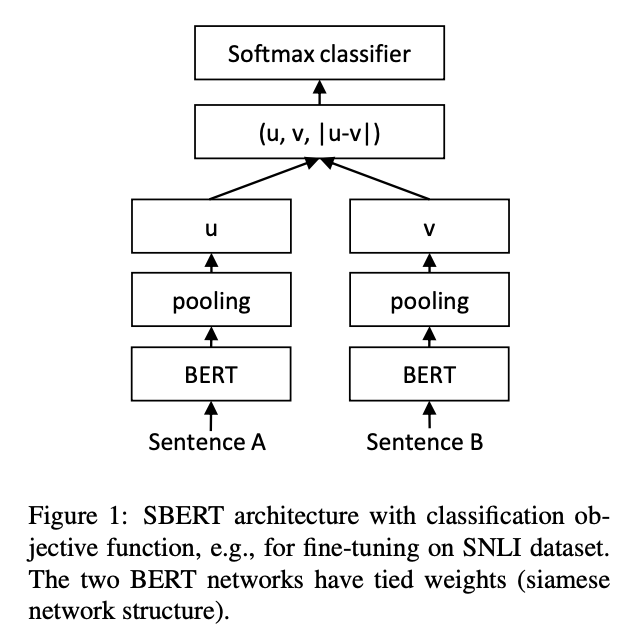

Sentence-BERT modifies BERT by adding a pooling operation to BERT's output. This lets it produce a fixed-size sentence embedding that can be compared using, for example, cosine similarity. To obtain meaningful sentence embeddings, in a vector space where similar sentences or sentence pairs lie close together, the authors fine-tuned BERT in a triplet network, as shown in the architecture figure below.
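The pooling and comparison steps can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: mean pooling is one possible pooling strategy, the token vectors are made-up toy values rather than real BERT outputs, and the helper names `mean_pool` and `cosine_similarity` are hypothetical, not part of any library.

```python
import math


def mean_pool(token_embeddings, attention_mask):
    """Average the token vectors of one sentence, skipping padded positions."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    count = 0
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            count += 1
            for i, value in enumerate(vector):
                summed[i] += value
    if count == 0:
        raise ValueError("attention_mask selects no tokens")
    return [s / count for s in summed]


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Toy token embeddings (assumed values, 3 dimensions for readability).
# Sentence A has two real tokens and one padding token; sentence B has one token.
sentence_a = [[1.0, 0.0, 2.0], [3.0, 0.0, 0.0], [9.0, 9.0, 9.0]]
mask_a = [1, 1, 0]
sentence_b = [[2.0, 0.0, 1.0]]
mask_b = [1]

# Both sentences end up with an embedding of the same fixed size.
emb_a = mean_pool(sentence_a, mask_a)
emb_b = mean_pool(sentence_b, mask_b)

score = cosine_similarity(emb_a, emb_b)
print(len(emb_a), len(emb_b), round(score, 4))
```

Whatever the number of tokens, each sentence is reduced to one vector of the same dimensionality, which is what makes a direct cosine comparison possible.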