datasets:

- Open-Orca/OpenOrca

language:

- en

library_name: transformers

pipeline_tag: text-generation

🐋 TBD 🐋

OpenOrca - Mistral - 7B - 8k

We fine-tuned Mistral 7B on our own OpenOrca dataset, which is our attempt to reproduce the dataset generated for Microsoft Research's Orca paper. Training used OpenChat packing and was run with Axolotl.

This release is trained on a curated, filtered subset of most of our GPT-4 augmented data. It is the same subset of our data that was used in our OpenOrcaxOpenChat-Preview2-13B model.

HF Leaderboard evals place this model #2 among all models smaller than 30B at release time, outperforming all but one 13B model.

TBD

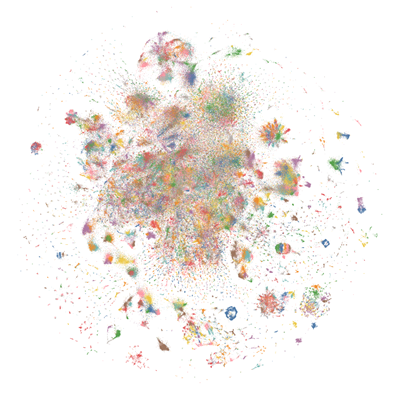

Want to visualize our full (pre-filtering) dataset? Check out our Nomic Atlas Map.

We are in the process of training more models, so keep a lookout on our org for releases coming soon with exciting partners.

We will also give sneak-peek announcements on our Discord, which you can find here:

or on the OpenAccess AI Collective Discord, for more information about the Axolotl trainer, here:

Prompt Template

We used OpenAI's Chat Markup Language (ChatML) format, with <|im_start|> and <|im_end|> tokens added to support this.
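As a rough illustration of the ChatML layout, the sketch below assembles a prompt string by hand. The helper name `build_chatml_prompt`, the placeholder system and user messages, and the exact newline placement are assumptions based on the general ChatML convention, not something this card specifies; check the tokenizer configuration shipped with the model before relying on it.

```python
from typing import Dict, List

IM_START = "<|im_start|>"
IM_END = "<|im_end|>"


def build_chatml_prompt(
    messages: List[Dict[str, str]], add_generation_prompt: bool = True
) -> str:
    """Render a list of {"role", "content"} messages as a ChatML string.

    Hypothetical helper for illustration only.
    """
    parts = []
    for message in messages:
        # Each turn: start token, role, newline, content, end token, newline.
        parts.append(f"{IM_START}{message['role']}\n{message['content']}{IM_END}\n")
    if add_generation_prompt:
        # Leave an open assistant turn for the model to complete.
        parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


# Placeholder messages; not a recommended system prompt for this model.
messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "How are you?"},
]
prompt = build_chatml_prompt(messages)
print(prompt)
```

The trailing open `assistant` turn is what cues the model to generate a reply; generation would then typically stop when the model emits `<|im_end|>`.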

Example Prompt Exchange

TBD

Evaluation

We have evaluated using the methodology and tools for the HuggingFace Leaderboard, and find that we have significantly improved upon the base model.

TBD

HuggingFaceH4 Open LLM Leaderboard Performance

TBD

GPT4ALL Leaderboard Performance

TBD

Dataset

We used a curated, filtered selection of most of the GPT-4 augmented data from our OpenOrca dataset, which aims to reproduce the Orca Research Paper dataset.

Training

We trained with 8x A6000 GPUs for 62 hours, completing 4 epochs of full fine-tuning on our dataset in one training run. Commodity cost was ~$400.
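To put these figures in perspective, here is a small back-of-the-envelope calculation. It uses only the numbers stated above; the per-epoch figure assumes all four epochs took equal time, which is not stated in this card, and the cost is approximate to begin with.

```python
NUM_GPUS = 8
WALL_CLOCK_HOURS = 62
NUM_EPOCHS = 4
TOTAL_COST_USD = 400  # approximate figure quoted above

# Total GPU time consumed by the run.
gpu_hours = NUM_GPUS * WALL_CLOCK_HOURS

# Implied rental price per GPU-hour.
cost_per_gpu_hour = TOTAL_COST_USD / gpu_hours

# Wall-clock time per epoch, assuming epochs of equal duration.
hours_per_epoch = WALL_CLOCK_HOURS / NUM_EPOCHS

print(f"GPU-hours: {gpu_hours}")
print(f"Implied cost per GPU-hour: ${cost_per_gpu_hour:.2f}")
print(f"Hours per epoch: {hours_per_epoch}")
```

This works out to 496 GPU-hours, or roughly $0.81 per GPU-hour.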

Citation

@misc{mukherjee2023orca,
  title={Orca: Progressive Learning from Complex Explanation Traces of GPT-4},
  author={Subhabrata Mukherjee and Arindam Mitra and Ganesh Jawahar and Sahaj Agarwal and Hamid Palangi and Ahmed Awadallah},
  year={2023},
  eprint={2306.02707},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}

@misc{longpre2023flan,
  title={The Flan Collection: Designing Data and Methods for Effective Instruction Tuning},
  author={Shayne Longpre and Le Hou and Tu Vu and Albert Webson and Hyung Won Chung and Yi Tay and Denny Zhou and Quoc V. Le and Barret Zoph and Jason Wei and Adam Roberts},
  year={2023},
  eprint={2301.13688},
  archivePrefix={arXiv},
  primaryClass={cs.AI}
}