Eurus-2-7B-SFT

Links

- 📜 Blog

- 🤗 PRIME Collection

- 🤗 SFT Data

Introduction

Eurus-2-7B-SFT is fine-tuned from Qwen2.5-Math-7B-Base, which was chosen for its strong mathematical capabilities. It is trained on Eurus-2-SFT-Data, an action-centric chain-of-thought reasoning dataset.

We apply imitation learning (supervised fine-tuning) as a warm-up stage to teach the model reasoning patterns. The resulting model serves as the starter model for Eurus-2-7B-PRIME.

Usage

We apply tailored prompts for coding and math tasks:

System Prompt

\nWhen tackling complex reasoning tasks, you have access to the following actions. Use them as needed to progress through your thought process.\n\n[ASSESS]\n\n[ADVANCE]\n\n[VERIFY]\n\n[SIMPLIFY]\n\n[SYNTHESIZE]\n\n[PIVOT]\n\n[OUTPUT]\n\nYou should strictly follow the format below:\n\n[ACTION NAME]\n\n# Your action step 1\n\n# Your action step 2\n\n# Your action step 3\n\n...\n\nNext action: [NEXT ACTION NAME]\n
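The format in the system prompt implies that a response is a sequence of blocks, each opened by a bracketed action name on its own line. The following is a minimal sketch of how such a response might be split into steps, assuming the model follows the format exactly. The helper `parse_actions` is hypothetical and the sample text is hand-written for illustration, not real model output.

```python
import re

# Action names listed in the system prompt.
ACTIONS = ["ASSESS", "ADVANCE", "VERIFY", "SIMPLIFY", "SYNTHESIZE", "PIVOT", "OUTPUT"]

# Matches a line that consists only of one bracketed action name.
# "Next action: [X]" lines are not matched because "[" is not at line start.
HEADER = re.compile(r"^\[(" + "|".join(ACTIONS) + r")\][ \t]*$", re.MULTILINE)


def parse_actions(response):
    """Split a response into (action_name, body) pairs (hypothetical helper)."""
    matches = list(HEADER.finditer(response))
    steps = []
    for i, match in enumerate(matches):
        # A block runs until the next action header or the end of the text.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(response)
        steps.append((match.group(1), response[match.end():end].strip()))
    return steps


# Hand-written sample in the prescribed format (an assumption, not model output).
sample = (
    "[ASSESS]\n\n# The problem asks for 2 + 3.\n\nNext action: [ADVANCE]\n\n"
    "[ADVANCE]\n\n# 2 + 3 = 5.\n\nNext action: [OUTPUT]\n\n"
    "[OUTPUT]\n\n\\boxed{5}\n"
)
steps = parse_actions(sample)
```

Real responses may deviate from the format, so a production parser would likely need to tolerate missing or malformed headers.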

Coding

{question} + "\n\nWrite Python code to solve the problem. Present the code in \n```python\nYour code\n```\nat the end."

Math

{question} + "\n\nPresent the answer in LaTex format: \\boxed{Your answer}"
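The prompts above can be assembled programmatically. The sketch below copies the system prompt and the two task suffixes from this card and combines them into a list of chat messages. The helper `build_messages`, the `task` argument, and the use of role/content dictionaries are assumptions made for illustration; this card does not specify how the system prompt is passed to the model.

```python
# Prompt strings are copied from this model card; everything else is illustrative.
SYSTEM_PROMPT = (
    "\nWhen tackling complex reasoning tasks, you have access to the following actions. "
    "Use them as needed to progress through your thought process.\n\n"
    "[ASSESS]\n\n[ADVANCE]\n\n[VERIFY]\n\n[SIMPLIFY]\n\n[SYNTHESIZE]\n\n[PIVOT]\n\n[OUTPUT]\n\n"
    "You should strictly follow the format below:\n\n"
    "[ACTION NAME]\n\n# Your action step 1\n\n# Your action step 2\n\n"
    "# Your action step 3\n\n...\n\n"
    "Next action: [NEXT ACTION NAME]\n"
)

# Built at runtime so the backticks do not close the fence around this example.
FENCE = "`" * 3

SUFFIXES = {
    "coding": (
        "\n\nWrite Python code to solve the problem. Present the code in \n"
        + FENCE + "python\nYour code\n" + FENCE + "\nat the end."
    ),
    "math": "\n\nPresent the answer in LaTex format: \\boxed{Your answer}",
}


def build_messages(question, task):
    """Return chat messages for a 'coding' or 'math' question (hypothetical helper)."""
    if task not in SUFFIXES:
        raise ValueError(f"task must be one of {sorted(SUFFIXES)}")
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": question + SUFFIXES[task]},
    ]


messages = build_messages("What is the sum of the first 10 positive integers?", "math")
```

The resulting list follows the common role/content chat convention, so it could be handed to a tokenizer's chat template or a chat-style inference server. Whether the released checkpoint expects exactly this layout should be confirmed against the official usage instructions.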

Evaluation

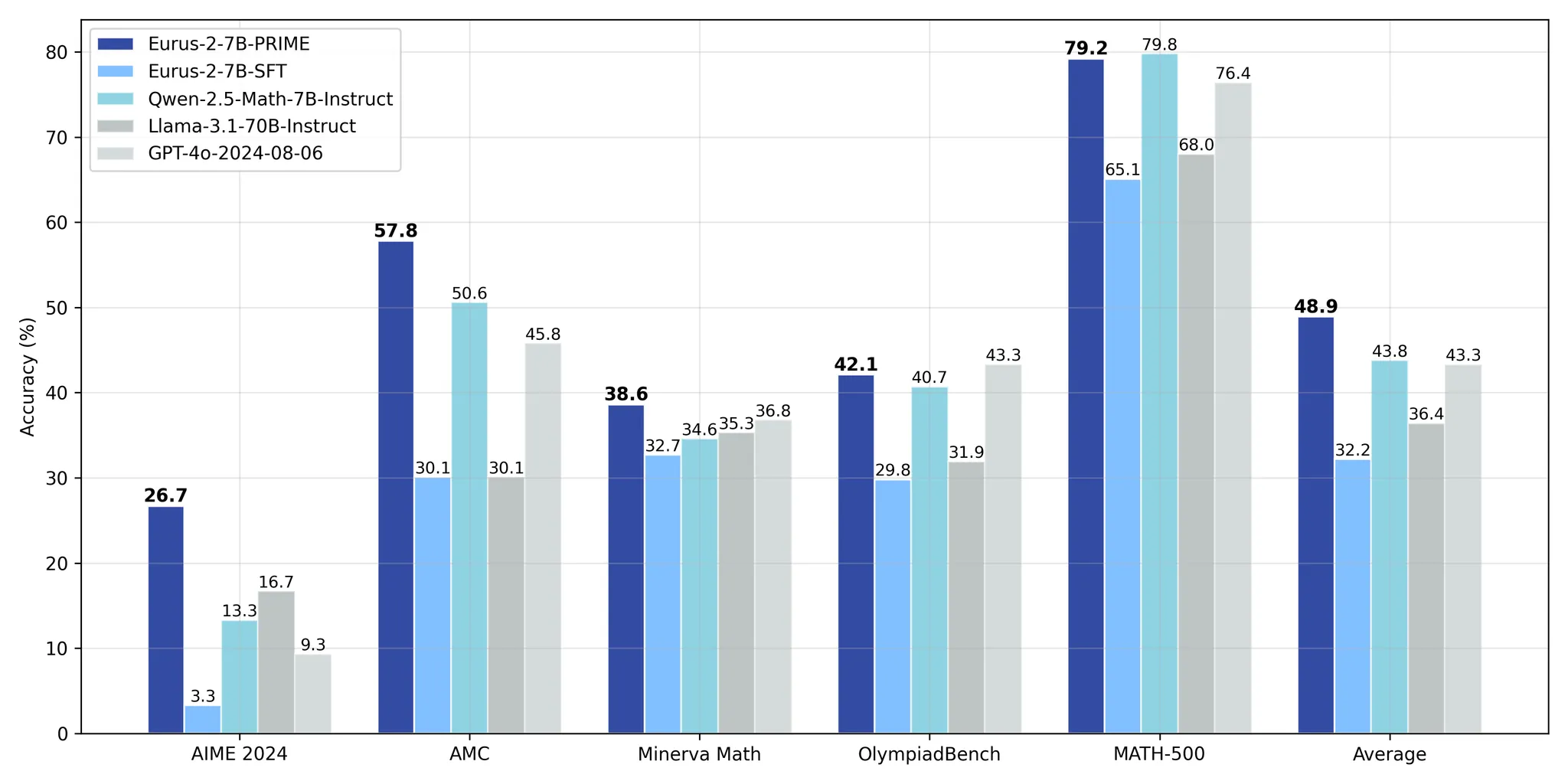

After finetuning, the performance of our Eurus-2-7B-SFT is shown in the following figure.

Citation

@misc{cui2024process,

title={Process Reinforcement through Implicit Rewards},

author={Ganqu Cui and Lifan Yuan and Zefan Wang and Hanbin Wang and Wendi Li and Bingxiang He and Yuchen Fan and Tianyu Yu and Qixin Xu and Weize Chen and Jiarui Yuan and Huayu Chen and Kaiyan Zhang and Xingtai Lv and Shuo Wang and Yuan Yao and Hao Peng and Yu Cheng and Zhiyuan Liu and Maosong Sun and Bowen Zhou and Ning Ding},

year={2025}

}

@article{yuan2024implicitprm,

title={Free Process Rewards without Process Labels},

author={Lifan Yuan and Wendi Li and Huayu Chen and Ganqu Cui and Ning Ding and Kaiyan Zhang and Bowen Zhou and Zhiyuan Liu and Hao Peng},

journal={arXiv preprint arXiv:2412.01981},

year={2024}

}
