Wav2Lip-HD: Improving Wav2Lip to achieve High-Fidelity Videos

This repository contains code for high-fidelity lip-syncing in videos. It uses the Wav2Lip algorithm for lip-syncing and the Real-ESRGAN algorithm for super-resolution. Combining the two yields videos that keep Wav2Lip's lip-sync while improving the visual quality of the frames.

Algorithm

The algorithm for achieving high-fidelity lip-syncing with Wav2Lip and Real-ESRGAN can be summarized as follows:

- The input video and audio are given to the Wav2Lip algorithm.

- A Python script extracts frames from the video generated by Wav2Lip.

- The frames are passed to the Real-ESRGAN algorithm to improve their quality.

- Then, the high-quality frames are converted to video using ffmpeg, along with the original audio.

- The result is a high-quality lip-synced video.

The specific steps for running this algorithm are described in the Testing Model section of this README.
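The frame-to-video step in the list above can be sketched as a small Python helper that builds the ffmpeg command. This is a minimal sketch, not the repository's actual script: the function name `build_ffmpeg_command`, the frame naming pattern `frame_%05d.png`, the 25 fps default, the output file name, and the encoder choices are all assumptions made for illustration.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_command(frames_dir, audio_path, output_path, fps=25.0,
                         pattern="frame_%05d.png"):
    """Build an ffmpeg command that joins numbered frames with an audio file.

    The frame pattern and default frame rate are assumptions; match them to
    how the frames were actually written and to the source video's rate.
    """
    return [
        "ffmpeg",
        "-y",                                    # overwrite an existing output file
        "-framerate", str(fps),                  # rate at which the frames are read
        "-i", str(Path(frames_dir) / pattern),   # numbered image sequence
        "-i", str(audio_path),                   # audio track to mux in
        "-c:v", "libx264",                       # encode video as H.264
        "-pix_fmt", "yuv420p",                   # widely compatible pixel format
        "-c:a", "aac",                           # encode audio as AAC
        "-shortest",                             # stop at the end of the shorter stream
        str(output_path),
    ]


if __name__ == "__main__":
    # Hypothetical paths, following the directory names used in this README.
    cmd = build_ffmpeg_command("frames_hd", "input_audios/ai.wav",
                               "output_videos_hd/kennedy.mp4")
    print(" ".join(cmd))
    # To run it for real (requires ffmpeg on PATH):
    # subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems when paths contain spaces.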

Testing Model

To test the "Wav2Lip-HD" model, follow these steps:

Clone this repository and install the requirements with the following commands (make sure Python and CUDA are already installed):

    git clone https://github.com/saifhassan/Wav2Lip-HD.git
    cd Wav2Lip-HD
    pip install -r requirements.txt

Download the weights and place each file in the listed directory:

| Model | Directory | Download Link |
|---|---|---|
| Wav2Lip | checkpoints/ | Link |
| ESRGAN | experiments/001_ESRGAN_x4_f64b23_custom16k_500k_B16G1_wandb/models/ | Link |
| Face_Detection | face_detection/detection/sfd/ | Link |
| Real-ESRGAN | Real-ESRGAN/gfpgan/weights/ | Link |
| Real-ESRGAN | Real-ESRGAN/weights/ | Link |
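Before running the pipeline, it can help to confirm that every weight directory from the table has something in it. The following is a minimal sketch under stated assumptions: the helper name `find_missing_weight_dirs` is hypothetical, and the check only looks for the presence of any file, so it cannot tell a real checkpoint from a placeholder file.

```python
from pathlib import Path

# Directories taken from the table above, relative to the repository root.
WEIGHT_DIRS = [
    "checkpoints",
    "experiments/001_ESRGAN_x4_f64b23_custom16k_500k_B16G1_wandb/models",
    "face_detection/detection/sfd",
    "Real-ESRGAN/gfpgan/weights",
    "Real-ESRGAN/weights",
]


def find_missing_weight_dirs(repo_root="."):
    """Return the weight directories that are absent or contain no files."""
    root = Path(repo_root)
    missing = []
    for rel in WEIGHT_DIRS:
        directory = root / rel
        # A directory counts as populated if it holds at least one regular file.
        has_file = directory.is_dir() and any(
            p.is_file() for p in directory.iterdir()
        )
        if not has_file:
            missing.append(rel)
    return missing


if __name__ == "__main__":
    for rel in find_missing_weight_dirs():
        print(f"No weight files found in: {rel}")
```

Run it from the repository root; an empty output means every listed directory contains at least one file.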

Put the input video in the input_videos directory and the input audio in the input_audios directory.

Open the run_final.sh file and modify the following parameters:

- filename=kennedy (video file name only, without extension)

- input_audio=input_audios/ai.wav (audio file name with extension)

Execute run_final.sh with the following command:

    bash run_final.sh

Outputs

- The output_videos_wav2lip directory contains the video generated by the Wav2Lip algorithm.

- The frames_wav2lip directory contains the frames extracted from that video.

- The frames_hd directory contains the frames after super-resolution with the Real-ESRGAN algorithm.

- The output_videos_hd directory contains the final high-quality video generated by Wav2Lip-HD.
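Once a run finishes, one quick sanity check is to compare the number of frames in frames_wav2lip and frames_hd, since super-resolution should produce one output frame per input frame. The sketch below is illustrative only: the helper names are hypothetical, and it assumes the frames are image files stored directly inside those two directories, which may not match the actual layout (for example, if frames are grouped into per-video subdirectories).

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}  # assumed frame file formats


def count_frames(directory):
    """Count image files directly inside `directory` (0 if it does not exist)."""
    path = Path(directory)
    if not path.is_dir():
        return 0
    return sum(
        1 for p in path.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def frames_match(lowres_dir="frames_wav2lip", hd_dir="frames_hd"):
    """Return (ok, n_lowres, n_hd); ok is True when the counts are equal and non-zero."""
    n_low = count_frames(lowres_dir)
    n_hd = count_frames(hd_dir)
    return (n_low > 0 and n_low == n_hd, n_low, n_hd)


if __name__ == "__main__":
    ok, n_low, n_hd = frames_match()
    print(f"frames_wav2lip: {n_low}, frames_hd: {n_hd}, match: {ok}")
```

A mismatch usually means the super-resolution step stopped early or wrote its frames somewhere else.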

Results

Wav2Lip-HD produces results in two forms: frames and videos. Examples of both are shown below:

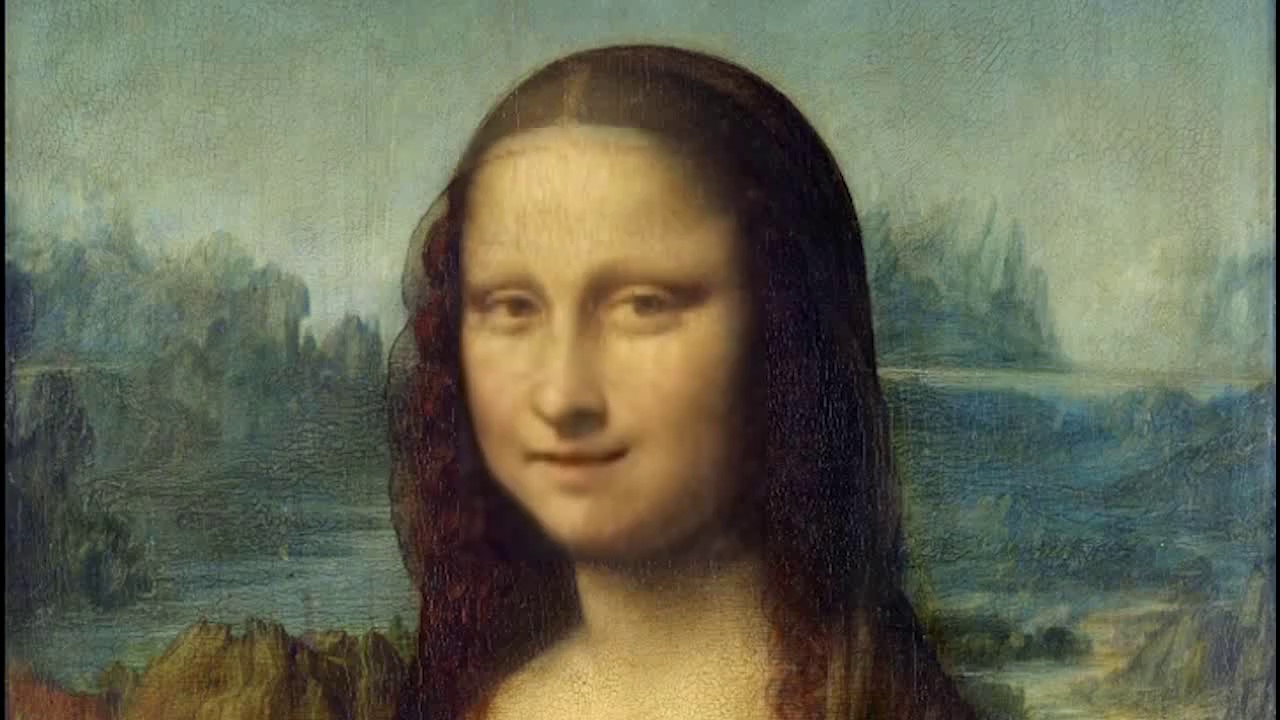

Example output frames

| Frame by Wav2Lip | Optimized Frame |
|---|---|

Example output videos

| Video by Wav2Lip | Optimized Video |
|---|---|

Acknowledgements

We would like to thank the following repositories and libraries for their contributions to our work:

- The Wav2Lip repository, which provides the core lip-sync model of our algorithm.

- The face-parsing.PyTorch repository, which provides us with a model for face segmentation.

- The Real-ESRGAN repository, which provides the super-resolution component of our algorithm.

- ffmpeg, which we use for converting frames to video.