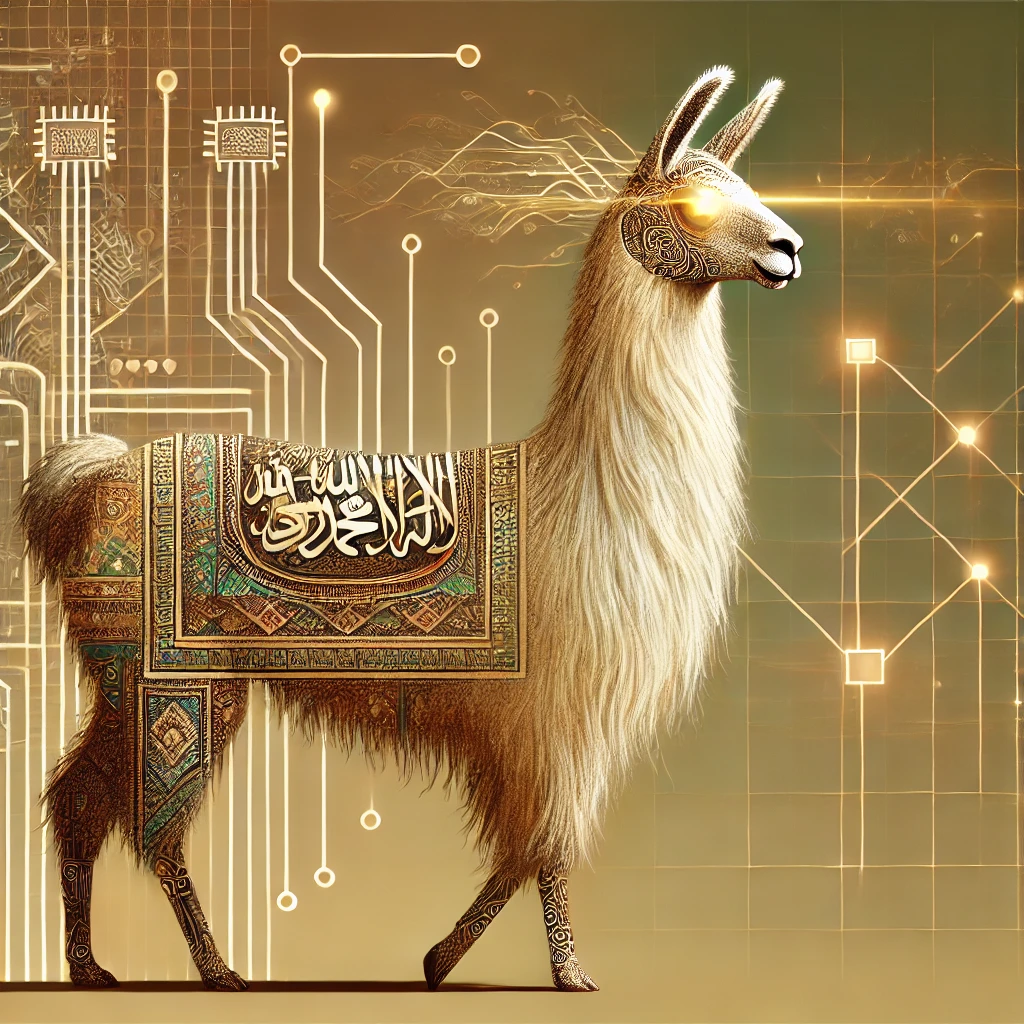

Welcome to Barka-2b-it: the best 2B Arabic LLM

Motivation:

The goal of this project was to adapt large language models to Arabic and create a new state-of-the-art Arabic LLM. Because Arabic instruction fine-tuning data is scarce, few LLMs have been trained specifically on Arabic, which is surprising given how many people speak the language.

Our final model was trained on a high-quality, synthetically generated instruction fine-tuning (IFT) dataset and then evaluated on the Hugging Face Arabic leaderboard.

Training:

This is the 2B version of the model: a LoRA adapter on top of google/gemma-2-2b-it. It was trained for 2 days on a single A100 GPU using LoRA with rank 128, a learning rate of 1e-4, and a cosine learning-rate schedule.
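To make the two hyperparameters above concrete, here is a minimal plain-Python sketch of a cosine learning-rate schedule peaking at 1e-4 and of how many extra weights a rank-128 LoRA adapter adds to one linear layer. It is an illustration, not the training code: the warmup length (0 by default), the total step count, and the 2048 x 2048 layer size are hypothetical values chosen for the example, since the card does not state them.

```python
import math

PEAK_LR = 1e-4   # learning rate reported in the card
LORA_RANK = 128  # LoRA rank reported in the card


def cosine_lr(step: int, total_steps: int, peak_lr: float = PEAK_LR, warmup_steps: int = 0) -> float:
    """Learning rate at `step`: optional linear warmup, then cosine decay to 0.

    warmup_steps=0 is an assumption; the card does not mention a warmup.
    """
    if warmup_steps > 0 and step < warmup_steps:
        return peak_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


def lora_trainable_params(d_in: int, d_out: int, rank: int = LORA_RANK) -> int:
    """Extra parameters LoRA adds to one d_in -> d_out linear layer.

    LoRA learns A (rank x d_in) and B (d_out x rank) while the original
    weight stays frozen, so the count is rank * (d_in + d_out).
    """
    return rank * (d_in + d_out)


if __name__ == "__main__":
    total = 1000  # hypothetical number of optimizer steps
    for s in (0, 250, 500, 750, 1000):
        print(s, f"{cosine_lr(s, total):.2e}")
    # hypothetical 2048 x 2048 projection, for illustration only
    print(lora_trainable_params(2048, 2048))
```

The decay shape matches the usual half-cosine schedule (full learning rate at the start, half at the midpoint, zero at the end); a rank of 128 is on the large side for LoRA, trading more trainable parameters for more adapter capacity.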

Evaluation:

The model is listed on the Hugging Face Arabic leaderboard, with the following scores (higher is better):

| Model | Average | ACVA | AlGhafa | MMLU | EXAMS | ARC Challenge | ARC Easy | BoolQ | COPA | HellaSwag | OpenBookQA | PIQA | RACE | SciQ | ToxiGen |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Slim205/Barka-2b-it | 46.98 | 39.5 | 46.5 | 37.06 | 38.73 | 35.78 | 36.97 | 73.77 | 50 | 28.98 | 43.84 | 56.36 | 36.19 | 55.78 | 78.29 |
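The Average column matches the unweighted mean of the 14 benchmark scores in the row. The short check below recomputes it from the table values; it is only an arithmetic check, not the leaderboard's own aggregation code.

```python
# Benchmark scores copied from the table row for Slim205/Barka-2b-it
scores = {
    "ACVA": 39.5, "AlGhafa": 46.5, "MMLU": 37.06, "EXAMS": 38.73,
    "ARC Challenge": 35.78, "ARC Easy": 36.97, "BoolQ": 73.77, "COPA": 50,
    "HellaSwag": 28.98, "OpenBookQA": 43.84, "PIQA": 56.36, "RACE": 36.19,
    "SciQ": 55.78, "ToxiGen": 78.29,
}

# Unweighted mean over all benchmarks
average = sum(scores.values()) / len(scores)
print(f"{average:.2f}")  # 46.98
```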

Please refer to https://github.com/Slim205/Arabicllm/ for more details.

Using the model:

The model is a LoRA adapter for google/gemma-2-2b-it, so it is loaded with transformers and peft. Here is an example:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM
from peft import PeftModel
import torch

base_model_id = "google/gemma-2-2b-it"  # base model the adapter was trained on
peft_model_id = "Slim205/Barka-2b-it"   # Barka LoRA adapter

device = "cuda" if torch.cuda.is_available() else "cpu"

# Load the base model, then attach the Barka LoRA adapter on top of it
base_model = AutoModelForCausalLM.from_pretrained(base_model_id, torch_dtype=torch.bfloat16).to(device)
tokenizer = AutoTokenizer.from_pretrained(peft_model_id)
model = PeftModel.from_pretrained(base_model, peft_model_id)

input_text = "ما هي عاصمة تونس؟"  # "What is the capital of Tunisia?"
chat = [
    {"role": "user", "content": input_text},
]

# The chat template already inserts <bos>, so no extra special tokens are added when encoding
prompt = tokenizer.apply_chat_template(chat, tokenize=False, add_generation_prompt=True)
inputs = tokenizer.encode(prompt, add_special_tokens=False, return_tensors="pt")

# Generate with the adapter-equipped model using nucleus sampling
outputs = model.generate(
    input_ids=inputs.to(device),
    max_new_tokens=32,
    top_p=0.9,
    do_sample=True,
)

print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```

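Example output (`do_sample=True`, so responses vary between runs):

```
user
ما هي عاصمة تونس؟
model
عاصمة تونس هي تونس. يشار إليها عادة باسم مدينة تونس. المدينة لديها حوالي 2،500،000 نسمة
```

English translation: "The capital of Tunisia is Tunis. It is commonly referred to as the city of Tunis. The city has about 2,500,000 inhabitants"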
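The decoded text starts with `user` and `model` because Gemma's chat template wraps each turn in special tokens, and `skip_special_tokens=True` removes those tokens but keeps the role names. The sketch below approximates that format in plain Python, assuming the Gemma-style `<bos>`, `<start_of_turn>` and `<end_of_turn>` markers; `format_gemma_prompt` and `strip_special_tokens` are hypothetical helpers for illustration, not the tokenizer's real implementation, and real decoding may differ slightly in whitespace.

```python
# Hypothetical helpers approximating Gemma's chat format; NOT the tokenizer's real code.
SPECIAL_TOKENS = ("<bos>", "<start_of_turn>", "<end_of_turn>")


def format_gemma_prompt(messages, add_generation_prompt=True):
    """Build a Gemma-style prompt string from a list of {"role", "content"} dicts."""
    parts = ["<bos>"]
    for message in messages:
        # Gemma names the assistant role "model"
        role = "model" if message["role"] == "assistant" else message["role"]
        parts.append(f"<start_of_turn>{role}\n{message['content'].strip()}<end_of_turn>\n")
    if add_generation_prompt:
        # Open a model turn so generation continues as the assistant
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


def strip_special_tokens(text):
    """Roughly what skip_special_tokens=True does to the decoded text."""
    for token in SPECIAL_TOKENS:
        text = text.replace(token, "")
    return text


prompt = format_gemma_prompt([{"role": "user", "content": "ما هي عاصمة تونس؟"}])
print(prompt)
print(strip_special_tokens(prompt + "عاصمة تونس هي تونس.<end_of_turn>"))
```

This also explains why the usage example encodes the prompt with `add_special_tokens=False`: the template string already begins with `<bos>`, so adding special tokens again would duplicate it.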

Feel free to use this model and send me your feedback.

Together, we can advance Arabic LLM development!
