DistilBERT Incoherence Classifier

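This is a fine-tuned DistilBERT model that classifies text by coherence. It labels each input as `coherent` or as one of six types of incoherence: `grammatical_errors`, `random_bytes`, `random_tokens`, `random_words`, `run_on`, and `word_soup`.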

Model Details

- Model: DistilBERT (distilbert-base-multilingual-cased)

- Task: Text Classification (Coherence Detection)

- Fine-tuning: The model was fine-tuned on a custom, synthetically generated dataset containing examples of each type of incoherence.

- Training Dataset: The model was trained on the incoherent-text-dataset dataset, hosted on Hugging Face.

Training Metrics

| Epoch | Training Loss | Validation Loss | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|---|
| 1 | 0.037500 | 0.071958 | 0.984995 | 0.985002 | 0.984995 | 0.984564 |
| 2 | 0.008900 | 0.068670 | 0.985995 | 0.985973 | 0.985995 | 0.985603 |
| 3 | 0.008500 | 0.058111 | 0.990330 | 0.990260 | 0.990330 | 0.990262 |

Evaluation Metrics

The following metrics were measured on the test set:

| Metric | Value |
|---|---|
| Loss | 0.049511 |
| Accuracy | 0.991 |
| Precision | 0.990958 |
| Recall | 0.991 |
| F1-Score | 0.990962 |
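The card does not state how precision, recall, and F1 were averaged across the seven classes. In every training epoch and on the test set, recall equals accuracy exactly while precision differs, which is consistent with support-weighted averaging (as in scikit-learn's `average="weighted"`): each class's recall TP_c / n_c is weighted by n_c / N, so the sum collapses to the total number of correct predictions divided by N, which is accuracy. The following is a minimal pure-Python sketch of weighted averaging under that assumption. It is not the author's evaluation code, and `weighted_metrics` is a hypothetical helper name.

```python
from collections import Counter
from typing import Dict, Sequence


def weighted_metrics(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Accuracy plus support-weighted precision, recall and F1 (assumed averaging mode)."""
    n = len(y_true)
    support = Counter(y_true)    # number of true examples per class
    predicted = Counter(y_pred)  # number of predictions per class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # true positives per class

    totals = {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    # Only classes present in y_true contribute; any other class would get weight 0.
    for c in support:
        weight = support[c] / n
        precision = correct[c] / predicted[c] if predicted[c] else 0.0
        recall = correct[c] / support[c]
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        totals["precision"] += weight * precision
        totals["recall"] += weight * recall
        totals["f1"] += weight * f1
    totals["accuracy"] = sum(correct.values()) / n
    return totals
```

On any input, the weighted `recall` returned here matches `accuracy` up to floating-point rounding, while weighted precision generally does not, which mirrors the pattern in the tables above.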

Classification Report (test set):

| Class | Precision | Recall | F1-score | Support |
|---|---|---|---|---|
| coherent | 0.99 | 0.99 | 0.99 | 1500 |
| grammatical_errors | 0.96 | 0.94 | 0.95 | 250 |
| random_bytes | 1.00 | 1.00 | 1.00 | 250 |
| random_tokens | 1.00 | 1.00 | 1.00 | 250 |
| random_words | 1.00 | 1.00 | 1.00 | 250 |
| run_on | 1.00 | 0.99 | 1.00 | 250 |
| word_soup | 1.00 | 1.00 | 1.00 | 250 |
| accuracy | | | 0.99 | 3000 |
| macro avg | 0.99 | 0.99 | 0.99 | 3000 |
| weighted avg | 0.99 | 0.99 | 0.99 | 3000 |

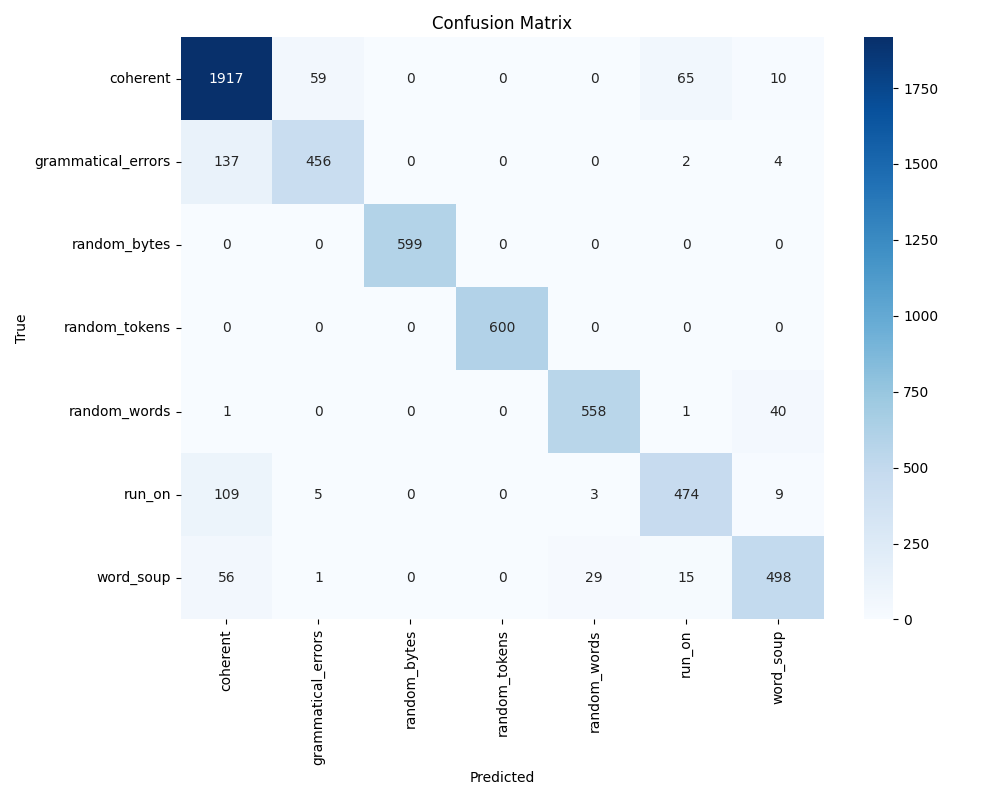

Confusion Matrix

The confusion matrix above shows, for each true class, how the model's test-set predictions are distributed across the seven classes.

Usage

This model can be used for text classification, specifically for detecting incoherent text and identifying which type of incoherence it contains. To test your own text, you can use the `inference_example` function provided in the accompanying notebook.
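As an alternative to the notebook, the sketch below filters texts by predicted label. It assumes a classifier with the call shape of a `transformers` text-classification pipeline (a list of strings in, one `{"label": ..., "score": ...}` dict per string out) and assumes the model config maps class ids to the label names from the classification report. `flag_incoherent` and `min_score` are hypothetical names introduced for illustration.

```python
from typing import Any, Callable, Dict, List, Tuple

# Label names as listed in this card's classification report.
COHERENT = "coherent"
INCOHERENCE_TYPES = {
    "grammatical_errors",
    "random_bytes",
    "random_tokens",
    "random_words",
    "run_on",
    "word_soup",
}

# Assumed call shape of a transformers text-classification pipeline:
# list of strings in, one {"label": str, "score": float} dict per string out.
Classifier = Callable[[List[str]], List[Dict[str, Any]]]


def flag_incoherent(
    texts: List[str], classifier: Classifier, min_score: float = 0.5
) -> List[Tuple[str, str, float]]:
    """Return (text, label, score) for each text predicted as incoherent.

    Incoherent predictions scoring below min_score are skipped as uncertain.
    """
    flagged = []
    for text, pred in zip(texts, classifier(texts)):
        label, score = pred["label"], float(pred["score"])
        if label != COHERENT and label not in INCOHERENCE_TYPES:
            # Generic names such as "LABEL_3" appear when the config lacks id2label.
            raise ValueError(f"unexpected label {label!r}")
        if label != COHERENT and score >= min_score:
            flagged.append((text, label, score))
    return flagged
```

With `transformers` installed, `classifier` could be `pipeline("text-classification", model=MODEL_ID)`, where `MODEL_ID` stands for this model's Hub repository id, which this card does not give. Taking the classifier as a parameter keeps the filtering logic testable without downloading the model.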

Limitations

The model was trained and evaluated only on synthetically generated data, so the test-set scores above may not carry over to real-world text. Additional real-world data would need to be collected before the model's performance in a real-world setting can be assessed.

License

CC-BY-SA 4.0
