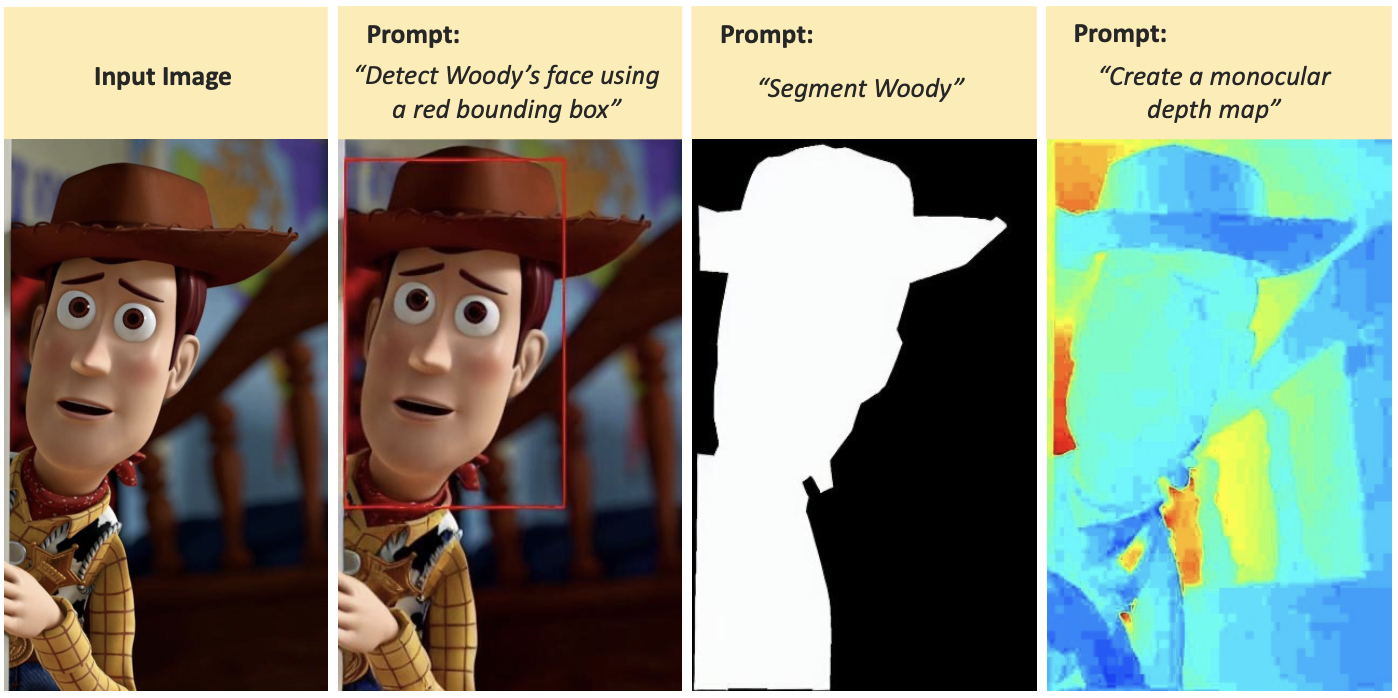

InstructCV: Instruction-Tuned Text-to-Image Diffusion Models as Vision Generalists

GitHub: https://github.com/AlaaLab/InstructCV

Example

To use InstructCV, install diffusers using main for now. The pipeline will be available in the next release

pip install diffusers accelerate safetensors transformers

import PIL

import requests

import torch

from diffusers import StableDiffusionInstructPix2PixPipeline, EulerAncestralDiscreteScheduler

model_id = "yulu2/InstructCV"

pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, torch_dtype=torch.float16, safety_checker=None, variant="ema")

pipe.to("cuda")

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

url = "put your url here"

def download_image(url):

image = PIL.Image.open(requests.get(url, stream=True).raw)

image = PIL.ImageOps.exif_transpose(image)

image = image.convert("RGB")

return image

image = download_image(URL)

seed = random.randint(0, 100000)

generator = torch.manual_seed(seed)

width, height = image.size

factor = 512 / max(width, height)

factor = math.ceil(min(width, height) * factor / 64) * 64 / min(width, height)

width = int((width * factor) // 64) * 64

height = int((height * factor) // 64) * 64

image = ImageOps.fit(image, (width, height), method=Image.Resampling.LANCZOS)

prompt = "Detect the person."

images = pipe(prompt, image=image, num_inference_steps=100, generator=generator).images[0]

images[0]

- Downloads last month

- 103

This model does not have enough activity to be deployed to Inference API (serverless) yet. Increase its social

visibility and check back later, or deploy to Inference Endpoints (dedicated)

instead.