---
license: apache-2.0
language:
- fr
library_name: transformers
inference: false
---

CamemBERT-L2

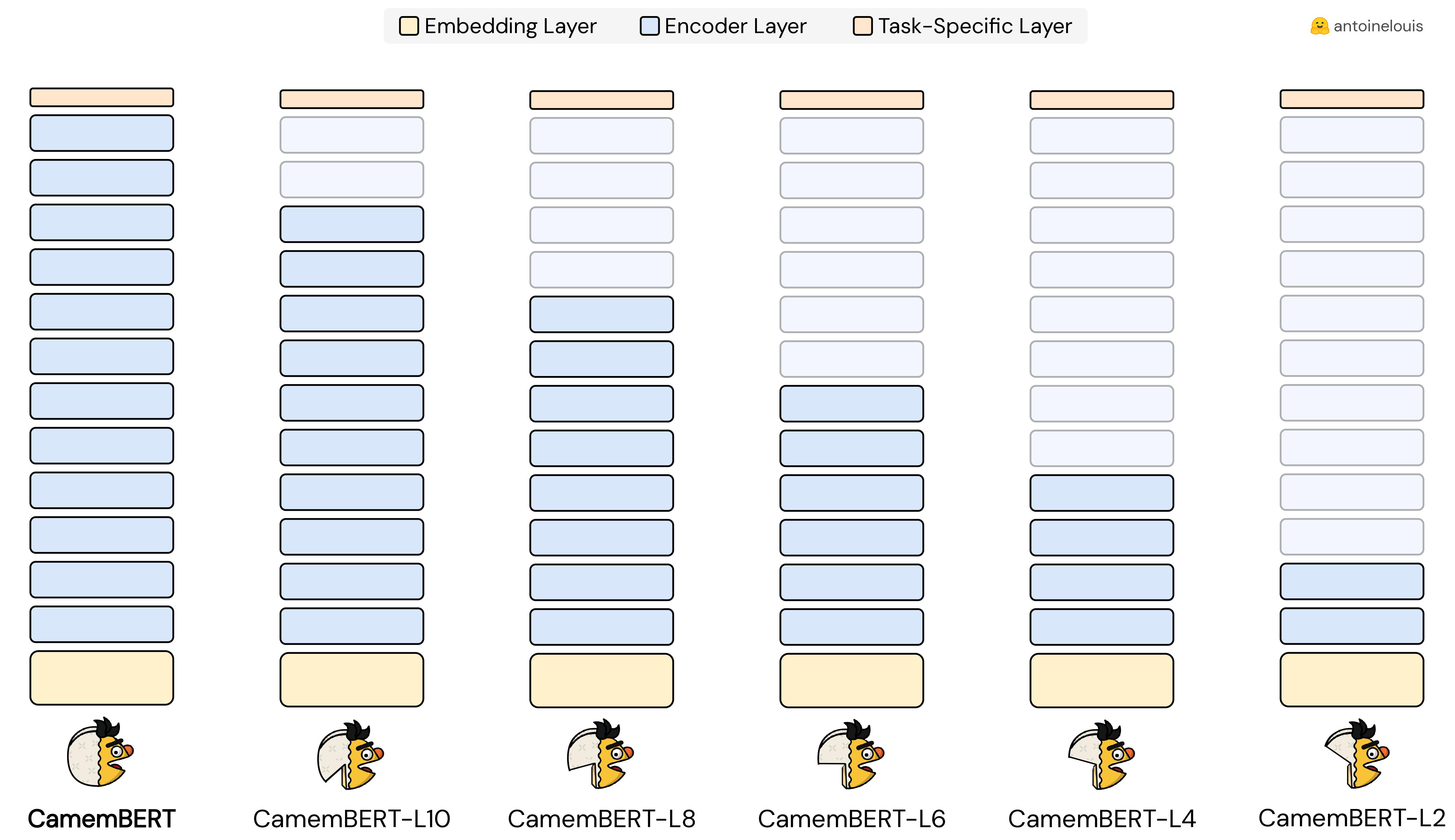

This model is a pruned version of the pre-trained CamemBERT checkpoint, obtained by dropping the top layers of the original model: it keeps only the bottom 2 of its 12 Transformer encoder layers.
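One way to obtain such a checkpoint is to filter the original weights and discard every parameter that belongs to an encoder layer above the cut-off. The sketch below assumes Hugging Face's parameter naming (`encoder.layer.<i>.` followed by the sub-module name, possibly prefixed with `roberta.`). `drop_top_layers` is a hypothetical helper, not necessarily how the author produced this model. It only needs a name-to-value mapping, so it applies to a PyTorch `state_dict()` as well as to the toy dictionary used here.

```python
import re
from typing import Dict, Mapping, TypeVar

V = TypeVar("V")

# Captures the layer index in names such as
# "encoder.layer.7.attention.self.query.weight" or
# "roberta.encoder.layer.7.output.dense.bias" (assumed HF naming).
_LAYER_RE = re.compile(r"(?:^|\.)encoder\.layer\.(\d+)\.")


def drop_top_layers(state_dict: Mapping[str, V], keep: int) -> Dict[str, V]:
    """Return a copy of `state_dict` without encoder layers whose index is >= `keep`.

    Parameters without a layer index (embeddings, pooler, heads) are kept as-is.
    """
    pruned: Dict[str, V] = {}
    for name, value in state_dict.items():
        match = _LAYER_RE.search(name)
        if match is None or int(match.group(1)) < keep:
            pruned[name] = value
    return pruned


# Toy example: strings stand in for weight tensors.
toy = {
    "embeddings.word_embeddings.weight": "E",
    "encoder.layer.0.attention.self.query.weight": "q0",
    "encoder.layer.1.attention.self.query.weight": "q1",
    "encoder.layer.11.attention.self.query.weight": "q11",
    "pooler.dense.weight": "P",
}
print(sorted(drop_top_layers(toy, keep=2)))
```

The filtered weights would then be loaded into a model whose config sets `num_hidden_layers=2`, so that the architecture matches the remaining layers.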

Usage

You can use the raw model for masked language modeling (MLM), but it is mostly intended to be fine-tuned on a downstream task, especially one that uses the whole sentence to make decisions, such as text classification, extractive question answering, or semantic search. For tasks such as text generation, you should look at autoregressive models like BelGPT-2.

You can use this model directly with a pipeline for masked language modeling:

```python
from transformers import pipeline

unmasker = pipeline('fill-mask', model='antoinelouis/camembert-L2')
# CamemBERT's mask token is "<mask>", not BERT's "[MASK]".
unmasker("Bonjour, je suis un <mask> modèle.")
```

You can also use this model to get the features of a given text:

```python
from transformers import AutoTokenizer, AutoModel

tokenizer = AutoTokenizer.from_pretrained('antoinelouis/camembert-L2')
model = AutoModel.from_pretrained('antoinelouis/camembert-L2')

text = "Remplacez-moi par le texte de votre choix."
# Tokenize into PyTorch tensors (input_ids, attention_mask).
encoded_input = tokenizer(text, return_tensors='pt')
# Forward pass: output.last_hidden_state holds one 768-dim vector per token.
output = model(**encoded_input)
```
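The card does not say how to turn these per-token features into a single vector per text, which tasks like semantic search need. A common choice, assumed here rather than prescribed by the author, is to average `last_hidden_state` over the non-padding tokens using the attention mask. `mean_pool` is a hypothetical helper written with PyTorch, which the snippet above already relies on:

```python
import torch


def mean_pool(last_hidden_state: torch.Tensor, attention_mask: torch.Tensor) -> torch.Tensor:
    """Average token embeddings over non-padding positions.

    last_hidden_state: (batch, seq_len, hidden); attention_mask: (batch, seq_len) of 0/1.
    Returns a (batch, hidden) tensor.
    """
    mask = attention_mask.unsqueeze(-1).to(last_hidden_state.dtype)  # (batch, seq_len, 1)
    summed = (last_hidden_state * mask).sum(dim=1)  # padding positions contribute 0
    counts = mask.sum(dim=1).clamp(min=1e-9)  # avoid division by zero for empty rows
    return summed / counts


# Toy check: the padded second position of the second row is ignored.
hidden = torch.tensor([[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [100.0, 100.0]]])
mask = torch.tensor([[1, 1], [1, 0]])
print(mean_pool(hidden, mask))
```

Applied to the snippet above, `mean_pool(output.last_hidden_state, encoded_input['attention_mask'])` would return a tensor of shape (1, 768). As the card notes, the model is mainly intended to be fine-tuned, so such vectors are best treated as a starting point.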

Variations

| Model | #Params | Size | Pruning |
|---|---|---|---|
| CamemBERT | 110.6M | 445MB | - |
| CamemBERT-L10 | 96.4M | 386MB | -13% |
| CamemBERT-L8 | 82.3M | 329MB | -26% |
| CamemBERT-L6 | 68.1M | 272MB | -38% |
| CamemBERT-L4 | 53.9M | 216MB | -51% |
| CamemBERT-L2 | 39.7M | 159MB | -64% |
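The #Params and Pruning columns are consistent with each variant keeping the bottom n of CamemBERT's 12 encoder layers. The sketch below recomputes them, assuming camembert-base's published hyperparameters (a vocabulary of 32,005 tokens, 514 positions, hidden size 768, feed-forward size 3072) and counting the embeddings, encoder layers and pooler but not the MLM head. The function names are illustrative.

```python
# Assumed camembert-base hyperparameters (from its public config, not from this card).
VOCAB_SIZE = 32005
MAX_POSITIONS = 514
TYPE_VOCAB_SIZE = 1
HIDDEN = 768
INTERMEDIATE = 3072


def linear(n_in: int, n_out: int) -> int:
    """Weight matrix plus bias of a dense layer."""
    return n_in * n_out + n_out


def layer_norm(dim: int) -> int:
    """Scale and shift vectors of a LayerNorm."""
    return 2 * dim


def embedding_params() -> int:
    # Token, position and token-type embeddings, followed by a LayerNorm.
    return (VOCAB_SIZE + MAX_POSITIONS + TYPE_VOCAB_SIZE) * HIDDEN + layer_norm(HIDDEN)


def encoder_layer_params() -> int:
    # Self-attention: Q, K, V and output projections, then a LayerNorm.
    attention = 4 * linear(HIDDEN, HIDDEN) + layer_norm(HIDDEN)
    # Feed-forward: up- and down-projections, then a LayerNorm.
    feed_forward = linear(HIDDEN, INTERMEDIATE) + linear(INTERMEDIATE, HIDDEN) + layer_norm(HIDDEN)
    return attention + feed_forward


def model_params(num_layers: int) -> int:
    # Embeddings + encoder stack + pooler (the MLM head is not counted).
    return embedding_params() + num_layers * encoder_layer_params() + linear(HIDDEN, HIDDEN)


if __name__ == "__main__":
    base = model_params(12)
    for layers in (12, 10, 8, 6, 4, 2):
        n = model_params(layers)
        print(f"L{layers}: {n / 1e6:.1f}M params, {100 * (n - base) / base:+.0f}%")
```

Under these assumptions each encoder layer holds about 7.09M parameters, while the roughly 25.6M parameters of the embeddings and pooler stay fixed. This is why dropping 10 of the 12 layers reduces the parameter count by 64% rather than by 83%.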

Citation

```bibtex
@online{louis2023,
  author    = {Antoine Louis},
  title     = {CamemBERT-L2: A Pruned Version of CamemBERT},
  publisher = {Hugging Face},
  month     = oct,
  year      = {2023},
  url       = {https://huggingface.co/antoinelouis/camembert-L2},
}
```