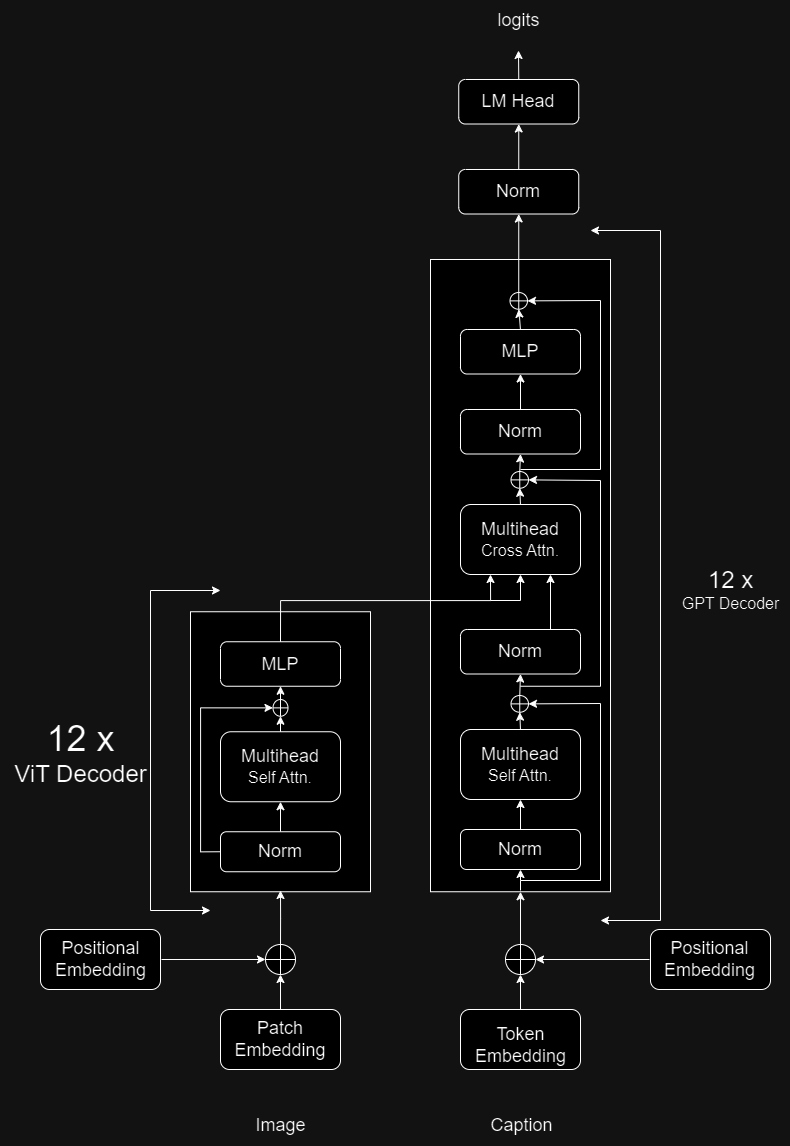

Image Captioning using ViT and GPT2 architecture

This is my attempt to build a transformer model that takes an image as input and generates a caption for it.

Model Architecture

It comprises 12 ViT encoder blocks and 12 GPT2 decoder blocks.
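As a rough sanity check of the shapes involved, the sketch below computes how many tokens the ViT encoder would hand to the GPT2 decoder for one image. It assumes the settings implied by the `google/vit-base-patch16-224` checkpoint name (224x224 input, 16x16 patches) plus one class token. The function name is illustrative and is not taken from models.py.

```python
def vit_sequence_length(image_size: int = 224,
                        patch_size: int = 16,
                        add_cls_token: bool = True) -> int:
    """Number of tokens a ViT encoder produces for one square image.

    Defaults are an assumption based on the checkpoint name
    google/vit-base-patch16-224, not values read from models.py.
    """
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    num_patches = patches_per_side ** 2
    # ViT prepends one learnable class token to the patch sequence.
    return num_patches + (1 if add_cls_token else 0)


print(vit_sequence_length())                      # 14 * 14 patches + 1 class token = 197
print(vit_sequence_length(add_cls_token=False))   # 196
```

Under these assumptions, each caption token in the decoder can attend to 197 image tokens.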

Training

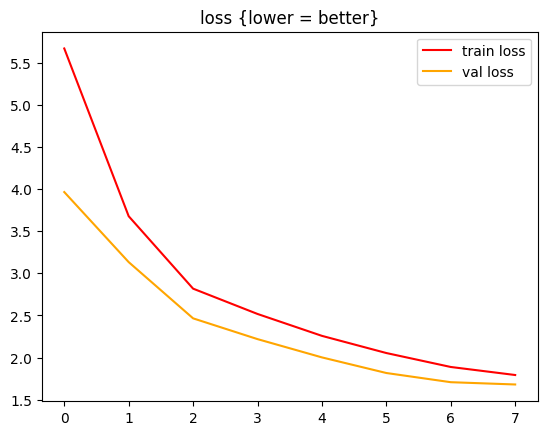

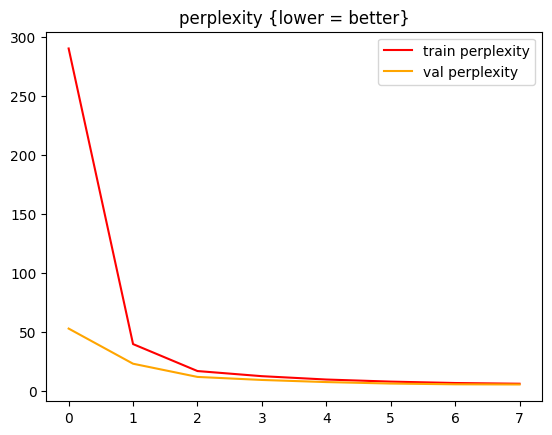

The model was trained on the Flickr30k dataset, which comprises 30k images with 5 captions each. Training ran for 8 epochs, which took 10 hours on Kaggle's P100 GPU.
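To put those numbers in perspective, here is a back-of-the-envelope calculation of the training budget. It is only an upper-bound sketch: it assumes every image-caption pair is used in every epoch, ignores any validation split, and uses the rounded 30k figure quoted above. The function and key names are hypothetical.

```python
def training_budget(num_images: int, captions_per_image: int,
                    epochs: int, total_hours: float) -> dict:
    """Rough throughput figures from the numbers quoted in this README."""
    pairs_per_epoch = num_images * captions_per_image
    total_pairs = pairs_per_epoch * epochs
    return {
        "pairs_per_epoch": pairs_per_epoch,
        "total_pairs_seen": total_pairs,
        "minutes_per_epoch": total_hours * 60 / epochs,
        "pairs_per_second": total_pairs / (total_hours * 3600),
    }


# 30k images, 5 captions each, 8 epochs, 10 hours (all taken from the text above).
for name, value in training_budget(30_000, 5, 8, 10).items():
    print(f"{name}: {value:,.1f}")
```

With these inputs, each epoch covers at most 150,000 image-caption pairs and takes about 75 minutes.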

Results

The model achieved a BLEU-4 score of 0.2115, a CIDEr score of 0.4, a METEOR score of 0.25, and a SPICE score of 0.19 on the Flickr8k dataset.
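For readers unfamiliar with BLEU-4, the sketch below shows the idea behind the metric: clipped n-gram precision for n = 1 to 4, combined by a geometric mean and multiplied by a brevity penalty. It is a minimal, unsmoothed, sentence-level version with whitespace tokenization, written for illustration only. It is not the evaluation code used to produce the score above, which would normally be computed at corpus level with an established toolkit.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate: str, references: list) -> float:
    """Unsmoothed sentence-level BLEU-4 with naive whitespace tokenization."""
    cand = candidate.lower().split()
    refs = [r.lower().split() for r in references]
    if not cand or not refs:
        return 0.0

    log_precisions = []
    for n in range(1, 5):
        cand_counts = ngram_counts(cand, n)
        total = sum(cand_counts.values())
        # For each n-gram, the largest count seen in any single reference.
        max_ref = Counter()
        for ref in refs:
            for gram, count in ngram_counts(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        # Clip candidate counts so repeated n-grams are not over-rewarded.
        clipped = sum(min(count, max_ref[gram])
                      for gram, count in cand_counts.items())
        if total == 0 or clipped == 0:
            return 0.0  # without smoothing, one empty precision zeroes the score
        log_precisions.append(math.log(clipped / total))

    # Brevity penalty uses the reference length closest to the candidate length.
    ref_len = min((len(r) for r in refs),
                  key=lambda length: (abs(length - len(cand)), length))
    bp = 1.0 if len(cand) > ref_len else math.exp(1 - ref_len / len(cand))
    return bp * math.exp(sum(log_precisions) / 4)


print(bleu4("a brown dog runs on the grass",
            ["a brown dog runs across the grass"]))
```

Because this version has no smoothing, any caption that shares no 4-gram with its references scores exactly 0, which is one reason corpus-level BLEU is the usual choice for reporting.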

These are the loss curves.

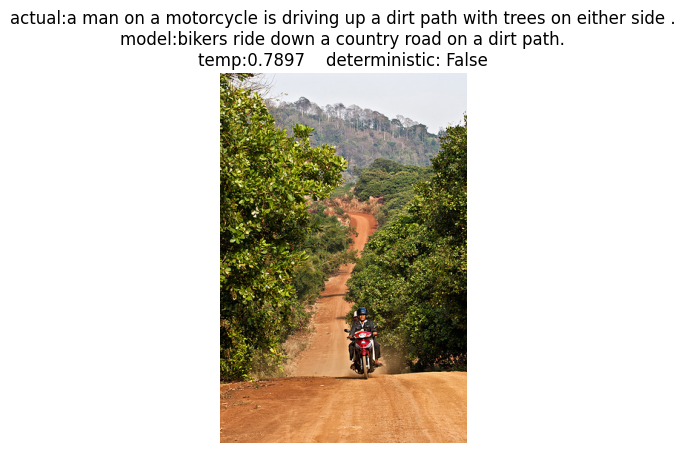

Predictions

To run predictions on your own images, download models.py, predict.py, and requirements.txt, then run the following commands:

pip install -r requirements.txt

python predict.py

The first prediction will take a while because the model weights (1GB) have to be downloaded.

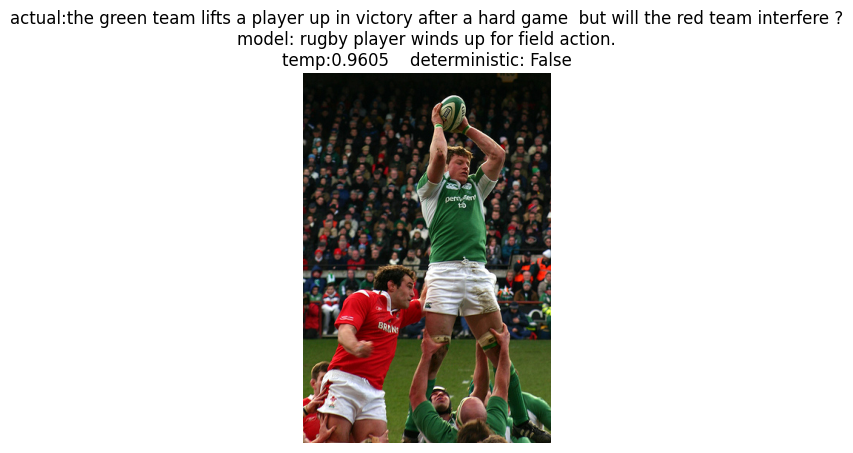

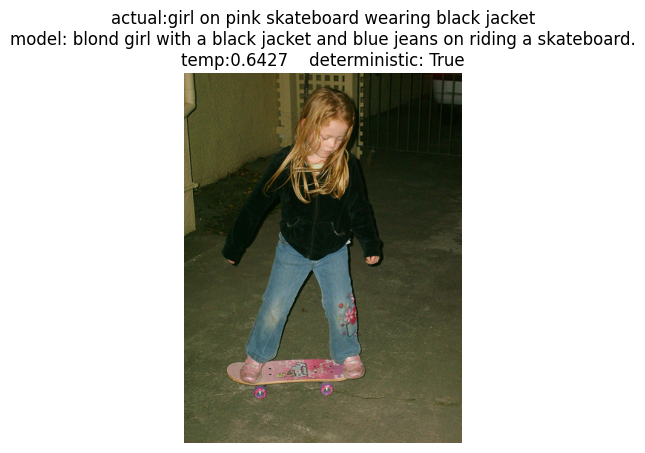

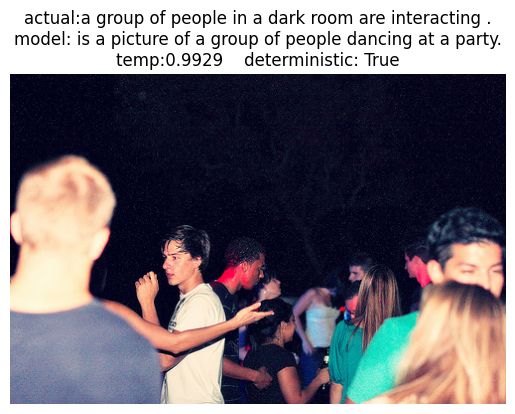

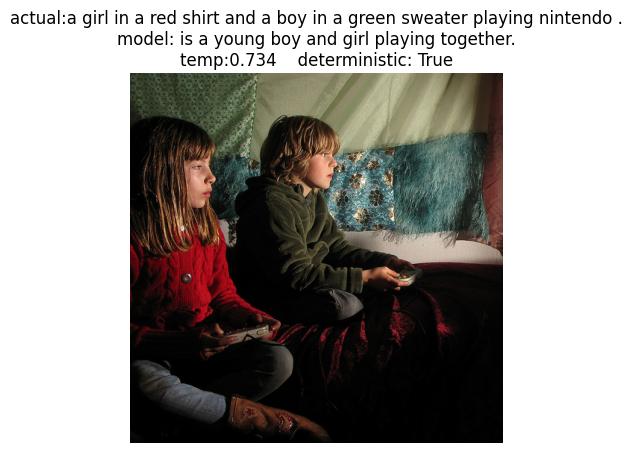

Here are a few example predictions on the validation dataset.

As we can see, these are not the most amazing predictions. Performance could be improved by training the model further and by using an even bigger dataset such as MS COCO (500k captioned images).

FAQ

Check the full notebook or Kaggle

Download the weights of the model

Model tree for ayushman72/ImageCaptioning

Base model: google/vit-base-patch16-224