Flan-UL2-Alpaca

Model weights are from epoch 0.

This GitHub repository contains code for fine-tuning the Flan-UL2 model on the Stanford Alpaca synthetic dataset, leveraging recent advances in instruction tuning. The Flan-UL2 model has been shown to outperform Flan-T5 XXL on a number of metrics and has a 4x larger receptive field (2048 vs. 512 tokens).

Resource Considerations

A goal of this project was to produce this model on a limited budget, demonstrating that a robust LLM can be trained on systems available to even small businesses and individuals. This had the added benefit of personally saving me money as well :). To achieve this, a server was rented on vultr.com with the following pricing and specs:

- Pricing: $1.302/hour

- OS: Ubuntu 22.10 x64

- 6 vCPUs

- 60 GB CPU RAM

- 40 GB GPU RAM (1/2 x A100)
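As a rough check on why 40 GB of GPU memory is tight for this model, the sketch below estimates the memory needed just to hold the weights at different precisions. It assumes roughly 20 billion parameters for Flan-UL2, a figure that is not stated in this README, and it ignores activations, optimizer state, and framework overhead, so treat the numbers as a lower bound rather than a measurement.

```python
# Rough weight-only memory estimate at different numeric precisions.
# ASSUMPTION: ~20 billion parameters for Flan-UL2 (not stated in this README).
ASSUMED_PARAMS = 20_000_000_000
GPU_MEMORY_GB = 40  # from the server specs listed above

BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1}


def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory needed to hold the weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, nbytes in BYTES_PER_PARAM.items():
    needed = weight_memory_gb(ASSUMED_PARAMS, nbytes)
    # Weights that fill the whole card leave no room for anything else,
    # so only a strictly smaller footprint counts as a fit here.
    verdict = "fits" if needed < GPU_MEMORY_GB else "does not fit"
    print(f"{name:>9}: {needed:5.0f} GB of weights -> {verdict} in {GPU_MEMORY_GB} GB")
```

Under that assumption, only the 8-bit weights leave any headroom on a 40 GB card.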

To dramatically reduce the memory footprint and compute requirements, Low-Rank Adaptation (LoRA) was used instead of fine-tuning the entire network. Additionally, the Flan-UL2 model was loaded and trained in 8-bit mode, which also greatly reduces memory requirements. Finally, a batch size of 1 was used with 8 gradient accumulation steps. Here is a list of the training parameters used:

- Epochs: 2

- Learning Rate: 1e-5

- Batch Size: 1

- Gradient Accumulation Steps: 8

- 8 Bit Mode: Yes
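The parameters above can be turned into a little arithmetic. This is a minimal sketch, not the project's training code: the batch size and accumulation steps come from the list, while `D_MODEL`, `LORA_RANK`, and `NUM_EXAMPLES` are hypothetical values picked only to illustrate the scale of the savings.

```python
import math

# Values taken from the training parameter list above.
BATCH_SIZE = 1
GRAD_ACCUM_STEPS = 8


def effective_batch_size(batch_size: int, accum_steps: int) -> int:
    """Number of examples contributing to each optimizer update."""
    return batch_size * accum_steps


def optimizer_steps_per_epoch(num_examples: int, batch_size: int, accum_steps: int) -> int:
    """Optimizer updates per epoch, counting a final partial accumulation window."""
    return math.ceil(num_examples / effective_batch_size(batch_size, accum_steps))


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA learns two low-rank factors: A (rank x d_in) and B (d_out x rank)."""
    return rank * (d_in + d_out)


# HYPOTHETICAL values for illustration only; none are stated in this README.
D_MODEL = 4096         # assumed width of one square projection matrix
LORA_RANK = 8          # assumed LoRA rank
NUM_EXAMPLES = 52_000  # approximate size of the Alpaca dataset

full = D_MODEL * D_MODEL
lora = lora_trainable_params(D_MODEL, D_MODEL, LORA_RANK)
steps = optimizer_steps_per_epoch(NUM_EXAMPLES, BATCH_SIZE, GRAD_ACCUM_STEPS)

print(f"effective batch size: {effective_batch_size(BATCH_SIZE, GRAD_ACCUM_STEPS)}")
print(f"optimizer steps per epoch: {steps}")
print(f"LoRA trains {lora:,} of {full:,} weights per matrix ({lora / full:.2%})")
```

Gradient accumulation gives the optimizer the statistics of a batch of 8 while only ever holding one example's activations in memory, which is what makes a batch size of 1 workable on a single GPU.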

Usage

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

from peft import PeftModel, PeftConfig

prompt = "Write a story about an alpaca that went to the zoo."

peft_model_id = 'coniferlabs/flan-ul2-alpaca-lora'

config = PeftConfig.from_pretrained(peft_model_id)

model = AutoModelForSeq2SeqLM.from_pretrained(config.base_model_name_or_path, device_map="auto", load_in_8bit=True)

model = PeftModel.from_pretrained(model, peft_model_id, device_map={'': 0})

tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

model.eval()

tokenized_text = tokenizer.encode(prompt, return_tensors="pt").to("cuda")

outputs = model.generate(input_ids=tokenized_text, min_length=10, max_length=250)

print(tokenizer.batch_decode(outputs, skip_special_tokens=True)[0])


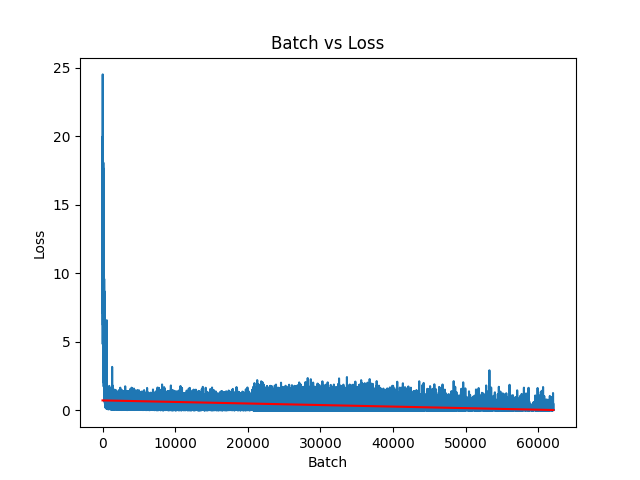

Flan-UL2 Training Results

| Epoch | Train Loss | Eval Loss |
|---|---|---|
| 1 | 12102.7285 | 2048.0518 |
| 2 | 9318.9199 | 2033.5337 |

Loss Trendline: y = -1.1302001815753724e-05x + 0.73000991550589
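
To make the numbers above easier to read, here is a small sketch that evaluates the trendline and computes the relative change in loss between the two epochs. The coefficients and losses are copied from this section. Treating `x` as the training step is an assumption, since the README does not say what the x-axis represents, and the trendline is on a different scale from the table values.

```python
# Coefficients copied from the trendline above: y = SLOPE * x + INTERCEPT.
SLOPE = -1.1302001815753724e-05
INTERCEPT = 0.73000991550589

# Losses copied from the results table: epoch -> (train loss, eval loss).
RESULTS = {1: (12102.7285, 2048.0518), 2: (9318.9199, 2033.5337)}


def trendline_loss(x: float) -> float:
    """Loss predicted by the linear trendline at position x.

    ASSUMPTION: x is the training step; the source does not state the unit.
    """
    return SLOPE * x + INTERCEPT


def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after` (negative means a decrease)."""
    return (after - before) / before


train_change = relative_change(RESULTS[1][0], RESULTS[2][0])
eval_change = relative_change(RESULTS[1][1], RESULTS[2][1])

print(f"train loss change, epoch 1 -> 2: {train_change:.2%}")
print(f"eval loss change,  epoch 1 -> 2: {eval_change:.2%}")
print(f"trendline at x = 10,000: {trendline_loss(10_000):.4f}")
```

The train loss falls much more than the eval loss between epochs, which is worth keeping in mind when deciding whether further epochs are worth the rental cost.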