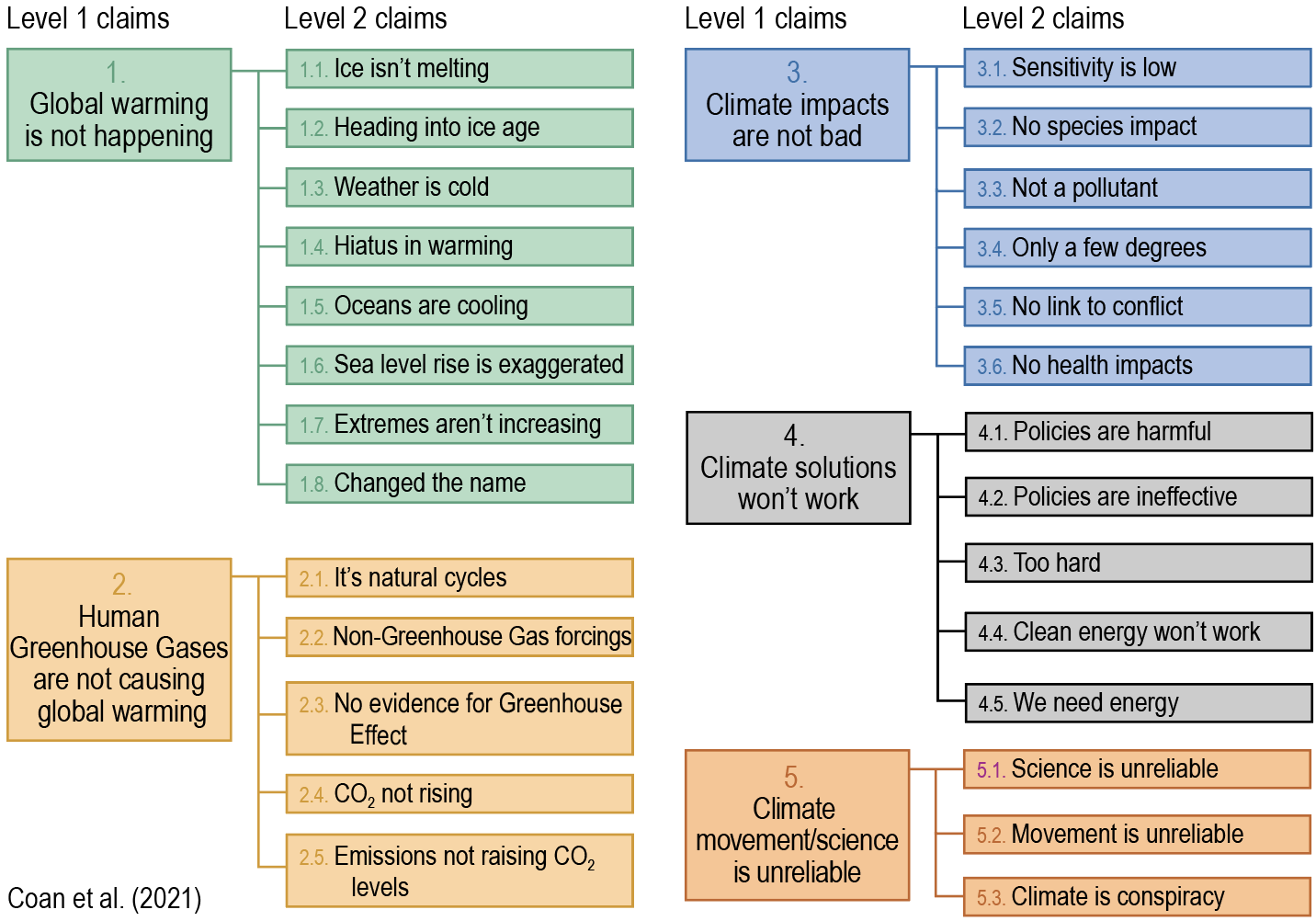

Taxonomy Augmented CARDS

Taxonomy

Metrics

| Category | CARDS | Augmented CARDS | Support |
|---|---|---|---|
| 0_0 | 70.9 | 81.5 | 1049 |
| 1_1 | 60.5 | 70.4 | 28 |
| 1_2 | 40 | 44.4 | 20 |
| 1_3 | 37 | 48.6 | 61 |
| 1_4 | 62.1 | 65.6 | 27 |
| 1_6 | 56.7 | 59.7 | 41 |
| 1_7 | 46.4 | 52 | 89 |
| 2_1 | 68.1 | 69.4 | 154 |
| 2_3 | 36.7 | 25 | 22 |
| 3_1 | 38.5 | 34.8 | 8 |
| 3_2 | 61 | 74.6 | 31 |
| 3_3 | 54.2 | 65.4 | 23 |
| 4_1 | 38.5 | 49.4 | 103 |
| 4_2 | 37.6 | 28.6 | 61 |
| 4_4 | 30.8 | 54.5 | 46 |
| 4_5 | 19.7 | 39.4 | 50 |
| 5_1 | 32.8 | 38.2 | 96 |
| 5_2 | 38.6 | 53.5 | 498 |
| 5_3 | - | 62.9 | 200 |
| Macro Average | 43.69 | 53.57 | 2407 |
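As a quick sanity check on the table above, the sketch below recomputes the Macro Average row as the unweighted mean of the per-category scores. It assumes that the missing CARDS score for 5_3 (shown as "-") counts as 0, which is the reading that reproduces the reported 43.69. The names `cards`, `augmented_cards`, and `macro_average` are illustrative and not part of the released code.

```python
# Per-category scores copied from the metrics table, in row order (0_0 ... 5_3).
# Assumption: the "-" for CARDS on 5_3 is treated as 0.0.
cards = [
    70.9, 60.5, 40.0, 37.0, 62.1, 56.7, 46.4, 68.1, 36.7, 38.5,
    61.0, 54.2, 38.5, 37.6, 30.8, 19.7, 32.8, 38.6, 0.0,
]
augmented_cards = [
    81.5, 70.4, 44.4, 48.6, 65.6, 59.7, 52.0, 69.4, 25.0, 34.8,
    74.6, 65.4, 49.4, 28.6, 54.5, 39.4, 38.2, 53.5, 62.9,
]


def macro_average(scores):
    """Unweighted mean: every category counts equally, regardless of support."""
    return round(sum(scores) / len(scores), 2)


print(macro_average(cards))            # 43.69
print(macro_average(augmented_cards))  # 53.57
```

Because the macro average ignores support, small categories such as 3_1 (support 8) weigh as much as 0_0 (support 1049).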

Code

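Classification takes two steps: run the binary classification model first, then the taxonomy model. If the binary model predicts class 0, the text is labeled `0_0`; otherwise it receives the taxonomy model's label, as shown below: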

```python
import torch
from transformers import AutoConfig, AutoModelForSequenceClassification, AutoTokenizer

# Models
MAX_LEN = 256
BINARY_MODEL_DIR = "crarojasca/BinaryAugmentedCARDS"
TAXONOMY_MODEL_DIR = "crarojasca/TaxonomyAugmentedCARDS"

# Use a GPU when one is available, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Loading tokenizer
tokenizer = AutoTokenizer.from_pretrained(
    BINARY_MODEL_DIR,
    max_length=MAX_LEN, padding="max_length",
    return_token_type_ids=True
)

# Loading Models
## 1. Binary Model
print("Loading binary model: {}".format(BINARY_MODEL_DIR))
config = AutoConfig.from_pretrained(BINARY_MODEL_DIR)
binary_model = AutoModelForSequenceClassification.from_pretrained(BINARY_MODEL_DIR, config=config)
binary_model.to(device)

## 2. Taxonomy Model
print("Loading taxonomy model: {}".format(TAXONOMY_MODEL_DIR))
config = AutoConfig.from_pretrained(TAXONOMY_MODEL_DIR)
taxonomy_model = AutoModelForSequenceClassification.from_pretrained(TAXONOMY_MODEL_DIR, config=config)
taxonomy_model.to(device)

# Mapping from taxonomy model class index to category label
id2label = {
    0: '1_1', 1: '1_2', 2: '1_3', 3: '1_4', 4: '1_6', 5: '1_7', 6: '2_1',
    7: '2_3', 8: '3_1', 9: '3_2', 10: '3_3', 11: '4_1', 12: '4_2', 13: '4_4',
    14: '4_5', 15: '5_1', 16: '5_2', 17: '5_3'
}

# Example input, moved to the same device as the models
text = "Climate change is just a natural phenomenon"
tokenized_text = tokenizer(text, return_tensors="pt").to(device)

# Running Binary Model
with torch.no_grad():
    outputs = binary_model(**tokenized_text)
binary_score = outputs.logits.softmax(dim=1)
binary_prediction = torch.argmax(outputs.logits, dim=1).to('cpu').item()

# Running Taxonomy Model
with torch.no_grad():
    outputs = taxonomy_model(**tokenized_text)
taxonomy_score = outputs.logits.softmax(dim=1)
taxonomy_prediction = torch.argmax(outputs.logits, dim=1).to('cpu').item()

# Binary class 0 maps to "0_0"; otherwise use the taxonomy model's label
prediction = "0_0" if binary_prediction == 0 else id2label[taxonomy_prediction]
print(prediction)
```
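The final decision rule in the snippet above can be isolated into a small helper that works on raw logits, which makes it easy to test without downloading either model. This is a minimal sketch in plain Python. The names `softmax`, `combine_predictions`, and `ID2LABEL` are hypothetical, and the only behavior taken from the original code is that binary class 0 maps to `0_0` and any other binary class defers to the taxonomy model.

```python
import math

# Same index-to-label mapping as the taxonomy model in the snippet above.
ID2LABEL = {
    0: '1_1', 1: '1_2', 2: '1_3', 3: '1_4', 4: '1_6', 5: '1_7', 6: '2_1',
    7: '2_3', 8: '3_1', 9: '3_2', 10: '3_3', 11: '4_1', 12: '4_2', 13: '4_4',
    14: '4_5', 15: '5_1', 16: '5_2', 17: '5_3',
}


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def combine_predictions(binary_logits, taxonomy_logits, id2label=ID2LABEL):
    """Return (label, probability) following the two-step rule.

    Binary class 0 yields "0_0"; any other binary class defers to the
    highest-scoring taxonomy class.
    """
    binary_probs = softmax(binary_logits)
    binary_pred = max(range(len(binary_probs)), key=binary_probs.__getitem__)
    if binary_pred == 0:
        return "0_0", binary_probs[0]
    taxonomy_probs = softmax(taxonomy_logits)
    taxonomy_pred = max(range(len(taxonomy_probs)), key=taxonomy_probs.__getitem__)
    return id2label[taxonomy_pred], taxonomy_probs[taxonomy_pred]


# Made-up logits for illustration: binary class 1 wins, taxonomy index 6 wins.
example_taxonomy_logits = [0.0] * 18
example_taxonomy_logits[6] = 5.0
label, score = combine_predictions([-1.0, 2.0], example_taxonomy_logits)
print(label)  # 2_1
```

With real models, the two arguments would be the first row of each model's `outputs.logits` converted with `.tolist()`.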
