source

stringclasses 1

value | text

stringlengths 152

659k

| filtering_features

stringlengths 402

437

| source_other

stringlengths 440

819k

|

|---|---|---|---|

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Add hooks sufficient to build task-local data

username_0: As currently proposed, futures-core 0.3 will not build in task-local data. The idea is to instead address needs here through external libraries via scoped thread-local storage.

For any robust task-local data, however, we want the ability to hook into the task spawning process, so that data inheritance schemes can be set up.

This issue tracks the design of such hooks.

<issue_comment>username_1: If https://github.com/rust-lang-nursery/wg-net/issues/56 proceeds and spawning is removed from task::Context, I expect the value of having a good story around task-local data to increase. In general, I don't really want to stash a `Box<Executor>` in all the places that need spawning.

Things task-local data would be helpful for:

0. Default spawn (https://github.com/rust-lang-nursery/wg-net/issues/56)

1. Storing trace info (https://github.com/tokio-rs/tokio/issues/561)

2. Storing request-local data like user credentials

<issue_comment>username_2: I think most places that need spawning are application code, which can just reference an appropriate executor via globals or handles or whatever it likes directly, as they do today in futures 0.1.

<issue_comment>username_1: Yes, that's certainly true. I've updated my comment to clarify I'm talking about executor-generic framework code.

<issue_comment>username_1: Prior discussion: https://github.com/rust-lang-nursery/futures-rs/issues/937

<issue_comment>username_3: For task-local storage, what is needed is to run code before each top-level future poll (and potentially, if using scoped-tls, code after too).

One solution is to add a hook that allows wrapping every future that comes in the executor into some wrapper future type: this way the wrapper future would be able to execute code around the wrapped future.

Actually, this could even be done by consuming the executor and returning a wrapping executor that first wraps the future with the task-local-wrapper, and then forwards the wrapped future to the wrapped executor.

However, I'm not sure this “consuming the executor and returning it” would actually work: what I actually want is `tokio::spawn` to use the wrapping executor -- which, AFAIU, wouldn't happen with the consume-and-wrap design.

<issue_comment>username_1: I messed around with @mitsuhiko's [execution-context](https://crates.io/crates/execution-context) crate yesterday. It's futures-agnostic and works via TLS, and I made it work with futures exactly as @username_3 said: a wrapper future:

```rust

/// Returns a future that executes within the scope of the current [ExecutionContext].

pub fn context_propagating<F: Future>(future: F) -> impl Future<Output = F::Output> {

ContextFuture {

future,

context: ExecutionContext::capture(),

}

}

/// A future that executes within a specific [ExecutionContext].

struct ContextFuture<F> {

future: F,

context: ExecutionContext,

}

impl<F> Future for ContextFuture<F>

where

F: Future,

{

type Output = F::Output;

fn poll(self: PinMut<Self>, cx: &mut task::Context) -> Poll<F::Output> {

let me = unsafe { PinMut::get_mut_unchecked(self) };

let future = unsafe { PinMut::new_unchecked(&mut me.future) };

me.context.run(|| future.poll(cx))

}

}

```

This works quite well! Like @username_3, I definitely see the value in hooking into the spawn mechanism, because wrapping every future in `context_propagating` before spawning is error-prone. Empirically, I've seen context propagation issues in production servers more times than I can count. Such a hook would have to be opt-in, because not every application needs or can use task-local data via TLS.

<issue_comment>username_2: A portable mechanism for wrapping all top-level `poll` calls might have broader applications as well: for example, task-level profiling, or just logging warnings when a poll call blocks for unreasonably long, would call for something similar.

<issue_comment>username_3: I guess the first design decision is: do we want to add hooks to modify top-level `poll` calls into an existing `Executor`, or do we want to wrap `Executor`s into other, different-top-level-poll-call-behaviour `Executor`s?

I lean for the first option, because it'd work nicely with eg. `tokio::spawn`, while wrapping `Executor`s into other `Executor`s would mean the top-level executor would need to be changed.

<issue_comment>username_0: @username_1 @username_3 A question regarding these executor-level hooks: it seems like if you ever use a library that creates its own executor internally, you would have no way to instrument it with hooks. And of course you could forget to do so on your own executor. Both of which would lead to propagation failures.

Or were you thinking of providing a *global* hook mechanism of some kind?

<issue_comment>username_1: @username_0 I hadn't considered the multi-executor scenario at all. Do you have any thoughts on a global hook mechanism?

<issue_comment>username_3: Actually, the more I think about this, would it be for `task_local` or for handling time (`timeout` shouldn't require forcing the executor, it's generic enough), the more I think that futures should look like this (written in the github comment box, please forgive shallowness of reflexion):

```rust

trait Executor {

type Context: BasicContext;

}

trait BasicContext {

type Waker: BasicWaker;

fn get_waker(&mut Self) -> Self::Waker;

}

trait BasicWaker {

// ...

}

trait Future<Exec: Executor> {

type Output;

fn poll(self: Pin<&mut Self>, cx: &mut <Exec as Executor>::Context) -> Poll<Self::Output>;

}

```

This could be expanded with:

```rust

trait TaskLocalExecutor: Executor

where <Self as Executor>::Context: TaskLocalContext,

{ }

trait TaskLocalContext {

fn get_task_local(ctx: &mut <Self as Executor>::Context) -> &mut TaskLocalMap;

}

// some magic using TaskLocalMap to make it available as a task-local through some macro

```

And

```rust

trait TimeoutExecutor: Executor

where <Self as Executor>::Context: TimeoutContext,

{

type TimeoutFuture: Future<Output = ()>;

fn timeout_future(ctx: &mut <Self as Executor>::Context, d: Duration) -> TimeoutFuture;

}

// Some macro to make it easily usable from async/await

```

and [etc.] (actually, even `Waker` could be moved to specific executors, not all futures require that the executor is able to wake them and some executors may not support waking but just run each future in a round-robin fashion… though that'd maybe be over-doing it)

A `Future` would then be written like:

```rust

impl<E: Executor + TimeoutExecutor> Future for MyFuture {

type Output = /* ... */;

fn poll(self: Pin<&mut Self>, cx: &mut <Exec as Executor>::Context) -> Poll<Self::Output> {

// ...

}

}

```

And a future combinator function would look like:

```rust

fn run_after_10s<E: Executor + TimeoutExecutor, F: Future<E>>(f: F) -> impl Future<E> {

E::timeout_future(Duration::from_millis(10000)).and_then(|_| f)

}

```

Assuming that `async/await` is able to infer the bounds to set to its `Executor` depending on its contents, this sounds like a minor inconvenience when writing futures manually (a bit more boilerplate) for much more flexibility and portability (task locals, timeouts, and likely other things that will be implemented by lots of executors and that we've not yet thought about)

I guess this has already been discussed somewhere… could someone point me to where, so I understand the discussion around it?

<issue_comment>username_3: Oh, forgot to mention: it would also allow experimenting with cross-executor task_locals / timeouts outside of `std`, given that `std` would only need to provide the `Executor` trait, and libraries could define `TaskLocalExecutor`, `TimeoutExecutor`, etc.

<issue_comment>username_4: @username_3 https://github.com/rust-lang-nursery/futures-rs/issues/1196 has some similar prior discussion. The place where I've always thought this would become far too tedious is in propagating the bounds everywhere it's needed (i.e. when you have a function generic over `F: Future` you now need to have `E: Executor, F: Future<E>` and `f: impl Future` becomes `f: impl Future<impl Executor>`). Maybe that just won't be a common enough issue to really matter.

<issue_comment>username_3: @username_4 Thank you for the pointer! I'll continue discussion there :)

<issue_comment>username_2: It's worth noting that tokio constructs its executor(s) lazily, so there is adequate opportunity to hook into them during startup without forcing ugly global state into standard APIs.<issue_closed> | {'fraction_non_alphanumeric': 0.08020536223616657, 'fraction_numerical': 0.005818596691386195, 'mean_word_length': 4.345121951219512, 'pattern_counts': {'":': 0, '<': 44, '<?xml version=': 0, '>': 52, 'https://': 6, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '1158655', 'n_tokens_mistral': 2448, 'n_tokens_neox': 2337, 'n_words': 1124} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Updating the server to allow optional policy_path values

username_0: This change updates the server, updating how it processes config values and allowing it to handle optional or ommitted policy_path values. This fixes a bug where users could not leave the policy_path config file unset, in addition to a bug that forced users to use '/etc/pykmip/policies' as their policy directory.

Fixes #210 | {'fraction_non_alphanumeric': 0.037037037037037035, 'fraction_numerical': 0.009259259259259259, 'mean_word_length': 5.185714285714286, 'pattern_counts': {'":': 0, '<': 2, '<?xml version=': 0, '>': 2, 'https://': 0, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '15383225', 'n_tokens_mistral': 107, 'n_tokens_neox': 104, 'n_words': 62} |

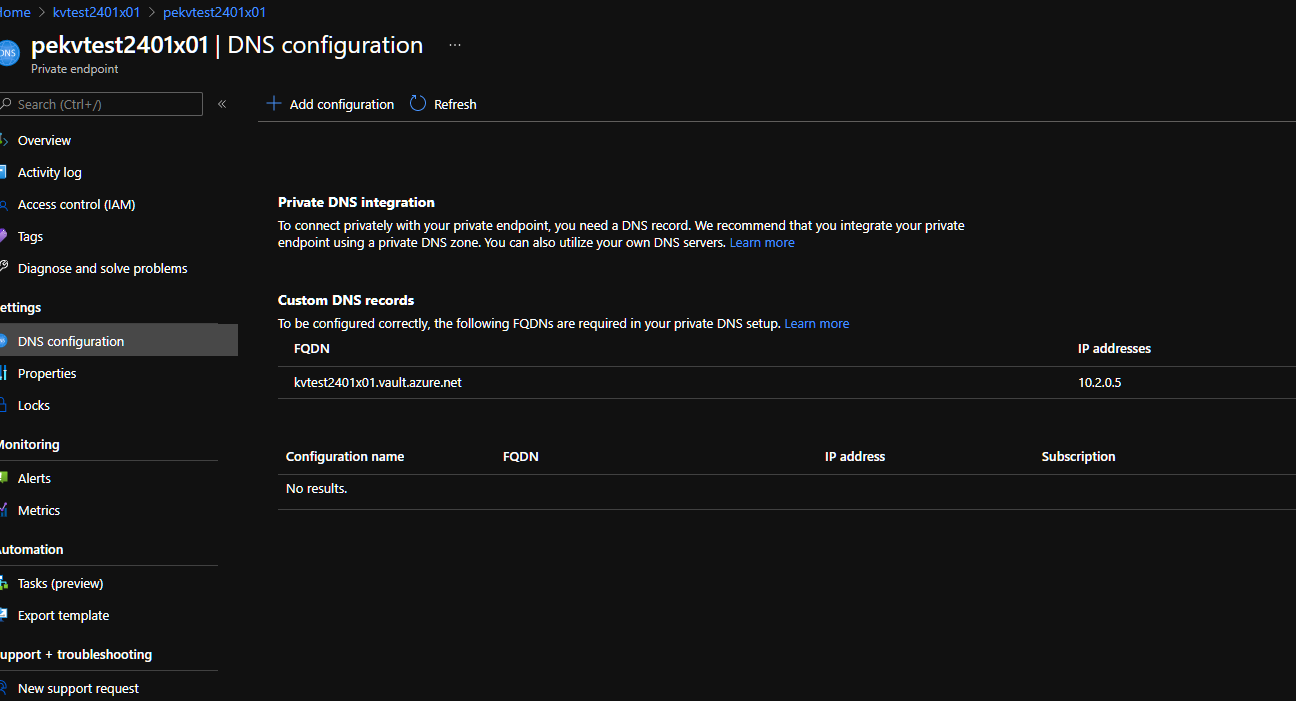

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: privatelink.vault.azure.net is maybe missing

username_0: Hi,

I think that the private dns zone privatelink.vault.azure.net is missing for Keyvault.

Regards

---

#### Document Details

⚠ *Do not edit this section. It is required for docs.microsoft.com ➟ GitHub issue linking.*

* ID: a88af182-32aa-a8f3-359d-b92ae1cfb8c7

* Version Independent ID: 01ce07fc-4bc2-def5-9ecd-a80330ad9488

* Content: [Azure Private Endpoint DNS configuration](https://docs.microsoft.com/en-us/azure/private-link/private-endpoint-dns)

* Content Source: [articles/private-link/private-endpoint-dns.md](https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/private-link/private-endpoint-dns.md)

* Service: **private-link**

* GitHub Login: @asudbring

* Microsoft Alias: **allensu**

<issue_comment>username_1: @username_0, from portal configuration it looks like there is only one private dns ("privatelink.vaultcore.azure.net") is available. I did test it locally and can see only **keyvault-name.privatelink.vaultcore.azure.net** getting populated on creating new private endpoint connection to Azure key vault.

<issue_comment>username_0: This is strange: I created a keyvault and the DNS configuration of the private endpoint is the following:

<issue_comment>username_0: Ok I see, there is not parity like other services; vault.azure.net is translated in privatelink.vaultcore.azure.net ?

<issue_comment>username_1: @username_0 , you should be able to see the private DNS zone you have created in their respective category,

<issue_comment>username_0: Thanks for you help. I didn't find it first because I had to create the DNS zone before and I thought that there is a consistent pattern like blob table...<issue_closed> | {'fraction_non_alphanumeric': 0.10285714285714286, 'fraction_numerical': 0.07142857142857142, 'mean_word_length': 5.1976401179941005, 'pattern_counts': {'":': 0, '<': 8, '<?xml version=': 0, '>': 8, 'https://': 5, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '18195959', 'n_tokens_mistral': 772, 'n_tokens_neox': 686, 'n_words': 187} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: CI: pin setuptool<0.60 in Azure template

username_0: Azure pipelines are failing. Ex: https://dev.azure.com/scipy-org/SciPy/_build/results?buildId=17282&view=logs&jobId=7902a2c1-7b88-5b2d-6408-84b88759c642&j=7902a2c1-7b88-5b2d-6408-84b88759c642&t=893309fc-8329-5d49-356d-625e41704eb4

<details>

<summary>Details</summary>

```

`numpy.distutils` is deprecated since NumPy 1.23.0, as a result

of the deprecation of `distutils` itself. It will be removed for

Python >= 3.12. For older Python versions it will remain present.

It is recommended to use `setuptools < 60.0` for those Python versions.

For more details, see:

https://numpy.org/devdocs/reference/distutils_status_migration.html

from numpy.distutils.core import setup

Running from SciPy source directory.

/opt/hostedtoolcache/Python/3.8.12/x64/lib/python3.8/site-packages/setuptools/config/pyprojecttoml.py:100: _ExperimentalProjectMetadata: Support for project metadata in `pyproject.toml` is still experimental and may be removed (or change) in future releases.

warnings.warn(msg, _ExperimentalProjectMetadata)

Traceback (most recent call last):

File "setup.py", line 532, in <module>

setup_package()

File "setup.py", line 528, in setup_package

```

</details>

<issue_comment>username_0: Another issue which I think is not related is with our pyproject.toml

```

distutils.errors.DistutilsOptionError: Impossible to expand dynamic value of 'description'. No configuration found for `tool.setuptools.dynamic.description`

```

<issue_comment>username_0: `refguide_asv_check` failure is not related. Jinja got an update yesterday cc @rossbar

<issue_comment>username_0: Cool, GH actions is in maintenance now...

<issue_comment>username_0: We don't see it yet due to the maintenance but logs are here https://dev.azure.com/scipy-org/SciPy/_build/results?buildId=17289&view=results

Seems like we can merge. | {'fraction_non_alphanumeric': 0.09744897959183674, 'fraction_numerical': 0.061224489795918366, 'mean_word_length': 4.717201166180758, 'pattern_counts': {'":': 0, '<': 13, '<?xml version=': 0, '>': 12, 'https://': 3, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '19006590', 'n_tokens_mistral': 717, 'n_tokens_neox': 629, 'n_words': 179} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Insurance price/payout issue

username_0: Insurance payouts much higher than price.

<issue_comment>username_0: ROF table was not being properly imported by insurance class when using imported ROF tables. Now that it is, insurance price is higher than it should be by some ~50% on average when using imported tables, although I believe this is a theoretical and not a code problem -- maybe an amplification of the errors of the imported tables. | {'fraction_non_alphanumeric': 0.039832285115303984, 'fraction_numerical': 0.008385744234800839, 'mean_word_length': 5.207792207792208, 'pattern_counts': {'":': 0, '<': 3, '<?xml version=': 0, '>': 3, 'https://': 0, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '28126376', 'n_tokens_mistral': 110, 'n_tokens_neox': 107, 'n_words': 70} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: send login data as JSON

username_0: Set the content-type to `application/json` and send the login data as stringified JSON.

Per @skiqh's [work-around](https://github.com/username_2/pouchdb-authentication/issues/111#issuecomment-242810853) in #111

For anyone else with the issue in the meantime, you can use his fork:

`npm i --save https://github.com/skiqh/pouchdb-authentication.git\#patch-1`

<issue_comment>username_1: @username_2 I also like to have this PR. Can also release a version for you.

<issue_comment>username_2: This passes the test suite, so I'm perfectly fine with this. Will do a release today. Thanks!

<issue_comment>username_2: published in 0.5.4

<issue_comment>username_1: Thanks! | {'fraction_non_alphanumeric': 0.09421265141318977, 'fraction_numerical': 0.034993270524899055, 'mean_word_length': 5.048780487804878, 'pattern_counts': {'":': 0, '<': 6, '<?xml version=': 0, '>': 6, 'https://': 2, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '5643489', 'n_tokens_mistral': 245, 'n_tokens_neox': 227, 'n_words': 83} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Incorrect mapping of sourcemaps using node-sass@1.1.4

username_0: @dlmanning I decided to update gulp-sass to 1.2.2. today and noticed that all my css classes mapped to incorrect scss sourcefiles and/or line numbers. Figured it had to be something that changed. I'm no JS wizzkid but I checked your dependencies and noticed you're using the relaxt ^ instead of ~ for node-sass. dependency.They recently bumped from 1.0.3 to 1.1.4 and with ^1.0 it would require the new 1.1.4 version. If I use the 1.0.3 version the problem is resolved.

<issue_comment>username_1: As this is an upstream bug, I'm going to close this issue.<issue_closed> | {'fraction_non_alphanumeric': 0.06706408345752608, 'fraction_numerical': 0.03278688524590164, 'mean_word_length': 4.291338582677166, 'pattern_counts': {'":': 0, '<': 4, '<?xml version=': 0, '>': 4, 'https://': 0, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '30960125', 'n_tokens_mistral': 207, 'n_tokens_neox': 197, 'n_words': 99} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Scenarios are not running: ConnectionClose, ConnectionClose, DbFortunesDapper, DbFortunesEf, DbFortunesRaw ...

username_0: Some scenarios have stopped running:

| Scenario | Environment | Last Run |

| -------- | ----------- | -------- |

| ConnectionClose | Linux, Physical, http, KestrelSockets | 9/16/19 12:45:21 AM +00:00 |

| ConnectionClose | Linux, Physical, https, KestrelSockets | 9/16/19 12:45:39 AM +00:00 |

| DbFortunesDapper | Linux, Physical, http, KestrelSockets | 9/16/19 12:39:58 AM +00:00 |

| DbFortunesEf | Linux, Physical, http, KestrelSockets | 9/16/19 12:40:40 AM +00:00 |

| DbFortunesRaw | Linux, Physical, http, KestrelSockets | 9/16/19 12:39:15 AM +00:00 |

| DbMultiQueryDapper | Linux, Physical, http, KestrelSockets | 9/16/19 12:31:39 AM +00:00 |

| DbMultiQueryEf | Linux, Physical, http, KestrelSockets | 9/16/19 12:32:20 AM +00:00 |

| DbMultiQueryRaw | Linux, Physical, http, KestrelSockets | 9/16/19 12:30:56 AM +00:00 |

| DbMultiUpdateDapper | Linux, Physical, http, KestrelSockets | 9/16/19 12:35:49 AM +00:00 |

| DbMultiUpdateEf | Linux, Physical, http, KestrelSockets | 9/16/19 12:36:30 AM +00:00 |

| DbMultiUpdateRaw | Linux, Physical, http, KestrelSockets | 9/16/19 12:35:06 AM +00:00 |

| DbSingleQueryDapper | Linux, Physical, http, KestrelSockets | 9/16/19 12:27:24 AM +00:00 |

| DbSingleQueryEf | Linux, Physical, http, KestrelSockets | 9/16/19 12:28:07 AM +00:00 |

| DbSingleQueryRaw | Linux, Physical, http, KestrelSockets | 9/16/19 12:26:43 AM +00:00 |

| HttpClient | Linux, Physical, http, KestrelSockets | 9/16/19 12:43:35 AM +00:00 |

| HttpClientFactory | Linux, Physical, http, KestrelSockets | 9/16/19 12:45:02 AM +00:00 |

| HttpClientParallel | Linux, Physical, http, KestrelSockets | 9/16/19 12:44:16 AM +00:00 |

| Json | Linux, Physical, h2, KestrelSockets | 9/16/19 12:21:11 AM +00:00 |

| Json | Linux, Physical, h2c, KestrelSockets | 9/16/19 12:21:51 AM +00:00 |

| MemoryCachePlaintext | Linux, Physical, http, KestrelSockets | 9/16/19 12:22:34 AM +00:00 |

| MemoryCachePlaintextSetRemove | Linux, Physical, http, KestrelSockets | 9/16/19 12:23:16 AM +00:00 |

| MvcDbFortunesDapper | Linux, Physical, http, KestrelSockets | 9/16/19 12:42:04 AM +00:00 |

| MvcDbFortunesEf | Linux, Physical, http, KestrelSockets | 9/16/19 12:42:47 AM +00:00 |

| MvcDbFortunesRaw | Linux, Physical, http, KestrelSockets | 9/16/19 12:41:22 AM +00:00 |

| MvcDbMultiQueryDapper | Linux, Physical, http, KestrelSockets | 9/16/19 12:33:44 AM +00:00 |

| MvcDbMultiQueryEf | Linux, Physical, http, KestrelSockets | 9/16/19 12:34:25 AM +00:00 |

| MvcDbMultiQueryRaw | Linux, Physical, http, KestrelSockets | 9/16/19 12:33:01 AM +00:00 |

| MvcDbMultiUpdateDapper | Linux, Physical, http, KestrelSockets | 9/16/19 12:37:53 AM +00:00 |

| MvcDbMultiUpdateEf | Linux, Physical, http, KestrelSockets | 9/16/19 12:38:34 AM +00:00 |

| MvcDbSingleQueryDapper | Linux, Physical, http, KestrelSockets | 9/16/19 12:29:30 AM +00:00 |

| MvcDbSingleQueryEf | Linux, Physical, http, KestrelSockets | 9/16/19 12:30:12 AM +00:00 |

| MvcDbSingleQueryRaw | Linux, Physical, http, KestrelSockets | 9/16/19 12:28:48 AM +00:00 |

| PlaintextNonPipelined | Linux, Physical, https, KestrelSockets | 9/16/19 12:19:07 AM +00:00 |

| PlaintextNonPipelined | Linux, Physical, h2, KestrelSockets | 9/16/19 12:19:49 AM +00:00 |

| PlaintextNonPipelined | Linux, Physical, h2c, KestrelSockets | 9/16/19 12:20:29 AM +00:00 |

| PlaintextNonPipelinedLogging | Linux, Physical, http, KestrelSockets | 9/16/19 12:47:34 AM +00:00 |

| PlaintextNonPipelinedLoggingNoScopes | Linux, Physical, http, KestrelSockets | 9/16/19 12:48:17 AM +00:00 |

| ResponseCachingPlaintextCached | Linux, Physical, http, KestrelSockets | 9/16/19 12:23:58 AM +00:00 |

| ResponseCachingPlaintextRequestNoCache | Linux, Physical, http, KestrelSockets | 9/16/19 12:25:20 AM +00:00 |

| ResponseCachingPlaintextResponseNoCache | Linux, Physical, http, KestrelSockets | 9/16/19 12:24:39 AM +00:00 |

| ResponseCachingPlaintextVaryByCached | Linux, Physical, http, KestrelSockets | 9/16/19 12:26:02 AM +00:00 |

[Logs](https://aka.ms/aspnet/benchmarks/jenkins)<issue_closed>

<issue_comment>username_1: Fixed | {'fraction_non_alphanumeric': 0.14285714285714285, 'fraction_numerical': 0.14810398282852372, 'mean_word_length': 5.09593023255814, 'pattern_counts': {'":': 0, '<': 4, '<?xml version=': 0, '>': 4, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '25447637', 'n_tokens_mistral': 2031, 'n_tokens_neox': 1624, 'n_words': 392} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Is PM reciever muted

username_0: ## Issue

When PlayerA sends a PM to PlayerB, while PlayerB is muted PlayerA does not get any information about this.

## Suggested behaviour

1. PlayerA is not an admin.

2. PlayerA gets a message "PlayerB is muted for another X minutes".

3. PlayerB does not recieve any PM.<issue_closed> | {'fraction_non_alphanumeric': 0.06077348066298342, 'fraction_numerical': 0.011049723756906077, 'mean_word_length': 4.112676056338028, 'pattern_counts': {'":': 0, '<': 3, '<?xml version=': 0, '>': 3, 'https://': 0, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '21258582', 'n_tokens_mistral': 111, 'n_tokens_neox': 108, 'n_words': 53} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: property value is truncated to 40 characters

username_0: Hello,

I tried using the properties dataformat, and in my test I find that the mapped property value is truncated to 40 characters.

Example:

foo.bar: Loremipsumdolorsitametconsecteturadipiscingelit

This is be mapped to a String in my POJO with value "Loremipsumdolorsitametconsecteturadip...".

Is this an issue with my test or with the mapper?

Thanks.

<issue_comment>username_1: What dataformat? With which Jackson version? With what code?

Please include a reproduction showing how truncation occurs: there are no known problems like this reported.<issue_closed> | {'fraction_non_alphanumeric': 0.044977511244377814, 'fraction_numerical': 0.008995502248875561, 'mean_word_length': 5.242990654205608, 'pattern_counts': {'":': 0, '<': 4, '<?xml version=': 0, '>': 4, 'https://': 0, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '13608112', 'n_tokens_mistral': 193, 'n_tokens_neox': 182, 'n_words': 86} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Issues reading Yubikey 5

username_0: Hello,

I recently installed LineageOS 16 on my oneplus 3. I am trying to read my one time passwords with the Yubico Authenticator app but unfortunately I only manage to get a "Error in yubikey communication error!". I am not really sure what causes the issue but found a clue in the logcat I made.

```

06-28 08:32:30.897 4518 4546 E yubioath: Error using OathClient

06-28 08:32:30.897 4518 4546 E yubioath: java.io.IOException: Transceive length exceeds supported maximum

06-28 08:32:30.897 4518 4546 E yubioath: at android.nfc.TransceiveResult.getResponseOrThrow(TransceiveResult.java:50)

06-28 08:32:30.897 4518 4546 E yubioath: at android.nfc.tech.BasicTagTechnology.transceive(BasicTagTechnology.java:151)

06-28 08:32:30.897 4518 4546 E yubioath: at android.nfc.tech.IsoDep.transceive(IsoDep.java:172)

06-28 08:32:30.897 4518 4546 E yubioath: at nordpol.android.AndroidCard.transceive(:1)

06-28 08:32:30.897 4518 4546 E yubioath: at c.c.a.f.c.a(:1)

06-28 08:32:30.897 4518 4546 E yubioath: at c.c.a.d.e.a(:22)

06-28 08:32:30.897 4518 4546 E yubioath: at c.c.a.d.e.a(:18)

06-28 08:32:30.897 4518 4546 E yubioath: at c.c.a.d.e.<init>(:3)

06-28 08:32:30.897 4518 4546 E yubioath: at c.c.a.a.d.<init>(:2)

06-28 08:32:30.897 4518 4546 E yubioath: at c.c.a.g.e.a(:18)

06-28 08:32:30.897 4518 4546 E yubioath: at c.c.a.g.f.a(:8)

06-28 08:32:30.897 4518 4546 E yubioath: at d.c.a.b.a.a.c(:2)

06-28 08:32:30.897 4518 4546 E yubioath: at e.a.a.X$a.a(:11)

06-28 08:32:30.897 4518 4546 E yubioath: at e.a.a.V.run(:1)

06-28 08:32:30.897 4518 4546 E yubioath: at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1167)

06-28 08:32:30.897 4518 4546 E yubioath: at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:641)

06-28 08:32:30.897 4518 4546 E yubioath: at java.lang.Thread.run(Thread.java:764)

```

The full logcat is available [here](https://pastebin.com/25x3UkXx).

I am trying to figure out if the issue is caused by the Yubico app or by LineageOS but unfortunately I am not to familiar with android logcat files so I would be more than happy if you guys could point me in the right direction here.

<issue_comment>username_1: Might be related to #95, you might want to check that for clues. Although it looks like you're getting a different error message. What version of the app are you using?

<issue_comment>username_0: I am using version v2.1.0 of the Yubico Authenticator app. I also posted my issue on the [XDA forums](https://forum.xda-developers.com/showpost.php?p=79810033&postcount=2650) and nvertigo67 on XDA told me to try adding `ISO_DEP_MAX_TRANSCEIVE=0xFEFF` in my `libnfc-nxp.conf`. I'll try looking into that aswell.

<issue_comment>username_1: One other thing you can try is installing the latest 2.2.0 beta, you can get it from the Play Store by opting in to the beta channel for the app.

<issue_comment>username_0: The fix provided by nvertigo67 worked, I am going to try and get this fix inside the installer image for LineageOS :D<issue_closed> | {'fraction_non_alphanumeric': 0.10097822656989587, 'fraction_numerical': 0.14799621331650362, 'mean_word_length': 4.007898894154819, 'pattern_counts': {'":': 0, '<': 9, '<?xml version=': 0, '>': 9, 'https://': 2, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '7264963', 'n_tokens_mistral': 1512, 'n_tokens_neox': 1250, 'n_words': 396} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Why mapEventToState have currentState?

username_0: Hello

Why do you use `currentState` in `mapEventToState` if `currentState` is a property of `bloc` and can be access with the same way without be a parameter of `mapEventToState`

Thanks.

```dart

@override

Stream<int> mapEventToState(int currentState, CounterEvent event) async* {

yield currentState +1;

}

```

the same as this

```dart

@override

Stream<int> mapEventToState( CounterEvent event) async* {

yield currentState +1;

}

```

<issue_comment>username_1: @username_0 thanks for bringing this up! Originally, blocs didn't have a currentState property so the `mapEventToState` signature needed it. Now that `currentState` is a property in all Blocs it is no longer needed. #162 will address this change.

Thanks again for bringing this up! I totally forgot to make this simplification after adding `currentState`.

<issue_comment>username_1: published [bloc v0.11.0](https://pub.dartlang.org/packages/bloc) 🎉<issue_closed> | {'fraction_non_alphanumeric': 0.08484270734032412, 'fraction_numerical': 0.012392755004766444, 'mean_word_length': 4.769230769230769, 'pattern_counts': {'":': 0, '<': 7, '<?xml version=': 0, '>': 7, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '22037342', 'n_tokens_mistral': 326, 'n_tokens_neox': 305, 'n_words': 121} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Alter title and heading on world_location_news pages

username_0: title change to 'UK and COUNTRY', and heading change to 'COUNTRY and the

UK'

<issue_comment>username_1: Hi @username_0 👋 Could you post the link to the trello card that this PR relates to (in the PR description)?

<issue_comment>username_0: Hi @username_1, the link is - https://trello.com/c/aQPSDsVW/74-change-page-title-and-description-for-world-location-news-pages

<issue_comment>username_1: @username_0 when you add the Trello card to the PR description (at the top), it will automatically pop a link to the PR into the Trello card, which is quite useful! | {'fraction_non_alphanumeric': 0.07738998482549317, 'fraction_numerical': 0.013657056145675266, 'mean_word_length': 5.407766990291262, 'pattern_counts': {'":': 0, '<': 5, '<?xml version=': 0, '>': 5, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '27615956', 'n_tokens_mistral': 208, 'n_tokens_neox': 197, 'n_words': 84} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: OeMiddleware - does support POST / PATCH / DELETE ?

username_0: I have tried to create a new entity with Dynamic Data from connection string -

app.UseOdataToEntityMiddleware<OePageMiddleware>("/odata3", edmModel);

and shows data as it was a GET , not a POST .

Apparently, from the code in

`public class OeMiddleware

public async Task Invoke(HttpContext httpContext)

private async Task InvokeApi(HttpContext httpContext)`

it calls always GET , not POST.

There is a

parser.ExecutePostAsync

but it is not called. And , when I try to call POST , verifies if it is batch or OperationImportSegment , then it call anyway the ExecuteQueryAsync

OeMiddleware - support POST / PATCH / DELETE ?

<issue_comment>username_1: [Test](https://github.com/username_1/OdataToEntity/blob/master/test/OdataToEntity.Test/Common/BatchTest.cs)

[Test data](https://github.com/username_1/OdataToEntity/tree/master/test/OdataToEntity.Test/Batches)

<issue_comment>username_0: I have tried - with no luck. Working example:

I have http://netcoreblockly.herokuapp.com/odata/$metadata

You can see the classRooms at

http://netcoreblockly.herokuapp.com/odata/ClassRoom

I tried to POST ( with PostMan) to the endpoint:

http://netcoreblockly.herokuapp.com/odata/ClassRoom

The following:

{

"Name": "Classdasds1",

"idClassRoom": 10

}

It answers as the GET .

it is necessary , for the POST to work, to have a schema ?

(my code is: edmModel = DynamicMiddlewareHelper.CreateEdmModel(providerSchema, informationSchemaMapping: null); )

<issue_comment>username_1: I will try to implement this on the weekend<issue_closed>

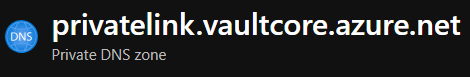

<issue_comment>username_1:

<issue_comment>username_0: Thanks! Could you deploy on NuGet also ?

<issue_comment>username_1: Hi @username_0

[2.5.0.2](https://www.nuget.org/packages/OdataToEntity.EfCore.DynamicDataContext/)

<issue_comment>username_0: I have a problem with DELETE

It does not Delete ( verb: delete) Could you please verify?

Thanks again

<issue_comment>username_0: As an example:

GET - http://netcoreblockly.herokuapp.com/odatadb/ClassRoom(23)

works

PATCH- http://netcoreblockly.herokuapp.com/odatadb/ClassRoom(23)

BODY:

{

"Name":"testAndrei

}

works

DELETE- http://netcoreblockly.herokuapp.com/odatadb/ClassRoom(23)

does NOT work

<issue_comment>username_1: will fix it on the weekend

<issue_comment>username_1: I have tried to create a new entity with Dynamic Data from connection string -

app.UseOdataToEntityMiddleware<OePageMiddleware>("/odata", edmModel);

and shows data as it was a GET , not a POST .

Apparently, from the code in

`public class OeMiddleware

public async Task Invoke(HttpContext httpContext)

private async Task InvokeApi(HttpContext httpContext)`

it calls always GET , not POST.

There is a

parser.ExecutePostAsync

but it is not called. And , when I try to call POST , verifies if it is batch or OperationImportSegment , then it call anyway the ExecuteQueryAsync

OeMiddleware - support POST / PATCH / DELETE ?<issue_closed>

<issue_comment>username_0: Please deploy on nuget

<issue_comment>username_1: [OdataToEntity.AspNetCore 2.5.0.3](https://www.nuget.org/packages/OdataToEntity.AspNetCore/)

<issue_comment>username_0: This package depends on

https://www.nuget.org/packages/OdataToEntity/

version 2.5.0.3 that was not deployed.

So I cannot update reference.

Please deploy also OdataToEntity

<issue_comment>username_0: It works!

Thanks!

( With your software , we can now edit any SqlServer / MySql / PostGres ) if provided a connection string. - and I will put on Blockly) | {'fraction_non_alphanumeric': 0.08666843360720912, 'fraction_numerical': 0.020938245428041347, 'mean_word_length': 4.3760683760683765, 'pattern_counts': {'":': 3, '<': 20, '<?xml version=': 0, '>': 20, 'https://': 6, 'lorem ipsum': 0, 'www.': 3, 'xml': 0}, 'pii_count': 3, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '10828552', 'n_tokens_mistral': 1254, 'n_tokens_neox': 1195, 'n_words': 366} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Crash on file copy using redirected drive

username_0: **Describe the bug**

Copying a large file (200M) between a Debian 10 box and a Windows 10 VM, I received a crash at 93%. Reconnected, started transfer again and it worked the second time with no problems. On the Win10 VM, I was copying the file from a redirected folder on my Debian 10 box.

**To Reproduce**

Could not reproduce.

**Expected behavior**

File copy with no errors - second time worked

**Screenshots**

N/A - provided dump file.

**Application details**

* FreeRDP Version

This is FreeRDP version 2.0.0-dev5 (dc89923f4

* Command line used

$ /opt/freerdp-nightly/bin/xfreerdp +old-license /log-level:info /u:Administrator /p:Password /size:1600x800 +clipboard /drive:Temp,/home/user/Temp /admin /sec:nla /cert-ignore /v:192.168.0.XX

* output of `/buildconfig`

$ /opt/freerdp-nightly/bin/xfreerdp /buildconfig

This is FreeRDP version 2.0.0-dev5 (dc89923f4)

Build configuration: BUILD_TESTING=OFF BUILTIN_CHANNELS=ON HAVE_AIO_H=1 HAVE_EXECINFO_H=1 HAVE_FCNTL_H=1 HAVE_INTTYPES_H=1 HAVE_MATH_C99_LONG_DOUBLE=1 HAVE_POLL_H=1 HAVE_PTHREAD_MUTEX_TIMEDLOCK=ON HAVE_PTHREAD_MUTEX_TIMEDLOCK_LIB=1 HAVE_PTHREAD_MUTEX_TIMEDLOCK_SYMBOL= HAVE_SYSLOG_H=1 HAVE_SYS_EVENTFD_H=1 HAVE_SYS_FILIO_H= HAVE_SYS_MODEM_H= HAVE_SYS_SELECT_H=1 HAVE_SYS_SOCKIO_H= HAVE_SYS_STRTIO_H= HAVE_SYS_TIMERFD_H=1 HAVE_TM_GMTOFF=1 HAVE_UNISTD_H=1 HAVE_XI_TOUCH_CLASS=1 WITH_ALSA=ON WITH_CAIRO=ON WITH_CCACHE=ON WITH_CHANNELS=ON WITH_CLANG_FORMAT=ON WITH_CLIENT=ON WITH_CLIENT_AVAILABLE=1 WITH_CLIENT_CHANNELS=ON WITH_CLIENT_CHANNELS_AVAILABLE=1 WITH_CLIENT_COMMON=ON WITH_CLIENT_INTERFACE=OFF WITH_CUPS=ON WITH_DEBUG_ALL=OFF WITH_DEBUG_CAPABILITIES=OFF WITH_DEBUG_CERTIFICATE=OFF WITH_DEBUG_CHANNELS=OFF WITH_DEBUG_CLIPRDR=OFF WITH_DEBUG_DVC=OFF WITH_DEBUG_KBD=OFF WITH_DEBUG_LICENSE=OFF WITH_DEBUG_MUTEX=OFF WITH_DEBUG_NEGO=OFF WITH_DEBUG_NLA=OFF WITH_DEBUG_NTLM=OFF WITH_DEBUG_RAIL=OFF WITH_DEBUG_RDP=OFF WITH_DEBUG_RDPDR=OFF WITH_DEBUG_RDPEI=OFF WITH_DEBUG_RDPGFX=OFF WITH_DEBUG_REDIR=OFF WITH_DEBUG_RFX=OFF WITH_DEBUG_RINGBUFFER=OFF WITH_DEBUG_SCARD=OFF WITH_DEBUG_SND=OFF WITH_DEBUG_SVC=OFF WITH_DEBUG_SYMBOLS=OFF WITH_DEBUG_THREADS=OFF WITH_DEBUG_TIMEZONE=OFF WITH_DEBUG_TRANSPORT=OFF WITH_DEBUG_TSG=OFF WITH_DEBUG_TSMF=OFF WITH_DEBUG_WND=OFF WITH_DEBUG_X11=OFF WITH_DEBUG_X11_CLIPRDR=OFF WITH_DEBUG_X11_LOCAL_MOVESIZE=OFF WITH_DEBUG_XV=OFF WITH_DSP_EXPERIMENTAL=OFF WITH_DSP_FFMPEG=ON WITH_EVENTFD_READ_WRITE=1 WITH_FAAC=OFF WITH_FAAD2=OFF WITH_FFMPEG=TRUE WITH_FFMPEG=TRUE WITH_GFX_H264=ON WITH_GPROF=OFF WITH_GSM=OFF WITH_GSSAPI=OFF WITH_GSTREAMER_0_10=ON WITH_GSTREAMER_1_0=ON WITH_ICU=OFF WITH_IPP=OFF WITH_JPEG=OFF WITH_LAME=OFF WITH_LIBRARY_VERSIONING=ON WITH_LIBSYSTEMD=OFF WITH_MACAUDIO=OFF WITH_MACAUDIO=OFF WITH_MACAUDIO_AVAILABLE=0 WITH_MANPAGES=ON WITH_MBEDTLS=OFF WITH_OPENCL=OFF WITH_OPENH264=OFF WITH_OPENSLES=OFF WITH_OPENSSL=ON WITH_OSS=ON WITH_PAM=ON WITH_PCSC=ON WITH_PROFILER=OFF WITH_PROXY_MODULES=OFF WITH_PULSE=ON WITH_SAMPLE=OFF WITH_SANITIZE_ADDRESS=ON WITH_SANITIZE_ADDRESS_AVAILABLE=1 WITH_SANITIZE_MEMORY=OFF WITH_SANITIZE_MEMORY=OFF WITH_SANITIZE_MEMORY_AVAILABLE=0 WITH_SANITIZE_THREAD=OFF WITH_SANITIZE_THREAD=OFF WITH_SANITIZE_THREAD_AVAILABLE=0 WITH_SERVER=ON WITH_SERVER_CHANNELS=ON WITH_SERVER_INTERFACE=ON WITH_SMARTCARD_INSPECT=OFF WITH_SOXR=OFF WITH_SSE2=ON WITH_SWSCALE=OFF WITH_THIRD_PARTY=OFF WITH_VAAPI=OFF WITH_VALGRIND_MEMCHECK=OFF WITH_VALGRIND_MEMCHECK=OFF WITH_VALGRIND_MEMCHECK_AVAILABLE=0 WITH_WAYLAND=OFF WITH_WINPR_TOOLS=ON WITH_X11=ON WITH_X264=OFF WITH_XCURSOR=ON WITH_XDAMAGE=ON WITH_XEXT=ON WITH_XFIXES=ON WITH_XI=ON WITH_XINERAMA=ON WITH_XKBFILE=ON WITH_XRANDR=ON WITH_XRENDER=ON WITH_XSHM=ON WITH_XTEST=ON WITH_XV=ON WITH_ZLIB=ON

Build type: Debug

CFLAGS: -g -O2 -fdebug-prefix-map=/build/freerdp-nightly-2.0.0+0~20200222024833.771~1.gbpdc8992=. -fstack-protector-strong -Wformat -Werror=format-security -Wdate-time -D_FORTIFY_SOURCE=2 -fPIC -Wall -Wno-unused-result -Wno-unused-but-set-variable -Wno-deprecated-declarations -fvisibility=hidden -Wimplicit-function-declaration -Wredundant-decls -g -fsanitize=address -fsanitize-address-use-after-scope -fno-omit-frame-pointer -DWINPR_DLL

Compiler: GNU, 8.3.0

Target architecture: x64

* OS version connecting to

Windows 10 VM latest updates Version 1903

* If available the log output from a run with `/log-level:trace`

[15:47:07:309] [12332:12333] [INFO][com.freerdp.core] - freerdp_connect:freerdp_set_last_error_ex resetting error state

[15:47:07:309] [12332:12333] [INFO][com.freerdp.client.common.cmdline] - loading channelEx rdpdr

[15:47:07:309] [12332:12333] [INFO][com.freerdp.client.common.cmdline] - loading channelEx rdpsnd

[15:47:07:309] [12332:12333] [INFO][com.freerdp.client.common.cmdline] - loading channelEx cliprdr

[15:47:07:644] [12332:12333] [INFO][com.freerdp.primitives] - primitives autodetect, using optimized

[15:47:07:649] [12332:12333] [INFO][com.freerdp.core] - freerdp_tcp_is_hostname_resolvable:freerdp_set_last_error_ex resetting error state

[15:47:07:649] [12332:12333] [INFO][com.freerdp.core] - freerdp_tcp_connect:freerdp_set_last_error_ex resetting error state

[15:47:09:204] [12332:12333] [INFO][com.freerdp.gdi] - Local framebuffer format PIXEL_FORMAT_BGRX32

[15:47:09:204] [12332:12333] [INFO][com.freerdp.gdi] - Remote framebuffer format PIXEL_FORMAT_RGB16

[15:47:09:239] [12332:12333] [INFO][com.winpr.clipboard] - initialized POSIX local file subsystem

[15:47:09:240] [12332:12333] [INFO][com.freerdp.channels.rdpsnd.client] - Loaded fake backend for rdpsnd

[15:47:09:240] [12332:12338] [INFO][com.freerdp.channels.rdpdr.client] - Loading device service drive [Temp] (static)

[15:47:10:468] [12332:12333] [INFO][com.freerdp.client.x11] - Logon Error Info LOGON_WARNING [LOGON_MSG_SESSION_CONTINUE]

[15:47:10:610] [12332:12338] [INFO][com.freerdp.channels.rdpdr.client] - registered device #1: Temp (type=8 id=1)

[15:47:10:791] [12332:12339] [WARN][com.freerdp.channels.cliprdr.common] - [cliprdr_packet_format_list_new] called with invalid type 00000000

=================================================================

==12332==ERROR: AddressSanitizer: heap-buffer-overflow on address 0x6020000085f0 at pc 0x55e569a789a2 bp 0x7f9924decac0 sp 0x7f9924decab8

READ of size 4 at 0x6020000085f0 thread T1

#0 0x55e569a789a1 (/opt/freerdp-nightly/bin/xfreerdp+0x3e9a1)

#1 0x55e569a69320 (/opt/freerdp-nightly/bin/xfreerdp+0x2f320)

#2 0x55e569a992f5 (/opt/freerdp-nightly/bin/xfreerdp+0x5f2f5)

#3 0x55e569a99a5c (/opt/freerdp-nightly/bin/xfreerdp+0x5fa5c)

#4 0x7f9932d8688b (/opt/freerdp-nightly/bin/../lib/libwinpr2.so.2+0x16488b)

#5 0x7f9932a42fa2 in start_thread (/lib/x86_64-linux-gnu/libpthread.so.0+0x7fa2)

#6 0x7f9932b5a4ce in clone (/lib/x86_64-linux-gnu/libc.so.6+0xf94ce)

0x6020000085f1 is located 0 bytes to the right of 1-byte region [0x6020000085f0,0x6020000085f1)

allocated by thread T1 here:

#0 0x7f99345a8330 in __interceptor_malloc (/lib/x86_64-linux-gnu/libasan.so.5+0xe9330)

#1 0x7f99343a8144 in XGetWindowProperty (/lib/x86_64-linux-gnu/libX11.so.6+0x2a144)

Thread T1 created by T0 here:

#0 0x7f993450fdb0 in __interceptor_pthread_create (/lib/x86_64-linux-gnu/libasan.so.5+0x50db0)

#1 0x7f9932d863eb (/opt/freerdp-nightly/bin/../lib/libwinpr2.so.2+0x1643eb)

#2 0x7f9932d86c9a in CreateThread (/opt/freerdp-nightly/bin/../lib/libwinpr2.so.2+0x164c9a)

#3 0x55e569a8f2ff (/opt/freerdp-nightly/bin/xfreerdp+0x552ff)

#4 0x55e569a4dba6 (/opt/freerdp-nightly/bin/xfreerdp+0x13ba6)

#5 0x7f9932a8509a in __libc_start_main (/lib/x86_64-linux-gnu/libc.so.6+0x2409a)

SUMMARY: AddressSanitizer: heap-buffer-overflow (/opt/freerdp-nightly/bin/xfreerdp+0x3e9a1)

Shadow bytes around the buggy address:

0x0c047fff9060: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fff9070: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fff9080: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fff9090: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fff90a0: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

=>0x0c047fff90b0: fa fa fa fa fa fa fa fa fa fa fa fa fa fa[01]fa

0x0c047fff90c0: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

[Truncated]

Stack after return: f5

Stack use after scope: f8

Global redzone: f9

Global init order: f6

Poisoned by user: f7

Container overflow: fc

Array cookie: ac

Intra object redzone: bb

ASan internal: fe

Left alloca redzone: ca

Right alloca redzone: cb

==12332==ABORTING

**Desktop (please complete the following information):**

- OS: [e.g. iOS] Debian 10

- Browser [e.g. chrome, safari] N/A

- Version [e.g. 22] N/A

**Additional context**

I'm not sure if this problem happened because of FreeRDP or some other external issue (network, VPN, etc) but I wanted to bring it to your attention in case you can see something in the trace/dump. HTH

<issue_comment>username_1: Thank you for the report, that one I was not aware of.

Could you reproduce with the -dbg package installed?

<issue_comment>username_1: One thing that can be said is it looks like this has nothing to do with drive redirection, `XGetWindowProperty` where the memory is allocated allows to determine that.

<issue_comment>username_0: @username_1, here is another crash log after installing the -dbg package from last night:

/opt/freerdp-nightly/bin/xfreerdp +old-license /log-level:info /u:Administrator /p:Password /size:1600x800 +clipboard /drive:Temp,/home/user/Temp /admin /sec:nla /cert-ignore /v:192.168.0.XX

[13:23:40:146] [4191:4192] [INFO][com.freerdp.core] - freerdp_connect:freerdp_set_last_error_ex resetting error state

[13:23:40:146] [4191:4192] [INFO][com.freerdp.client.common.cmdline] - loading channelEx rdpdr

[13:23:40:146] [4191:4192] [INFO][com.freerdp.client.common.cmdline] - loading channelEx rdpsnd

[13:23:40:147] [4191:4192] [INFO][com.freerdp.client.common.cmdline] - loading channelEx cliprdr

[13:23:40:484] [4191:4192] [INFO][com.freerdp.primitives] - primitives autodetect, using optimized

[13:23:40:489] [4191:4192] [INFO][com.freerdp.core] - freerdp_tcp_is_hostname_resolvable:freerdp_set_last_error_ex resetting error state

[13:23:40:489] [4191:4192] [INFO][com.freerdp.core] - freerdp_tcp_connect:freerdp_set_last_error_ex resetting error state

[13:23:42:097] [4191:4192] [INFO][com.freerdp.gdi] - Local framebuffer format PIXEL_FORMAT_BGRX32

[13:23:42:097] [4191:4192] [INFO][com.freerdp.gdi] - Remote framebuffer format PIXEL_FORMAT_RGB16

[13:23:42:138] [4191:4192] [INFO][com.winpr.clipboard] - initialized POSIX local file subsystem

[13:23:42:138] [4191:4192] [INFO][com.freerdp.channels.rdpsnd.client] - Loaded fake backend for rdpsnd

[13:23:42:139] [4191:4197] [INFO][com.freerdp.channels.rdpdr.client] - Loading device service drive [Temp] (static)

[13:23:43:809] [4191:4192] [INFO][com.freerdp.client.x11] - Logon Error Info LOGON_FAILED_OTHER [LOGON_MSG_SESSION_CONTINUE]

[13:23:43:887] [4191:4197] [INFO][com.freerdp.channels.rdpdr.client] - registered device #1: Temp (type=8 id=1)

[13:23:43:892] [4191:4198] [WARN][com.freerdp.channels.cliprdr.common] - [cliprdr_packet_format_list_new] called with invalid type 00000000

=================================================================

==4191==ERROR: AddressSanitizer: heap-buffer-overflow on address 0x602000013f70 at pc 0x55e0964ea9a2 bp 0x7f83106ecac0 sp 0x7f83106ecab8

READ of size 4 at 0x602000013f70 thread T1

#0 0x55e0964ea9a1 in xf_cliprdr_process_selection_request client/X11/xf_cliprdr.c:894

#1 0x55e0964ea9a1 in xf_cliprdr_handle_xevent client/X11/xf_cliprdr.c:1020

#2 0x55e0964db320 in xf_event_process client/X11/xf_event.c:1062

#3 0x55e09650b2f5 in xf_process_x_events client/X11/xf_client.c:503

#4 0x55e09650b2f5 in handle_window_events client/X11/xf_client.c:1468

#5 0x55e09650ba5c in xf_client_thread client/X11/xf_client.c:1635

#6 0x7f831e68888b in thread_launcher winpr/libwinpr/thread/thread.c:327

#7 0x7f831e344fa2 in start_thread (/lib/x86_64-linux-gnu/libpthread.so.0+0x7fa2)

#8 0x7f831e45c4ce in clone (/lib/x86_64-linux-gnu/libc.so.6+0xf94ce)

0x602000013f71 is located 0 bytes to the right of 1-byte region [0x602000013f70,0x602000013f71)

allocated by thread T1 here:

#0 0x7f831fee8330 in __interceptor_malloc (/lib/x86_64-linux-gnu/libasan.so.5+0xe9330)

#1 0x7f831fce8144 in XGetWindowProperty (/lib/x86_64-linux-gnu/libX11.so.6+0x2a144)

Thread T1 created by T0 here:

#0 0x7f831fe4fdb0 in __interceptor_pthread_create (/lib/x86_64-linux-gnu/libasan.so.5+0x50db0)

#1 0x7f831e6883eb in winpr_StartThread winpr/libwinpr/thread/thread.c:356

#2 0x7f831e688c9a in CreateThread winpr/libwinpr/thread/thread.c:469

#3 0x55e0965012ff in xfreerdp_client_start client/X11/xf_client.c:1768

#4 0x55e0964bfba6 in main client/X11/cli/xfreerdp.c:74

#5 0x7f831e38709a in __libc_start_main (/lib/x86_64-linux-gnu/libc.so.6+0x2409a)

SUMMARY: AddressSanitizer: heap-buffer-overflow client/X11/xf_cliprdr.c:894 in xf_cliprdr_process_selection_request

Shadow bytes around the buggy address:

0x0c047fffa790: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fffa7a0: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fffa7b0: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fffa7c0: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fffa7d0: fa fa fa fa fa fa fa fa fa fa fa fa fa fa 00 07

=>0x0c047fffa7e0: fa fa 00 07 fa fa fa fa fa fa fa fa fa fa[01]fa

0x0c047fffa7f0: fa fa fd fd fa fa fd fd fa fa fd fd fa fa fd fd

0x0c047fffa800: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fffa810: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fffa820: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

0x0c047fffa830: fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa fa

Shadow byte legend (one shadow byte represents 8 application bytes):

Addressable: 00

Partially addressable: 01 02 03 04 05 06 07

Heap left redzone: fa

Freed heap region: fd

Stack left redzone: f1

Stack mid redzone: f2

Stack right redzone: f3

Stack after return: f5

Stack use after scope: f8

Global redzone: f9

Global init order: f6

Poisoned by user: f7

Container overflow: fc

Array cookie: ac

Intra object redzone: bb

ASan internal: fe

Left alloca redzone: ca

Right alloca redzone: cb

==4191==ABORTING

HTH

<issue_comment>username_1: @username_0 thank you, that was exactly what I was looking for.<issue_closed> | {'fraction_non_alphanumeric': 0.09868155522669178, 'fraction_numerical': 0.11226960850262344, 'mean_word_length': 4.932561851556265, 'pattern_counts': {'":': 0, '<': 7, '<?xml version=': 0, '>': 9, 'https://': 0, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 2, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '29965654', 'n_tokens_mistral': 6958, 'n_tokens_neox': 5978, 'n_words': 1367} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Can't add functions to the JSON object

username_0: <!--

IF YOU DON'T FILL OUT THE FOLLOWING INFORMATION YOUR ISSUE MIGHT BE CLOSED WITHOUT INVESTIGATING

-->

I'm trying to add [Crockford's Cycle.js](https://github.com/douglascrockford/JSON-js/blob/master/cycle.js) to my app.

### Bug Report or Feature Request (mark with an `x`)

```

- [x] bug report -> please search issues before submitting

- [ ] feature request

```

### Versions.

<!--

Output from: `ng --version`.

If nothing, output from: `node --version` and `npm --version`.

Windows (7/8/10). Linux (incl. distribution). macOS (El Capitan? Sierra?)

-->

@angular/cli: 1.1.2

node: 7.10.0

os: linux x64

@angular/animations: 4.3.4

@angular/common: 4.3.4

@angular/compiler: 4.3.4

@angular/core: 4.3.4

@angular/forms: 4.3.4

@angular/http: 4.3.4

@angular/platform-browser: 4.3.4

@angular/platform-browser-dynamic: 4.3.4

@angular/router: 4.3.4

@angular/cli: 1.1.2

@angular/compiler-cli: 4.3.4

@angular/language-service: 4.3.4

### Repro steps.

<!--

Simple steps to reproduce this bug.

Please include: commands run, packages added, related code changes.

A link to a sample repo would help too.

-->

1. Generate new CLI project with `ng new test`

2. Append to `head` of `index.html`

```

<script type="text/javascript">

// Copy paste Crockford's raw script. This basically begins like:

// if (typeof JSON.decycle !== "function") {

// JSON.decycle = function decycle(object, replacer) {

// ...

// }

// }

</script>

```

3. Run `ng serve`

4. From dev console, `console.log(JSON.decycle)` prints `undefined`.

### The log given by the failure.

<!-- Normally this include a stack trace and some more information. -->

No errors are logged.

### Desired functionality.

<!--

What would like to see implemented?

What is the usecase?

-->

I should be able to use `JSON.decycle` and `JSON.retrocycle`. Running `console.log(JSON)` shows that these methods do not exist on the JSON object.

### Mention any other details that might be useful.

<!-- Please include a link to the repo if this is related to an OSS project. -->

If I insert a `console.log(JSON.decycle)` inside the setup script tag, right after the declaration of `JSON.decycle`, I see that `decycle` is indeed a method on the JSON object. However, when I attempt the same thing outside of that script tag (in code, or in the dev console), `console.log(JSON.decycle)` prints `undefined`.

Also, just dropping that entire script into the dev console successfully adds the methods to the JSON object.

It seems like modifications to the JSON object aren't being preserved.

<issue_comment>username_1: For support requests, you can use gitter or take a look at StackOverflow for more information. If I've misunderstood the problem, please open a new issue, thanks!<issue_closed> | {'fraction_non_alphanumeric': 0.11895161290322581, 'fraction_numerical': 0.018481182795698926, 'mean_word_length': 3.0284167794316645, 'pattern_counts': {'":': 0, '<': 12, '<?xml version=': 0, '>': 13, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '15808294', 'n_tokens_mistral': 965, 'n_tokens_neox': 915, 'n_words': 362} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Use font icons in hierarchy toggle, prettify default theme

username_0: <!-- Reviewable:start -->

This change is [<img src="https://reviewable.io/review_button.svg" height="34" align="absmiddle" alt="Reviewable"/>](https://reviewable.io/reviews/vaadin/vaadin-grid/1074)

<!-- Reviewable:end -->

<issue_comment>username_1: Hierarchy toggle icons are so simple, we could just use CCS triangles instead https://css-tricks.com/snippets/css/css-triangle/

---

*Comments from [Reviewable](https://reviewable.io:443/reviews/vaadin/vaadin-grid/1074)*

<!-- Sent from Reviewable.io -->

<issue_comment>username_0: Review status: 0 of 2 files reviewed at latest revision, 1 unresolved discussion, some commit checks failed.

---

*[vaadin-grid-hierarchy-toggle.html, line 11 at r1](https://reviewable.io:443/reviews/vaadin/vaadin-grid/1074#-Kxw8DpSrkl1s9P_u42V:-KxwPZn13nmxYJ9_ysK2:b-ycrqjc) ([raw file](https://github.com/vaadin/vaadin-grid/blob/0260cb20679510fd3a652cf482ad60a19fd73f1d/vaadin-grid-hierarchy-toggle.html#L11)):*

<details><summary><i>Previously, username_1 (<NAME>) wrote…</i></summary><blockquote>

Hierarchy toggle icons are so simple, we could just use CCS triangles instead https://css-tricks.com/snippets/css/css-triangle/

</blockquote></details>

Font icons don’t require alignment with text, and also provide out-of-the-box support for changing color, font size and line height.

Apart from that, if we do CSS triangles manually here, we would have to decide the sizes, position, angle values, and so on. I could put decisions to my taste here, but nobody trusts my design taste. :-(

---

*Comments from [Reviewable](https://reviewable.io:443/reviews/vaadin/vaadin-grid/1074)*

<!-- Sent from Reviewable.io -->

<issue_comment>username_2: <img class="emoji" title=":lgtm:" alt=":lgtm:" align="absmiddle" src="https://reviewable.io/lgtm.png" height="20" width="61"/>

---

Reviewed 2 of 2 files at r1.

Review status: all files reviewed at latest revision, 1 unresolved discussion, some commit checks failed.

---

*Comments from [Reviewable](https://reviewable.io:443/reviews/vaadin/vaadin-grid/1074#-:-KxwePMmpkEJyLZ_vd_m:bnfp4nl)*

<!-- Sent from Reviewable.io -->

<issue_comment>username_1: <img class="emoji" title=":lgtm:" alt=":lgtm:" align="absmiddle" src="https://reviewable.io/lgtm.png" height="20" width="61"/>

---

Reviewed 2 of 2 files at r1.

Review status: all files reviewed at latest revision, all discussions resolved, some commit checks failed.

---

*Comments from [Reviewable](https://reviewable.io:443/reviews/vaadin/vaadin-grid/1074#-:-KxwefO5tv9zaouJUWov:bnfp4nl)*

<!-- Sent from Reviewable.io --> | {'fraction_non_alphanumeric': 0.14724615961034096, 'fraction_numerical': 0.04083926564256276, 'mean_word_length': 5.082004555808656, 'pattern_counts': {'":': 4, '<': 24, '<?xml version=': 0, '>': 24, 'https://': 12, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '21316347', 'n_tokens_mistral': 993, 'n_tokens_neox': 887, 'n_words': 223} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Handle arrays as the root object.

username_0: Hi there! We're using this for JSON serialization/deserialization. Thanks for the project.

We noticed that although the library handles nested arrays well, if you give it an array as an initial value, you get the mapObj back. So, this PR just casts the mapObj it back to an array if the input was one.

<issue_comment>username_1: Hi ben thanks for taking the time todo the PR, I've had a look from what I understand you're saying. ```camelCaseKeys([])``` returns a mapobj not an array?

I've looked at the tests and the test 'Should return an array' checks for this.

If this is not the problem you are suggesting would you be able to add a test to demonstrate the problem?

Thanks

<issue_comment>username_1: see latest release - https://github.com/username_1/camelcase-keys-recursive/releases/tag/v0.8.0 | {'fraction_non_alphanumeric': 0.06361607142857142, 'fraction_numerical': 0.0078125, 'mean_word_length': 4.3076923076923075, 'pattern_counts': {'":': 0, '<': 4, '<?xml version=': 0, '>': 4, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '14280049', 'n_tokens_mistral': 253, 'n_tokens_neox': 240, 'n_words': 132} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Move instead of copy.

username_0: [Place](https://github.com/altmp/cpp-sdk/blob/d52d98b8a239499c6caef85cd637fbebe1a23814/types/Array.h#L128)

There will be a deep copying of the object, and here just moving it is enough. Change to:

`newData[i] = std::move(data[i]);`

<issue_comment>username_1: Can you describe why this is needed.

<issue_comment>username_0: You won't believe it, but moving an object is much faster and more efficient than copying it. Can you imagine? Shock.

<issue_comment>username_1: Thats depending on the type of the array.

<issue_comment>username_1: Doesn't that makes the data invalid when its setted to the array and then invalidated inside the resize method but also used outside the array.

<issue_comment>username_0: The memory you move from is deleted in the next line. Accordingly, you need to MOVE from it, not copy it.

<issue_comment>username_1: The next line only deletes the arrays, but doesn't the elements inside it.

<issue_comment>username_0: Learn the basics.<issue_closed> | {'fraction_non_alphanumeric': 0.07545367717287488, 'fraction_numerical': 0.03247373447946514, 'mean_word_length': 5.591194968553459, 'pattern_counts': {'":': 0, '<': 10, '<?xml version=': 0, '>': 10, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '18505327', 'n_tokens_mistral': 332, 'n_tokens_neox': 308, 'n_words': 132} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Nested table style not being applied correctly to cells (uses parent table cell)

username_0: Nested style on InnerTable td (with text-align:center) is not being applied; the text-align:left from the containing OuterTable td is being applied.

Please see example HTML:

https://gist.github.com/username_0/5fe6ced6b180c9122907

<issue_comment>username_1: I can confirm this issue.

```html

<style>

table.outerclass td {

color: red;

}

table.innerclass td {

color: green;

}

</style>

<table class="outerclass">

<tr>

<td>

<table class="innerclass">

<tr>

<td>

this should be green

</td>

</tr>

</table>

</td>

</tr>

</table>

```

renders to

```html

<table class="outerclass">

<tbody><tr>

<td style="color: red">

<table class="innerclass">

<tbody><tr>

<td style="color: red">

this should be green

</td>

</tr>

</tbody></table>

</td>

</tr>

</tbody></table>

```<issue_closed> | {'fraction_non_alphanumeric': 0.11629746835443038, 'fraction_numerical': 0.012658227848101266, 'mean_word_length': 1.5, 'pattern_counts': {'":': 0, '<': 34, '<?xml version=': 0, '>': 34, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '26909526', 'n_tokens_mistral': 381, 'n_tokens_neox': 353, 'n_words': 102} |

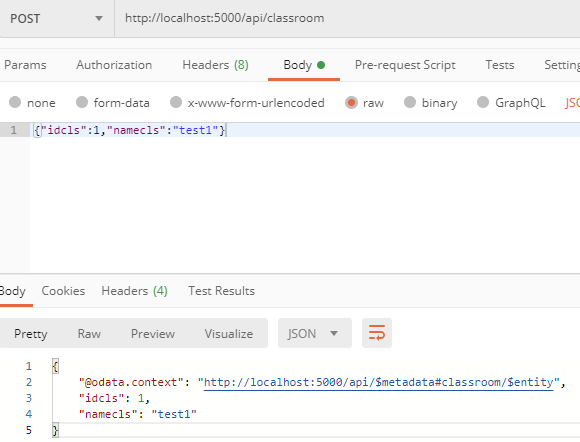

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: The improved issue template is confusing

username_0: ### New Issue Checklist

- [ ] The title is short and descriptive

- [ ] The issue contains an:

- [ ] Idea (new feature, user story, etc)

- [ ] Problem

- [ ] You have explained the:

- [ ] Context

- [ ] Problem or idea

- [ ] Solution or next step

### Context

I was creating new issues this morning. As I was filling things out, I found the new template jarring.

### Problem or idea

The context seems like it should come before the problem. Also, the checklist that is supposed to be used to make sure the issue is reasonably complete is commented out.

### Solution or next step

I'd propose that we revert the portions of https://github.com/AlexsLemonade/refinebio/pull/137 that change the checklist.

<issue_comment>username_1: I agree

<issue_comment>username_2: I don't like having to read everybody else's checklist for every new ticket. It's up to you to do file a proper ticket, it's not up to people who actually work on tickets to have to read somebody else's checklist of things that should be done anyway every time.

<issue_comment>username_0: There were two changes.

* One puts `context` before `problem`.

* The other is the commenting out of the checklist.

As someone who files tickets, we'll get better ones if the template works for people. Asking for context before asking for the problem seems like the natural ordering.

Also, giving people a checklist can be a handy way to go about getting better tickets. It also helps to quickly triage bad tickets. Someone who didn't fill out the checklist probably also didn't do the other things that make a ticket useful.

<issue_comment>username_0: Yea - the GitHub -> ToDo is suboptimal there. I think our checklist that is not a checklist can be converted to a checklist that is a checklist. 😄

<issue_comment>username_3: I think that will remind people about the things we care about. Also I'm not sure I agree that the checklist makes it easier to triage bad tickets. I think we can just as easily visibly check that each of the sections has something in it as we can check that the corresponding checkboxes have been filled out.

There's a few things I find annoying about the checklist, but this is one that hasn't been brought up yet:

<issue_comment>username_0: On my plate. Will aim to do it today.<issue_closed> | {'fraction_non_alphanumeric': 0.06381381381381382, 'fraction_numerical': 0.027402402402402402, 'mean_word_length': 4.018832391713747, 'pattern_counts': {'":': 0, '<': 9, '<?xml version=': 0, '>': 10, 'https://': 3, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '24561113', 'n_tokens_mistral': 804, 'n_tokens_neox': 720, 'n_words': 372} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Checks from policy not automatically applying

username_0: I have some checks set up as a policy and have the policy applied. However they do not show up until I create a check manually and then delete the check from a device. Then the policy checks show up for only that device.

<issue_comment>username_1: this is a known bug see the discussion in https://github.com/username_1/tacticalrmm/issues/6<issue_closed>

<issue_comment>username_1: I have some checks set up as a policy and have the policy applied. However they do not show up until I create a check manually and then delete the check from a device. Then the policy checks show up for only that device.

<issue_comment>username_1: gonna re-open this for visibility and tracking

<issue_comment>username_2: @username_0 Can you see if your issue is resolved with the latest updates?

<issue_comment>username_0: It is working now!<issue_closed>

<issue_comment>username_2: @username_0 I am doing some rework on the policy checks and we look at this. Thanks for testing!

<issue_comment>username_2: @username_0 I think this is due to policies not applying to newly installed agents. I will make a fix shortly.<issue_closed>

<issue_comment>username_2: #78 Should fix this issue. Once it is merged, let me know if you have any issues. Thanks! | {'fraction_non_alphanumeric': 0.04909365558912387, 'fraction_numerical': 0.012084592145015106, 'mean_word_length': 5.220657276995305, 'pattern_counts': {'":': 0, '<': 13, '<?xml version=': 0, '>': 13, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '20199903', 'n_tokens_mistral': 344, 'n_tokens_neox': 342, 'n_words': 195} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Only lets me login 1 time per account?

username_0: ### Expected Behavior

To be able to start the Bot in terminal, then stop it, then be able to start it again whenever I choose.

### Actual Behavior

I can only run the bot one time per account. After that, I have to change my account information in the config file for it to work.

### Steps to Reproduce

Just running the bot

### Other Information

This is the error that shows Traceback (most recent call last):

File "pokecli.py", line 220, in <module>

main()

File "pokecli.py", line 206, in main

bot.start()

File "/Users/userstuff/PokemonGo-Bot/pokemongo_bot/__init__.py", line 31, in start

self._setup_api()

File "/Users/userstuff/PokemonGo-Bot/pokemongo_bot/__init__.py", line 159, in _setup_api

balls_stock = self.pokeball_inventory()

File "/Users/userstuff/PokemonGo-Bot/pokemongo_bot/__init__.py", line 234, in pokeball_inventory

inventory_dict = inventory_req['responses']['GET_INVENTORY'][

KeyError: 'GET_INVENTORY'

OS:

Git Commit: commit 4182e93f9bfbe471d07b74e95c55cdfbacda127b

Python Version: Python 2.7.10

<issue_comment>username_1: your error has nothing to do with what you are describing

<issue_comment>username_0: Could you please explain how to fix both issues? The error I show only

appears when I use Control C in terminal to stop the bot, then try to

restart the bot with the same account logged in. For example, if I tried to

use the bot with <EMAIL>, it would work fine for the first use. But

if I stopped the bot in terminal, then tried to start it again (still using

the account <EMAIL>) the error I pasted in my issue would appear.

However, the error disappears after I try using a new account with the bot.

Any help would be greatly appreciated.

Cheers

<issue_comment>username_2: I get that Get_Inventory error often, usually first time starting the bot. I just attempt to start it again immediately afterwards and it works.<issue_closed> | {'fraction_non_alphanumeric': 0.06666666666666667, 'fraction_numerical': 0.021890547263681594, 'mean_word_length': 4.2643979057591626, 'pattern_counts': {'":': 0, '<': 9, '<?xml version=': 0, '>': 9, 'https://': 0, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '10839786', 'n_tokens_mistral': 640, 'n_tokens_neox': 590, 'n_words': 275} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: v1.3 Volunteer management

username_0: **Volunteer Management:**

Things to consider/To-do:

* Do we want to show m and f to users?

* Should date of birth be displayed in numbers format? Perhaps a human-friendly way to display it would be something like 3 Jan 1998.

* Also need to touch up on the right panel

* Documentation<issue_closed> | {'fraction_non_alphanumeric': 0.08979591836734693, 'fraction_numerical': 0.08571428571428572, 'mean_word_length': 4.776470588235294, 'pattern_counts': {'":': 0, '<': 3, '<?xml version=': 0, '>': 3, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '8443785', 'n_tokens_mistral': 182, 'n_tokens_neox': 157, 'n_words': 54} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: http post with esp32

username_0: Hello

i want to communicate with esp32 over the wifi using dart language.

i want to send a message from flutter that should receive by esp 32 and vice versa

<issue_comment>username_1: I will just paste your [SO question](https://stackoverflow.com/questions/58153426/how-to-communicate-with-esp-32-wifi-module) here in case somebody will help there earlier.

<issue_comment>username_0: no sir no one helped me over there

<issue_comment>username_2: Please ask questions like this on stackoverflow as we can't give individual support here.

<issue_comment>username_0: This is a flutter related issue

<issue_comment>username_2: Hi,

no this is not a Flutter related problem but standard network programming with the Dart HttpClient, http package or dio package.

This place is for reporting bugs and suggest new features | {'fraction_non_alphanumeric': 0.05174353205849269, 'fraction_numerical': 0.024746906636670417, 'mean_word_length': 5.267605633802817, 'pattern_counts': {'":': 0, '<': 7, '<?xml version=': 0, '>': 7, 'https://': 1, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '16832476', 'n_tokens_mistral': 248, 'n_tokens_neox': 232, 'n_words': 116} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: New Crowdin translations

username_0:

<issue_comment>username_0: :tada: This PR is included in version 1.4.23 :tada:

The release is available on:

- [npm package (@latest dist-tag)](https://www.npmjs.com/package/warframe-worldstate-data/v/1.4.23)

- [GitHub release](https://github.com/WFCD/warframe-worldstate-data/releases/tag/v1.4.23)

Your **[semantic-release](https://github.com/semantic-release/semantic-release)** bot :package::rocket: | {'fraction_non_alphanumeric': 0.16176470588235295, 'fraction_numerical': 0.029411764705882353, 'mean_word_length': 5.625, 'pattern_counts': {'":': 0, '<': 3, '<?xml version=': 0, '>': 3, 'https://': 3, 'lorem ipsum': 0, 'www.': 1, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '30147252', 'n_tokens_mistral': 177, 'n_tokens_neox': 162, 'n_words': 30} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Tidy up CSS link formatting

username_0: - no need for `type/css` in HTML5 documents

- having `rel="stylesheet"` first can improve readability/scannability of the code and as it's more consistent aids with gZip compression.

- no need for closing `/` in HTML5 documents

<issue_comment>username_1: But is makes it more readable and is a style preference. Let's leave it in.

<issue_comment>username_0: Then it should be consistent I guess? Should I edit the PR to add the closing slash to the other stylesheets in the head?

<issue_comment>username_1: Yes please. Thank you.

Also, the test failures we're see here are not related to this change, but are being addressed in #1782. | {'fraction_non_alphanumeric': 0.06162464985994398, 'fraction_numerical': 0.014005602240896359, 'mean_word_length': 4.72, 'pattern_counts': {'":': 0, '<': 5, '<?xml version=': 0, '>': 5, 'https://': 0, 'lorem ipsum': 0, 'www.': 0, 'xml': 0}, 'pii_count': 0, 'substrings_counts': 0, 'word_list_counts': {'cursed_substrings.json': 0, 'profanity_word_list.json': 0, 'sexual_word_list.json': 0, 'zh_pornsignals.json': 0}} | {'dir': 'github-issues-filtered-structured', 'id': '19343137', 'n_tokens_mistral': 203, 'n_tokens_neox': 190, 'n_words': 104} |

starcoder-github-issues-filtered-structured | <issue_start><issue_comment>Title: Does grunt-contrib-requirejs add semicolons to the end of sourcemaps references that do not have a newline?