id

int64 599M

2.47B

| url

stringlengths 58

61

| repository_url

stringclasses 1

value | events_url

stringlengths 65

68

| labels

listlengths 0

4

| active_lock_reason

null | updated_at

stringlengths 20

20

| assignees

listlengths 0

4

| html_url

stringlengths 46

51

| author_association

stringclasses 4

values | state_reason

stringclasses 3

values | draft

bool 2

classes | milestone

dict | comments

sequencelengths 0

30

| title

stringlengths 1

290

| reactions

dict | node_id

stringlengths 18

32

| pull_request

dict | created_at

stringlengths 20

20

| comments_url

stringlengths 67

70

| body

stringlengths 0

228k

⌀ | user

dict | labels_url

stringlengths 72

75

| timeline_url

stringlengths 67

70

| state

stringclasses 2

values | locked

bool 1

class | number

int64 1

7.11k

| performed_via_github_app

null | closed_at

stringlengths 20

20

⌀ | assignee

dict | is_pull_request

bool 2

classes |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

2,473,367,848 | https://api.github.com/repos/huggingface/datasets/issues/7109 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7109/events | [] | null | 2024-08-19T13:29:12Z | [

{

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

}

] | https://github.com/huggingface/datasets/issues/7109 | MEMBER | null | null | null | [] | ConnectionError for gated datasets and unauthenticated users | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7109/reactions"

} | I_kwDODunzps6TbJko | null | 2024-08-19T13:27:45Z | https://api.github.com/repos/huggingface/datasets/issues/7109/comments | Since the Hub returns dataset info for gated datasets and unauthenticated users, there is dead code: https://github.com/huggingface/datasets/blob/98fdc9e78e6d057ca66e58a37f49d6618aab8130/src/datasets/load.py#L1846-L1852

We should remove the dead code and handle this case properly: currently a `ConnectionError` is raised instead of a `DatasetNotFoundError` (which is what was raised before).
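A minimal sketch of the intended handling. It assumes that, because the Hub returns dataset info for gated datasets, the failure for an unauthenticated user only surfaces later as an HTTP 401 or 403 when files are fetched. The `DatasetNotFoundError` class and the `check_hub_response` helper below are hypothetical local stand-ins, not the actual code in `load.py`.

```python
from typing import Optional


class DatasetNotFoundError(FileNotFoundError):
    """Hypothetical local stand-in for `datasets`' DatasetNotFoundError."""


def check_hub_response(repo_id: str, status_code: int, gated: bool, token: Optional[str]) -> None:
    """Raise a descriptive error for a failed Hub file request (hypothetical helper).

    Assumes a gated dataset accessed without a token fails with HTTP 401 or 403.
    """
    if status_code in (401, 403) and gated and token is None:
        # Gated dataset + unauthenticated user: report "not found / no access"
        # instead of a misleading ConnectionError.
        raise DatasetNotFoundError(
            f"Dataset '{repo_id}' is a gated dataset on the Hub. "
            "You must be authenticated to access it."
        )
    if status_code >= 500:
        # Only genuine server-side failures are reported as ConnectionError.
        raise ConnectionError(f"Couldn't reach '{repo_id}' on the Hub (HTTP {status_code})")
    # Any other status is treated as success in this sketch.
```

For example, `check_hub_response("org/gated-ds", 401, gated=True, token=None)` raises `DatasetNotFoundError`, while a 503 response still raises `ConnectionError`.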

See:

- https://github.com/huggingface/dataset-viewer/issues/3025

- https://github.com/huggingface/huggingface_hub/issues/2457 | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | https://api.github.com/repos/huggingface/datasets/issues/7109/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7109/timeline | open | false | 7,109 | null | null | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | false |

2,470,665,327 | https://api.github.com/repos/huggingface/datasets/issues/7108 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7108/events | [] | null | 2024-08-19T13:21:12Z | [] | https://github.com/huggingface/datasets/issues/7108 | NONE | completed | null | null | [

"I don't reproduce, I was able to create a new repo: https://huggingface.co/datasets/severo/reproduce-datasets-issues-7108. Can you confirm it's still broken?",

"I have just tried again.\r\n\r\nFirefox: The `Create dataset` doesn't work. It has worked in the past. It's my preferred browser.\r\n\r\nChrome: The `Create dataset` works.\r\n\r\nIt seems to be a Firefox specific issue.",

"I have updated Firefox 129.0 (64 bit), and now the `Create dataset` is working again in Firefox.\r\n\r\nUX: It would be nice with better error messages on HuggingFace.",

"maybe an issue with the cookie. cc @Wauplin @coyotte508 "

] | website broken: Create a new dataset repository, doesn't create a new repo in Firefox | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7108/reactions"

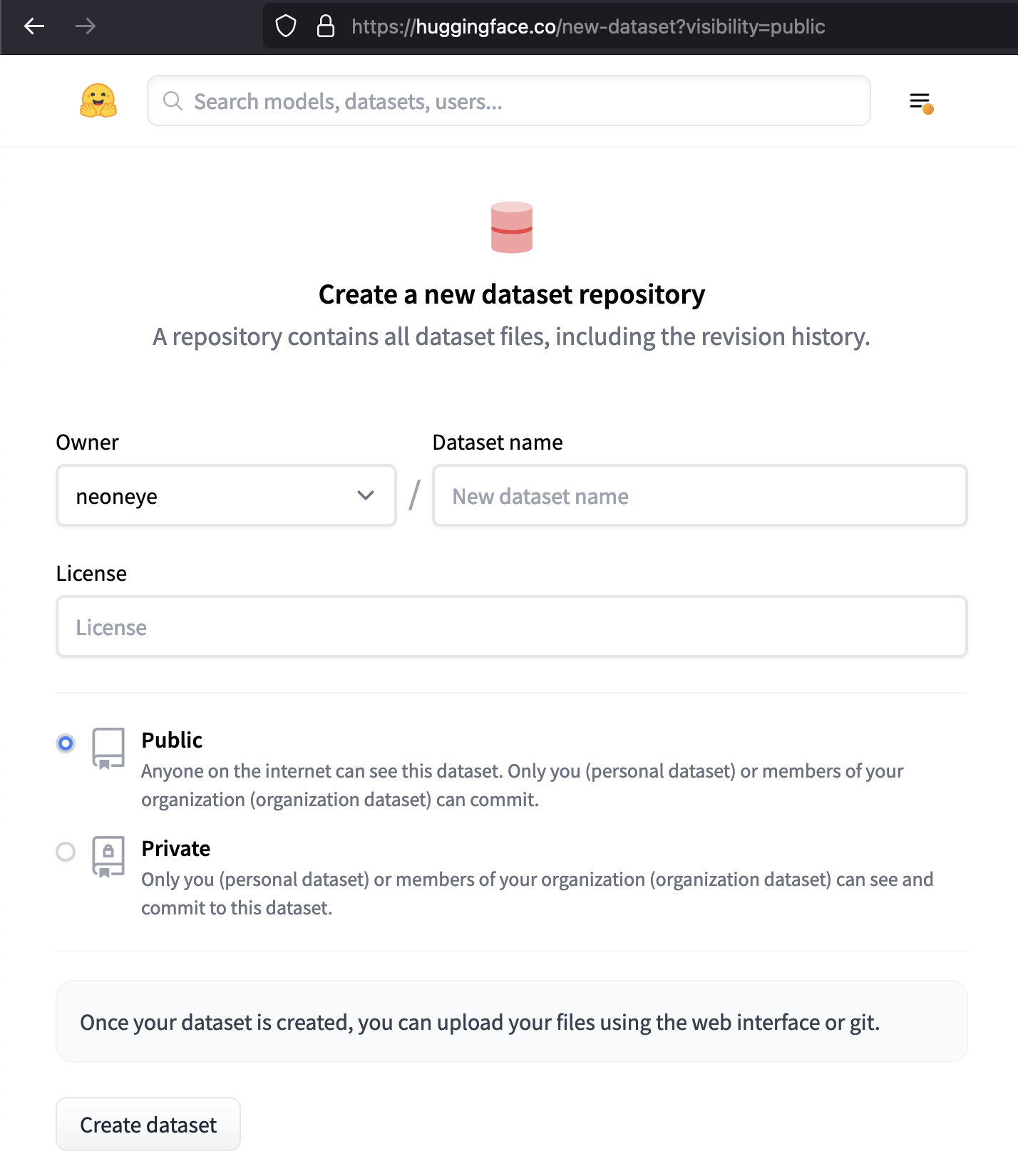

} | I_kwDODunzps6TQ1xv | null | 2024-08-16T17:23:00Z | https://api.github.com/repos/huggingface/datasets/issues/7108/comments | ### Describe the bug

This issue is also reported here:

https://discuss.huggingface.co/t/create-a-new-dataset-repository-broken-page/102644

This page is broken.

https://huggingface.co/new-dataset

I fill in the form with my text and click `Create dataset`.

The form is then cleared, and no repo is created. No error message is visible in the developer console.

# Idea for improvement

For better UX, if the repo cannot be created, show an error message saying that something went wrong.

# Workaround that works for me

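```python

from huggingface_hub import HfApi

repo_id = 'simon-arc-solve-fractal-v3'

# Assumes you are already authenticated (e.g. via `huggingface-cli login`)
api = HfApi()

username = api.whoami()['name']

# Create a private dataset repo under your namespace; does nothing if it already exists
repo_url = api.create_repo(repo_id=f"{username}/{repo_id}", exist_ok=True, private=True, repo_type="dataset")

```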

### Steps to reproduce the bug

Go to https://huggingface.co/new-dataset

Fill in the form.

Click `Create dataset`.

The form is cleared, and the page does not navigate anywhere.

### Expected behavior

When the user clicks `Create dataset`, the repo is created and the page redirects to the new repo.

### Environment info

Firefox 128.0.3 (64-bit)

macOS Sonoma 14.5

| {

"avatar_url": "https://avatars.githubusercontent.com/u/147971?v=4",

"events_url": "https://api.github.com/users/neoneye/events{/privacy}",

"followers_url": "https://api.github.com/users/neoneye/followers",

"following_url": "https://api.github.com/users/neoneye/following{/other_user}",

"gists_url": "https://api.github.com/users/neoneye/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/neoneye",

"id": 147971,

"login": "neoneye",

"node_id": "MDQ6VXNlcjE0Nzk3MQ==",

"organizations_url": "https://api.github.com/users/neoneye/orgs",

"received_events_url": "https://api.github.com/users/neoneye/received_events",

"repos_url": "https://api.github.com/users/neoneye/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/neoneye/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/neoneye/subscriptions",

"type": "User",

"url": "https://api.github.com/users/neoneye"

} | https://api.github.com/repos/huggingface/datasets/issues/7108/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7108/timeline | closed | false | 7,108 | null | 2024-08-19T06:52:48Z | null | false |

2,470,444,732 | https://api.github.com/repos/huggingface/datasets/issues/7107 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7107/events | [] | null | 2024-08-18T09:28:43Z | [] | https://github.com/huggingface/datasets/issues/7107 | NONE | completed | null | null | [

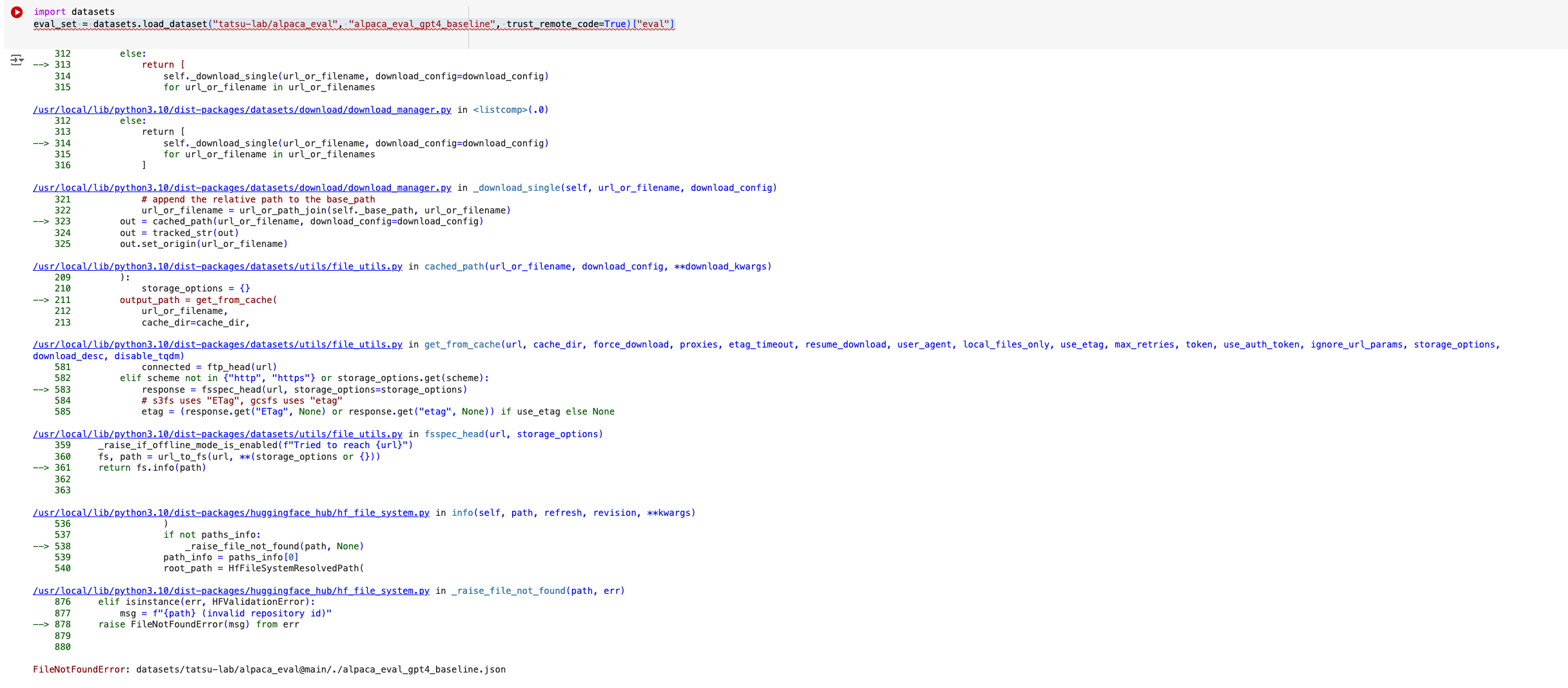

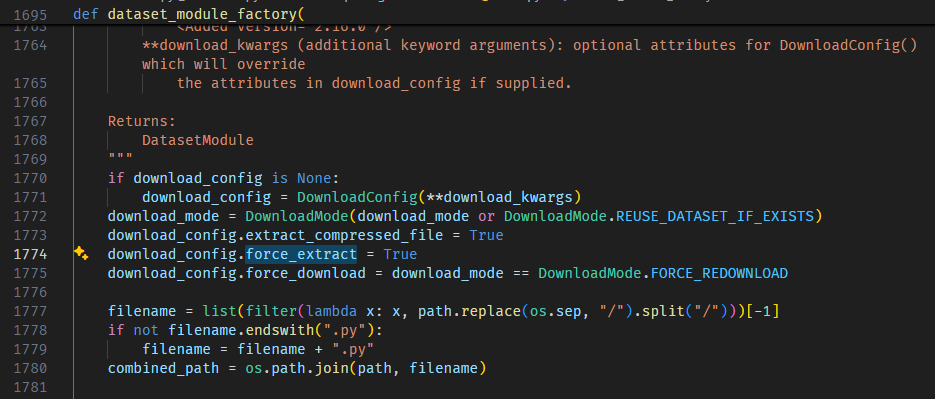

"There seems to be a PR related to the load_dataset path that went into 2.21.0 -- https://github.com/huggingface/datasets/pull/6862/files\r\n\r\nTaking a look at it now",

"+1\r\n\r\nDowngrading to 2.20.0 fixed my issue, hopefully helpful for others.",

"I tried adding a simple test to `test_load.py` with the alpaca eval dataset but the test didn't fail :(. \r\n\r\nSo looks like this might have something to do with the environment? ",

"There was an issue with the script of the \"tatsu-lab/alpaca_eval\" dataset.\r\n\r\nIt was fixed with this PR: \r\n- [Fix FileNotFoundError](https://huggingface.co/datasets/tatsu-lab/alpaca_eval/discussions/2)\r\n\r\nIt should work now if you retry loading the dataset."

] | load_dataset broken in 2.21.0 | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 1,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7107/reactions"

} | I_kwDODunzps6TP_68 | null | 2024-08-16T14:59:51Z | https://api.github.com/repos/huggingface/datasets/issues/7107/comments | ### Describe the bug

`eval_set = datasets.load_dataset("tatsu-lab/alpaca_eval", "alpaca_eval_gpt4_baseline", trust_remote_code=True)`

This call worked up to `datasets` 2.20.0 but fails in 2.21.0.

In 2.20.0:

In 2.21.0:

### Steps to reproduce the bug

1. Spin up a new Google Colab notebook

2. `pip install datasets==2.21.0`

3. `import datasets`

4. `eval_set = datasets.load_dataset("tatsu-lab/alpaca_eval", "alpaca_eval_gpt4_baseline", trust_remote_code=True)`

5. This throws an error.

### Expected behavior

Repeat steps 1-5 with `datasets==2.20.0` instead; the dataset then loads without error.

### Environment info

- `datasets` version: 2.21.0

- Platform: Linux-6.1.85+-x86_64-with-glibc2.35

- Python version: 3.10.12

- `huggingface_hub` version: 0.23.5

- PyArrow version: 17.0.0

- Pandas version: 2.1.4

- `fsspec` version: 2024.5.0

| {

"avatar_url": "https://avatars.githubusercontent.com/u/1911631?v=4",

"events_url": "https://api.github.com/users/anjor/events{/privacy}",

"followers_url": "https://api.github.com/users/anjor/followers",

"following_url": "https://api.github.com/users/anjor/following{/other_user}",

"gists_url": "https://api.github.com/users/anjor/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/anjor",

"id": 1911631,

"login": "anjor",

"node_id": "MDQ6VXNlcjE5MTE2MzE=",

"organizations_url": "https://api.github.com/users/anjor/orgs",

"received_events_url": "https://api.github.com/users/anjor/received_events",

"repos_url": "https://api.github.com/users/anjor/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/anjor/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/anjor/subscriptions",

"type": "User",

"url": "https://api.github.com/users/anjor"

} | https://api.github.com/repos/huggingface/datasets/issues/7107/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7107/timeline | closed | false | 7,107 | null | 2024-08-18T09:27:12Z | null | false |

2,469,854,262 | https://api.github.com/repos/huggingface/datasets/issues/7106 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7106/events | [] | null | 2024-08-16T09:31:37Z | [] | https://github.com/huggingface/datasets/pull/7106 | MEMBER | null | false | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7106). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | Rename LargeList.dtype to LargeList.feature | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7106/reactions"

} | PR_kwDODunzps54jntM | {

"diff_url": "https://github.com/huggingface/datasets/pull/7106.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7106",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/7106.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7106"

} | 2024-08-16T09:12:04Z | https://api.github.com/repos/huggingface/datasets/issues/7106/comments | Rename `LargeList.dtype` to `LargeList.feature`.

Note that `dtype` is usually used for NumPy data types ("int64", "float32",...): see `Value.dtype`.

However, the `LargeList` attribute (like `Sequence.feature`) expects a `FeatureType` instead.
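To illustrate the naming convention behind the rename, here is a simplified sketch using hypothetical dataclasses that only mimic the attribute names: `dtype` holds a type-name string (as in `Value.dtype`), while `feature` holds a nested feature (as in `Sequence.feature` and, after this PR, `LargeList.feature`). These are not the real `datasets.features` classes.

```python
from dataclasses import dataclass
from typing import Any


@dataclass
class Value:
    # Leaf feature: `dtype` is a type *name* string such as "int64" or "float32"
    dtype: str


@dataclass
class Sequence:
    # Container feature: `feature` is itself a feature (a FeatureType), not a string
    feature: Any


@dataclass
class LargeList:
    # Renamed from `dtype` to `feature` so that it mirrors `Sequence.feature`
    feature: Any


def leaf_dtype(feat: Any) -> str:
    """Walk nested list-like features down to the leaf `Value` and return its dtype."""
    while isinstance(feat, (Sequence, LargeList)):
        feat = feat.feature  # same attribute name for both container types
    return feat.dtype
```

For example, `leaf_dtype(LargeList(Sequence(Value("float32"))))` returns `"float32"`. Because both containers expose the same attribute, traversal code like this does not need to special-case `LargeList`.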

With this renaming:

- we avoid confusion about the expected type and

- we also align `LargeList` with `Sequence`. | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | https://api.github.com/repos/huggingface/datasets/issues/7106/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7106/timeline | open | false | 7,106 | null | null | null | true |

2,468,207,039 | https://api.github.com/repos/huggingface/datasets/issues/7105 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7105/events | [] | null | 2024-08-19T15:08:49Z | [] | https://github.com/huggingface/datasets/pull/7105 | MEMBER | null | false | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7105). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update.",

"Nice\r\n\r\n<img width=\"141\" alt=\"Capture d’écran 2024-08-19 à 15 25 00\" src=\"https://github.com/user-attachments/assets/18c7b3ec-a57e-45d7-9b19-0b12df9feccd\">\r\n",

"fyi the CI failure on test_py310_numpy2 is unrelated to this PR (it's a dependency install failure)"

] | Use `huggingface_hub` cache | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 2,

"laugh": 0,

"rocket": 0,

"total_count": 2,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7105/reactions"

} | PR_kwDODunzps54eZ0D | {

"diff_url": "https://github.com/huggingface/datasets/pull/7105.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7105",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/7105.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7105"

} | 2024-08-15T14:45:22Z | https://api.github.com/repos/huggingface/datasets/issues/7105/comments | wip

- use `hf_hub_download()` from `huggingface_hub` for HF files

- `datasets` cache_dir is still used for:

  - caching datasets as Arrow files (which back `Dataset` objects)

  - extracted archives and uncompressed files

  - files downloaded via http (datasets with scripts)

- I removed code that was written for http files (as well as the dummy_data / mock_download_manager code, which happened to rely on it and has been legacy for a while now) | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

} | https://api.github.com/repos/huggingface/datasets/issues/7105/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7105/timeline | open | false | 7,105 | null | null | null | true |

2,467,788,212 | https://api.github.com/repos/huggingface/datasets/issues/7104 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7104/events | [] | null | 2024-08-15T10:24:13Z | [] | https://github.com/huggingface/datasets/pull/7104 | MEMBER | null | false | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7104). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update.",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.005343 / 0.011353 (-0.006010) | 0.003562 / 0.011008 (-0.007447) | 0.062785 / 0.038508 (0.024277) | 0.031459 / 0.023109 (0.008349) | 0.246497 / 0.275898 (-0.029401) | 0.268258 / 0.323480 (-0.055222) | 0.003201 / 0.007986 (-0.004785) | 0.004153 / 0.004328 (-0.000175) | 0.049003 / 0.004250 (0.044753) | 0.042780 / 0.037052 (0.005728) | 0.263857 / 0.258489 (0.005368) | 0.278578 / 0.293841 (-0.015263) | 0.030357 / 0.128546 (-0.098190) | 0.012341 / 0.075646 (-0.063305) | 0.206010 / 0.419271 (-0.213262) | 0.036244 / 0.043533 (-0.007289) | 0.245799 / 0.255139 (-0.009340) | 0.265467 / 0.283200 (-0.017733) | 0.019473 / 0.141683 (-0.122210) | 1.147913 / 1.452155 (-0.304242) | 1.209968 / 1.492716 
(-0.282749) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.099393 / 0.018006 (0.081387) | 0.300898 / 0.000490 (0.300408) | 0.000258 / 0.000200 (0.000058) | 0.000044 / 0.000054 (-0.000010) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.018888 / 0.037411 (-0.018523) | 0.062452 / 0.014526 (0.047926) | 0.073799 / 0.176557 (-0.102757) | 0.121297 / 0.737135 (-0.615839) | 0.074855 / 0.296338 (-0.221484) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.283969 / 0.215209 (0.068760) | 2.808820 / 2.077655 (0.731165) | 1.446106 / 1.504120 (-0.058014) | 1.321622 / 1.541195 (-0.219573) | 1.348317 / 1.468490 (-0.120173) | 0.738369 / 4.584777 (-3.846408) | 2.349825 / 3.745712 (-1.395887) | 2.913964 / 5.269862 (-2.355897) | 1.870585 / 4.565676 (-2.695092) | 0.080141 / 0.424275 (-0.344134) | 0.005174 / 0.007607 (-0.002433) | 0.335977 / 0.226044 (0.109933) | 3.356267 / 2.268929 (1.087338) | 1.811149 / 55.444624 (-53.633475) | 1.510685 / 6.876477 (-5.365792) | 1.524960 / 2.142072 (-0.617112) | 0.803900 / 4.805227 (-4.001328) | 
0.138294 / 6.500664 (-6.362370) | 0.042241 / 0.075469 (-0.033229) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.975597 / 1.841788 (-0.866191) | 11.395109 / 8.074308 (3.320801) | 9.837724 / 10.191392 (-0.353668) | 0.141474 / 0.680424 (-0.538950) | 0.015075 / 0.534201 (-0.519126) | 0.304285 / 0.579283 (-0.274998) | 0.267845 / 0.434364 (-0.166519) | 0.342808 / 0.540337 (-0.197529) | 0.434299 / 1.386936 (-0.952637) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.005612 / 0.011353 
(-0.005741) | 0.003808 / 0.011008 (-0.007201) | 0.050533 / 0.038508 (0.012024) | 0.032635 / 0.023109 (0.009526) | 0.265522 / 0.275898 (-0.010376) | 0.289763 / 0.323480 (-0.033716) | 0.004395 / 0.007986 (-0.003590) | 0.002868 / 0.004328 (-0.001460) | 0.048443 / 0.004250 (0.044193) | 0.040047 / 0.037052 (0.002995) | 0.279013 / 0.258489 (0.020524) | 0.314499 / 0.293841 (0.020658) | 0.032321 / 0.128546 (-0.096225) | 0.011902 / 0.075646 (-0.063744) | 0.059827 / 0.419271 (-0.359445) | 0.034388 / 0.043533 (-0.009145) | 0.270660 / 0.255139 (0.015521) | 0.290776 / 0.283200 (0.007576) | 0.017875 / 0.141683 (-0.123808) | 1.188085 / 1.452155 (-0.264070) | 1.221384 / 1.492716 (-0.271332) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.095619 / 0.018006 (0.077613) | 0.305331 / 0.000490 (0.304841) | 0.000217 / 0.000200 (0.000018) | 0.000049 / 0.000054 (-0.000006) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.022481 / 0.037411 (-0.014930) | 0.076957 / 0.014526 (0.062431) | 0.087830 / 0.176557 (-0.088726) | 0.128290 / 0.737135 (-0.608845) | 0.090565 / 0.296338 (-0.205774) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.291861 / 0.215209 (0.076652) | 2.869776 / 2.077655 (0.792121) | 1.575114 / 1.504120 (0.070994) | 1.449873 / 1.541195 (-0.091322) | 1.450333 / 1.468490 (-0.018158) | 0.723319 / 4.584777 (-3.861458) | 0.972603 / 3.745712 (-2.773109) | 2.940909 / 5.269862 (-2.328953) | 1.889664 / 4.565676 (-2.676012) | 0.078654 / 0.424275 (-0.345621) | 0.005197 / 0.007607 (-0.002410) | 0.344380 / 0.226044 (0.118336) | 3.387509 / 2.268929 (1.118580) | 1.981590 / 55.444624 (-53.463034) | 1.643214 / 6.876477 (-5.233263) | 1.640435 / 2.142072 (-0.501638) | 0.802037 / 4.805227 (-4.003191) | 0.133016 / 6.500664 (-6.367648) | 0.040861 / 0.075469 (-0.034608) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.026372 / 1.841788 (-0.815416) | 11.959931 / 8.074308 (3.885623) | 10.122523 / 10.191392 (-0.068869) | 0.144443 / 0.680424 (-0.535981) | 0.015629 / 0.534201 (-0.518572) | 0.304802 / 0.579283 (-0.274481) | 0.120538 / 0.434364 (-0.313826) | 0.343394 / 0.540337 (-0.196943) | 0.437544 / 1.386936 (-0.949392) |\n\n</details>\n</details>\n\n\n"

] | remove more script docs | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7104/reactions"

} | PR_kwDODunzps54dAhE | {

"diff_url": "https://github.com/huggingface/datasets/pull/7104.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7104",

"merged_at": "2024-08-15T10:18:25Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7104.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7104"

} | 2024-08-15T10:13:26Z | https://api.github.com/repos/huggingface/datasets/issues/7104/comments | null | {

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

} | https://api.github.com/repos/huggingface/datasets/issues/7104/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7104/timeline | closed | false | 7,104 | null | 2024-08-15T10:18:25Z | null | true |

2,467,664,581 | https://api.github.com/repos/huggingface/datasets/issues/7103 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7103/events | [] | null | 2024-08-16T09:18:29Z | [] | https://github.com/huggingface/datasets/pull/7103 | MEMBER | null | false | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7103). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update.",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.005255 / 0.011353 (-0.006098) | 0.003344 / 0.011008 (-0.007664) | 0.062062 / 0.038508 (0.023554) | 0.030154 / 0.023109 (0.007045) | 0.233728 / 0.275898 (-0.042170) | 0.258799 / 0.323480 (-0.064681) | 0.004105 / 0.007986 (-0.003880) | 0.002708 / 0.004328 (-0.001621) | 0.048689 / 0.004250 (0.044439) | 0.041864 / 0.037052 (0.004812) | 0.247221 / 0.258489 (-0.011268) | 0.274067 / 0.293841 (-0.019774) | 0.029108 / 0.128546 (-0.099439) | 0.011867 / 0.075646 (-0.063779) | 0.203181 / 0.419271 (-0.216090) | 0.035162 / 0.043533 (-0.008371) | 0.239723 / 0.255139 (-0.015416) | 0.256679 / 0.283200 (-0.026521) | 0.018362 / 0.141683 (-0.123321) | 1.139974 / 1.452155 (-0.312181) | 1.193946 / 1.492716 
(-0.298770) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.135477 / 0.018006 (0.117471) | 0.298500 / 0.000490 (0.298011) | 0.000225 / 0.000200 (0.000025) | 0.000042 / 0.000054 (-0.000012) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.018743 / 0.037411 (-0.018668) | 0.062999 / 0.014526 (0.048474) | 0.073466 / 0.176557 (-0.103090) | 0.119227 / 0.737135 (-0.617908) | 0.074338 / 0.296338 (-0.222000) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.280747 / 0.215209 (0.065538) | 2.750660 / 2.077655 (0.673006) | 1.461004 / 1.504120 (-0.043116) | 1.348439 / 1.541195 (-0.192756) | 1.365209 / 1.468490 (-0.103281) | 0.718416 / 4.584777 (-3.866361) | 2.333568 / 3.745712 (-1.412144) | 2.854639 / 5.269862 (-2.415223) | 1.821144 / 4.565676 (-2.744532) | 0.077234 / 0.424275 (-0.347041) | 0.005111 / 0.007607 (-0.002497) | 0.330749 / 0.226044 (0.104705) | 3.277189 / 2.268929 (1.008260) | 1.825886 / 55.444624 (-53.618739) | 1.515078 / 6.876477 (-5.361399) | 1.527288 / 2.142072 (-0.614785) | 0.786922 / 4.805227 (-4.018305) | 
0.131539 / 6.500664 (-6.369125) | 0.042365 / 0.075469 (-0.033104) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.961809 / 1.841788 (-0.879979) | 11.184540 / 8.074308 (3.110232) | 9.473338 / 10.191392 (-0.718054) | 0.138460 / 0.680424 (-0.541964) | 0.014588 / 0.534201 (-0.519613) | 0.301503 / 0.579283 (-0.277780) | 0.261092 / 0.434364 (-0.173271) | 0.336480 / 0.540337 (-0.203857) | 0.427665 / 1.386936 (-0.959271) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.005517 / 0.011353 
(-0.005836) | 0.003417 / 0.011008 (-0.007591) | 0.049338 / 0.038508 (0.010830) | 0.033411 / 0.023109 (0.010302) | 0.264328 / 0.275898 (-0.011570) | 0.286750 / 0.323480 (-0.036730) | 0.004299 / 0.007986 (-0.003686) | 0.002506 / 0.004328 (-0.001823) | 0.049511 / 0.004250 (0.045260) | 0.041471 / 0.037052 (0.004418) | 0.276732 / 0.258489 (0.018243) | 0.311908 / 0.293841 (0.018067) | 0.031683 / 0.128546 (-0.096863) | 0.011700 / 0.075646 (-0.063946) | 0.060084 / 0.419271 (-0.359188) | 0.037757 / 0.043533 (-0.005776) | 0.265342 / 0.255139 (0.010203) | 0.287782 / 0.283200 (0.004583) | 0.018692 / 0.141683 (-0.122990) | 1.163462 / 1.452155 (-0.288692) | 1.219236 / 1.492716 (-0.273481) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.094102 / 0.018006 (0.076096) | 0.303976 / 0.000490 (0.303487) | 0.000208 / 0.000200 (0.000008) | 0.000042 / 0.000054 (-0.000012) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.023252 / 0.037411 (-0.014160) | 0.076986 / 0.014526 (0.062461) | 0.088831 / 0.176557 (-0.087726) | 0.128661 / 0.737135 (-0.608475) | 0.089082 / 0.296338 (-0.207256) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.297428 / 0.215209 (0.082218) | 2.951568 / 2.077655 (0.873913) | 1.597627 / 1.504120 (0.093508) | 1.466556 / 1.541195 (-0.074639) | 1.455522 / 1.468490 (-0.012968) | 0.723576 / 4.584777 (-3.861201) | 0.951113 / 3.745712 (-2.794599) | 2.889671 / 5.269862 (-2.380190) | 1.877330 / 4.565676 (-2.688347) | 0.079124 / 0.424275 (-0.345151) | 0.005146 / 0.007607 (-0.002461) | 0.344063 / 0.226044 (0.118018) | 3.432190 / 2.268929 (1.163261) | 1.927049 / 55.444624 (-53.517576) | 1.638552 / 6.876477 (-5.237924) | 1.647791 / 2.142072 (-0.494282) | 0.800526 / 4.805227 (-4.004701) | 0.131858 / 6.500664 (-6.368806) | 0.040852 / 0.075469 (-0.034618) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.025536 / 1.841788 (-0.816252) | 11.798302 / 8.074308 (3.723994) | 10.012051 / 10.191392 (-0.179341) | 0.137701 / 0.680424 (-0.542723) | 0.015151 / 0.534201 (-0.519050) | 0.298972 / 0.579283 (-0.280311) | 0.123816 / 0.434364 (-0.310548) | 0.337292 / 0.540337 (-0.203046) | 0.432729 / 1.386936 (-0.954207) |\n\n</details>\n</details>\n\n\n"

] | Fix args of feature docstrings | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7103/reactions"

} | PR_kwDODunzps54clrp | {

"diff_url": "https://github.com/huggingface/datasets/pull/7103.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7103",

"merged_at": "2024-08-15T10:33:30Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7103.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7103"

} | 2024-08-15T08:46:08Z | https://api.github.com/repos/huggingface/datasets/issues/7103/comments | Fix Args section of feature docstrings.

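For reference, the Args entries in question follow the Google-style pattern `name (type):`, with the description on the following, more deeply indented lines. The helper below is a hypothetical sketch; it is not part of `datasets` or its documentation builder. It lists the entries of an `Args:` section that lack the parenthesized type, which is the situation the paragraph below says keeps args out of the rendered docs. It assumes a conventional docstring whose first line is a summary.

```python
import inspect
import re

# An Args entry: optional leading "*" or "**", a name, an optional "(type)" group, then ":".
ENTRY = re.compile(r"^(?P<name>\*{0,2}\w+)\s*(?P<type>\(.*\))?\s*:")


def find_untyped_args(docstring: str) -> list[str]:
    """Return the names of Args entries that have no parenthesized type."""
    lines = inspect.cleandoc(docstring).splitlines()
    untyped = []
    in_args = False
    entry_indent = None
    for line in lines:
        stripped = line.strip()
        if not in_args:
            # Skip everything until the "Args:" header.
            in_args = stripped == "Args:"
            continue
        if not stripped:
            continue
        indent = len(line) - len(line.lstrip())
        if indent == 0:
            break  # A new top-level section (e.g. "Returns:") ends Args.
        if entry_indent is None:
            entry_indent = indent  # The first entry sets the entry indentation.
        if indent != entry_indent:
            continue  # Deeper lines are argument descriptions.
        match = ENTRY.match(stripped)
        if match and match.group("type") is None:
            untyped.append(match.group("name"))
    return untyped
```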
Currently, some args do not appear in the docs because they cannot be parsed properly: their type (between parentheses) is missing. | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | https://api.github.com/repos/huggingface/datasets/issues/7103/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7103/timeline | closed | false | 7,103 | null | 2024-08-15T10:33:30Z | null | true |

2,466,893,106 | https://api.github.com/repos/huggingface/datasets/issues/7102 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7102/events | [] | null | 2024-08-15T16:17:31Z | [] | https://github.com/huggingface/datasets/issues/7102 | NONE | null | null | null | [

"Hi @lajd , I was skeptical about how we are saving the shards each as their own dataset (arrow file) in the script above, and so I updated the script to try out saving the shards in a few different file formats. From the experiments I ran, I saw binary format show significantly the best performance, with arrow and parquet about the same. However, I was unable to reproduce a drastically slower iteration speed after shuffling in any case when using the revised script -- pasting below:\r\n\r\n```python\r\nimport time\r\nfrom datasets import load_dataset, Dataset, IterableDataset\r\nfrom pathlib import Path\r\nimport torch\r\nimport pandas as pd\r\nimport pickle\r\nimport pyarrow as pa\r\nimport pyarrow.parquet as pq\r\n\r\n\r\ndef generate_random_example():\r\n return {\r\n 'inputs': torch.randn(128).tolist(),\r\n 'indices': torch.randint(0, 10000, (2, 20000)).tolist(),\r\n 'values': torch.randn(20000).tolist(),\r\n }\r\n\r\n\r\ndef generate_shard_data(examples_per_shard: int = 512):\r\n return [generate_random_example() for _ in range(examples_per_shard)]\r\n\r\n\r\ndef save_shard_as_arrow(shard_idx, save_dir, examples_per_shard):\r\n # Generate shard data\r\n shard_data = generate_shard_data(examples_per_shard)\r\n\r\n # Convert data to a Hugging Face Dataset\r\n dataset = Dataset.from_dict({\r\n 'inputs': [example['inputs'] for example in shard_data],\r\n 'indices': [example['indices'] for example in shard_data],\r\n 'values': [example['values'] for example in shard_data],\r\n })\r\n\r\n # Define the shard save path\r\n shard_write_path = Path(save_dir) / f\"shard_{shard_idx}\"\r\n\r\n # Save the dataset to disk using the Arrow format\r\n dataset.save_to_disk(str(shard_write_path))\r\n\r\n return str(shard_write_path)\r\n\r\n\r\ndef save_shard_as_parquet(shard_idx, save_dir, examples_per_shard):\r\n # Generate shard data\r\n shard_data = generate_shard_data(examples_per_shard)\r\n\r\n # Convert data to a pandas DataFrame for easy conversion to Parquet\r\n df = 
pd.DataFrame(shard_data)\r\n\r\n # Define the shard save path\r\n shard_write_path = Path(save_dir) / f\"shard_{shard_idx}.parquet\"\r\n\r\n # Convert DataFrame to PyArrow Table for Parquet saving\r\n table = pa.Table.from_pandas(df)\r\n\r\n # Save the table as a Parquet file\r\n pq.write_table(table, shard_write_path)\r\n\r\n return str(shard_write_path)\r\n\r\n\r\ndef save_shard_as_binary(shard_idx, save_dir, examples_per_shard):\r\n # Generate shard data\r\n shard_data = generate_shard_data(examples_per_shard)\r\n\r\n # Define the shard save path\r\n shard_write_path = Path(save_dir) / f\"shard_{shard_idx}.bin\"\r\n\r\n # Save each example as a serialized binary object using pickle\r\n with open(shard_write_path, 'wb') as f:\r\n for example in shard_data:\r\n f.write(pickle.dumps(example))\r\n\r\n return str(shard_write_path)\r\n\r\n\r\ndef generate_split_shards(save_dir, filetype=\"parquet\", num_shards: int = 16, examples_per_shard: int = 512):\r\n shard_filepaths = []\r\n for shard_idx in range(num_shards):\r\n if filetype == \"parquet\":\r\n shard_filepaths.append(save_shard_as_parquet(shard_idx, save_dir, examples_per_shard))\r\n elif filetype == \"binary\":\r\n shard_filepaths.append(save_shard_as_binary(shard_idx, save_dir, examples_per_shard))\r\n elif filetype == \"arrow\":\r\n shard_filepaths.append(save_shard_as_arrow(shard_idx, save_dir, examples_per_shard))\r\n else:\r\n raise ValueError(f\"Unsupported filetype: {filetype}. 
Choose either 'parquet' or 'binary'.\")\r\n return shard_filepaths\r\n\r\n\r\ndef _binary_dataset_generator(files):\r\n for filepath in files:\r\n with open(filepath, 'rb') as f:\r\n while True:\r\n try:\r\n example = pickle.load(f)\r\n yield example\r\n except EOFError:\r\n break\r\n\r\n\r\ndef load_binary_dataset(shard_filepaths):\r\n return IterableDataset.from_generator(\r\n _binary_dataset_generator, gen_kwargs={\"files\": shard_filepaths},\r\n )\r\n\r\n\r\ndef load_parquet_dataset(shard_filepaths):\r\n # Load the dataset as an IterableDataset\r\n return load_dataset(\r\n \"parquet\",\r\n data_files={split: shard_filepaths},\r\n streaming=True,\r\n split=split,\r\n )\r\n\r\n\r\ndef load_arrow_dataset(shard_filepaths):\r\n # Load the dataset as an IterableDataset\r\n shard_filepaths = [f + \"/data-00000-of-00001.arrow\" for f in shard_filepaths]\r\n return load_dataset(\r\n \"arrow\",\r\n data_files={split: shard_filepaths},\r\n streaming=True,\r\n split=split,\r\n )\r\n\r\n\r\ndef load_dataset_wrapper(filetype: str, shard_filepaths: list[str]):\r\n if filetype == \"parquet\":\r\n return load_parquet_dataset(shard_filepaths)\r\n if filetype == \"binary\":\r\n return load_binary_dataset(shard_filepaths)\r\n if filetype == \"arrow\":\r\n return load_arrow_dataset(shard_filepaths)\r\n else:\r\n raise ValueError(\"Unsupported filetype\")\r\n\r\n\r\n# Example usage:\r\nsplit = \"train\"\r\nsplit_save_dir = \"/tmp/random_split\"\r\n\r\nfiletype = \"binary\" # or \"parquet\", or \"arrow\"\r\nnum_shards = 16\r\n\r\nshard_filepaths = generate_split_shards(split_save_dir, filetype=filetype, num_shards=num_shards)\r\ndataset = load_dataset_wrapper(filetype=filetype, shard_filepaths=shard_filepaths)\r\n\r\ndataset = dataset.shuffle(buffer_size=100, seed=42)\r\n\r\nstart_time = time.time()\r\nfor count, item in enumerate(dataset):\r\n if count > 0 and count % 100 == 0:\r\n elapsed_time = time.time() - start_time\r\n iterations_per_second = count / elapsed_time\r\n 
print(f\"Processed {count} items at an average of {iterations_per_second:.2f} iterations/second\")\r\n```",

"update: I was able to reproduce the issue you described, but ONLY if I do \r\n\r\n```\r\nrandom_dataset = random_dataset.with_format(\"numpy\")\r\n```\r\n\r\nIf I do this, I see numbers similar to the ones you reported. If I do not use the numpy format, parquet and arrow run at about 17 iterations per second regardless of whether or not we shuffle. Binary (again, I haven't tried the numpy format with it yet) still shows the fastest average speed, about 850 it/sec, with and without shuffling.\r\n\r\nI suspect that arrow and numpy are optimized for sequential reads and that shuffling causes issues... hmm"

] | Slow iteration speeds when using IterableDataset.shuffle with load_dataset(data_files=..., streaming=True) | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7102/reactions"

} | I_kwDODunzps6TCc0y | null | 2024-08-14T21:44:44Z | https://api.github.com/repos/huggingface/datasets/issues/7102/comments | ### Describe the bug

When I load a dataset from a number of arrow files, as in:

```python
random_dataset = load_dataset(
    "arrow",
    data_files={split: shard_filepaths},
    streaming=True,
    split=split,
)
```

I'm able to get fast iteration speeds when iterating over the dataset without shuffling.

When I shuffle the dataset, the iteration speed is reduced by ~1000x.

It's very possible the way I'm loading dataset shards is not appropriate; if so please advise!

Thanks for the help

### Steps to reproduce the bug

Here's the full code to reproduce the issue:

- Generate a random dataset

- Create shards of data independently using `Dataset.save_to_disk()`

- The code below generates 16 shards (arrow files) of 512 examples each

```python
import time
from pathlib import Path
from multiprocessing import Pool, cpu_count

import torch
from datasets import Dataset, load_dataset

split = "train"
split_save_dir = "/tmp/random_split"


def generate_random_example():
    return {
        'inputs': torch.randn(128).tolist(),
        'indices': torch.randint(0, 10000, (2, 20000)).tolist(),
        'values': torch.randn(20000).tolist(),
    }


def generate_shard_dataset(examples_per_shard: int = 512):
    dataset_dict = {
        'inputs': [],
        'indices': [],
        'values': []
    }
    for _ in range(examples_per_shard):
        example = generate_random_example()
        dataset_dict['inputs'].append(example['inputs'])
        dataset_dict['indices'].append(example['indices'])
        dataset_dict['values'].append(example['values'])
    return Dataset.from_dict(dataset_dict)


def save_shard(shard_idx, save_dir, examples_per_shard):
    shard_dataset = generate_shard_dataset(examples_per_shard)
    shard_write_path = Path(save_dir) / f"shard_{shard_idx}"
    shard_dataset.save_to_disk(shard_write_path)
    return str(Path(shard_write_path) / "data-00000-of-00001.arrow")


def generate_split_shards(save_dir, num_shards: int = 16, examples_per_shard: int = 512):
    with Pool(cpu_count()) as pool:
        args = [(m, save_dir, examples_per_shard) for m in range(num_shards)]
        shard_filepaths = pool.starmap(save_shard, args)
    return shard_filepaths


shard_filepaths = generate_split_shards(split_save_dir)
```

Load the dataset as IterableDataset:

```python
random_dataset = load_dataset(
    "arrow",
    data_files={split: shard_filepaths},
    streaming=True,
    split=split,
)
random_dataset = random_dataset.with_format("numpy")
```

Observe the iterations/second when iterating over the dataset directly and when shuffling it before iterating.

Without shuffling, this gives ~1500 iterations/second:

```python
start_time = time.time()
for count, item in enumerate(random_dataset):
    if count > 0 and count % 100 == 0:
        elapsed_time = time.time() - start_time
        iterations_per_second = count / elapsed_time
        print(f"Processed {count} items at an average of {iterations_per_second:.2f} iterations/second")
```

```
Processed 100 items at an average of 705.74 iterations/second
Processed 200 items at an average of 1169.68 iterations/second
Processed 300 items at an average of 1497.97 iterations/second
Processed 400 items at an average of 1739.62 iterations/second
Processed 500 items at an average of 1931.11 iterations/second
```

When shuffling, this gives ~3 iterations/second:

```python
random_dataset = random_dataset.shuffle(buffer_size=100, seed=42)

start_time = time.time()
for count, item in enumerate(random_dataset):
    if count > 0 and count % 100 == 0:
        elapsed_time = time.time() - start_time
        iterations_per_second = count / elapsed_time
        print(f"Processed {count} items at an average of {iterations_per_second:.2f} iterations/second")
```

```
Processed 100 items at an average of 3.75 iterations/second
Processed 200 items at an average of 3.93 iterations/second
```

### Expected behavior

Shuffling should barely affect the iterations per second, especially with a small buffer size.
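For context, the `datasets` documentation describes `IterableDataset.shuffle` as an approximate shuffle. It fills a buffer of `buffer_size` examples and yields randomly chosen examples from it, refilling each freed slot with the next incoming example. The library also shuffles the order of the shards, which this sketch omits. The stdlib code below is a simplified illustration of the buffer idea, not the library's implementation; `buffered_shuffle` is a hypothetical name. It does constant work per example, which is why a small buffer is expected to cost little.

```python
import random
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def buffered_shuffle(examples: Iterable[T], buffer_size: int, seed: int) -> Iterator[T]:
    """Approximate shuffle: keep a fixed-size buffer and emit random members of it."""
    if buffer_size < 1:
        raise ValueError("buffer_size must be at least 1")
    rng = random.Random(seed)
    buffer: list[T] = []
    for example in examples:
        if len(buffer) < buffer_size:
            # Fill the buffer before emitting anything.
            buffer.append(example)
            continue
        # Buffer is full: emit a random slot, then put the new example in that slot.
        i = rng.randrange(buffer_size)
        yield buffer[i]
        buffer[i] = example
    # Input exhausted: drain the remaining buffer in random order.
    rng.shuffle(buffer)
    yield from buffer
```

Each input element can be emitted at most `buffer_size - 1` positions earlier than in the input, though it may be delayed arbitrarily. With `buffer_size=1`, the input order is preserved.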

### Environment info

Datasets version: 2.21.0

Python 3.10

Ubuntu 22.04 | {

"avatar_url": "https://avatars.githubusercontent.com/u/13192126?v=4",

"events_url": "https://api.github.com/users/lajd/events{/privacy}",

"followers_url": "https://api.github.com/users/lajd/followers",

"following_url": "https://api.github.com/users/lajd/following{/other_user}",

"gists_url": "https://api.github.com/users/lajd/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lajd",

"id": 13192126,

"login": "lajd",

"node_id": "MDQ6VXNlcjEzMTkyMTI2",

"organizations_url": "https://api.github.com/users/lajd/orgs",

"received_events_url": "https://api.github.com/users/lajd/received_events",

"repos_url": "https://api.github.com/users/lajd/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lajd/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lajd/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lajd"

} | https://api.github.com/repos/huggingface/datasets/issues/7102/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7102/timeline | open | false | 7,102 | null | null | null | false |

2,466,510,783 | https://api.github.com/repos/huggingface/datasets/issues/7101 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7101/events | [] | null | 2024-08-18T10:33:38Z | [] | https://github.com/huggingface/datasets/issues/7101 | NONE | null | null | null | [

"Having looked into this further, it seems the core of the issue is having two different formats in the same repo.\r\n\r\nWhen the `parquet` config is first, the `WebDataset`s are loaded as `parquet`; if the `WebDataset` configs are first, the `parquet` is loaded as `WebDataset`.\r\n\r\nA workaround in my case would be to just turn the `parquet` into a `WebDataset`, although I'd still need the Dataset Viewer config limit to be increased. In other cases, using the same format may not be possible.\r\n\r\nRelevant code: \r\n- [HubDatasetModuleFactoryWithoutScript](https://github.com/huggingface/datasets/blob/5f42139a2c5583a55d34a2f60d537f5fba285c28/src/datasets/load.py#L964)\r\n- [get_data_patterns](https://github.com/huggingface/datasets/blob/5f42139a2c5583a55d34a2f60d537f5fba285c28/src/datasets/data_files.py#L415)"

] | `load_dataset` from Hub with `name` to specify `config` using incorrect builder type when multiple data formats are present | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7101/reactions"

} | I_kwDODunzps6TA_e_ | null | 2024-08-14T18:12:25Z | https://api.github.com/repos/huggingface/datasets/issues/7101/comments | Following the [documentation](https://huggingface.co/docs/datasets/repository_structure#define-your-splits-and-subsets-in-yaml), I defined different configs for [`Dataception`](https://huggingface.co/datasets/bigdata-pw/Dataception), a dataset of datasets:

```yaml
configs:
- config_name: dataception
  data_files:
  - path: dataception.parquet
    split: train
  default: true
- config_name: dataset_5423
  data_files:
  - path: datasets/5423.tar
    split: train
...
- config_name: dataset_721736
  data_files:
  - path: datasets/721736.tar
    split: train
```

The intent was for metadata to be browsable via Dataset Viewer, in addition to each individual dataset, and to allow datasets to be loaded by specifying the config/name to `load_dataset`.

While testing `load_dataset` I encountered the following error:

```python
>>> dataset = load_dataset("bigdata-pw/Dataception", "dataset_7691")
Downloading readme: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 467k/467k [00:00<00:00, 1.99MB/s]
Downloading data: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 71.0M/71.0M [00:02<00:00, 26.8MB/s]
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "datasets\load.py", line 2145, in load_dataset
    builder_instance.download_and_prepare(
  File "datasets\builder.py", line 1027, in download_and_prepare
    self._download_and_prepare(
  File "datasets\builder.py", line 1100, in _download_and_prepare
    split_generators = self._split_generators(dl_manager, **split_generators_kwargs)
                       ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
  File "datasets\packaged_modules\parquet\parquet.py", line 58, in _split_generators
    self.info.features = datasets.Features.from_arrow_schema(pq.read_schema(f))
                                                             ^^^^^^^^^^^^^^^^^
  File "pyarrow\parquet\core.py", line 2325, in read_schema
    file = ParquetFile(
           ^^^^^^^^^^^^
  File "pyarrow\parquet\core.py", line 318, in __init__
    self.reader.open(
  File "pyarrow\_parquet.pyx", line 1470, in pyarrow._parquet.ParquetReader.open
  File "pyarrow\error.pxi", line 91, in pyarrow.lib.check_status
pyarrow.lib.ArrowInvalid: Parquet magic bytes not found in footer. Either the file is corrupted or this is not a parquet file.
```

The correct file is downloaded, but the wrong builder type (`parquet`) is detected because of other content in the repository. It appears that the config needs to be taken into account when choosing the builder.
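To illustrate what taking the config into account could mean, here is a hypothetical sketch. It is not the `datasets` implementation, and the extension-to-builder table is an assumption limited to the two formats in this repository. It chooses the builder from the data files of the requested config only, so a `.tar` config resolves to WebDataset even when the default config is Parquet.

```python
from collections import Counter
from pathlib import PurePosixPath

# Assumed, deliberately partial mapping from file extension to builder name.
EXTENSION_TO_BUILDER = {
    ".parquet": "parquet",
    ".tar": "webdataset",
}


def infer_builder_for_config(config: dict) -> str:
    """Choose a builder from the data files of this config only."""
    builders = Counter()
    for data_file in config["data_files"]:
        suffix = PurePosixPath(data_file["path"]).suffix
        if suffix in EXTENSION_TO_BUILDER:
            builders[EXTENSION_TO_BUILDER[suffix]] += 1
    if not builders:
        raise ValueError(f"No known data format in config {config['config_name']!r}")
    # Majority vote in case a single config mixes several formats.
    return builders.most_common(1)[0][0]


configs = [
    {"config_name": "dataception", "data_files": [{"path": "dataception.parquet", "split": "train"}]},
    {"config_name": "dataset_5423", "data_files": [{"path": "datasets/5423.tar", "split": "train"}]},
]
for config in configs:
    print(config["config_name"], "->", infer_builder_for_config(config))
```

With the two configs above, this prints `parquet` for `dataception` and `webdataset` for `dataset_5423`.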

Note that I have removed the additional configs from the repository because of this issue. Besides, the Dataset Viewer has a limit of 3000 configs, so it doesn't work as I intended anyway. I'll add them back if that helps with testing.

| {

"avatar_url": "https://avatars.githubusercontent.com/u/106811348?v=4",

"events_url": "https://api.github.com/users/hlky/events{/privacy}",

"followers_url": "https://api.github.com/users/hlky/followers",

"following_url": "https://api.github.com/users/hlky/following{/other_user}",

"gists_url": "https://api.github.com/users/hlky/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/hlky",

"id": 106811348,

"login": "hlky",

"node_id": "U_kgDOBl3P1A",

"organizations_url": "https://api.github.com/users/hlky/orgs",

"received_events_url": "https://api.github.com/users/hlky/received_events",

"repos_url": "https://api.github.com/users/hlky/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/hlky/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/hlky/subscriptions",

"type": "User",

"url": "https://api.github.com/users/hlky"

} | https://api.github.com/repos/huggingface/datasets/issues/7101/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7101/timeline | open | false | 7,101 | null | null | null | false |

2,465,529,414 | https://api.github.com/repos/huggingface/datasets/issues/7100 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7100/events | [] | null | 2024-08-14T11:01:51Z | [] | https://github.com/huggingface/datasets/issues/7100 | NONE | null | null | null | [] | IterableDataset: cannot resolve features from list of numpy arrays | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7100/reactions"

} | I_kwDODunzps6S9P5G | null | 2024-08-14T11:01:51Z | https://api.github.com/repos/huggingface/datasets/issues/7100/comments | ### Describe the bug

When resolving the features of an `IterableDataset`, I get a `pyarrow.lib.ArrowInvalid: Can only convert 1-dimensional array values` error:

```
Traceback (most recent call last):
  File "test.py", line 6
    iter_ds = iter_ds._resolve_features()
  File "lib/python3.10/site-packages/datasets/iterable_dataset.py", line 2876, in _resolve_features
    features = _infer_features_from_batch(self.with_format(None)._head())
  File "lib/python3.10/site-packages/datasets/iterable_dataset.py", line 63, in _infer_features_from_batch
    pa_table = pa.Table.from_pydict(batch)
  File "pyarrow/table.pxi", line 1813, in pyarrow.lib._Tabular.from_pydict
  File "pyarrow/table.pxi", line 5339, in pyarrow.lib._from_pydict
  File "pyarrow/array.pxi", line 374, in pyarrow.lib.asarray
  File "pyarrow/array.pxi", line 344, in pyarrow.lib.array
  File "pyarrow/array.pxi", line 42, in pyarrow.lib._sequence_to_array
  File "pyarrow/error.pxi", line 154, in pyarrow.lib.pyarrow_internal_check_status
  File "pyarrow/error.pxi", line 91, in pyarrow.lib.check_status
pyarrow.lib.ArrowInvalid: Can only convert 1-dimensional array values
```

### Steps to reproduce the bug

```python
from datasets import Dataset
import numpy as np

# Map each example to a list containing a 2-D NumPy array
iter_ds = Dataset.from_dict({'a': [[[1, 2, 3], [1, 2, 3]]]}).to_iterable_dataset().map(lambda x: {'a': [np.array(x['a'])]})
iter_ds = iter_ds._resolve_features()  # errors here
```

### Expected behavior

The features can be resolved successfully.
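Until feature inference accepts multi-dimensional arrays, one possible workaround is to return nested Python lists instead of NumPy arrays from `map`. This is an assumption, not a fix confirmed by the maintainers. pyarrow builds nested list columns from nested Python lists; the original `Dataset.from_dict({'a': [[[1, 2, 3], [1, 2, 3]]]})` call does exactly that. The traceback above shows it rejecting a list of 2-D arrays instead. A minimal conversion helper (the name `to_nested_lists` is hypothetical):

```python
import numpy as np


def to_nested_lists(value):
    """Recursively replace NumPy arrays with (nested) Python lists."""
    if isinstance(value, np.ndarray):
        return value.tolist()
    if isinstance(value, dict):
        return {key: to_nested_lists(item) for key, item in value.items()}
    if isinstance(value, (list, tuple)):
        return [to_nested_lists(item) for item in value]
    return value
```

In the reproduction above, this would mean mapping with `lambda x: to_nested_lists({'a': [np.array(x['a'])]})`. Check that the resulting nested-sequence features suit your use case.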

### Environment info

- `datasets` version: 2.21.0

- Platform: Linux-5.15.0-94-generic-x86_64-with-glibc2.35

- Python version: 3.10.13

- `huggingface_hub` version: 0.23.4

- PyArrow version: 15.0.0

- Pandas version: 2.2.0

- `fsspec` version: 2023.10.0 | {

"avatar_url": "https://avatars.githubusercontent.com/u/18899212?v=4",

"events_url": "https://api.github.com/users/VeryLazyBoy/events{/privacy}",

"followers_url": "https://api.github.com/users/VeryLazyBoy/followers",

"following_url": "https://api.github.com/users/VeryLazyBoy/following{/other_user}",

"gists_url": "https://api.github.com/users/VeryLazyBoy/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/VeryLazyBoy",

"id": 18899212,

"login": "VeryLazyBoy",

"node_id": "MDQ6VXNlcjE4ODk5MjEy",

"organizations_url": "https://api.github.com/users/VeryLazyBoy/orgs",

"received_events_url": "https://api.github.com/users/VeryLazyBoy/received_events",

"repos_url": "https://api.github.com/users/VeryLazyBoy/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/VeryLazyBoy/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/VeryLazyBoy/subscriptions",

"type": "User",

"url": "https://api.github.com/users/VeryLazyBoy"

} | https://api.github.com/repos/huggingface/datasets/issues/7100/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7100/timeline | open | false | 7,100 | null | null | null | false |

2,465,221,827 | https://api.github.com/repos/huggingface/datasets/issues/7099 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7099/events | [] | null | 2024-08-14T08:45:17Z | [] | https://github.com/huggingface/datasets/pull/7099 | MEMBER | null | false | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7099). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update.",

"<details>\n<summary>Show benchmarks</summary>\n\nPyArrow==8.0.0\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.005649 / 0.011353 (-0.005704) | 0.003918 / 0.011008 (-0.007091) | 0.064333 / 0.038508 (0.025825) | 0.031909 / 0.023109 (0.008800) | 0.249020 / 0.275898 (-0.026878) | 0.273563 / 0.323480 (-0.049917) | 0.004184 / 0.007986 (-0.003802) | 0.002809 / 0.004328 (-0.001519) | 0.049066 / 0.004250 (0.044816) | 0.043324 / 0.037052 (0.006272) | 0.257889 / 0.258489 (-0.000600) | 0.285410 / 0.293841 (-0.008431) | 0.030681 / 0.128546 (-0.097865) | 0.012389 / 0.075646 (-0.063258) | 0.206172 / 0.419271 (-0.213100) | 0.036500 / 0.043533 (-0.007032) | 0.253674 / 0.255139 (-0.001465) | 0.272086 / 0.283200 (-0.011114) | 0.019558 / 0.141683 (-0.122125) | 1.149501 / 1.452155 (-0.302653) | 1.198036 / 1.492716 
(-0.294680) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.139977 / 0.018006 (0.121971) | 0.301149 / 0.000490 (0.300659) | 0.000253 / 0.000200 (0.000053) | 0.000049 / 0.000054 (-0.000005) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.019137 / 0.037411 (-0.018274) | 0.062616 / 0.014526 (0.048090) | 0.075965 / 0.176557 (-0.100591) | 0.120976 / 0.737135 (-0.616159) | 0.076384 / 0.296338 (-0.219954) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.283801 / 0.215209 (0.068592) | 2.794074 / 2.077655 (0.716419) | 1.475633 / 1.504120 (-0.028487) | 1.336270 / 1.541195 (-0.204925) | 1.376159 / 1.468490 (-0.092331) | 0.718768 / 4.584777 (-3.866009) | 2.375970 / 3.745712 (-1.369742) | 2.969121 / 5.269862 (-2.300741) | 1.900236 / 4.565676 (-2.665440) | 0.082463 / 0.424275 (-0.341812) | 0.005159 / 0.007607 (-0.002448) | 0.329057 / 0.226044 (0.103012) | 3.250535 / 2.268929 (0.981607) | 1.846415 / 55.444624 (-53.598210) | 1.496622 / 6.876477 (-5.379855) | 1.538125 / 2.142072 (-0.603947) | 0.806127 / 4.805227 (-3.999101) | 
0.135272 / 6.500664 (-6.365392) | 0.042668 / 0.075469 (-0.032801) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.983035 / 1.841788 (-0.858753) | 11.725835 / 8.074308 (3.651527) | 9.962818 / 10.191392 (-0.228574) | 0.131928 / 0.680424 (-0.548496) | 0.015784 / 0.534201 (-0.518417) | 0.301640 / 0.579283 (-0.277643) | 0.266251 / 0.434364 (-0.168113) | 0.339723 / 0.540337 (-0.200614) | 0.443384 / 1.386936 (-0.943552) |\n\n</details>\nPyArrow==latest\n\n<details>\n<summary>Show updated benchmarks!</summary>\n\n### Benchmark: benchmark_array_xd.json\n\n| metric | read_batch_formatted_as_numpy after write_array2d | read_batch_formatted_as_numpy after write_flattened_sequence | read_batch_formatted_as_numpy after write_nested_sequence | read_batch_unformated after write_array2d | read_batch_unformated after write_flattened_sequence | read_batch_unformated after write_nested_sequence | read_col_formatted_as_numpy after write_array2d | read_col_formatted_as_numpy after write_flattened_sequence | read_col_formatted_as_numpy after write_nested_sequence | read_col_unformated after write_array2d | read_col_unformated after write_flattened_sequence | read_col_unformated after write_nested_sequence | read_formatted_as_numpy after write_array2d | read_formatted_as_numpy after write_flattened_sequence | read_formatted_as_numpy after write_nested_sequence | read_unformated after write_array2d | read_unformated after write_flattened_sequence | read_unformated after write_nested_sequence | write_array2d | write_flattened_sequence | write_nested_sequence |\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.006301 / 0.011353 
(-0.005052) | 0.004346 / 0.011008 (-0.006662) | 0.051406 / 0.038508 (0.012898) | 0.032263 / 0.023109 (0.009154) | 0.273715 / 0.275898 (-0.002183) | 0.300982 / 0.323480 (-0.022498) | 0.004533 / 0.007986 (-0.003452) | 0.002911 / 0.004328 (-0.001418) | 0.050464 / 0.004250 (0.046214) | 0.041131 / 0.037052 (0.004078) | 0.289958 / 0.258489 (0.031469) | 0.328632 / 0.293841 (0.034791) | 0.033545 / 0.128546 (-0.095001) | 0.013145 / 0.075646 (-0.062501) | 0.062241 / 0.419271 (-0.357031) | 0.035095 / 0.043533 (-0.008438) | 0.273303 / 0.255139 (0.018164) | 0.293652 / 0.283200 (0.010452) | 0.019980 / 0.141683 (-0.121703) | 1.155432 / 1.452155 (-0.296722) | 1.211409 / 1.492716 (-0.281307) |\n\n### Benchmark: benchmark_getitem\\_100B.json\n\n| metric | get_batch_of\\_1024\\_random_rows | get_batch_of\\_1024\\_rows | get_first_row | get_last_row |\n|--------|---|---|---|---|\n| new / old (diff) | 0.094885 / 0.018006 (0.076879) | 0.307423 / 0.000490 (0.306933) | 0.000254 / 0.000200 (0.000054) | 0.000068 / 0.000054 (0.000013) |\n\n### Benchmark: benchmark_indices_mapping.json\n\n| metric | select | shard | shuffle | sort | train_test_split |\n|--------|---|---|---|---|---|\n| new / old (diff) | 0.023462 / 0.037411 (-0.013949) | 0.081980 / 0.014526 (0.067454) | 0.089890 / 0.176557 (-0.086666) | 0.131058 / 0.737135 (-0.606078) | 0.091873 / 0.296338 (-0.204465) |\n\n### Benchmark: benchmark_iterating.json\n\n| metric | read 5000 | read 50000 | read_batch 50000 10 | read_batch 50000 100 | read_batch 50000 1000 | read_formatted numpy 5000 | read_formatted pandas 5000 | read_formatted tensorflow 5000 | read_formatted torch 5000 | read_formatted_batch numpy 5000 10 | read_formatted_batch numpy 5000 1000 | shuffled read 5000 | shuffled read 50000 | shuffled read_batch 50000 10 | shuffled read_batch 50000 100 | shuffled read_batch 50000 1000 | shuffled read_formatted numpy 5000 | shuffled read_formatted_batch numpy 5000 10 | shuffled read_formatted_batch numpy 5000 1000 
|\n|--------|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 0.298522 / 0.215209 (0.083313) | 2.981771 / 2.077655 (0.904116) | 1.632515 / 1.504120 (0.128395) | 1.502885 / 1.541195 (-0.038310) | 1.496868 / 1.468490 (0.028377) | 0.750145 / 4.584777 (-3.834632) | 0.988853 / 3.745712 (-2.756859) | 3.029162 / 5.269862 (-2.240700) | 1.952304 / 4.565676 (-2.613373) | 0.082418 / 0.424275 (-0.341857) | 0.005724 / 0.007607 (-0.001883) | 0.356914 / 0.226044 (0.130870) | 3.523804 / 2.268929 (1.254875) | 1.983254 / 55.444624 (-53.461370) | 1.673135 / 6.876477 (-5.203342) | 1.716639 / 2.142072 (-0.425433) | 0.821568 / 4.805227 (-3.983659) | 0.136113 / 6.500664 (-6.364551) | 0.041593 / 0.075469 (-0.033876) |\n\n### Benchmark: benchmark_map_filter.json\n\n| metric | filter | map fast-tokenizer batched | map identity | map identity batched | map no-op batched | map no-op batched numpy | map no-op batched pandas | map no-op batched pytorch | map no-op batched tensorflow |\n|--------|---|---|---|---|---|---|---|---|---|\n| new / old (diff) | 1.044670 / 1.841788 (-0.797118) | 12.739375 / 8.074308 (4.665066) | 10.263619 / 10.191392 (0.072227) | 0.132811 / 0.680424 (-0.547613) | 0.015491 / 0.534201 (-0.518710) | 0.305545 / 0.579283 (-0.273738) | 0.129226 / 0.434364 (-0.305138) | 0.345532 / 0.540337 (-0.194805) | 0.460406 / 1.386936 (-0.926530) |\n\n</details>\n</details>\n\n\n"

] | Set dev version | {

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/7099/reactions"

} | PR_kwDODunzps54U7s4 | {

"diff_url": "https://github.com/huggingface/datasets/pull/7099.diff",

"html_url": "https://github.com/huggingface/datasets/pull/7099",

"merged_at": "2024-08-14T08:39:25Z",

"patch_url": "https://github.com/huggingface/datasets/pull/7099.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7099"

} | 2024-08-14T08:31:17Z | https://api.github.com/repos/huggingface/datasets/issues/7099/comments | null | {

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/albertvillanova",

"id": 8515462,

"login": "albertvillanova",

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"type": "User",

"url": "https://api.github.com/users/albertvillanova"

} | https://api.github.com/repos/huggingface/datasets/issues/7099/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7099/timeline | closed | false | 7,099 | null | 2024-08-14T08:39:25Z | null | true |

2,465,016,562 | https://api.github.com/repos/huggingface/datasets/issues/7098 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7098/events | [] | null | 2024-08-14T06:41:07Z | [] | https://github.com/huggingface/datasets/pull/7098 | MEMBER | null | false | null | [

"The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/datasets/pr_7098). All of your documentation changes will be reflected on that endpoint. The docs are available until 30 days after the last update."

] | Release: 2.21.0 | {