anchor

stringlengths 58

24.4k

| positive

stringlengths 9

13.4k

| negative

stringlengths 166

14k

| anchor_status

stringclasses 3

values |

|---|---|---|---|

## Overview

We made a gorgeous website to plan flights with Jet Blue's data sets. Come check us out! | ## Inspiration

We were inspired by the numerous Facebook posts, Slack messages, WeChat messages, emails, and even Google Sheets that students at Stanford create in order to coordinate Ubers/Lyfts to the airport as holiday breaks approach. This was mainly for two reasons, one being the safety of sharing a ride with other trusted Stanford students (often at late/early hours), and the other being cost reduction. We quickly realized that this idea of coordinating rides could also be used not just for ride sharing to the airport, but simply transportation to anywhere!

## What it does

Students can access our website with their .edu accounts and add "trips" that they would like to be matched with other users for. Our site will create these pairings using a matching algorithm and automatically connect students with their matches through email and a live chatroom in the site.

## How we built it

We utilized Wix Code to build the site and took advantage of many features including Wix Users, Members, Forms, Databases, etc. We also integrated SendGrid API for automatic email notifications for matches.

## Challenges we ran into

## Accomplishments that we're proud of

Most of us are new to Wix Code, JavaScript, and web development, and we are proud of ourselves for being able to build this project from scratch in a short amount of time.

## What we learned

## What's next for Runway | ## Inspiration

Have you wondered where to travel or how to plan your trip more interesting?

Wanna make trips more adventerous ?

## What it does

Xplore is an **AI based-travel application** that allows you to experience destinations in a whole new way. It keeps your adrenaline pumping by keeping your vacation destinations undisclosed.

## How we built it

* Xplore is completely functional web application built with Html, Css, Bootstrap, Javscript and Sqlite.

* Multiple Google Cloud Api's such as Geolocation API, Maps Javascript API, Directions API were used to achieve our map functionalities.

* Web3.storage was also used for data storage service and to retrieves data on IPFS and Filecoin.

## Challenges we ran into

While integrating multiple cloud API's and API token from Web3.Strorage with our project, we discovered that it was a little complex.

## What's next for Xplore

* Mobile Application for easier access.

* Multiple language Support

* Seasonal travel suggestions. | partial |

## Inspiration

Everyone gets tired waiting for their large downloads to complete. BitTorrent is awesome, but you may not have a bunch of peers ready to seed it. Fastify, a download accelerator as a service, solves both these problems and regularly enables 4x download speeds.

## What it does

The service accepts a URL and spits out a `.torrent` file. This `.torrent` file allows you to tap into Fastify's speedy seed servers for your download.

We even cache some downloads so popular downloads will be able to be pulled from Fastify even speedier!

Without any cache hits, we saw the following improvements in download speeds with our test files:

```

| | 512Mb | 1Gb | 2Gb | 5Gb |

|-------------------|----------|--------|---------|---------|

| Regular Download | 3 mins | 7 mins | 13 mins | 30 mins |

| Fastify | 1.5 mins | 3 mins | 5 mins | 9 mins |

|-------------------|----------|--------|---------|---------|

| Effective Speedup | 2x | 2.33x | 2.6x | 3.3x |

```

*test was performed with slices of the ubuntu 16.04 iso file, on the eduroam network*

## How we built it

Created an AWS cluster and began writing Go code to accept requests and the front-end to send them. Over time we added more workers to the AWS cluster and improved the front-end. Also, we generously received some well-needed Vitamin Water.

## Challenges we ran into

The BitTorrent protocol and architecture was more complicated for seeding than we thought. We were able to create `.torrent` files that enabled downloads on some BitTorrent clients but not others.

Also, our "buddy" (*\*cough\** James *\*cough\**) ditched our team, so we were down to only 2 people off the bat.

## Accomplishments that we're proud of

We're able to accelerate large downloads by 2-5 times as fast as the regular download. That's only with a cluster of 4 computers.

## What we learned

Bittorrent is tricky. James can't be trusted.

## What's next for Fastify

More servers on the cluster. Demo soon too. | ## Inspiration

You use Apple Music. Your friends all use Spotify. But you're all stuck in a car together on the way to Tahoe and have the perfect song to add to the road trip playlist. With TrainTrax, you can all add songs to the same playlist without passing the streaming device around or hassling with aux cords.

Have you ever been out with friends on a road trip or at a party and wished there was a way to more seamlessly share music? TrainTrax is a music streaming middleware that lets cross platform users share music without pulling out the aux cord.

## How it Works

The app authenticates a “host” user sign through their Apple Music or Spotify Premium accounts and let's them create a party where they can invite friends to upload music to a shared playlist. Friends with or without those streaming service accounts can port through the host account to queue up their favorite songs. Hear a song you like? TrainTrax uses Button to deep links songs directly to your iTunes account, so that amazing song you heard is just a click away from being yours.

## How We Built It

The application is built with Swift 3 and Node.js/Express. A RESTful API let’s users create parties, invite friends, and add songs to a queue. The app integrates with Button to deep link users to songs on iTunes, letting them purchase songs directly through the application.

## Challenges We Ran Into

• The application depended a lot on third party tools, which did not always have great documentation or support.

• This was the first hackathon for three of our four members, so a lot of the experience came with a learning curve. In the spirit of collaboration, our team approached this as a learning opportunity, and each member worked to develop a new skill to support the building of the application. The end result was an experience focused more on learning and less on optimization.

• Rain.

## Accomplishments that we're proud of

• SDK Integrations: Successful integration with Apple Music and Spotify SDKs!

• Button: Deep linking with Button

• UX: There are some strange UX flows involved with adding songs to a shared playlist, but we kicked of the project with a post-it design thinking brainstorm session that set us up well for creating these complex user flows later on.

• Team bonding: Most of us just met on Friday, and we built a strong fun team culture.

## What we learned

Everyone on our team learned different things.

## What's next for TrainTrax

• A web application for non-iPhone users to host and join parties

• Improved UI and additional features to fine tune the user experience — we've got a lot of ideas for the next version in the pipeline, including some already designed in this prototype: [TrainTrax prototype link](https://invis.io/CSAIRSU6U#/219754962_Invision-_User_Types) | ## Inspiration

I like looking at things. I do not enjoy bad quality videos . I do not enjoy waiting. My CPU is a lazy fool. He just lays there like a drunkard on new years eve. My poor router has a heart attack every other day so I can stream the latest Kylie Jenner video blog post, or has the kids these days call it, a 'vlog' post.

CPU isn't being effectively leveraged to improve video quality. Deep learning methods are in their own world, concerned more with accuracy than applications. We decided to develop a machine learning application to enhance resolution while developing our models in such a way that they can effective run without 10,000 GPUs.

## What it does

We reduce your streaming bill. We let you stream Kylie's vlog in high definition. We connect first world medical resources to developing nations. We make convert an unrecognizeable figure in a cop's body cam to a human being. We improve video resolution.

## How I built it

Wow. So lots of stuff.

Web scraping youtube videos for datasets of 144, 240, 360, 480 pixels. Error catching, thread timeouts, yada, yada. Data is the most import part of machine learning, and no one cares in the slightest. So I'll move on.

## ML stuff now. Where the challenges begin

We tried research papers. Super Resolution Generative Adversarial Model [link](https://arxiv.org/abs/1609.04802). SRGAN with an attention layer [link](https://arxiv.org/pdf/1812.04821.pdf). These were so bad. The models were to large to hold in our laptop, much less in real time. The model's weights alone consisted of over 16GB. And yeah, they get pretty good accuracy. That's the result of training a million residual layers (actually *only* 80 layers) for months on GPU clusters. We did not have the time or resources to build anything similar to these papers. We did not follow onward with this path.

We instead looked to our own experience. Our team had previously analyzed the connection between image recognition and natural language processing and their shared relationship to high dimensional spaces [see here](https://arxiv.org/abs/1809.05286). We took these learnings and built a model that minimized the root mean squared error as it upscaled from 240 to 480 px.

However, we quickly hit a wall, as this pixel based loss consistently left the upscaled output with blurry edges. In order to address these edges, we used our model as the Generator in a Generative Adversarial Network. However, our generator was too powerful, and the discriminator was lost.

We decided then to leverage the work of the researchers before us in order to build this application for the people. We loaded a pretrained VGG network and leveraged its image embeddings as preprocessing for our discriminator. Leveraging this pretrained model, we were able to effectively iron out the blurry edges while still minimizing mean squared error.

Now model built. We then worked at 4 AM to build an application that can convert videos into high resolution.

## Accomplishments that I'm proud of

Building it good.

## What I learned

Balanced approaches and leveraging past learning

## What's next for Crystallize

Real time stream-enhance app. | partial |

## Inspiration

Provide valuable data to on-premise coordinators just seconds before the firefighters make entry to a building on fire, minimizing the time required to search for and rescue victims. Reports conditions around high-risk areas to alert firefighters for what lies ahead in their path. Increase operational awareness through live, autonomous data collection.

## What it does

We are able to control the drone from a remote location, allowing it to take off, fly in patterns, and autonomously navigate through an enclosed area in order to look for dangerous conditions and potential victims, using a proprietary face-detection algorithm. The web interface then relays a live video stream, location, temperature, and humidity data back to the remote user. The drone saves locations of faces detected, and coordinators are able to quickly pinpoint the location of individuals at risk. The firefighters make use of this information in order to quickly diffuse life-threatening conditions with increased awareness of the conditions inside of the affected area.

## How we built it

We used a JS and HTML front-end, using Solace's PubSub+ broker in order to relay commands sent from the web UI to the drone with minimal latency. Our AI stack consists of an HaaR cascade that finds AI markers and detects faces using a unique face detection algorithm through OpenCV. In order to find fires, we're looking for areas with the highest light intensity and heat, which instructs the drone to fly near and around the areas of concern. Once a face is found, a picture is taken and telemetry information is relayed back to the remote web console. We have our Solace PubSub+ broker instance running on Google Cloud Platform.

## Challenges we ran into

Setting up the live video stream on the Raspberry Pi 4B proved to be an impossible task, as the .h264 raw output from the Raspberry Pi's GPU was impossible to encode into a .mp4 container on the fly. However, when the script was run on Windows, the live video stream, as well as all AI functionality, worked perfectly. We spent a lot of time trying to debug the program on the Raspberry Pi in order to acquire our UDP video live stream, as all ML and AI functionality was inoperational without it. In the end, we somehow got it to work.

Brute forcing into every port of the DJI Tello drone in order to collect serial output took nearly 5 hours and required us to spin up a DigitalOcean instance in order to allow us access to the drone's control surfaces and video data.

## Accomplishments that we're proud of

We were really proud to get the autonomous flying of the drone working using facial recognition. It was quite the task to brute force every wifi port on the drone in order to manipulate it the way we wanted it to, so we were super happy to get all the functionality working by the end of the makeathon.

## What we learned

You can't use GPS indoors because it's impossible to get a satellite lock.

## What's next for FireFly

Commercialization. | ## Inspiration

In times of disaster, the capacity of rigid networks like cell service and internet dramatically decreases at the same time demand increases as people try to get information and contact loved ones. This can lead to crippled telecom services which can significantly impact first responders in disaster struck areas, especially in dense urban environments where traditional radios don't work well. We wanted to test newer radio and AI/ML technologies to see if we could make a better solution to this problem, which led to this project.

## What it does

Device nodes in the field network to each other and to the command node through LoRa to send messages, which helps increase the range and resiliency as more device nodes join. The command & control center is provided with summaries of reports coming from the field, which are visualized on the map.

## How we built it

We built the local devices using Wio Terminals and LoRa modules provided by Seeed Studio; we also integrated magnetometers into the devices to provide a basic sense of direction. Whisper was used for speech-to-text with Prediction Guard for summarization, keyword extraction, and command extraction, and trained a neural network on Intel Developer Cloud to perform binary image classification to distinguish damaged and undamaged buildings.

## Challenges we ran into

The limited RAM and storage of microcontrollers made it more difficult to record audio and run TinyML as we intended. Many modules, especially the LoRa and magnetometer, did not have existing libraries so these needed to be coded as well which added to the complexity of the project.

## Accomplishments that we're proud of:

* We wrote a library so that LoRa modules can communicate with each other across long distances

* We integrated Intel's optimization of AI models to make efficient, effective AI models

* We worked together to create something that works

## What we learned:

* How to prompt AI models

* How to write drivers and libraries from scratch by reading datasheets

* How to use the Wio Terminal and the LoRa module

## What's next for Meshworks - NLP LoRa Mesh Network for Emergency Response

* We will improve the audio quality captured by the Wio Terminal and move edge-processing of the speech-to-text to increase the transmission speed and reduce bandwidth use.

* We will add a high-speed LoRa network to allow for faster communication between first responders in a localized area

* We will integrate the microcontroller and the LoRa modules onto a single board with GPS in order to improve ease of transportation and reliability | ## Inspiration

Alex K's girlfriend Allie is a writer and loves to read, but has had trouble with reading for the last few years because of an eye tracking disorder. She now tends towards listening to audiobooks when possible, but misses the experience of reading a physical book.

Millions of other people also struggle with reading, whether for medical reasons or because of dyslexia (15-43 million Americans) or not knowing how to read. They face significant limitations in life, both for reading books and things like street signs, but existing phone apps that read text out loud are cumbersome to use, and existing "reading glasses" are thousands of dollars!

Thankfully, modern technology makes developing "reading glasses" much cheaper and easier, thanks to advances in AI for the software side and 3D printing for rapid prototyping. We set out to prove through this hackathon that glasses that open the world of written text to those who have trouble entering it themselves can be cheap and accessible.

## What it does

Our device attaches magnetically to a pair of glasses to allow users to wear it comfortably while reading, whether that's on a couch, at a desk or elsewhere. The software tracks what they are seeing and when written words appear in front of it, chooses the clearest frame and transcribes the text and then reads it out loud.

## How we built it

**Software (Alex K)** -

On the software side, we first needed to get image-to-text (OCR or optical character recognition) and text-to-speech (TTS) working. After trying a couple of libraries for each, we found Google's Cloud Vision API to have the best performance for OCR and their Google Cloud Text-to-Speech to also be the top pick for TTS.

The TTS performance was perfect for our purposes out of the box, but bizarrely, the OCR API seemed to predict characters with an excellent level of accuracy individually, but poor accuracy overall due to seemingly not including any knowledge of the English language in the process. (E.g. errors like "Intreduction" etc.) So the next step was implementing a simple unigram language model to filter down the Google library's predictions to the most likely words.

Stringing everything together was done in Python with a combination of Google API calls and various libraries including OpenCV for camera/image work, pydub for audio and PIL and matplotlib for image manipulation.

**Hardware (Alex G)**: We tore apart an unsuspecting Logitech webcam, and had to do some minor surgery to focus the lens at an arms-length reading distance. We CAD-ed a custom housing for the camera with mounts for magnets to easily attach to the legs of glasses. This was 3D printed on a Form 2 printer, and a set of magnets glued in to the slots, with a corresponding set on some NerdNation glasses.

## Challenges we ran into

The Google Cloud Vision API was very easy to use for individual images, but making synchronous batched calls proved to be challenging!

Finding the best video frame to use for the OCR software was also not easy and writing that code took up a good fraction of the total time.

Perhaps most annoyingly, the Logitech webcam did not focus well at any distance! When we cracked it open we were able to carefully remove bits of glue holding the lens to the seller’s configuration, and dial it to the right distance for holding a book at arm’s length.

We also couldn’t find magnets until the last minute and made a guess on the magnet mount hole sizes and had an *exciting* Dremel session to fit them which resulted in the part cracking and being beautifully epoxied back together.

## Acknowledgements

The Alexes would like to thank our girlfriends, Allie and Min Joo, for their patience and understanding while we went off to be each other's Valentine's at this hackathon. | partial |

## Overview of Siacoin

Sia is a decentralized storage platform secured by blockchain technology. The Sia Storage Platform leverages underutilized hard drive capacity around the world to create a data storage marketplace that is more reliable and lower cost than traditional cloud storage providers.

Your data is sliced up, encrypted, and stored on nodes all across the globe to eliminate any single point of failure and ensure the highest possible uptime. Since you hold the keys, you own your data. No outside company can access or control your files.

## Inspiration

Currently, Siacoin runs on charging the host for bandwidth and storage used for the file. We think this leaves the file especially vulnerable when the host stops watching and paying for the file. Additionally, the host might not have the ability to buy Siacoin quickly. We want to make decentralized storage truly free for all.

## What it does

To mitigate this issue, we're creating an ad-supported upload and download web server portal that will allow the file to sustain itself monetarily on the network.

To use, the user will visit the site, upload the file after watching an ad and then receive a download link as well as their Sia permanent link. They could then disseminate the links to the users who would like to access the file.

## Based on these calculations the Sia Cost: (prices as of 9/4/19)

Storage $0.68 / 1TB $6.8E-7/1MB

Upload $0.11 /1TB $1.1E-7/1MB

Download $0.59 /1TB $5.9E-7/1MB

We can clearly see that one 30 second video ad ~$0.01 can conservatively handle the price of 1gb of data on a download. One 30 second video ad on upload can also cover close to a year of hosting. One could say this isn't the true cost as explained below but we'll use this as a rough order of magnitude approximation.

## Prototype Status:

We tried using a Node.Js server backend to get the files into the Siacoin. We had some difficulties understanding the API and understanding how the Sia daemon uses the Node.Js server to fully do everything we need. Additionally, It was difficult to get the Sia client working.

Still if we got the right configuration working, the upload side of the file would have to go to the web server, then uploaded to Sia. On the reverse side, the file needs to be queried from Sia to the web server, then downloaded to the client. In summary, this increases the bandwidth requirements considerably to use Sia as the final destination which will increase costs.

However, there are situations that this could make sense. On fairly small files, few ads could cover all of these costs anyway. One could assert that the bandwidth is the majority cost of the file hosting so this is only useful for mostly cold storage.

However, as long as the original Sia path is known, the file is not reliant on the central web server shutting down. This allows the file to stay up indefinitely as multiple client software can still access the file. One could simply recover their password for their Sia files they uploaded and still access them.

## What's next for Sustainable Ad-Supported Blockchain File Storage

Of course, this is all in the abstract. Due to difficulties getting the wallet working, much development would have to be done in the future. This idea was thought up by our hackathon team and we think might have promise in the future for Sia. | ## Inspiration

As the lines between AI-generated and real-world images blur, the integrity and trustworthiness of visual content have become critical concerns. Traditional metadata isn't as reliable as it once was, prompting us to seek out groundbreaking solutions to ensure authenticity.

## What it does

"The Mask" introduces a revolutionary approach to differentiate between AI-generated images and real-world photos. By integrating a masking layer during the propagation step of stable diffusion, it embeds a unique hash. This hash is directly obtained from the Solana blockchain, acting as a verifiable seal of authenticity. Whenever someone encounters an image, they can instantly verify its origin: whether it's an AI creation or an authentic capture from the real world.

## How we built it

Our team began with an in-depth study of the stable diffusion mechanism, pinpointing the most effective point to integrate the masking layer. We then collaborated with blockchain experts to harness Solana's robust infrastructure, ensuring seamless and secure hash integration. Through iterative testing and refining, we combined these components into a cohesive, reliable system.

## Challenges we ran into

Melding the complex world of blockchain with the intricacies of stable diffusion was no small feat. We faced hurdles in ensuring the hash's non-intrusiveness, so it didn't distort the image. Achieving real-time hash retrieval and embedding while maintaining system efficiency was another significant challenge.

As the lines between AI-generated and real-world images blur, the integrity and trustworthiness of visual content have become critical concerns. Traditional metadata isn't as reliable as it once was, prompting us to seek out groundbreaking solutions to ensure authenticity.

## Accomplishments that we're proud of

Successfully integrating a seamless masking layer that does not compromise image quality.

Achieving instantaneous hash retrieval from Solana, ensuring real-time verification.

Pioneering a solution that addresses a pressing concern in the AI and digital era.

Garnering interest from major digital platforms for potential integration.

## What we learned

The journey taught us the importance of interdisciplinary collaboration. Bringing together experts in AI, image processing, and blockchain was crucial. We also discovered the potential of blockchain beyond cryptocurrency, especially in preserving digital integrity.\

## What's next for The Mask

We envision "The Mask" as the future gold standard for digital content verification. We're in talks with online platforms and content creators to integrate our solution. Furthermore, we're exploring the potential to expand beyond images, offering verification solutions for videos, audio, and other digital content forms. | ## Inspiration

There are 1.3 billion iMessage users and 600 million Discord users. Furtermore, 65% of phones use SMS and SMS does not allow video sharing. File sharing is IMPORTANT! Unfortunately, these applications use cloud-sharing with heavy limitations, and other cloud based video-upload systems such as Youtube and Google Drive take too long. Sometimes we just want to send videos to our friends without having to compress, crop, or have to upload them on a lengthy system. Furthermore, once users upload their videos, centralized systems own their data and users are not the ones in control. Being able to monetize and encrypt your own data without losing autonomy to the bigger players is essential.

## What it does

VidTooLong allows the user to not only upload videos onto the Fabric, but also allows easy link sharing within seconds. The user is also given the autonomy to monetize and encrypt their data before sending it out. This allows it so the receipients cannot leverage the content against the sender. It's easy and helps fix the issues within most big cloud sharing platforms as well as beating the smaller competition as its free!

## How we built it

I built this using Vercel, NextJS, TypeScript, React, & Eluv.io

## Challenges we ran into

My biggest challenge was working solo on this project and starting very late on Saturday. As I flew in from Toronto, Canada and arrived Saturday morning, I spent most of the day playing catch-up and getting to know all the sponsors better. Eluv.io caught my eye and I had to spend all night working. Furthermore, nobody was awake to load my wallet so I couldn't experiment with the API

## Accomplishments that we're proud of

Making a useful and intuitive web2 and web3 product!

## What's next for VidTooLong

Incorporate Web3 and Web2 Wallet integration and a Firebase Authentication system for more personalization and security.

Further build the full-stack elements so that the website can be useable and properly implements the API

Make it so that the user can control how long the link will be active for, further putting their content within their control

Web3 Venmo for Media Content

Add Photo Sharing as well | partial |

## Inspiration

Have you ever had to stand in line and tediously fill out your information for contact tracing at your favorite local restaurant? Have you ever asked yourself what's the point of traffic jams at restaurants which rather than reducing the risk of contributing to the spreading of the outbreak ends up increasing social contact and germ propagation? If yes, JamFree is for you!

## What it does

JamFree is a web application that supports small businesses and restaurants during the pandemic by completely automating contact tracing in order to minimize physical exposure and eliminate the possibility of human error in the event where tracing back on customer visits is necessary. This application helps support local restaurants and small businesses by alleviating the pressure and negative impact this pandemic has had on their business.

In order to accomplish this goal, here's how it would be used:

1. Customer creates an account by filling out the required information restaurants would use for contact tracing such as name, email, and phone number.

2. A QR code is generated by our application

3. Restaurants also create a JamFree account with the possibility of integrating with their favorite POS software

4. Upon arrival at their favorite restaurant, the restaurant staff would scan the customer's QR code from our application

5. Customer visit has now been recorded on the restaurant's POS as well as JamFree's records

## How we built it

We divided the project into two main components; the front-end with react components to make things interactive while the back-end used Express to create a REST API that interacts with a cockroach database. The whole project was deployed using amazon-web services (serverless servers for a quick and efficient deployment).

## Challenges we ran into

We had to figure out how to complete the integration of QR codes for the first time, how to integrate our application with third-party software such as Square or Shopify (OAuth), and how to level out the playing field with the adaptability of new technologies and different languages used across the team.

## Accomplishments that we're proud of

We successfully and simply integrated or app with POS software (e.g. using a free Square Account and Square APIs in order to access the customer base of restaurants while keeping everything centralized and easily accessible).

## What we learned

We became familiar with OAuth 2.0 Protocols, React, and Node. Half of our team was compromised of first-time hackers who had to quickly become familiar with the technologies we used. We learnt that coding can be a pain in the behind but it is well worth it in the end! Teamwork makes the dream work ;)

## What's next for JamFree

We are planning to improve and expand on our services in order to provide them to local restaurants. We will start by integrating it into one of our teammate's family-owned restaurant as well as pitch it to our local parishes to make things safer and easier. We are looking into integrating geofencing in the future in order to provide targeted advertisements and better support our clients in this difficult time for small businesses. | **Inspiration**

Toronto ranks among the top five cities in the world with the worst traffic congestion. As both students and professionals, we faced the daily challenge of navigating this chaos and saving time on our commutes. This led us to question the accuracy of traditional navigation tools like Google Maps. We wondered if there were better, faster routes that could be discovered through innovative technology.

**What it does**

ruteX is an AI-driven navigation app that revolutionizes how users find their way. By integrating Large Language Models (LLMs) and action agents, ruteX facilitates seamless voice-to-voice communication with users. This allows the app to create customized routes based on various factors, including multi-modal transportation options (both private and public), environmental considerations such as carbon emissions, health metrics like calories burned, and cost factors like the cheapest parking garages and gas savings.

**How we built it**

We developed ruteX by leveraging cutting-edge AI technologies. The core of our system is powered by LLMs that interact with action agents, ensuring that users receive personalized route recommendations. We focused on creating a user-friendly interface that simplifies the navigation process while providing comprehensive data on various routing options.

**Challenges we ran into**

Throughout the development process, we encountered challenges such as integrating real-time data for traffic and environmental factors, ensuring accuracy in route recommendations, and maintaining a smooth user experience in the face of complex interactions. Balancing these elements while keeping the app intuitive required significant iterative testing and refinement.

**Accomplishments that we're proud of**

We take pride in our app's simplistic user interface that enhances usability without sacrificing functionality. Our innovative LLM action agents (using fetch ai) effectively communicate with users, making navigation a more interactive experience. Additionally, utilizing Gemini as the "brain" of our ecosystem has allowed us to optimize our AI capabilities, setting ruteX apart from existing navigation solutions.

**What we learned**

This journey has taught us the importance of user feedback in refining our app's features. We've learned how critical it is to prioritize user needs and preferences while also staying flexible in our approach to integrating AI technologies. Our experience also highlighted the potential of AI in transforming traditional industries like navigation.

**What's next for ruteX**

Looking ahead, we plan to scale ruteX to its full potential, aiming to completely revolutionize traditional navigation methods. We are exploring integration with wearables like smartwatches and smart lenses, allowing users to interact with their travel assistant effortlessly. Our vision is for users to simply voice their needs and enjoy their journey without the complexities of conventional navigation. | ## Inspiration

Ideas for interactions from:

* <http://paperprograms.org/>

* <http://dynamicland.org/>

but I wanted to go from the existing computer down, rather from the bottom up, and make something that was a twist on the existing desktop: Web browser, Terminal, chat apps, keyboard, windows.

## What it does

Maps your Mac desktop windows onto pieces of paper + tracks a keyboard and lets you focus on whichever one is closest to the keyboard. Goal is to make something you might use day-to-day as a full computer.

## How I built it

A webcam and pico projector mounted above desk + OpenCV doing basic computer vision to find all the pieces of paper and the keyboard.

## Challenges I ran into

* Reliable tracking under different light conditions.

* Feedback effects from projected light.

* Tracking the keyboard reliably.

* Hooking into macOS to control window focus

## Accomplishments that I'm proud of

Learning some CV stuff, simplifying the pipelines I saw online by a lot and getting better performance (binary thresholds are great), getting a surprisingly usable system.

Cool emergent things like combining pieces of paper + the side ideas I mention below.

## What I learned

Some interesting side ideas here:

* Playing with the calibrated camera is fun on its own; you can render it in place and get a cool ghost effect

* Would be fun to use a deep learning thing to identify and compute with arbitrary objects

## What's next for Computertop Desk

* Pointing tool (laser pointer?)

* More robust CV pipeline? Machine learning?

* Optimizations: run stuff on GPU, cut latency down, improve throughput

* More 'multiplayer' stuff: arbitrary rotations of pages, multiple keyboards at once | losing |

## Inspiration

Amidst the hectic lives and pandemic struck world, mental health has taken a back seat. This thought gave birth to our inspiration of this web based app that would provide activities customised to a person’s mood that will help relax and rejuvenate.

## What it does

We planned to create a platform that could detect a users mood through facial recognition, recommends yoga poses to enlighten the mood and evaluates their correctness, helps user jot their thoughts in self care journal.

## How we built it

Frontend: HTML5, CSS(frameworks used:Tailwind,CSS),Javascript

Backend: Python,Javascript

Server side> Nodejs, Passport js

Database> MongoDB( for user login), MySQL(for mood based music recommendations)

## Challenges we ran into

Incooperating the open CV in our project was a challenge, but it was very rewarding once it all worked .

But since all of us being first time hacker and due to time constraints we couldn't deploy our website externally.

## Accomplishments that we're proud of

Mental health issues are the least addressed diseases even though medically they rank in top 5 chronic health conditions.

We at umang are proud to have taken notice of such an issue and help people realise their moods and cope up with stresses encountered in their daily lives. Through are app we hope to give people are better perspective as well as push them towards a more sound mind and body

We are really proud that we could create a website that could help break the stigma associated with mental health. It was an achievement that in this website includes so many features to help improving the user's mental health like making the user vibe to music curated just for their mood, engaging the user into physical activity like yoga to relax their mind and soul and helping them evaluate their yoga posture just by sitting at home with an AI instructor.

Furthermore, completing this within 24 hours was an achievement in itself since it was our first hackathon which was very fun and challenging.

## What we learned

We have learnt on how to implement open CV in projects. Another skill set we gained was on how to use Css Tailwind. Besides, we learned a lot about backend and databases and how to create shareable links and how to create to do lists.

## What's next for Umang

While the core functionality of our app is complete, it can of course be further improved .

1)We would like to add a chatbot which can be the user's guide/best friend and give advice to the user when in condition of mental distress.

2)We would also like to add a mood log which can keep a track of the user's daily mood and if a serious degradation of mental health is seen it can directly connect the user to medical helpers, therapist for proper treatement.

This lays grounds for further expansion of our website. Our spirits are up and the sky is our limit | ## Inspiration

As students, we’ve all heard it from friends, read it on social media or even experienced it ourselves: students in need of mental health support will book counselling appointments, only to be waitlisted for the foreseeable future without knowing alternatives. Or worse, they get overwhelmed by the process of finding a suitable mental health service for their needs, give up and deal with their struggles alone.

The search for the right mental health service can be daunting but it doesn’t need to be!

## What it does

MindfulU centralizes information on mental health services offered by UBC, SFU, and other organizations. It assists students in finding, learning about, and using mental health resources through features like a chatbot, meditation mode, and an interactive services map.

## How we built it

Before building, we designed the UI of the website first with Figma to visualize how the website should look like.

The website is built with React and Twilio API for its core feature to connect users with the Twilio chatbot to connect them with the correct helpline. We also utilized many npm libraries to ensure the website has a smooth look to it.

Lastly, we deployed the website using Vercel.

## Challenges We ran into

We had a problem in making the website responsive for the smaller screens. As this is a hackathon, we were focusing on trying to implement the designs and critical features for laptop screen size.

## Accomplishments that we're proud of

We are proud that we had the time to implement the core features that we wanted, especially implementing all the designs from Figma into React components and ensuring it fits in a laptop screen size.

## What we learned

We learned that it's not only the tech stack and implementation of the project that matters but also the purpose and message that the project is trying to convey.

## What's next for MindfulU

We want to make the website more responsive towards any screen size to ensure every user can access it from any device. | ## Inspiration

We wanted to create a convenient, modernized journaling application with methods and components that are backed by science. Our spin on the readily available journal logging application is our take on the idea of awareness itself. What does it mean to be aware? What form or shape can mental health awareness come in? These were the key questions that we were curious about exploring, and we wanted to integrate this idea of awareness into our application. The “awareness” approach of the journal functions by providing users with the tools to track and analyze their moods and thoughts, as well as allowing them to engage with the visualizations of the journal entries to foster meaningful reflections.

## What it does

Our product provides a user-friendly platform for logging and recording journal entries and incorporates natural language processing (NLP) to conduct sentiment analysis. Users will be able to see generated insights from their journal entries, such as how their sentiments have changed over time.

## How we built it

Our front-end is powered by the ReactJS library, while our backend is powered by ExpressJS. Our sentiment analyzer was integrated with our NodeJS backend, which is also connected to a MySQL database.

## Challenges we ran into

Creating this app idea under such a short period of time proved to be more challenge than we anticipated. Our product was meant to comprise of more features that helped the journaling aspect of the app as well as the mood tracking aspect of the app. We had planned on showcasing an aggregation of the user's mood over different time periods, for instance, daily, weekly, monthly, etc. And on top of that, we had initially planned on deploying our web app on a remote hosting server but due to the time constraint, we had decided to reduce our proof-of-concept to the most essential cores features for our idea.

## Accomplishments that we're proud of

Designing and building such an amazing web app has been a wonderful experience. To think that we created a web app that could potentially be used by individuals all over the world and could help them keep track of their mental health has been such a proud moment. It really embraces the essence of a hackathon in its entirety. And this accomplishment has been a moment that our team can proud of. The animation video is an added bonus, visual presentations have a way of captivating an audience.

## What we learned

By going through the whole cycle of app development, we learned how one single part does not comprise the whole. What we mean is that designing an app is more than just coding it, the real work starts in showcasing the idea to others. In addition to that, we learned the importance of a clear roadmap for approaching issues (for example, coming up with an idea) and that complicated problems do not require complicated solutions, for instance, our app in simplicity allows for users to engage in a journal activity and to keep track of their moods over time. And most importantly, we learned how the simplest of ideas can be the most useful if they are thought right.

## What's next for Mood for Thought

Making a mobile app could have been better, given that it would align with our goals of making journaling as easy as possible. Users could also retain a degree of functionality offline. This could have also enabled a notification feature that would encourage healthy habits.

More sophisticated machine learning would have the potential to greatly improve the functionality of our app. Right now, simply determining either positive/negative sentiment could be a bit vague.

Adding recommendations on good journaling practices could have been an excellent addition to the project. These recommendations could be based on further sentiment analysis via NLP. | partial |

## Inspiration

Being students in a technical field, we all have to write and submit resumes and CVs on a daily basis. We wanted to incorporate multiple non-supervised machine learning algorithms to allow users to view their resumes from different lenses, all the while avoiding the bias introduced from the labeling of supervised machine learning.

## What it does

The app accepts a resume in .pdf or image format as well as a prompt describing the target job. We wanted to judge the resume based on layout and content. Layout encapsulates font, color, etc., and the coordination of such features. Content encapsulates semantic clustering for relevance to the target job and preventing repeated mentions.

### Optimal Experience Selection

Suppose you are applying for a job and you want to mention five experiences, but only have room for three. cv.ai will compare the experience section in your CV with the job posting's requirements and determine the three most relevant experiences you should keep.

### Text/Space Analysis

Many professionals do not use the space on their resume effectively. Our text/space analysis feature determines the ratio of characters to resume space in each section of your resume and provides insights and suggestions about how you could improve your use of space.

### Word Analysis

This feature analyzes each bullet point of a section and highlights areas where redundant words can be eliminated, freeing up more resume space and allowing for a cleaner representation of the user.

## How we built it

We used a word-encoder TensorFlow model to provide insights about semantic similarity between two words, phrases or sentences. We created a REST API with Flask for querying the TF model. Our front end uses Angular to deliver a clean, friendly user interface.

## Challenges we ran into

We are a team of two new hackers and two seasoned hackers. We ran into problems with deploying the TensorFlow model, as it was initially available only in a restricted Colab environment. To resolve this issue, we built a RESTful API that allowed us to process user data through the TensorFlow model.

## Accomplishments that we're proud of

We spent a lot of time planning and defining our problem and working out the layers of abstraction that led to actual processes with a real, concrete TensorFlow model, which is arguably the hardest part of creating a useful AI application.

## What we learned

* Deploy Flask as a RESTful API to GCP Kubernetes platform

* Use most Google Cloud Vision services

## What's next for cv.ai

We plan on adding a few more features and making cv.ai into a real web-based tool that working professionals can use to improve their resumes or CVs. Furthermore, we will extend our application to include LinkedIn analysis between a user's LinkedIn profile and a chosen job posting on LinkedIn. | # 🎓 **Inspiration**

Entering our **junior year**, we realized we were unprepared for **college applications**. Over the last couple of weeks, we scrambled to find professors to work with to possibly land a research internship. There was one big problem though: **we had no idea which professors we wanted to contact**. This naturally led us to our newest product, **"ScholarFlow"**. With our website, we assure you that finding professors and research papers that interest you will feel **effortless**, like **flowing down a stream**. 🌊

# 💡 **What it Does**

Similar to the popular dating app **Tinder**, we provide you with **hundreds of research articles** and papers, and you choose whether to approve or discard them by **swiping right or left**. Our **recommendation system** will then provide you with what we think might interest you. Additionally, you can talk to our chatbot, **"Scholar Chat"** 🤖. This chatbot allows you to ask specific questions like, "What are some **Machine Learning** papers?". Both the recommendation system and chatbot will provide you with **links, names, colleges, and descriptions**, giving you all the information you need to find your next internship and accelerate your career 🚀.

# 🛠️ **How We Built It**

While half of our team worked on **REST API endpoints** and **front-end development**, the rest worked on **scraping Google Scholar** for data on published papers. The website was built using **HTML/CSS/JS** with the **Bulma** CSS framework. We used **Flask** to create API endpoints for JSON-based communication between the server and the front end.

To process the data, we used **sentence-transformers from HuggingFace** to vectorize everything. Afterward, we performed **calculations on the vectors** to find the optimal vector for the highest accuracy in recommendations. **MongoDB Vector Search** was key to retrieving documents at lightning speed, which helped provide context to the **Cerebras Llama3 LLM** 🧠. The query is summarized, keywords are extracted, and top-k similar documents are retrieved from the vector database. We then combined context with some **prompt engineering** to create a seamless and **human-like interaction** with the LLM.

# 🚧 **Challenges We Ran Into**

The biggest challenge we faced was gathering data from **Google Scholar** due to their servers blocking requests from automated bots 🤖⛔. It took several hours of debugging and thinking to obtain a large enough dataset. Another challenge was collaboration – **LiveShare from Visual Studio Code** would frequently disconnect, making teamwork difficult. Many tasks were dependent on one another, so we often had to wait for one person to finish before another could begin. However, we overcame these obstacles and created something we're **truly proud of**! 💪

# 🏆 **Accomplishments That We're Proud Of**

We’re most proud of the **chatbot**, both in its front and backend implementations. What amazed us the most was how **accurately** the **Llama3** model understood the context and delivered relevant answers. We could even ask follow-up questions and receive **blazing-fast responses**, thanks to **Cerebras** 🏅.

# 📚 **What We Learned**

The most important lesson was learning how to **work together as a team**. Despite the challenges, we **pushed each other to the limit** to reach our goal and finish the project. On the technical side, we learned how to use **Bulma** and **Vector Search** from MongoDB. But the most valuable lesson was using **Cerebras** – the speed and accuracy were simply incredible! **Cerebras is the future of LLMs**, and we can't wait to use it in future projects. 🚀

# 🔮 **What's Next for ScholarFlow**

Currently, our data is **limited**. In the future, we’re excited to **expand our dataset by collaborating with Google Scholar** to gain even more information for our platform. Additionally, we have plans to develop an **iOS app** 📱 so people can discover new professors on the go! | ## Inspiration

Inspired by a team member's desire to study through his courses by listening to his textbook readings recited by his favorite anime characters, functionality that does not exist on any app on the market, we realized that there was an opportunity to build a similar app that would bring about even deeper social impact. Dyslexics, the visually impaired, and those who simply enjoy learning by having their favorite characters read to them (e.g. children, fans of TV series, etc.) would benefit from a highly personalized app.

## What it does

Our web app, EduVoicer, allows a user to upload a segment of their favorite template voice audio (only needs to be a few seconds long) and a PDF of a textbook and uses existing Deepfake technology to synthesize the dictation from the textbook using the users' favorite voice. The Deepfake tech relies on a multi-network model trained using transfer learning on hours of voice data. The encoder first generates a fixed embedding of a given voice sample of only a few seconds, which characterizes the unique features of the voice. Then, this embedding is used in conjunction with a seq2seq synthesis network that generates a mel spectrogram based on the text (obtained via optical character recognition from the PDF). Finally, this mel spectrogram is converted into the time-domain via the Wave-RNN vocoder (see [this](https://arxiv.org/pdf/1806.04558.pdf) paper for more technical details). Then, the user automatically downloads the .WAV file of his/her favorite voice reading the PDF contents!

## How we built it

We combined a number of different APIs and technologies to build this app. For leveraging scalable machine learning and intelligence compute, we heavily relied on the Google Cloud APIs -- including the Google Cloud PDF-to-text API, Google Cloud Compute Engine VMs, and Google Cloud Storage; for the deep learning techniques, we mainly relied on existing Deepfake code written for Python and Tensorflow (see Github repo [here](https://github.com/rodrigo-castellon/Real-Time-Voice-Cloning), which is a fork). For web server functionality, we relied on Python's Flask module, the Python standard library, HTML, and CSS. In the end, we pieced together the web server with Google Cloud Platform (GCP) via the GCP API, utilizing Google Cloud Storage buckets to store and manage the data the app would be manipulating.

## Challenges we ran into

Some of the greatest difficulties were encountered in the superficially simplest implementations. For example, the front-end initially seemed trivial (what's more to it than a page with two upload buttons?), but many of the intricacies associated with communicating with Google Cloud meant that we had to spend multiple hours creating even a landing page with just drag-and-drop and upload functionality. On the backend, 10 excruciating hours were spent attempting (successfully) to integrate existing Deepfake/Voice-cloning code with the Google Cloud Platform. Many mistakes were made, and in the process, there was much learning.

## Accomplishments that we're proud of

We're immensely proud of piecing all of these disparate components together quickly and managing to arrive at a functioning build. What started out as merely an idea manifested itself into usable app within hours.

## What we learned

We learned today that sometimes the seemingly simplest things (dealing with python/CUDA versions for hours) can be the greatest barriers to building something that could be socially impactful. We also realized the value of well-developed, well-documented APIs (e.g. Google Cloud Platform) for programmers who want to create great products.

## What's next for EduVoicer

EduVoicer still has a long way to go before it could gain users. Our first next step is to implementing functionality, possibly with some image segmentation techniques, to decide what parts of the PDF should be scanned; this way, tables and charts could be intelligently discarded (or, even better, referenced throughout the audio dictation). The app is also not robust enough to handle large multi-page PDF files; the preliminary app was designed as a minimum viable product, only including enough to process a single-page PDF. Thus, we plan on ways of both increasing efficiency (time-wise) and scaling the app by splitting up PDFs into fragments, processing them in parallel, and returning the output to the user after collating individual text-to-speech outputs. In the same vein, the voice cloning algorithm was restricted by length of input text, so this is an area we seek to scale and parallelize in the future. Finally, we are thinking of using some caching mechanisms server-side to reduce waiting time for the output audio file. | partial |

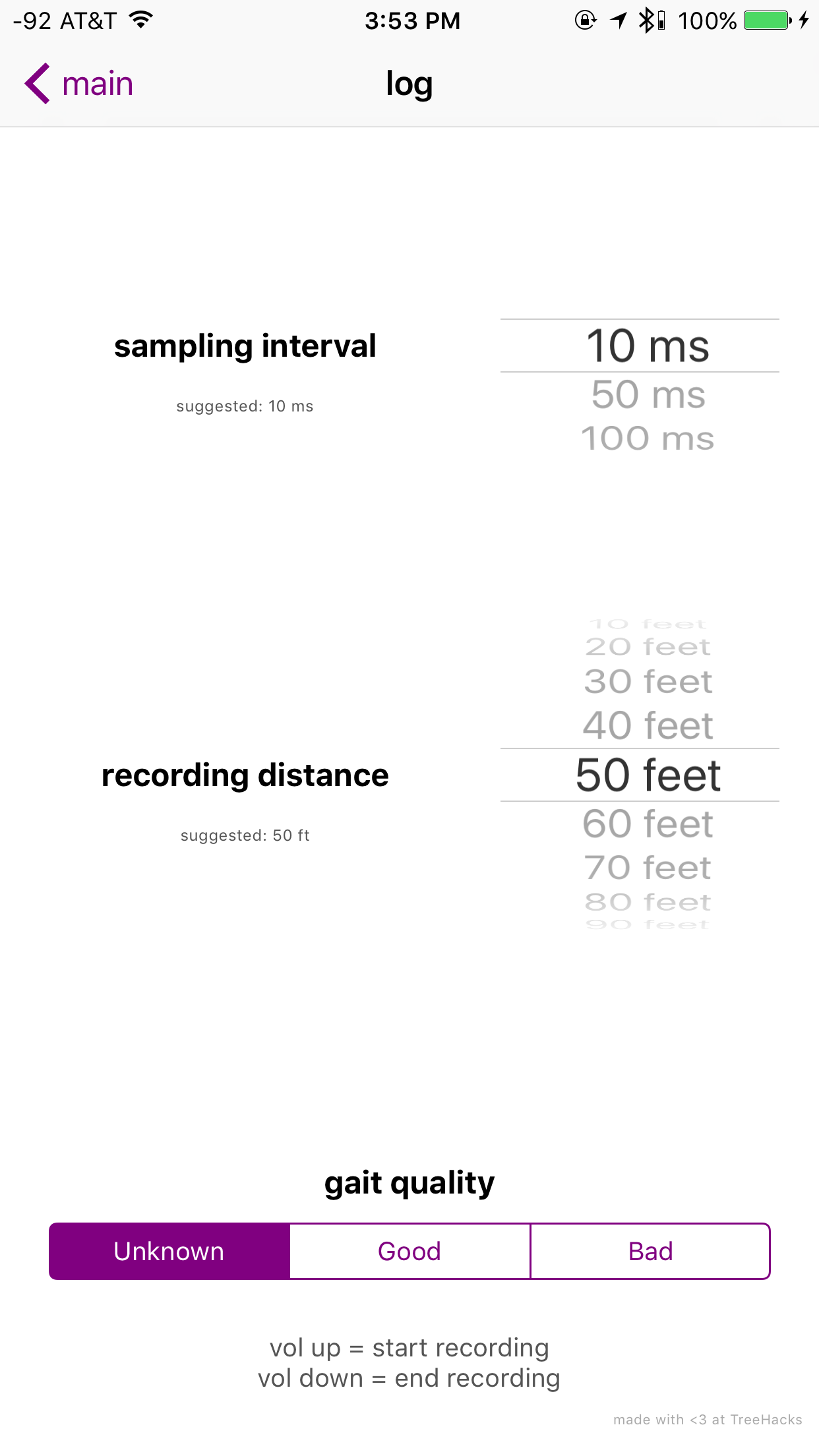

## Inspiration

We want to fix healthcare! 48% of physicians in the US are burned out, which is a driver for higher rates of medical error, lower patient satisfaction, higher rates of depression and suicide. Three graduate students at Stanford have been applying design thinking to the burnout epidemic. A CS grad from USC joined us for TreeHacks!

We conducted 300 hours of interviews, learned iteratively using low-fidelity prototypes, to discover,

i) There was no “check engine” light that went off warning individuals to “re-balance”

ii) Current wellness services weren’t designed for individuals working 80+ hour weeks

iii) Employers will pay a premium to prevent burnout

And Code Coral was born.

## What it does

Our platform helps highly-trained individuals and teams working in stressful environments proactively manage their burnout. The platform captures your phones’ digital phenotype to monitor the key predictors of burnout using machine learning. With timely, bite-sized reminders we reinforce individuals’ atomic wellness habits and provide personalized services from laundry to life-coaching.

Check out more information about our project goals: <https://youtu.be/zjV3KeNv-ok>

## How we built it

We built the backend using a combination of API's to Fitbit/Googlemaps/Apple Health/Beiwe; Built a machine learning algorithm and relied on an App Builder for the front end.

## Challenges we ran into

API's not working the way we want. Collecting and aggregating "tagged" data for our machine learning algorithm. Trying to figure out which features are the most relevant!

## Accomplishments that we're proud of

We had figured out a unique solution to addressing burnout but hadn't written any lines of code yet! We are really proud to have gotten this project off the ground!

i) Setting up a system to collect digital phenotyping features from a smart phone ii) Building machine learning experiments to hypothesis test going from our digital phenotype to metrics of burnout iii) We figured out how to detect anomalies using an individual's baseline data on driving, walking and time at home using the Microsoft Azure platform iv) Build a working front end with actual data!

Note - login information to codecoral.net: username - test password - testtest

## What we learned

We are learning how to set up AWS, a functioning back end, building supervised learning models, integrating data from many source to give new insights. We also flexed our web development skills.

## What's next for Coral Board

We would like to connect the backend data and validating our platform with real data! | ## Inspiration

Our hack was inspired by CANSOFCOM's "Help Build a Better Quantum Computer Using Orqestra-quantum library". Our team was interested in exploring quantum computing so it was a natural step to choose this challenge.

## What it does

We implemented a Zapata QuantumBackend called PauliSandwichBackend to perform Pauli sandwiching on a given gate in a circuit. This decreases noise in near-term quantum computers and returns a new circuit with this decreased noise. It will then run this new circuit with a given backend.

## How we built it

Using python we built upon the Zapata QuantumBackend API. Using advanced math and quantum theory to build an algorithms dedicated to lessening the noise and removing error from quantum computing. We implanted a new error migration technique with Pauli Sandwiching

## Challenges we ran into

We found it challenging to find clear documentation on the subject of quantum computing. Between the new API and for the most of us a first time experience with quantum theory we had to delicate a large chunk of our time on research and trial and error.

## Accomplishments that we're proud of

We are extremely proud of the fact we were able to get so far into a very niche section of computer science. While we did not have much experience we have jump a new experience that a very small group of people actually get work with.

## What we learned

We learned much about facing unfamiliar ground. While we have a strong background in code and math we did run into many challenge trying to understand quantum physics. Not only did this open a new software to us it was a great experience to be put back into the unknown with logic we were unfamiliar with it

## What's next for Better Quantum Computer

We hope to push forward our new knowledge on quantum computers to develop not only this algorithm, but many to come as quantum computing is such an unstable and untap resource at this time | ## Inspiration

So many people around the world, including those dear to us, suffer from mental health issues such as depression. Here in Berkeley, for example, the resources put aside to combat these problems are constrained. Journaling is one method commonly employed to fight mental issues; it evokes mindfulness and provides greater sense of confidence and self-identity.

## What it does

SmartJournal is a place for people to write entries into an online journal. These entries are then routed to and monitored by a therapist, who can see the journals of multiple people under their care. The entries are analyzed via Natural Language Processing and data analytics to give the therapist better information with which they can help their patient, such as an evolving sentiment and scans for problematic language. The therapist in turn monitors these journals with the help of these statistics and can give feedback to their patients.

## How we built it

We built the web application using the Flask web framework, with Firebase acting as our backend. Additionally, we utilized Microsoft Azure for sentiment analysis and Key Phrase Extraction. We linked everything together using HTML, CSS, and Native Javascript.

## Challenges we ran into

We struggled with vectorizing lots of Tweets to figure out key phrases linked with depression, and it was very hard to test as every time we did so we would have to wait another 40 minutes. However, it ended up working out finally in the end!

## Accomplishments that we're proud of

We managed to navigate through Microsoft Azure and implement Firebase correctly. It was really cool building a live application over the course of this hackathon and we are happy that we were able to tie everything together at the end, even if at times it seemed very difficult

## What we learned

We learned a lot about Natural Language Processing, both naively doing analysis and utilizing other resources. Additionally, we gained a lot of web development experience from trial and error.

## What's next for SmartJournal

We aim to provide better analysis on the actual journal entires to further aid the therapist in their treatments, and moreover to potentially actually launch the web application as we feel that it could be really useful for a lot of people in our community. | partial |

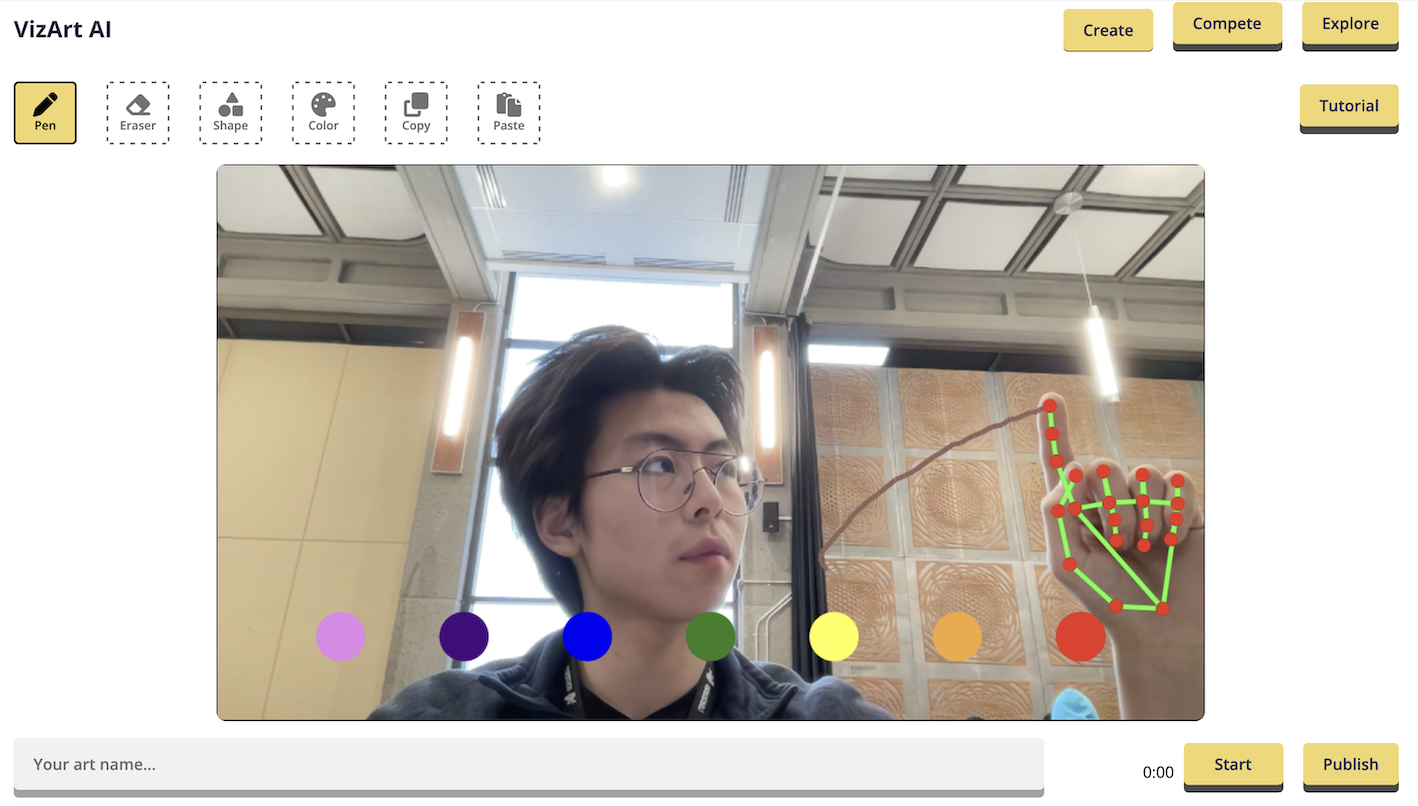

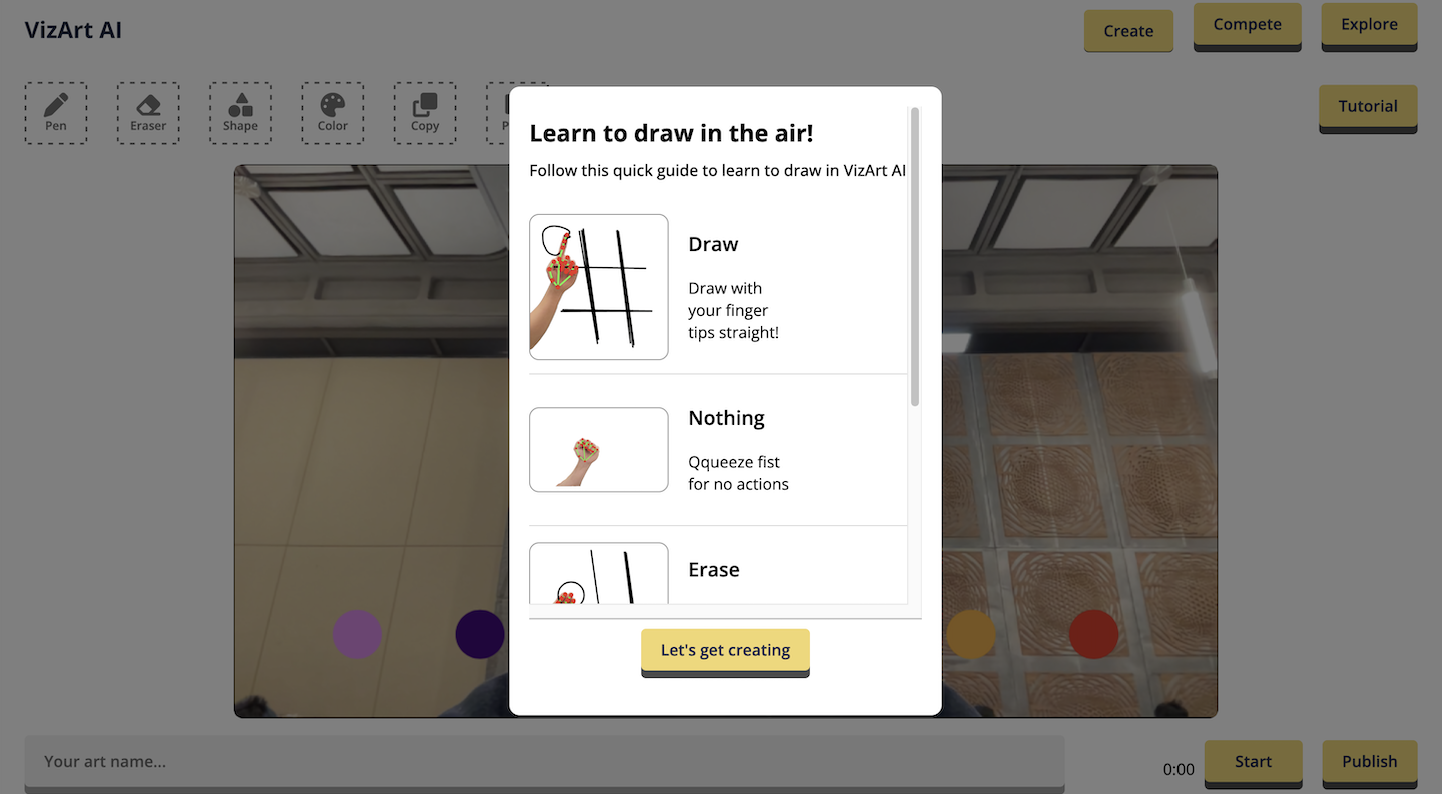

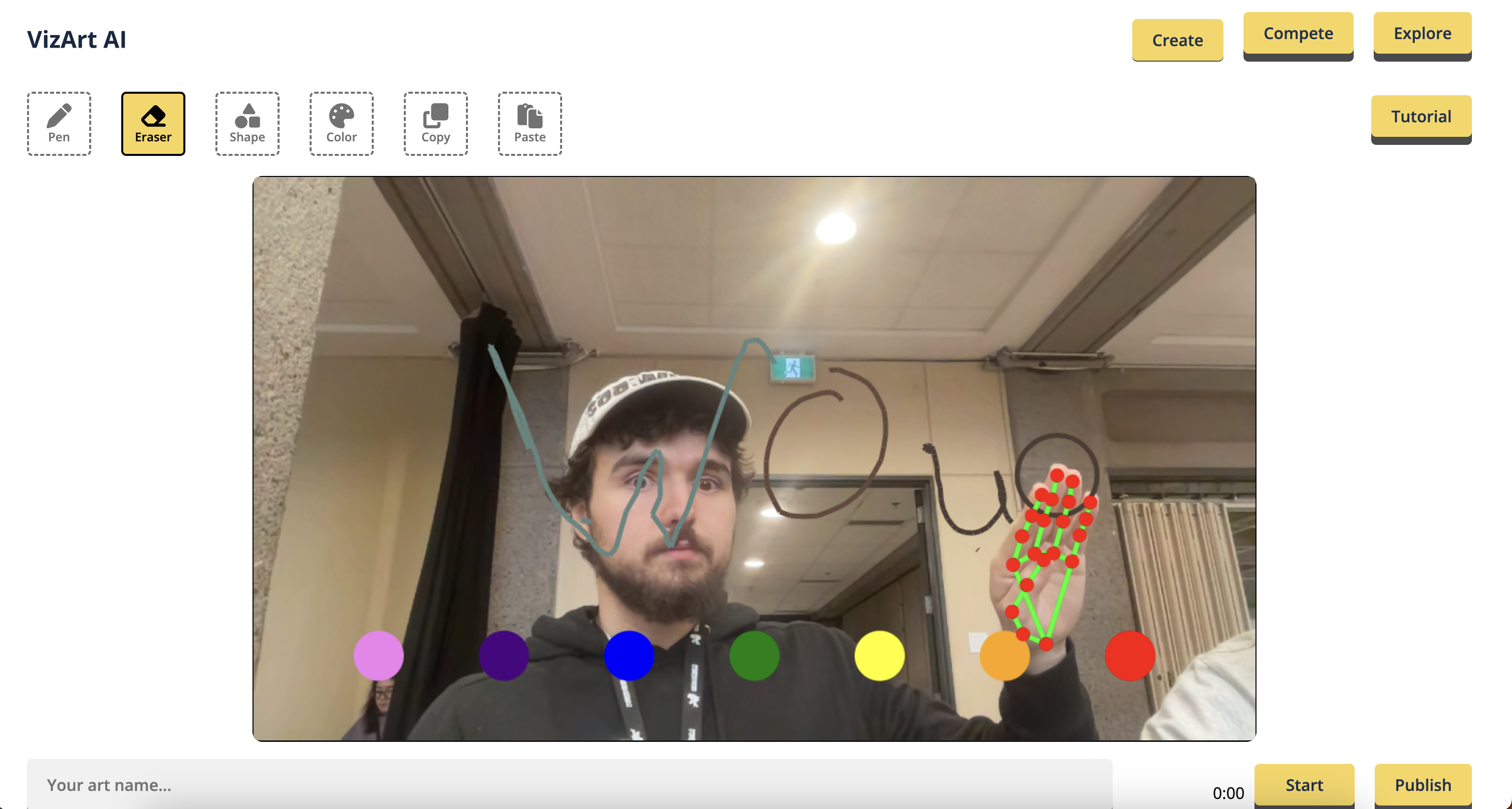

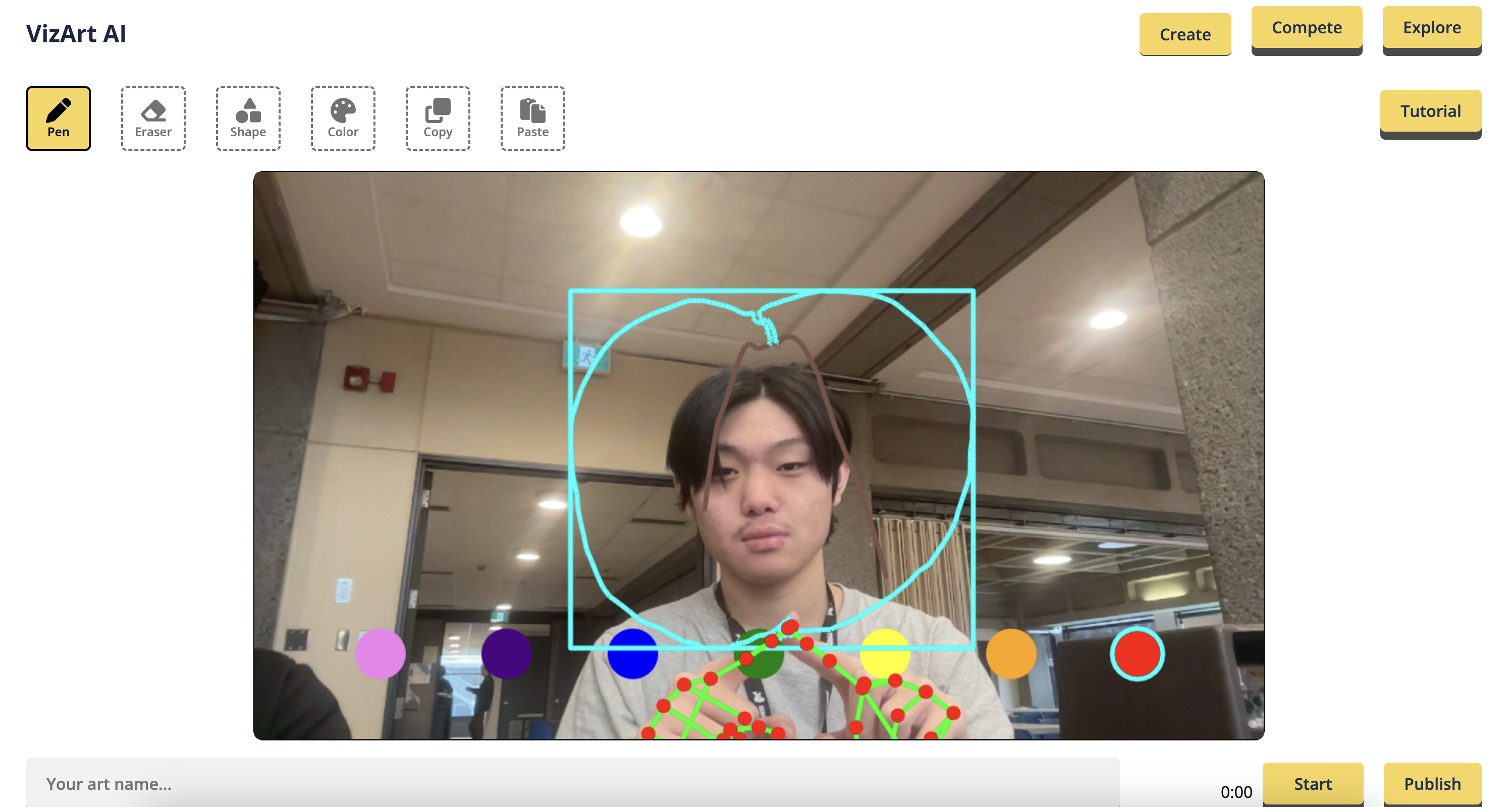

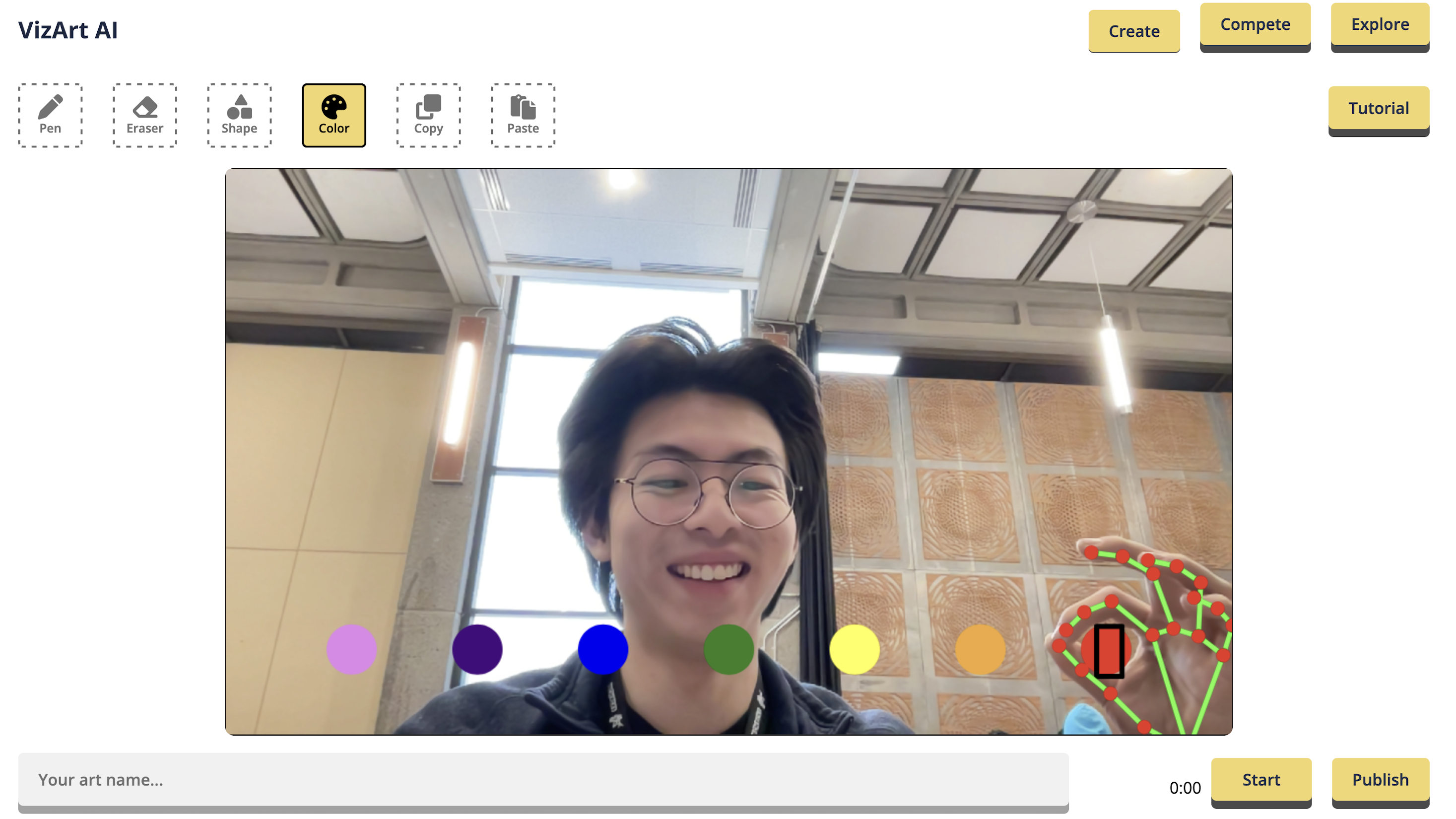

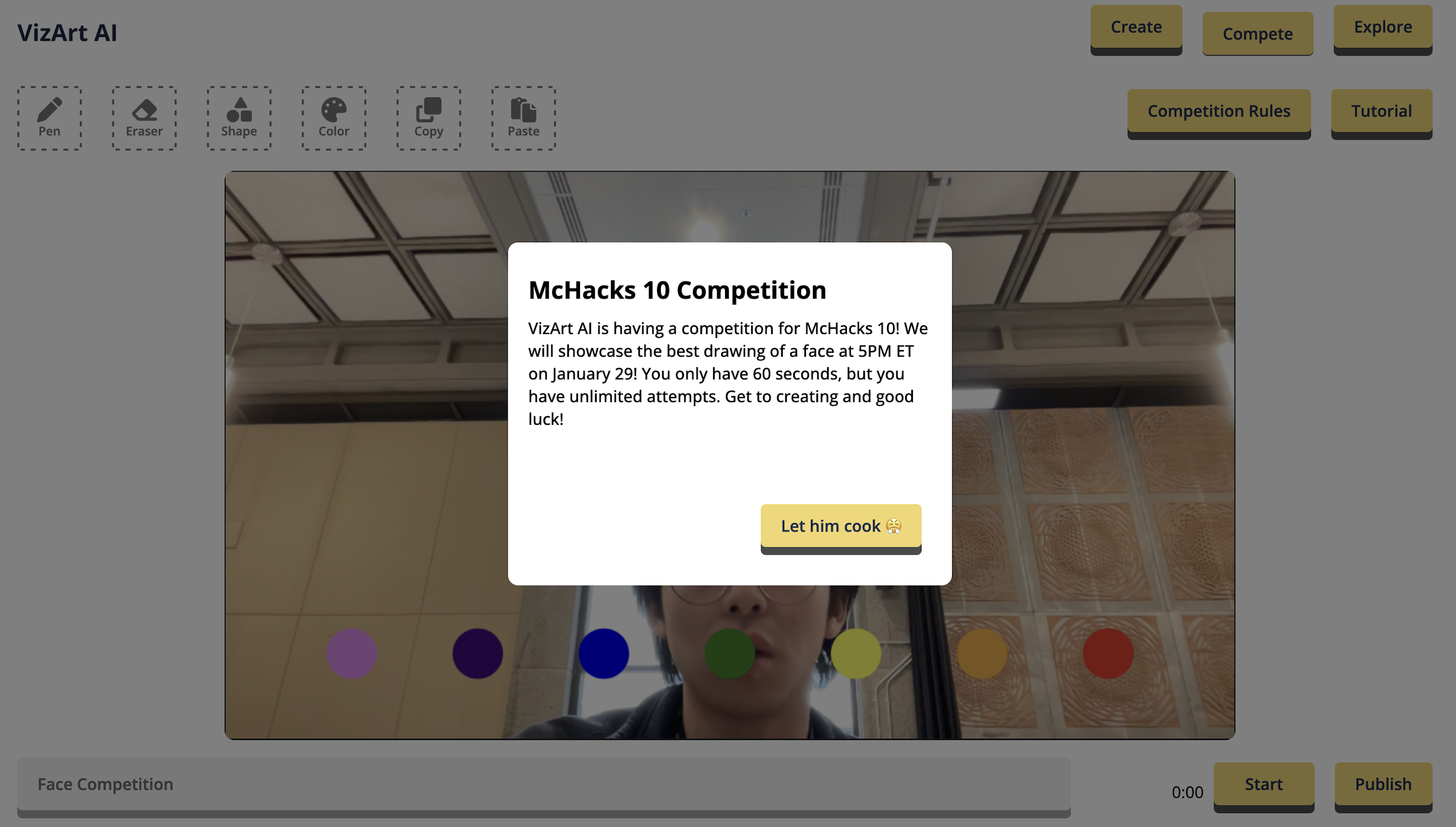

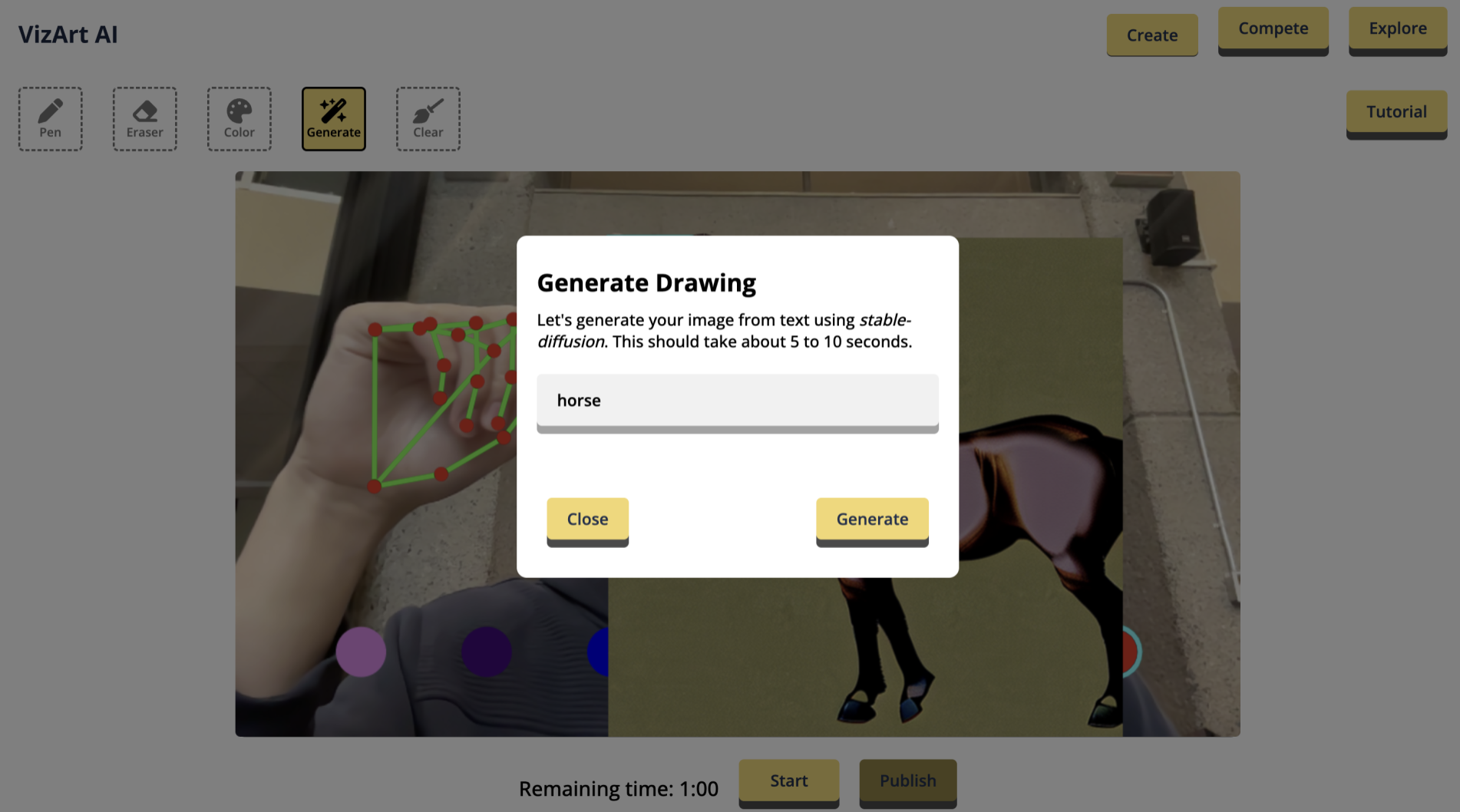

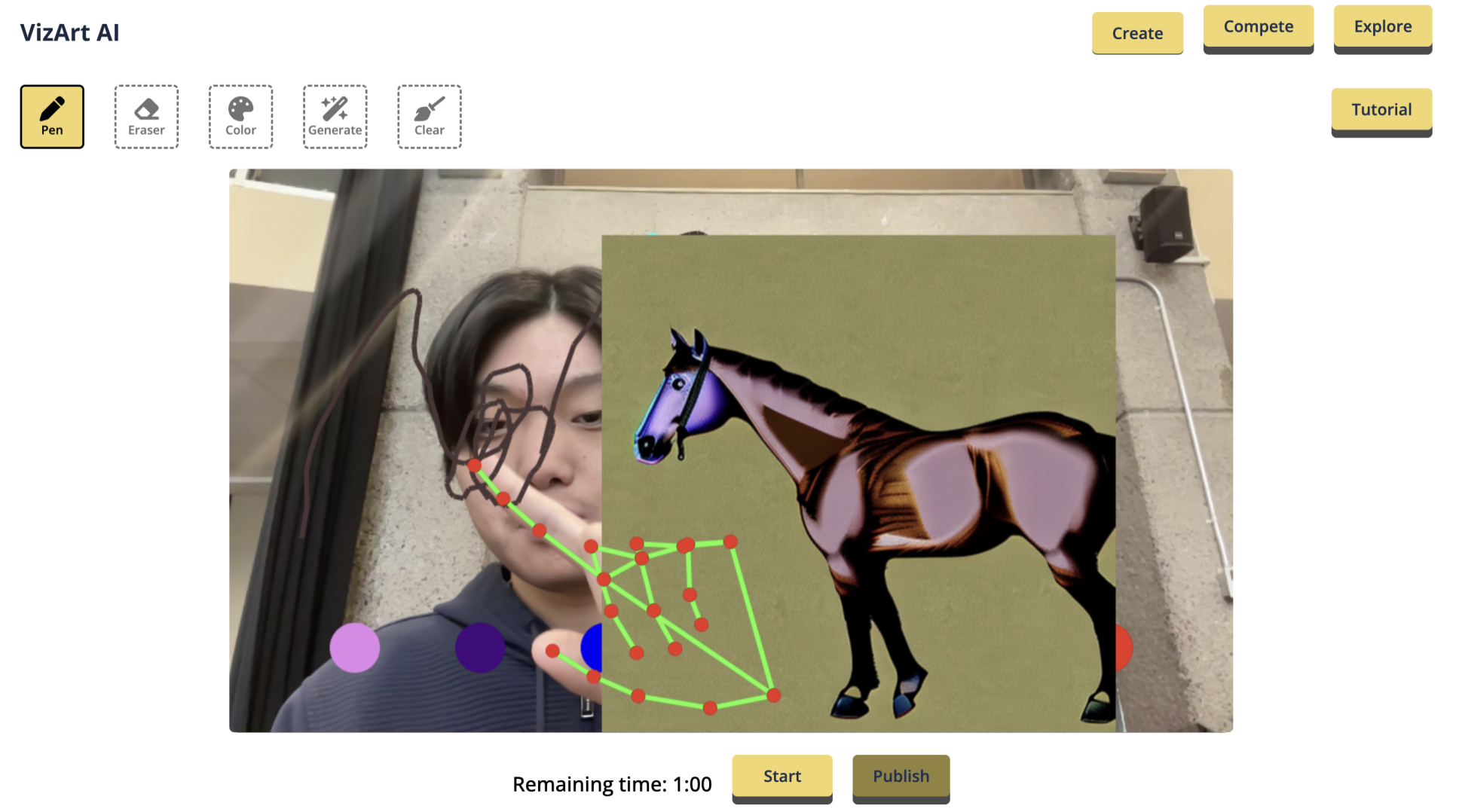

# 🤖🖌️ [VizArt Computer Vision Drawing Platform](https://vizart.tech)

Create and share your artwork with the world using VizArt - a simple yet powerful air drawing platform.

## 💫 Inspiration

>

> "Art is the signature of civilizations." - Beverly Sills

>

>

>

Art is a gateway to creative expression. With [VizArt](https://vizart.tech/create), we are pushing the boundaries of what's possible with computer vision and enabling a new level of artistic expression. ***We envision a world where people can interact with both the physical and digital realms in creative ways.***

We started by pushing the limits of what's possible with customizable deep learning, streaming media, and AR technologies. With VizArt, you can draw in art, interact with the real world digitally, and share your creations with your friends!

>

> "Art is the reflection of life, and life is the reflection of art." - Unknow

>

>

>

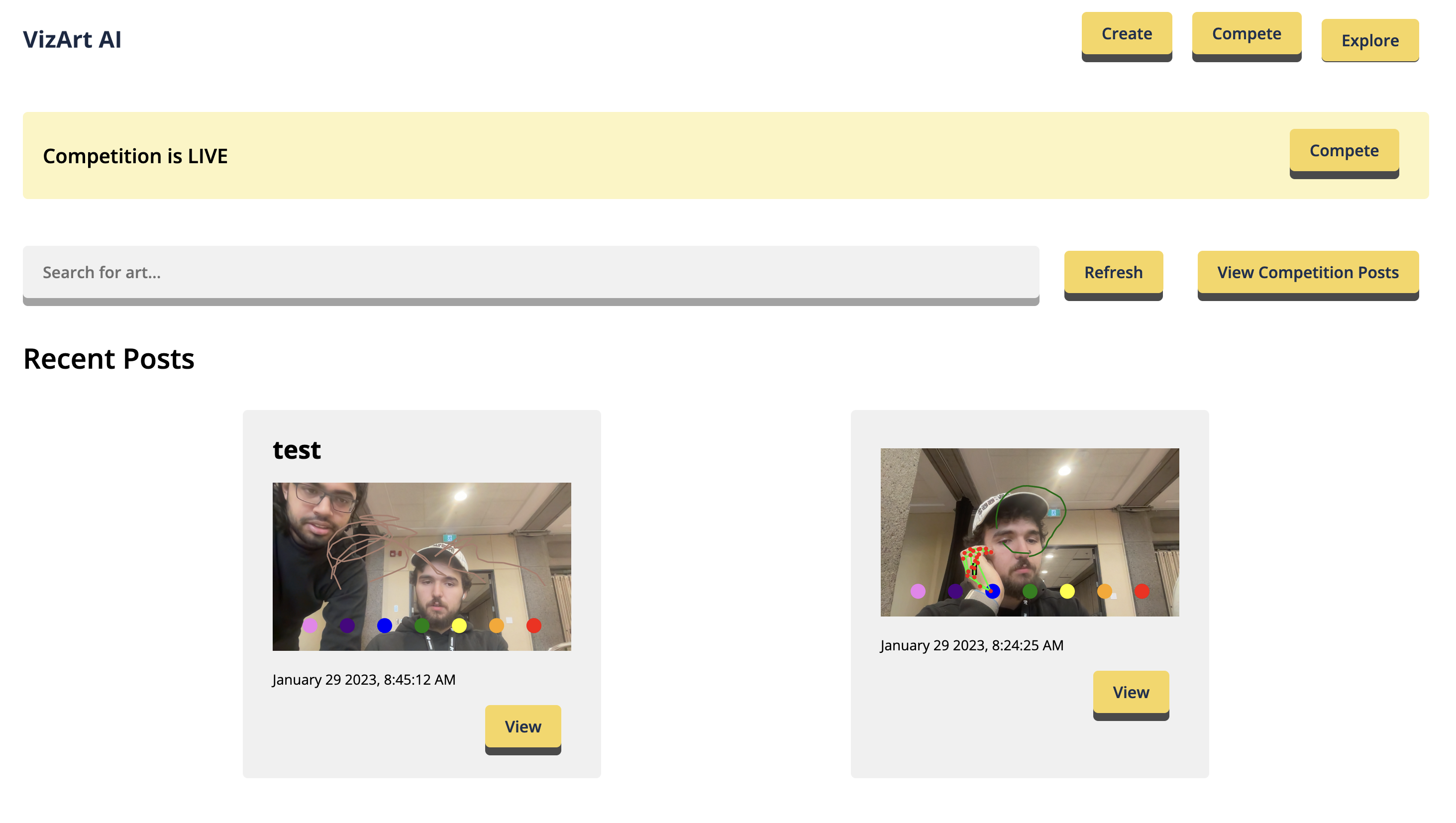

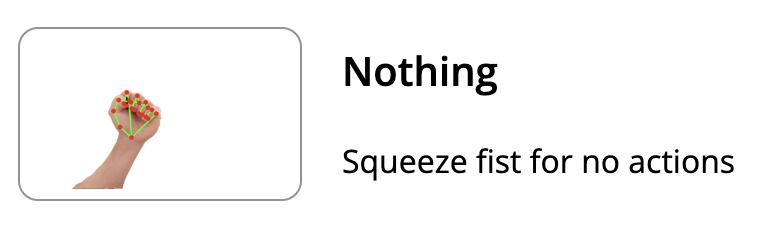

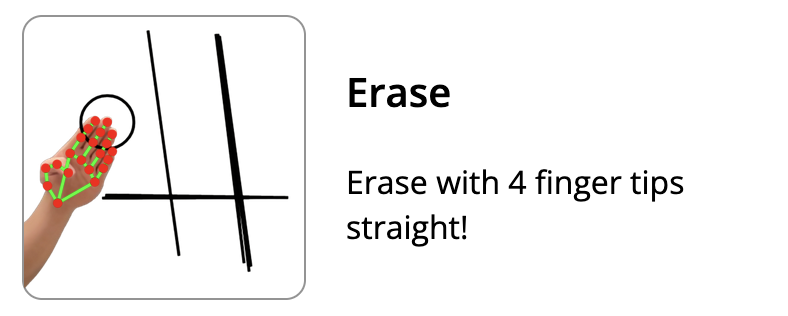

Air writing is made possible with hand gestures, such as a pen gesture to draw and an eraser gesture to erase lines. With VizArt, you can turn your ideas into reality by sketching in the air.

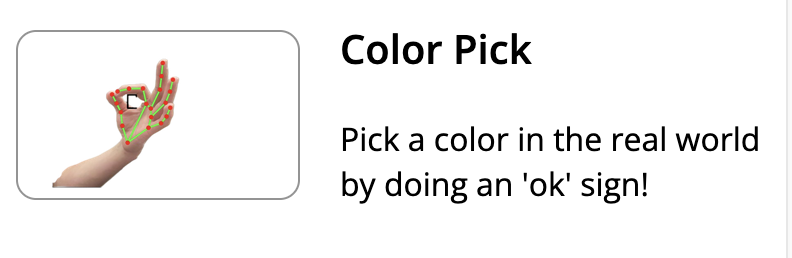

Our computer vision algorithm enables you to interact with the world using a color picker gesture and a snipping tool to manipulate real-world objects.

>

> "Art is not what you see, but what you make others see." - Claude Monet

>

>

>

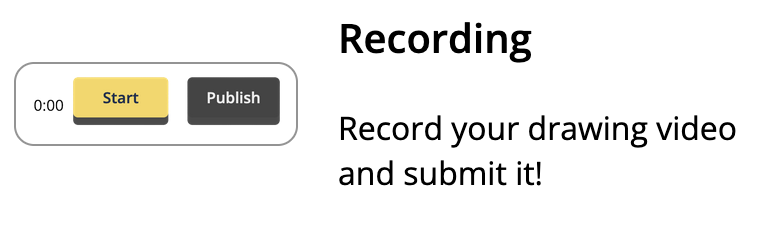

The features I listed above are great! But what's the point of creating something if you can't share it with the world? That's why we've built a platform for you to showcase your art. You'll be able to record and share your drawings with friends.

I hope you will enjoy using VizArt and share it with your friends. Remember: Make good gifts, Make good art.

# ❤️ Use Cases

### Drawing Competition/Game

VizArt can be used to host a fun and interactive drawing competition or game. Players can challenge each other to create the best masterpiece, using the computer vision features such as the color picker and eraser.

### Whiteboard Replacement

VizArt is a great alternative to traditional whiteboards. It can be used in classrooms and offices to present ideas, collaborate with others, and make annotations. Its computer vision features make drawing and erasing easier.

### People with Disabilities

VizArt enables people with disabilities to express their creativity. Its computer vision capabilities facilitate drawing, erasing, and annotating without the need for physical tools or contact.

### Strategy Games

VizArt can be used to create and play strategy games with friends. Players can draw their own boards and pieces, and then use the computer vision features to move them around the board. This allows for a more interactive and engaging experience than traditional board games.

### Remote Collaboration

With VizArt, teams can collaborate remotely and in real-time. The platform is equipped with features such as the color picker, eraser, and snipping tool, making it easy to interact with the environment. It also has a sharing platform where users can record and share their drawings with anyone. This makes VizArt a great tool for remote collaboration and creativity.

# 👋 Gestures Tutorial

# ⚒️ Engineering

Ah, this is where even more fun begins!

## Stack

### Frontend

We designed the frontend with Figma and after a few iterations, we had an initial design to begin working with. The frontend was made with React and Typescript and styled with Sass.

### Backend

We wrote the backend in Flask. To implement uploading videos along with their thumbnails we simply use a filesystem database.

## Computer Vision AI

We use MediaPipe to grab the coordinates of the joints and upload images. WIth the coordinates, we plot with CanvasRenderingContext2D on the canvas, where we use algorithms and vector calculations to determinate the gesture. Then, for image generation, we use the DeepAI open source library.

# Experimentation

We were using generative AI to generate images, however we ran out of time.

# 👨💻 Team (”The Sprint Team”)

@Sheheryar Pavaz

@Anton Otaner

@Jingxiang Mo

@Tommy He | ## Inspiration

Ever wish you didn’t need to purchase a stylus to handwrite your digital notes? Each person at some point hasn’t had the free hands to touch their keyboard. Whether you are a student learning to type or a parent juggling many tasks, sometimes a keyboard and stylus are not accessible. We believe the future of technology won’t even need to touch anything in order to take notes. HoverTouch utilizes touchless drawings and converts your (finger)written notes to typed text! We also have a text to speech function that is Google adjacent.

## What it does

Using your index finger as a touchless stylus, you can write new words and undo previous strokes, similar to features on popular note-taking apps like Goodnotes and OneNote. As a result, users can eat a slice of pizza or hold another device in hand while achieving their goal. HoverTouch tackles efficiency, convenience, and retention all in one.

## How we built it

Our pre-trained model from media pipe works in tandem with an Arduino nano, flex sensors, and resistors to track your index finger’s drawings. Once complete, you can tap your pinky to your thumb and HoverTouch captures a screenshot of your notes as a JPG. Afterward, the JPG undergoes a masking process where it is converted to a black and white picture. The blue ink (from the user’s pen strokes) becomes black and all other components of the screenshot such as the background become white. With our game-changing Google Cloud Vision API, custom ML model, and vertex AI vision, it reads the API and converts your text to be displayed on our web browser application.

## Challenges we ran into

Given that this was our first hackathon, we had to make many decisions regarding feasibility of our ideas and researching ways to implement them. In addition, this entire event has been an ongoing learning process where we have felt so many emotions — confusion, frustration, and excitement. This truly tested our grit but we persevered by uplifting one another’s spirits, recognizing our strengths, and helping each other out wherever we could.

One challenge we faced was importing the Google Cloud Vision API. For example, we learned that we were misusing the terminal and our disorganized downloads made it difficult to integrate the software with our backend components. Secondly, while developing the hand tracking system, we struggled with producing functional Python lists. We wanted to make line strokes when the index finger traced thin air, but we eventually transitioned to using dots instead to achieve the same outcome.

## Accomplishments that we're proud of

Ultimately, we are proud to have a working prototype that combines high-level knowledge and a solution with significance to the real world. Imagine how many students, parents, friends, in settings like your home, classroom, and workplace could benefit from HoverTouch's hands free writing technology.

This was the first hackathon for ¾ of our team, so we are thrilled to have undergone a time-bounded competition and all the stages of software development (ideation, designing, prototyping, etc.) toward a final product. We worked with many cutting-edge softwares and hardwares despite having zero experience before the hackathon.

In terms of technicals, we were able to develop varying thickness of the pen strokes based on the pressure of the index finger. This means you could write in a calligraphy style and it would be translated from image to text in the same manner.

## What we learned

This past weekend we learned that our **collaborative** efforts led to the best outcomes as our teamwork motivated us to preserve even in the face of adversity. Our continued **curiosity** led to novel ideas and encouraged new ways of thinking given our vastly different skill sets.

## What's next for HoverTouch

In the short term, we would like to develop shape recognition. This is similar to Goodnotes feature where a hand-drawn square or circle automatically corrects to perfection.

In the long term, we want to integrate our software into web-conferencing applications like Zoom. We initially tried to do this using WebRTC, something we were unfamiliar with, but the Zoom SDK had many complexities that were beyond our scope of knowledge and exceeded the amount of time we could spend on this stage.

### [HoverTouch Website](hoverpoggers.tech) | ## Inspiration

## What it does

You can point your phone's camera at a checkers board and it will show you all of the legal moves and mark the best one.

## How we built it

We used Android studio to develop an Android app that streams camera captures to a python server that handles

## Challenges we ran into

Detection of the orientation of the checkers board and the location of the pieces.

## Accomplishments that we're proud of

We used markers to provide us easy to detect reference points which we used to infer the orientation of the board.

## What we learned

* Android Camera API

* Computer Vision never works as robust as you think it will.

## What's next for Augmented Checkers

* Better graphics and UI

* Other games | winning |

# SlideDown

## Inspiration

Recently, we had to make a set of workshops for one of our organizations at school. We created all of the curriculum in Markdown, so that it could be reviewed and changed easily, but once we were done, we had 10+ Markdown files to convert into actual slidedecks. That was our inspiration for SlideDown: a way to save time and effort when making slides.

## What it does

SlideDown is a command-line tool that takes in a Markdown file, parses through the content, and makes a Google Slides presentation that maintains the same content and formatting.

## How we built it

SlideDown was made with a Python script that uses the Google Slides API to create slides and content. It's pure Python3 :).

## Challenges we ran into

One of the biggest hurdles was figuring out how the Google Slides API worked. There is documentation available, but some of it can be confusing, especially for the Python implementation.

## Accomplishments that we're proud of

Getting the tool to actually make a presentation!

## What's next for SlideDown

Implement more tag support from Markdown, improve looks, actually get it working :^). | ## Inspiration

Having to prepare presentations is already dreadful enough. But also having to prepare slides to accompany it? So much worse. As students, we could not be any more familiar with this situation. While our speeches for English class may be a valid indicator of our English skills, the slides we make are not. The time we spend making these slides is better allocated elsewhere, and this is where Script2Slides comes in.

## What it does

This productivity tool we created automatically generates a visually appealing slideshow containing key information and relevant images. All you need to do is copy and paste your script into the textbox on our website, and your brand-new slideshow will be downloaded for you to use!

## How we built it

Script2Slides was created with HTML and styled with CSS. The script is summarized into bullet points in GPT-3.5 using the OpenAI API, and an image description is generated. We then use this description to find relevant images for the slides using Google Image Search API. With both bullet points and images, we create a PowerPoint file downloaded for the user.

## Challenges we ran into

We decided on the idea quite late — around 11:30 a.m. on Saturday! This meant we only had a day to work on the project. A few more challenges we ran into included crafting a prompt that worked well with GPT and downloading the slideshow as a PowerPoint since the formatting would have problems.

## Accomplishments that we're proud of

We’re proud of creating our first hack that successfully implements AI. But beyond that, we’re simply happy to solve an issue the whole team can relate to.

## What we learned

Since this is our first time implementing AI, we learned how to use OpenAI API, and more specifically, Victor learned how to use Flask.

## What's next for Script2Slides

In the future, we wish to run Script2Slides in a way that is more personalized. It would be nice to make things more customizable; for instance, the software can detect the theme of a presentation, i.e. formal, business, technology, etc. and then design the slides accordingly. We’d also like to allow the user to download the slides in formats other than PowerPoint. | ## Inspiration

A brief recap of the inspiration for Presentalk 1.0: We wanted to make it easier to navigate presentations. Handheld clickers are useful for going to the next and last slide, but they are unable to skip to specific slides in the presentation. Also, we wanted to make it easier to pull up additional information like maps, charts, and pictures during a presentation without breaking the visual continuity of the presentation. To do that, we added the ability to search for and pull up images using voice commands, without leaving the presentation.

Last year, we finished our prototype, but it was a very hacky and unclean implementation of Presentalk. After the positive feedback we heard after the event, despite our code's problems, we resolved to come back this year to make the product something we could actually host online and let everyone use.

## What it does

Presentalk solves this problem with voice commands that allow you to move forward and back, skip to specific slides and keywords, and go to specific images in your presentation using image recognition. Presentalk recognizes voice commands, including:

* Next Slide

+ Goes to the next slide

* Last Slide

+ Goes to the previous slide

* Go to Slide 3

+ Goes to the 3rd slide

* Go to the slide with the dog