datasetId

stringlengths 5

121

| author

stringlengths 2

42

| last_modified

unknown | downloads

int64 0

4.73M

| likes

int64 0

7.59k

| tags

sequencelengths 1

7.92k

| task_categories

sequencelengths 0

47

⌀ | createdAt

unknown | card

stringlengths 15

1.02M

|

|---|---|---|---|---|---|---|---|---|

tuandunghcmut/sp_bilingual_ds | tuandunghcmut | "2024-09-04T09:59:04Z" | 0 | 0 | [

"size_categories:1M<n<10M",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2024-09-04T08:33:31Z" | ---

dataset_info:

features:

- name: image_name

dtype: string

- name: person_id

dtype: int64

- name: caption_0

dtype: string

- name: caption_1

dtype: string

- name: attributes

dtype: string

- name: prompt_caption

dtype: string

- name: image

dtype: image

- name: viet_captions

sequence: string

- name: viet_prompt_caption

sequence: string

splits:

- name: train

num_bytes: 54940531595.615

num_examples: 4791127

download_size: 51005008832

dataset_size: 54940531595.615

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

QuanHoangNgoc/EVJ_NonCluster | QuanHoangNgoc | "2024-10-22T04:18:28Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2024-10-22T04:12:29Z" | ---

dataset_info:

features:

- name: answer

dtype: string

- name: image

dtype: image

- name: prompt

dtype: string

splits:

- name: train

num_bytes: 1101443348.656

num_examples: 7748

- name: test

num_bytes: 89786903.0

num_examples: 567

download_size: 3546373791

dataset_size: 1191230251.656

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

QuanHoangNgoc/EVJ_Cluster | QuanHoangNgoc | "2024-10-22T13:55:58Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2024-10-22T13:55:09Z" | ---

dataset_info:

features:

- name: answer

dtype: string

- name: cluster

dtype: int32

- name: image

dtype: image

- name: prompt

dtype: string

splits:

- name: train

num_bytes: 965568274.42

num_examples: 5820

- name: test

num_bytes: 358795481.815

num_examples: 2495

download_size: 1239772291

dataset_size: 1324363756.235

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

QuanHoangNgoc/EVJ_Cluster-Normal | QuanHoangNgoc | "2024-10-23T09:19:34Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2024-10-23T09:18:39Z" | ---

dataset_info:

features:

- name: answer

dtype: string

- name: cluster

dtype: int32

- name: image

dtype: image

- name: prompt

dtype: string

splits:

- name: li_train

num_bytes: 900073595.5

num_examples: 5820

- name: li_test

num_bytes: 354791631.125

num_examples: 2495

download_size: 1239073417

dataset_size: 1254865226.625

configs:

- config_name: default

data_files:

- split: li_train

path: data/li_train-*

- split: li_test

path: data/li_test-*

---

|

ayyuce/drugs | ayyuce | "2024-11-06T23:31:49Z" | 0 | 0 | [

"license:mit",

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2024-11-06T23:30:48Z" | ---

license: mit

---

|

QuanHoangNgoc/EVJ_Cluster-Expanded | QuanHoangNgoc | "2024-11-13T14:19:21Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2024-11-13T14:16:49Z" | ---

dataset_info:

features:

- name: answer

dtype: string

- name: cluster

dtype: int32

- name: image

dtype: image

- name: prompt

dtype: string

- name: description

dtype: string

splits:

- name: li_train

num_bytes: 901224571.5

num_examples: 5820

- name: li_test

num_bytes: 355286984.125

num_examples: 2495

download_size: 1239503465

dataset_size: 1256511555.625

configs:

- config_name: default

data_files:

- split: li_train

path: data/li_train-*

- split: li_test

path: data/li_test-*

---

|

shroom-semeval25/hallucinated_answer_generated_dataset_cleaned | shroom-semeval25 | "2024-11-13T22:39:51Z" | 0 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2024-11-13T22:34:17Z" | ---

dataset_info:

features:

- name: id

dtype: string

- name: title

dtype: string

- name: context

dtype: string

- name: question

dtype: string

- name: answers

struct:

- name: answer_start

sequence: int32

- name: text

sequence: string

- name: correct_answer_generated

dtype: string

- name: hallucinated_answer_generated

dtype: string

splits:

- name: train

num_bytes: 397086774

num_examples: 373848

- name: validation

num_bytes: 49601442

num_examples: 46731

- name: test

num_bytes: 49545525

num_examples: 46732

download_size: 319248685

dataset_size: 496233741

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: validation

path: data/validation-*

- split: test

path: data/test-*

---

|

QuanHoangNgoc/MS_TestSet_5k | QuanHoangNgoc | "2024-12-27T06:56:58Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2024-12-27T06:55:30Z" | ---

dataset_info:

features:

- name: image

dtype: image

- name: caption

sequence: string

splits:

- name: test

num_bytes: 2417550920.0

num_examples: 5000

download_size: 2417029684

dataset_size: 2417550920.0

configs:

- config_name: default

data_files:

- split: test

path: data/test-*

---

|

kevin017/tokenized_bioS_QA_b_city_large | kevin017 | "2025-02-28T07:22:57Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-01-09T20:17:56Z" | ---

dataset_info:

features:

- name: input_ids

sequence: int32

- name: attention_mask

sequence: int8

splits:

- name: train

num_bytes: 87638953

num_examples: 34061

- name: test

num_bytes: 87641526

num_examples: 34062

download_size: 12501009

dataset_size: 175280479

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

kevin017/tokenized_bioS_QA_b_date_large | kevin017 | "2025-02-28T07:23:07Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-01-09T20:18:05Z" | ---

dataset_info:

features:

- name: input_ids

sequence: int32

- name: attention_mask

sequence: int8

splits:

- name: train

num_bytes: 87690413

num_examples: 34081

- name: test

num_bytes: 87664683

num_examples: 34071

download_size: 13740130

dataset_size: 175355096

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

kevin017/tokenized_bioS_QA_c_city_large | kevin017 | "2025-02-28T07:23:17Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-01-09T20:18:15Z" | ---

dataset_info:

features:

- name: input_ids

sequence: int32

- name: attention_mask

sequence: int8

splits:

- name: train

num_bytes: 87170667

num_examples: 33879

- name: test

num_bytes: 87165521

num_examples: 33877

download_size: 10015228

dataset_size: 174336188

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

kevin017/tokenized_bioS_QA_c_name_large | kevin017 | "2025-02-28T07:23:28Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-01-09T20:18:22Z" | ---

dataset_info:

features:

- name: input_ids

sequence: int32

- name: attention_mask

sequence: int8

splits:

- name: train

num_bytes: 87232419

num_examples: 33903

- name: test

num_bytes: 87214408

num_examples: 33896

download_size: 12468376

dataset_size: 174446827

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

kevin017/tokenized_bioS_QA_univ_large | kevin017 | "2025-02-28T07:23:49Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-01-09T20:18:39Z" | ---

dataset_info:

features:

- name: input_ids

sequence: int32

- name: attention_mask

sequence: int8

splits:

- name: train

num_bytes: 87353350

num_examples: 33950

- name: test

num_bytes: 87343058

num_examples: 33946

download_size: 13016656

dataset_size: 174696408

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

QuanHoangNgoc/MS-Flickr30k | QuanHoangNgoc | "2025-01-19T03:36:29Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-01-19T03:31:54Z" | ---

dataset_info:

features:

- name: image

dtype: image

- name: caption

dtype: string

splits:

- name: test

num_bytes: 6740690168.25

num_examples: 36014

download_size: 6721561298

dataset_size: 6740690168.25

configs:

- config_name: default

data_files:

- split: test

path: data/test-*

---

|

roomtour3d/Self-Critic-Hallucination_withGT | roomtour3d | "2025-02-25T07:09:15Z" | 0 | 0 | [

"license:mit",

"size_categories:10K<n<100K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-02-25T06:51:38Z" | ---

license: mit

dataset_info:

features:

- name: ds_name

dtype: string

- name: image

dtype: image

- name: question

dtype: string

- name: chosen

dtype: string

- name: rejected

dtype: string

- name: origin_dataset

dtype: string

- name: origin_split

dtype: string

- name: idx

dtype: string

- name: image_path

dtype: string

- name: gt

list:

- name: answer

dtype: string

- name: answer_id

dtype: int64

splits:

- name: train

num_bytes: 4414647639.4

num_examples: 28696

download_size: 4391984529

dataset_size: 4414647639.4

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

inkoziev/ArsPoetica | inkoziev | "2025-03-01T04:38:37Z" | 0 | 1 | [

"task_categories:text-generation",

"language:ru",

"license:cc-by-4.0",

"size_categories:1K<n<10K",

"format:json",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"poetry"

] | [

"text-generation"

] | "2025-02-27T16:28:19Z" | ---

license: cc-by-4.0

task_categories:

- text-generation

language:

- ru

tags:

- poetry

pretty_name: Ars Poetica

size_categories:

- 1K<n<10K

---

# Ars Poetica

The **Ars Poetica** dataset is a collection of Russian-language poetry from the 19th and 20th centuries, annotated with stress marks. This dataset is designed to support research in generative poetry, computational linguistics, and related fields.

Stress marks were automatically assigned using the [RussianPoetryScansionTool](https://github.com/Koziev/RussianPoetryScansionTool) library. While the dataset has undergone selective manual validation, users should be aware of potential inaccuracies due to the automated process.

## Example

```

За́йку бро́сила хозя́йка —

Под дождё́м оста́лся за́йка.

Со скаме́йки сле́зть не мо́г,

Ве́сь до ни́точки промо́к.

```

## Citing

If you use this dataset in your research or projects, please cite it as follows:

```bibtex

@misc{Conversations,

author = {Ilya Koziev},

title = {Ars Poetica Dataset},

year = {2025},

publisher = {Hugging Face},

howpublished = {\url{https://huggingface.co/datasets/inkoziev/ArsPoetica}},

}

```

## License

This dataset is licensed under the [CC-BY-NC-4.0](https://creativecommons.org/licenses/by-nc/4.0/) license, which permits non-commercial use

only. For commercial use, please contact the author at [inkoziev@gmail.com].

By using this dataset, you agree to:

- Provide proper attribution to the author.

- Refrain from using the dataset for commercial purposes without explicit permission.

## Other resources

If you are interested in stress placement and homograph resolution, check out our [Homograph Resolution Evaluation Dataset](https://huggingface.co/datasets/inkoziev/HomographResolutionEval) and [Rifma](https://github.com/Koziev/Rifma) datasets.

## Limitations

- **Automated Processing**: The dataset was generated through automated methods with only selective manual validation. As a result, some poems may contain misspellings, typos, or other imperfections.

- **Limited Scope**: The dataset does not encompass the full range of Russian poetic works. Many genres, forms, and longer compositions are excluded, making it unsuitable as a comprehensive anthology of Russian poetry. |

caitwong/balanced_translation_dataset4 | caitwong | "2025-03-01T17:49:51Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-02-28T10:42:27Z" | ---

dataset_info:

- config_name: batch_1

features:

- name: None

dtype: string

- name: en

dtype: string

- name: vi

dtype: string

- name: source_file

dtype: string

- name: target_lang

dtype: string

- name: idx

dtype: int64

- name: th

dtype: string

- name: conversation_id

dtype: string

- name: category

dtype: string

- name: zh

dtype: string

- name: hi

dtype: string

- name: ms

dtype: string

splits:

- name: train

num_bytes: 2470346

num_examples: 5654

download_size: 1201569

dataset_size: 2470346

- config_name: batch_2

features:

- name: en

dtype: string

- name: tl

dtype: string

- name: source_file

dtype: string

- name: target_lang

dtype: string

- name: idx

dtype: int64

- name: conversation_id

dtype: string

- name: category

dtype: string

- name: zh

dtype: string

- name: id

dtype: string

splits:

- name: train

num_bytes: 2192989

num_examples: 3222

download_size: 1213459

dataset_size: 2192989

- config_name: batch_3

features:

- name: en

dtype: string

- name: id

dtype: string

- name: source_file

dtype: string

- name: target_lang

dtype: string

- name: idx

dtype: int64

splits:

- name: train

num_bytes: 1254295

num_examples: 5072

download_size: 803079

dataset_size: 1254295

- config_name: batch_4

features:

- name: None

dtype: string

- name: en

dtype: string

- name: vi

dtype: string

- name: source_file

dtype: string

- name: target_lang

dtype: string

- name: idx

dtype: int64

- name: conversation_id

dtype: string

- name: category

dtype: string

- name: zh

dtype: string

- name: th

dtype: string

splits:

- name: train

num_bytes: 6529648

num_examples: 8992

download_size: 3312547

dataset_size: 6529648

- config_name: batch_5

features:

- name: tl

dtype: string

- name: en

dtype: string

- name: source_file

dtype: string

- name: target_lang

dtype: string

- name: idx

dtype: int64

splits:

- name: train

num_bytes: 2627409

num_examples: 3137

download_size: 1475207

dataset_size: 2627409

configs:

- config_name: batch_1

data_files:

- split: train

path: batch_1/train-*

- config_name: batch_2

data_files:

- split: train

path: batch_2/train-*

- config_name: batch_3

data_files:

- split: train

path: batch_3/train-*

- config_name: batch_4

data_files:

- split: train

path: batch_4/train-*

- config_name: batch_5

data_files:

- split: train

path: batch_5/train-*

---

|

simwit/medmoe-vqa-rad | simwit | "2025-03-01T07:52:46Z" | 0 | 0 | [

"license:apache-2.0",

"size_categories:n<1K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-02-28T10:50:55Z" | ---

license: apache-2.0

dataset_info:

features:

- name: image

dtype: image

- name: question

dtype: string

- name: answer

dtype: string

- name: modality

dtype: string

- name: answer_type

dtype: string

splits:

- name: test_all

num_bytes: 23826356.0

num_examples: 451

- name: test_open

num_bytes: 9281911.0

num_examples: 179

- name: test_closed

num_bytes: 14544445.0

num_examples: 272

download_size: 26472530

dataset_size: 47652712.0

configs:

- config_name: default

data_files:

- split: test_all

path: data/test_all-*

- split: test_open

path: data/test_open-*

- split: test_closed

path: data/test_closed-*

---

|

g-ronimo/IN1k256-AR-buckets-latents_dc-ae-f32c32-sana-1.0_ | g-ronimo | "2025-02-28T19:54:06Z" | 0 | 0 | [

"size_categories:100K<n<1M",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-02-28T17:52:21Z" | ---

dataset_info:

features:

- name: label

dtype: string

- name: latent

sequence:

sequence:

sequence:

sequence: float32

splits:

- name: train_AR_4_to_3.part_0

num_bytes: 1144094328

num_examples: 100000

- name: train_AR_4_to_3.part_1

num_bytes: 1144088430

num_examples: 100000

- name: train_AR_3_to_4.part_0

num_bytes: 1169764996

num_examples: 100000

- name: train_AR_4_to_3.part_2

num_bytes: 1144091474

num_examples: 100000

download_size: 1573133511

dataset_size: 4602039228

configs:

- config_name: default

data_files:

- split: train_AR_4_to_3.part_0

path: data/train_AR_4_to_3.part_0-*

- split: train_AR_4_to_3.part_1

path: data/train_AR_4_to_3.part_1-*

- split: train_AR_3_to_4.part_0

path: data/train_AR_3_to_4.part_0-*

- split: train_AR_4_to_3.part_2

path: data/train_AR_4_to_3.part_2-*

---

|

yvngexe/data_generated_by_armo | yvngexe | "2025-03-01T14:18:28Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-02-28T23:26:25Z" | ---

dataset_info:

features:

- name: prompt_id

dtype: string

- name: prompt

dtype: string

- name: response_0

dtype: string

- name: response_1

dtype: string

- name: response_2

dtype: string

- name: response_3

dtype: string

- name: response_4

dtype: string

- name: response_0_reward

dtype: float64

- name: response_1_reward

dtype: float64

- name: response_2_reward

dtype: float64

- name: response_3_reward

dtype: float64

- name: response_4_reward

dtype: float64

splits:

- name: train

num_bytes: 591568112

num_examples: 61814

download_size: 319605004

dataset_size: 591568112

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

ZStack-AI/LongDPO_openqa | ZStack-AI | "2025-03-01T04:45:14Z" | 0 | 0 | [

"license:apache-2.0",

"size_categories:1K<n<10K",

"format:json",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"region:us"

] | null | "2025-03-01T03:41:58Z" | ---

license: apache-2.0

---

|

inkoziev/HomographResolutionEval | inkoziev | "2025-03-01T04:32:49Z" | 0 | 1 | [

"task_categories:text2text-generation",

"language:ru",

"license:cc-by-4.0",

"size_categories:1K<n<10K",

"format:json",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"homograph_resolution",

"accentuation"

] | [

"text2text-generation"

] | "2025-03-01T04:14:23Z" | ---

license: cc-by-4.0

task_categories:

- text2text-generation

language:

- ru

tags:

- homograph_resolution

- accentuation

pretty_name: Homograph Resulution Evaluation Dataset

size_categories:

- 1K<n<10K

---

# Homograph Resolution Evaluation Dataset

This dataset is designed to evaluate the performance of Text-to-Speech (TTS) systems and Language Models (LLMs) in resolving homographs in the Russian language. It contains carefully curated sentences, each featuring at least one homograph with the correct stress indicated. The dataset is particularly useful for assessing stress assignment tasks in TTS systems and LLMs.

## Key Features

- **Language**: Russian

- **Focus**: Homograph resolution and stress assignment

- **Unique Samples**: All sentences are original and highly unlikely to be present in existing training datasets.

- **Stress Annotation**: Correct stress marks are provided for homographs, enabling precise evaluation.

## Dataset Fields

- `context`: A sentence containing one or more homographs.

- `homograph`: The homograph with the correct stress mark.

- `accentuated_context`: The full sentence with correct stress marks applied.

**Note**: When evaluating, stress marks on words other than the homograph can be ignored.

## Limitations

1. **Single Stress Variant**: Each sample provides only one stress variant for a homograph, even if the homograph appears multiple times in the sentence (though such cases are rare).

2. **Limited Homograph Coverage**: The dataset includes a small subset of homographs in the Russian language and is not exhaustive.

## Intended Use

This dataset is ideal for:

- Evaluating TTS systems on homograph resolution and stress assignment.

- Benchmarking LLMs on their ability to handle ambiguous linguistic constructs.

- Research in computational linguistics, particularly in stress disambiguation and homograph resolution.

## Citing the Dataset

If you use this dataset in your research or projects, please cite it as follows:

```bibtex

@misc{HomographResolutionEval,

author = {Ilya Koziev},

title = {Homograph Resolution Evaluation Dataset},

year = {2025},

publisher = {Hugging Face},

howpublished = {\url{https://huggingface.co/datasets/inkoziev/HomographResolutionEval}}

}

|

RyanYr/simpleRLZero_matheval | RyanYr | "2025-03-01T04:21:24Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T04:21:23Z" | ---

dataset_info:

features:

- name: data_source

dtype: string

- name: problem

dtype: string

- name: solution

dtype: string

- name: answer

dtype: string

- name: prompt

list:

- name: content

dtype: string

- name: role

dtype: string

- name: reward_model

struct:

- name: ground_truth

dtype: string

- name: style

dtype: string

- name: responses

dtype: string

- name: gt_ans

dtype: string

- name: extracted_solution

dtype: string

- name: rm_scores

dtype: bool

splits:

- name: train

num_bytes: 6208004

num_examples: 1517

download_size: 2469747

dataset_size: 6208004

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

introvoyz041/Lilac | introvoyz041 | "2025-03-01T04:34:24Z" | 0 | 0 | [

"license:apache-2.0",

"region:us"

] | null | "2025-03-01T04:34:00Z" | ---

license: apache-2.0

---

|

RyanYr/RLHFlowOnlineDPOPPOZero_matheval | RyanYr | "2025-03-01T04:34:28Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T04:34:26Z" | ---

dataset_info:

features:

- name: data_source

dtype: string

- name: problem

dtype: string

- name: solution

dtype: string

- name: answer

dtype: string

- name: prompt

list:

- name: content

dtype: string

- name: role

dtype: string

- name: reward_model

struct:

- name: ground_truth

dtype: string

- name: style

dtype: string

- name: responses

dtype: string

- name: gt_ans

dtype: string

- name: extracted_solution

dtype: string

- name: rm_scores

dtype: bool

splits:

- name: train

num_bytes: 5914469

num_examples: 1517

download_size: 2481535

dataset_size: 5914469

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

introvoyz041/Llm4sd | introvoyz041 | "2025-03-01T04:41:48Z" | 0 | 0 | [

"license:apache-2.0",

"size_categories:10K<n<100K",

"format:text",

"modality:text",

"library:datasets",

"library:mlcroissant",

"region:us"

] | null | "2025-03-01T04:41:28Z" | ---

license: apache-2.0

---

|

RyanYr/Qwen2.5-7B-DPO-Zero_matheval | RyanYr | "2025-03-01T04:47:45Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T04:47:44Z" | ---

dataset_info:

features:

- name: data_source

dtype: string

- name: problem

dtype: string

- name: solution

dtype: string

- name: answer

dtype: string

- name: prompt

list:

- name: content

dtype: string

- name: role

dtype: string

- name: reward_model

struct:

- name: ground_truth

dtype: string

- name: style

dtype: string

- name: responses

dtype: string

- name: gt_ans

dtype: string

- name: extracted_solution

dtype: string

- name: rm_scores

dtype: bool

splits:

- name: train

num_bytes: 7259615

num_examples: 1517

download_size: 2543503

dataset_size: 7259615

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

meowterspace42/gretel-dd-glue-wnli | meowterspace42 | "2025-03-01T07:18:31Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T04:53:41Z" | ---

dataset_info:

features:

- name: seed_examples

dtype: string

- name: writing_style

dtype: string

- name: domain

dtype: string

- name: target_label

dtype: string

- name: glue_example

dtype: string

- name: eval_metrics

dtype: string

splits:

- name: train

num_bytes: 1743260

num_examples: 1798

download_size: 203981

dataset_size: 1743260

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/test_1 | Isylimanov099 | "2025-03-01T05:00:44Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:00:42Z" | ---

dataset_info:

features:

- name: Description

dtype: string

- name: Client

dtype: string

- name: Lawyer

dtype: string

splits:

- name: train

num_bytes: 88010

num_examples: 100

download_size: 36369

dataset_size: 88010

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/FAInAselmOon | Isylimanov099 | "2025-03-01T05:01:37Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:01:35Z" | ---

dataset_info:

features:

- name: Описание

dtype: float64

- name: Вопрос

dtype: string

- name: Ответ

dtype: string

splits:

- name: train

num_bytes: 3062

num_examples: 13

download_size: 4077

dataset_size: 3062

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/Test-books | Isylimanov099 | "2025-03-01T05:05:14Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:05:10Z" | ---

dataset_info:

features:

- name: Описание

dtype: string

- name: Вопрос

dtype: string

- name: Ответ

dtype: string

splits:

- name: train

num_bytes: 7866

num_examples: 29

download_size: 7873

dataset_size: 7866

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/kamila09 | Isylimanov099 | "2025-03-01T05:05:18Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:05:16Z" | ---

dataset_info:

features:

- name: Описание

dtype: string

- name: Вопросы

dtype: string

- name: Ответы

dtype: string

splits:

- name: train

num_bytes: 8603

num_examples: 50

download_size: 6236

dataset_size: 8603

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/Koala | Isylimanov099 | "2025-03-01T05:05:25Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:05:23Z" | ---

dataset_info:

features:

- name: Описание

dtype: string

- name: Вопрос

dtype: string

- name: Ответ

dtype: string

splits:

- name: train

num_bytes: 16480

num_examples: 43

download_size: 10197

dataset_size: 16480

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/Travel | Isylimanov099 | "2025-03-01T05:06:35Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:06:32Z" | ---

dataset_info:

features:

- name: 'Unnamed: 0'

dtype: float64

- name: 描述

dtype: string

- name: 问题

dtype: string

- name: 回答

dtype: string

splits:

- name: train

num_bytes: 9557

num_examples: 16

download_size: 9231

dataset_size: 9557

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/IT-Venera | Isylimanov099 | "2025-03-01T05:08:30Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:08:25Z" | ---

dataset_info:

features:

- name: 'Unnamed: 0'

dtype: string

- name: 'Unnamed: 1'

dtype: string

- name: 'Unnamed: 2'

dtype: string

- name: 'Unnamed: 3'

dtype: string

splits:

- name: train

num_bytes: 66500

num_examples: 75

download_size: 28823

dataset_size: 66500

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/a.bolotbekovvvna | Isylimanov099 | "2025-03-01T05:08:35Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:08:33Z" | ---

dataset_info:

features:

- name: ОПИСАНИЕ

dtype: string

- name: ВОПРОС

dtype: string

- name: ОТВЕТ

dtype: string

splits:

- name: train

num_bytes: 7046

num_examples: 20

download_size: 6608

dataset_size: 7046

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/asel | Isylimanov099 | "2025-03-01T05:12:05Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:12:02Z" | ---

dataset_info:

features:

- name: Описание

dtype: string

- name: Вопросы

dtype: string

- name: Ответ

dtype: string

splits:

- name: train

num_bytes: 1516

num_examples: 4

download_size: 3866

dataset_size: 1516

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Isylimanov099/JanybekovaAijamal | Isylimanov099 | "2025-03-01T05:20:32Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T05:20:30Z" | ---

dataset_info:

features:

- name: Описание

dtype: string

- name: Вопрос

dtype: string

- name: Ответ

dtype: float64

- name: 'Unnamed: 3'

dtype: float64

- name: Кв. 3

dtype: float64

- name: Кв. 4

dtype: float64

splits:

- name: train

num_bytes: 20168

num_examples: 277

download_size: 6889

dataset_size: 20168

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

jmhb/VidDiffBench | jmhb | "2025-03-01T08:23:54Z" | 0 | 1 | [

"size_categories:n<1K",

"format:parquet",

"modality:image",

"modality:text",

"modality:video",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"video"

] | null | "2025-03-01T06:08:38Z" | ---

tags:

- video

dataset_info:

features:

- name: sample_key

dtype: string

- name: vid0_thumbnail

dtype: image

- name: vid1_thumbnail

dtype: image

- name: videos

dtype: string

- name: action

dtype: string

- name: action_name

dtype: string

- name: action_description

dtype: string

- name: source_dataset

dtype: string

- name: sample_hash

dtype: int64

- name: retrieval_frames

dtype: string

- name: differences_annotated

dtype: string

- name: differences_gt

dtype: string

- name: domain

dtype: string

- name: split

dtype: string

- name: n_differences_open_prediction

dtype: int64

splits:

- name: test

num_bytes: 15219230.154398564

num_examples: 549

download_size: 6445835

dataset_size: 15219230.154398564

configs:

- config_name: default

data_files:

- split: test

path: data/test-*

---

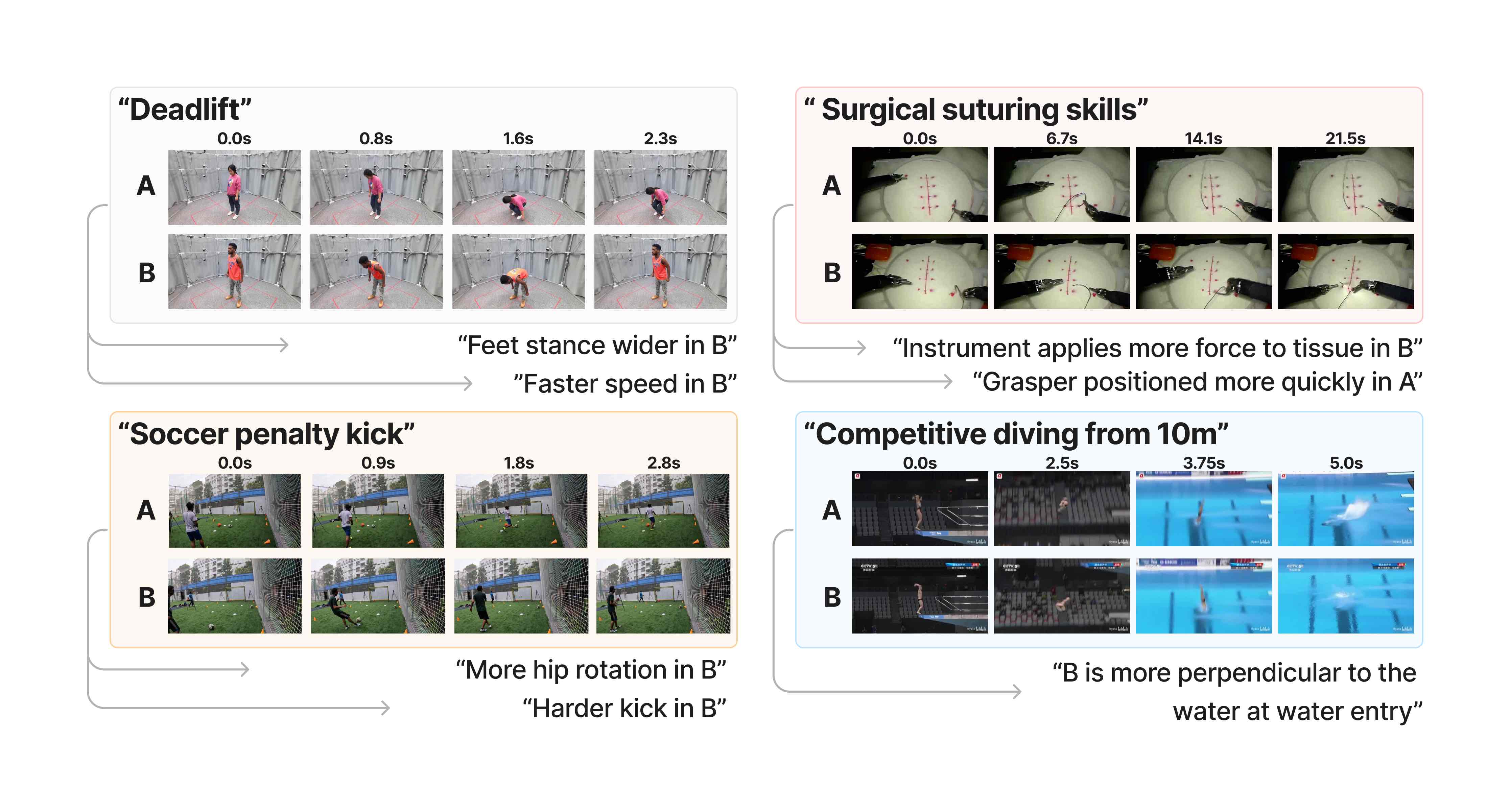

# Dataset card for "VidDiffBench"

This is the dataset / benchmark for [Video Action Differencing](https://openreview.net/forum?id=3bcN6xlO6f) (ICLR 2025), a new task that compares how an action is performed between two videos. This page introduces the task, the dataset structure, and how to access the data. See the paper for details on dataset construction. The code for running evaluation, and for benchmarking popular LMMs is at [https://jmhb0.github.io/viddiff](https://jmhb0.github.io/viddiff).

```

@inproceedings{burgessvideo,

title={Video Action Differencing},

author={Burgess, James and Wang, Xiaohan and Zhang, Yuhui and Rau, Anita and Lozano, Alejandro and Dunlap, Lisa and Darrell, Trevor and Yeung-Levy, Serena},

booktitle={The Thirteenth International Conference on Learning Representations}

}

```

# The Video Action Differencing task: closed and open evaluation

The Video Action Differencing task compares two videos of the same action. The goal is to identify differences in how the action is performed, where the differences are expressed in natural language.

In closed evaluation:

- Input: two videos of the same action, action description string, a list of candidate difference strings.

- Output: for each difference string, either 'a' if the statement applies more to video a, or 'b' if it applies more to video 'b'.

In open evaluation, the model must generate the difference strings:

- Input: two videos of the same action, action description string, a number 'n_differences'.

- Output: a list of difference strings (at most 'n_differences'). For each difference string, 'a' if the statement applies more to video a, or 'b' if it applies more to video 'b'.

<!--

Some more details on these evaluation modes. See the paper for more discussion:

- In closed eval, we only provide difference strings where the gt label is 'a' or 'b'; if the gt label is 'c' meaning "not different", it's skipped. This is because different annotators (or models) may have different calibration: a different judgement of "how different is different enough".

- In open evaluation, the model is allowed to predict at most `n_differences`, which we set to be 1.5x the number of differences we included in our annotation taxonomy. This is because there may be valid differences not in our annotation set, and models should not be penalized for that. But a limit is required to prevent cheating by enumerating too many possible differences.

The eval scripts are at [https://jmhb0.github.io/viddiff](https://jmhb0.github.io/viddiff).

-->

# Dataset structure

After following the 'getting the data' section: we have `dataset` as a HuggingFace dataset and `videos` as a list. For row `i`: video A is `videos[0][i]`, video B is `videos[1][i]`, and `dataset[i]` is the annotation for the difference between the videos.

The videos:

- `videos[0][i]['video']` and is a numpy array with shape `(nframes,H,W,3)`.

- `videos[0][i]['fps_original']` is an int, frames per second.

The annotations in `dataset`:

- `sample_key` a unique key.

- `videos` metadata about the videos A and B used by the dataloader: the video filename, and the start and end frames.

- `action` action key like "fitness_2"

- `action_name` a short action name, like "deadlift"

- `action_description` a longer action description, like "a single free weight deadlift without any weight"

- `source_dataset` the source dataset for the videos (but not annotation), e.g. 'humman' [here](https://caizhongang.com/projects/HuMMan/).

- `split` difficulty split, one of `{'easy', 'medium', 'hard'}`

- `n_differences_open_prediction` in open evaluation, the max number of difference strings the model is allowed to generate.

- `differences_annotated` a dict with the difference strings, e.g:

```

{

"0": {

"description": "the feet stance is wider",

"name": "feet stance wider",

"num_frames": "1",

},

"1": {

"description": "the speed of hip rotation is faster",

"name": "speed",

"num_frames": "gt_1",

},

"2" : null,

...

```

- and these keys are:

- the key is the 'key_difference'

- `description` is the 'difference string' (passed as input in closed eval, or the model must generate a semantically similar string in open eval).

- `num_frames` (not used) is '1' if an LMM could solve it from a single (well-chosen) frame, or 'gt_1' if more frames are needed.

- Some values might be `null`. This is because the Huggingface datasets enforces that all elements in a column have the same schema.

- `differences_gt` has the gt label, e.g. `{"0": "b", "1":"a", "2":null}`. For example, difference "the feet stance is wider" applies more to video B.

- `domain` activity domain. One of `{'fitness', 'ballsports', 'diving', 'surgery', 'music'}`.

# Getting the data

Getting the dataset requires a few steps. We distribute the annotations, but since we don't own the videos, you'll have to download them elsewhere.

**Get the annotations**

First, get the annotations from the hub like this:

```

from datasets import load_dataset

repo_name = "jmhb/VidDiffBench"

dataset = load_dataset(repo_name)

```

**Get the videos**

We get videos from prior works (which should be cited if you use the benchmark - see the end of this doc).

The source dataset is in the dataset column `source_dataset`.

First, download some `.py` files from this repo into your local `data/` file.

```

GIT_LFS_SKIP_SMUDGE=1 git clone git@hf.co:datasets/jmhb/VidDiffBench data/

```

A few datasets let us redistribute videos, so you can download them from this HF repo like this:

```

python data/download_data.py

```

If you ONLY need the 'easy' split, you can stop here. The videos includes the source datasets [Humann](https://caizhongang.com/projects/HuMMan/) (and 'easy' only draws from this data) and [JIGSAWS](https://cirl.lcsr.jhu.edu/research/hmm/datasets/jigsaws_release/).

For 'medium' and 'hard' splits, you'll need to download these other datasets from the EgoExo4D and FineDiving. Here's how to do that:

*Download EgoExo4d videos*

These are needed for 'medium' and 'hard' splits. First Request an access key from the [docs](https://docs.ego-exo4d-data.org/getting-started/) (it takes 48hrs). Then follow the instructions to install the CLI download tool `egoexo`. We only need a small number of these videos, so get the uids list from `data/egoexo4d_uids.json` and use `egoexo` to download:

```

uids=$(jq -r '.[]' data/egoexo4d_uids.json | tr '\n' ' ' | sed 's/ $//')

egoexo -o data/src_EgoExo4D --parts downscaled_takes/448 --uids $uids

```

Common issue: remember to put your access key into `~/.aws/credentials`.

*Download FineDiving videos*

These are needed for 'medium' split. Follow the instructions in [the repo](https://github.com/xujinglin/FineDiving) to request access (it takes at least a day), download the whole thing, and set up a link to it:

```

ln -s <path_to_fitnediving> data/src_FineDiving

```

**Making the final dataset with videos**

Install these packages:

```

pip install numpy Pillow datasets decord lmdb tqdm huggingface_hub

```

Now run:

```

from data.load_dataset import load_dataset, load_all_videos

dataset = load_dataset(splits=['easy'], subset_mode="0") # splits are one of {'easy','medium','hard'}

videos = load_all_videos(dataset, cache=True, cache_dir="cache/cache_data")

```

For row `i`: video A is `videos[0][i]`, video B is `videos[1][i]`, and `dataset[i]` is the annotation for the difference between the videos. For video A, the video itself is `videos[0][i]['video']` and is a numpy array with shape `(nframes,3,H,W)`; the fps is in `videos[0][i]['fps_original']`.

By passing the argument `cache=True` to `load_all_videos`, we create a cache directory at `cache/cache_data/`, and save copies of the videos using numpy memmap (total directory size for the whole dataset is 55Gb). Loading the videos and caching will take a few minutes per split (faster for the 'easy' split), and about 25mins for the whole dataset. But on subsequent runs, it should be fast - a few seconds for the whole dataset.

Finally, you can get just subsets, for example setting `subset_mode='3_per_action'` will take 3 video pairs per action, while `subset_mode="0"` gets them all.

# More dataset info

We have more dataset metadata in this dataset repo:

- Differences taxonomy `data/difference_taxonomy.csv`.

- Actions and descriptions `data/actions.csv`.

# License

The annotations and all other non-video metadata is realeased under an MIT license.

The videos retain the license of the original dataset creators, and the source dataset is given in dataset column `source_dataset`.

- EgoExo4D, license is online at [this link](https://ego4d-data.org/pdfs/Ego-Exo4D-Model-License.pdf)

- JIGSAWS release notes at [this link](https://cirl.lcsr.jhu.edu/research/hmm/datasets/jigsaws_release/ )

- Humman uses "S-Lab License 1.0" at [this link](https://caizhongang.com/projects/HuMMan/license.txt)

- FineDiving use [this MIT license](https://github.com/xujinglin/FineDiving/blob/main/LICENSE)

# Citation

Below is the citation for our paper, and the original source datasets:

```

@inproceedings{burgessvideo,

title={Video Action Differencing},

author={Burgess, James and Wang, Xiaohan and Zhang, Yuhui and Rau, Anita and Lozano, Alejandro and Dunlap, Lisa and Darrell, Trevor and Yeung-Levy, Serena},

booktitle={The Thirteenth International Conference on Learning Representations}

}

@inproceedings{cai2022humman,

title={{HuMMan}: Multi-modal 4d human dataset for versatile sensing and modeling},

author={Cai, Zhongang and Ren, Daxuan and Zeng, Ailing and Lin, Zhengyu and Yu, Tao and Wang, Wenjia and Fan,

Xiangyu and Gao, Yang and Yu, Yifan and Pan, Liang and Hong, Fangzhou and Zhang, Mingyuan and

Loy, Chen Change and Yang, Lei and Liu, Ziwei},

booktitle={17th European Conference on Computer Vision, Tel Aviv, Israel, October 23--27, 2022,

Proceedings, Part VII},

pages={557--577},

year={2022},

organization={Springer}

}

@inproceedings{parmar2022domain,

title={Domain Knowledge-Informed Self-supervised Representations for Workout Form Assessment},

author={Parmar, Paritosh and Gharat, Amol and Rhodin, Helge},

booktitle={Computer Vision--ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23--27, 2022, Proceedings, Part XXXVIII},

pages={105--123},

year={2022},

organization={Springer}

}

@inproceedings{grauman2024ego,

title={Ego-exo4d: Understanding skilled human activity from first-and third-person perspectives},

author={Grauman, Kristen and Westbury, Andrew and Torresani, Lorenzo and Kitani, Kris and Malik, Jitendra and Afouras, Triantafyllos and Ashutosh, Kumar and Baiyya, Vijay and Bansal, Siddhant and Boote, Bikram and others},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={19383--19400},

year={2024}

}

@inproceedings{gao2014jhu,

title={Jhu-isi gesture and skill assessment working set (jigsaws): A surgical activity dataset for human motion modeling},

author={Gao, Yixin and Vedula, S Swaroop and Reiley, Carol E and Ahmidi, Narges and Varadarajan, Balakrishnan and Lin, Henry C and Tao, Lingling and Zappella, Luca and B{\'e}jar, Benjam{\i}n and Yuh, David D and others},

booktitle={MICCAI workshop: M2cai},

volume={3},

number={2014},

pages={3},

year={2014}

}

```

|

mshojaei77/PDC | mshojaei77 | "2025-03-01T06:30:06Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:10:23Z" | ---

dataset_info:

features:

- name: text

dtype: string

- name: file_name

dtype: string

splits:

- name: train

num_bytes: 1624495382

num_examples: 13111

download_size: 549673162

dataset_size: 1624495382

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

simwit/medmoe-slake | simwit | "2025-03-01T07:53:30Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:27:40Z" | ---

dataset_info:

features:

- name: image

dtype: image

- name: question

dtype: string

- name: answer

dtype: string

- name: modality

dtype: string

- name: answer_type

dtype: string

splits:

- name: test_all

num_bytes: 109097147.213

num_examples: 1061

- name: test_open

num_bytes: 69131481.0

num_examples: 645

- name: test_closed

num_bytes: 37653859.0

num_examples: 416

download_size: 27747526

dataset_size: 215882487.213

configs:

- config_name: default

data_files:

- split: test_all

path: data/test_all-*

- split: test_open

path: data/test_open-*

- split: test_closed

path: data/test_closed-*

---

|

Hkang/summarize_sft-test_lm-EleutherAI_pythia-1b_seed-42_numex-250_20K-BON_alpha-0.7_temp-0.7_64 | Hkang | "2025-03-01T06:29:50Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:29:49Z" | ---

dataset_info:

features:

- name: id

dtype: string

- name: subreddit

dtype: string

- name: title

dtype: string

- name: post

dtype: string

- name: summary

dtype: string

- name: query_input_ids

sequence: int64

- name: query_attention_mask

sequence: int64

- name: query

dtype: string

- name: reference_response

dtype: string

- name: reference_response_input_ids

sequence: int64

- name: reference_response_attention_mask

sequence: int64

- name: reference_response_token_len

dtype: int64

- name: query_reference_response

dtype: string

- name: query_reference_response_input_ids

sequence: int64

- name: query_reference_response_attention_mask

sequence: int64

- name: query_reference_response_token_response_label

sequence: int64

- name: query_reference_response_token_len

dtype: int64

- name: model_response

dtype: string

splits:

- name: test

num_bytes: 6845542

num_examples: 250

download_size: 1156613

dataset_size: 6845542

configs:

- config_name: default

data_files:

- split: test

path: data/test-*

---

|

LunaCookie/RPG-datasets | LunaCookie | "2025-03-01T06:32:38Z" | 0 | 0 | [

"license:openrail",

"size_categories:n<1K",

"format:audiofolder",

"modality:audio",

"library:datasets",

"library:mlcroissant",

"region:us"

] | null | "2025-03-01T06:31:09Z" | ---

license: openrail

---

|

simwit/medmoe-path-vqa | simwit | "2025-03-01T07:50:59Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:31:44Z" | ---

dataset_info:

features:

- name: image

dtype: image

- name: question

dtype: string

- name: answer

dtype: string

- name: answer_type

dtype: string

splits:

- name: test_all

num_bytes: 487625910.222

num_examples: 6761

- name: test_open

num_bytes: 428189626.97

num_examples: 3370

- name: test_closed

num_bytes: 417624057.619

num_examples: 3391

download_size: 475692126

dataset_size: 1333439594.811

configs:

- config_name: default

data_files:

- split: test_all

path: data/test_all-*

- split: test_open

path: data/test_open-*

- split: test_closed

path: data/test_closed-*

---

|

isaiahbjork/showui-reasoning | isaiahbjork | "2025-03-01T06:32:30Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:32:01Z" | ---

dataset_info:

features:

- name: conversation

list:

- name: content

list:

- name: text

dtype: string

- name: type

dtype: string

- name: role

dtype: string

- name: image

dtype: binary

splits:

- name: train

num_bytes: 9372012432

num_examples: 20000

download_size: 714885901

dataset_size: 9372012432

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

Dhruveshsd/dvs | Dhruveshsd | "2025-03-01T06:33:45Z" | 0 | 0 | [

"license:bigscience-openrail-m",

"region:us"

] | null | "2025-03-01T06:33:45Z" | ---

license: bigscience-openrail-m

---

|

Yiheyihe/galaxea-r1-shelf-1ep-normalized | Yiheyihe | "2025-03-01T09:04:58Z" | 0 | 0 | [

"task_categories:robotics",

"license:apache-2.0",

"size_categories:n<1K",

"format:parquet",

"modality:tabular",

"modality:timeseries",

"modality:video",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us",

"LeRobot"

] | [

"robotics"

] | "2025-03-01T06:42:10Z" | ---

license: apache-2.0

task_categories:

- robotics

tags:

- LeRobot

configs:

- config_name: default

data_files: data/*/*.parquet

---

This dataset was created using [LeRobot](https://github.com/huggingface/lerobot).

## Dataset Description

- **Homepage:** [More Information Needed]

- **Paper:** [More Information Needed]

- **License:** apache-2.0

## Dataset Structure

[meta/info.json](meta/info.json):

```json

{

"codebase_version": "v2.0",

"robot_type": null,

"total_episodes": 1,

"total_frames": 508,

"total_tasks": 1,

"total_videos": 3,

"total_chunks": 1,

"chunks_size": 1000,

"fps": 30,

"splits": {

"train": "0:1"

},

"data_path": "data/chunk-{episode_chunk:03d}/episode_{episode_index:06d}.parquet",

"video_path": "videos/chunk-{episode_chunk:03d}/{video_key}/episode_{episode_index:06d}.mp4",

"features": {

"timestamp": {

"dtype": "float32",

"shape": [

1

],

"names": null

},

"observation.state": {

"dtype": "float32",

"shape": [

21

]

},

"action": {

"dtype": "float32",

"shape": [

21

]

},

"observation.images.head": {

"dtype": "video",

"shape": [

3,

94,

168

],

"names": [

"channels",

"height",

"width"

],

"info": {

"video.fps": 30.0,

"video.height": 94,

"video.width": 168,

"video.channels": 3,

"video.codec": "h264",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"has_audio": false

}

},

"observation.images.left_wrist": {

"dtype": "video",

"shape": [

3,

94,

168

],

"names": [

"channels",

"height",

"width"

],

"info": {

"video.fps": 30.0,

"video.height": 94,

"video.width": 168,

"video.channels": 3,

"video.codec": "h264",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"has_audio": false

}

},

"observation.images.right_wrist": {

"dtype": "video",

"shape": [

3,

94,

168

],

"names": [

"channels",

"height",

"width"

],

"info": {

"video.fps": 30.0,

"video.height": 94,

"video.width": 168,

"video.channels": 3,

"video.codec": "h264",

"video.pix_fmt": "yuv420p",

"video.is_depth_map": false,

"has_audio": false

}

},

"frame_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"episode_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"index": {

"dtype": "int64",

"shape": [

1

],

"names": null

},

"task_index": {

"dtype": "int64",

"shape": [

1

],

"names": null

}

}

}

```

## Citation

**BibTeX:**

```bibtex

[More Information Needed]

``` |

gajanhcc/fashion-detail-query-10images | gajanhcc | "2025-03-01T06:50:24Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:50:22Z" | ---

dataset_info:

features:

- name: image

dtype: image

- name: item_ID

dtype: string

- name: query

dtype: string

- name: title

dtype: string

- name: position

dtype: int64

- name: specific_detail_query

dtype: string

splits:

- name: train

num_bytes: 96642.7

num_examples: 7

- name: test

num_bytes: 41418.3

num_examples: 3

download_size: 144469

dataset_size: 138061.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

jinchenliuljc/FinSumCOT | jinchenliuljc | "2025-03-01T06:58:05Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:51:50Z" | ---

dataset_info:

features:

- name: 'Unnamed: 0'

dtype: int64

- name: text

dtype: string

- name: summary

dtype: string

- name: deepseek_summary

dtype: string

- name: deepseek_reasoning

dtype: string

splits:

- name: train

num_bytes: 86154

num_examples: 5

download_size: 66081

dataset_size: 86154

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

gajanhcc/fashion-detail-query-annotated-10images | gajanhcc | "2025-03-01T07:06:29Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:52:49Z" | ---

dataset_info:

features:

- name: image

dtype: image

- name: item_ID

dtype: string

- name: query

dtype: string

- name: title

dtype: string

- name: position

dtype: int64

- name: original_image

dtype: image

- name: specific_detail_query

dtype: string

splits:

- name: train

num_bytes: 117483829.6

num_examples: 800

- name: test

num_bytes: 29370957.4

num_examples: 200

download_size: 146828393

dataset_size: 146854787.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

svjack/Genshin_Impact_Yae_Miko_MMD_Video_Dataset | svjack | "2025-03-01T11:55:36Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:video",

"library:datasets",

"library:dask",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T06:58:10Z" | ---

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

dataset_info:

features:

- name: video

dtype: video

splits:

- name: train

num_bytes: 1447150184.556

num_examples: 1061

download_size: 159078

dataset_size: 1447150184.556

---

<video controls autoplay src="https://cdn-uploads.huggingface.co/production/uploads/634dffc49b777beec3bc6448/8aCjIslNTHwNqEENpgpg6.mp4"></video>

Reorganized version of [`Wild-Heart/Disney-VideoGeneration-Dataset`](https://huggingface.co/datasets/Wild-Heart/Disney-VideoGeneration-Dataset). This is needed for [Mochi-1 fine-tuning](https://github.com/genmoai/mochi/tree/aba74c1b5e0755b1fa3343d9e4bd22e89de77ab1/demos/fine_tuner). |

gymprathap/Driver-Distracted-Dataset | gymprathap | "2025-03-01T08:29:19Z" | 0 | 0 | [

"language:en",

"license:cc",

"size_categories:100K<n<1M",

"modality:image",

"region:us",

"art"

] | null | "2025-03-01T07:04:54Z" | ---

license: cc

language:

- en

tags:

- art

size_categories:

- 100K<n<1M

--- |

gajanhcc/fashion-detail-query-annotated-1000images | gajanhcc | "2025-03-01T07:09:51Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:image",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T07:09:43Z" | ---

dataset_info:

features:

- name: image

dtype: image

- name: item_ID

dtype: string

- name: query

dtype: string

- name: title

dtype: string

- name: position

dtype: int64

- name: original_image

dtype: image

- name: specific_detail_query

dtype: string

splits:

- name: train

num_bytes: 117483829.6

num_examples: 800

- name: test

num_bytes: 29370957.4

num_examples: 200

download_size: 146828393

dataset_size: 146854787.0

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

- split: test

path: data/test-*

---

|

khcho1954/ragas-test-dataset | khcho1954 | "2025-03-01T07:11:43Z" | 0 | 0 | [

"size_categories:n<1K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T07:09:50Z" | ---

dataset_info:

features:

- name: contexts

dtype: string

- name: evolution_type

dtype: string

- name: metadata

dtype: string

- name: episode_done

dtype: bool

- name: question

dtype: string

- name: ground_truth

dtype: string

splits:

- name: korean_v1

num_bytes: 30243

num_examples: 10

download_size: 0

dataset_size: 30243

configs:

- config_name: default

data_files:

- split: korean_v1

path: data/korean_v1-*

---

# Dataset Card for "ragas-test-dataset"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) |

Arjuna17/lepala-ai-swahili-hausa-dataset | Arjuna17 | "2025-03-01T07:11:27Z" | 0 | 0 | [

"license:apache-2.0",

"region:us"

] | null | "2025-03-01T07:11:27Z" | ---

license: apache-2.0

---

|

Yuanxin-Liu/test_yx_noanswer-math_gsm-gemma-1.1-7b-it-iter_sample_7500_temp_1.0_gen_10_mlr5e-5 | Yuanxin-Liu | "2025-03-01T07:19:22Z" | 0 | 0 | [

"size_categories:1K<n<10K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T07:19:20Z" | ---

dataset_info:

features:

- name: question

dtype: string

- name: answer

dtype: string

- name: rational_answer

dtype: string

splits:

- name: train

num_bytes: 5409009

num_examples: 5802

download_size: 2877131

dataset_size: 5409009

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

---

|

mangopy/ToolRet-before-sample | mangopy | "2025-03-01T07:39:53Z" | 0 | 0 | [

"size_categories:10K<n<100K",

"format:parquet",

"modality:text",

"library:datasets",

"library:pandas",

"library:mlcroissant",

"library:polars",

"region:us"

] | null | "2025-03-01T07:25:37Z" | ---

dataset_info:

- config_name: apibank

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 189985

num_examples: 101

download_size: 45023

dataset_size: 189985

- config_name: apigen

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 991447

num_examples: 1000

download_size: 352171

dataset_size: 991447

- config_name: appbench

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 2302790

num_examples: 801

download_size: 167561

dataset_size: 2302790

- config_name: autotools-food

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 783804

num_examples: 41

download_size: 138180

dataset_size: 783804

- config_name: autotools-music

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 8965195

num_examples: 50

download_size: 1884066

dataset_size: 8965195

- config_name: autotools-weather

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 2171417

num_examples: 50

download_size: 369274

dataset_size: 2171417

- config_name: craft-math-algebra

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 328289

num_examples: 280

download_size: 119720

dataset_size: 328289

- config_name: craft-tabmwp

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 293350

num_examples: 174

download_size: 93220

dataset_size: 293350

- config_name: craft-vqa

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 270344

num_examples: 200

download_size: 85397

dataset_size: 270344

- config_name: gorilla-huggingface

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 831939

num_examples: 500

download_size: 290310

dataset_size: 831939

- config_name: gorilla-pytorch

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 295446

num_examples: 186

download_size: 43369

dataset_size: 295446

- config_name: gorilla-tensor

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 837843

num_examples: 688

download_size: 62197

dataset_size: 837843

- config_name: gpt4tools

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 1607837

num_examples: 1727

download_size: 305122

dataset_size: 1607837

- config_name: gta

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 19979

num_examples: 14

download_size: 18173

dataset_size: 19979

- config_name: metatool

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 2122091

num_examples: 5327

download_size: 516864

dataset_size: 2122091

- config_name: mnms

features:

- name: id

dtype: string

- name: query

dtype: string

- name: instruction

dtype: string

- name: labels

dtype: string

- name: category

dtype: string

splits:

- name: queries

num_bytes: 4932111