---

draft: false

title: "Qdrant and OVHcloud Bring Vector Search to All Enterprises"

short_description: "Collaborating to support startups and enterprises in Europe with a strong focus on data control and privacy."

description: "Collaborating to support startups and enterprises in Europe with a strong focus on data control and privacy."

preview_image: /blog/hybrid-cloud-ovhcloud/hybrid-cloud-ovhcloud.png

date: 2024-04-10T00:05:00Z

author: Qdrant

featured: false

weight: 1004

tags:

- Qdrant

- Vector Database

---

With the official release of [Qdrant Hybrid Cloud](/hybrid-cloud/), businesses running their data infrastructure on [OVHcloud](https://ovhcloud.com/) can now deploy a fully managed vector database in their existing OVHcloud environment. We are excited about this partnership, established through the [OVHcloud Open Trusted Cloud](https://opentrustedcloud.ovhcloud.com/en/) program, because it rests on a shared understanding of the importance of trust, control, and data privacy as enterprise-grade AI applications emerge. As part of this collaboration, we are also providing a detailed tutorial on building a recommendation system that demonstrates the benefits of running Qdrant Hybrid Cloud on OVHcloud.

Deploying Qdrant Hybrid Cloud on OVHcloud's infrastructure is a significant leap for European businesses invested in AI-driven projects: the collaboration is built to meet the rigorous data privacy and control requirements of European startups and enterprises building AI solutions. As businesses progress on their AI journey, they need dedicated solutions that make their data accessible to machine learning and AI projects without it leaving the company's security perimeter. Prioritizing data sovereignty, a crucial aspect in today's digital landscape, helps startups and enterprises accelerate their AI agendas and build more differentiated AI-enabled applications. Running Qdrant Hybrid Cloud on OVHcloud reflects a commitment to innovative, secure AI solutions and gives companies the flexibility and security they need to handle complex AI and machine learning workloads.

> *“The partnership between OVHcloud and Qdrant Hybrid Cloud highlights, in the European AI landscape, a strong commitment to innovative and secure AI solutions, empowering startups and organisations to navigate AI complexities confidently. By emphasizing data sovereignty and security, we enable businesses to leverage vector databases securely.”* Yaniv Fdida, Chief Product and Technology Officer, OVHcloud

#### Qdrant & OVHcloud: High Performance Vector Search With Full Data Control

Through the seamless integration between Qdrant Hybrid Cloud and OVHcloud, developers and businesses are able to deploy the fully managed vector database within their existing OVHcloud setups in minutes, enabling faster, more accurate AI-driven insights.

- **Simple setup:** With the seamless “one-click” installation, developers are able to deploy Qdrant’s fully managed vector database to their existing OVHcloud environment.

- **Trust and data sovereignty**: Deploying Qdrant Hybrid Cloud on OVHcloud gives developers vector search that prioritizes data sovereignty, a crucial aspect in today's AI landscape where data privacy and control are essential. True to its “Sovereign by design” DNA, OVHcloud guarantees that all stored data is immune to extraterritorial laws and complies with the highest security standards.

- **Open standards and open ecosystem**: OVHcloud’s commitment to open standards and an open ecosystem not only facilitates the easy integration of Qdrant Hybrid Cloud with OVHcloud’s AI services and GPU-powered instances but also ensures compatibility with a wide range of external services and applications, enabling seamless data workflows across the modern AI stack.

- **Cost-efficient vector search:** By combining Qdrant's quantization, which reduces the memory footprint of stored vectors, with OVHcloud's eco-friendly, water-cooled infrastructure, known for its superior price/performance ratio, this collaboration provides a strong foundation for cost-efficient vector search.
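To make the quantization point concrete, here is a minimal sketch of the general idea behind scalar quantization. It is not Qdrant's implementation: each float is mapped to an integer from 0 to 255, so it fits in one byte instead of the four bytes of a float32, at the cost of a small rounding error. Taking the min/max bounds from a single vector is a simplifying assumption; a real engine would derive bounds from the whole collection.

```python
from typing import List, Tuple


def quantize(vector: List[float]) -> Tuple[List[int], float, float]:
    """Map each float to an integer in [0, 255] using min-max scaling.

    Simplifying assumption: bounds come from this single vector,
    whereas a real engine would derive them from the whole collection.
    """
    low, high = min(vector), max(vector)
    scale = (high - low) / 255 or 1.0  # guard against constant vectors
    codes = [round((x - low) / scale) for x in vector]
    return codes, low, scale


def dequantize(codes: List[int], low: float, scale: float) -> List[float]:
    """Approximately reconstruct the original floats from their byte codes."""
    return [low + c * scale for c in codes]


vector = [0.12, -0.5, 0.98, 0.3]
codes, low, scale = quantize(vector)
packed = bytes(codes)  # raises ValueError if any code fell outside 0..255
restored = dequantize(codes, low, scale)

print(len(packed), "bytes instead of", 4 * len(vector))  # float32 uses 4 bytes each
print(max(abs(a - b) for a, b in zip(vector, restored)))  # small rounding error
```

The reconstruction error is bounded by half a quantization step (`scale / 2`), which is why quantized search is usually paired with rescoring on the original vectors when precision matters.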

#### Build a Recommendation System with Qdrant Hybrid Cloud and OVHcloud

To show how Qdrant Hybrid Cloud on OVHcloud lets developers run an AI use case entirely within their existing infrastructure, we put together a comprehensive tutorial. It guides you through creating a recommendation system using collaborative filtering and sparse vectors with Qdrant Hybrid Cloud on OVHcloud. Based on the MovieLens dataset, it offers practical insights into building efficient, scalable recommendation engines for developers and data scientists who want to use advanced vector search within a secure, GDPR-compliant European cloud infrastructure.

[Try the Tutorial](/documentation/tutorials/recommendation-system-ovhcloud/)
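To give a feel for collaborative filtering with sparse vectors, here is a minimal plain-Python sketch; it is not the tutorial's code. Each user is assumed to be a sparse vector mapping movie IDs to already-normalized ratings (positive for liked, negative for disliked). Similar users are found with a sparse dot product, and their ratings for movies the target user has not seen are aggregated. In a Qdrant deployment, the similarity search step would be handled by the database over stored sparse vectors; the toy data and function names here are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

SparseVector = Dict[int, float]  # movie_id -> normalized rating


def dot(a: SparseVector, b: SparseVector) -> float:
    """Sparse dot product: only movies rated by both users contribute."""
    if len(a) > len(b):
        a, b = b, a  # iterate over the smaller vector
    return sum(v * b[i] for i, v in a.items() if i in b)


def recommend(target: SparseVector, users: Dict[str, SparseVector],
              k_users: int = 2, n_items: int = 3) -> List[int]:
    """Recommend unseen movies liked by the users most similar to `target`."""
    # 1. Find the k most similar users.
    neighbours = sorted(users.items(), key=lambda kv: dot(target, kv[1]),
                        reverse=True)[:k_users]
    # 2. Score movies the target has not rated, weighted by user similarity.
    scores: Dict[int, float] = defaultdict(float)
    for _, ratings in neighbours:
        sim = dot(target, ratings)
        for movie, rating in ratings.items():
            if movie not in target:
                scores[movie] += sim * rating
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [m for m in ranked if scores[m] > 0][:n_items]


target = {1: 1.0, 2: 1.0, 3: -1.0}  # likes movies 1 and 2, dislikes 3
users = {
    "alice": {1: 1.0, 2: 1.0, 4: 1.0, 5: -1.0},
    "bob": {1: 1.0, 3: -1.0, 6: 0.5},
    "carol": {2: -1.0, 3: 1.0, 7: 1.0},
}
print(recommend(target, users))
```

Sparse vectors fit this problem because each user rates only a tiny fraction of all movies, so storing and comparing only the non-zero entries is far cheaper than a dense vector with one dimension per movie.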

#### Get Started Today and Leverage the Benefits of Qdrant Hybrid Cloud

Setting up Qdrant Hybrid Cloud on OVHcloud is straightforward and quick, thanks to the intuitive integration with Kubernetes. Here's how:

- **Hybrid Cloud Activation**: Log in to your Qdrant account and enable 'Hybrid Cloud'.

- **Cluster Integration**: Add your OVHcloud Kubernetes clusters as a Hybrid Cloud Environment in the Hybrid Cloud settings.

- **Effortless Deployment**: Use the Qdrant Management Console for easy deployment and management of Qdrant clusters on OVHcloud.

[Read Hybrid Cloud Documentation](/documentation/hybrid-cloud/)

#### Ready to Get Started?

Create a [Qdrant Cloud account](https://cloud.qdrant.io/login) and deploy your first **Qdrant Hybrid Cloud** cluster in a few minutes. You can always learn more in the [official release blog](/blog/hybrid-cloud/).

---

draft: false

title: "Red Hat OpenShift and Qdrant Hybrid Cloud Offer Seamless and Scalable AI"

short_description: "Qdrant brings managed vector databases to Red Hat OpenShift for large-scale GenAI."

description: "Qdrant brings managed vector databases to Red Hat OpenShift for large-scale GenAI."

preview_image: /blog/hybrid-cloud-red-hat-openshift/hybrid-cloud-red-hat-openshift.png

date: 2024-04-11T00:04:00Z

author: Qdrant

featured: false

weight: 1003

tags:

- Qdrant

- Vector Database

---

We’re excited about our collaboration with Red Hat to bring the Qdrant vector database to [Red Hat OpenShift](https://www.redhat.com/en/technologies/cloud-computing/openshift) customers! With the release of [Qdrant Hybrid Cloud](/hybrid-cloud/), developers can now deploy and run the Qdrant vector database directly in their Red Hat OpenShift environment. This collaboration enables developers to scale more seamlessly, operate more consistently across hybrid cloud environments, and maintain complete control over their vector data. It is a big step forward in simplifying AI infrastructure and empowering data-driven projects, such as retrieval-augmented generation (RAG), advanced search scenarios, and recommendation systems.

In the rapidly evolving field of Artificial Intelligence and Machine Learning, being able to manage the modern AI stack within existing infrastructure is becoming increasingly important for businesses. As enterprises launch new AI applications and use cases into production, they need to maintain complete control over their data, since these new apps often work with sensitive internal and customer data that must remain within infrastructure they own. This is why enterprises are increasingly looking for maximum deployment flexibility for their AI workloads.

>*“Red Hat is committed to driving transparency, flexibility and choice for organizations to more easily unlock the power of AI. By working with partners like Qdrant to enable streamlined integration experiences on Red Hat OpenShift for AI use cases, organizations can more effectively harness critical data and deliver real business outcomes,”* said Steven Huels, Vice President and General Manager, AI Business Unit, Red Hat.

#### The Synergy of Qdrant Hybrid Cloud and Red Hat OpenShift

Qdrant Hybrid Cloud is the first vector database that can be deployed anywhere with complete database isolation while still providing fully managed cluster management. Running Qdrant Hybrid Cloud on Red Hat OpenShift allows enterprises to deploy and run a fully managed vector database in their own environment, so they can run managed vector search on their existing cloud and infrastructure with full data sovereignty.

Red Hat OpenShift, the industry’s leading hybrid cloud application platform powered by Kubernetes, helps streamline the deployment of Qdrant Hybrid Cloud within an enterprise's secure premises. Red Hat OpenShift provides features like auto-scaling, load balancing, and advanced security controls that can help you manage and maintain your vector database deployments more effectively. In addition, Red Hat OpenShift supports deployment across multiple environments, including on-premises, public, private and hybrid cloud landscapes. This flexibility, coupled with Qdrant Hybrid Cloud, allows organizations to choose the deployment model that best suits their needs.

#### Why Run Qdrant Hybrid Cloud on Red Hat OpenShift?

- **Scalability**: Red Hat OpenShift's container orchestration effortlessly scales Qdrant Hybrid Cloud components, accommodating fluctuating workload demands with ease.

- **Portability**: The consistency across hybrid cloud environments provided by Red Hat OpenShift allows for smoother operation of Qdrant Hybrid Cloud across various infrastructures.

- **Automation**: Deployment, scaling, and management tasks are automated, reducing operational overhead and simplifying the management of Qdrant Hybrid Cloud.

- **Security**: Red Hat OpenShift provides built-in security features, including container isolation, network policies, and role-based access control (RBAC), enhancing the security posture of Qdrant Hybrid Cloud deployments.

- **Flexibility:** Red Hat OpenShift supports a wide range of programming languages, frameworks, and tools, providing flexibility in developing and deploying Qdrant Hybrid Cloud applications.

- **Integration:** Red Hat OpenShift can be integrated with various Red Hat and third-party tools, facilitating seamless integration of Qdrant Hybrid Cloud with other enterprise systems and services.

#### Get Started with Qdrant Hybrid Cloud on Red Hat OpenShift

We're thrilled about our collaboration with Red Hat to help simplify AI infrastructure for developers and enterprises alike. By deploying Qdrant Hybrid Cloud on Red Hat OpenShift, developers can gain the ability to more easily scale and maintain greater operational consistency across hybrid cloud environments.

To get started, we created a comprehensive tutorial that shows how to build next-gen AI applications with Qdrant Hybrid Cloud on Red Hat OpenShift. Additionally, you can find more details on the seamless deployment process in our documentation:

#### Tutorial: Private Chatbot for Interactive Learning

In this tutorial, you will build a chatbot without public internet access, keeping sensitive data secure and isolated. Your RAG system will be built with Qdrant Hybrid Cloud on Red Hat OpenShift, using Haystack for generative AI capabilities. In particular, the tutorial shows how this setup ensures that not a single data point leaves the environment.

[Try the Tutorial](/documentation/tutorials/rag-chatbot-red-hat-openshift-haystack/)

#### Documentation: Deploy Qdrant in a Few Clicks

> Our simple Kubernetes-native design allows you to deploy Qdrant Hybrid Cloud on your Red Hat OpenShift instance in just a few steps. Learn how in our documentation.

[Read Hybrid Cloud Documentation](/documentation/hybrid-cloud/)

This collaboration marks an important milestone in the quest for simplified AI infrastructure, offering a robust, scalable, and security-optimized solution for managing vector databases in a hybrid cloud environment. The combination of Qdrant's performance and Red Hat OpenShift's operational excellence opens new avenues for enterprises looking to leverage the power of AI and ML.

#### Ready to Get Started?

Create a [Qdrant Cloud account](https://cloud.qdrant.io/login) and deploy your first **Qdrant Hybrid Cloud** cluster in a few minutes. You can always learn more in the [official release blog](/blog/hybrid-cloud/).


---

draft: false

title: "Qdrant Hybrid Cloud and Scaleway Empower GenAI"

short_description: "Supporting innovation in AI with the launch of a revolutionary managed database for startups and enterprises."

description: "Supporting innovation in AI with the launch of a revolutionary managed database for startups and enterprises."

preview_image: /blog/hybrid-cloud-scaleway/hybrid-cloud-scaleway.png

date: 2024-04-10T00:06:00Z

author: Qdrant

featured: false

weight: 1002

tags:

- Qdrant

- Vector Database

---

In a move to empower the next wave of AI innovation, Qdrant and [Scaleway](https://www.scaleway.com/en/) are collaborating to introduce [Qdrant Hybrid Cloud](/hybrid-cloud/), a fully managed vector database that can be deployed on existing Scaleway environments. This collaboration is set to democratize access to advanced AI capabilities, enabling developers to easily deploy and scale vector search technologies within Scaleway's robust and developer-friendly cloud infrastructure. By focusing on the unique needs of startups and the developer community, Qdrant and Scaleway are providing intuitive, easy-to-use tools that make cutting-edge AI more accessible than ever before.

Building on this vision, the integration between Scaleway and Qdrant Hybrid Cloud combines the strengths of Qdrant, with its leading open-source vector database, and Scaleway, known for its innovative and scalable cloud solutions. Startups and developers can now harness vector search, which is essential for AI applications like recommendation systems, image recognition, and natural language processing, within their existing environment and without the complexity of maintaining such advanced setups themselves.

> *"With our partnership with Qdrant, Scaleway reinforces its status as Europe's leading cloud provider for AI innovation. The integration of Qdrant's fast and accurate vector database enriches our expanding suite of AI solutions. This means you can build smarter, faster AI projects with us, worry-free about performance and security."* Frédéric BARDOLLE, Lead PM AI @ Scaleway

#### Developing a Retrieval Augmented Generation (RAG) Application with Qdrant Hybrid Cloud, Scaleway, and LangChain

Retrieval Augmented Generation (RAG) enhances Large Language Models (LLMs) by integrating vector search to provide precise, context-rich responses. This combination allows LLMs to access and incorporate specific data in real time, substantially improving the quality of AI-generated content.
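The retrieval step of RAG can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the tutorial's implementation: the three-dimensional "embeddings" are hand-made toy values standing in for the output of an embedding model, and the in-memory list stands in for a Qdrant collection. The retrieved passages are then placed into the prompt that would be sent to the LLM.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical toy corpus: (text, embedding). Real embeddings would come from
# an embedding model and be stored in a Qdrant collection.
DOCS: List[Tuple[str, List[float]]] = [
    ("Invoices are archived for ten years.", [0.9, 0.1, 0.0]),
    ("The cafeteria opens at 8 am.", [0.0, 0.2, 0.9]),
    ("Invoices above 10,000 EUR need two approvals.", [0.8, 0.3, 0.1]),
]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: Sequence[float], docs, k: int = 2) -> List[str]:
    """Return the texts of the k documents most similar to the query."""
    ranked = sorted(docs, key=lambda d: cosine(query_vec, d[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context: List[str]) -> str:
    """Inject the retrieved passages into the prompt as grounding context."""
    lines = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{lines}\n\nQuestion: {question}"


query_vec = [1.0, 0.2, 0.0]  # toy embedding of the question below
context = retrieve(query_vec, DOCS)
print(build_prompt("How are invoices handled?", context))
```

Only the two invoice passages reach the prompt; the unrelated cafeteria passage is filtered out by similarity, which is what keeps the LLM's answer grounded in relevant data.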

RAG applications often rely on sensitive or proprietary internal data, emphasizing the importance of data sovereignty. Running the entire stack within your own environment becomes crucial for maintaining control over this data. Qdrant Hybrid Cloud deployed on Scaleway addresses this need perfectly, offering a secure, scalable platform that respects data sovereignty requirements while leveraging the full potential of RAG for sophisticated AI solutions.

We created a tutorial that guides you through setting up and leveraging Qdrant Hybrid Cloud on Scaleway for a RAG application, providing insights into efficiently managing data within a secure, sovereign framework. It highlights practical steps to integrate vector search with LLMs, optimizing the generation of high-quality, relevant AI content, while ensuring data sovereignty is maintained throughout.

[Try the Tutorial](/documentation/tutorials/rag-chatbot-scaleway/)

#### The Benefits of Running Qdrant Hybrid Cloud on Scaleway

Choosing Qdrant Hybrid Cloud and Scaleway for AI applications offers several key advantages:

- **AI-Focused Resources:** Scaleway aims to be the cloud provider of choice for AI companies, offering the resources and infrastructure to power complex AI and machine learning workloads and helping to advance the development and deployment of AI technologies. Paired with Qdrant Hybrid Cloud, this provides a strong foundational platform for advanced AI applications.

- **Scalable Vector Search:** Qdrant Hybrid Cloud provides a fully managed vector database that you can scale effortlessly, vertically or horizontally. Deployed on Scaleway, this robust setup is designed to meet the needs of businesses at every stage of growth, from startups to large enterprises, across the full spectrum of projects and workloads.

- **European Roots and Focus**: With a strong presence in Europe and a commitment to supporting the European tech ecosystem, Scaleway is ideally positioned to partner with European-based companies like Qdrant, providing local expertise and infrastructure that aligns with European regulatory standards.

- **Sustainability Commitment**: Scaleway takes an eco-conscious approach, with adiabatic data centers that significantly reduce cooling costs and environmental impact. Scaleway also extends hardware lifecycles beyond industry norms to lessen its ecological footprint.

#### Get Started in a Few Seconds

Setting up Qdrant Hybrid Cloud on Scaleway is streamlined and quick, thanks to its Kubernetes-native architecture. Follow these three simple steps to launch:

1. **Activate Hybrid Cloud**: First, log into your [Qdrant Cloud account](https://cloud.qdrant.io/login) and select ‘Hybrid Cloud’ to activate.

2. **Integrate Your Clusters**: Navigate to the Hybrid Cloud settings and add your Scaleway Kubernetes clusters as a Hybrid Cloud Environment.

3. **Simplified Management**: Use the Qdrant Management Console for easy creation and oversight of your Qdrant clusters on Scaleway.

For more comprehensive guidance, our documentation provides step-by-step instructions for deploying Qdrant on Scaleway.

[Read Hybrid Cloud Documentation](/documentation/hybrid-cloud/)

#### Ready to Get Started?

Create a [Qdrant Cloud account](https://cloud.qdrant.io/login) and deploy your first **Qdrant Hybrid Cloud** cluster in a few minutes. You can always learn more in the [official release blog](/blog/hybrid-cloud/).

---

draft: false

title: "STACKIT and Qdrant Hybrid Cloud for Best Data Privacy"

short_description: "Empowering German AI development with a data privacy-first platform."

description: "Empowering German AI development with a data privacy-first platform."

preview_image: /blog/hybrid-cloud-stackit/hybrid-cloud-stackit.png

date: 2024-04-10T00:07:00Z

author: Qdrant

featured: false

weight: 1001

tags:

- Qdrant

- Vector Database

---

Qdrant and [STACKIT](https://www.stackit.de/en/) are thrilled to announce that developers are now able to deploy a fully managed vector database to their STACKIT environment with the introduction of [Qdrant Hybrid Cloud](/hybrid-cloud/). This is a great step forward for the German AI ecosystem, as it enables developers and businesses to build cutting-edge AI applications that run in German data centers with full control over their data.

Vector databases are an essential component of the modern AI stack. They enable rapid and accurate retrieval of high-dimensional data, which is crucial for powering search, recommendation systems, and augmenting machine learning models. In the rising field of GenAI, vector databases power retrieval-augmented generation (RAG) scenarios, enhancing the output of large language models (LLMs) by injecting relevant contextual information. However, this contextual information is often rooted in confidential internal or customer-related information, which is why enterprises are looking for solutions that make this data available to their AI applications without compromising data privacy, losing data control, or letting data leave the company's secure environment.

Qdrant Hybrid Cloud is the first managed vector database that can be deployed in an existing STACKIT environment. Its Kubernetes-native setup allows businesses to operate a fully managed vector database while keeping control over their data through complete data isolation. Qdrant Hybrid Cloud's managed service integrates seamlessly into STACKIT's cloud environment, allowing businesses to deploy fully managed vector search workloads backed by the stringent data protection standards of Germany's data centers and in full compliance with GDPR. This setup not only keeps data under the business's control but also paves the way for secure, AI-driven application development.

#### Key Features and Benefits of Qdrant on STACKIT:

- **Seamless Integration and Deployment**: With Qdrant’s Kubernetes-native design, businesses can effortlessly connect their STACKIT cloud as a Hybrid Cloud Environment, enabling a one-step, scalable Qdrant deployment.

- **Enhanced Data Privacy**: Leveraging STACKIT's German data centers ensures that all data processing complies with GDPR and other relevant European data protection standards, providing businesses with unparalleled control over their data.

- **Scalable and Managed AI Solutions**: Deploying Qdrant on STACKIT provides a fully managed vector search engine with the ability to scale vertically and horizontally, with robust support for zero-downtime upgrades and disaster recovery, all within STACKIT's secure infrastructure.

#### Use Case: AI-enabled Contract Management built with Qdrant Hybrid Cloud, STACKIT, and Aleph Alpha

To demonstrate the power of Qdrant Hybrid Cloud on STACKIT, we’ve developed a comprehensive tutorial showing how to build secure, AI-driven applications with a focus on data sovereignty. The tutorial builds a contract management platform where users upload documents (PDF or DOCX), which are then split into segments that can be searched. Because the platform is designed for multitenancy, users can only access their own team's or organization's documents. It also uses custom sharding to store documents by location. Beyond search, the application can rephrase document excerpts to make them clear to readers who lack context.

[Try the Tutorial](/documentation/tutorials/rag-contract-management-stackit-aleph-alpha/)
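The multitenancy described for the contract management platform boils down to filtering by tenant before ranking. The sketch below simulates this in plain Python and is not the tutorial's code; the payload field name `group_id`, the `tenant_search` helper, and the two-dimensional vectors are hypothetical choices for illustration. In Qdrant, the equivalent would be a payload filter applied to the search request, so points belonging to other teams are never candidates.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Point:
    """A stored document chunk: id, embedding, and metadata payload."""
    id: int
    vector: List[float]
    payload: Dict[str, str] = field(default_factory=dict)


def score(query: List[float], point: Point) -> float:
    """Dot-product similarity between the query and a stored vector."""
    return sum(q * v for q, v in zip(query, point.vector))


def tenant_search(points: List[Point], query: List[float],
                  group_id: str, limit: int = 3) -> List[int]:
    """Search only among points whose payload matches the caller's tenant."""
    # Filter first: other tenants' points are excluded even if they score higher.
    candidates = [p for p in points if p.payload.get("group_id") == group_id]
    ranked = sorted(candidates, key=lambda p: score(query, p), reverse=True)
    return [p.id for p in ranked[:limit]]


points = [
    Point(1, [0.9, 0.1], {"group_id": "legal"}),
    Point(2, [0.95, 0.05], {"group_id": "sales"}),
    Point(3, [0.2, 0.8], {"group_id": "legal"}),
]
print(tenant_search(points, [1.0, 0.0], "legal"))
```

Point 2 is the closest match to the query overall, yet it never appears in the "legal" team's results, because isolation is enforced by the filter rather than by ranking.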

#### Start Using Qdrant with STACKIT

Deploying Qdrant Hybrid Cloud on STACKIT is straightforward, thanks to the seamless integration facilitated by Kubernetes. Here are the steps to kickstart your journey:

1. **Qdrant Hybrid Cloud Activation**: Start by activating ‘Hybrid Cloud’ in your [Qdrant Cloud account](https://cloud.qdrant.io/login).

2. **Cluster Integration**: Add your STACKIT Kubernetes clusters as a Hybrid Cloud Environment in the Hybrid Cloud section.

3. **Effortless Deployment**: Use the Qdrant Management Console to effortlessly create and manage your Qdrant clusters on STACKIT.

We invite you to explore the detailed documentation on deploying Qdrant on STACKIT, designed to guide you through each step of the process seamlessly.

[Read Hybrid Cloud Documentation](/documentation/hybrid-cloud/)

#### Ready to Get Started?

Create a [Qdrant Cloud account](https://cloud.qdrant.io/login) and deploy your first **Qdrant Hybrid Cloud** cluster in a few minutes. You can always learn more in the [official release blog](/blog/hybrid-cloud/).

---

draft: false

title: "Vultr and Qdrant Hybrid Cloud Support Next-Gen AI Projects"

short_description: "Providing a flexible platform for high-performance vector search in next-gen AI workloads."

description: "Providing a flexible platform for high-performance vector search in next-gen AI workloads."

preview_image: /blog/hybrid-cloud-vultr/hybrid-cloud-vultr.png

date: 2024-04-10T00:08:00Z

author: Qdrant

featured: false

weight: 1000

tags:

- Qdrant

- Vector Database

---

We’re excited to share that Qdrant and [Vultr](https://www.vultr.com/) are partnering to provide seamless scalability and performance for vector search workloads. With Vultr's global footprint and customizable platform, deploying vector search workloads becomes highly flexible. Qdrant's new [Qdrant Hybrid Cloud](/hybrid-cloud/) offering, with its Kubernetes-native design, combined with Vultr's straightforward virtual machine provisioning, makes setup simple when prototyping and building next-gen AI apps.

#### Adapting to Diverse AI Development Needs with Customization and Deployment Flexibility

In the fast-paced world of AI and ML, businesses are eagerly integrating AI and generative AI to enhance their products with new features like AI assistants, develop new innovative solutions, and streamline internal workflows with AI-driven processes. Given the diverse needs of these applications, it's clear that a one-size-fits-all approach doesn't apply to AI development. This variability in requirements underscores the need for adaptable and customizable development environments.

Recognizing this, Qdrant and Vultr have teamed up to offer developers unprecedented flexibility and control. The collaboration enables the deployment of a fully managed vector database on Vultr’s adaptable platform, catering to the specific needs of diverse AI projects. Developers get a Vultr environment tailored to their vector search workloads, with all data residing in their own environment. For the first time, Qdrant Hybrid Cloud makes it possible to run a fully managed vector database on Vultr, supporting rapid development cycles without modifying existing setups and ensuring that data remains secure within the organization. Moreover, developers can centrally manage their vector database clusters through Qdrant’s control plane and precisely adjust cluster sizes to workload demands. This joint setup marks a significant step toward flexible, secure, and efficient application development tools for AI and ML.

> *"Our collaboration with Qdrant empowers developers to unlock the potential of vector search applications, such as RAG, by deploying Qdrant Hybrid Cloud with its high-performance search capabilities directly on Vultr's global, automated cloud infrastructure. This partnership creates a highly scalable and customizable platform, uniquely designed for deploying and managing AI workloads with unparalleled efficiency."* Kevin Cochrane, Vultr CMO.

#### The Benefits of Deploying Qdrant Hybrid Cloud on Vultr

Together, Qdrant Hybrid Cloud and Vultr offer enhanced AI and ML development with streamlined benefits:

- **Simple and Flexible Deployment:** Deploy Qdrant Hybrid Cloud on Vultr in a few minutes with a simple “one-click” installation by adding your Vultr environment as a Hybrid Cloud Environment to Qdrant.

- **Scalability and Customizability**: Qdrant’s efficient data handling and Vultr’s scalable infrastructure mean projects can be adjusted dynamically to workload demands, optimizing costs without compromising performance or capabilities.

- **Unified AI Stack Management:** Seamlessly manage the entire lifecycle of AI applications, from vector search with Qdrant Hybrid Cloud to deployment and scaling with the Vultr platform and its AI and ML solutions, all within a single, integrated environment. This setup simplifies workflows, reduces complexity, accelerates development cycles, and eases integration with other elements of the AI stack, such as model development, fine-tuning, training, and inference.

- **Global Reach, Local Execution**: With Vultr's worldwide infrastructure and Qdrant's fast vector search, deploy AI solutions globally while ensuring low latency and compliance with local data regulations, enhancing user satisfaction.

#### Getting Started with Qdrant Hybrid Cloud and Vultr

We've compiled an in-depth guide for leveraging Qdrant Hybrid Cloud on Vultr to kick off your journey into building cutting-edge AI solutions. For further insights into the deployment process, refer to our comprehensive documentation.

#### Tutorial: Crafting a Personalized AI Assistant with RAG

This tutorial walks through creating a personalized AI assistant using Qdrant Hybrid Cloud on Vultr, incorporating advanced vector search to power dynamic, interactive experiences. We will develop a RAG pipeline powered by DSPy and detail how to maintain data privacy within your Vultr environment.

[Try the Tutorial](/documentation/tutorials/rag-chatbot-vultr-dspy-ollama/)

#### Documentation: Effortless Deployment with Qdrant

Our Kubernetes-native framework simplifies the deployment of Qdrant Hybrid Cloud on Vultr, enabling you to get started in just a few straightforward steps. Dive into our documentation to learn more.

[Read Hybrid Cloud Documentation](/documentation/hybrid-cloud/)

#### Ready to Get Started?

Create a [Qdrant Cloud account](https://cloud.qdrant.io/login) and deploy your first **Qdrant Hybrid Cloud** cluster in a few minutes. You can always learn more in the [official release blog](/blog/hybrid-cloud/).

---

draft: false

title: "Qdrant Hybrid Cloud: the First Managed Vector Database You Can Run Anywhere"

slug: hybrid-cloud

short_description:

description:

preview_image: /blog/hybrid-cloud/hybrid-cloud.png

social_preview_image: /blog/hybrid-cloud/hybrid-cloud.png

date: 2024-04-15T00:01:00Z

author: Andre Zayarni, CEO & Co-Founder

featured: true

tags:

- Hybrid Cloud

---

We are excited to announce the official launch of [Qdrant Hybrid Cloud](/hybrid-cloud/) today, a significant leap forward in the field of vector search and enterprise AI. Rooted in our open-source origin, we are committed to offering our users and customers unparalleled control and sovereignty over their data and vector search workloads. Qdrant Hybrid Cloud stands as **the industry's first managed vector database that can be deployed in any environment** - be it cloud, on-premise, or the edge.

<p align="center"><iframe width="560" height="315" src="https://www.youtube.com/embed/gWH2uhWgTvM" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" allowfullscreen></iframe></p>

As the AI application landscape evolves, the industry is transitioning from prototyping innovative AI solutions to actively deploying AI applications into production (including GenAI, semantic search, and recommendation systems). In this new phase, **privacy**, **data sovereignty**, **deployment flexibility**, and **control** are at the top of developers’ minds. These factors are critical when developing, launching, and scaling new applications, whether they are customer-facing services like AI assistants or internal company solutions for knowledge and information retrieval or process automation.

Qdrant Hybrid Cloud offers developers a vector database that can be deployed in any existing environment, ensuring data sovereignty and privacy control through complete database isolation - with the full capabilities of our managed cloud service.

- **Unmatched Deployment Flexibility**: With its Kubernetes-native architecture, Qdrant Hybrid Cloud provides the ability to bring your own cloud or compute by deploying Qdrant as a managed service on the infrastructure of choice, such as Oracle Cloud Infrastructure (OCI), Vultr, Red Hat OpenShift, DigitalOcean, OVHcloud, Scaleway, STACKIT, Civo, VMware vSphere, AWS, Google Cloud, or Microsoft Azure.

- **Privacy & Data Sovereignty**: Qdrant Hybrid Cloud offers unparalleled data isolation and lets users process vector search workloads in their own environments.

- **Scalable & Secure Architecture**: Qdrant Hybrid Cloud's design ensures scalability and adaptability with its Kubernetes-native architecture, separates data and control for enhanced security, and offers a unified management interface for ease of use, enabling businesses to grow and adapt without compromising privacy or control.

- **Effortless Setup in Seconds**: Setting up Qdrant Hybrid Cloud is incredibly straightforward, thanks to our [simple Kubernetes installation](/documentation/hybrid-cloud/) that connects effortlessly with your chosen infrastructure, enabling secure, scalable deployments right from the get-go.

Let’s explore these aspects in more detail:

#### Maximizing Deployment Flexibility: Enabling Applications to Run Across Any Environment

Qdrant Hybrid Cloud, powered by our seamless Kubernetes-native architecture, is the first managed vector database engineered for unparalleled deployment flexibility. This means that regardless of where you run your AI applications, you can now enjoy the benefits of a fully managed Qdrant vector database, simplifying operations across any cloud, on-premise, or edge locations.

For this launch of Qdrant Hybrid Cloud, we are proud to collaborate with key cloud providers, including [Oracle Cloud Infrastructure (OCI)](https://blogs.oracle.com/cloud-infrastructure/post/qdrant-hybrid-cloud-now-available-oci-customers), [Red Hat OpenShift](/blog/hybrid-cloud-red-hat-openshift/), [Vultr](/blog/hybrid-cloud-vultr/), [DigitalOcean](/blog/hybrid-cloud-digitalocean/), [OVHcloud](/blog/hybrid-cloud-ovhcloud/), [Scaleway](/blog/hybrid-cloud-scaleway/), [Civo](/documentation/hybrid-cloud/platform-deployment-options/#civo), and [STACKIT](/blog/hybrid-cloud-stackit/). These partnerships underscore our commitment to delivering a versatile and robust vector database solution that meets the complex deployment requirements of today's AI applications.

In addition to our partnerships with key cloud providers, we are also launching in collaboration with renowned AI development tools and framework leaders, including [LlamaIndex](/blog/hybrid-cloud-llamaindex/), [LangChain](/blog/hybrid-cloud-langchain/), [Airbyte](/blog/hybrid-cloud-airbyte/), [JinaAI](/blog/hybrid-cloud-jinaai/), [Haystack by deepset](/blog/hybrid-cloud-haystack/), and [Aleph Alpha](/blog/hybrid-cloud-aleph-alpha/). These launch partners are instrumental in ensuring our users can seamlessly integrate with essential technologies for their AI applications, enriching our offering and reinforcing our commitment to versatile and comprehensive deployment environments.

Together with our launch partners we have created detailed tutorials that show how to build cutting-edge AI applications with Qdrant Hybrid Cloud on the infrastructure of your choice. These tutorials are available in our [launch partner blog](/blog/hybrid-cloud-launch-partners/). Additionally, you can find expansive [documentation](/documentation/hybrid-cloud/) and instructions on how to [deploy Qdrant Hybrid Cloud](/documentation/hybrid-cloud/hybrid-cloud-setup/).

#### Powering Vector Search & AI with Unmatched Data Sovereignty

Proprietary data, the lifeblood of AI-driven innovation, fuels personalized experiences, accurate recommendations, and timely anomaly detection. This data, unique to each organization, encompasses customer behaviors, internal processes, and market insights - crucial for tailoring AI applications to specific business needs and competitive differentiation. However, leveraging such data effectively while ensuring its **security, privacy, and control** requires diligence.

The innovative architecture of Qdrant Hybrid Cloud ensures **complete database isolation**, empowering developers with the autonomy to tailor where they process their vector search workloads with total data sovereignty. Rooted deeply in our commitment to open-source principles, this approach aims to foster a new level of trust and reliability by providing the essential tools to navigate the exciting landscape of enterprise AI.

#### How We Designed the Qdrant Hybrid Cloud Architecture

We designed the architecture of Qdrant Hybrid Cloud to meet the evolving needs of businesses seeking unparalleled flexibility, control, and privacy.

- **Kubernetes-Native Design**: By embracing Kubernetes, we've ensured that our architecture is both scalable and adaptable. This choice supports our deployment flexibility principle, allowing Qdrant Hybrid Cloud to integrate seamlessly with any infrastructure that can run Kubernetes.

- **Decoupled Data and Control Planes**: Our architecture separates the data plane (where the data is stored and processed) from the control plane (which manages the cluster operations). This separation enhances security, allows for more granular control over the data, and enables the data plane to reside anywhere the user chooses.

- **Unified Management Interface**: Despite the underlying complexity and the diversity of deployment environments, we designed a unified, user-friendly interface that simplifies the Qdrant cluster management. This interface supports everything from deployment to scaling and upgrading operations, all accessible from the [Qdrant Cloud portal](https://cloud.qdrant.io/login).

- **Extensible and Modular**: Recognizing the rapidly evolving nature of technology and enterprise needs, we built Qdrant Hybrid Cloud to be both extensible and modular. Users can easily integrate new services, data sources, and deployment environments as their requirements grow and change.
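The decoupled control and data planes can be illustrated with the reconciliation pattern that Kubernetes-native control planes commonly rely on. The following is a minimal sketch under stated assumptions, not Qdrant's implementation. `DesiredState`, `DataPlane`, and `reconcile` are hypothetical names, and the "cluster" is simulated as an in-memory dictionary. The control plane records only the desired state, such as a replica count or version chosen in a management UI. An agent running inside the user's environment then converges the actual state toward it.

```python
from dataclasses import dataclass, field


@dataclass
class DesiredState:
    """What the control plane asks for, e.g. set from a management console (hypothetical)."""
    cluster_name: str
    replicas: int
    version: str


@dataclass
class DataPlane:
    """Actual state inside the user's own environment, simulated as a dict."""
    nodes: dict = field(default_factory=dict)  # node name -> running version

    def plan(self, desired: DesiredState) -> list:
        """Compute the operations needed to converge on the desired state."""
        actions = []
        wanted = {f"{desired.cluster_name}-{i}" for i in range(desired.replicas)}
        # Scale down: remove nodes that are no longer wanted.
        for name in sorted(set(self.nodes) - wanted):
            actions.append(("delete", name))
        # Scale up or upgrade: create missing nodes, upgrade outdated ones.
        for name in sorted(wanted):
            current = self.nodes.get(name)
            if current is None:
                actions.append(("create", name))
            elif current != desired.version:
                actions.append(("upgrade", name))
        return actions

    def apply(self, action: tuple, desired: DesiredState) -> None:
        op, name = action
        if op == "delete":
            del self.nodes[name]
        else:  # "create" and "upgrade" both leave the node on the desired version
            self.nodes[name] = desired.version


def reconcile(plane: DataPlane, desired: DesiredState) -> list:
    """One pass of the loop: only the desired state (metadata) is passed in, no data."""
    actions = plane.plan(desired)
    for action in actions:
        plane.apply(action, desired)
    return actions


plane = DataPlane()
print(reconcile(plane, DesiredState("demo", replicas=2, version="v1")))
# [('create', 'demo-0'), ('create', 'demo-1')]
print(reconcile(plane, DesiredState("demo", replicas=1, version="v2")))
# [('delete', 'demo-1'), ('upgrade', 'demo-0')]
```

Because the loop is idempotent, running it again with an unchanged desired state produces no actions. This is what allows scaling and upgrades to be driven from a central console while the nodes and their data stay where they are.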

#### Diagram: Qdrant Hybrid Cloud Architecture

#### Quickstart: Effortless Setup with Our One-Step Installation

We’ve made getting started with Qdrant Hybrid Cloud as simple as possible. The Kubernetes “One-Step” installation will allow you to connect with the infrastructure of your choice. This is how you can get started:

1. **Activate Hybrid Cloud**: Simply sign up for or log into your [Qdrant Cloud](https://cloud.qdrant.io/login) account and navigate to the **Hybrid Cloud** section.

2. **Onboard your Kubernetes cluster**: Follow the onboarding wizard and add your Kubernetes cluster as a Hybrid Cloud Environment - be it in the cloud, on-premise, or at the edge.

3. **Deploy Qdrant clusters securely, with confidence:** Now, you can effortlessly create and manage Qdrant clusters in your own environment, directly from the central Qdrant Management Console. This supports horizontal and vertical scaling, zero-downtime upgrades, and disaster recovery seamlessly, allowing you to deploy anywhere with confidence.

Explore our [detailed documentation](/documentation/hybrid-cloud/) and [tutorials](/documentation/examples/) to seamlessly deploy Qdrant Hybrid Cloud in your preferred environment, and don't miss our [launch partner blog post](/blog/hybrid-cloud-launch-partners/) for practical insights. Start leveraging the full potential of Qdrant Hybrid Cloud and [create your first Qdrant cluster today](https://cloud.qdrant.io/login), unlocking the flexibility and control essential for your AI and vector search workloads.


## Launch Partners

We launched Qdrant Hybrid Cloud with the assistance and support of our trusted partners. Learn what they have to say about our latest offering:

#### Oracle Cloud Infrastructure:

> *"We are excited to partner with Qdrant to bring their powerful vector search capabilities to Oracle Cloud Infrastructure. By offering Qdrant Hybrid Cloud as a managed service on OCI, we are empowering enterprises to harness the full potential of AI-driven applications while maintaining complete control over their data. This collaboration represents a significant step forward in making scalable vector search accessible and manageable for businesses across various industries, enabling them to drive innovation, enhance productivity, and unlock valuable insights from their data."* Dr. Sanjay Basu, Senior Director of Cloud Engineering, AI/GPU Infrastructure at Oracle

Read more in [OCI's latest Partner Blog](https://blogs.oracle.com/cloud-infrastructure/post/qdrant-hybrid-cloud-now-available-oci-customers).

#### Red Hat:

> *“Red Hat is committed to driving transparency, flexibility and choice for organizations to more easily unlock the power of AI. By working with partners like Qdrant to enable streamlined integration experiences on Red Hat OpenShift for AI use cases, organizations can more effectively harness critical data and deliver real business outcomes,”* said Steven Huels, vice president and general manager, AI Business Unit, Red Hat.

Read more in our [official Red Hat Partner Blog](/blog/hybrid-cloud-red-hat-openshift/).

#### Vultr:

> *"Our collaboration with Qdrant empowers developers to unlock the potential of vector search applications, such as RAG, by deploying Qdrant Hybrid Cloud with its high-performance search capabilities directly on Vultr's global, automated cloud infrastructure. This partnership creates a highly scalable and customizable platform, uniquely designed for deploying and managing AI workloads with unparalleled efficiency."* Kevin Cochrane, Vultr CMO.

Read more in our [official Vultr Partner Blog](/blog/hybrid-cloud-vultr/).

#### OVHcloud:

> *“The partnership between OVHcloud and Qdrant Hybrid Cloud highlights, in the European AI landscape, a strong commitment to innovative and secure AI solutions, empowering startups and organisations to navigate AI complexities confidently. By emphasizing data sovereignty and security, we enable businesses to leverage vector databases securely."* Yaniv Fdida, Chief Product and Technology Officer, OVHcloud

Read more in our [official OVHcloud Partner Blog](/blog/hybrid-cloud-ovhcloud/).

#### DigitalOcean:

> *“Qdrant, with its seamless integration and robust performance, equips businesses to develop cutting-edge applications that truly resonate with their users. Through applications such as semantic search, Q&A systems, recommendation engines, image search, and RAG, DigitalOcean customers can leverage their data to the fullest, ensuring privacy and driving innovation.“* - Bikram Gupta, Lead Product Manager, Kubernetes & App Platform, DigitalOcean.

Read more in our [official DigitalOcean Partner Blog](/blog/hybrid-cloud-digitalocean/).

#### Scaleway:

> *"With our partnership with Qdrant, Scaleway reinforces its status as Europe's leading cloud provider for AI innovation. The integration of Qdrant's fast and accurate vector database enriches our expanding suite of AI solutions. This means you can build smarter, faster AI projects with us, worry-free about performance and security."* Frédéric Bardolle, Lead PM AI, Scaleway

Read more in our [official Scaleway Partner Blog](/blog/hybrid-cloud-scaleway/).

#### Airbyte:

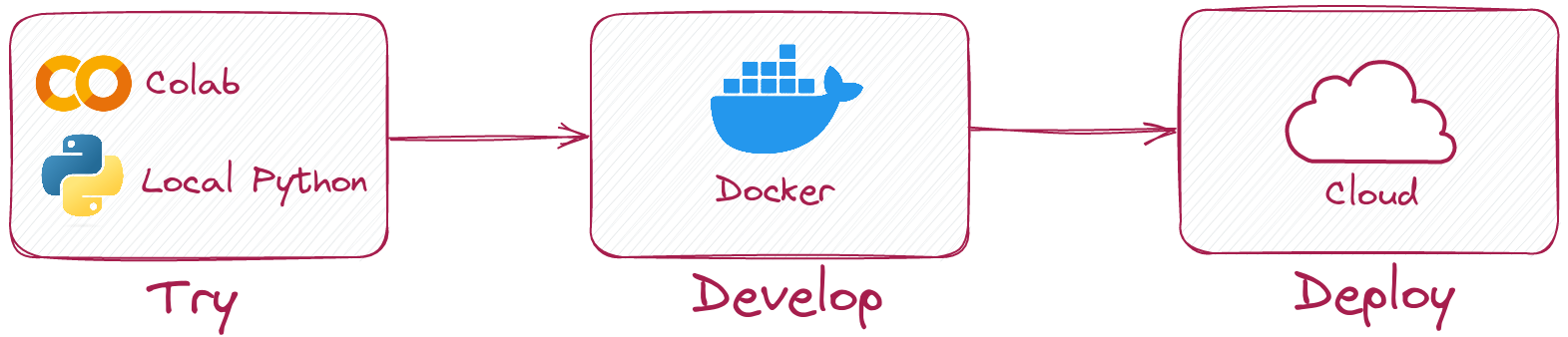

> *“The new Qdrant Hybrid Cloud is an exciting addition that offers peace of mind and flexibility, aligning perfectly with the needs of Airbyte Enterprise users who value the same balance. Being open-source at our core, both Qdrant and Airbyte prioritize giving users the flexibility to build and test locally—a significant advantage for data engineers and AI practitioners. We're enthusiastic about the Hybrid Cloud launch, as it mirrors our vision of enabling users to confidently transition from local development and local deployments to a managed solution, with both cloud and hybrid cloud deployment options.”* AJ Steers, Staff Engineer for AI, Airbyte

Read more in our [official Airbyte Partner Blog](/blog/hybrid-cloud-airbyte/).

#### deepset:

> *“We hope that with Haystack 2.0 and our growing partnerships such as what we have here with Qdrant Hybrid Cloud, engineers are able to build AI systems with full autonomy. Both in how their pipelines are designed, and how their data are managed.”* Tuana Çelik, Developer Relations Lead, deepset.

Read more in our [official Haystack by deepset Partner Blog](/blog/hybrid-cloud-haystack/).

#### LlamaIndex:

> *“LlamaIndex is thrilled to partner with Qdrant on the launch of Qdrant Hybrid Cloud, which upholds Qdrant's core functionality within a Kubernetes-based architecture. This advancement enhances LlamaIndex's ability to support diverse user environments, facilitating the development and scaling of production-grade, context-augmented LLM applications.”* Jerry Liu, CEO and Co-Founder, LlamaIndex

Read more in our [official LlamaIndex Partner Blog](/blog/hybrid-cloud-llamaindex/).

#### LangChain:

> *“The AI industry is rapidly maturing, and more companies are moving their applications into production. We're really excited at LangChain about supporting enterprises' unique data architectures and tooling needs through integrations and first-party offerings through LangSmith. First-party enterprise integrations like Qdrant's greatly contribute to the LangChain ecosystem with enterprise-ready retrieval features that seamlessly integrate with LangSmith's observability, production monitoring, and automation features, and we're really excited to develop our partnership further.”* -Erick Friis, Founding Engineer at LangChain

Read more in our [official LangChain Partner Blog](/blog/hybrid-cloud-langchain/).

#### Jina AI:

> *“The collaboration of Qdrant Hybrid Cloud with Jina AI’s embeddings gives every user the tools to craft a perfect search framework with unmatched accuracy and scalability. It’s a partnership that truly pays off!”* Nan Wang, CTO, Jina AI

Read more in our [official Jina AI Partner Blog](/blog/hybrid-cloud-jinaai/).

We have also launched Qdrant Hybrid Cloud with the support of **Aleph Alpha**, **STACKIT** and **Civo**. Learn more about our valued partners:

- **Aleph Alpha:** [Enhance AI Data Sovereignty with Aleph Alpha and Qdrant Hybrid Cloud](/blog/hybrid-cloud-aleph-alpha/)

- **STACKIT:** [STACKIT and Qdrant Hybrid Cloud for Best Data Privacy](/blog/hybrid-cloud-stackit/)

- **Civo:** [Deploy Qdrant Hybrid Cloud on Civo Kubernetes](/documentation/hybrid-cloud/platform-deployment-options/#civo) |

qdrant-landing/content/blog/indexify-unveiled-diptanu-gon-choudhury-vector-space-talk-009.md | ---

draft: false

title: Indexify Unveiled - Diptanu Gon Choudhury | Vector Space Talks

slug: indexify-content-extraction-engine

short_description: Diptanu Gon Choudhury discusses how Indexify is transforming

the AI-driven workflow in enterprises today.

description: Diptanu Gon Choudhury shares insights on reimagining Spark and data

infrastructure while discussing his work on Indexify to enhance AI-driven

workflows and knowledge bases.

preview_image: /blog/from_cms/diptanu-choudhury-cropped.png

date: 2024-01-26T16:40:55.469Z

author: Demetrios Brinkmann

featured: false

tags:

- Vector Space Talks

- Indexify

- structured extraction engine

- rag-based applications

---

> *“We have something like Qdrant, which is very geared towards doing vector search. And so we understand the shape of the storage system now.”*\

— Diptanu Gon Choudhury

>

Diptanu Gon Choudhury is the founder of Tensorlake. They are building Indexify, an open-source, scalable, structured extraction engine for unstructured data that builds near-real-time knowledge bases for AI/agent-driven workflows and query engines. Before building Indexify, Diptanu created the Nomad cluster scheduler at HashiCorp, invented the Titan/Titus cluster scheduler at Netflix, led the FBLearner machine learning platform, and built the real-time speech inference engine at Facebook.

***Listen to the episode on [Spotify](https://open.spotify.com/episode/6MSwo7urQAWE7EOxO7WTns?si=_s53wC0wR9C4uF8ngGYQlg), Apple Podcasts, Podcast Addict, or Castbox. You can also watch this episode on [YouTube](https://youtu.be/RoOgTxHkViA).***

<iframe width="560" height="315" src="https://www.youtube.com/embed/RoOgTxHkViA?si=r0EjWlssjFDVrzo6" title="YouTube video player" frameborder="0" allow="accelerometer; autoplay; clipboard-write; encrypted-media; gyroscope; picture-in-picture; web-share" allowfullscreen></iframe>

<iframe src="https://podcasters.spotify.com/pod/show/qdrant-vector-space-talk/embed/episodes/Indexify-Unveiled-A-Scalable-and-Near-Real-time-Content-Extraction-Engine-for-Multimodal-Unstructured-Data---Diptanu-Gon-Choudhury--Vector-Space-Talk-009-e2el8qc/a-aas4nil" height="102px" width="400px" frameborder="0" scrolling="no"></iframe>

## **Top takeaways:**

Discover how reimagined data infrastructures revolutionize AI-agent workflows as Diptanu delves into Indexify, which transforms raw data into real-time knowledge bases, and shares expert insights on optimizing RAG-based applications, all amidst the ever-evolving landscape of Spark.

Here's What You'll Discover:

1. **Innovative Data Infrastructure**: Diptanu dives deep into how Indexify is revolutionizing the enterprise world by providing a sharper focus on data infrastructure and a refined abstraction for generative AI this year.

2. **AI-Copilot for Call Centers**: Learn how Indexify streamlines customer service with a real-time knowledge base, transforming how agents interact and resolve issues.

3. **Scaling Real-Time Indexing**: Discover the system’s powerful capability to index content as it happens, enabling multiple extractors to run simultaneously. It’s all about the right model and the computing capacity for on-the-fly content generation.

4. **Revamping Developer Experience**: Get a glimpse into the future as Diptanu chats with Demetrios about reimagining Spark to fit today's tech capabilities, vastly different from just two years ago!

5. **AI Agent Workflow Insights**: Understand the crux of AI agent-driven workflows, where models dynamically react to data, making orchestrated decisions in live environments.

> Fun Fact: The development of Indexify by Diptanu was spurred by the rising use of Large Language Models in applications and the subsequent need for better data infrastructure to support these technologies.

>

## Show notes:

00:00 AI's impact on model production and workflows.\

05:15 Building agents need indexes for continuous updates.\

09:27 Early RAG and LLM adopters neglect data infrastructure.\

12:32 Design partner creating copilot for call centers.\

17:00 Efficient indexing and generation using scalable models.\

20:47 Spark is versatile, used for many cases.\

24:45 Recent survey paper on RAG covers tips.\

26:57 Evaluation of various aspects of data generation.\

28:45 Balancing trust and cost in factual accuracy.

## More Quotes from Diptanu:

*"In 2017, when I started doing machine learning, it would take us six months to ship a good model in production. And here we are today, in January 2024, new models are coming out every week, and people are putting them in production.”*\

-- Diptanu Gon Choudhury

*"Over a period of time, you want to extract new information out of existing data, because models are getting better continuously.”*\

-- Diptanu Gon Choudhury

*"We are in the golden age of demos. Golden age of demos with LLMs. Almost anyone, I think with some programming knowledge can kind of like write a demo with an OpenAI API or with an embedding model and so on.”*\

-- Diptanu Gon Choudhury

## Transcript:

Demetrios:

We are live, baby. This is it. Welcome back to another Vector Space Talks. I'm here with my man Diptanu. He is the founder and creator of Tensorlake. They are building Indexify, an open-source, scalable, structured extraction engine for unstructured data to build near-real-time knowledge bases for AI-agent-driven workflows and query engines. And if it sounds like I just threw every buzzword in the book into that sentence, you can go ahead and say, bingo, we are here, and we're about to dissect what all that means in the next 30 minutes. So, dude, first of all, I've got to let everyone who is here know that you are a bit of a hard hitter.

Demetrios:

You've got some notches on your belt, we could say. Before you created Tensorlake, let's just let people know that you were at HashiCorp, you created the Nomad cluster scheduler, and you were the inventor of the Titus cluster scheduler at Netflix. You led the FBLearner machine learning platform and built the real-time speech inference engine at Facebook. You may be one of the most decorated people we've had on, and that I have had the pleasure of talking to, and that's saying a lot. I've talked to a lot of people in my day, so I want to dig in, man. First question I've got for you, it's a big one. What the hell do you mean by AI-agent-driven workflows? Are you talking about autonomous agents? Are you talking about, like, voice agents? What's that?

Diptanu Gon Choudhury:

Yeah, I was going to say, what a great last couple of years it has been for AI. I mean, in-context learning has kind of, like, changed the way people do models and access models and use models in production. Like, at Facebook in 2017, when I started doing machine learning, it would take us six months to ship a good model in production. And here we are today, in January 2024, new models are coming out every week, and people are putting them in production. It's a little bit of a YOLO, where I feel like people have stopped measuring how well models are doing and just ship to production, but here we are. But I think underpinning all of this is this whole idea that models are capable of reasoning over data and non-parametric knowledge to a certain extent. And what we are seeing now is that workflows stop being completely heuristics-driven, or, as people say, Software 1.0-driven. People are putting models in the picture, where models react to the data that a workflow is seeing, and then people use the model's behavior on the data and kind of make the model decide what the workflow should do. And to me, that's pretty much what an agent is: an agent responds to information about the world, information which is external, and reacts to it and orchestrates some kind of business process or workflow, some kind of decision making in a workflow.

Diptanu Gon Choudhury:

That's what I mean by agents. And they can be like autonomous. They can be something that writes an email or writes a chat message or something like that. The spectrum is wide here.

Demetrios:

Excellent. So next question, logical question is, and I will second what you're saying, the advances that we've seen in the last year, wow. The times are a-changin', and we are trying to evaluate while in production. And I like the term, yeah, we just YOLOed it, or as the young kids say now, or so I've heard, because I'm not one of them, we just do it for the plot. So we are getting those models out there, and we're seeing if they work. And I imagine you saw some funny quotes from the Chevrolet chatbot. It was a chatbot on the Chevrolet support page, and it was asked if Teslas are better than Chevys. And it said, yeah, Teslas are better than Chevys.

Demetrios:

So yes, that's what we do these days. This is 2024, baby. We just put it out there and test in prod. Anyway, getting back on topic, let's talk about Indexify, because there was a whole lot of jargon in what I said you do. Give me the straight-shooting answer. Break it down for me like I'm five. Yeah.

Diptanu Gon Choudhury:

So if you are building an agent today which depends on augmented generation, like retrieval-augmented generation, and given that this is Qdrant's show, I'm assuming people are very much familiar with RAG and augmented generation. So if people are building applications where the data is external or non-parametric, and the model needs to see updated information all the time, because, let's say, the documents under the hood that the application uses for its knowledge base are changing, or someone is building a chat application where new chat messages are coming in all the time and the agent or the model needs to know what is happening, then you need an index, or a set of indexes, which are continuously updated. And also, over a period of time, you want to extract new information out of existing data, because models are getting better continuously. And the other thing is, AI, until now, or until a couple of years back, used to be very domain-oriented or task-oriented, where modality was the key behind models. Now we are entering into a world where information encoded in any form, documents, videos, or whatever, is important to the workflows and agents that people are building. And so you need the capability to ingest any kind of data and then build indexes out of it. And indexes, in my opinion, are not just embedding indexes; they could be indexes of semi-structured data. So let's say you have an invoice.

Diptanu Gon Choudhury:

You may want to transform that invoice into semi-structured data: where the invoice is coming from, what the line items are, and so on. So in a nutshell, you need good data infrastructure to store these indexes and serve these indexes. You also need a scalable compute engine so that whenever new data comes in, you're able to index it appropriately and update the indexes. And you need the capability to experiment, to add new extractors and new models into your platform, and so on. Indexify helps you with all that, right? So imagine Indexify to be an online service with an API, so that developers can upload any form of unstructured data, and then a bunch of extractors run in parallel on the cluster, extract information out of this unstructured data, and continuously update indexes on something like Qdrant, or Postgres for semi-structured data.

Demetrios:

Okay?

Diptanu Gon Choudhury:

And you basically get that in a single application, in a single binary, which is distributed on your cluster. You wouldn't have any external dependencies other than storage systems, essentially, to have a very scalable data infrastructure for your RAG applications or for your LLM agents.
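The flow described above can be sketched in plain Python: upload unstructured content, run several extractors in parallel, and keep a vector index and a semi-structured store up to date. This is not Indexify's API. The extractor functions, the hash-based `embed` stand-in, the invoice line format, and the in-memory dictionaries standing in for Qdrant and Postgres are all hypothetical placeholders.

```python
import hashlib
import re
from concurrent.futures import ThreadPoolExecutor

# Stand-ins for the serving layer: a vector index (e.g. Qdrant) and a
# semi-structured store (e.g. Postgres). Both are plain dicts here.
vector_index: dict = {}
structured_store: dict = {}


def embed(text: str, dim: int = 8) -> list:
    """Toy deterministic 'embedding' from a hash; a real system would call a model."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:dim]]


def embedding_extractor(doc_id: str, text: str) -> None:
    vector_index[doc_id] = embed(text)


# Hypothetical invoice line format: "<item> <qty> x <unit price>", one per line.
LINE_ITEM = re.compile(r"^(\w[\w ]*?) +(\d+) *x *(\d+(?:\.\d+)?)$", re.MULTILINE)


def invoice_extractor(doc_id: str, text: str) -> None:
    """Turn invoice lines into semi-structured line items, if any are present."""
    items = [
        {"item": m.group(1), "qty": int(m.group(2)), "unit_price": float(m.group(3))}
        for m in LINE_ITEM.finditer(text)
    ]
    if items:
        structured_store[doc_id] = items


EXTRACTORS = [embedding_extractor, invoice_extractor]


def ingest(doc_id: str, text: str) -> None:
    """Run every registered extractor on a new document in parallel."""
    with ThreadPoolExecutor(max_workers=len(EXTRACTORS)) as pool:
        futures = [pool.submit(fn, doc_id, text) for fn in EXTRACTORS]
        for f in futures:
            f.result()  # re-raise extractor errors instead of dropping them


ingest("inv-1", "Invoice from ACME\nWidget 2 x 9.50\nGadget 1 x 20")
print(structured_store["inv-1"])
```

Because the extractors are independent functions, adding a capability amounts to registering another one and running it over existing documents. That re-run is exactly where the reindexing question comes up.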

Demetrios:

Excellent. So then talk to me about the inspiration for creating this. What was it that you saw that gave you that spark of, you know what? There needs to be something on the market that can handle this. Yeah.

Diptanu Gon Choudhury:

Earlier this year I was working with the founder of a generative AI startup here. I was looking at what they were doing, I was helping them out, and I saw that. And then I looked around at what is happening. Well, not earlier this year, but in 2023. Somewhere in early 2023, I was looking at how developers are building applications with LLMs, and we are in the golden age of demos. Golden age of demos with LLMs. Almost anyone, I think, with some programming knowledge can kind of, like, write a demo with an OpenAI API or with an embedding model and so on. And I mostly saw that the data infrastructure part of those demos or those applications was very basic: people would do a one-shot transformation of data, build indexes, and then build an application on top.

Diptanu Gon Choudhury:

And then I started talking to early adopters of RAG and LLMs in enterprises about how they're building their data pipelines and their data infrastructure for LLMs. And I feel like people were mostly excited about the application layer, right? Very little thought was being put into the data infrastructure, and it was almost like it was built out of duct tape, right? Pipelines and workflows with RabbitMQ, X, Y, and Z, very bespoke pipelines, which are good at one-shot transformation of data. So you put some documents on a queue, and then somehow the documents get embedded and put into something like Qdrant. But there was no thought about how you reindex. How do you add a new capability into your pipeline? Or how do you keep the whole system online, right? Keep the indexes online while reindexing and so on. And so classically, if you talk to a distributed systems engineer, they would say, you know, this is a MapReduce problem, right? So there are tools like Spark, there are tools like Anyscale's Ray, and they would classically solve these problems, right? And if you go to Facebook, we used Spark for something like this, or Presto, or we have a ton of big data infrastructure for handling things like this. And I thought that in 2023 we needed a better abstraction for doing something like this. The world is moving to serverless, right? Developers understand functions. Developers think about compute as functions, functions which are distributed on the cluster and can transform content into something that LLMs can consume.
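A common way to keep indexes online while reindexing is to build a complete new index version in the background. Queries keep reading from the current version through an alias, and once the new version is ready the alias is repointed atomically. Qdrant supports this pattern through collection aliases. The sketch below, however, does not use the Qdrant client. It is a minimal in-memory model under that assumption, and `AliasedIndexes` and its methods are hypothetical names.

```python
import threading


class AliasedIndexes:
    """Queries resolve an alias to a concrete index version (hypothetical in-memory model)."""

    def __init__(self) -> None:
        self._versions = {}  # version name -> {doc_id: extracted value}
        self._alias = None   # version currently served to readers
        self._lock = threading.Lock()

    def build(self, version: str, docs: dict, extractor) -> None:
        """Build a full new version off to the side; readers are unaffected."""
        built = {doc_id: extractor(text) for doc_id, text in docs.items()}
        with self._lock:
            self._versions[version] = built

    def switch_alias(self, version: str) -> None:
        """Atomically repoint the alias, then drop versions nobody reads anymore."""
        with self._lock:
            if version not in self._versions:
                raise KeyError(f"unknown index version: {version}")
            self._alias = version
            for name in [v for v in self._versions if v != version]:
                del self._versions[name]

    def query(self, doc_id: str):
        with self._lock:
            return self._versions[self._alias].get(doc_id)


docs = {"a": "Hello World"}
idx = AliasedIndexes()
idx.build("v1", docs, str.lower)
idx.switch_alias("v1")
idx.build("v2", docs, str.upper)  # e.g. re-extract with a newer, better model
print(idx.query("a"))             # still served from v1 while v2 exists
idx.switch_alias("v2")
print(idx.query("a"))             # now served from v2
```

The cost of this approach is holding two versions of the index at once during the rebuild. In exchange, readers never see a half-built index.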

Diptanu Gon Choudhury:

And that was the inspiration. I was thinking, what would it look like if we redid Spark or Ray for generative AI in 2023? How can we make it so easy that developers can write functions to extract content out of any form of unstructured data, right? You don't need to think about text, audio, video, or whatever. You write a function which can handle a particular data type and then extract something out of it. And now, how can we scale it? How can we very transparently give developers all the abilities to manage indexes and serve indexes in production? And so that was the inspiration for it. I wanted to reimagine MapReduce for generative AI.

Demetrios:

Wow. I like the vision. You sent me over some ideas of different use cases that we can walk through, and I'd love to go through them and turn them into actual tangible things that you've been seeing out there, and how you can plug it into these different use cases. I think the first one that I wanted to look at was building a copilot for call center agents and what that actually looks like in practice. Yeah.

Diptanu Gon Choudhury:

So I took that example because it was super close to my heart, in the sense that we have a design partner who is doing this. And you'll see that in a call center, the information that comes into a call center, or the information that an agent, a human being in a call center, works with, is very rich. In a call center you have phone calls coming in, you have chat messages coming in, you have emails going on, and then there are also documents which are knowledge bases for human beings to answer questions or make decisions on. Right. And so they're working with a lot of data and they're always pulling up a lot of information. And so one of our design partners is building a copilot for call centers, essentially. They want the humans in a call center to answer questions really easily based on the context of a conversation or a call that is happening with one of their users, or to pull up up-to-date information about the policies of the company and so on. And so the way they are using Indexify is that they ingest all the raw content that is coming in, audio (not video, actually), emails, and chat messages, into Indexify.

Diptanu Gon Choudhury:

And then they have a bunch of extractors which handle different types of modalities, right? Some extractors extract information out of emails: they would do email classification, embedding of emails, and entity extraction from emails. And so they are creating many different types of indexes from emails. Same with speech, right? For data that is coming in through calls, they would transcribe it first using an ASR extractor, and from there on the transcript would be embedded, the whole pipeline for text would be invoked on it, and then the speech would be searchable. If someone wants to find out what conversation has happened, they would be able to look things up. There is a summarizer extractor, which looks at a phone call and then summarizes what the customer called about, and so on.

Diptanu Gon Choudhury:

So they are basically building a near-real-time knowledge base of, one, what is happening with the customer, and two, they are pulling in information from their documents. So that's one classic use case. The only dependencies they have now are essentially a blob storage system and serving infrastructure for indexes, in this case Qdrant and Postgres. They have a bunch of extractors that they have written in house, and some extractors that we have written that they use out of the box, and they can scale the system as much as they need. It gives them a high-level abstraction for building indexes and using them with LLMs.

Demetrios:

So I really like this idea of how you have the unstructured and the semi-structured data, and how those almost play together. One thing that is very clear is that you've got the transcripts and the embeddings you're creating, but you've also got very structured documents, maybe from the last call, sitting in some kind of database. Say it's in Salesforce, and you've got it all there, so there is some structure to that data. Now you want to be able to plug into all of that, especially in this use case, because the human call center agents need to make decisions, and they need to make them fast. Right. So the real-time aspect really plays a part in that.

Diptanu Gon Choudhury:

Exactly.

Demetrios:

It can't be something that gets back to you in 30 seconds. Or maybe 30 seconds is okay, but really, the less time the better. Traditionally, when I think about using LLMs, I kind of take real time off the table. Have you had luck making it more real time?

Diptanu Gon Choudhury:

So there are two aspects to it. The first is how quickly your indexes can be updated. As of last night, we can index all of Wikipedia in under five minutes on AWS. We can run up to 5,000 extractors with Indexify concurrently, in parallel. So I feel like we have the indexing part covered, unless, obviously, you are using a model behind an API where we don't have any control. But assuming you're using some kind of embedding model or extractor model, like a named entity extractor or a speech-to-text model, that you control and whose operational characteristics you understand, we can scale it out, and our system can handle the scale of getting everything indexed really quickly. Now, the generation side is where it's a little more nuanced, right? Generation latency depends on how big the generation model is. If you're using GPT-4, then obviously you are working within the latency budgets that OpenAI provides.
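Per-document extraction is embarrassingly parallel, which is what makes this kind of fan-out possible. The sketch below uses Python's standard `concurrent.futures` to apply one extractor across many documents at once. `run_extractor_parallel` is a hypothetical helper, and this says nothing about how Indexify actually schedules extractors across a cluster.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_extractor_parallel(extractor: Callable[[T], R], docs: List[T], workers: int = 8) -> List[R]:
    """Apply `extractor` to every document concurrently, preserving input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in the same order as `docs`,
        # even though the calls themselves may finish out of order.
        return list(pool.map(extractor, docs))
```

Threads fit extractors that mostly wait on a model server or an API; for CPU-heavy local models, `ProcessPoolExecutor` would likely be the closer fit.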

Diptanu Gon Choudhury:

If you're using some other model, like a mixture-of-experts (MoE) model or something that is very optimized, and you have done the work of optimizing it, then obviously you can cut the latency down. So it depends on the end-to-end stack. It's not a single, monolithic piece of software, so it depends on a lot of different factors. But I can confidently claim that we have the real-time indexing side covered, as long as the models people are using are reasonable and they have enough compute in their cluster.

Demetrios:

Yeah. Okay. Now talking again about the idea of rethinking the developer experience with this and almost reimagining what Spark would be if it were created today.

Diptanu Gon Choudhury:

Exactly.

Demetrios:

Are there manifestations in what you've built that could only happen because you created it today, as opposed to even two years ago?

Diptanu Gon Choudhury:

Yeah. So take Spark, for example, right? Spark was born out of big data, the 2011-2012 era of big data. In fact, I was one of the committers on Apache Mesos, the cluster scheduler that Spark used for a long time. And when I was at HashiCorp, we tried to contribute support for Nomad in Spark. What I'm trying to say is that Spark is a task scheduler at the end of the day, and it uses an underlying cluster scheduler. So the teams that manage Spark or similar tools today have ten or 15 people, or they're using a hosted solution, because it is super complex to manage. Right. A Spark cluster is not easy to manage.

Diptanu Gon Choudhury:

I'm not saying it's a bad thing. Software written at any given point in time reflects the world in which it was born, and Spark is obviously from that era of systems engineering. Since then, systems engineering has progressed quite a lot. I feel like we have learned how to make software that is scalable, yet simpler to understand and operate. The other big thing that I feel is missing in Spark, or in Dask or Ray, is that they are not natively integrated into the data stack. Right. They don't have an opinion on what the data stack is.

Diptanu Gon Choudhury:

They're excellent MapReduce systems, and the data stuff is layered on top. To a certain extent, that has allowed them to generalize to so many different use cases; people use Spark for everything. At Facebook, I was using Spark for batch transcription of speech to text for various use cases, with a lot of issues under the hood. Right? They are tied to the big data storage infrastructure. So when I am reimagining Spark, I can take the position that we are going to use blob storage for ingestion and for writing raw data, and we will have low-latency serving infrastructure, something like Postgres or ClickHouse, for serving structured or semi-structured data. And then we have something like Qdrant, which is very geared towards vector search. So we understand the shape of the storage system now.

Diptanu Gon Choudhury:

We understand that developers want to integrate with these stores. So now we can control the compute layer so that it is optimized for doing the compute and producing data that can be written into those data stores, right? We understand the I/O characteristics of the underlying storage systems really well. And we understand that the use case is that people want to consume that data in LLMs, right? So we can make design decisions about how we write into the storage systems and how we serve data, very specifically for LLMs. Those are decisions a developer would otherwise have to make themselves if they were using some other tool.

Demetrios:

Yeah, it does feel like optimizing for that makes sense, recognizing that Spark is almost like a Swiss Army knife. As you mentioned, you can do a million things with it, but sometimes you don't want to do a million things. You just want to do one thing, and you want it to be really easy to do that one thing. I had a friend who worked at an enterprise, and he was talking about how Spark engineers have all the job security in the world, because (a), like you said, you need a lot of them, and (b), it's hard to get really deep into it and know all its ins and outs. So I can see where you're coming from on that one.

Diptanu Gon Choudhury:

Yeah, I mean, we basically integrated the compute engine with the storage so developers don't have to think about it. Plug in whatever storage you want. We support, obviously, all the blob stores, and we support Qdrant and Postgres right now; in the future, Indexify could support other storage engines too. Now all an application developer needs to do is deploy this on AWS or GCP or wherever, right? Have enough compute, point it at the storage systems, and build your application. You don't need to make any of the hard decisions, or build a distributed system by bringing together five different tools and spending five months building the data layer. Focus on the application, build your agents.

Demetrios:

So there is something else. As we are winding down, I want to ask you one last thing, and if anyone has any questions, feel free to throw them in the chat; I am monitoring that too. I am wondering what advice you have for people who are building RAG-based applications, because I feel like you've probably seen quite a few out there in the wild. What are some optimizations or nice hacks that you've seen work really well?

Diptanu Gon Choudhury:

So, first of all, there is a recent RAG survey paper that I really like. Maybe you can put the link in the show notes. It covers a lot of tips and tricks that people can use with RAG. But essentially, RAG is a two-step process: document selection and document reading. Document selection is how you retrieve the most important information out of the millions of documents that might be there, and document reading is how you jam them into the context of a model so that the model can ground its generation in that context.
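The two steps can be made concrete with a small sketch. Everything here is a hypothetical stand-in: `select_documents` ranks by naive word overlap where a real system would query a vector index such as Qdrant, and `build_prompt` packs the selected documents into a character budget as a crude proxy for a model's token limit.

```python
from typing import List


def select_documents(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Step 1, document selection: rank by naive term overlap (stand-in for vector search)."""
    q_terms = set(query.lower().split())
    # sorted() is stable, so documents with equal scores keep their original order.
    scored = sorted(docs, key=lambda d: len(q_terms & set(d.lower().split())), reverse=True)
    return scored[:k]


def build_prompt(query: str, selected: List[str], budget_chars: int = 500) -> str:
    """Step 2, document reading: pack selected docs into the context until the budget runs out."""
    context: List[str] = []
    used = 0
    for doc in selected:
        if used + len(doc) > budget_chars:
            break  # stop before overflowing the (approximate) context window
        context.append(doc)
        used += len(doc)
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"
```

Keeping the two steps as separate functions mirrors the point above: most of the tuning effort goes into the selection step, while the reading step mainly has to respect the context budget.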

Diptanu Gon Choudhury:

So I think the trickiest part here, and the part with the most tips and tricks, is document selection. That is a classic information retrieval problem. So I would suggest people do a lot of experimentation with ranking algorithms, hitting different types of indexes, and refining the results by merging results from different indexes. One thing that always works for me is reducing the search space of the documents I am selecting in a very systematic manner: using some kind of hybrid search where you do the embedding lookup first and then the keyword lookup, or vice versa, or do both lookups in parallel and then merge the results. Those kinds of things, where the search space is narrowed down, always work for me.
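One common way to do the "run lookups in parallel and merge" step is reciprocal rank fusion (RRF). No specific merging method is named here, so treat this as one illustrative choice rather than the approach used by Indexify's design partners: each document earns `1 / (k + rank)` from every ranked list it appears in, and `k = 60` is the constant usually used with RRF. `reciprocal_rank_fusion` is a hypothetical helper name.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of doc IDs (e.g. vector and keyword results) into one."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            # Documents near the top of any list contribute the most.
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))
```

For example, fusing a vector ranking `["a", "b", "c"]` with a keyword ranking `["b", "d", "a"]` yields `["b", "a", "d", "c"]`: `b` ranks well in both lists, so it moves to the top.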

Demetrios:

One of the Qdrant team members would love to know this, because I've been talking to them quite frequently about evaluating retrieval. Have you found any tips or tricks for evaluating the quality of what is retrieved?

Diptanu Gon Choudhury:

So I haven't come across a single golden trick for evaluation that fits every use case. Evaluation is really hard. There are open source projects like Ragas that are trying to solve it, and everyone is trying to solve various aspects of evaluating RAG. Some try to evaluate how accurate the results are, some try to evaluate how diverse the answers are, and so on. I think the most important thing our design partners care about is factual accuracy, and for factual accuracy, one process that has worked really well is having a critique model. Let the generation model generate some data, then have a critique model go and try to find citations and check how accurate the generation is, and then feed that back into the system. One other thing, going back to the previous point about what tricks someone can use for doing RAG really well: I feel like people don't fine-tune embedding models that much.
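The critique loop can be sketched as a retry loop around two model calls. `generate_with_critique` is a hypothetical helper, and `generate` and `critique` are caller-supplied callables standing in for the generation model and the critique model; how the critic actually finds citations is left abstract.

```python
from typing import Callable, List, Optional, Tuple


def generate_with_critique(
    question: str,
    generate: Callable[[str, Optional[str]], str],
    critique: Callable[[str, str], List[str]],
    max_rounds: int = 3,
) -> Tuple[str, List[str]]:
    """Generate an answer, let a critique model flag unsupported claims, and retry with that feedback."""
    feedback: Optional[str] = None
    answer = ""
    problems: List[str] = []
    for _ in range(max_rounds):
        answer = generate(question, feedback)
        # The critic returns the claims it could not ground in a citation.
        problems = critique(question, answer)
        if not problems:
            break
        feedback = "Unsupported claims: " + "; ".join(problems)
    # Any remaining problems are returned so the caller can decide what to surface.
    return answer, problems
```

Capping the rounds keeps the extra cost of the second model bounded, which matters given the trade-off between cost and trust discussed below.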

Diptanu Gon Choudhury:

If people are using an off-the-shelf embedding model, like a sentence transformer, they should look into fine-tuning it on the data set they are embedding. I think a combination of fine-tuning the embedding models and doing some factual accuracy checks goes a long way toward getting RAG working really well.

Demetrios:

Yeah, it's an interesting one, and I'll probably leave it here, on the extra model that checks factual accuracy. You've always got trade-offs that you're playing with, right? One of the trade-offs is that you're making another LLM call, which could be more costly, but you're gaining trust, or gaining confidence that what the system outputs is actually what it says it is, and that it's factually correct, as you said. So it's like, what price can you put on trust? It goes back to that whole thing I saw with the chatbot on Chevy's website saying that a Tesla is better. Hopefully that doesn't happen anymore as people deploy this stuff and recognize that humans are cunning when it comes to playing around with chatbots. So this has been fascinating, man. I appreciate you coming on here and chatting with me.

Demetrios:

I encourage everyone to either reach out to you on LinkedIn, since I know you are on there, and we'll leave a link to your LinkedIn in the chat too, or check out Tensorlake and Indexify. We will be in touch. Man, this was great.

Diptanu Gon Choudhury:

Yeah, same. It was really great chatting with you about this, Demetrios, and thanks for having me today.

Demetrios:

Cheers. I'll talk to you later.

|

qdrant-landing/content/blog/insight-generation-platform-for-lifescience-corporation-hooman-sedghamiz-vector-space-talks-014.md | ---

draft: false

title: Insight Generation Platform for LifeScience Corporation - Hooman

Sedghamiz | Vector Space Talks

slug: insight-generation-platform

short_description: Hooman Sedghamiz explores the potential of large language