url

stringlengths 66

66

| text

stringlengths 141

41.9k

| num_labels

sequencelengths 1

8

| arr_labels

sequencelengths 82

82

| labels

sequencelengths 1

8

|

|---|---|---|---|---|

https://api.github.com/repos/huggingface/transformers/issues/34715 |

TITLE

The error caused by the missing espeak library

COMMENTS

3

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

**System Info**

- `transformers` version: 4.46.2

- Platform: Linux-5.4.0-198-generic-x86_64-with-glibc2.35

- Python version: 3.11.9

- Huggingface_hub version: 0.26.2

- Safetensors version: 0.4.5

- Accelerate version: not installed

- Accelerate config: not found

- PyTorch version (GPU?): 2.4.0 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using distributed or parallel set-up in script?: <fill in>

- Using GPU in script?: <fill in>

- GPU type: Tesla V100-SXM2-16GB

### Who can help?

_No response_

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

1. **Docker Image**: Use the Docker image `pytorch/pytorch:2.4.0-cuda12.4-cudnn9-devel`.

2. **Install Requirements**:

```

transformers

other necessary packages

```

3. **Run the First Test Code**:

```python

from transformers.models.auto.tokenization_auto import AutoTokenizer as A

b = A.from_pretrained("facebook/wav2vec2-xlsr-53-espeak-cv-ft", cache_dir=None, force_download=True, local_files_only=False, revision='main')

print("b", b)

# b False

```

4. **After Analysis**, run the following test code:

```python

from transformers.models.wav2vec2_phoneme.tokenization_wav2vec2_phoneme import (

Wav2Vec2PhonemeCTCTokenizer,

)

kwargs = {

"cache_dir": None,

"force_download": True,

"local_files_only": False,

"revision": "main",

"_from_auto": True,

"_commit_hash": None,

}

A = Wav2Vec2PhonemeCTCTokenizer("facebook/wav2vec2-xlsr-53-espeak-cv-ft", **kwargs)

```

### Expected behavior

In step 3, the Tokenizer was not loaded correctly.

Step 4 throws the following error:

```

Traceback (most recent call last):

File "/workspace/test.py", line 14, in <module>

A = Wav2Vec2PhonemeCTCTokenizer(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/conda/lib/python3.11/site-packages/transformers/models/wav2vec2_phoneme/tokenization_wav2vec2_phoneme.py", line 136, in __init__

self.init_backend(self.phonemizer_lang)

File "/opt/conda/lib/python3.11/site-packages/transformers/models/wav2vec2_phoneme/tokenization_wav2vec2_phoneme.py", line 185, in init_backend

self.backend = BACKENDS[self.phonemizer_backend](phonemizer_lang, language_switch="remove-flags")

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/opt/conda/lib/python3.11/site-packages/phonemizer/backend/espeak/espeak.py", line 45, in __init__

super().__init__(

File "/opt/conda/lib/python3.11/site-packages/phonemizer/backend/espeak/base.py", line 39, in __init__

super().__init__(

File "/opt/conda/lib/python3.11/site-packages/phonemizer/backend/base.py", line 77, in __init__

raise RuntimeError( # pragma: nocover

RuntimeError: espeak not installed on your system

``` | [

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/33665 |

TITLE

[Tests] Diverse Whisper fixes

COMMENTS

12

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

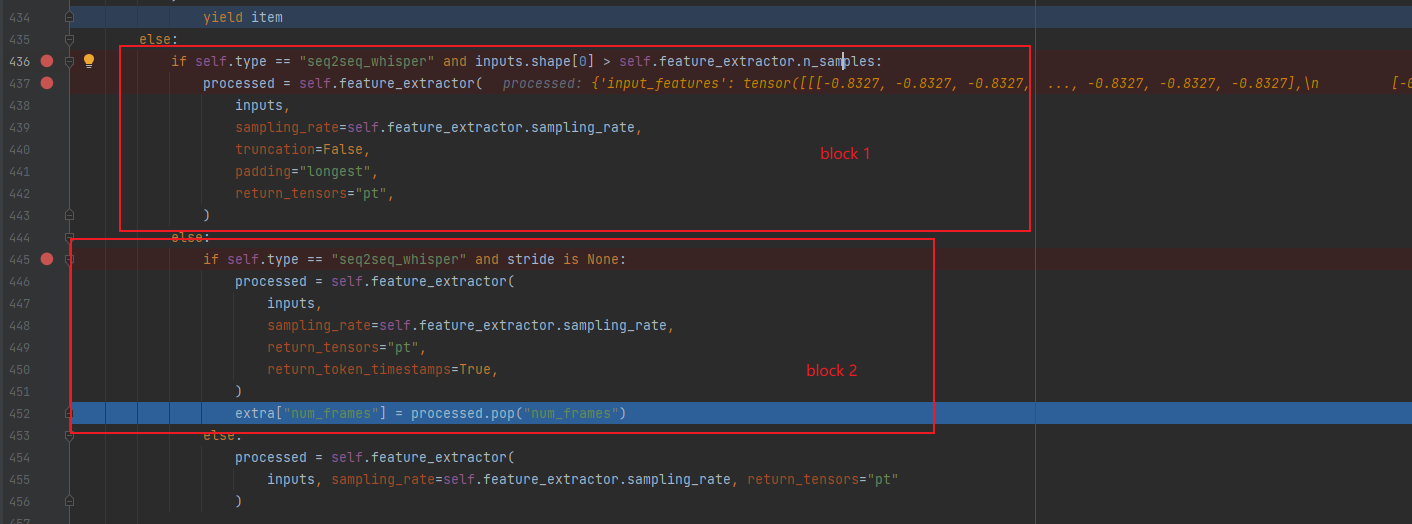

# What does this PR do?

There's a lot of pending failing tests with Whisper. This PR addresses some issues:

1. #31683 and #31770 mentioned a out-of-range word level timestamps. This happens because `decoder_inputs_ids` were once `forced_input_ids`. This had an impact on the `beam_indices`.

`beam_indices` has a length of `decoder_input_ids + potentially_generated_ids` but doesn't take into account `decoder_input_ids` when keeping track of the indices. In other words `beam_indices[0]` is really the beam indice of the first generated token, instead of `decoder_input_ids[0]`.

2. The Flash-Attention 2 attention mask was causing an issue

3. The remaining work is done on the modeling tests. Note that some of these tests were failing because of straightforward reasons - e.g the output was a dict - and are actually still failing, but their reasons for failing are not straightforward anymore. Debugging will be easier though.

**Note:** With #33450 and this, we're down from 29 failing tests to 17

| [

73

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"run-slow"

] |

https://api.github.com/repos/huggingface/transformers/issues/34622 |

TITLE

Question on OWLv2 Model Input Size Flexibility in Hugging Face

COMMENTS

3

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

I noticed that in Google Research's OWLv2 implementation, the model can accept images of varying sizes, as it allows the input image size to be adjusted. Does Hugging Face’s version of OWLv2 support this same flexibility in input image sizes? | [

62

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"Vision"

] |

https://api.github.com/repos/huggingface/transformers/issues/34583 |

TITLE

Add support for Apple's Depth-Pro

COMMENTS

86

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 3

rocket: 0

eyes: 0

BODY

# What does this PR do?

Fixes #34020

This PR adds Apple's Depth Pro model to Hugging Face Transformers. Depth Pro is a foundation model for zero-shot metric monocular depth estimation. It leverages a multi-scale vision transformer optimized for dense predictions. It downsamples an image at several scales. At each scale, it is split into patches, which are processed by a ViT-based (Dinov2) patch encoder, with weights shared across scales. Patches are merged into feature maps, upsampled, and fused via a DPT decoder.

Relevant Links

- Research Paper: [Depth Pro: Sharp Monocular Metric Depth in Less Than a Second](https://arxiv.org/pdf/2410.02073)

- Authors: [Aleksei Bochkovskii](https://arxiv.org/search/cs?searchtype=author&query=Bochkovskii,+A), [Amaël Delaunoy](https://arxiv.org/search/cs?searchtype=author&query=Delaunoy,+A), and others

- Implementation: [apple/ml-depth-pro](https://github.com/apple/ml-depth-pro)

- Models Weights: [apple/DepthPro](https://huggingface.co/apple/DepthPro)

## Before submitting

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#create-a-pull-request),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

@amyeroberts, @qubvel

| [

77,

62

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0

] | [

"New model",

"Vision"

] |

https://api.github.com/repos/huggingface/transformers/issues/35349 |

TITLE

A warning message showing that `MultiScaleDeformableAttention.so` is not found in `/root/.cache/torch_extensions` if `ninja` is installed with `transformers`

COMMENTS

12

REACTIONS

+1: 1

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

* `transformers`: `4.47.1`

* `torch`: `2.5.1`

* `timm`: `1.0.12`

* `ninja`: `1.11.1.3`

* `python`: `3.10.14`

* `pip`: `23.0.1`

* CUDA runtime installed by `torch`: `nvidia-cuda-runtime-cu12==12.4.127`

* OS (in container): Debian GNU/Linux 12 (bookworm)

* OS (native device): Windows 11 Enterprise 23H2 (`10.0.22631 Build 22631`)

* Docker version: `27.3.1, build ce12230`

* NVIDIA Driver: `565.57.02`

### Who can help?

I am asking help for [`DeformableDetrModel`](https://huggingface.co/docs/transformers/v4.47.1/en/model_doc/deformable_detr#transformers.DeformableDetrModel)

vision models: @amyeroberts, @qubvel

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

1. Start a new docker container by

```sh

docker run --gpus all -it --rm --shm-size=1g python:3.10-slim bash

```

2. Install dependencies

```sh

pip install transformers[torch] requests pillow timm

```

3. Run the following script (copied from [the document](https://huggingface.co/docs/transformers/v4.47.1/en/model_doc/deformable_detr#transformers.DeformableDetrModel.forward.example)), it works fine and does not show any message.

```python

from transformers import AutoImageProcessor, DeformableDetrModel

from PIL import Image

import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

image_processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr")

model = DeformableDetrModel.from_pretrained("SenseTime/deformable-detr")

inputs = image_processor(images=image, return_tensors="pt")

outputs = model(**inputs)

last_hidden_states = outputs.last_hidden_state

list(last_hidden_states.shape)

```

4. Install ninja:

```sh

pip install ninja

```

5. Run [the same script](https://huggingface.co/docs/transformers/v4.47.1/en/model_doc/deformable_detr#transformers.DeformableDetrModel.forward.example) again, this time, the following warning messages will show

```text

!! WARNING !!

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

Your compiler (c++) is not compatible with the compiler Pytorch was

built with for this platform, which is g++ on linux. Please

use g++ to to compile your extension. Alternatively, you may

compile PyTorch from source using c++, and then you can also use

c++ to compile your extension.

See https://github.com/pytorch/pytorch/blob/master/CONTRIBUTING.md for help

with compiling PyTorch from source.

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!

!! WARNING !!

warnings.warn(WRONG_COMPILER_WARNING.format(

Could not load the custom kernel for multi-scale deformable attention: CUDA_HOME environment variable is not set. Please set it to your CUDA install root.

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

Could not load the custom kernel for multi-scale deformable attention: /root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/MultiScaleDeformableAttention.so: cannot open shared object file: No such file or directory

```

Certainly, `/root/.cache/torch_extensions/py310_cu124/MultiScaleDeformableAttention/` is empty.

The issue happens only when both `ninja` and `transformers` are installed. I believe that the following issue may be related to this issue:

https://app.semanticdiff.com/gh/huggingface/transformers/pull/32834/overview

### Expected behavior

It seems that ninja will let `DeformableDetrModel` throw unexpected error messages (despite that the script still works). That's may be because I am using a container without any compiler or CUDA preinstalled (the CUDA run time is installed by `pip`).

I think there should be a check that automatically turn of the `ninja` related functionalities even if `ninja` is installed by `pip`, as long as the requirements like compiler version, CUDA path, or something, are not fulfilled.

| [

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/34699 |

TITLE

TypeError: Accelerator.__init__() got an unexpected keyword argument 'dispatch_batches'

COMMENTS

8

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

transformers: 4.39.3

python: 3.10.12

### Who can help?

_No response_

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

An error may occur at line 580 of [run_ner.py](https://github.com/huggingface/transformers/blob/v4.39.3/examples/pytorch/token-classification/run_ner.py)

```python

trainer = Trainer(

model=model,

args=training_args,

train_dataset=train_dataset if training_args.do_train else None,

eval_dataset=eval_dataset if training_args.do_eval else None,

tokenizer=tokenizer,

data_collator=data_collator,

compute_metrics=compute_metrics,

)

```

### Expected behavior

```

Traceback (most recent call last):

File "/usr/src/app/llm_model_test/ner_train/run_ner.py", line 666, in <module>

main()

File "/usr/src/app/llm_model_test/ner_train/run_ner.py", line 580, in main

trainer = Trainer(

File "/usr/local/lib/python3.10/dist-packages/transformers/trainer.py", line 373, in __init__

self.create_accelerator_and_postprocess()

File "/usr/local/lib/python3.10/dist-packages/transformers/trainer.py", line 4252, in create_accelerator_and_postprocess

self.accelerator = Accelerator(

TypeError: Accelerator.__init__() got an unexpected keyword argument 'dispatch_batches'

```

Please Help Me... | [

27,

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"dependencies",

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/35979 |

TITLE

Fix custom kernel for DeformableDetr, RT-Detr, GroundingDINO, OmDet-Turbo in Pytorch 2.6.0

COMMENTS

6

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

# What does this PR do?

Updates

- tesnor.type().is_cuda() -> tesnor.is_cuda();

- tensor.data<...> -> tensor.data_ptr<...>

The following message appears in logs:

```

Tensor.type() is deprecated. Instead use Tensor.options(), which in many cases (e.g. in a constructor)

is a drop-in replacement. If you were using data from type(), that is now available from Tensor

itself, so instead of tensor.type().scalar_type(), use tensor.scalar_type() instead and instead of

tensor.type().backend() use tensor.device().

```

Fixes #35976

Might be relevant:

- https://github.com/pytorch/pytorch/issues/28472

- https://discuss.pytorch.org/t/kernel-launch-deprecated-packed-accessor-arguments-and-tensor-type-alternative/138875

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md#create-a-pull-request),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/main/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/main/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- text models: @ArthurZucker

- vision models: @amyeroberts, @qubvel

- speech models: @ylacombe, @eustlb

- graph models: @clefourrier

Library:

- flax: @sanchit-gandhi

- generate: @zucchini-nlp (visual-language models) or @gante (all others)

- pipelines: @Rocketknight1

- tensorflow: @gante and @Rocketknight1

- tokenizers: @ArthurZucker

- trainer: @muellerzr and @SunMarc

- chat templates: @Rocketknight1

Integrations:

- deepspeed: HF Trainer/Accelerate: @muellerzr

- ray/raytune: @richardliaw, @amogkam

- Big Model Inference: @SunMarc

- quantization (bitsandbytes, autogpt): @SunMarc @MekkCyber

Documentation: @stevhliu

HF projects:

- accelerate: [different repo](https://github.com/huggingface/accelerate)

- datasets: [different repo](https://github.com/huggingface/datasets)

- diffusers: [different repo](https://github.com/huggingface/diffusers)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Maintained examples (not research project or legacy):

- Flax: @sanchit-gandhi

- PyTorch: See Models above and tag the person corresponding to the modality of the example.

- TensorFlow: @Rocketknight1

-->

| [

62

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"Vision"

] |

https://api.github.com/repos/huggingface/transformers/issues/36023 |

TITLE

CEP_AI

COMMENTS

0

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### Model description

CEP of Subject Ai

### Open source status

- [ ] The model implementation is available

- [ ] The model weights are available

### Provide useful links for the implementation

_No response_ | [

77

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0

] | [

"New model"

] |

https://api.github.com/repos/huggingface/transformers/issues/35767 |

TITLE

Issue: Error with _eos_token_tensor when using Generator with GenerationMixin

COMMENTS

1

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

e-readability-summarization/src/inference$ python run_2.py

Traceback (most recent call last):

File "/home/surenoobster/Documents/project/src/inference/run_2.py", line 87, in <module>

output = generator.generate(

^^^^^^^^^^^^^^^^^^^

File "/home/surenoobster/anaconda3/lib/python3.12/site-packages/torch/utils/_contextlib.py", line 116, in decorate_context

return func(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^

File "/home/surenoobster/Documents/project/src/inference/generation_2.py", line 572, in generate

stopping_criteria = self.model._get_stopping_criteria(

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/surenoobster/anaconda3/lib/python3.12/site-packages/transformers/generation/utils.py", line 1126, in _get_stopping_criteria

if generation_config._eos_token_tensor is not None:

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

AttributeError: 'GenerationConfig' object has no attribute '_eos_token_tensor'

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [x] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

I'm not able to get it , getting problem with device

### Expected behavior

The Generator should initialize the required token tensors correctly to ensure compatibility with GenerationMixin and avoid errors. | [

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/36037 |

TITLE

Fix qwen2-vl generate calls with synced_gpus

COMMENTS

1

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

When using `synced_gpus`, after one peer finishes generating, the cache position in the generation process continues to increase. This leads to the input IDs going out of bounds, resulting in errors. The issue specifically occurs in the following line of code:

[modeling_qwen2_vl.py#L1739](https://github.com/huggingface/transformers/blob/main/src/transformers/models/qwen2_vl/modeling_qwen2_vl.py#L1739).

The root cause seems to be the difference in the implementation of the `prepare_inputs_for_generation` function compared to the default implementation found here:

[utils.py#L388](https://github.com/huggingface/transformers/blob/main/src/transformers/generation/utils.py#L388).

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

Happened when running this code

https://github.com/Deep-Agent/R1-V/blob/main/src/open-r1-multimodal/src/open_r1/trainer/grpo_trainer.py#L372

### Expected behavior

Keep consistent with the default implementation | [

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/34024 |

TITLE

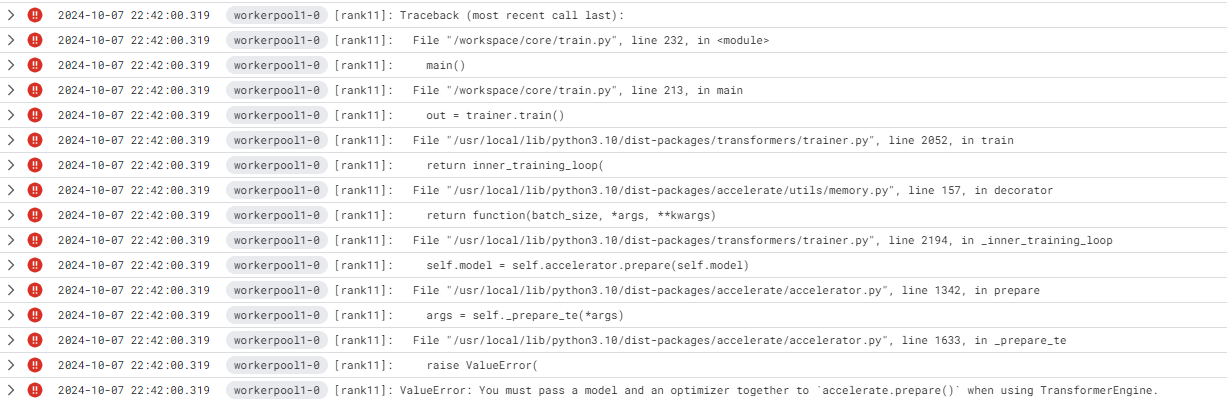

HF Trainer do not support Pytorch FSDP with FP8; ValueError: You must pass a model and an optimizer together to `accelerate.prepare()` when using TransformerEngine.

COMMENTS

0

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

acc_cfg.yml:

compute_environment: LOCAL_MACHINE

debug: false

distributed_type: FSDP

downcast_bf16: 'no'

enable_cpu_affinity: false

fsdp_config:

fsdp_activation_checkpointing: true

fsdp_auto_wrap_policy: NO_WRAP

fsdp_backward_prefetch: NO_PREFETCH

fsdp_cpu_ram_efficient_loading: true

fsdp_forward_prefetch: true

fsdp_offload_params: true

fsdp_sharding_strategy: FULL_SHARD

fsdp_state_dict_type: SHARDED_STATE_DICT

fsdp_sync_module_states: true

fsdp_use_orig_params: true

machine_rank: 0

main_process_ip: 0.0.0.0

main_process_port: 0

main_training_function: main

mixed_precision: fp8

fp8_config:

amax_compute_algorithm: max

amax_history_length: 1024

backend: TE

fp8_format: HYBRID

interval: 1

margin: 0

override_linear_precision: false

use_autocast_during_eval: true

num_machines: 3

num_processes: 24

rdzv_backend: etcd-v2

same_network: false

tpu_env: []

tpu_use_cluster: false

tpu_use_sudo: false

use_cpu: false

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

* accelerate launch --config_file acc_cfg.yml train.py $TRAINING_ARGS

* the train.py is any training script that train using transformers.Trainer

* $TRAINING_ARGS are the TrainingArguments plus some path to data

### Expected behavior

Train Paligemma model with FSDP and FP8. | [

66,

64,

17,

80

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0

] | [

"trainer",

"bug",

"PyTorch FSDP",

"Accelerate"

] |

https://api.github.com/repos/huggingface/transformers/issues/35507 |

TITLE

Memory Access out of bounds in mra/cuda_kernel.cu::index_max_cuda_kernel()

COMMENTS

2

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

* OS: Linux ubuntu 22.04 LTS

* Device: A100-80GB

* docker: nvidia/pytorch:24.04-py3

* transformers: latest, 4.47.0

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

## Reproduction

1. pip install the latest transformers

2. prepare the UT test enviroments by `pip install -e .[testing]`

3. `pytest tests/models/mra/test_modeling_mra.py`

## Analysis

There might be some memory access out-of-bound behaviours in CUDA kernel `index_max_cuda_kernel()`

https://github.com/huggingface/transformers/blob/main/src/transformers/kernels/mra/cuda_kernel.cu#L6C1-L58C2

Note that `max_buffer` in this kernel is `extern __shared__ float` type, which means `max_buffer` would be stored in shared memory.

According to https://github.com/huggingface/transformers/blob/main/src/transformers/kernels/mra/cuda_launch.cu#L24-L35, CUDA would launch this kernel with

* gird size: `batch_size`

* block size: 256

* shared memory size: `A_num_block * 32 * sizeof(float)`

In case that `A_num_block` < 4, the for statement below might accidentally locate the memory out of `A_num_block * 32`, since num_thread here is 256, and threadIdx.x is [0, 255].

```

for (int idx_start = 0; idx_start < 32 * num_block; idx_start = idx_start + num_thread) {

```

Therefore, when threadblocks of threads try to access `max_buffer`, it would be wiser and more careful to always add `if` statements before to avoid memory access out of bounds.

So We suggest to add `if` statements in two places:

### Expected behavior

UT tests should all pass! | [

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/36057 |

TITLE

past_key_values type support bug

COMMENTS

1

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

In many `XXXXForCausalLM` Class, the past_key_values signature of the forward function is: `past_key_values: Optional[Union[Cache, List[torch.FloatTensor]]] = None,` but the corresponding past_key_values signature of the forward function of the `XXXXModel` Class is: `past_key_values: Optional[Cache] = None,` and the function implementation does not support the `List` type. `past_key_values` will causes an error when calling `self.model` use List type `past_key_values`.

Like `LlamaForCausalLM` and `Qwen2ForCausalLM` | [

74

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0

] | [

"Documentation"

] |

https://api.github.com/repos/huggingface/transformers/issues/36142 |

TITLE

Bump cryptography from 43.0.1 to 44.0.1 in /examples/research_projects/decision_transformer

COMMENTS

1

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

Bumps [cryptography](https://github.com/pyca/cryptography) from 43.0.1 to 44.0.1.

<details>

<summary>Changelog</summary>

<p><em>Sourced from <a href="https://github.com/pyca/cryptography/blob/main/CHANGELOG.rst">cryptography's changelog</a>.</em></p>

<blockquote>

<p>44.0.1 - 2025-02-11</p>

<pre><code>

* Updated Windows, macOS, and Linux wheels to be compiled with OpenSSL 3.4.1.

* We now build ``armv7l`` ``manylinux`` wheels and publish them to PyPI.

* We now build ``manylinux_2_34`` wheels and publish them to PyPI.

<p>.. _v44-0-0:</p>

<p>44.0.0 - 2024-11-27

</code></pre></p>

<ul>

<li><strong>BACKWARDS INCOMPATIBLE:</strong> Dropped support for LibreSSL < 3.9.</li>

<li>Deprecated Python 3.7 support. Python 3.7 is no longer supported by the

Python core team. Support for Python 3.7 will be removed in a future

<code>cryptography</code> release.</li>

<li>Updated Windows, macOS, and Linux wheels to be compiled with OpenSSL 3.4.0.</li>

<li>macOS wheels are now built against the macOS 10.13 SDK. Users on older

versions of macOS should upgrade, or they will need to build

<code>cryptography</code> themselves.</li>

<li>Enforce the :rfc:<code>5280</code> requirement that extended key usage extensions must

not be empty.</li>

<li>Added support for timestamp extraction to the

:class:<code>~cryptography.fernet.MultiFernet</code> class.</li>

<li>Relax the Authority Key Identifier requirements on root CA certificates

during X.509 verification to allow fields permitted by :rfc:<code>5280</code> but

forbidden by the CA/Browser BRs.</li>

<li>Added support for :class:<code>~cryptography.hazmat.primitives.kdf.argon2.Argon2id</code>

when using OpenSSL 3.2.0+.</li>

<li>Added support for the :class:<code>~cryptography.x509.Admissions</code> certificate extension.</li>

<li>Added basic support for PKCS7 decryption (including S/MIME 3.2) via

:func:<code>~cryptography.hazmat.primitives.serialization.pkcs7.pkcs7_decrypt_der</code>,

:func:<code>~cryptography.hazmat.primitives.serialization.pkcs7.pkcs7_decrypt_pem</code>, and

:func:<code>~cryptography.hazmat.primitives.serialization.pkcs7.pkcs7_decrypt_smime</code>.</li>

</ul>

<p>.. _v43-0-3:</p>

<p>43.0.3 - 2024-10-18</p>

<pre><code>

* Fixed release metadata for ``cryptography-vectors``

<p>.. _v43-0-2:</p>

<p>43.0.2 - 2024-10-18

</code></pre></p>

<ul>

<li>Fixed compilation when using LibreSSL 4.0.0.</li>

</ul>

<p>.. _v43-0-1:</p>

</blockquote>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a href="https://github.com/pyca/cryptography/commit/adaaaed77db676bbaa9d171175db81dce056e2a7"><code>adaaaed</code></a> Bump for 44.0.1 release (<a href="https://redirect.github.com/pyca/cryptography/issues/12441">#12441</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/ccc61dabe38b86956bf218565cd4e82b918345a1"><code>ccc61da</code></a> [backport] test and build on armv7l (<a href="https://redirect.github.com/pyca/cryptography/issues/12420">#12420</a>) (<a href="https://redirect.github.com/pyca/cryptography/issues/12431">#12431</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/f299a48153650f2dd87716343f2daa7cd39a1f59"><code>f299a48</code></a> remove deprecated call (<a href="https://redirect.github.com/pyca/cryptography/issues/12052">#12052</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/439eb0594a9ffb7c9adedb2490998d83914d141e"><code>439eb05</code></a> Bump version for 44.0.0 (<a href="https://redirect.github.com/pyca/cryptography/issues/12051">#12051</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/2c5ad4d8dcec1b8f833198bc2f3b4634c4fd9d78"><code>2c5ad4d</code></a> chore(deps): bump maturin from 1.7.4 to 1.7.5 in /.github/requirements (<a href="https://redirect.github.com/pyca/cryptography/issues/12050">#12050</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/d23968adddd79aa8508d7c1f985da09383b3808f"><code>d23968a</code></a> chore(deps): bump libc from 0.2.165 to 0.2.166 (<a href="https://redirect.github.com/pyca/cryptography/issues/12049">#12049</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/133c0e02edf2f172318eb27d8f50525ed64c9ec3"><code>133c0e0</code></a> Bump x509-limbo and/or wycheproof in CI (<a href="https://redirect.github.com/pyca/cryptography/issues/12047">#12047</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/f2259d7aa0d134c839ebe298baa8b63de9ead804"><code>f2259d7</code></a> Bump BoringSSL and/or OpenSSL in CI (<a href="https://redirect.github.com/pyca/cryptography/issues/12046">#12046</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/e201c870b89fd2606d67230a97e50c3badb07907"><code>e201c87</code></a> fixed metadata in changelog (<a href="https://redirect.github.com/pyca/cryptography/issues/12044">#12044</a>)</li>

<li><a href="https://github.com/pyca/cryptography/commit/c6104cc3669585941dc1d2b9c6507621c53d242f"><code>c6104cc</code></a> Prohibit Python 3.9.0, 3.9.1 -- they have a bug that causes errors (<a href="https://redirect.github.com/pyca/cryptography/issues/12045">#12045</a>)</li>

<li>Additional commits viewable in <a href="https://github.com/pyca/cryptography/compare/43.0.1...44.0.1">compare view</a></li>

</ul>

</details>

<br />

[](https://docs.github.com/en/github/managing-security-vulnerabilities/about-dependabot-security-updates#about-compatibility-scores)

Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself)

You can disable automated security fix PRs for this repo from the [Security Alerts page](https://github.com/huggingface/transformers/network/alerts).

</details> | [

27,

60

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"dependencies",

"python"

] |

https://api.github.com/repos/huggingface/transformers/issues/34727 |

TITLE

[Idefics3] processing_idefics3 - IndexError: list index out of range for multiple image input

COMMENTS

2

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

- `transformers` version: 4.46.2

- Platform: Linux-5.4.0-1134-aws-x86_64-with-glibc2.31

- Python version: 3.10.2

- Huggingface_hub version: 0.26.2

- Safetensors version: 0.4.5

- Accelerate version: 1.1.1

- Accelerate config: not found

- PyTorch version (GPU?): 2.5.1+cu124 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using distributed or parallel set-up in script?: No

- Using GPU in script?: Yes

- GPU type: Tesla T4

### Who can help?

@amyeroberts , @quvb

### Information

- [X] The official example scripts

- [ ] My own modified scripts

### Tasks

- [X] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

Code to reproduce:

from PIL import Image

img1=Image.open('Image1.JPG')

img2=Image.open('Image2.JPG')

prompt = processor.apply_chat_template(messages, add_generation_prompt=True)

inputs = processor(text=prompt, images=[img1,img2], return_tensors="pt")

inputs = {k: v.to(DEVICE) for k, v in inputs.items()}

# Generate

generated_ids = model.generate(**inputs, max_new_tokens=512)

generated_texts = processor.batch_decode(generated_ids, skip_special_tokens=True)

print(generated_texts)

---------------------------------------------------------------------------

IndexError Traceback (most recent call last)

Cell In[4], line 6

3 img2=Image.open('Image2.JPG')

5 prompt = processor.apply_chat_template(messages, add_generation_prompt=True)

----> 6 inputs = processor(text=[prompt,prompt], images=[img1,img2], return_tensors="pt")

7 inputs = {k: v.to(DEVICE) for k, v in inputs.items()}

9 # Generate

File ~/envs/default/lib/python3.10/site-packages/transformers/models/idefics3/processing_idefics3.py:302, in Idefics3Processor.__call__(self, images, text, audio, videos, image_seq_len, **kwargs)

300 sample = split_sample[0]

301 for i, image_prompt_string in enumerate(image_prompt_strings):

--> 302 sample += image_prompt_string + split_sample[i + 1]

303 prompt_strings.append(sample)

305 text_inputs = self.tokenizer(text=prompt_strings, **output_kwargs["text_kwargs"])

IndexError: list index out of range

### Expected behavior

I would expect Model to take 2 images in the input and provide generation using these 2 images as context. | [

64,

62,

12

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"bug",

"Vision",

"Multimodal"

] |

https://api.github.com/repos/huggingface/transformers/issues/34176 |

TITLE

[Bug] transformers `TPU` support broken on `v4.45.0`

COMMENTS

23

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 1

BODY

### System Info

transformers: v4.45.0 and up (any of v4.45.0 / v4.45.1 / v4.45.2)

accelerate: v1.0.1 (same result on v0.34.2)

### Who can help?

trainer experts: @muellerzr @SunMarc

accelerate expert: @muellerzr

text models expert: @ArthurZucker

Thank you guys!

### Information

- [X] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [X] My own task or dataset (give details below)

### Reproduction

Minimal working code is [Here](https://gist.github.com/steveepreston/acd125a08214c631ba8389eb61a13798). Code follows [GoogleCloudPlatform example](https://github.com/GoogleCloudPlatform/vertex-ai-samples/blob/main/notebooks/official/training/tpuv5e_llama2_pytorch_finetuning_and_serving.ipynb)

on TPU VM, train done like a charm on transformers from v4.43.1 to v4.44.2, but when upgrading to any of v4.45.0 / v4.45.1 / v4.45.2 it throws this Error: `RuntimeError: There are currently no available devices found, must be one of 'XPU', 'CUDA', or 'NPU'.`

**Error Traceback:**

General traceback is: callling `SFTTrainer()` > `self.accelerator = Accelerator(**args)` (transformers/trainer.py)

<details>

<summary>Click here to Show Full Error Traceback</summary>

```python

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

Cell In[48], line 4

1 from trl import SFTTrainer

2 from transformers import AutoTokenizer, AutoModelForCausalLM, TrainingArguments

----> 4 trainer = SFTTrainer(

5 model=base_model,

6 train_dataset=data,

7 args=TrainingArguments(

8 per_device_train_batch_size=BATCH_SIZE, # This is actually the global batch size for SPMD.

9 num_train_epochs=1,

10 max_steps=-1,

11 output_dir="/output_dir",

12 optim="adafactor",

13 logging_steps=1,

14 dataloader_drop_last = True, # Required for SPMD.

15 fsdp="full_shard",

16 fsdp_config=fsdp_config,

17 ),

18 peft_config=lora_config,

19 dataset_text_field="quote",

20 max_seq_length=max_seq_length,

21 packing=True,

22 )

File /usr/local/lib/python3.10/site-packages/huggingface_hub/utils/_deprecation.py:101, in _deprecate_arguments.<locals>._inner_deprecate_positional_args.<locals>.inner_f(*args, **kwargs)

99 message += "\n\n" + custom_message

100 warnings.warn(message, FutureWarning)

--> 101 return f(*args, **kwargs)

File /usr/local/lib/python3.10/site-packages/trl/trainer/sft_trainer.py:401, in SFTTrainer.__init__(self, model, args, data_collator, train_dataset, eval_dataset, tokenizer, model_init, compute_metrics, callbacks, optimizers, preprocess_logits_for_metrics, peft_config, dataset_text_field, packing, formatting_func, max_seq_length, infinite, num_of_sequences, chars_per_token, dataset_num_proc, dataset_batch_size, neftune_noise_alpha, model_init_kwargs, dataset_kwargs, eval_packing)

395 if tokenizer.padding_side is not None and tokenizer.padding_side != "right":

396 warnings.warn(

397 "You passed a tokenizer with `padding_side` not equal to `right` to the SFTTrainer. This might lead to some unexpected behaviour due to "

398 "overflow issues when training a model in half-precision. You might consider adding `tokenizer.padding_side = 'right'` to your code."

399 )

--> 401 super().__init__(

402 model=model,

403 args=args,

404 data_collator=data_collator,

405 train_dataset=train_dataset,

406 eval_dataset=eval_dataset,

407 tokenizer=tokenizer,

408 model_init=model_init,

409 compute_metrics=compute_metrics,

410 callbacks=callbacks,

411 optimizers=optimizers,

412 preprocess_logits_for_metrics=preprocess_logits_for_metrics,

413 )

415 # Add tags for models that have been loaded with the correct transformers version

416 if hasattr(self.model, "add_model_tags"):

File /usr/local/lib/python3.10/site-packages/transformers/trainer.py:411, in Trainer.__init__(self, model, args, data_collator, train_dataset, eval_dataset, tokenizer, model_init, compute_metrics, callbacks, optimizers, preprocess_logits_for_metrics)

408 self.deepspeed = None

409 self.is_in_train = False

--> 411 self.create_accelerator_and_postprocess()

413 # memory metrics - must set up as early as possible

414 self._memory_tracker = TrainerMemoryTracker(self.args.skip_memory_metrics)

File /usr/local/lib/python3.10/site-packages/transformers/trainer.py:4858, in Trainer.create_accelerator_and_postprocess(self)

4855 args.update(accelerator_config)

4857 # create accelerator object

-> 4858 self.accelerator = Accelerator(**args)

4859 # some Trainer classes need to use `gather` instead of `gather_for_metrics`, thus we store a flag

4860 self.gather_function = self.accelerator.gather_for_metrics

File /usr/local/lib/python3.10/site-packages/accelerate/accelerator.py:349, in Accelerator.__init__(self, device_placement, split_batches, mixed_precision, gradient_accumulation_steps, cpu, dataloader_config, deepspeed_plugin, fsdp_plugin, megatron_lm_plugin, rng_types, log_with, project_dir, project_config, gradient_accumulation_plugin, step_scheduler_with_optimizer, kwargs_handlers, dynamo_backend, deepspeed_plugins)

345 raise ValueError(f"FSDP requires PyTorch >= {FSDP_PYTORCH_VERSION}")

347 if fsdp_plugin is None: # init from env variables

348 fsdp_plugin = (

--> 349 FullyShardedDataParallelPlugin() if os.environ.get("ACCELERATE_USE_FSDP", "false") == "true" else None

350 )

351 else:

352 if not isinstance(fsdp_plugin, FullyShardedDataParallelPlugin):

File <string>:21, in __init__(self, sharding_strategy, backward_prefetch, mixed_precision_policy, auto_wrap_policy, cpu_offload, ignored_modules, state_dict_type, state_dict_config, optim_state_dict_config, limit_all_gathers, use_orig_params, param_init_fn, sync_module_states, forward_prefetch, activation_checkpointing, cpu_ram_efficient_loading, transformer_cls_names_to_wrap, min_num_params)

File /usr/local/lib/python3.10/site-packages/accelerate/utils/dataclasses.py:1684, in FullyShardedDataParallelPlugin.__post_init__(self)

1682 device = torch.xpu.current_device()

1683 else:

-> 1684 raise RuntimeError(

1685 "There are currently no available devices found, must be one of 'XPU', 'CUDA', or 'NPU'."

1686 )

1687 # Create a function that will be used to initialize the parameters of the model

1688 # when using `sync_module_states`

1689 self.param_init_fn = lambda x: x.to_empty(device=device, recurse=False)

RuntimeError: There are currently no available devices found, must be one of 'XPU', 'CUDA', or 'NPU'.

```

</details>

**My observation and guess**

I tested multiple times, and can confirm that this error is Directly Caused by only changing version of `transformers`. Therefore `accelerate` version was fixed during all runs, my guess is something changed on `v4.45.0` (maybe on `trainer.py`) that affects `args` in the `self.accelerator = Accelerator(**args)`, so that error will raised by `accelerate` .

### Expected behavior

my guess: `args` corrected and `self.accelerator = Accelerator(**args)` called correctly. so `accelerate` can work on `TPU`. | [

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/34162 |

TITLE

requests.exceptions.ReadTimeout on already cached/downloaded model using SentenceTransformers

COMMENTS

5

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

transformers version: 44.2

python version: 3.11.6

system OS: Linux

### Who can help?

_No response_

### Information

- [ ] The official example scripts

- [X] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

When HuggingFace servers are down or you have no internet connection, try to initialize an already downloaded/cached model. I was using SentenceTransformers (running SentenceTransformer(model_name_or_path=model_id, device=my_device), but the problem comes from the transformers library, so I'm not sure which library should make the changes.

### Expected behavior

The model loads properly without requiring any connection to the hub. | [

67,

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"Usage",

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/33998 |

TITLE

Is the BOS token id of 128000 **hardcoded** into the llama 3.2 tokenizer?

COMMENTS

13

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

- `transformers` version: 4.45.1

- Platform: Linux-5.15.154+-x86_64-with-glibc2.31

- Python version: 3.10.13

- Huggingface_hub version: 0.23.2

- Safetensors version: 0.4.3

- Accelerate version: 0.30.1

- Accelerate config: not found

- PyTorch version (GPU?): 2.1.2+cpu (False)

- Tensorflow version (GPU?): 2.15.0 (False)

- Flax version (CPU?/GPU?/TPU?): 0.8.4 (cpu)

- Jax version: 0.4.28

- JaxLib version: 0.4.28

- Using distributed or parallel set-up in script?: <fill in>

### Who can help?

@ArthurZucker @itazap

### Information

- [ ] The official example scripts

- [ ] My own modified scripts

### Tasks

- [ ] An officially supported task in the `examples` folder (such as GLUE/SQuAD, ...)

- [ ] My own task or dataset (give details below)

### Reproduction

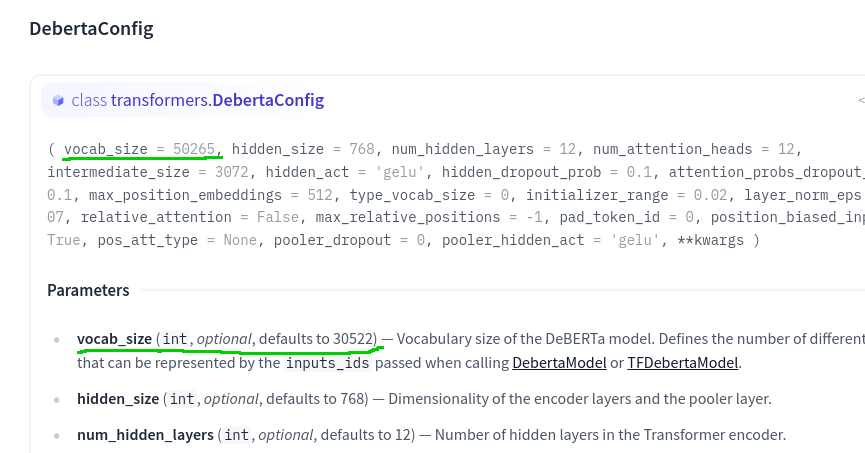

I trained the llama 3.2 tokenizer using an Amharic language corpus and a vocab size of `28k`, but when I use it to tokenize text, the first token id is still `128000` when it should have been the new tokenizer's **BOS token id** of `0`.

And here's a tokenization of an example text. As can be seen, the first token id is `128000` when it should have been `0`.

```python

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("rasyosef/llama-3.2-amharic-tokenizer-28k")

text = "ሁሉም ነገር"

inputs = tokenizer(text, return_tensors="pt")

print(inputs["input_ids"])

```

Output:

```

tensor([[128000, 1704, 802]])

```

### Expected behavior

The first token id of the tokenized text should be the new tokenizer's **BOS token id** of `0` instead of the original llama 3.2 tokenizer's BOS token id of `128000`. The vocab size is `28000` and the number `128000` should not appear anywhere in the `input_ids` list.

This is causing index out of range errors when indexing the embedding matrix of a newly initialized model. | [

47,

64

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"Core: Tokenization",

"bug"

] |

https://api.github.com/repos/huggingface/transformers/issues/36168 |

TITLE

Bump transformers from 4.38.0 to 4.48.0 in /examples/research_projects/adversarial

COMMENTS

1

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

Bumps [transformers](https://github.com/huggingface/transformers) from 4.38.0 to 4.48.0.

<details>

<summary>Release notes</summary>

<p><em>Sourced from <a href="https://github.com/huggingface/transformers/releases">transformers's releases</a>.</em></p>

<blockquote>

<h2>v4.48.0: ModernBERT, Aria, TimmWrapper, ColPali, Falcon3, Bamba, VitPose, DinoV2 w/ Registers, Emu3, Cohere v2, TextNet, DiffLlama, PixtralLarge, Moonshine</h2>

<h2>New models</h2>

<h3>ModernBERT</h3>

<p>The ModernBert model was proposed in <a href="https://arxiv.org/abs/2412.13663">Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference</a> by Benjamin Warner, Antoine Chaffin, Benjamin Clavié, Orion Weller, Oskar Hallström, Said Taghadouini, Alexis Galalgher, Raja Bisas, Faisal Ladhak, Tom Aarsen, Nathan Cooper, Grifin Adams, Jeremy Howard and Iacopo Poli.</p>

<p>It is a refresh of the traditional encoder architecture, as used in previous models such as <a href="https://huggingface.co/docs/transformers/en/model_doc/bert">BERT</a> and <a href="https://huggingface.co/docs/transformers/en/model_doc/roberta">RoBERTa</a>.</p>

<p>It builds on BERT and implements many modern architectural improvements which have been developed since its original release, such as:</p>

<ul>

<li><a href="https://huggingface.co/blog/designing-positional-encoding">Rotary Positional Embeddings</a> to support sequences of up to 8192 tokens.</li>

<li><a href="https://arxiv.org/abs/2208.08124">Unpadding</a> to ensure no compute is wasted on padding tokens, speeding up processing time for batches with mixed-length sequences.</li>

<li><a href="https://arxiv.org/abs/2002.05202">GeGLU</a> Replacing the original MLP layers with GeGLU layers, shown to improve performance.</li>

<li><a href="https://arxiv.org/abs/2004.05150v2">Alternating Attention</a> where most attention layers employ a sliding window of 128 tokens, with Global Attention only used every 3 layers.</li>

<li><a href="https://github.com/Dao-AILab/flash-attention">Flash Attention</a> to speed up processing.</li>

<li>A model designed following recent <a href="https://arxiv.org/abs/2401.14489">The Case for Co-Designing Model Architectures with Hardware</a>, ensuring maximum efficiency across inference GPUs.</li>

<li>Modern training data scales (2 trillion tokens) and mixtures (including code ande math data)</li>

</ul>

<p><img src="https://github.com/user-attachments/assets/4256c0b1-9b40-4d71-ac42-fc94827d5e9d" alt="image" /></p>

<ul>

<li>Add ModernBERT to Transformers by <a href="https://github.com/warner-benjamin"><code>@warner-benjamin</code></a> in <a href="https://redirect.github.com/huggingface/transformers/issues/35158">#35158</a></li>

</ul>

<h3>Aria</h3>

<p>The Aria model was proposed in <a href="https://huggingface.co/papers/2410.05993">Aria: An Open Multimodal Native Mixture-of-Experts Model</a> by Li et al. from the Rhymes.AI team.</p>

<p>Aria is an open multimodal-native model with best-in-class performance across a wide range of multimodal, language, and coding tasks. It has a Mixture-of-Experts architecture, with respectively 3.9B and 3.5B activated parameters per visual token and text token.</p>

<ul>

<li>Add Aria by <a href="https://github.com/aymeric-roucher"><code>@aymeric-roucher</code></a> in <a href="https://redirect.github.com/huggingface/transformers/issues/34157">#34157</a>

<img src="https://github.com/user-attachments/assets/ef41fcc9-2c5f-4a75-ab1a-438f73d3d7e2" alt="image" /></li>

</ul>

<h3>TimmWrapper</h3>

<p>We add a <code>TimmWrapper</code> set of classes such that timm models can be loaded in as transformer models into the library.</p>

<p>Here's a general usage example:</p>

<pre lang="py"><code>import torch

from urllib.request import urlopen

from PIL import Image

from transformers import AutoConfig, AutoModelForImageClassification, AutoImageProcessor

<p>checkpoint = "timm/resnet50.a1_in1k"

img = Image.open(urlopen(

'<a href="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png">https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png</a>'

))</p>

<p>image_processor = AutoImageProcessor.from_pretrained(checkpoint)

</tr></table>

</code></pre></p>

</blockquote>

<p>... (truncated)</p>

</details>

<details>

<summary>Commits</summary>

<ul>

<li><a href="https://github.com/huggingface/transformers/commit/6bc0fbcfa7acb6ac4937e7456a76c2f7975fefec"><code>6bc0fbc</code></a> [WIP] Emu3: add model (<a href="https://redirect.github.com/huggingface/transformers/issues/33770">#33770</a>)</li>

<li><a href="https://github.com/huggingface/transformers/commit/59e28c30fa3a91213f569bccef73f082afa8c656"><code>59e28c3</code></a> Fix flex_attention in training mode (<a href="https://redirect.github.com/huggingface/transformers/issues/35605">#35605</a>)</li>

<li><a href="https://github.com/huggingface/transformers/commit/7cf6230e25078742b21907ae49d1542747606457"><code>7cf6230</code></a> push a fix for now</li>

<li><a href="https://github.com/huggingface/transformers/commit/d6f446ffa79811d35484d445bc5c7932e8a536d6"><code>d6f446f</code></a> when filtering we can't use the convert script as we removed them</li>

<li><a href="https://github.com/huggingface/transformers/commit/8ce1e9578af6151e4192d59c345e2ad86ee789d4"><code>8ce1e95</code></a> [test-all]</li>

<li><a href="https://github.com/huggingface/transformers/commit/af2d7caff393cf8881396b73d92d0595b6a3b2ae"><code>af2d7ca</code></a> Add Moonshine (<a href="https://redirect.github.com/huggingface/transformers/issues/34784">#34784</a>)</li>

<li><a href="https://github.com/huggingface/transformers/commit/42b8e7916b6b6dff5cb77252286db1aa07b7b41e"><code>42b8e79</code></a> ModernBert: reuse GemmaRotaryEmbedding via modular + Integration tests (<a href="https://redirect.github.com/huggingface/transformers/issues/35459">#35459</a>)</li>

<li><a href="https://github.com/huggingface/transformers/commit/e39c9f7a78fa2960a7045e8fc5a2d96b5d7eebf1"><code>e39c9f7</code></a> v4.48-release</li>

<li><a href="https://github.com/huggingface/transformers/commit/8de7b1ba8d126a6fc9f9bcc3173a71b46f0c3601"><code>8de7b1b</code></a> Add flex_attn to diffllama (<a href="https://redirect.github.com/huggingface/transformers/issues/35601">#35601</a>)</li>

<li><a href="https://github.com/huggingface/transformers/commit/1e3ddcb2d0380d0d909a44edc217dff68956ec5e"><code>1e3ddcb</code></a> ModernBERT bug fixes (<a href="https://redirect.github.com/huggingface/transformers/issues/35404">#35404</a>)</li>

<li>Additional commits viewable in <a href="https://github.com/huggingface/transformers/compare/v4.38.0...v4.48.0">compare view</a></li>

</ul>

</details>

<br />

[](https://docs.github.com/en/github/managing-security-vulnerabilities/about-dependabot-security-updates#about-compatibility-scores)

Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually

- `@dependabot show <dependency name> ignore conditions` will show all of the ignore conditions of the specified dependency

- `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself)

You can disable automated security fix PRs for this repo from the [Security Alerts page](https://github.com/huggingface/transformers/network/alerts).

</details> | [

27,

60

] | [

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

1,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0,

0

] | [

"dependencies",

"python"

] |

https://api.github.com/repos/huggingface/transformers/issues/34446 |

TITLE

Beit image classification have different results compared from versions prior to 4.43.0

COMMENTS

10

REACTIONS

+1: 0

-1: 0

laugh: 0

hooray: 0

heart: 0

rocket: 0

eyes: 0

BODY

### System Info

- `transformers` version: 4.43.0

- Platform: Windows-10-10.0.19045-SP0

- Python version: 3.10.9

- Huggingface_hub version: 0.26.1

- Safetensors version: 0.4.5

- Accelerate version: 0.23.0

- Accelerate config: not found

- PyTorch version (GPU?): 1.13.1+cu117 (True)

- Tensorflow version (GPU?): not installed (NA)