language:

- en

- fr

- ro

- de

- multilingual

widget:

- text: 'Translate to German: My name is Arthur'
  example_title: Translation
- text: >-
    Please answer to the following question. Who is going to be the next
    Ballon d'or?
  example_title: Question Answering
- text: >-
    Q: Can Geoffrey Hinton have a conversation with George Washington? Give
    the rationale before answering.
  example_title: Logical reasoning
- text: >-
    Please answer the following question. What is the boiling point of
    Nitrogen?
  example_title: Scientific knowledge
- text: >-
    Answer the following yes/no question. Can you write a whole Haiku in a
    single tweet?
  example_title: Yes/no question
- text: >-
    Answer the following yes/no question by reasoning step-by-step. Can you
    write a whole Haiku in a single tweet?
  example_title: Reasoning task
- text: 'Q: ( False or not False or False ) is? A: Let''s think step by step'
  example_title: Boolean Expressions
- text: >-
    The square root of x is the cube root of y. What is y to the power of 2,
    if x = 4?
  example_title: Math reasoning
- text: >-
    Premise: At my age you will probably have learnt one lesson. Hypothesis:
    It's not certain how many lessons you'll learn by your thirties. Does the
    premise entail the hypothesis?
  example_title: Premise and hypothesis

tags:

- text2text-generation

datasets:

- svakulenk0/qrecc

- taskmaster2

- djaym7/wiki_dialog

- deepmind/code_contests

- lambada

- gsm8k

- aqua_rat

- esnli

- quasc

- qed

license: apache-2.0

Model Card for FLAN-T5 XL

Table of Contents

- TL;DR

- Model Details

- Usage

- Uses

- Bias, Risks, and Limitations

- Training Details

- Evaluation

- Environmental Impact

- Citation

TL;DR

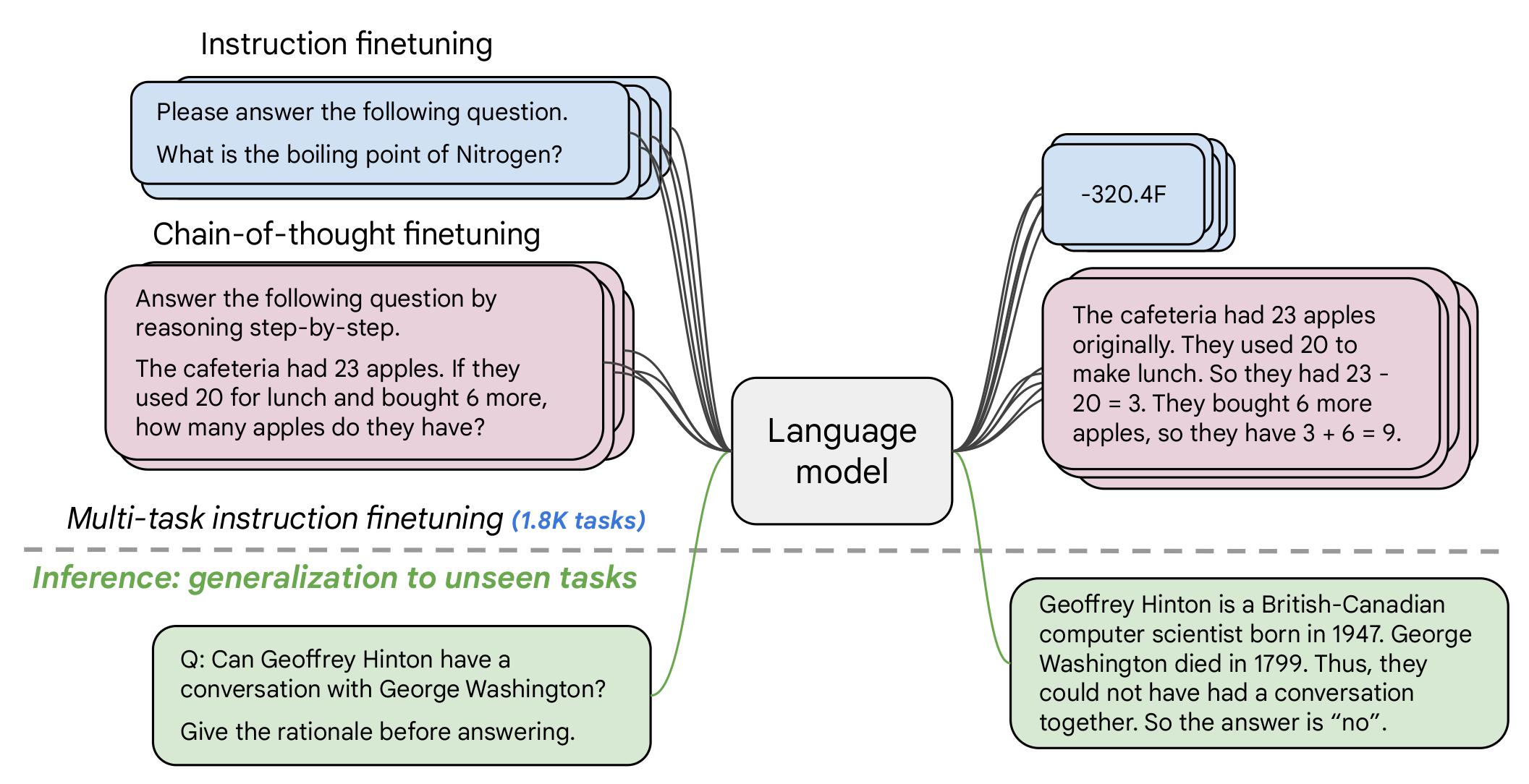

If you already know T5, FLAN-T5 is just better at everything. For the same number of parameters, these models have been fine-tuned on more than 1000 additional tasks, which also cover more languages. As stated in the first few lines of the abstract:

Flan-PaLM 540B achieves state-of-the-art performance on several benchmarks, such as 75.2% on five-shot MMLU. We also publicly release Flan-T5 checkpoints, which achieve strong few-shot performance even compared to much larger models, such as PaLM 62B. Overall, instruction finetuning is a general method for improving the performance and usability of pretrained language models.

Disclaimer: The content of this model card was written by the Hugging Face team, and parts of it were copied from the T5 model card.

Model Details

Model Description

The details are in the original google/flan-t5-xl model card.