Model card for DePlot

TL;DR

The abstract of the paper states that:

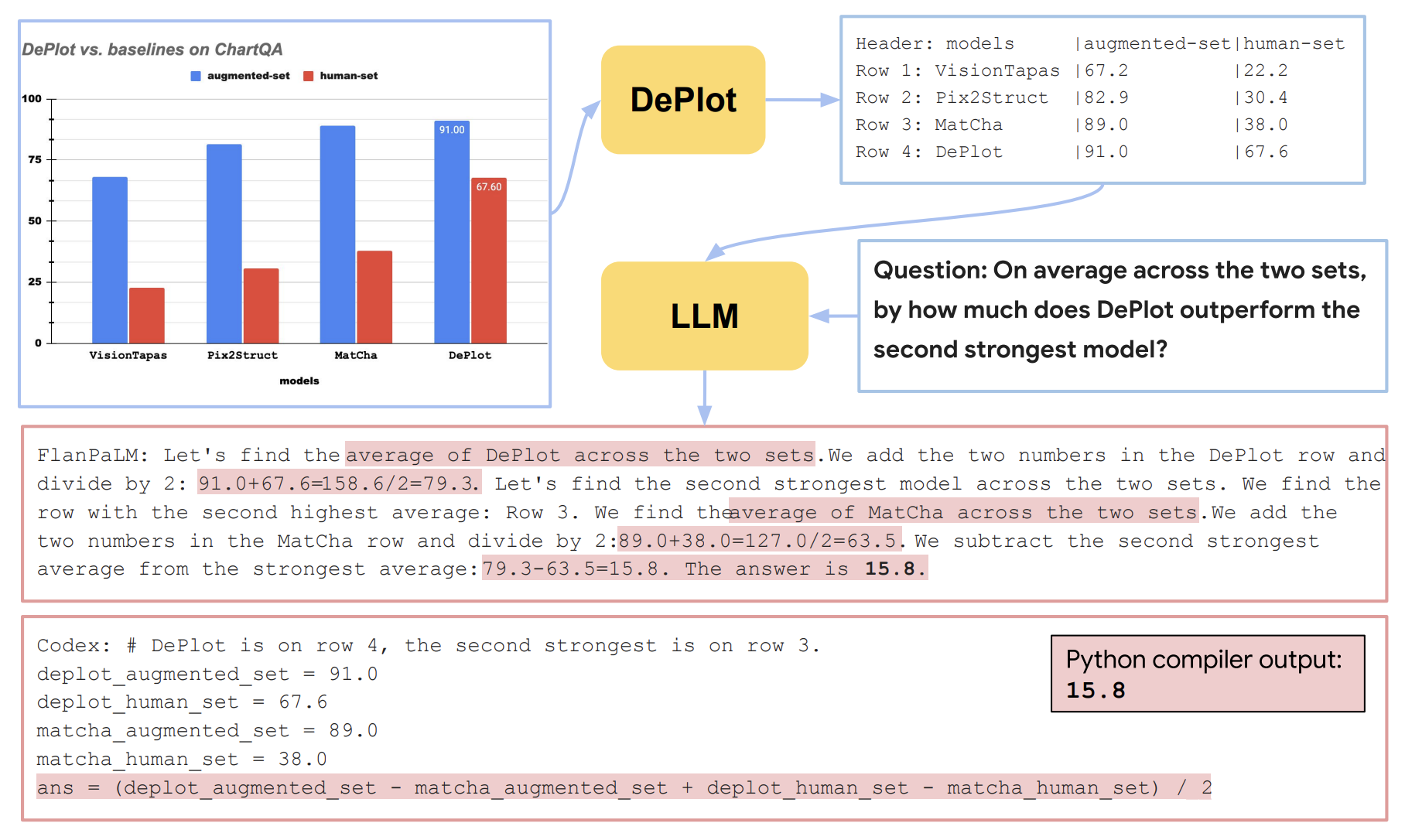

Visual language such as charts and plots is ubiquitous in the human world. Comprehending plots and charts requires strong reasoning skills. Prior state-of-the-art (SOTA) models require at least tens of thousands of training examples and their reasoning capabilities are still much limited, especially on complex human-written queries. This paper presents the first one-shot solution to visual language reasoning. We decompose the challenge of visual language reasoning into two steps: (1) plot-to-text translation, and (2) reasoning over the translated text. The key in this method is a modality conversion module, named as DePlot, which translates the image of a plot or chart to a linearized table. The output of DePlot can then be directly used to prompt a pretrained large language model (LLM), exploiting the few-shot reasoning capabilities of LLMs. To obtain DePlot, we standardize the plot-to-table task by establishing unified task formats and metrics, and train DePlot end-to-end on this task. DePlot can then be used off-the-shelf together with LLMs in a plug-and-play fashion. Compared with a SOTA model finetuned on more than >28k data points, DePlot+LLM with just one-shot prompting achieves a 24.0% improvement over finetuned SOTA on human-written queries from the task of chart QA.
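As a rough illustration of step (2) in the abstract, the sketch below assembles a one-shot prompt from a translated table and a question. The template, the function name `build_one_shot_prompt`, and the example tables are all hypothetical and made up for illustration. They are not the prompts used in the paper, and the string would still need to be sent to an LLM of your choice.

```python
def build_one_shot_prompt(demo_table, demo_question, demo_answer, table, question):
    """Assemble a one-shot prompt: one solved example followed by the new query."""
    demo = f"Table:\n{demo_table}\n\nQuestion: {demo_question}\nAnswer: {demo_answer}"
    query = f"Table:\n{table}\n\nQuestion: {question}\nAnswer:"
    return f"{demo}\n\n{query}"


# Illustrative, made-up tables (not taken from the paper or from model output).
prompt = build_one_shot_prompt(
    demo_table="Year | Sales\n2020 | 5\n2021 | 8",
    demo_question="In which year were sales higher?",
    demo_answer="2021",
    table="City | Population\nA | 120\nB | 90",
    question="Which city has the larger population?",
)
print(prompt)
```

The prompt ends with an open `Answer:` so that the LLM completes it. In practice the second table would be the text produced by DePlot for the chart in question.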

Using the model

You can run a prediction by passing an input image together with a text prompt, as follows:

from transformers import Pix2StructProcessor, Pix2StructForConditionalGeneration
import requests
from PIL import Image

processor = Pix2StructProcessor.from_pretrained('google/deplot')
model = Pix2StructForConditionalGeneration.from_pretrained('google/deplot')

url = "https://raw.githubusercontent.com/vis-nlp/ChartQA/main/ChartQA%20Dataset/val/png/5090.png"
image = Image.open(requests.get(url, stream=True).raw)

inputs = processor(images=image, text="Generate underlying data table of the figure below:", return_tensors="pt")
predictions = model.generate(**inputs, max_new_tokens=512)
print(processor.decode(predictions[0], skip_special_tokens=True))
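The prediction is printed as a single linearized string. The following is a minimal sketch for splitting such a string into rows and cells. It assumes that rows are separated by the token `<0x0A>` and cells by `|`; check this against the output you actually get. The helper name `parse_linearized_table` and the example string are hypothetical and not real model output.

```python
def parse_linearized_table(text, row_sep="<0x0A>", cell_sep="|"):
    """Split a linearized table into a list of rows, each a list of cell strings."""
    rows = []
    for raw_row in text.split(row_sep):
        raw_row = raw_row.strip()
        if not raw_row:
            continue  # skip empty fragments, e.g. after a trailing separator
        rows.append([cell.strip() for cell in raw_row.split(cell_sep)])
    return rows


# Made-up example in the assumed format (not real model output).
example = "TITLE | Demo chart <0x0A> Year | Value <0x0A> 2019 | 10 <0x0A> 2020 | 12"
table = parse_linearized_table(example)
for row in table:
    print(row)
```

Both separators are parameters, so the helper can be adapted if the decoded text uses plain newlines or a different cell delimiter.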

Converting from T5X to Hugging Face

You can use the convert_pix2struct_checkpoint_to_pytorch.py script as follows:

python convert_pix2struct_checkpoint_to_pytorch.py --t5x_checkpoint_path PATH_TO_T5X_CHECKPOINTS --pytorch_dump_path PATH_TO_SAVE --is_vqa

If you are converting a large model, run:

python convert_pix2struct_checkpoint_to_pytorch.py --t5x_checkpoint_path PATH_TO_T5X_CHECKPOINTS --pytorch_dump_path PATH_TO_SAVE --use-large --is_vqa
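If you run the conversion from a Python script, the two command variants above can be assembled programmatically. This is only a convenience sketch: the helper `build_convert_command` is hypothetical, and the script name and flags are copied verbatim from the commands above rather than checked against any particular version of the script.

```python
import shlex

# Script name and flags exactly as written in the commands above.
SCRIPT = "convert_pix2struct_checkpoint_to_pytorch.py"


def build_convert_command(t5x_path, dump_path, large=False):
    """Return the conversion command as an argument list."""
    cmd = [
        "python", SCRIPT,
        "--t5x_checkpoint_path", t5x_path,
        "--pytorch_dump_path", dump_path,
    ]
    if large:
        cmd.append("--use-large")  # only added for large checkpoints
    cmd.append("--is_vqa")
    return cmd


cmd = build_convert_command("PATH_TO_T5X_CHECKPOINTS", "PATH_TO_SAVE", large=True)
print(shlex.join(cmd))
# To actually run the conversion: subprocess.run(cmd, check=True)
```

Building the command as a list avoids shell-quoting problems when the checkpoint paths contain spaces.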

Once saved, you can push your converted model with the following snippet:

from transformers import Pix2StructForConditionalGeneration, Pix2StructProcessor

model = Pix2StructForConditionalGeneration.from_pretrained(PATH_TO_SAVE)
processor = Pix2StructProcessor.from_pretrained(PATH_TO_SAVE)

model.push_to_hub("USERNAME/MODEL_NAME")
processor.push_to_hub("USERNAME/MODEL_NAME")

Contribution

This model was originally contributed by Fangyu Liu, Julian Martin Eisenschlos et al. and added to the Hugging Face ecosystem by Younes Belkada.

Citation

To cite this work, please cite the original paper:

@misc{liu2022deplot,
  title={DePlot: One-shot visual language reasoning by plot-to-table translation},
  author={Liu, Fangyu and Eisenschlos, Julian Martin and Piccinno, Francesco and Krichene, Syrine and Pang, Chenxi and Lee, Kenton and Joshi, Mandar and Chen, Wenhu and Collier, Nigel and Altun, Yasemin},
  year={2022},
  eprint={2212.10505},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
