metadata

license: apache-2.0

datasets:

- jojo0217/korean_rlhf_dataset

language:

- ko

pipeline_tag: text-generation

성균관대학교 산학협력 데이터로 만든 테스트 모델입니다.

기존 10만 7천개의 데이터 + 2천개 일상대화 추가 데이터를 첨가하여 학습하였습니다.

모델은 EleutherAI/polyglot-ko-5.8b를 base로 학습 되었으며

학습 parameter은 다음과 같습니다.

batch_size: 128

micro_batch_size: 8

num_epochs: 3

learning_rate: 3e-4

cutoff_len: 1024

lora_r: 8

lora_alpha: 16

lora_dropout: 0.05

weight_decay: 0.1

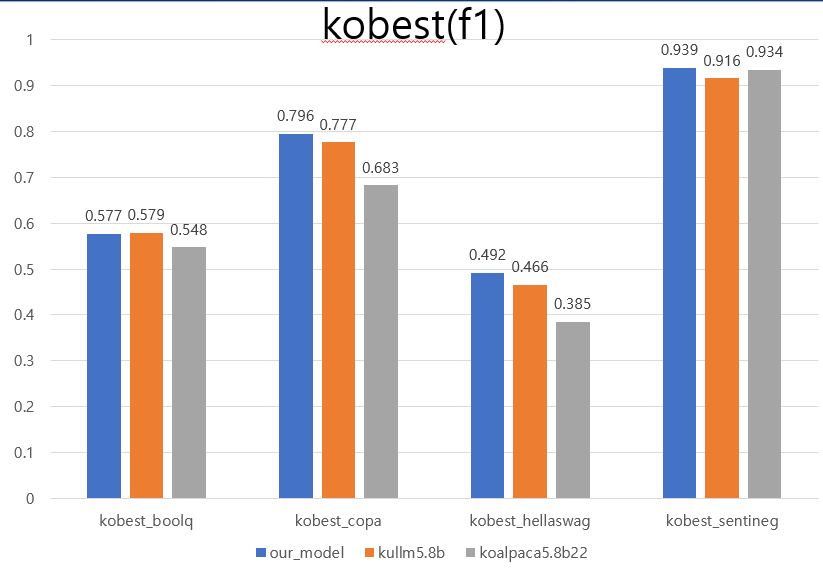

측정한 kobest 10shot 점수는 다음과 같습니다.

모델 prompt template는 kullm의 template를 사용하였습니다.

테스트 코드는 다음과 같습니다.

from transformers import pipeline, AutoModelForCausalLM, AutoTokenizer

model_name="jojo0217/ChatSKKU5.8B"

model = AutoModelForCausalLM.from_pretrained(

model_name,

device_map="auto",

load_in_8bit=True,#만약 양자화 끄고 싶다면 false

)

tokenizer = AutoTokenizer.from_pretrained(model_name)

pipe = pipeline(

"text-generation",

model=model,

tokenizer=model_name,

device_map="auto"

)

def answer(message):

prompt=f"아래는 작업을 설명하는 명령어입니다. 요청을 적절히 완료하는 응답을 작성하세요.\n\n### 명령어:\n{message}"

ans = pipe(

prompt + "\n\n### 응답:",

do_sample=True,

max_new_tokens=512,

temperature=0.7,

repetition_penalty = 1.0,

return_full_text=False,

eos_token_id=2,

)

msg = ans[0]["generated_text"]

return msg

answer('성균관대학교에대해 알려줘')