ANLP Assignment 3

How to run the models

- Since the models are already saved, you only need to run the Python scripts for inference.

python3 main.py # Prompt tuning

python3 main1.py # Last layer finetuned model

python3 main2.py # LoRA

- To train instead, set train = True in the main function and run the same script.

- You can download the models from https://huggingface.co/kyrylokumar/gpt2-finetuned/tree/main, a repository I created for this assignment.

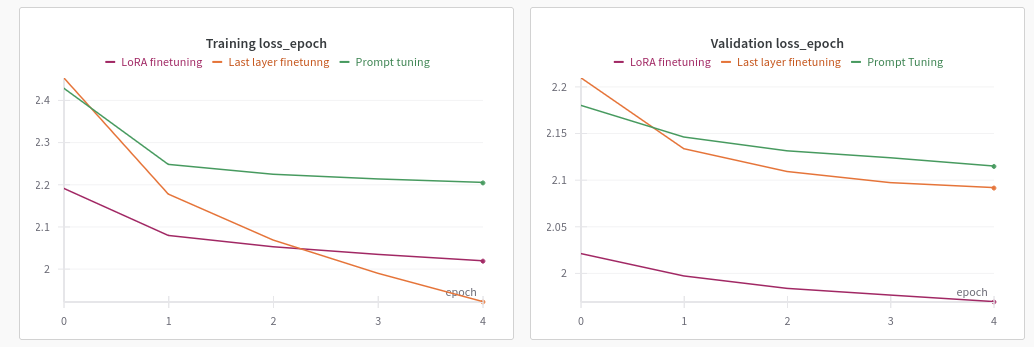

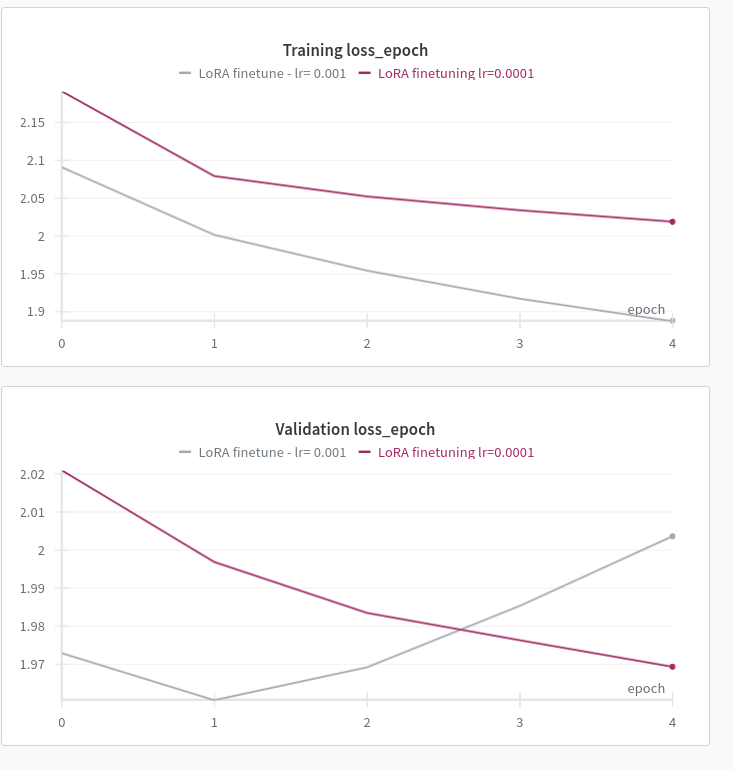

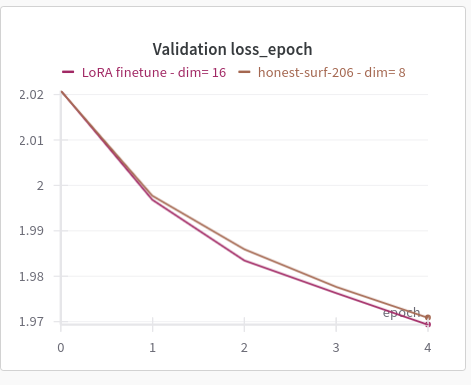

Comparison of losses of the 3 methods:

- After hyperparameter tuning, LoRA reaches the best validation loss, 1.96. Last-layer finetuning and prompt tuning follow.

Last layer finetuning overfits faster:

- lr=1e-3 works best for LoRA and prompt tuning. With last-layer finetuning, the same learning rate overfits and the validation loss increases, so that method needs a lower lr=1e-4, with AdamW.

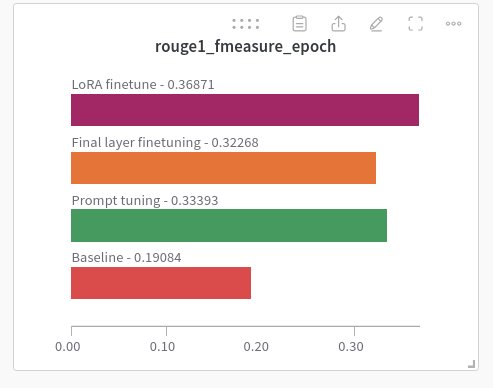

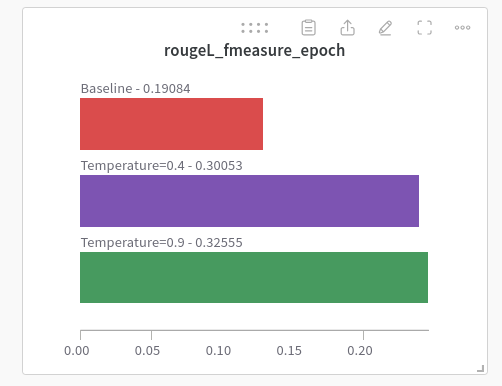

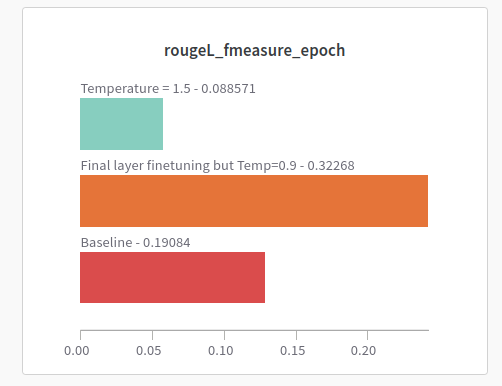

Comparison of ROUGE scores of the 3 methods:

- The baseline used here has a hard prompt: the word “Summarize” is prepended multiple times at the beginning.
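For readers unfamiliar with the metric, here is a minimal sketch of ROUGE-1 F1 (unigram overlap). The report does not say which ROUGE variant or library produced the scores, so the whitespace tokenization and the function name `rouge1_f1` are assumptions for illustration, and the result is on a 0 to 1 scale rather than the 0 to 100 scale the scores in this report appear to use.

```python
from collections import Counter

def rouge1_f1(prediction: str, reference: str) -> float:
    """Unigram-overlap F1 between a predicted and a reference summary."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        return 0.0
    # Clipped overlap: a word is matched at most as often as it occurs in both texts.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

reference = "the cat sat on the mat"           # made-up example texts
compact = "the cat sat on a mat"
looping = "the cat sat the cat sat the cat sat the cat sat"

print("compact summary:", round(rouge1_f1(compact, reference), 3))
print("looping output: ", round(rouge1_f1(looping, reference), 3))
```

The looping example scores lower because repeated words add length without adding matches, which hurts precision.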

GPT2 model alone is already pretty good:

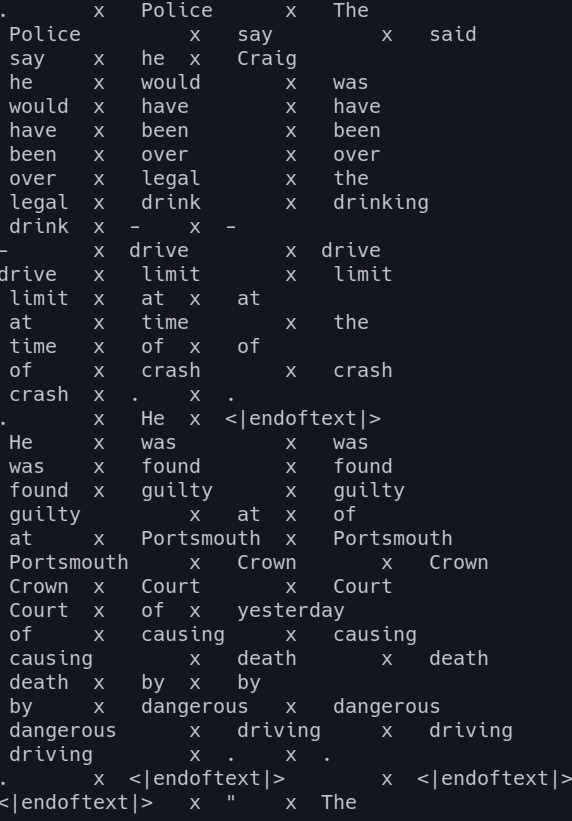

- GPT2 alone is already good at next-token prediction. For example, given the article and the first few lines of the summary, its predictions are fairly accurate.

- The 3 columns are: last word in context, ground-truth (GT) next word, and actual prediction (argmax).

- Even without training, it generates coherent predictions, so the loss is already low at the beginning. However, the ROUGE score is poor, because the model does not predict a compact summary, just a continuation of the article.

- There are a couple of things that can improve the language of the predicted paragraphs.

Effect of sampler and temperature on ROUGE score:

- Always choosing the word with the highest probability leads to poor vocabulary use. We want the model to be more creative.

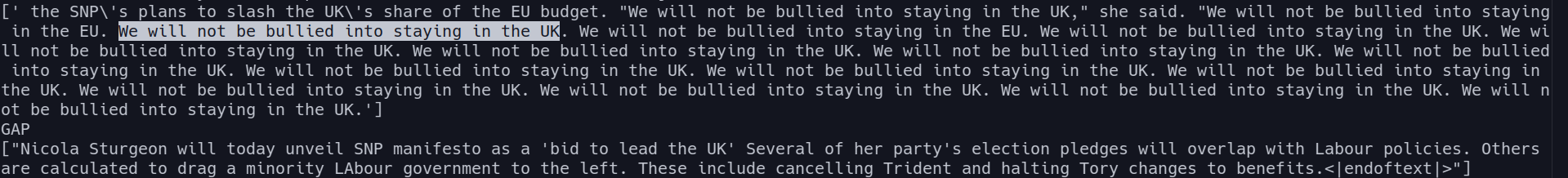

- A common problem I notice is that the model gets stuck in loops where it predicts the same sentence over and over. In the image, paragraph 1 is the prediction and paragraph 2, after the gap, is the GT.

- To avoid this, we sample from the probability distribution. Here, I use top_p sampling with temperature 0.9 and top_p = 0.9. The sampler matters: it is one reason GPT2 alone can reach a ROUGE score of up to 20, up from ~10 without it.
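The sampling step described above can be sketched in plain Python. This is an illustration of top_p (nucleus) sampling with temperature under stated assumptions, not the code used in the scripts: the helper names and the 5-token logits are made up, and a real run would take logits from GPT2.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p=0.9):
    """Keep the smallest set of most likely tokens whose mass reaches top_p, renormalized."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}

def sample_top_p(logits, temperature=0.9, top_p=0.9, rng=random):
    """Draw one token id from the temperature-scaled, top_p-truncated distribution."""
    nucleus = top_p_filter(softmax(logits, temperature), top_p)
    ids = list(nucleus)
    return rng.choices(ids, weights=[nucleus[i] for i in ids], k=1)[0]

logits = [4.0, 3.5, 3.0, 0.5, -1.0]  # hypothetical scores for a 5-token vocabulary
rng = random.Random(0)
samples = [sample_top_p(logits, rng=rng) for _ in range(1000)]
greedy = max(range(len(logits)), key=lambda i: logits[i])

print("greedy (argmax) always picks token", greedy)
print("top_p sampling picks from tokens", sorted(set(samples)))
```

Greedy decoding returns the same token every time for the same context, which is how loops start. Sampling from the truncated distribution varies the choice while still excluding the unlikely tail.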

Varying temperature:

- A mid-range temperature seems to work best. At t=1.5 the model predicts words that are too diverse, and the score drops.
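A small sketch of why a high temperature produces overly diverse words: dividing the logits by a larger temperature flattens the distribution, so unlikely tokens get sampled more often. The logits are made up for illustration, and entropy is used here only as a convenient measure of how flat the distribution is.

```python
import math

def softmax(logits, temperature):
    """Convert logits to probabilities after dividing by the temperature."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; higher means a flatter, more random distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

logits = [4.0, 3.5, 3.0, 0.5, -1.0]  # hypothetical scores for a 5-token vocabulary
for t in (0.5, 0.9, 1.5):
    probs = softmax(logits, t)
    print(f"t={t}: top prob={max(probs):.2f}, entropy={entropy(probs):.2f}")
```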

Effect of learning rate:

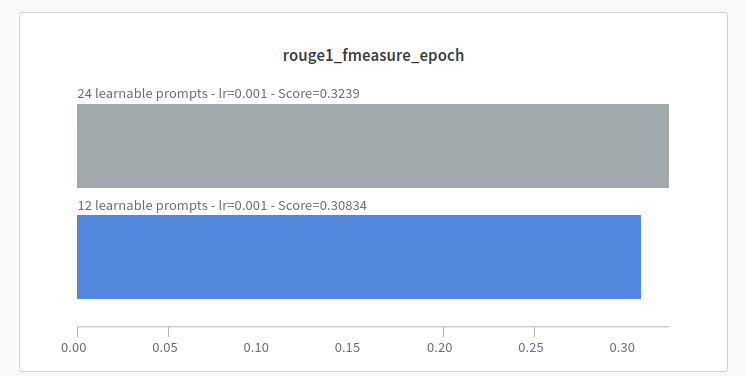

Effect of number of learnable tokens (in prompt tuning):

- More learnable prompt tokens give better results.

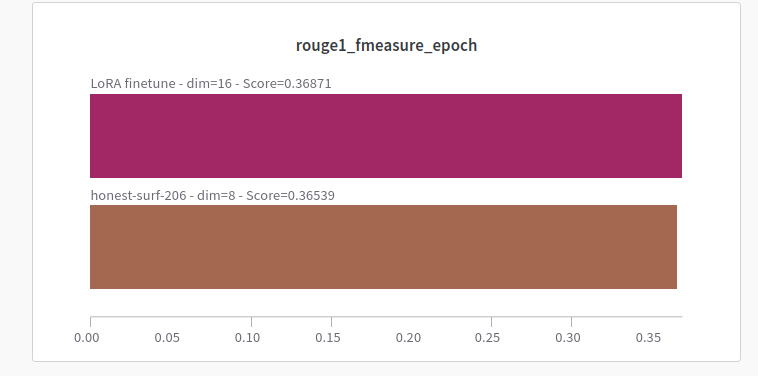

Effect of LoRA dimension:

Dimension 16 is slightly better everywhere. The fact that dim=8 is already enough suggests this is a low-dimensional task.

Tricks to optimize runtime:

Basic:

- Increase the number of dataloader workers.

- Use mixed precision in Lightning.

Intermediate:

- Scale up the GPUs and thus the batch size. I moved from a 2080Ti to an A100-40GB.

- Moving to this GPU architecture unlocks fast matrix multiplication at lower precision.

- Use bfloat16 mixed precision (bf16-mixed in Lightning).

Advanced:

- Use torch.compile(). Because Python is interpreted, operations are launched one at a time with no knowledge of what comes next, which causes many redundant accesses to GPU memory. torch.compile compiles the model into optimized code and streamlines the pipeline.

- The previous points optimize training time. To optimize inference time, we use a KV cache. Since tokens are generated autoregressively, many values are recomputed at every step. They can be cached instead (because of the causal mask, their values do not change in later generation steps).
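The KV-cache idea can be illustrated with a toy single-head, single-layer attention in plain Python. This is a sketch under simplifying assumptions (random weights, 6 made-up token embeddings, hypothetical helper names), not the GPT2 implementation. Causality is implicit: the query at each step only sees keys and values up to its own position.

```python
import math
import random

def project(x, matrix):
    """Multiply vector x by a matrix given as a list of rows."""
    return [sum(xi * row[j] for xi, row in zip(x, matrix)) for j in range(len(matrix[0]))]

def attend(query, keys, values):
    """Scaled dot-product attention of one query over the visible keys and values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    top = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]

rng = random.Random(0)
d = 4  # toy hidden size

def rand_matrix():
    return [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(d)]

w_q, w_k, w_v = rand_matrix(), rand_matrix(), rand_matrix()
tokens = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(6)]  # toy embeddings

# Without a cache: every step re-projects keys and values for the whole prefix.
plain_out, plain_projections = [], 0
for t in range(len(tokens)):
    keys = [project(x, w_k) for x in tokens[: t + 1]]
    values = [project(x, w_v) for x in tokens[: t + 1]]
    plain_projections += 2 * (t + 1)
    plain_out.append(attend(project(tokens[t], w_q), keys, values))

# With a cache: project only the new token and append to the stored keys and values.
cached_out, cached_projections = [], 0
key_cache, value_cache = [], []
for x in tokens:
    key_cache.append(project(x, w_k))
    value_cache.append(project(x, w_v))
    cached_projections += 2
    cached_out.append(attend(project(x, w_q), key_cache, value_cache))

print(plain_projections, "key/value projections without cache,", cached_projections, "with cache")
```

Both loops produce the same attention outputs, but the uncached version's work grows quadratically with sequence length while the cached version's grows linearly.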

All of this brings training time down from ~35 minutes to ~3 minutes per epoch. I myself am surprised.

Inference/testing actually takes much longer, approx. 5 mins, because of its recurrent (autoregressive) nature.

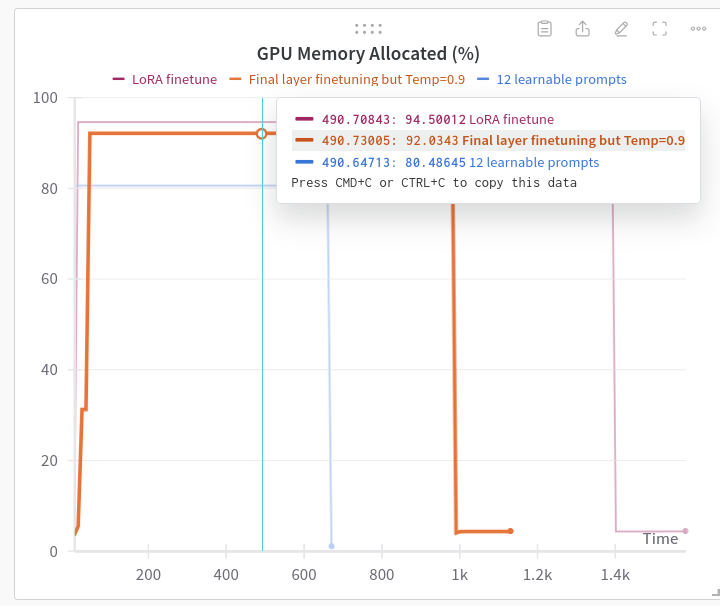

Trainable parameters, runtime and GPU Utilization

GPU:

- The above chart shows memory allocation in % for the 3 methods at the same batch size = 25. The GPU observed was an A100-40GB.

- Last-layer finetuning uses more GPU memory because the final layer alone has 40M of the 124M params. Also, GPT2 shares its embedding and unembedding weights (they are the same matrix), so gradients travel all the way back to the input too.

- LoRA finetuning may be taking more memory than expected because it does not work together with torch.compile, which kills the memory optimizations.

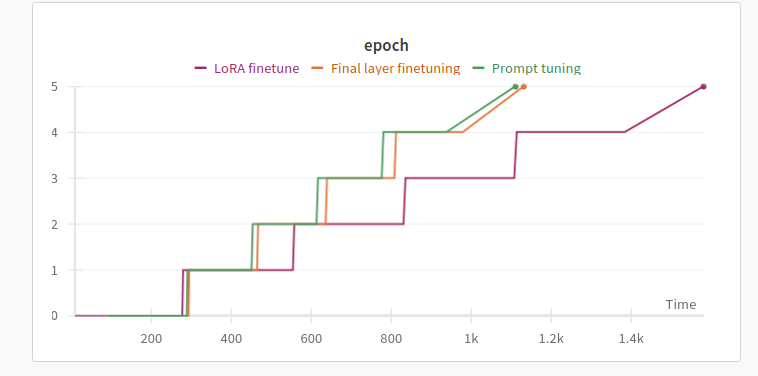

Time taken:

- After all optimizations, one epoch takes 3-4 mins. LoRA finetuning takes longer because it could not be compiled. Last-layer finetuning takes marginally longer than prompt tuning, as there are more params to update.

Num of trainable params:

GPT2: 124M

Last layer: 40M

Prompt learning: 18,432

LoRA: 589,824
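The counts above can be reproduced from GPT2's public dimensions. The sketch below is a consistency check, not a statement of the exact configuration used: the prompt length (24 tokens) and the LoRA setup (rank 16 on the fused 768 -> 2304 attention projection in all 12 layers) are inferred from the totals and are assumptions. Other LoRA setups give the same total, for example rank 8 on four 768 x 768 projections per layer.

```python
# GPT2 (small) dimensions from the public model configuration.
d_model = 768
vocab_size = 50257
n_layers = 12

# Last layer: the output projection, tied to the token embedding matrix.
last_layer = vocab_size * d_model

# Prompt tuning: one d_model-sized vector per learnable token.
# ASSUMPTION: 24 learnable tokens, inferred from 18,432 / 768.
n_prompt_tokens = 24
prompt_tuning = n_prompt_tokens * d_model

# LoRA: factors A (d_in x r) and B (r x d_out) for each adapted weight.
# ASSUMPTION: rank 16 on the fused attention projection in every layer.
rank, d_in, d_out = 16, 768, 3 * 768
lora = n_layers * rank * (d_in + d_out)

print(f"Last layer:    {last_layer:,}")
print(f"Prompt tuning: {prompt_tuning:,}")
print(f"LoRA:          {lora:,}")
```

The last-layer count comes out at about 38.6M, which the list above rounds to 40M.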

Miscellaneous observations:

Words predicted after token are weird:

- Probably because the model is never forced to predict anything after it.

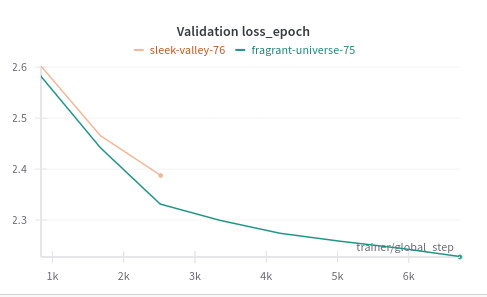

Having a custom token separating article and summary helps:

- The curve with the better loss uses a new token that separates the input from the output.

Interesting questions:

Q1. Concept of Soft Prompts: How does the introduction of "soft prompts" address the limitations of discrete text prompts in large language models? Why might soft prompts be considered a more flexible and efficient approach for task-specific conditioning?

Ans:

Discrete Prompts' Limitations:

- Limited Expressivity: Difficult to encode complex instructions or nuanced context. Like trying to give driving directions with only street names, no turns.

- Prompt Engineering Bottleneck: Finding the perfect wording is time-consuming and often requires trial-and-error.

Soft Prompts as a Solution:

- Continuous Representation: Soft prompts are embeddings (vectors) learned through optimization, representing the prompt's meaning in a more flexible, dense format. Think of it as a "summary" of the prompt's essence.

- Enhanced Expressiveness: These continuous vectors can capture more nuanced information than discrete text. Like having a detailed map with all the landmarks and turns.

- Efficiency: Soft prompts can be shorter (fewer tokens) than equivalent text prompts, reducing computational cost.

- Adaptability: They can be fine-tuned for specific tasks, making the model more specialized without retraining the entire LLM
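As a rough illustration of the continuous-representation point, a soft prompt is a small matrix of free vectors prepended to the embedded input, while the model's own embedding table stays frozen. The sketch below uses toy sizes and random values, and the names `soft_prompt` and `build_inputs` are hypothetical.

```python
import random

rng = random.Random(0)
d_model, vocab_size, n_soft_tokens = 8, 20, 3  # toy sizes, not GPT2's

# Frozen token embedding table of the pre-trained model.
embedding = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(vocab_size)]

# Trainable soft prompt: free vectors that need not match any row of `embedding`,
# which is what makes them more expressive than discrete tokens.
soft_prompt = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(n_soft_tokens)]

def build_inputs(token_ids):
    """Prepend the soft prompt vectors to the embedded input tokens."""
    return soft_prompt + [embedding[i] for i in token_ids]

inputs = build_inputs([5, 2, 9, 9])  # made-up token ids
print(len(inputs), "input vectors of size", len(inputs[0]))
print("trainable values:", n_soft_tokens * d_model)
```

During training only `soft_prompt` would receive gradient updates, so the number of trainable values is the prompt length times the hidden size.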

Q2. Scaling and Efficiency in Prompt Tuning: How does the efficiency of prompt tuning relate to the scale of the language model? Discuss the implications of this relationship for future developments in large-scale language models and their adaptability to specific tasks.

Ans:

- Prompt Tuning's Efficiency Advantage: Prompt tuning modifies only a small set of task-specific parameters, leaving the pre-trained LLM's weights frozen. This is drastically more efficient than fine-tuning the entire model.

- Scaling and Efficiency: The efficiency gains of prompt tuning become more pronounced with increasing model size. As LLMs grow larger, fine-tuning becomes increasingly computationally expensive. Prompt tuning, however, maintains its relatively low overhead, regardless of LLM scale.

- Domain-Specific Benefits: This approach could benefit fields like:

  - Healthcare: Adapting large medical LLMs to specific diseases or patient populations.

  - Finance: Adapting models for specific market analysis or risk assessment.

  - Law: Specializing LLMs for different legal domains (patent law, contract law).

  - Education: Personalizing educational LLMs for individual student needs.
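To put the scaling point above in numbers, the sketch below compares the size of a soft prompt with the size of the full model across the GPT2 family. The model sizes are the approximate published figures, and keeping the prompt at 24 tokens for every model is an assumption made for illustration.

```python
# Approximate published sizes of the GPT2 family: (name, total parameters, hidden size).
gpt2_family = [
    ("gpt2", 124e6, 768),
    ("gpt2-medium", 355e6, 1024),
    ("gpt2-large", 774e6, 1280),
    ("gpt2-xl", 1558e6, 1600),
]
n_prompt_tokens = 24  # ASSUMPTION: same prompt length at every scale

fractions = []
for name, total, d_model in gpt2_family:
    prompt_params = n_prompt_tokens * d_model
    fractions.append(prompt_params / total)
    print(f"{name}: {prompt_params:,} prompt params, {fractions[-1]:.4%} of the model")
```

The prompt grows only with the hidden size, while the model grows much faster, so the trainable fraction shrinks as the model gets larger.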

Q3. Understanding LoRA: What are the key principles behind Low-Rank Adaptation (LoRA) in fine-tuning large language models? How does LoRA improve upon traditional fine-tuning methods regarding efficiency and performance?

Ans:

Low-Rank Assumption: LoRA assumes that the changes to the weight matrices during fine-tuning are low-rank. This means the updates can be efficiently represented by a smaller set of matrices. Think of it like compressing a large image by focusing on the essential details.

Rank Decomposition: LoRA decomposes the weight updates into two smaller matrices (A and B). The original weight update is approximated by their product (A x B). This reduces the number of parameters needing adjustment during training.

Reduced Memory Footprint: Training only the smaller LoRA matrices requires significantly less memory, enabling fine-tuning of much larger models on consumer-grade hardware.

Faster Training: Fewer parameters to update translates to faster training times.
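The decomposition described above can be sketched with toy matrices in plain Python. This is an illustration under stated assumptions, not the library implementation: sizes are tiny, the scaling factor used in practice is omitted, and B is initialized to zero so that the adapted layer starts out identical to the frozen one.

```python
import random

rng = random.Random(0)
d_in, d_out, rank = 6, 6, 2  # toy sizes

def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

# Frozen pre-trained weight.
w0 = [[rng.gauss(0, 1) for _ in range(d_out)] for _ in range(d_in)]
# Trainable low-rank factors: A is d_in x rank, B is rank x d_out (zero at start).
a = [[rng.gauss(0, 0.01) for _ in range(rank)] for _ in range(d_in)]
b = [[0.0] * d_out for _ in range(rank)]

def adapted_forward(x):
    """Compute x @ (W0 + A @ B) without forming the full update matrix."""
    base = matmul([x], w0)[0]
    update = matmul(matmul([x], a), b)[0]
    return [p + q for p, q in zip(base, update)]

x = [1.0, -0.5, 0.25, 2.0, 0.0, 1.5]  # made-up input vector
print("output at initialization:", [round(v, 3) for v in adapted_forward(x)])
print("full update params:", d_in * d_out, "| LoRA params:", rank * (d_in + d_out))
```

With these toy sizes the saving is small, but for a 768 x 2304 weight a rank of 16 replaces about 1.77M update values with 49,152.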

Q4. Theoretical Implications of LoRA: Discuss the theoretical implications of introducing low-rank adaptations to the parameter space of large language models. How does this affect the expressiveness and generalization capabilities of the model compared to standard fine-tuning?

Ans:

- Sufficient for Downstream Tasks (low-rank tasks): Empirical results show that the reduced capacity is often sufficient for many downstream tasks. This suggests that the necessary adaptations for these tasks lie within this lower-dimensional space. LoRA searches for the optimal solution within a smaller, but highly relevant, search space.

- Generalization:

  - Regularization Effect: The low-rank constraint acts as a form of regularization, preventing overfitting to the training data. This can improve generalization to unseen examples.

  - Task Specificity: The frozen pre-trained weights provide a strong base of general knowledge, while the LoRA updates add task-specific expertise. This balance can lead to better generalization within the target task domain.

- Intrinsic Low-Rank Structure: LoRA's effectiveness suggests that the changes induced by fine-tuning might inherently possess a low-rank structure. This implies a certain degree of redundancy or structure within the vast parameter space of LLMs.

- Modular Adaptation: LoRA can be seen as a way to introduce modular adaptations to the pre-trained model, where each LoRA module specializes in a particular task.

Wandb Link: https://wandb.ai/cvit_kyrylo/Anlp-3/workspace?nw=nwuserkyryloshyvam