metadata

license: mit

tags:

- stable-diffusion

- stable-diffusion-diffusers

inference: false

library_name: diffusers

SDXL-VAE-FP16-Fix

SDXL-VAE-FP16-Fix is the SDXL VAE*, but modified to run in fp16 precision without generating NaNs.

| VAE | Decoding in float32 / bfloat16 precision | Decoding in float16 precision |
|---|---|---|
| SDXL-VAE | ✅ | ⚠️ |
| SDXL-VAE-FP16-Fix | ✅ | ✅ |

🧨 Diffusers Usage

Just load this checkpoint via AutoencoderKL:

import torch

from diffusers import DiffusionPipeline, AutoencoderKL

vae = AutoencoderKL.from_pretrained("madebyollin/sdxl-vae-fp16-fix", torch_dtype=torch.float16)

pipe = DiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-xl-base-1.0", vae=vae, torch_dtype=torch.float16, variant="fp16", use_safetensors=True)

pipe.to("cuda")

refiner = DiffusionPipeline.from_pretrained("stabilityai/stable-diffusion-xl-refiner-1.0", vae=vae, torch_dtype=torch.float16, use_safetensors=True, variant="fp16")

refiner.to("cuda")

n_steps = 40

high_noise_frac = 0.7

prompt = "A majestic lion jumping from a big stone at night"

image = pipe(prompt=prompt, num_inference_steps=n_steps, denoising_end=high_noise_frac, output_type="latent").images

image = refiner(prompt=prompt, num_inference_steps=n_steps, denoising_start=high_noise_frac, image=image).images[0]

image

Automatic1111 Usage

- Download the fixed `sdxl.vae.safetensors` file

- Move this `sdxl.vae.safetensors` file into the webui folder under `stable-diffusion-webui/models/VAE`

- In your webui settings, select the fixed VAE you just added

- If you were using the `--no-half-vae` command line arg for SDXL (in `webui-user.bat` or wherever), you can now remove it

(Disclaimer - I haven't tested this, just aggregating various instructions I've seen elsewhere :P PRs to improve these instructions are welcomed!)
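If you would rather script the "move the file" step, here is a minimal sketch using only the Python standard library. The helper name `install_vae` and both example paths are hypothetical; it is likewise untested against a real webui install and only mirrors the folder layout described in the steps above.

```python
import shutil
from pathlib import Path


def install_vae(downloaded_file: Path, webui_root: Path) -> Path:
    """Move a downloaded VAE file into <webui_root>/models/VAE and return its new path."""
    vae_dir = webui_root / "models" / "VAE"
    vae_dir.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    target = vae_dir / downloaded_file.name
    shutil.move(str(downloaded_file), str(target))
    return target


# Example call (both paths are placeholders, adjust them to your machine):
# install_vae(Path.home() / "Downloads" / "sdxl.vae.safetensors",
#             Path("stable-diffusion-webui"))
```

Selecting the VAE in the webui settings and removing `--no-half-vae` still have to be done by hand.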

Details

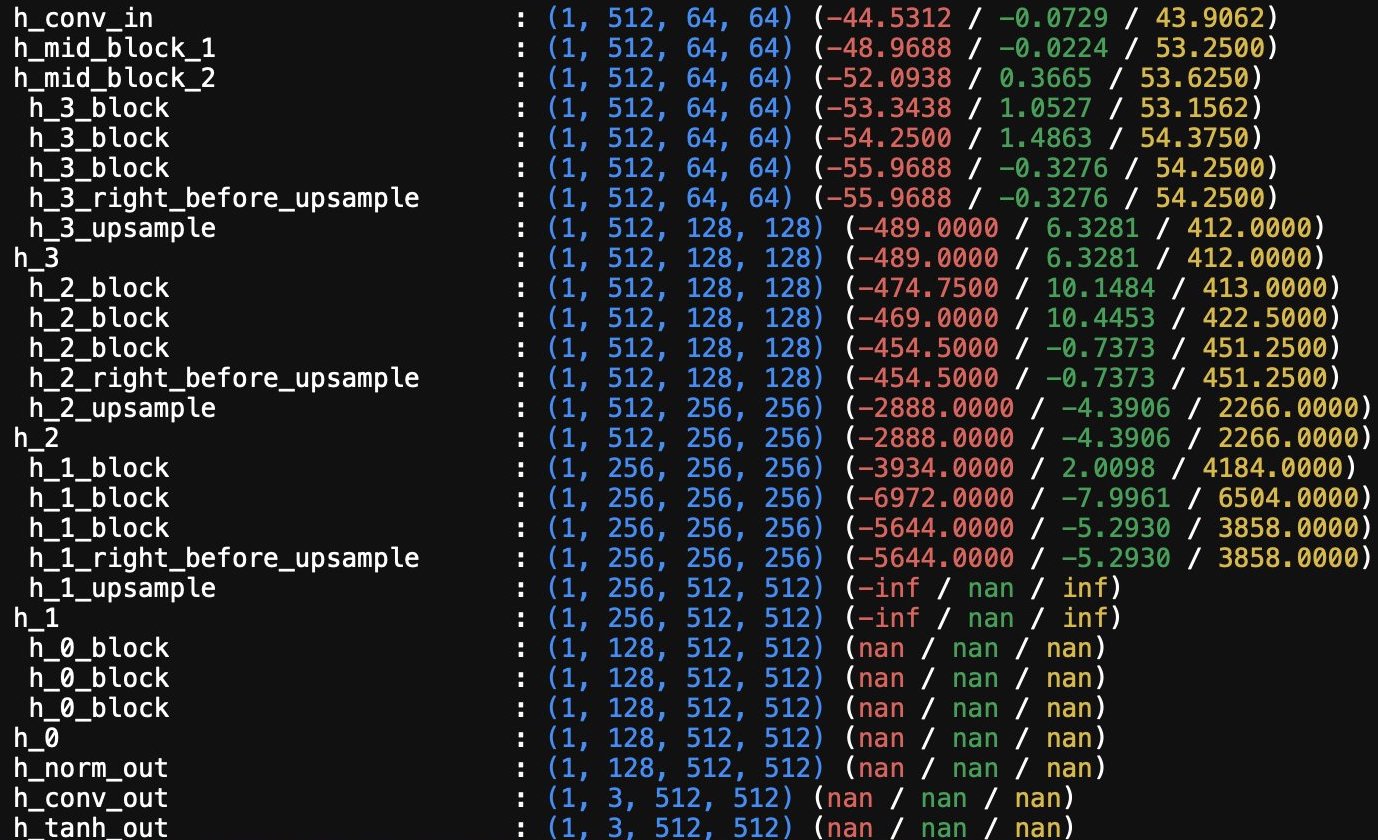

SDXL-VAE generates NaNs in fp16 because the internal activation values are too big:
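To see why large activations are a problem, recall that float16 cannot hold finite values above 65504. The sketch below emulates float16 rounding with the standard library's `struct` module (the helper `to_fp16` is made up for this illustration and is not part of diffusers or PyTorch). It shows an out-of-range value turning into infinity and a later subtraction turning that into NaN.

```python
import math
import struct

FP16_MAX = 65504.0  # largest finite value in IEEE 754 half precision


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest float16 value; overflow becomes +/-inf."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        # struct refuses to pack out-of-range values; fp16 hardware would produce infinity.
        return math.copysign(math.inf, x)


small = to_fp16(1000.0)   # well inside the fp16 range, stored exactly
large = to_fp16(70000.0)  # above FP16_MAX, so it overflows to infinity
diff = large - large      # inf - inf is undefined, which yields NaN

print(small, large, diff)
```

Once a single activation overflows like this, ordinary operations such as subtraction or normalization can spread NaNs through the rest of the decode.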

SDXL-VAE-FP16-Fix was created by finetuning the SDXL-VAE to:

- keep the final output the same, but

- make the internal activation values smaller, by

- scaling down weights and biases within the network
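For intuition only, here is a toy two-layer ReLU network in plain Python where the hidden activations can be shrunk without changing the output. All weights, the input, and the scale factor are invented for the example. The real fix was obtained by finetuning, not by this closed-form rescaling, so treat the sketch as an analogy, not the actual procedure.

```python
def linear(weights, bias, inputs):
    """Apply y = W x + b using plain Python lists."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, bias)]


def forward(w1, b1, w2, b2, inputs):
    """Return (hidden activations, output) of a two-layer ReLU network."""
    hidden = [max(0.0, h) for h in linear(w1, b1, inputs)]
    return hidden, linear(w2, b2, hidden)


# Made-up parameters that produce hidden activations above the fp16 limit (65504).
w1 = [[300.0, -200.0], [150.0, 400.0]]
b1 = [50.0, -100.0]
w2 = [[0.01, 0.02]]
b2 = [0.5]
x = [300.0, 150.0]

s = 1.0 / 64.0  # scale factor for the first layer (a power of two keeps the arithmetic exact)
w1_small = [[w * s for w in row] for row in w1]
b1_small = [b * s for b in b1]
w2_comp = [[w / s for w in row] for row in w2]  # the second layer undoes the scaling

hidden, out = forward(w1, b1, w2, b2, x)
hidden_small, out_small = forward(w1_small, b1_small, w2_comp, b2, x)

print(max(hidden), max(hidden_small))  # the internal activations shrink
print(out, out_small)                  # the final output is unchanged
```

The rescaling is exact here because ReLU commutes with multiplication by a positive constant.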

There are slight discrepancies between the output of SDXL-VAE-FP16-Fix and SDXL-VAE, but the decoded images should be close enough for most purposes.
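If you want to check whether the outputs are "close enough" for your own use, one simple option is to decode the same latents with both VAEs and compare pixel values. The helper below is a hypothetical sketch that works on flat sequences of channel values (for example 0-255 integers). How you extract those values from your decoded images is left open, and the sample numbers are invented.

```python
def image_difference(pixels_a, pixels_b):
    """Return (mean absolute difference, max absolute difference) of two pixel sequences."""
    if len(pixels_a) != len(pixels_b):
        raise ValueError("both images must have the same number of values")
    diffs = [abs(a - b) for a, b in zip(pixels_a, pixels_b)]
    return sum(diffs) / len(diffs), max(diffs)


# Invented sample values standing in for two decodes of the same latents.
reference = [10, 200, 30, 255]
fixed = [12, 198, 30, 250]
mean_diff, max_diff = image_difference(reference, fixed)
print(mean_diff, max_diff)
```

What counts as an acceptable difference depends on your application, so no threshold is suggested here.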

* sdxl-vae-fp16-fix is specifically based on SDXL-VAE (0.9), but it works with SDXL 1.0 too