---
license: apache-2.0
library_name: peft
tags:
- falcon
- falcon-7b
- code
- code instruct
- instruct code
- code alpaca
- python code
- code copilot
- copilot
- python coding assistant
- coding assistant
datasets:
- iamtarun/python_code_instructions_18k_alpaca
base_model: tiiuae/falcon-7b
---

Training procedure

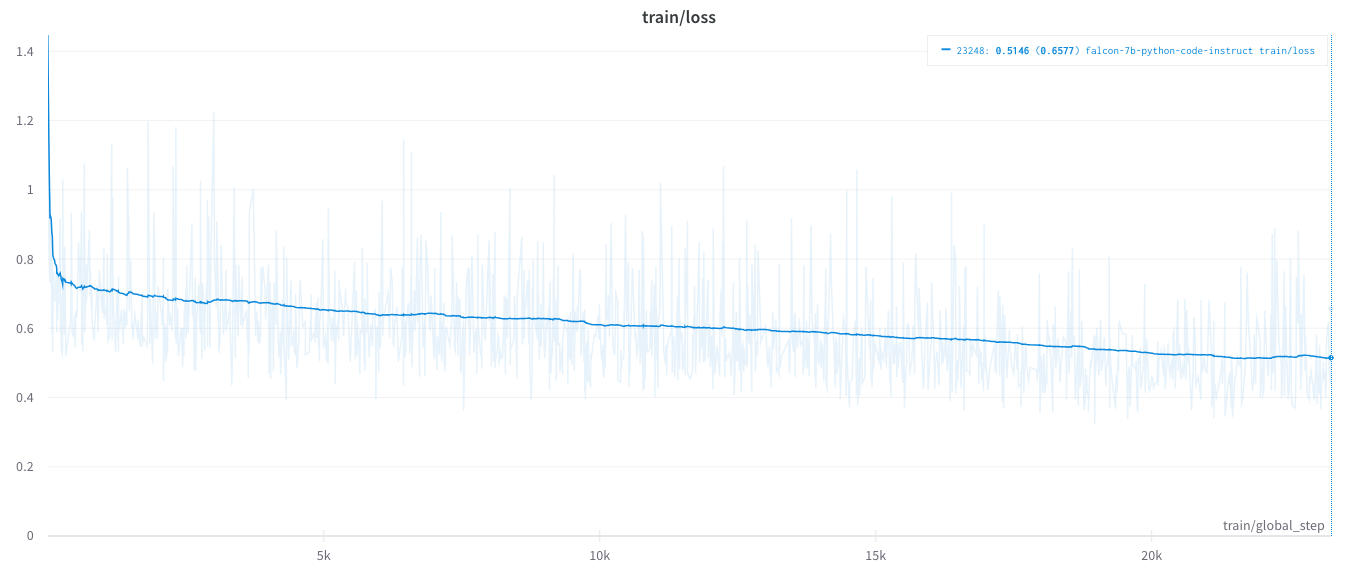

We finetuned the Falcon-7B LLM on the Python code instructions dataset (iamtarun/python_code_instructions_18k_alpaca) for 10 epochs, or about 23,000 steps, using MonsterAPI's no-code LLM finetuner.

The dataset contains problem descriptions paired with Python code. It is derived from sahil2801/code_instructions_120k and adds a prompt column in Alpaca style.
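To make the Alpaca-style prompt column concrete, here is a minimal sketch of how such a prompt is typically assembled. It uses the widely known Stanford Alpaca template; the helper name `build_alpaca_prompt` is hypothetical, and the exact wording stored in the dataset's prompt column is an assumption that may differ.

```python
def build_alpaca_prompt(instruction: str, input_text: str = "") -> str:
    """Build an Alpaca-style prompt (assumed template, not verified against the dataset)."""
    if input_text.strip():
        # Variant used when the example carries extra context in an input field.
        return (
            "Below is an instruction that describes a task, paired with an input "
            "that provides further context. Write a response that appropriately "
            "completes the request.\n\n"
            f"### Instruction:\n{instruction}\n\n"
            f"### Input:\n{input_text}\n\n"
            "### Response:\n"
        )
    # Variant used when there is only an instruction.
    return (
        "Below is an instruction that describes a task. Write a response that "
        "appropriately completes the request.\n\n"
        f"### Instruction:\n{instruction}\n\n"
        "### Response:\n"
    )


if __name__ == "__main__":
    print(build_alpaca_prompt("Write a Python function that reverses a string."))
```

At inference time, a model finetuned on prompts of this shape would usually be given the same layout, with the generated code expected after the `### Response:` marker.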

The finetuning session completed in 7.3 hours and cost us only $17.50 for the entire run!

Hyperparameters & Run details:

- Model Path: tiiuae/falcon-7b

- Dataset: iamtarun/python_code_instructions_18k_alpaca

- Learning rate: 0.0002

- Number of epochs: 10

- Data split: Training: 95% / Validation: 5%

- Gradient accumulation steps: 1
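The run details above can be related to a step count with a little arithmetic. The sketch below shows how a 95% / 5% split and the number of optimizer steps would usually be computed. The batch size is not stated in this card, so `per_device_batch_size` is a hypothetical parameter, and both function names are illustrative only.

```python
import math


def split_sizes(n_examples: int, train_fraction: float = 0.95) -> tuple[int, int]:
    """Return (train, validation) sizes for a given training fraction."""
    n_train = round(n_examples * train_fraction)
    return n_train, n_examples - n_train


def total_optimizer_steps(
    n_train: int,
    epochs: int,
    per_device_batch_size: int,  # hypothetical: not given in the run details
    grad_accum_steps: int = 1,
) -> int:
    """Optimizer steps = ceil(train examples / effective batch size) * epochs."""
    effective_batch = per_device_batch_size * grad_accum_steps
    steps_per_epoch = math.ceil(n_train / effective_batch)
    return steps_per_epoch * epochs


if __name__ == "__main__":
    # Toy numbers for illustration only, not the actual dataset size.
    n_train, n_val = split_sizes(1000)
    print(n_train, n_val)
    print(total_optimizer_steps(n_train, epochs=10, per_device_batch_size=8))
```

With gradient accumulation set to 1, as in this run, the effective batch size equals the per-device batch size on a single device.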

Framework versions

- PEFT 0.4.0