ManiBERT

This model is a fine-tuned version of roberta-base on data from the Manifesto Project.

Model description

This model was trained on 115,943 manually annotated sentences to classify text into one of 56 political categories. The categories and their numeric labels are listed in the table under Evaluation.

Intended uses & limitations

The model output reproduces the limitations of the dataset in terms of country coverage, time span, domain definitions and potential annotator biases, as any supervised machine learning model would. Applying the model to other types of data (other types of texts, countries etc.) will reduce performance. On the held-out Canadian test manifestos, for example, the micro F1-score is 0.48, compared with 0.60 on the validation data.

from transformers import pipeline
import pandas as pd

classifier = pipeline(
    task="text-classification",
    model="niksmer/ManiBERT")

# Load the text data you want to classify
text = pd.read_csv("example.csv")["text_you_want_to_classify"].to_list()

# Inference: returns one dict with "label" and "score" per input text
output = classifier(text)

# Print the first rows of the output
print(pd.DataFrame(output).head())
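The classifier returns one dictionary with a `label` and a `score` per input text. As a minimal sketch of how that output might be paired with the inputs and screened for uncertain predictions, the helpers below use only the standard library. The function names, the 0.5 threshold, and the example texts, labels and scores are all hypothetical stand-ins, not actual model output.

```python
from typing import Dict, List


def attach_predictions(texts: List[str], output: List[Dict]) -> List[Dict]:
    """Pair each input text with its predicted label and score."""
    if len(texts) != len(output):
        raise ValueError("texts and output must have the same length")
    return [
        {"text": t, "label": o["label"], "score": o["score"]}
        for t, o in zip(texts, output)
    ]


def low_confidence(rows: List[Dict], threshold: float = 0.5) -> List[Dict]:
    """Return the rows whose score falls below the (hypothetical) threshold."""
    return [r for r in rows if r["score"] < threshold]


# Stand-in for `classifier(text)`; these labels and scores are invented.
example_texts = ["We will invest in schools.", "Taxes must fall."]
example_output = [
    {"label": "Education Expansion", "score": 0.91},
    {"label": "Free Market Economy", "score": 0.42},
]

rows = attach_predictions(example_texts, example_output)
flagged = low_confidence(rows, threshold=0.5)
```

Given the modest per-class F1-scores reported under Evaluation, reviewing low-scoring predictions by hand may be worthwhile before aggregating results.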

Train Data

ManiBERT was trained on the English-speaking subset of the Manifesto Project Dataset (MPDS2021a): 115,943 sentences from 163 political manifestos in 7 English-speaking countries (Australia, Canada, Ireland, New Zealand, South Africa, United Kingdom, United States). The manifestos were published between 1992 and 2020.

| Country | Count manifestos | Count sentences | Time span |
|---|---|---|---|
| Australia | 18 | 14,887 | 2010-2016 |
| Ireland | 23 | 24,966 | 2007-2016 |
| Canada | 14 | 12,344 | 2004-2008 & 2015 |
| New Zealand | 46 | 35,079 | 1993-2017 |
| South Africa | 29 | 13,334 | 1994-2019 |
| USA | 9 | 13,188 | 1992 & 2004-2020 |
| United Kingdom | 34 | 30,936 | 1997-2019 |

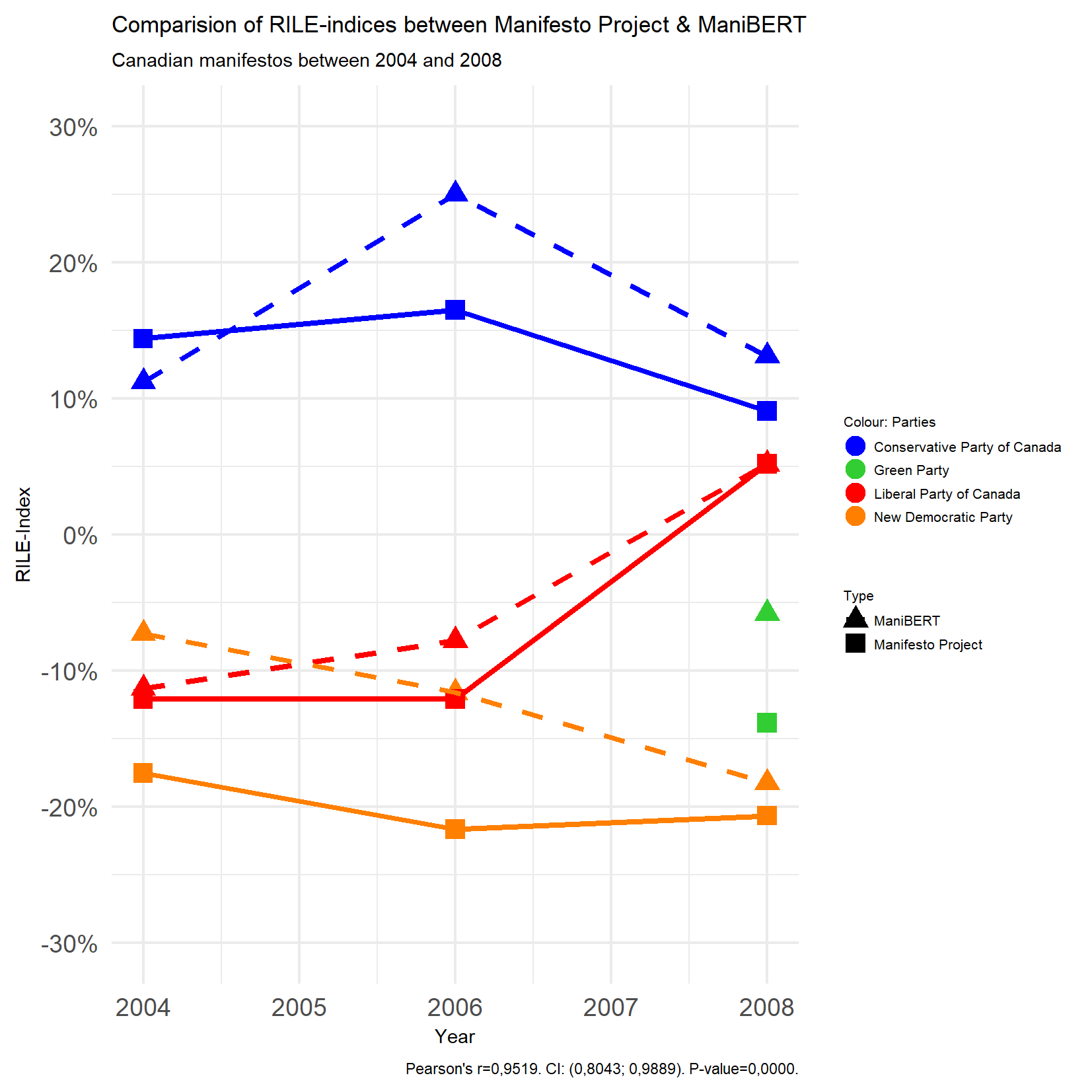

Canadian manifestos between 2004 and 2008 are used as test data.

The resulting datasets are highly imbalanced. See the per-category counts under Evaluation.
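To put a number on the imbalance, the sketch below takes the "Count Train Data" column from the Evaluation table (labels 0 to 55, in order) and compares the largest and smallest categories. The variable names are illustrative only. The counts sum to the 115,943 training sentences stated above.

```python
# "Count Train Data" column of the Evaluation table, ordered by label 0..55.
TRAIN_COUNTS = [
    545, 66, 93, 1969, 489, 418, 2401, 930, 209, 520,
    2196, 3045, 259, 380, 2791, 150, 3905, 900, 3488, 1768,
    3100, 3562, 533, 193, 633, 723, 817, 160, 3142, 8643,
    567, 832, 1721, 148, 2676, 6731, 2082, 6630, 13486, 926,
    7191, 154, 2105, 743, 1375, 291, 5582, 1348, 2006, 144,
    3856, 208, 2996, 271, 1417, 2429,
]

total = sum(TRAIN_COUNTS)
largest = max(TRAIN_COUNTS)
smallest = min(TRAIN_COUNTS)

# Labels (row indices) of the most and least frequent categories.
largest_label = TRAIN_COUNTS.index(largest)
smallest_label = TRAIN_COUNTS.index(smallest)

# How many times more training sentences the largest category has.
imbalance_ratio = largest / smallest

# Fraction of all training sentences that belong to the largest category.
largest_share = largest / total

print(f"total={total}, ratio={imbalance_ratio:.1f}, largest share={largest_share:.1%}")
```

The most frequent category (label 38, Welfare State Expansion) has roughly 200 times as many training sentences as the least frequent one (label 1, Foreign Special Relationships: Negative), which helps explain the low F1-scores for rare categories.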

Evaluation

| Description | Label | Count Train Data | Count Validation Data | Count Test Data | Validation F1-Score | Test F1-Score |
|---|---|---|---|---|---|---|
| Foreign Special Relationships: Positive | 0 | 545 | 96 | 60 | 0.43 | 0.45 |
| Foreign Special Relationships: Negative | 1 | 66 | 14 | 22 | 0.22 | 0.09 |
| Anti-Imperialism | 2 | 93 | 16 | 1 | 0.16 | 0.00 |
| Military: Positive | 3 | 1,969 | 356 | 159 | 0.69 | 0.63 |
| Military: Negative | 4 | 489 | 89 | 52 | 0.59 | 0.63 |
| Peace | 5 | 418 | 80 | 49 | 0.57 | 0.64 |
| Internationalism: Positive | 6 | 2,401 | 417 | 404 | 0.60 | 0.54 |
| European Community/Union or Latin America Integration: Positive | 7 | 930 | 156 | 20 | 0.58 | 0.32 |
| Internationalism: Negative | 8 | 209 | 40 | 57 | 0.28 | 0.05 |
| European Community/Union or Latin America Integration: Negative | 9 | 520 | 81 | 0 | 0.39 | - |
| Freedom and Human Rights | 10 | 2,196 | 389 | 76 | 0.50 | 0.34 |
| Democracy | 11 | 3,045 | 534 | 206 | 0.53 | 0.51 |
| Constitutionalism: Positive | 12 | 259 | 48 | 12 | 0.34 | 0.22 |
| Constitutionalism: Negative | 13 | 380 | 72 | 2 | 0.34 | 0.00 |
| Decentralisation: Positive | 14 | 2,791 | 481 | 331 | 0.49 | 0.45 |
| Centralisation: Positive | 15 | 150 | 33 | 71 | 0.11 | 0.00 |
| Governmental and Administrative Efficiency | 16 | 3,905 | 711 | 105 | 0.50 | 0.32 |
| Political Corruption | 17 | 900 | 186 | 234 | 0.59 | 0.55 |
| Political Authority | 18 | 3,488 | 627 | 300 | 0.51 | 0.39 |
| Free Market Economy | 19 | 1,768 | 309 | 53 | 0.40 | 0.16 |
| Incentives: Positive | 20 | 3,100 | 544 | 81 | 0.52 | 0.28 |
| Market Regulation | 21 | 3,562 | 616 | 210 | 0.50 | 0.36 |
| Economic Planning | 22 | 533 | 93 | 67 | 0.31 | 0.12 |
| Corporatism/Mixed Economy | 23 | 193 | 32 | 23 | 0.28 | 0.33 |
| Protectionism: Positive | 24 | 633 | 103 | 180 | 0.44 | 0.22 |
| Protectionism: Negative | 25 | 723 | 118 | 149 | 0.52 | 0.40 |
| Economic Goals | 26 | 817 | 139 | 148 | 0.05 | 0.00 |
| Keynesian Demand Management | 27 | 160 | 25 | 9 | 0.00 | 0.00 |
| Economic Growth: Positive | 28 | 3,142 | 607 | 374 | 0.53 | 0.30 |
| Technology and Infrastructure: Positive | 29 | 8,643 | 1,529 | 339 | 0.71 | 0.56 |
| Controlled Economy | 30 | 567 | 96 | 94 | 0.47 | 0.16 |
| Nationalisation | 31 | 832 | 157 | 27 | 0.56 | 0.16 |
| Economic Orthodoxy | 32 | 1,721 | 287 | 184 | 0.55 | 0.48 |
| Marxist Analysis: Positive | 33 | 148 | 33 | 0 | 0.20 | - |
| Anti-Growth Economy and Sustainability | 34 | 2,676 | 452 | 250 | 0.43 | 0.33 |
| Environmental Protection | 35 | 6,731 | 1,163 | 934 | 0.70 | 0.67 |
| Culture: Positive | 36 | 2,082 | 358 | 92 | 0.69 | 0.56 |
| Equality: Positive | 37 | 6,630 | 1,126 | 361 | 0.57 | 0.43 |
| Welfare State Expansion | 38 | 13,486 | 2,405 | 990 | 0.72 | 0.61 |
| Welfare State Limitation | 39 | 926 | 151 | 2 | 0.45 | 0.00 |
| Education Expansion | 40 | 7,191 | 1,324 | 274 | 0.78 | 0.63 |
| Education Limitation | 41 | 154 | 27 | 1 | 0.17 | 0.00 |
| National Way of Life: Positive | 42 | 2,105 | 385 | 395 | 0.48 | 0.34 |
| National Way of Life: Negative | 43 | 743 | 147 | 2 | 0.27 | 0.00 |
| Traditional Morality: Positive | 44 | 1,375 | 234 | 19 | 0.55 | 0.14 |
| Traditional Morality: Negative | 45 | 291 | 54 | 38 | 0.30 | 0.23 |
| Law and Order | 46 | 5,582 | 949 | 381 | 0.72 | 0.71 |
| Civic Mindedness: Positive | 47 | 1,348 | 229 | 27 | 0.45 | 0.28 |
| Multiculturalism: Positive | 48 | 2,006 | 355 | 71 | 0.61 | 0.35 |
| Multiculturalism: Negative | 49 | 144 | 31 | 7 | 0.33 | 0.00 |
| Labour Groups: Positive | 50 | 3,856 | 707 | 57 | 0.64 | 0.14 |
| Labour Groups: Negative | 51 | 208 | 35 | 0 | 0.44 | - |
| Agriculture and Farmers | 52 | 2,996 | 490 | 130 | 0.67 | 0.56 |
| Middle Class and Professional Groups | 53 | 271 | 38 | 12 | 0.38 | 0.40 |
| Underprivileged Minority Groups | 54 | 1,417 | 252 | 82 | 0.34 | 0.33 |
| Non-economic Demographic Groups | 55 | 2,429 | 435 | 106 | 0.42 | 0.24 |

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

from transformers import TrainingArguments

# Note: TrainingArguments also requires an output_dir argument,
# which is not given in this listing.
training_args = TrainingArguments(
    warmup_ratio=0.05,
    weight_decay=0.1,
    learning_rate=5e-05,
    fp16=True,
    evaluation_strategy="epoch",
    num_train_epochs=5,
    per_device_train_batch_size=16,
    overwrite_output_dir=True,
    per_device_eval_batch_size=16,
    save_strategy="no",
    logging_dir="logs",
    logging_strategy="steps",
    logging_steps=10,
    push_to_hub=True,
    hub_strategy="end")
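The Step column under Training results shows 1,812 optimizer steps per epoch. With 115,943 training sentences and `per_device_train_batch_size=16`, that is consistent with an effective batch size of 64, for example 4 devices. The device count is an inference from the numbers, not something this card states. The helper below is a hypothetical sketch of the arithmetic, assuming no gradient accumulation.

```python
import math


def steps_per_epoch(n_examples: int, per_device_batch_size: int, n_devices: int = 1) -> int:
    """Optimizer steps per epoch, assuming no gradient accumulation
    and that the last, smaller batch is kept."""
    effective_batch_size = per_device_batch_size * n_devices
    return math.ceil(n_examples / effective_batch_size)


N_TRAIN = 115_943          # training sentences, from the model description
PER_DEVICE_BATCH = 16      # per_device_train_batch_size
ASSUMED_DEVICES = 4        # assumption: inferred, not stated in the card
EPOCHS = 5                 # num_train_epochs

per_epoch = steps_per_epoch(N_TRAIN, PER_DEVICE_BATCH, ASSUMED_DEVICES)
total_steps = per_epoch * EPOCHS
single_device = steps_per_epoch(N_TRAIN, PER_DEVICE_BATCH, 1)

print(per_epoch, total_steps, single_device)
```

On a single device the same settings would give 7,247 steps per epoch, so reproducing the reported schedule exactly may require matching the effective batch size.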

Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1-micro | F1-macro | F1-weighted | Precision | Recall |
|---|---|---|---|---|---|---|---|---|---|
| 1.7638 | 1.0 | 1812 | 1.6471 | 0.5531 | 0.5531 | 0.3354 | 0.5368 | 0.5531 | 0.5531 |
| 1.4501 | 2.0 | 3624 | 1.5167 | 0.5807 | 0.5807 | 0.3921 | 0.5655 | 0.5807 | 0.5807 |
| 1.0638 | 3.0 | 5436 | 1.5017 | 0.5893 | 0.5893 | 0.4240 | 0.5789 | 0.5893 | 0.5893 |
| 0.9263 | 4.0 | 7248 | 1.5173 | 0.5975 | 0.5975 | 0.4499 | 0.5901 | 0.5975 | 0.5975 |
| 0.7859 | 5.0 | 9060 | 1.5574 | 0.5978 | 0.5978 | 0.4564 | 0.5903 | 0.5978 | 0.5978 |
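In every row above, Accuracy, F1-micro, Precision and Recall are identical. That is what micro-averaging produces when each sentence has exactly one true label and one predicted label, which suggests the Precision and Recall columns are micro-averaged. The sketch below illustrates the identity with invented labels. It is not the evaluation code used for this model.

```python
from typing import List, Tuple


def accuracy(y_true: List[int], y_pred: List[int]) -> float:
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def micro_scores(y_true: List[int], y_pred: List[int]) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall and F1: pool TP/FP/FN over all classes."""
    tp = fp = fn = 0
    for label in set(y_true) | set(y_pred):
        for t, p in zip(y_true, y_pred):
            if p == label and t == label:
                tp += 1
            elif p == label:
                fp += 1   # predicted this class, but the truth is another
            elif t == label:
                fn += 1   # truth is this class, but another was predicted
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Invented toy labels (integers as in the Evaluation table).
y_true = [38, 38, 46, 35, 40, 1]
y_pred = [38, 46, 46, 35, 38, 38]

acc = accuracy(y_true, y_pred)
precision, recall, f1 = micro_scores(y_true, y_pred)
```

Each wrong prediction counts once as a false positive (for the predicted class) and once as a false negative (for the true class), so the pooled precision and recall both equal the share of correct predictions. F1-macro is the more informative column for judging performance on rare categories.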

Overall evaluation

| Type | Micro F1-Score | Macro F1-Score | Weighted F1-Score |
|---|---|---|---|
| Validation | 0.60 | 0.46 | 0.59 |
| Test | 0.48 | 0.30 | 0.47 |
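The macro F1-score is the unweighted mean of the per-category F1-scores, so every category counts equally regardless of its size. As a consistency check, the sketch below averages the "Validation F1-Score" column of the per-category Evaluation table (labels 0 to 55, in order). The variable names are illustrative. Because the table values are rounded to two decimals, the result is approximate.

```python
# "Validation F1-Score" column of the Evaluation table, ordered by label 0..55.
VALIDATION_F1 = [
    0.43, 0.22, 0.16, 0.69, 0.59, 0.57, 0.60, 0.58, 0.28, 0.39,
    0.50, 0.53, 0.34, 0.34, 0.49, 0.11, 0.50, 0.59, 0.51, 0.40,
    0.52, 0.50, 0.31, 0.28, 0.44, 0.52, 0.05, 0.00, 0.53, 0.71,
    0.47, 0.56, 0.55, 0.20, 0.43, 0.70, 0.69, 0.57, 0.72, 0.45,
    0.78, 0.17, 0.48, 0.27, 0.55, 0.30, 0.72, 0.45, 0.61, 0.33,
    0.64, 0.44, 0.67, 0.38, 0.34, 0.42,
]

# Macro F1: plain mean over categories, ignoring category size.
macro_f1 = sum(VALIDATION_F1) / len(VALIDATION_F1)

print(f"validation macro F1 = {macro_f1:.2f}")
```

The mean agrees with the 0.46 reported for validation. The gap between macro (0.46) and micro (0.60) scores reflects the weak performance on small categories.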

Evaluation based on saliency theory

Saliency theory is an approach to analysing political text data. In short, parties tend to write about policies on which they believe they are seen as competent, and voters tend to attribute policy competence in line with a party's assumed ideology. The share of each policy area in a party's manifesto can therefore be used to analyse that party's ideology.
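A share-based analysis of this kind can be sketched in a few lines: count how often each category is predicted in a manifesto and divide by the number of sentences. The function name and the example labels below are hypothetical. In practice the labels would come from the classifier output for one manifesto.

```python
from collections import Counter
from typing import Dict, List


def category_shares(labels: List[str]) -> Dict[str, float]:
    """Share of sentences assigned to each category within one manifesto."""
    total = len(labels)
    if total == 0:
        return {}
    counts = Counter(labels)
    return {label: n / total for label, n in counts.items()}


# Invented predictions for four sentences of one manifesto.
example_labels = [
    "Welfare State Expansion",
    "Welfare State Expansion",
    "Law and Order",
    "Environmental Protection",
]

shares = category_shares(example_labels)
```

Comparing these shares across parties or over time is the basis for indices such as the rile-index.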

For this kind of analysis, the Manifesto Project introduced the rile-index. For a quick overview, check this.

In the following plot, the predicted and original rile-indices are shown per manifesto in the test dataset. Overall, the Pearson correlation between the predicted and original rile-indices is 0.95. As an alternative, you can use RoBERTa-RILE.
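As a rough sketch of how a rile-index could be derived from the predictions, the code below subtracts the share of "left" categories from the share of "right" categories, in percentage points. The two category sets follow the commonly cited rile definition, mapped onto the integer labels of the Evaluation table. Both the mapping and the assumption that predictions are available as those integers should be checked against the Manifesto Project codebook. This is not the code used to produce the reported correlation.

```python
from typing import List

# Assumed "right" categories: Military: Positive, Freedom and Human Rights,
# Constitutionalism: Positive, Political Authority, Free Market Economy,
# Incentives: Positive, Protectionism: Negative, Economic Orthodoxy,
# Welfare State Limitation, National Way of Life: Positive,
# Traditional Morality: Positive, Law and Order, Civic Mindedness: Positive.
RIGHT_LABELS = frozenset({3, 10, 12, 18, 19, 20, 25, 32, 39, 42, 44, 46, 47})

# Assumed "left" categories: Anti-Imperialism, Military: Negative, Peace,
# Internationalism: Positive, Democracy, Market Regulation, Economic Planning,
# Protectionism: Positive, Controlled Economy, Nationalisation,
# Welfare State Expansion, Education Expansion, Labour Groups: Positive.
LEFT_LABELS = frozenset({2, 4, 5, 6, 11, 21, 22, 24, 30, 31, 38, 40, 50})


def rile_index(predicted_labels: List[int]) -> float:
    """Right share minus left share, in percentage points (-100 to 100)."""
    total = len(predicted_labels)
    if total == 0:
        raise ValueError("need at least one predicted label")
    right = sum(1 for label in predicted_labels if label in RIGHT_LABELS)
    left = sum(1 for label in predicted_labels if label in LEFT_LABELS)
    return 100.0 * (right - left) / total


# Invented example: two Law and Order sentences, one Welfare State Expansion,
# one Environmental Protection (neither left nor right in this definition).
example_rile = rile_index([46, 46, 38, 35])
```

Positive values indicate a manifesto that leans right under this definition, negative values one that leans left.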

Framework versions

- Transformers 4.16.2

- PyTorch 1.9.0+cu102

- Datasets 1.8.0

- Tokenizers 0.10.3
