Cosmo-demo

|

|

|

|

|

|

|---|---|---|---|---|---|

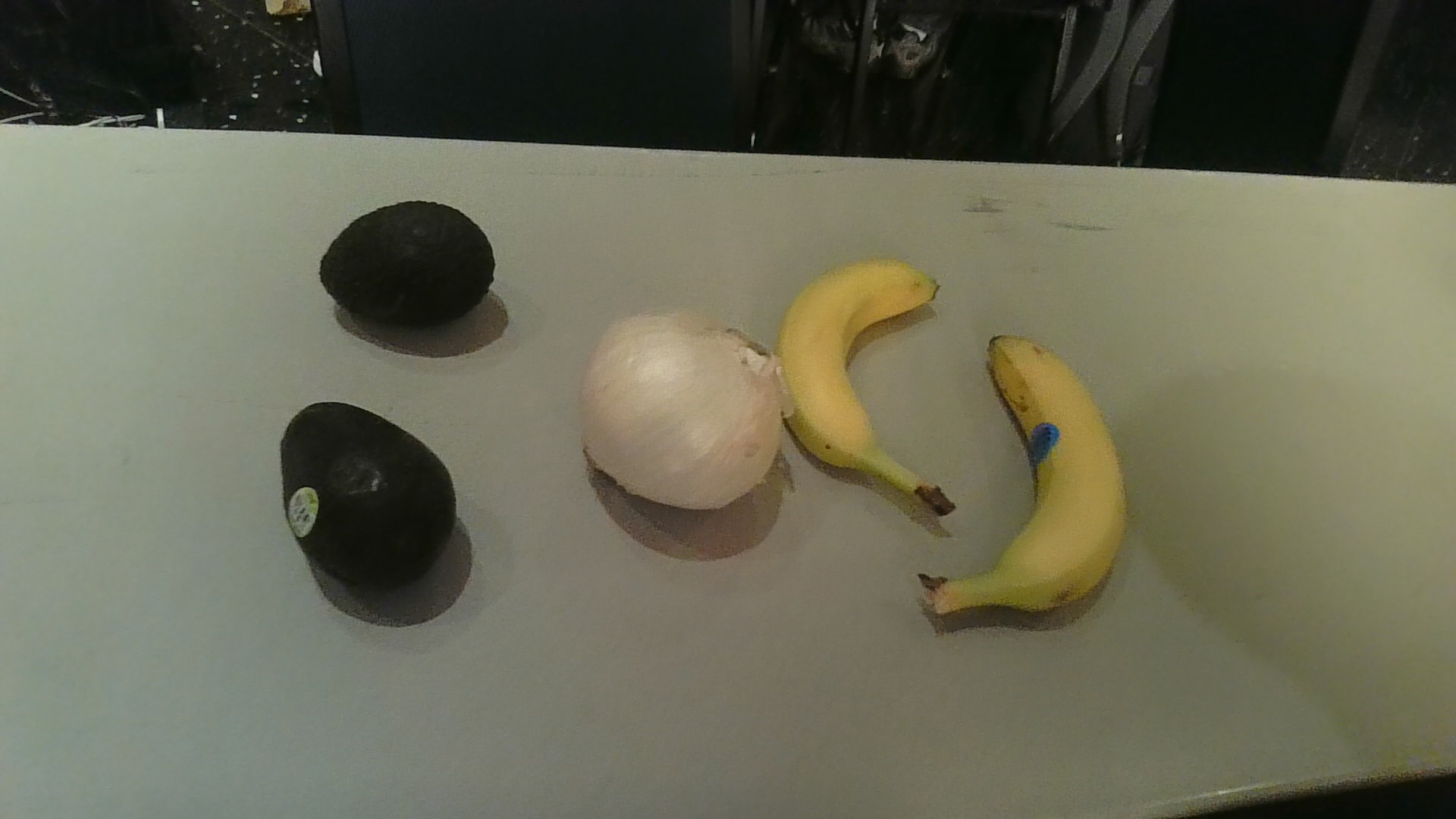

| Model Output: 1 onion | Model Output: 1 avocado | Model Output: 2 banana, 1 dorito | Model Output: 5 banana | Model Output: 1 avocado, 1 dorito | Model Output: 2 avocado, 2 banana, 1 onion |

Development Process

This model was created to supplement our mobile application as an ingredient counter, so that a user could take a photo and have AI determine whether they have the ingredients needed for a recipe. Because the use case is straightforward, we chose a lightweight model for this classification task. A mentor from Intel recommended Moondream, which we fine-tuned in this project.
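One way the app could turn the model's answer into a recipe check is sketched below. It assumes the model returns a plain string in the format of the example outputs above (e.g. `2 avocado, 2 banana, 1 onion`, without the `Model Output:` display label) and that a recipe is a hypothetical mapping from ingredient name to required count. `parse_counts` and `missing_ingredients` are illustrative names, not part of Moondream or of our app.

```python
import re
from collections import Counter

# One "<count> <ingredient>" item, e.g. "2 banana" (assumed output format).
ITEM_RE = re.compile(r"^\s*(\d+)\s+([a-z]+)\s*$")


def parse_counts(model_output: str) -> Counter:
    """Parse a string like "2 banana, 1 dorito" into ingredient counts."""
    counts: Counter = Counter()
    for part in model_output.lower().split(","):
        if not part.strip():
            continue  # tolerate empty items such as a trailing comma
        match = ITEM_RE.match(part)
        if match is None:
            raise ValueError(f"unrecognized item: {part!r}")
        counts[match.group(2)] += int(match.group(1))
    return counts


def missing_ingredients(model_output: str, recipe: dict[str, int]) -> dict[str, int]:
    """Return how many more of each ingredient the (hypothetical) recipe still needs."""
    have = parse_counts(model_output)
    # A Counter returns 0 for ingredients the model did not report.
    return {name: need - have[name] for name, need in recipe.items() if have[name] < need}


# Hypothetical recipe: guacamole needing 3 avocados and 1 onion.
print(missing_ingredients("2 avocado, 2 banana, 1 onion", {"avocado": 3, "onion": 1}))  # {'avocado': 1}
```

An empty result means the photo already contains everything the recipe asks for.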

While exploring potential datasets, we found no existing data that combined several ingredients in the same scene with quantity labels for each ingredient. We therefore worked with a small subset of ingredients, to keep our demo model's behavior predictable and to demonstrate that the feature works. During the hackathon, we bought three produce items and a bag of chips from a local grocery store, photographed them, and labeled the quantity of each item.

Intel Tools

We used the Jupyter server provided by Intel for fine-tuning, and deployed the model on an Intel Developer Cloud (IDC) compute instance (Small VM, 4th Gen Intel® Xeon® Scalable processor) that connects to our frontend.

We combined the gemma_xpu_finetuning.ipynb notebook with a fine-tuning script for Moondream so that we could fine-tune Moondream with an Intel® Extension for PyTorch (IPEX) configuration on Intel servers.

Model Details

- Model Name: Moondream Fine-tuned Variant

- Task: Ingredient Classification and Counting

- Repository Name: nyap/cosmo-demo

- Original Model: vikhyatk/moondream2

Intended Use

- Primary Use Case: This model counts and classifies ingredients in images. For the CalHacks demo, it recognizes only avocados, bananas, Doritos, and onions.

- Associated Application: This model is used in a cooking-assistant mobile application built with React Native.

Data

- Dataset: A very small dataset of just under 100 images collected during the hackathon, showing avocados, bananas, Doritos, and onions from various angles and in different combinations.

- Data Collection: We photographed the ingredients arranged on various tables, then manually labeled each image with the type and count of every ingredient in it.
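The manual labels can be kept as per-image counts and rendered into the answer string the model is trained to produce. The sketch below assumes singular ingredient names listed alphabetically, which is what the example outputs above appear to use; the exact label format used in training is not documented here, and `format_label` and `KNOWN_INGREDIENTS` are hypothetical names.

```python
# The four ingredients photographed for the demo (assumed names, as they appear in model outputs).
KNOWN_INGREDIENTS = {"avocado", "banana", "dorito", "onion"}


def format_label(counts: dict[str, int]) -> str:
    """Render {"banana": 2, "dorito": 1} as the target string "2 banana, 1 dorito"."""
    unknown = set(counts) - KNOWN_INGREDIENTS
    if unknown:
        raise ValueError(f"unknown ingredients: {sorted(unknown)}")
    if any(n < 0 for n in counts.values()):
        raise ValueError("counts must be non-negative")
    # Drop zero counts and list the remaining items alphabetically by name.
    items = sorted((name, n) for name, n in counts.items() if n > 0)
    return ", ".join(f"{n} {name}" for name, n in items)


print(format_label({"onion": 1, "avocado": 2, "banana": 2}))  # 2 avocado, 2 banana, 1 onion
```

Generating the target strings from counts, rather than typing them by hand, keeps item order and spelling consistent across all labels.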

Evaluation Metrics

- Performance Metrics: The model achieved perfect accuracy on our small test set, with a negligible cross-entropy loss (2.64e-7).
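The card does not say how accuracy was computed. One reasonable definition for this task is exact match on the parsed counts, so that item order in the answer does not matter. The sketch below assumes the comma-separated `<count> <ingredient>` output format; `parse_counts` and `exact_match_accuracy` are hypothetical helpers.

```python
from collections import Counter


def parse_counts(text: str) -> Counter:
    """Parse "2 banana, 1 dorito" into Counter({"banana": 2, "dorito": 1})."""
    counts: Counter = Counter()
    for part in text.split(","):
        part = part.strip()
        if part:
            count, name = part.split(maxsplit=1)
            counts[name.strip().lower()] += int(count)
    return counts


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of images whose predicted counts equal the labeled counts exactly."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("need at least one example")
    hits = sum(parse_counts(p) == parse_counts(r) for p, r in zip(predictions, references))
    return hits / len(references)


# Order-insensitive: "1 dorito, 2 banana" matches "2 banana, 1 dorito".
print(exact_match_accuracy(["1 dorito, 2 banana", "1 avocado"], ["2 banana, 1 dorito", "2 avocado"]))  # 0.5
```

For scale, if the reported loss is a mean per-token cross entropy in nats, exp(-2.64e-7) ≈ 0.99999974 is the geometric-mean probability the model assigned to the correct tokens.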

Training

- Training Procedure: We wrote a basic dataloader class that reads each image and its expected output. The PIL images were passed through the vision transformer (ViT) encoder that ships with Moondream, and Moondream was then fine-tuned with the default Adam optimizer, a batch size of 8, for 30 epochs.

- Hardware: The model was trained with XPU (Intel(R) Data Center GPU Max 1100) provided by Intel mentors at the Berkeley AI Hackathon.
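The dataloader is described only briefly above. A minimal, framework-free sketch of the same idea follows, assuming the labels live in a CSV with hypothetical `image` and `answer` columns; it shuffles the samples each epoch and yields batches of 8 for 30 epochs, matching the stated settings. In the real pipeline each image would be opened with PIL and encoded by Moondream's vision encoder, and the training step would run on the XPU; those parts are left as a comment rather than guessed.

```python
import csv
import io
import random
from typing import Iterator

Sample = tuple[str, str]  # (image path, expected answer string)

BATCH_SIZE = 8
EPOCHS = 30


class IngredientDataset:
    """Reads (image, answer) pairs from CSV text with hypothetical columns "image,answer"."""

    def __init__(self, csv_text: str) -> None:
        reader = csv.DictReader(io.StringIO(csv_text))
        self.samples: list[Sample] = [(row["image"], row["answer"]) for row in reader]

    def __len__(self) -> int:
        return len(self.samples)

    def __getitem__(self, idx: int) -> Sample:
        return self.samples[idx]


def batches(dataset: IngredientDataset, batch_size: int = BATCH_SIZE,
            seed: int = 0) -> Iterator[list[Sample]]:
    """Yield shuffled batches; the last batch may be smaller than batch_size."""
    indices = list(range(len(dataset)))
    random.Random(seed).shuffle(indices)  # seeded, so each epoch's order is reproducible
    for start in range(0, len(indices), batch_size):
        yield [dataset[i] for i in indices[start:start + batch_size]]


def train(dataset: IngredientDataset) -> int:
    """Skeleton loop: returns the number of optimizer steps it would take."""
    steps = 0
    for epoch in range(EPOCHS):
        for _batch in batches(dataset, seed=epoch):
            # Real code would open each image with PIL, encode it with Moondream's
            # vision encoder, compute the loss on the answer tokens, and step Adam.
            steps += 1
    return steps
```

For example, a hypothetical set of 96 training images gives 12 batches per epoch, or 360 optimizer steps over 30 epochs.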

Limitations

- Current Limitations: The model may struggle with images containing overlapping ingredients or items not seen during training. Because its dataset is small, ingredients photographed against different backgrounds, or different varieties of an ingredient, would likely not be counted correctly.

- Potential Improvements: Train on a full-scale dataset with labeled ingredient counts to make the model more knowledgeable and more useful within our application, and tune hyperparameters beyond what was possible during the hackathon.

Additional Information

- License: Model released under MIT License.

- Contact Information: For inquiries, contact nyap@umich.edu.

- Acknowledgments: We thank the Intel team for the starter code and for their help with fine-tuning.
