|

--- |

|

language: |

|

- multilingual |

|

- en |

|

- de |

|

- fr |

|

- ja |

|

license: mit |

|

tags: |

|

- object-detection |

|

- vision |

|

- generated_from_trainer |

|

- DocLayNet |

|

- LayoutXLM |

|

- COCO |

|

- PDF |

|

- IBM |

|

- Financial-Reports |

|

- Finance |

|

- Manuals |

|

- Scientific-Articles |

|

- Science |

|

- Laws |

|

- Law |

|

- Regulations |

|

- Patents |

|

- Government-Tenders |

|

- object-detection |

|

- image-segmentation |

|

- token-classification |

|

inference: false |

|

datasets: |

|

- pierreguillou/DocLayNet-base |

|

metrics: |

|

- precision |

|

- recall |

|

- f1 |

|

- accuracy |

|

model-index: |

|

- name: pierreguillou/layout-xlm-base-finetuned-with-DocLayNet-base-at-paragraphlevel-ml512 |

|

results: |

|

- task: |

|

name: Token Classification |

|

type: token-classification |

|

metrics: |

|

- name: f1 |

|

type: f1 |

|

value: 0.7739 |

|

- name: accuracy |

|

type: accuracy |

|

value: 0.8655 |

|

--- |

|

|

|

# Document Understanding model (finetuned LiLT base at paragraph level on DocLayNet base) |

|

|

|

This model is a fine-tuned version of [microsoft/layoutxlm-base](https://huggingface.co/microsoft/layoutxlm-base) with the [DocLayNet base](https://huggingface.co/datasets/pierreguillou/DocLayNet-base) dataset. |

|

It achieves the following results on the evaluation set: |

|

|

|

- Loss: 0.1796 |

|

- Precision: 0.8062 |

|

- Recall: 0.7441 |

|

- F1: 0.7739 |

|

- Token Accuracy: 0.9693 |

|

- Paragraph Accuracy: 0.87 |

|

|

|

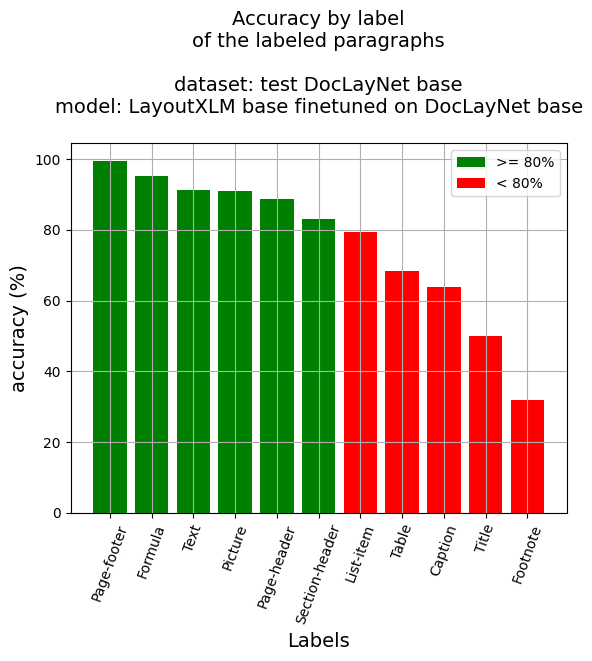

## Accuracy at paragraph level |

|

|

|

|

|

|

|

Paragraph Accuracy: 86.55% |

|

|

|

|

|

|

|

Accuracy by label |

|

- Caption: 63.76% |

|

- Footnote: 31.91% |

|

- Formula: 95.33% |

|

- List-item: 79.31% |

|

- Page-footer: 99.51% |

|

- Page-header: 88.75% |

|

- Picture: 90.91% |

|

- Section-header: 83.16% |

|

- Table: 68.25% |

|

- Text: 91.37% |

|

- Title: 50.0% |

|

|

|

## References |

|

|

|

### Other models |

|

|

|

- LayoutXLM base |

|

- [Document Understanding model (at paragraph level)](https://huggingface.co/pierreguillou/layout-xlm-base-finetuned-with-DocLayNet-base-at-paragraphlevel-ml512) |

|

- [Document Understanding model (at paragraph level)](https://huggingface.co/pierreguillou/layout-xlm-base-finetuned-with-DocLayNet-base-at-linelevel-ml384) |

|

- LiLT base |

|

- [Document Understanding model (at line level)](https://huggingface.co/pierreguillou/layout-xlm-base-finetuned-with-DocLayNet-base-at-linelevel-ml384) |

|

- [Document Understanding model (at paragraph level)](https://huggingface.co/pierreguillou/lilt-xlm-roberta-base-finetuned-with-DocLayNet-base-at-paragraphlevel-ml512) |

|

- [Document Understanding model (at line level)](https://huggingface.co/pierreguillou/lilt-xlm-roberta-base-finetuned-with-DocLayNet-base-at-linelevel-ml384) |

|

|

|

### Blog posts |

|

|

|

- Layout XLM base |

|

- (03/31/2023) [Document AI | Inference APP and fine-tuning notebook for Document Understanding at paragraph level with LayoutXLM base](https://medium.com/@pierre_guillou/document-ai-inference-app-and-fine-tuning-notebook-for-document-understanding-at-paragraph-level-3507af80573d) |

|

- (03/25/2023) [Document AI | APP to compare the Document Understanding LiLT and LayoutXLM (base) models at line level](https://medium.com/@pierre_guillou/document-ai-app-to-compare-the-document-understanding-lilt-and-layoutxlm-base-models-at-line-1c53eb481a15) |

|

- (03/05/2023) [Document AI | Inference APP and fine-tuning notebook for Document Understanding at line level with LayoutXLM base](https://medium.com/@pierre_guillou/document-ai-inference-app-and-fine-tuning-notebook-for-document-understanding-at-line-level-with-b08fdca5f4dc) |

|

- LiLT base |

|

- (02/16/2023) [Document AI | Inference APP and fine-tuning notebook for Document Understanding at paragraph level](https://medium.com/@pierre_guillou/document-ai-inference-app-and-fine-tuning-notebook-for-document-understanding-at-paragraph-level-c18d16e53cf8) |

|

- (02/14/2023) [Document AI | Inference APP for Document Understanding at line level](https://medium.com/@pierre_guillou/document-ai-inference-app-for-document-understanding-at-line-level-a35bbfa98893) |

|

- (02/10/2023) [Document AI | Document Understanding model at line level with LiLT, Tesseract and DocLayNet dataset](https://medium.com/@pierre_guillou/document-ai-document-understanding-model-at-line-level-with-lilt-tesseract-and-doclaynet-dataset-347107a643b8) |

|

- (01/31/2023) [Document AI | DocLayNet image viewer APP](https://medium.com/@pierre_guillou/document-ai-doclaynet-image-viewer-app-3ac54c19956) |

|

- (01/27/2023) [Document AI | Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)](https://medium.com/@pierre_guillou/document-ai-processing-of-doclaynet-dataset-to-be-used-by-layout-models-of-the-hugging-face-hub-308d8bd81cdb) |

|

|

|

### Notebooks (paragraph level) |

|

- Layout XLM base |

|

- [Document AI | Inference at paragraph level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/inference_on_LayoutXLM_base_model_finetuned_on_DocLayNet_base_in_any_language_at_levelparagraphs_ml512.ipynb) |

|

- [Document AI | Inference APP at paragraph level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet base dataset)](https://github.com/piegu/language-models/blob/master/Gradio_inference_on_LayoutXLM_base_model_finetuned_on_DocLayNet_base_in_any_language_at_levelparagraphs_ml512.ipynb) |

|

- [Document AI | Fine-tune LayoutXLM base on DocLayNet base in any language at paragraph level (chunk of 512 tokens with overlap)](https://github.com/piegu/language-models/blob/master/Fine_tune_LayoutXLM_base_on_DocLayNet_base_in_any_language_at_paragraphlevel_ml_512.ipynb) |

|

- LiLT base |

|

- [Document AI | Inference APP at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/Gradio_inference_on_LiLT_model_finetuned_on_DocLayNet_base_in_any_language_at_levelparagraphs_ml512.ipynb) |

|

- [Document AI | Inference at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/inference_on_LiLT_model_finetuned_on_DocLayNet_base_in_any_language_at_levelparagraphs_ml512.ipynb) |

|

- [Document AI | Fine-tune LiLT on DocLayNet base in any language at paragraph level (chunk of 512 tokens with overlap)](https://github.com/piegu/language-models/blob/master/Fine_tune_LiLT_on_DocLayNet_base_in_any_language_at_paragraphlevel_ml_512.ipynb) |

|

|

|

### Notebooks (line level) |

|

- Layout XLM base |

|

- [Document AI | Inference APP at line level with 2 Document Understanding models (LiLT and LayoutXLM base fine-tuned on DocLayNet base dataset)](https://github.com/piegu/language-models/blob/master/Gradio_inference_on_LiLT_&_LayoutXLM_base_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb) |

|

- [Document AI | Inference at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/inference_on_LayoutXLM_base_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb) |

|

- [Document AI | Inference APP at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet base dataset)](https://github.com/piegu/language-models/blob/master/Gradio_inference_on_LayoutXLM_base_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb) |

|

- [Document AI | Fine-tune LayoutXLM base on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)](https://github.com/piegu/language-models/blob/master/Fine_tune_LayoutXLM_base_on_DocLayNet_base_in_any_language_at_linelevel_ml_384.ipynb) |

|

- LiLT base |

|

- [Document AI | Inference at line level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/inference_on_LiLT_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb) |

|

- [Document AI | Inference APP at line level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/Gradio_inference_on_LiLT_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb) |

|

- [Document AI | Fine-tune LiLT on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)](https://github.com/piegu/language-models/blob/master/Fine_tune_LiLT_on_DocLayNet_base_in_any_language_at_linelevel_ml_384.ipynb) |

|

- [DocLayNet image viewer APP](https://github.com/piegu/language-models/blob/master/DocLayNet_image_viewer_APP.ipynb) |

|

- [Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)](processing_DocLayNet_dataset_to_be_used_by_layout_models_of_HF_hub.ipynb) |

|

|

|

## APP |

|

|

|

You can test this model with this APP in Hugging Face Spaces: [Inference APP for Document Understanding at paragraph level (v2)](https://huggingface.co/spaces/pierreguillou/Inference-APP-Document-Understanding-at-paragraphlevel-v2). |

|

|

|

|

|

|

|

You can run as well the corresponding notebook: [Document AI | Inference APP at paragraph level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)]() |

|

|

|

## DocLayNet dataset |

|

|

|

[DocLayNet dataset](https://github.com/DS4SD/DocLayNet) (IBM) provides page-by-page layout segmentation ground-truth using bounding-boxes for 11 distinct class labels on 80863 unique pages from 6 document categories. |

|

|

|

Until today, the dataset can be downloaded through direct links or as a dataset from Hugging Face datasets: |

|

- direct links: [doclaynet_core.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_core.zip) (28 GiB), [doclaynet_extra.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_extra.zip) (7.5 GiB) |

|

- Hugging Face dataset library: [dataset DocLayNet](https://huggingface.co/datasets/ds4sd/DocLayNet) |

|

|

|

Paper: [DocLayNet: A Large Human-Annotated Dataset for Document-Layout Analysis](https://arxiv.org/abs/2206.01062) (06/02/2022) |

|

|

|

## Model description |

|

|

|

The model was finetuned at **paragraph level on chunk of 512 tokens with overlap of 128 tokens**. Thus, the model was trained with all layout and text data of all pages of the dataset. |

|

|

|

At inference time, a calculation of best probabilities give the label to each paragraph bounding boxes. |

|

|

|

## Inference |

|

|

|

See notebook: [Document AI | Inference at paragraph level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)]() |

|

|

|

## Training and evaluation data |

|

|

|

See notebook: [Document AI | Fine-tune LayoutXLM base on DocLayNet base in any language at paragraph level (chunk of 512 tokens with overlap)]() |

|

|

|

## Training procedure |

|

|

|

### Training hyperparameters |

|

|

|

The following hyperparameters were used during training: |

|

- learning_rate: 2e-05 |

|

- train_batch_size: 8 |

|

- eval_batch_size: 16 |

|

- seed: 42 |

|

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08 |

|

- lr_scheduler_type: linear |

|

- lr_scheduler_warmup_ratio: 0.1 |

|

- num_epochs: 4 |

|

- mixed_precision_training: Native AMP |

|

|

|

### Training results |

|

|

|

| Training Loss | Epoch | Step | Accuracy | F1 | Validation Loss | Precision | Recall | |

|

|:-------------:|:-----:|:----:|:--------:|:------:|:---------------:|:---------:|:------:| |

|

| No log | 0.11 | 200 | 0.8842 | 0.1066 | 0.4428 | 0.1154 | 0.0991 | |

|

| No log | 0.21 | 400 | 0.9243 | 0.4440 | 0.3040 | 0.4548 | 0.4336 | |

|

| 0.7241 | 0.32 | 600 | 0.9359 | 0.5544 | 0.2265 | 0.5330 | 0.5775 | |

|

| 0.7241 | 0.43 | 800 | 0.9479 | 0.6015 | 0.2140 | 0.6013 | 0.6017 | |

|

| 0.2343 | 0.53 | 1000 | 0.9402 | 0.6132 | 0.2852 | 0.6642 | 0.5695 | |

|

| 0.2343 | 0.64 | 1200 | 0.9540 | 0.6604 | 0.1694 | 0.6565 | 0.6644 | |

|

| 0.2343 | 0.75 | 1400 | 0.9354 | 0.6198 | 0.2308 | 0.5119 | 0.7854 | |

|

| 0.1913 | 0.85 | 1600 | 0.9594 | 0.6590 | 0.1601 | 0.7190 | 0.6082 | |

|

| 0.1913 | 0.96 | 1800 | 0.9541 | 0.6597 | 0.1671 | 0.5790 | 0.7664 | |

|

| 0.1346 | 1.07 | 2000 | 0.9612 | 0.6986 | 0.1580 | 0.6838 | 0.7140 | |

|

| 0.1346 | 1.17 | 2200 | 0.9597 | 0.6897 | 0.1423 | 0.6618 | 0.7200 | |

|

| 0.1346 | 1.28 | 2400 | 0.9663 | 0.6980 | 0.1580 | 0.7490 | 0.6535 | |

|

| 0.098 | 1.39 | 2600 | 0.9616 | 0.6800 | 0.1394 | 0.7044 | 0.6573 | |

|

| 0.098 | 1.49 | 2800 | 0.9686 | 0.7251 | 0.1756 | 0.6893 | 0.7649 | |

|

| 0.0999 | 1.6 | 3000 | 0.9636 | 0.6985 | 0.1542 | 0.7127 | 0.6848 | |

|

| 0.0999 | 1.71 | 3200 | 0.9670 | 0.7097 | 0.1187 | 0.7538 | 0.6705 | |

|

| 0.0999 | 1.81 | 3400 | 0.9585 | 0.7427 | 0.1793 | 0.7602 | 0.7260 | |

|

| 0.0972 | 1.92 | 3600 | 0.9621 | 0.7189 | 0.1836 | 0.7576 | 0.6839 | |

|

| 0.0972 | 2.03 | 3800 | 0.9642 | 0.7189 | 0.1465 | 0.7388 | 0.6999 | |

|

| 0.0662 | 2.13 | 4000 | 0.9691 | 0.7450 | 0.1409 | 0.7615 | 0.7292 | |

|

| 0.0662 | 2.24 | 4200 | 0.9615 | 0.7432 | 0.1720 | 0.7435 | 0.7429 | |

|

| 0.0662 | 2.35 | 4400 | 0.9667 | 0.7338 | 0.1440 | 0.7469 | 0.7212 | |

|

| 0.0581 | 2.45 | 4600 | 0.9657 | 0.7135 | 0.1928 | 0.7458 | 0.6839 | |

|

| 0.0581 | 2.56 | 4800 | 0.9692 | 0.7378 | 0.1645 | 0.7467 | 0.7292 | |

|

| 0.0538 | 2.67 | 5000 | 0.9656 | 0.7619 | 0.1517 | 0.7700 | 0.7541 | |

|

| 0.0538 | 2.77 | 5200 | 0.9684 | 0.7728 | 0.1676 | 0.8227 | 0.7286 | |

|

| 0.0538 | 2.88 | 5400 | 0.9725 | 0.7608 | 0.1277 | 0.7865 | 0.7367 | |

|

| 0.0432 | 2.99 | 5600 | 0.9693 | 0.7784 | 0.1532 | 0.7891 | 0.7681 | |

|

| 0.0432 | 3.09 | 5800 | 0.9692 | 0.7783 | 0.1701 | 0.8067 | 0.7519 | |

|

| 0.0272 | 3.2 | 6000 | 0.9732 | 0.7798 | 0.1159 | 0.8072 | 0.7542 | |

|

| 0.0272 | 3.3 | 6200 | 0.9720 | 0.7797 | 0.1835 | 0.7926 | 0.7672 | |

|

| 0.0272 | 3.41 | 6400 | 0.9730 | 0.7894 | 0.1481 | 0.8183 | 0.7624 | |

|

| 0.0274 | 3.52 | 6600 | 0.9686 | 0.7655 | 0.1552 | 0.7958 | 0.7373 | |

|

| 0.0274 | 3.62 | 6800 | 0.9698 | 0.7724 | 0.1523 | 0.8068 | 0.7407 | |

|

| 0.0246 | 3.73 | 7000 | 0.9691 | 0.7720 | 0.1673 | 0.7960 | 0.7493 | |

|

| 0.0246 | 3.84 | 7200 | 0.9688 | 0.7695 | 0.1333 | 0.7986 | 0.7424 | |

|

| 0.0246 | 3.94 | 7400 | 0.1796 | 0.8062 | 0.7441 | 0.7739 | 0.9693 | |

|

|

|

|

|

### Framework versions |

|

|

|

- Transformers 4.27.3 |

|

- Pytorch 1.10.0+cu111 |

|

- Datasets 2.10.1 |

|

- Tokenizers 0.13.2 |