license: mit

language:

- gl

Galician text here

Model description

This model is a text-to-text model fine-tuned for the data-to-text task in Galician. Starting from the multilingual pre-trained MT5-base model, fine-tuning was applied to obtain a model able to generate descriptions in Galician from structured data.

How to generate texts from tabular data

- Open bash terminal

- Install Python 3.10

- To generate a text from any of the 568 test records in our dataset, run the following command:

python generate_text.py -i <data_id> -o <output_path>

- The -i argument specifies the ID of the table from which the model should generate a text. Only IDs from 0 to 569 are valid, as these are the records from the test partition, i.e., examples the model was not trained on.

- The -o argument specifies the path where the file with the generated text will be created. If omitted, the file is created in the current directory.
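The command above can also be assembled from Python. The following is a minimal sketch, not part of the released code: the helper name `build_command` is hypothetical, the ID bounds are taken from the range stated above, and the interpreter is assumed to be available as `python`.

```python
from typing import List, Optional

# Assumption: valid IDs follow the range stated in this README (0 to 569 inclusive).
MIN_ID, MAX_ID = 0, 569


def build_command(data_id: int, output_path: Optional[str] = None) -> List[str]:
    """Build the argument list for generate_text.py (hypothetical helper).

    Raises ValueError when data_id falls outside the documented test range.
    """
    if not MIN_ID <= data_id <= MAX_ID:
        raise ValueError(
            f"data_id must be between {MIN_ID} and {MAX_ID}, got {data_id}"
        )
    command = ["python", "generate_text.py", "-i", str(data_id)]
    # -o is optional; when omitted the script writes to the current directory.
    if output_path is not None:
        command += ["-o", output_path]
    return command


# Illustrative values only: record 42, output directory "out".
command = build_command(42, "out")
print(" ".join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` from the directory that contains `generate_text.py`.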

Training

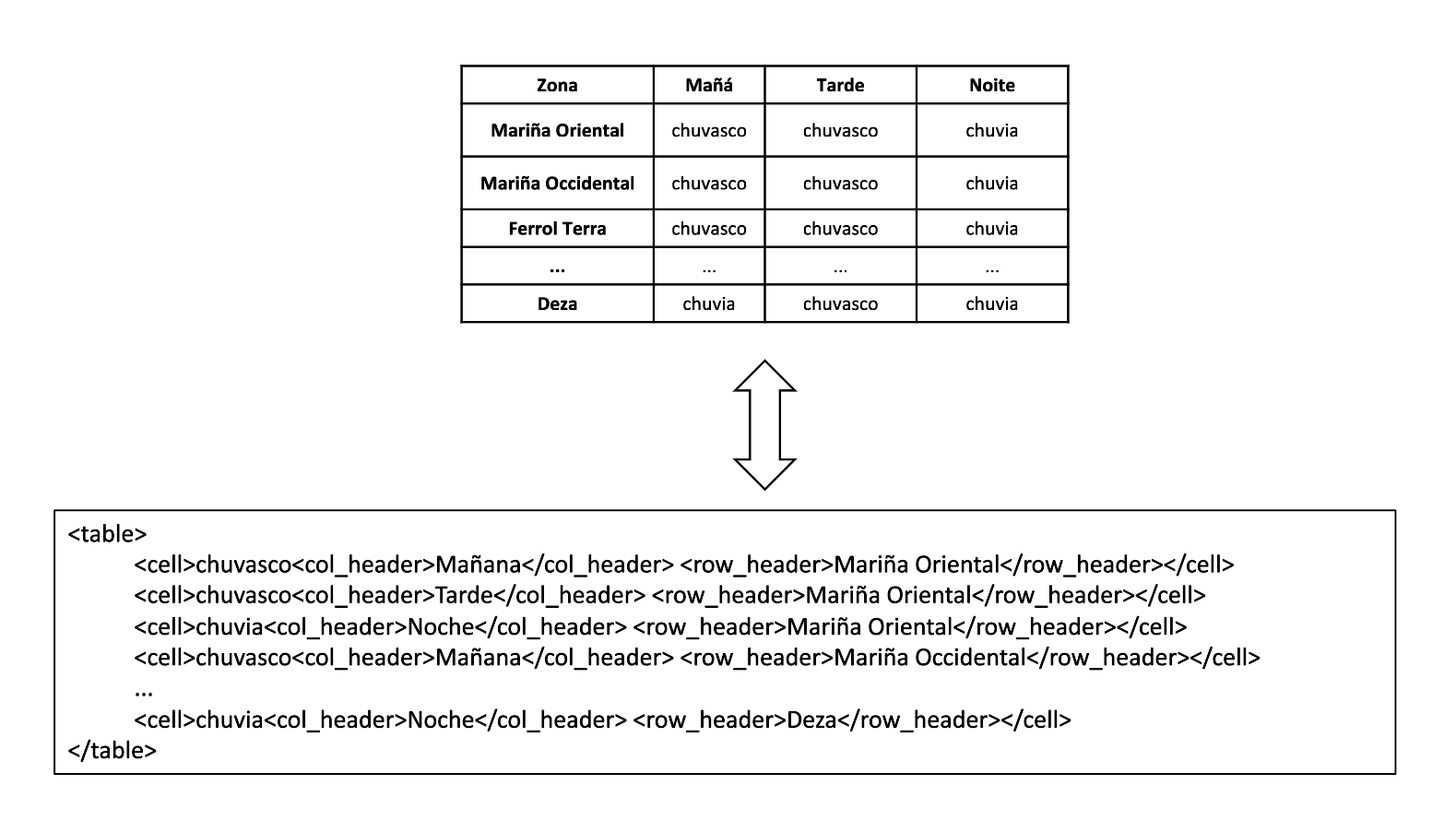

The first dataset for data-to-text in Galician was used to train the model. The dataset was released by Proxecto Nós and is available in the following repository: https://zenodo.org/record/7661650#.ZA8aZ3bMJro. It consists of 3,302 records of tabular meteorological prediction data paired with human-written textual descriptions in Galician. The chosen base model takes text as input rather than structured data, so we performed a "linearization" process on our tabular data before using it to fine-tune the model. The process consists of transforming the data tables of our dataset into a labelled text format as follows:

This way, the text-to-text model can understand the content of the data tables and their related texts in order to learn how to generate descriptive texts from new data tables.
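To make the idea of linearization concrete, here is a minimal sketch of how a table record could be flattened into labelled text. The field names, the `<label> value` tag format, and the function name `linearize` are assumptions made for illustration; the exact format used to train this model may differ.

```python
from typing import Mapping


def linearize(record: Mapping[str, object]) -> str:
    """Flatten one table record into a single labelled string.

    Each field becomes "<field> value"; fields keep their insertion order.
    The tag format is hypothetical, not the one used by the authors.
    """
    parts = [f"<{field}> {value}" for field, value in record.items()]
    return " ".join(parts)


# Made-up meteorological record, for illustration only.
example = {"sky": "cloudy", "wind": "north", "temperature_max": 18}
print(linearize(example))
# → <sky> cloudy <wind> north <temperature_max> 18
```

Keeping the field label next to each value lets a text-only model see which cell a value came from, which is the information a table layout would otherwise carry.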

The following hyperparameters were used to fine-tune the base model:

- Batch size: 8

- Optimizer: Adam

- Learning rate: 1e-5

- Training epochs: 1000
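The hyperparameters listed above can be collected in a small configuration object. This is only a sketch: the class name `FineTuningConfig` and the helper `steps_per_epoch` are hypothetical, and the training-set size used in the example is a made-up number, not the size of the actual training partition.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTuningConfig:
    """Hyperparameters as listed in this README (hypothetical container)."""

    batch_size: int = 8
    optimizer: str = "Adam"
    learning_rate: float = 1e-5
    epochs: int = 1000

    def steps_per_epoch(self, num_examples: int) -> int:
        """Number of optimizer steps needed to see num_examples once."""
        return math.ceil(num_examples / self.batch_size)


config = FineTuningConfig()
# 100 is an illustrative training-set size, not a figure from the dataset.
print(config.steps_per_epoch(100))  # 13
```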

Model License

MIT License

Copyright (c) 2023 Proxecto Nós

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.

Funding

This research was funded by the project "Nós: o galego na sociedade e economía da intelixencia artificial", the result of an agreement between Xunta de Galicia and Universidade de Santiago de Compostela; by grants ED431G2019/04 and ED431C2022/19 from the Consellería de Educación, Universidade e Formación Profesional; and by the European Regional Development Fund (ERDF/FEDER programme).

Citation

If you use this model in your work, please cite as follows:

González Corbelle, Javier; Bugarín Diz, Alberto. 2023. Nos_D2T-gl. URL: https://huggingface.co/proxectonos/Nos_D2T-gl/